I. Bringing Kubernetes Nodes Online and Offline

1. Bringing a new node online

1) Preparation

Disable firewalld and SELinux

Set the hostname

Configure /etc/hosts

Disable swap

swapoff -a

To disable swap permanently, edit /etc/fstab (vi /etc/fstab) and comment out the swap line
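The manual /etc/fstab edit can also be scripted. The following is a minimal sketch, assuming a sed that accepts -E and -i.bak (GNU and BSD sed both do); the helper name comment_out_swap is hypothetical, and the demo runs against a scratch file rather than the real /etc/fstab.

```shell
#!/usr/bin/env bash
# comment_out_swap FILE: hypothetical helper, not part of any tool.
# Prefixes every active swap entry in an fstab-style file with '#'.
comment_out_swap() {
  local fstab="$1"
  # Match lines whose first non-blank character is not '#' and that
  # contain a whitespace-delimited "swap" field. A backup of the
  # original file is kept as FILE.bak.
  sed -i.bak -E '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Demo on a scratch copy; the two entries are illustrative.
demo_fstab="$(mktemp)"
printf '%s\n' \
  '/dev/mapper/rl-root / xfs defaults 0 0' \
  '/dev/mapper/rl-swap none swap defaults 0 0' > "$demo_fstab"
comment_out_swap "$demo_fstab"
cat "$demo_fstab"
```

Running the helper a second time leaves the file unchanged, because lines that are already commented no longer match. Review the result before pointing it at /etc/fstab.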

Pass bridged IPv4 traffic to the iptables chains

modprobe br_netfilter ## load the module so that the bridge-related kernel parameters exist

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system # apply the settings

Enable IP forwarding

echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf

sysctl -p
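Appending with >> adds a duplicate line every time the step is repeated. The following is a minimal sketch of an idempotent alternative, assuming a POSIX awk; the helper name set_sysctl_key is hypothetical, and the demo writes to a scratch file instead of /etc/sysctl.conf.

```shell
#!/usr/bin/env bash
# set_sysctl_key FILE KEY VALUE: hypothetical helper that writes
# "KEY = VALUE" into FILE exactly once, replacing any earlier setting.
set_sysctl_key() {
  local file="$1" key="$2" value="$3" tmp
  tmp="$(mktemp)"
  touch "$file"
  # Copy every line except existing assignments of KEY.
  awk -v k="$key" '{
    line = $0
    sub(/^[ \t]*/, "", line)
    split(line, kv, /[ \t]*=/)
    if (kv[1] != k) print $0
  }' "$file" > "$tmp"
  printf '%s = %s\n' "$key" "$value" >> "$tmp"
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Demo on a scratch file: calling it twice still leaves one line.
demo_conf="$(mktemp)"
echo "net.ipv4.ip_forward = 0" > "$demo_conf"
set_sysctl_key "$demo_conf" net.ipv4.ip_forward 1
set_sysctl_key "$demo_conf" net.ipv4.ip_forward 1
cat "$demo_conf"
```

On a real node the file would be /etc/sysctl.conf or a file under /etc/sysctl.d/, followed by sysctl --system to load it.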

Time synchronization

yum install -y chrony;

systemctl start chronyd;

systemctl enable chronyd

2) Install containerd

First install the yum-utils package

yum install -y yum-utils

Add the official Docker yum repository (skip this if it is already configured)

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

Install containerd

yum install containerd.io -y

Start the service

systemctl enable containerd

systemctl start containerd

Generate the default configuration

containerd config default > /etc/containerd/config.toml

Edit the configuration

vi /etc/containerd/config.toml

sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9" # change to the Alibaba Cloud mirror

SystemdCgroup = true # use the systemd cgroup driver

Restart the containerd service

systemctl restart containerd
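The two config.toml edits can be applied without opening an editor. The following is a minimal sketch, assuming the generated file already contains sandbox_image and SystemdCgroup lines; the helper name patch_containerd_config and the original values in the demo file are illustrative only.

```shell
#!/usr/bin/env bash
# patch_containerd_config FILE IMAGE: hypothetical helper that rewrites the
# sandbox_image and SystemdCgroup settings in place, keeping indentation.
patch_containerd_config() {
  local file="$1" image="$2"
  sed -i.bak -E \
    -e "s#^([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*).*#\\1\"${image}\"#" \
    -e 's#^([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*).*#\1true#' \
    "$file"
}

# Demo on a scratch file; the starting values are placeholders.
demo_toml="$(mktemp)"
printf '%s\n' \
  '    sandbox_image = "example.invalid/pause:0.0"' \
  '            SystemdCgroup = false' > "$demo_toml"
patch_containerd_config "$demo_toml" \
  "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9"
cat "$demo_toml"
```

On a node the target would be /etc/containerd/config.toml, followed by systemctl restart containerd as in the step above.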

3) Configure the Kubernetes repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Note: the Kubernetes repository targets RHEL 7, but the packages also work on RHEL 8.

4) Install kubeadm and kubelet

yum install -y kubelet-1.27.2 kubeadm-1.27.2 kubectl-1.27.2

Start the kubelet service

systemctl start kubelet.service

systemctl enable kubelet.service

5) Point crictl at containerd

crictl config --set runtime-endpoint=unix:///run/containerd/containerd.sock

6) On the master node, print the join command (it includes a fresh token)

kubeadm token create --print-join-command

7) On the new node, join the cluster

kubeadm join 192.168.222.101:6443 --token uzopz7.ryng3lkdh2qwvy89 --discovery-token-ca-cert-hash sha256:1a1fca6d1ffccb4f48322d706ea43ea7b3ef2194483699952178950b52fe2601
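A mistyped token or hash is an easy way to lose time at this step. The following is a minimal sketch of a format check to run before kubeadm join; the helper name check_join_args is hypothetical. It only checks the shape of the values (a 6.16 character lowercase alphanumeric token and a sha256: prefix followed by 64 hex digits), not whether the cluster will accept them.

```shell
#!/usr/bin/env bash
# check_join_args TOKEN HASH: hypothetical pre-flight check.
# Returns 0 when both values are well-formed, 1 otherwise.
check_join_args() {
  local token="$1" hash="$2"
  printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' \
    || { echo "bad token format" >&2; return 1; }
  printf '%s\n' "$hash" | grep -Eq '^sha256:[0-9a-f]{64}$' \
    || { echo "bad CA cert hash format" >&2; return 1; }
}

# Demo with the values from the join command above.
if check_join_args "uzopz7.ryng3lkdh2qwvy89" \
  "sha256:1a1fca6d1ffccb4f48322d706ea43ea7b3ef2194483699952178950b52fe2601"; then
  echo "join arguments look well-formed"
fi
```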

8) On the master, check the node information

kubectl get node

2. Taking a node offline

1) Before taking the node offline, create a test Deployment

Create the Deployment from the command line with 7 Pod replicas

kubectl create deployment testdp2 --image=nginx:1.23.2 --replicas=7

List the Pods

kubectl get po -o wide

2) Evict the Pods from the node being taken offline and mark it unschedulable (run on aminglinux01)

kubectl drain aminglinux04 --ignore-daemonsets

3) Restore scheduling (run on aminglinux01); this is only needed if the node is to return to service

kubectl uncordon aminglinux04

4) Remove the node

kubectl delete node aminglinux04
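When a node is leaving for good, the drain and delete steps can be chained and the uncordon step skipped. The following is a minimal sketch; the wrapper name decommission_node and the DRY_RUN switch are hypothetical. By default it only prints the commands, so it is safe to run anywhere.

```shell
#!/usr/bin/env bash
# decommission_node NODE: hypothetical wrapper around the steps above.
# DRY_RUN=1 (the default) prints the commands; DRY_RUN=0 executes them.
decommission_node() {
  local node="$1"
  local run="echo"
  [ "${DRY_RUN:-1}" = "0" ] && run=""
  # Stop before deleting the node if the drain fails.
  $run kubectl drain "$node" --ignore-daemonsets || return 1
  $run kubectl delete node "$node"
}

# Demo: print what would be executed for the node used in this section.
decommission_node aminglinux04
```

Set DRY_RUN=0 on the master only after checking the printed commands.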

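II. Building a Highly Available Kubernetes Cluster (Stacked etcd Topology)

In a stacked etcd cluster, etcd runs on the same hosts as the other Kubernetes control-plane components.

High-availability approach:

1) Use keepalived + haproxy for high availability and load balancing

2) Run the apiserver, controller-manager and scheduler on each of the three master machines, giving three instances of each

3) Run one etcd member on each of the same three machines, forming a three-member etcd cluster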
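The choice of three etcd members follows from majority quorum: a cluster of n members needs n/2 + 1 (integer division) of them to agree, so three members tolerate one failure. A minimal sketch of the arithmetic, with hypothetical helper names:

```shell
#!/usr/bin/env bash
# etcd_quorum N: members needed for a majority in an N-member cluster.
etcd_quorum() { echo $(( $1 / 2 + 1 )); }
# etcd_fault_tolerance N: members that may fail while a majority remains.
etcd_fault_tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

Two members tolerate no failure at all, which is why the layout goes straight from one to three.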

Machines (operating system: Rocky 8.7):

| Hostname | IP | Installed components |
| --- | --- | --- |
| k8s-master01 | 192.168.100.11 | etcd, apiserver, controller-manager, scheduler, keepalived, haproxy, kubelet, containerd, kubeadm |
| k8s-master02 | 192.168.100.12 | etcd, apiserver, controller-manager, scheduler, keepalived, haproxy, kubelet, containerd, kubeadm |
| k8s-master03 | 192.168.100.13 | etcd, apiserver, controller-manager, scheduler, keepalived, haproxy, kubelet, containerd, kubeadm |
| k8s-node01 | 192.168.100.14 | kubelet, containerd, kubeadm |
| k8s-node02 | 192.168.100.15 | kubelet, containerd, kubeadm |
| -- (VIP) | 192.168.100.200 | virtual IP managed by keepalived |

1. Preparation

Note: do this on all 5 machines

1) Disable firewalld and SELinux

[root@bogon ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

[root@bogon ~]# systemctl disable firewalld; systemctl stop firewalld

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

2) Set the hostname (use each machine's own name)

hostnamectl set-hostname k8s-master01

3) Configure /etc/hosts

echo "192.168.100.11 k8s-master01" >> /etc/hosts

echo "192.168.100.12 k8s-master02" >> /etc/hosts

echo "192.168.100.13 k8s-master03" >> /etc/hosts

echo "192.168.100.14 k8s-node01" >> /etc/hosts

echo "192.168.100.15 k8s-node02" >> /etc/hosts
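Because these entries are appended on all five machines, rerunning the step would duplicate them. The following is a minimal sketch of an append-if-missing helper; the name add_host_entry is hypothetical, and the demo writes to a scratch file rather than /etc/hosts.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME: hypothetical helper that appends "IP NAME"
# only when no existing line already maps that IP to that name.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  touch "$file"
  if ! awk -v ip="$ip" -v name="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
      END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Demo: two passes over the five hosts still yield five lines.
demo_hosts="$(mktemp)"
for pass in 1 2; do
  add_host_entry "$demo_hosts" 192.168.100.11 k8s-master01
  add_host_entry "$demo_hosts" 192.168.100.12 k8s-master02
  add_host_entry "$demo_hosts" 192.168.100.13 k8s-master03
  add_host_entry "$demo_hosts" 192.168.100.14 k8s-node01
  add_host_entry "$demo_hosts" 192.168.100.15 k8s-node02
done
cat "$demo_hosts"
```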

4) Disable swap

swapoff -a

To disable it permanently, comment out the swap line in /etc/fstab:

/dev/mapper/rl-root / xfs defaults 0 0

UUID=784fb296-c00c-4615-a4a6-583ae0156b04 /boot xfs defaults 0 0

#/dev/mapper/rl-swap none swap defaults 0 0

5) Pass bridged IPv4 traffic to the iptables chains

modprobe br_netfilter ## load the module so that the bridge-related kernel parameters exist

[root@bogon ~]# cat > /etc/sysctl.d/k8s.conf << EOF

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> net.ipv4.ip_forward = 1

> EOF

sysctl --system # apply the settings

[root@bogon ~]# sysctl --system

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

kernel.yama.ptrace_scope = 0

* Applying /usr/lib/sysctl.d/50-coredump.conf ...

kernel.core_pattern = |/usr/lib/systemd/systemd-coredump %P %u %g %s %t %c %h %e

kernel.core_pipe_limit = 16

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.sysrq = 16

kernel.core_uses_pid = 1

kernel.kptr_restrict = 1

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.all.accept_source_route = 0

net.ipv4.conf.all.promote_secondaries = 1

net.core.default_qdisc = fq_codel

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /usr/lib/sysctl.d/50-libkcapi-optmem_max.conf ...

net.core.optmem_max = 81920

* Applying /usr/lib/sysctl.d/50-pid-max.conf ...

kernel.pid_max = 4194304

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/k8s.conf ...

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

* Applying /etc/sysctl.conf ...

6) Time synchronization

yum install -y chrony;

systemctl start chronyd;

systemctl enable chronyd

[root@bogon ~]# systemctl start chronyd

[root@bogon ~]# systemctl enable chronyd

Created symlink /etc/systemd/system/multi-user.target.wants/chronyd.service → /usr/lib/systemd/system/chronyd.service.

[root@bogon ~]#

2. Install keepalived + haproxy

1) Install keepalived and haproxy with yum (on the three masters)

yum install -y keepalived haproxy

2) Configure keepalived

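On master01

vi /etc/keepalived/keepalived.conf # edit it to contain the following

global_defs {

    router_id lvs-keepalived01 # router_id identifies this machine; it is used in e-mail notifications when a failure occurs

}

vrrp_script chk_haproxy {

    script "/etc/keepalived/check_haproxy.sh"

    interval 5

    fall 2

}

vrrp_instance VI_1 { # VRRP instance definition

    state MASTER # initial state of this node, MASTER or BACKUP; must be uppercase

    interface ens160 # interface that carries the service traffic (ens160 in this environment)

    virtual_router_id 100 # virtual router ID, a number shared by all nodes of the same VRRP instance

    priority 100 # priority; the higher the number, the higher the priority. Within one vrrp_instance the MASTER's priority must be higher than the BACKUPs'

    advert_int 1 # interval, in seconds, between the advertisements exchanged by MASTER and BACKUP

    authentication { # authentication type and password

        auth_type PASS # PASS or AH

        auth_pass aminglinuX # password; must be identical on MASTER and BACKUP within one vrrp_instance

    }

    virtual_ipaddress { # virtual IP addresses; several may be listed, one per line

        192.168.100.200

    }

    mcast_src_ip 192.168.100.11 # IP of master01

    track_script {

        chk_haproxy

    }

}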

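On master02

vi /etc/keepalived/keepalived.conf # edit it to contain the following

global_defs {

    router_id lvs-keepalived01 # router_id identifies this machine; it is used in e-mail notifications when a failure occurs

}

vrrp_script chk_haproxy {

    script "/etc/keepalived/check_haproxy.sh"

    interval 5

    fall 2

}

vrrp_instance VI_1 { # VRRP instance definition

    state BACKUP # initial state of this node, MASTER or BACKUP; must be uppercase

    interface ens160 # interface that carries the service traffic (ens160 in this environment)

    virtual_router_id 100 # virtual router ID, a number shared by all nodes of the same VRRP instance

    priority 90 # priority; the higher the number, the higher the priority. Within one vrrp_instance the MASTER's priority must be higher than the BACKUPs'

    advert_int 1 # interval, in seconds, between the advertisements exchanged by MASTER and BACKUP

    authentication { # authentication type and password

        auth_type PASS # PASS or AH

        auth_pass aminglinuX # password; must be identical on MASTER and BACKUP within one vrrp_instance

    }

    virtual_ipaddress { # virtual IP addresses; several may be listed, one per line

        192.168.100.200

    }

    mcast_src_ip 192.168.100.12 # IP of master02

    track_script {

        chk_haproxy

    }

}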

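On master03

vi /etc/keepalived/keepalived.conf # edit it to contain the following

global_defs {

    router_id lvs-keepalived01 # router_id identifies this machine; it is used in e-mail notifications when a failure occurs

}

vrrp_script chk_haproxy {

    script "/etc/keepalived/check_haproxy.sh"

    interval 5

    fall 2

}

vrrp_instance VI_1 { # VRRP instance definition

    state BACKUP # initial state of this node, MASTER or BACKUP; must be uppercase

    interface ens160 # interface that carries the service traffic (ens160 in this environment)

    virtual_router_id 100 # virtual router ID, a number shared by all nodes of the same VRRP instance

    priority 80 # priority; the higher the number, the higher the priority. Within one vrrp_instance the MASTER's priority must be higher than the BACKUPs'

    advert_int 1 # interval, in seconds, between the advertisements exchanged by MASTER and BACKUP

    authentication { # authentication type and password

        auth_type PASS # PASS or AH

        auth_pass aminglinuX # password; must be identical on MASTER and BACKUP within one vrrp_instance

    }

    virtual_ipaddress { # virtual IP addresses; several may be listed, one per line

        192.168.100.200

    }

    mcast_src_ip 192.168.100.13 # IP of master03

    track_script {

        chk_haproxy

    }

}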

Create the health-check script (on all three masters)

vi /etc/keepalived/check_haproxy.sh # with the following content

#!/bin/bash

ha_pid_num=$(ps -ef | grep ^haproxy | wc -l)

if [[ ${ha_pid_num} -ne 0 ]];then

exit 0

else

exit 1

fi

After saving, make the script executable

chmod a+x /etc/keepalived/check_haproxy.sh

3) Configure haproxy (on the three masters)

vi /etc/haproxy/haproxy.cfg ## change it to the following

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

stats socket /var/lib/haproxy/stats

ssl-default-bind-ciphers PROFILE=SYSTEM

ssl-default-server-ciphers PROFILE=SYSTEM

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

frontend k8s

bind 0.0.0.0:16443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s

backend k8s

mode tcp

balance roundrobin

server master01 192.168.100.11:6443 check

server master02 192.168.100.12:6443 check

server master03 192.168.100.13:6443 check

4) Start haproxy and keepalived (on the three masters)

Start haproxy

systemctl start haproxy; systemctl enable haproxy

Start keepalived

systemctl start keepalived; systemctl enable keepalived

[root@bogon ~]# systemctl start haproxy; systemctl enable haproxy

Created symlink /etc/systemd/system/multi-user.target.wants/haproxy.service → /usr/lib/systemd/system/haproxy.service.

[root@bogon ~]# systemctl start keepalived; systemctl enable keepalived

Created symlink /etc/systemd/system/multi-user.target.wants/keepalived.service → /usr/lib/systemd/system/keepalived.service.

[root@bogon ~]#

5) Test

First check the IP addresses

ip add # 192.168.100.200 has been configured automatically on master01

[root@k8s-master01 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:a2:8c:65 brd ff:ff:ff:ff:ff:ff

altname enp3s0

inet 192.168.100.11/24 brd 192.168.100.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet 192.168.100.200/32 scope global ens160

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fea2:8c65/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@k8s-master01 ~]#

Stop the keepalived service on master01 and the VIP moves to master02. Stop keepalived on master02 as well and the VIP moves to master03. Afterwards, start keepalived again on master02 and master01.
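The order in which the VIP moves follows from the priorities 100, 90 and 80 configured above: among the nodes whose keepalived is still running, the one with the highest priority holds the VIP. The following is a deliberately simplified model of that rule, not keepalived's actual implementation (which also involves advertisement timers, preemption and the track_script result); the helper name elect_vip_holder is hypothetical.

```shell
#!/usr/bin/env bash
# elect_vip_holder: reads "name priority" pairs on stdin, one per line,
# for the nodes that are still alive, and prints the highest-priority name.
elect_vip_holder() {
  sort -k2,2nr | head -n 1 | awk '{ print $1 }'
}

# All three masters running keepalived.
printf '%s\n' 'master01 100' 'master02 90' 'master03 80' | elect_vip_holder
# keepalived stopped on master01.
printf '%s\n' 'master02 90' 'master03 80' | elect_vip_holder
# keepalived stopped on master01 and master02.
printf '%s\n' 'master03 80' | elect_vip_holder
```

The three calls print master01, master02 and master03 in turn, matching the failover sequence observed in the test.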

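3. Install containerd (on all 5 machines)

1) First install the yum-utils package

yum install -y yum-utils

If yum has problems installing it, use dnf install yum-utils instead.

2) Add the official Docker yum repository (skip this if it is already configured)

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo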

[root@k8s-master01 ~]# yum-config-manager \

> --add-repo \

> https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Repository extras is listed more than once in the configuration

Adding repo from: https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@k8s-master01 ~]#

3) Install containerd

yum install containerd.io -y

4) Start the service

systemctl enable containerd

systemctl start containerd

5) Generate the default configuration

containerd config default > /etc/containerd/config.toml

[root@k8s-master01 ~]# systemctl enable containerd

Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /usr/lib/systemd/system/containerd.service.

[root@k8s-master01 ~]# systemctl start containerd

[root@k8s-master01 ~]# containerd config default > /etc/containerd/config.toml

[root@k8s-master01 ~]#

6) Edit the configuration

vi /etc/containerd/config.toml

sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9" # change to the Alibaba Cloud mirror

SystemdCgroup = true # use the systemd cgroup driver

7) Restart the containerd service

systemctl restart containerd

4. Configure the Kubernetes repository (on all 5 machines)

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

[root@k8s-master01 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

> enabled=1

> gpgcheck=1

> repo_gpgcheck=1

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

[root@k8s-master01 ~]#

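Note: the Kubernetes repository targets RHEL 7, but the packages also work on RHEL 8.

5. Install kubeadm and kubelet (on all 5 machines)

1) List all available versions

yum --showduplicates list kubeadm

2) Install version 1.28.2 (the version passed to kubeadm init and reported by kubectl get node later in this section)

yum install -y kubelet-1.28.2 kubeadm-1.28.2 kubectl-1.28.2

3) Start the kubelet service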

systemctl start kubelet.service

systemctl enable kubelet.service

[root@k8s-master01 ~]# systemctl start kubelet.service

[root@k8s-master01 ~]# systemctl enable kubelet.service

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

[root@k8s-master01 ~]#

4) Point crictl at containerd (on all 5 machines)

crictl config --set runtime-endpoint=unix:///run/containerd/containerd.sock

[root@k8s-master01 ~]# crictl config --set runtime-endpoint=unix:///run/containerd/containerd.sock

[root@k8s-master01 ~]#

6. Initialize with kubeadm (on master01)

Reference: https://kubernetes.io/zh-cn/docs/setup/production-environment/tools/kubeadm/high-availability/

kubeadm init --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --kubernetes-version=v1.28.2 --service-cidr=10.15.0.0/16 --pod-network-cidr=10.18.0.0/16 --upload-certs --control-plane-endpoint "192.168.100.200:16443"

Output:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.100.200:16443 --token a9pwzp.86c7ujzy3e92f2qc \

--discovery-token-ca-cert-hash sha256:9dd17bece1e255a502ccba2f40de30297045cbe34af2dd742305219a01b4cd47 \

--control-plane --certificate-key 73e862799c6241fc1335f0c8de1d9ef99926710129f8c8d6d4732f249009f9ad

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.100.200:16443 --token a9pwzp.86c7ujzy3e92f2qc \

--discovery-token-ca-cert-hash sha256:9dd17bece1e255a502ccba2f40de30297045cbe34af2dd742305219a01b4cd47

[root@k8s-master01 ~]#

Copy the kubeconfig so that kubectl can access the cluster

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master01 ~]# mkdir -p $HOME/.kube

[root@k8s-master01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master01 ~]#
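The long kubeadm init command line can also be kept in a configuration file. The following is a minimal sketch that writes such a file, assuming the kubeadm.k8s.io/v1beta3 ClusterConfiguration schema used by this Kubernetes version; the helper name write_kubeadm_config is hypothetical and the values are the ones passed on the command line above.

```shell
#!/usr/bin/env bash
# write_kubeadm_config OUT: hypothetical helper that writes a kubeadm
# ClusterConfiguration equivalent to the flags used in this section.
write_kubeadm_config() {
  cat > "$1" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.28.2
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
controlPlaneEndpoint: "192.168.100.200:16443"
networking:
  serviceSubnet: 10.15.0.0/16
  podSubnet: 10.18.0.0/16
EOF
}

demo_cfg="$(mktemp)"
write_kubeadm_config "$demo_cfg"
cat "$demo_cfg"
# On master01 the file would then be used as:
#   kubeadm init --config "$demo_cfg" --upload-certs
```

Keeping the settings in a file makes it easier to review them and to reuse the same values when rebuilding the cluster.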

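7) Join the other two masters to the cluster

Run on master02 and master03:

Note: the following join command adds a master (control-plane node) to the cluster

kubeadm join 192.168.100.200:16443 --token a9pwzp.86c7ujzy3e92f2qc --discovery-token-ca-cert-hash sha256:9dd17bece1e255a502ccba2f40de30297045cbe34af2dd742305219a01b4cd47 --control-plane --certificate-key 73e862799c6241fc1335f0c8de1d9ef99926710129f8c8d6d4732f249009f9ad

The token is valid for 24 hours. If it has expired, generate a new join command as follows (the value after --certificate-key is the key printed by kubeadm init phase upload-certs --upload-certs):

kubeadm token create --print-join-command --certificate-key 57bce3cb5a574f50350f17fa533095443fb1ff2df480b9fcd42f6203cc014e6b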
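Regenerating a control-plane join command takes two steps: re-upload the certificates to obtain a new certificate key, then create a token with that key. The following is a minimal sketch of picking the key out of the upload-certs output; the helper name extract_cert_key is hypothetical, and it assumes the key is printed on a line of its own as 64 hexadecimal characters. The sample text in the demo is illustrative, not captured output.

```shell
#!/usr/bin/env bash
# extract_cert_key: reads command output on stdin and prints the last line
# that consists of exactly 64 lowercase hex characters.
extract_cert_key() {
  grep -E '^[0-9a-f]{64}$' | tail -n 1
}

# Demo with illustrative text standing in for the real command output.
sample_output='[upload-certs] Using certificate key:
57bce3cb5a574f50350f17fa533095443fb1ff2df480b9fcd42f6203cc014e6b'
printf '%s\n' "$sample_output" | extract_cert_key

# On master01 the two steps would be combined as:
#   key="$(kubeadm init phase upload-certs --upload-certs | extract_cert_key)"
#   kubeadm token create --print-join-command --certificate-key "$key"
```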

Copy the kubeconfig so that kubectl can access the cluster

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

8) Edit the configuration files

On master01:

vi /etc/kubernetes/manifests/etcd.yaml

Change --initial-cluster=master01=https://192.168.222.101:2380 to --initial-cluster=master01=https://192.168.222.101:2380,master02=https://192.168.222.102:2380,master03=https:/

On master02:

vi /etc/kubernetes/manifests/etcd.yaml

Change --initial-cluster=master01=https://192.168.222.101:2380,master02=https://192.168.222.102:2380 to --initial-cluster=master01=https://192.168.222.101:2380,master02=https://192.168.222.102:2380,master03=https:/

master03 needs no change

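9) Join the two worker nodes to the cluster

Run on node01 and node02

Note: the following join command adds a worker node to the cluster

kubeadm join 192.168.100.200:16443 --token a9pwzp.86c7ujzy3e92f2qc --discovery-token-ca-cert-hash sha256:9dd17bece1e255a502ccba2f40de30297045cbe34af2dd742305219a01b4cd47

The token is valid for 24 hours. If it has expired, generate a new join command as follows

kubeadm token create --print-join-command

Check the node status (run on master01); the nodes stay NotReady until a network plugin is installed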

[root@k8s-master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane 7m58s v1.28.2

k8s-master02 NotReady control-plane 5m28s v1.28.2

k8s-master03 NotReady control-plane 5m18s v1.28.2

k8s-node01 NotReady <none> 79s v1.28.2

k8s-node02 NotReady <none> 8s v1.28.2

[root@k8s-master01 ~]#
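All five nodes report NotReady at this point because no network plugin has been installed yet. The following is a minimal sketch for listing the nodes that are not Ready from the table printed by kubectl get node; the helper name not_ready_nodes is hypothetical, and it treats any STATUS other than exactly Ready (including Ready,SchedulingDisabled) as not ready.

```shell
#!/usr/bin/env bash
# not_ready_nodes: reads `kubectl get node` table output on stdin and
# prints the NAME of every row whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Demo with the table shown above.
sample_nodes='NAME STATUS ROLES AGE VERSION
k8s-master01 NotReady control-plane 7m58s v1.28.2
k8s-master02 NotReady control-plane 5m28s v1.28.2
k8s-master03 NotReady control-plane 5m18s v1.28.2
k8s-node01 NotReady <none> 79s v1.28.2
k8s-node02 NotReady <none> 8s v1.28.2'
printf '%s\n' "$sample_nodes" | not_ready_nodes

# On a live cluster: kubectl get node | not_ready_nodes
```

An empty result after the network plugin is deployed indicates that every node has become Ready.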

7. Install the Calico network plugin (on master01)

curl https://raw.githubusercontent.com/projectcalico/calico/v3.26.0/manifests/calico.yaml -O

If you run into the following problem:

curl https://raw.githubusercontent.com/projectcalico/calico/v3.26.0/manifests/calico.yaml -O

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

curl: (7) Failed to connect to raw.githubusercontent.com port 443: Connection refused

you can add the following entries to the hosts file:

199.232.68.133 raw.githubusercontent.com

185.199.108.133 raw.githubusercontent.com

185.199.109.133 raw.githubusercontent.com

185.199.110.133 raw.githubusercontent.com

185.199.111.133 raw.githubusercontent.com

[root@k8s-master01 ~]# curl https://raw.githubusercontent.com/projectcalico/calico/v3.26.0/manifests/calico.yaml -O

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 238k 100 238k 0 0 56351 0 0:00:04 0:00:04 --:--:-- 56351

After downloading, edit the Pod network defined in the manifest (CALICO_IPV4POOL_CIDR) so that it matches the --pod-network-cidr passed to kubeadm init earlier

vim calico.yaml

# - name: CALICO_IPV4POOL_CIDR

# value: "192.168.0.0/16"

# change to:

- name: CALICO_IPV4POOL_CIDR

value: "10.18.0.0/16"
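The same change can be made without an editor. The following is a minimal sketch, assuming the manifest contains the two commented-out lines exactly as shown above (a "# - name:" line followed by a "#   value:" line with the default 192.168.0.0/16); the helper name set_calico_pod_cidr is hypothetical, and the demo runs on a scratch file with an assumed indentation.

```shell
#!/usr/bin/env bash
# set_calico_pod_cidr FILE CIDR: hypothetical helper that uncomments
# CALICO_IPV4POOL_CIDR and replaces the default pool with CIDR.
set_calico_pod_cidr() {
  local file="$1" cidr="$2"
  sed -i.bak -E \
    -e 's|^([[:space:]]*)# (- name: CALICO_IPV4POOL_CIDR)[[:space:]]*$|\1\2|' \
    -e "s|^([[:space:]]*)#   value: \"192\\.168\\.0\\.0/16\"[[:space:]]*\$|\\1  value: \"${cidr}\"|" \
    "$file"
}

# Demo on a scratch file containing only the two relevant lines.
demo_manifest="$(mktemp)"
printf '%s\n' \
  '            # - name: CALICO_IPV4POOL_CIDR' \
  '            #   value: "192.168.0.0/16"' > "$demo_manifest"
set_calico_pod_cidr "$demo_manifest" "10.18.0.0/16"
cat "$demo_manifest"
```

The original file is kept as a .bak copy; compare the two before running kubectl apply.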

Deploy

kubectl apply -f calico.yaml

[root@k8s-master01 ~]# vim calico.yaml

[root@k8s-master01 ~]# kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

serviceaccount/calico-cni-plugin created

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpfilters.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrole.rbac.authorization.k8s.io/calico-cni-plugin created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-cni-plugin created

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

[root@k8s-master01 ~]#

Check the Pods

kubectl get pods -n kube-system

[root@k8s-master01 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-cf9cc4fb8-tjjx8 1/1 Running 0 74s

calico-node-4kzfj 1/1 Running 0 74s

calico-node-9kz2c 1/1 Running 0 74s

calico-node-d2zcx 1/1 Running 0 74s

calico-node-njmv8 1/1 Running 0 74s

calico-node-pf5t2 1/1 Running 0 74s

coredns-6554b8b87f-7vv24 1/1 Running 0 20m

coredns-6554b8b87f-r4x56 1/1 Running 0 20m

etcd-k8s-master01 1/1 Running 0 13m

etcd-k8s-master02 1/1 Running 0 13m

etcd-k8s-master03 1/1 Running 0 17m

kube-apiserver-k8s-master01 1/1 Running 0 20m

kube-apiserver-k8s-master02 1/1 Running 0 18m

kube-apiserver-k8s-master03 1/1 Running 1 (17m ago) 17m

kube-controller-manager-k8s-master01 1/1 Running 2 (13m ago) 20m

kube-controller-manager-k8s-master02 1/1 Running 1 (14m ago) 18m

kube-controller-manager-k8s-master03 1/1 Running 0 17m

kube-proxy-bv6bb 1/1 Running 0 17m

kube-proxy-jhwgf 1/1 Running 0 18m

kube-proxy-snsnh 1/1 Running 0 20m

kube-proxy-svv6g 1/1 Running 0 12m

kube-proxy-vsd8c 1/1 Running 0 12m

kube-scheduler-k8s-master01 1/1 Running 1 (17m ago) 20m

kube-scheduler-k8s-master02 1/1 Running 1 (14m ago) 18m

kube-scheduler-k8s-master03 1/1 Running 0 17m

[root@k8s-master01 ~]#

8. Quickly deploy an application in K8s

1) Create a Deployment

kubectl create deployment testdp --image=nginx:1.25.5 ## the Deployment is named testdp and uses the image nginx:1.25.5

[root@k8s-master01 ~]# kubectl create deployment testdp --image=registry.cn-hangzhou.aliyuncs.com/*/nginx:1.25.2

deployment.apps/testdp created

[root@k8s-master01 ~]#

2) View the Deployment

kubectl get deployment

[root@k8s-master01 ~]# kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

testdp 1/1 1 1 31s

3) View the Pods

kubectl get pods

[root@k8s-master01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

testdp-5b77968464-lt46x 1/1 Running 0 <invalid>

[root@k8s-master01 ~]#

4) View the Pod details (use the Pod name from the previous step)

kubectl describe pod testdp-5b77968464-lt46x

[root@k8s-master01 ~]# kubectl describe pod testdp-5b77968464-lt46x

Name: testdp-5b77968464-lt46x

Namespace: default

Priority: 0

Service Account: default

Node: k8s-node01/192.168.100.14

Start Time: Sat, 10 Aug 2024 05:14:00 +0800

Labels: app=testdp

pod-template-hash=5b77968464

Annotations: cni.projectcalico.org/containerID: ec056ed1ddb541cd057953b4fc89c3ea5638ed93d2048914a55d4cfc3fa8ac1b

cni.projectcalico.org/podIP: 10.18.85.195/32

cni.projectcalico.org/podIPs: 10.18.85.195/32

Status: Running

IP: 10.18.85.195

IPs:

IP: 10.18.85.195

Controlled By: ReplicaSet/testdp-5b77968464

Containers:

nginx:

Container ID: containerd://8f5e42c7c71da2bdfbb43d7b2e135a9eb7ecdcded00d671c41c1b111e2dedb12

Image: registry.cn-hangzhou.aliyuncs.com/daliyused/nginx:1.25.5

Image ID: registry.cn-hangzhou.aliyuncs.com/daliyused/nginx@sha256:0e1ac7f12d904a5ce077d1b5c763b5750c7985e524f6083e5eaa7e7313833440

Port: <none>

Host Port: <none>

State: Running

Started: Sat, 10 Aug 2024 05:14:12 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-7zggq (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-7zggq:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Pulling 102s kubelet Pulling image "registry.cn-hangzhou.aliyuncs.com/daliyused/nginx:1.25.5"

Normal Scheduled 101s default-scheduler Successfully assigned default/testdp-5b77968464-lt46x to k8s-node01

Normal Pulled 91s kubelet Successfully pulled image "registry.cn-hangzhou.aliyuncs.com/*/nginx:1.25.5" in 10.66s (10.66s including waiting)

Normal Created 91s kubelet Created container nginx

Normal Started 91s kubelet Started container nginx

[root@k8s-master01 ~]#

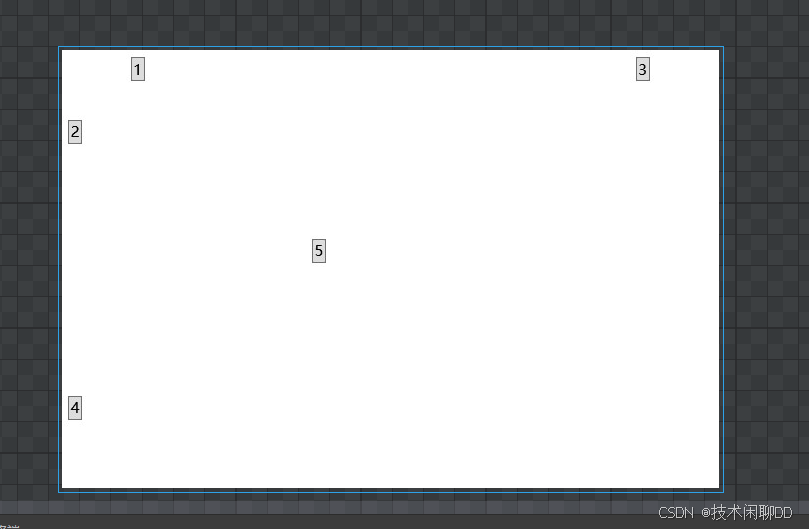

5) Create a Service to expose the Pod port on the nodes

kubectl expose deployment testdp --port=80 --type=NodePort --target-port=80 --name=testsvc

[root@k8s-master01 ~]# kubectl expose deployment testdp --port=80 --type=NodePort --target-port=80 --name=testsvc

service/testsvc exposed

6) View the Service

kubectl get svc

[root@k8s-master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.15.0.1 <none> 443/TCP 29m

testsvc NodePort 10.15.56.110 <none> 80:30545/TCP <invalid>

[root@k8s-master01 ~]#

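The exposed port is a randomly assigned port in the NodePort range (30000-32767 by default). Open 192.168.100.14:30545 in a browser to reach the application.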

![[BSidesCF 2019]Kookie1](https://i-blog.csdnimg.cn/direct/0d7a0cb6275840c8878f4ee7a4e7266f.png)
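The node port can be read from the PORT(S) column instead of being copied by eye. The following is a minimal sketch using shell parameter expansion; the helper names node_port_of and in_default_nodeport_range are hypothetical, and the sample cell is the one from the kubectl get svc output above.

```shell
#!/usr/bin/env bash
# node_port_of CELL: CELL is a PORT(S) value such as "80:30545/TCP";
# prints the node port part (30545).
node_port_of() {
  local cell="$1"
  cell="${cell#*:}"    # drop the service port and the colon
  echo "${cell%%/*}"   # drop the protocol suffix
}

# in_default_nodeport_range PORT: succeeds for 30000 through 32767.
in_default_nodeport_range() {
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

port="$(node_port_of "80:30545/TCP")"
if in_default_nodeport_range "$port"; then
  echo "http://192.168.100.14:${port}"
fi

# On a live cluster the port can also be queried directly:
#   kubectl get svc testsvc -o jsonpath='{.spec.ports[0].nodePort}'
```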