A Classic Convolutional Neural Network Model - AlexNet

flyfish

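AlexNet is a deep convolutional neural network proposed in 2012 by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton. Its result in the ILSVRC-2012 (ImageNet Large Scale Visual Recognition Challenge 2012) competition was far ahead of the field and marked an important milestone for deep learning in computer vision.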

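ILSVRC is a large-scale visual recognition challenge built on the ImageNet dataset, and it attracted many research teams every year. The full ImageNet dataset contains over 15 million annotated images in roughly 22,000 categories; ILSVRC-2012 uses a subset of 1000 categories with about 1.2 million training images. AlexNet achieved a top-5 test error rate of 15.3% in ILSVRC-2012, far below the roughly 26% of the next-best entry. The top-5 error rate is the fraction of test images whose correct label is not among the five classes the model ranks as most likely.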
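The top-5 error rate described above can be sketched in a few lines of plain Python. The function name `top5_error` and the toy scores are made up for illustration; a real evaluation would run over model outputs for the whole test set.

```python
def top5_error(scores, labels):
    """Fraction of samples whose true label is not among the 5 highest scores."""
    misses = 0
    for row, label in zip(scores, labels):
        # Indices of the five largest scores in this row
        top5 = sorted(range(len(row)), key=lambda k: row[k], reverse=True)[:5]
        if label not in top5:
            misses += 1
    return misses / len(labels)

# Toy data: 3 samples, 8 classes (made-up scores, not real model output)
scores = [
    [0.1, 0.9, 0.3, 0.2, 0.8, 0.7, 0.6, 0.0],  # top-5 classes: 1, 4, 5, 6, 2
    [0.5, 0.4, 0.3, 0.2, 0.1, 0.9, 0.8, 0.7],  # top-5 classes: 5, 6, 7, 0, 1
    [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2],  # top-5 classes: 0, 1, 2, 3, 4
]
labels = [2, 3, 7]  # only the first sample's label is in its top-5

error = top5_error(scores, labels)
print(error)  # 2 of 3 samples miss, so about 0.667
```

With PyTorch tensors the same ranking step is usually done with `torch.topk`.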

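import torchvision.models as models

# Load the pretrained AlexNet model.
# torchvision 0.13 and later prefer the weights= argument;
# older releases use models.alexnet(pretrained=True) instead.
alexnet = models.alexnet(weights=models.AlexNet_Weights.DEFAULT)

# Print the structure of the AlexNet model
print(alexnet)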

AlexNet(
  (features): Sequential(
    (0): Conv2d(3, 64, kernel_size=(11, 11), stride=(4, 4), padding=(2, 2))
    (1): ReLU(inplace=True)
    (2): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
    (3): Conv2d(64, 192, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
    (4): ReLU(inplace=True)
    (5): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
    (6): Conv2d(192, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (7): ReLU(inplace=True)
    (8): Conv2d(384, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (9): ReLU(inplace=True)
    (10): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (11): ReLU(inplace=True)
    (12): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (avgpool): AdaptiveAvgPool2d(output_size=(6, 6))
  (classifier): Sequential(
    (0): Dropout(p=0.5, inplace=False)
    (1): Linear(in_features=9216, out_features=4096, bias=True)
    (2): ReLU(inplace=True)
    (3): Dropout(p=0.5, inplace=False)
    (4): Linear(in_features=4096, out_features=4096, bias=True)
    (5): ReLU(inplace=True)
    (6): Linear(in_features=4096, out_features=1000, bias=True)
  )
)

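In the AlexNet model, the input and output sit at the following places:

- Input: data enters through the first layer, Conv2d(3, 64, kernel_size=(11, 11), stride=(4, 4), padding=(2, 2)). The input is normally a tensor of shape (N, 3, H, W), where N is the batch size, 3 is the number of channels (for example, an RGB image), and H and W are the image height and width.

- Output: data leaves through the last layer, Linear(in_features=4096, out_features=1000, bias=True). The output is a tensor of shape (N, 1000), where N is the batch size and 1000 is the number of classes. Each value is an unnormalized score (logit) for one class. The model applies no softmax, so these values are not yet probabilities.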

import torch

import torchvision.models as models

# 加载预训练的 AlexNet 模型

alexnet = models.alexnet(pretrained=True)

# 创建一个示例输入,形状为 (1, 3, 224, 224)

input_data = torch.randn(1, 3, 224, 224)

# 通过模型进行前向传播,获取输出

output = alexnet(input_data)

# 输出结果的形状

print("输入形状:", input_data.shape)

print("输出形状:", output.shape)

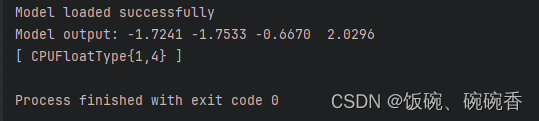

输入形状: torch.Size([1, 3, 224, 224])

输出形状: torch.Size([1, 1000])
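The final Linear layer has no softmax after it, so the (N, 1000) output holds raw class scores. A minimal sketch of turning such scores into probabilities and picking the five most likely classes is shown below. Random values stand in for the model output so that the snippet runs without downloading weights; the variable names are illustrative only.

```python
import torch

# Stand-in for the (N, 1000) tensor returned by alexnet(input_data).
# Random values are an assumption made to keep the sketch self-contained.
torch.manual_seed(0)
logits = torch.randn(1, 1000)

# Softmax over the class dimension turns scores into probabilities
probs = torch.softmax(logits, dim=1)

# The five most likely classes and their probabilities
top5_prob, top5_idx = torch.topk(probs, k=5, dim=1)

print(probs.sum(dim=1))  # each row sums to 1 (up to rounding)
print(top5_idx.shape)    # torch.Size([1, 5])
```

Softmax preserves the ordering of the scores, so the top-ranked class is the same before and after it.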

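Description of the AlexNet model

- Original image: 3x224x224, a three-channel color image

- Convolution layer 1: 64 kernels, 11x11 kernel, stride=4, padding=2, output 64x55x55

- Activation layer 1: ReLU

- Pooling layer 1: 3x3 window, stride=2, padding=0, output 64x27x27

- Convolution layer 2: 192 kernels, 5x5 kernel, stride=1, padding=2, output 192x27x27

- Activation layer 2: ReLU

- Pooling layer 2: 3x3 window, stride=2, padding=0, output 192x13x13

- Convolution layer 3: 384 kernels, 3x3 kernel, stride=1, padding=1, output 384x13x13

- Activation layer 3: ReLU

- Convolution layer 4: 256 kernels, 3x3 kernel, stride=1, padding=1, output 256x13x13

- Activation layer 4: ReLU

- Convolution layer 5: 256 kernels, 3x3 kernel, stride=1, padding=1, output 256x13x13

- Activation layer 5: ReLU

- Pooling layer 3: 3x3 window, stride=2, padding=0, output 256x6x6 (9216 values once flattened)

- Fully connected layer 1: 4096 neurons, preceded by Dropout(p=0.5) and followed by ReLU

- Fully connected layer 2: 4096 neurons, preceded by Dropout(p=0.5) and followed by ReLU

- Fully connected layer 3: 1000 neurons for classification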
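The spatial sizes in the list above follow from the usual output-size formula for convolution and pooling, floor((size + 2*padding - kernel) / stride) + 1, which holds for dilation=1 and ceil_mode=False as in the printed model. The sketch below walks a 224-pixel side through the feature layers; the helper name `out_size` is made up for this example.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output side length: floor((size + 2*padding - kernel) / stride) + 1."""
    return (size + 2 * padding - kernel) // stride + 1

# (name, kernel, stride, padding) for each conv/pool layer, taken from the list above
layers = [
    ("conv1", 11, 4, 2),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool3", 3, 2, 0),
]

size = 224
sizes = {}
for name, kernel, stride, padding in layers:
    size = out_size(size, kernel, stride, padding)
    sizes[name] = size
    print(f"{name}: {size}x{size}")

# 256 channels of 6x6 feed the first fully connected layer
print(256 * sizes["pool3"] ** 2)  # 9216
```

ReLU layers are omitted because they do not change the tensor shape.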

Defining a custom model with the same structure as the AlexNet provided by PyTorch

import torch
import torch.nn as nn

class AlexNet(nn.Module):
    def __init__(self, num_classes=1000):
        super(AlexNet, self).__init__()
        # Convolutional feature extractor
        self.features = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=11, stride=4, padding=2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(64, 192, kernel_size=5, stride=1, padding=2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(192, 384, kernel_size=3, stride=1, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(384, 256, kernel_size=3, stride=1, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2)
        )
        # Forces a 6x6 feature map regardless of the input resolution
        self.avgpool = nn.AdaptiveAvgPool2d((6, 6))
        # Fully connected classifier (nn.Dropout() defaults to p=0.5)
        self.classifier = nn.Sequential(
            nn.Dropout(),
            nn.Linear(256 * 6 * 6, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(),
            nn.Linear(4096, 4096),
            nn.ReLU(inplace=True),
            nn.Linear(4096, num_classes),
        )

    def forward(self, x):
        x = self.features(x)
        x = self.avgpool(x)
        x = torch.flatten(x, 1)  # (N, 256, 6, 6) -> (N, 9216)
        x = self.classifier(x)
        return x

# Create an AlexNet instance
model = AlexNet(num_classes=1000)

# Print the model structure
print(model)
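One quick sanity check for a hand-written copy is the parameter count. The sketch below derives it by arithmetic from the layer shapes in the definition above; the helper names are made up for illustration. The total should agree with `sum(p.numel() for p in model.parameters())` on the model just defined.

```python
def conv_params(c_in, c_out, k):
    """Weights (c_in * c_out * k * k) plus one bias per output channel."""
    return c_in * c_out * k * k + c_out

def linear_params(n_in, n_out):
    """Weights (n_in * n_out) plus one bias per output neuron."""
    return n_in * n_out + n_out

# Keys follow the layer indices shown in the printed model structure
counts = {
    "features.0": conv_params(3, 64, 11),
    "features.3": conv_params(64, 192, 5),
    "features.6": conv_params(192, 384, 3),
    "features.8": conv_params(384, 256, 3),
    "features.10": conv_params(256, 256, 3),
    "classifier.1": linear_params(256 * 6 * 6, 4096),
    "classifier.4": linear_params(4096, 4096),
    "classifier.6": linear_params(4096, 1000),
}

for name, n in counts.items():
    print(f"{name}: {n:,}")

total = sum(counts.values())
print(f"total: {total:,}")  # total: 61,100,840
```

Most of the parameters sit in the first fully connected layer, which alone accounts for about 37.7 million of the roughly 61 million.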

nn.MaxPool2d: computation and visualization

import torch
import torch.nn as nn

# Create a sample input of shape (1, 1, 4, 4)
input_data = torch.tensor([[[[1, 2, 3, 4],
                             [5, 6, 7, 8],
                             [9, 10, 11, 12],
                             [13, 14, 15, 16]]]], dtype=torch.float32)

# Create the max pooling layer
maxpool = nn.MaxPool2d(kernel_size=2, stride=2)

# Compute the output of the max pooling layer
output = maxpool(input_data)
print(output)
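To make the computation explicit, here is a plain-Python sketch of max pooling without padding on a 2D list. The function `max_pool2d` is written for this example and is not PyTorch's implementation. For the 4x4 input above with a 2x2 window and stride 2 it produces the same four values that `nn.MaxPool2d` returns.

```python
def max_pool2d(matrix, kernel_size, stride):
    """Max pooling over a 2D list, no padding; windows that do not fit are dropped."""
    rows, cols = len(matrix), len(matrix[0])
    out_rows = (rows - kernel_size) // stride + 1
    out_cols = (cols - kernel_size) // stride + 1
    output = []
    for i in range(out_rows):
        row = []
        for j in range(out_cols):
            r0, c0 = i * stride, j * stride  # top-left corner of the window
            window = [matrix[r][c]
                      for r in range(r0, r0 + kernel_size)
                      for c in range(c0, c0 + kernel_size)]
            row.append(max(window))
        output.append(row)
    return output

data = [[1, 2, 3, 4],
        [5, 6, 7, 8],
        [9, 10, 11, 12],
        [13, 14, 15, 16]]

pooled = max_pool2d(data, kernel_size=2, stride=2)
print(pooled)  # [[6, 8], [14, 16]]

# AlexNet's setting (3x3 window, stride 2) fits only one window on a 4x4 input
print(max_pool2d(data, kernel_size=3, stride=2))  # [[11]]
```

Each output value is the largest element of its window: 6 comes from {1, 2, 5, 6}, 8 from {3, 4, 7, 8}, and so on.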

Visualization code

import torch
import torch.nn as nn
import numpy as np
import matplotlib.pyplot as plt
import imageio

def visualize_maxpool2d():
    input_data = torch.tensor([[[[1, 2, 3, 4],
                                 [5, 6, 7, 8],
                                 [9, 10, 11, 12],
                                 [13, 14, 15, 16]]]], dtype=torch.float32)
    maxpool = nn.MaxPool2d(kernel_size=2, stride=2)
    original_data = input_data[0, 0].numpy()
    pooled_data = maxpool(input_data).numpy()[0, 0]
    frames = []
    fig, axes = plt.subplots(1, 2, figsize=(10, 4))

    # Draw the original data
    cax1 = axes[0].matshow(original_data, cmap='Blues')
    for (i, j), val in np.ndenumerate(original_data):
        axes[0].text(j, i, f'{val:.0f}', ha='center', va='center', color='red')
    axes[0].set_title('Original Data')
    fig.colorbar(cax1, ax=axes[0])

    # Initialize the plot of the pooled data
    cax2 = axes[1].matshow(np.zeros_like(pooled_data), cmap='Blues', vmin=0, vmax=pooled_data.max())
    axes[1].set_xticks([])
    axes[1].set_yticks([])
    axes[1].set_title('Max Pooled Data')
    fig.colorbar(cax2, ax=axes[1])

    # Draw one frame for output cell (i, j)
    def update_frame(i, j):
        pooled_value = pooled_data[i, j]

        # Outline the current 2x2 window on the original data
        rect = plt.Rectangle((j*2-0.5, i*2-0.5), 2, 2, linewidth=2, edgecolor='yellow', facecolor='none')
        axes[0].add_patch(rect)

        # Update the pooled data
        new_pooled_data = np.zeros_like(pooled_data)
        new_pooled_data[i, j] = pooled_value
        cax2.set_array(new_pooled_data)
        for (ii, jj), val in np.ndenumerate(new_pooled_data):
            if val > 0:
                axes[1].text(jj, ii, f'{val:.0f}', ha='center', va='center', color='red')

        plt.savefig(f'maxpool_{i}_{j}.png')
        frames.append(imageio.imread(f'maxpool_{i}_{j}.png'))
        rect.remove()  # remove the rectangle so the next frame can draw its own

    for i in range(2):
        for j in range(2):
            update_frame(i, j)

    # Save as an animated GIF
    # (some newer imageio releases may expect duration= in milliseconds instead of fps=)
    imageio.mimsave('maxpool.gif', frames, fps=1, loop=0)

# Visualize and save the animated GIF
visualize_maxpool2d()

nn.AdaptiveAvgPool2d: computation and visualization

import torch
import torch.nn as nn

# Create a sample input of shape (1, 1, 4, 4)
input_data = torch.tensor([[[[1, 2, 3, 4],
                             [5, 6, 7, 8],
                             [9, 10, 11, 12],
                             [13, 14, 15, 16]]]], dtype=torch.float32)

# Create the adaptive average pooling layer
adaptive_avgpool = nn.AdaptiveAvgPool2d((2, 2))

# Compute the output of the adaptive average pooling layer
output = adaptive_avgpool(input_data)
print(output)

tensor([[[[ 3.5000,  5.5000],
          [11.5000, 13.5000]]]])
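Adaptive pooling fixes the output size and derives the windows from it. The plain-Python sketch below assumes the commonly described window rule, start = floor(i * in / out) and end = ceil((i + 1) * in / out); the function names are made up for this example and it is not PyTorch's own code. On the 4x4 input it reproduces the values printed above.

```python
def adaptive_windows(in_size, out_size):
    """(start, end) index pairs, end exclusive, for each output position along one axis."""
    windows = []
    for i in range(out_size):
        start = (i * in_size) // out_size                       # floor(i * in / out)
        end = ((i + 1) * in_size + out_size - 1) // out_size    # ceil((i + 1) * in / out)
        windows.append((start, end))
    return windows

def adaptive_avg_pool2d(matrix, out_h, out_w):
    """Average each window of a 2D list so the result has shape (out_h, out_w)."""
    row_windows = adaptive_windows(len(matrix), out_h)
    col_windows = adaptive_windows(len(matrix[0]), out_w)
    output = []
    for r0, r1 in row_windows:
        row = []
        for c0, c1 in col_windows:
            values = [matrix[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(values) / len(values))
        output.append(row)
    return output

data = [[1, 2, 3, 4],
        [5, 6, 7, 8],
        [9, 10, 11, 12],
        [13, 14, 15, 16]]

print(adaptive_windows(4, 2))            # [(0, 2), (2, 4)]
print(adaptive_avg_pool2d(data, 2, 2))   # [[3.5, 5.5], [11.5, 13.5]]

# When the input size is not divisible by the output size, windows overlap
print(adaptive_windows(5, 2))            # [(0, 3), (2, 5)]
```

In AlexNet a 224x224 input already yields a 6x6 map after the last max pooling layer, so the (6, 6) adaptive layer mainly matters for other input resolutions.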

Visualization code

import torch
import torch.nn as nn
import numpy as np
import matplotlib.pyplot as plt
import imageio

def visualize_adaptiveavgpool2d():
    input_data = torch.tensor([[[[1, 2, 3, 4],
                                 [5, 6, 7, 8],
                                 [9, 10, 11, 12],
                                 [13, 14, 15, 16]]]], dtype=torch.float32)
    adaptive_avgpool = nn.AdaptiveAvgPool2d((2, 2))
    original_data = input_data[0, 0].numpy()
    pooled_data = adaptive_avgpool(input_data).numpy()[0, 0]
    frames = []
    fig, axes = plt.subplots(1, 2, figsize=(10, 4))

    # Draw the original data
    cax1 = axes[0].matshow(original_data, cmap='Blues')
    for (i, j), val in np.ndenumerate(original_data):
        axes[0].text(j, i, f'{val:.0f}', ha='center', va='center', color='red')
    axes[0].set_title('Original Data')
    fig.colorbar(cax1, ax=axes[0])

    # Initialize the plot of the pooled data
    cax2 = axes[1].matshow(np.zeros_like(pooled_data), cmap='Blues', vmin=0, vmax=pooled_data.max())
    axes[1].set_xticks([])
    axes[1].set_yticks([])
    axes[1].set_title('Adaptive Avg Pooled Data')
    fig.colorbar(cax2, ax=axes[1])

    # Draw one frame for output cell (i, j)
    def update_frame(i, j):
        # Window size; this assumes the input size is divisible by the output size
        h_step = original_data.shape[0] // 2
        w_step = original_data.shape[1] // 2
        pooled_value = pooled_data[i, j]

        # Outline the current window on the original data
        rect = plt.Rectangle((j*w_step-0.5, i*h_step-0.5), w_step, h_step, linewidth=2, edgecolor='yellow', facecolor='none')
        axes[0].add_patch(rect)

        # Update the pooled data
        new_pooled_data = np.zeros_like(pooled_data)
        new_pooled_data[i, j] = pooled_value
        cax2.set_array(new_pooled_data)
        for (ii, jj), val in np.ndenumerate(new_pooled_data):
            if val > 0:
                axes[1].text(jj, ii, f'{val:.1f}', ha='center', va='center', color='red')

        plt.savefig(f'adaptiveavgpool_{i}_{j}.png')
        frames.append(imageio.imread(f'adaptiveavgpool_{i}_{j}.png'))
        rect.remove()  # remove the rectangle so the next frame can draw its own

    for i in range(2):
        for j in range(2):
            update_frame(i, j)

    # Save as an animated GIF
    # (some newer imageio releases may expect duration= in milliseconds instead of fps=)
    imageio.mimsave('adaptiveavgpool.gif', frames, fps=1, loop=0)

# Visualize and save the animated GIF
visualize_adaptiveavgpool2d()

ReLU (Rectified Linear Unit)

$$\text{ReLU}(x) = (x)^+ = \max(0, x)$$

import numpy as np
import matplotlib.pyplot as plt

def relu(x):
    # Element-wise max(0, x)
    return np.maximum(0, x)

# Generate the input data
x = np.linspace(-10, 10, 400)
y = relu(x)

# Create the figure
plt.figure(figsize=(8, 6))
plt.plot(x, y, label='ReLU(x)', color='b')
plt.title('ReLU Activation Function')
plt.xlabel('Input')
plt.ylabel('Output')
plt.axhline(0, color='gray', lw=0.5)
plt.axvline(0, color='gray', lw=0.5)
plt.grid(True)
plt.legend()
plt.show()