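Table of Contents

- I. Multi-Agent Conversation: Stand-up Comedy
  - 1.1 Basic Capabilities of an Agent
  - 1.2 Multi-Agent Conversation Example: Stand-up Comedy
    - 1.2.1 Create the Agents
    - 1.2.2 Start the Conversation
    - 1.2.3 Inspect the Conversation and Customize the Summary
    - 1.2.4 Set a Termination Condition
- II. Sequential Chats: Customer Onboarding
  - 2.1 Create the Agents
  - 2.2 Build the Sequential Chat Flow
  - 2.3 Inspect Chat Results and Costs
- III. Agent Reflection: Blog Writing
  - 3.1 Create the Writer Agent
  - 3.2 Create a Critic Agent for Reflection and Improvement
  - 3.3 Create Reviewer Agents in a Nested Chat for Complex Reflection
    - 3.3.1 Create the Reviewer Agents
    - 3.3.2 Configure the Review Chats
    - 3.3.3 Register the Nested Chats and Start the Chat

- Course page: AI Agentic Design Patterns with AutoGen; Chinese-language video on bilibili

- microsoft/autogen; AutoGen documentation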

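I. Multi-Agent Conversation: Stand-up Comedy

1.1 Basic Capabilities of an Agent

In AutoGen, an agent is an entity that can act on behalf of a human's intent: it sends and receives messages, performs actions, generates replies, and interacts with other agents. AutoGen has a built-in agent class called ConversableAgent, which unifies different types of agents under the same programming abstraction.

ConversableAgent comes with many built-in capabilities, such as generating replies from an LLM configuration, executing code or functions, keeping a human in the loop, and checking for termination messages. Each of these components can be switched on or off and customized to your application's needs, so the same interface can be used to create agents with very different roles.

Let's first demonstrate an agent's basic capabilities. The code below runs as-is in the notebooks of the course "AI Agentic Design Patterns with AutoGen".

- Import the OpenAI API key and configure the LLM

Use the get_openai_api_key function to load the OpenAI API key from the environment, then define an LLM configuration. In this course we use GPT-3.5 Turbo as the model.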

```python
# utils is a helper module that ships with the course notebooks
from utils import get_openai_api_key

OPENAI_API_KEY = get_openai_api_key()
llm_config = {"model": "gpt-3.5-turbo"}
```

- Define an AutoGen agent

We use the ConversableAgent class to define an agent named chatbot. Passing it the LLM configuration defined above lets it generate replies with the LLM.

```python
from autogen import ConversableAgent

agent = ConversableAgent(
    name="chatbot",
    llm_config=llm_config,
    human_input_mode="NEVER",  # never ask a human; reply with the LLM only
)
```

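Setting human_input_mode to "NEVER" means the agent never asks for human input and generates its replies with the LLM alone. If you set it to "ALWAYS" instead, the agent asks for human input before every reply. This is the agent's basic setup; you can also add code execution, function execution, and other settings.

- Generate a reply with the generate_reply method

Call the agent's generate_reply method with a list of messages, for example the message "Tell me a joke." with the role user. The agent replies with a joke.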

```python
reply = agent.generate_reply(
    messages=[{"content": "Tell me a joke.", "role": "user"}]
)
print(reply)
```

```text
Sure! Here's a joke for you: Why did the math book look sad? Because it had too many problems.
```

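If we call generate_reply again and change the content to "Repeat the joke.", the agent does not repeat the joke. Each call to generate_reply produces a new reply from only the messages passed in, so the agent has no record of its previous reply.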

```python
reply = agent.generate_reply(
    messages=[{"content": "Repeat the joke.", "role": "user"}]
)
print(reply)
```

```text
Of course! Please provide me with the joke you would like me to repeat.
```
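The stateless behaviour can be modelled with a small, self-contained sketch. toy_llm below is a hypothetical stand-in for the model, not AutoGen code: it answers only from the messages it is handed. With the real agent, the analogous step would be to include the earlier user and assistant messages in the messages list passed to generate_reply.

```python
# Hypothetical stand-in for an LLM (not AutoGen code). It can only "see"
# the messages passed in, which models why a second, separate call
# cannot repeat a joke it never received.

def toy_llm(messages):
    last = messages[-1]["content"]
    if last == "Tell me a joke.":
        return "Why did the math book look sad? Because it had too many problems."
    if last == "Repeat the joke.":
        # Search the provided history (newest first) for an earlier reply.
        for msg in reversed(messages[:-1]):
            if msg["role"] == "assistant":
                return msg["content"]
        return "Of course! Please provide me with the joke you would like me to repeat."
    return "I'm not sure."


joke = toy_llm([{"content": "Tell me a joke.", "role": "user"}])

# Only the new message is passed: there is nothing to repeat.
no_memory = toy_llm([{"content": "Repeat the joke.", "role": "user"}])

# The earlier exchange is passed explicitly, so the joke can be repeated.
with_history = toy_llm([
    {"content": "Tell me a joke.", "role": "user"},
    {"content": joke, "role": "assistant"},
    {"content": "Repeat the joke.", "role": "user"},
])
print(no_memory)
print(with_history)
```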

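1.2 Multi-Agent Conversation Example: Stand-up Comedy

Next, we build a multi-agent conversation: two ConversableAgents that simulate a conversation between two stand-up comedians.

1.2.1 Create the Agents

Create two agents named Cathy and Joe, and give each a system message that tells it its name and that it is a stand-up comedian. Joe's system message adds one more instruction: "Start the next joke from the punchline of the previous joke."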

```python
cathy = ConversableAgent(
    name="cathy",
    system_message="Your name is Cathy and you are a stand-up comedian.",
    llm_config=llm_config,
    human_input_mode="NEVER",
)

joe = ConversableAgent(
    name="joe",
    system_message=(
        "Your name is Joe and you are a stand-up comedian. "
        "Start the next joke from the punchline of the previous joke."
    ),
    llm_config=llm_config,
    human_input_mode="NEVER",
)
```

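1.2.2 Start the Conversation

Call an agent's initiate_chat method to start a conversation. Here Joe starts the conversation with Cathy as the recipient, and we set the initial message and the maximum number of turns.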

```python
chat_result = joe.initiate_chat(
    recipient=cathy,
    message="I'm Joe. Cathy, let's keep the jokes rolling.",
    max_turns=2,
)
```

```text
joe (to cathy):

I'm Joe. Cathy, let's keep the jokes rolling.

--------------------------------------------------------------------------------
cathy (to joe):

Sure thing, Joe! So, did you know that I tried to write a joke about the wind, but it just ended up being too drafty? It was full of holes!

--------------------------------------------------------------------------------
joe (to cathy):

Well, Cathy, that joke might have been breezy, but I like to think of it as a breath of fresh air!

--------------------------------------------------------------------------------
cathy (to joe):

I'm glad you found it refreshing, Joe! But let me ask you this - why did the math book look sad? Because it had too many problems!

--------------------------------------------------------------------------------
```
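With max_turns=2 the transcript above contains four messages. A toy model of the turn-taking makes the count explicit; it assumes that one turn is one message from the initiator followed by one reply from the recipient, which matches the printed output. run_chat and scripted are hypothetical helpers, not AutoGen's implementation.

```python
# Toy model of turn-taking under max_turns (a sketch, not AutoGen's code).
# Assumption: one turn = one message from the initiator plus one reply
# from the recipient.

def run_chat(initiator, recipient, first_message, max_turns, compose):
    """Return the transcript as (speaker, listener, text) tuples."""
    transcript = []
    message = first_message
    for turn in range(max_turns):
        if turn > 0:
            # After the first turn, the initiator answers the latest reply.
            message = compose(initiator, transcript)
        transcript.append((initiator, recipient, message))
        reply = compose(recipient, transcript)
        transcript.append((recipient, initiator, reply))
    return transcript


def scripted(speaker, transcript):
    # Placeholder "LLM": label each line with its speaker and position.
    return f"{speaker} line {len(transcript) + 1}"


chat = run_chat("joe", "cathy", "I'm Joe. Cathy, let's keep the jokes rolling.", 2, scripted)
for speaker, listener, text in chat:
    print(f"{speaker} (to {listener}): {text}")
```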

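1.2.3 Inspect the Conversation and Customize the Summary

We can also inspect the chat history and the tokens consumed: print the chat history with pprint, then check the token usage and the total cost.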

```python
import pprint

pprint.pprint(chat_result.chat_history)
```

```text
[{'content': "I'm Joe. Cathy, let's keep the jokes rolling.",
  'role': 'assistant'},
 {'content': 'Sure thing, Joe! So, did you know that I tried to write a joke '
             'about the wind, but it just ended up being too drafty? It was '
             'full of holes!',
  'role': 'user'},
 {'content': 'Well, Cathy, that joke might have been breezy, but I like to '
             'think of it as a breath of fresh air!',
  'role': 'assistant'},
 {'content': "I'm glad you found it refreshing, Joe! But let me ask you this - "
             'why did the math book look sad? Because it had too many '
             'problems!',
  'role': 'user'}]
```
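The history above is recorded from Joe's side, the agent that started the chat: Joe's messages carry the role "assistant" and Cathy's carry "user". The hypothetical helper below reproduces that mapping from a plain transcript; it also suggests that Cathy's own view of the same chat would presumably have the roles swapped. It is an illustration, not AutoGen's code.

```python
# Sketch (not AutoGen code): rebuild a role-tagged history from a plain
# (speaker, text) transcript. The owner's own messages become "assistant";
# messages from the other agent become "user".

def history_for(owner, transcript):
    return [
        {"content": text, "role": "assistant" if speaker == owner else "user"}
        for speaker, text in transcript
    ]


transcript = [
    ("joe", "I'm Joe. Cathy, let's keep the jokes rolling."),
    ("cathy", "Sure thing, Joe!"),
    ("joe", "Well, Cathy, that joke might have been breezy"),
    ("cathy", "I'm glad you found it refreshing, Joe!"),
]

joe_view = history_for("joe", transcript)
cathy_view = history_for("cathy", transcript)
print([m["role"] for m in joe_view])    # ['assistant', 'user', 'assistant', 'user']
print([m["role"] for m in cathy_view])  # ['user', 'assistant', 'user', 'assistant']
```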

```python
pprint.pprint(chat_result.cost)
```

```text
{'usage_excluding_cached_inference': {'gpt-3.5-turbo-0125': {'completion_tokens': 95,
                                                             'cost': 0.000259,
                                                             'prompt_tokens': 233,
                                                             'total_tokens': 328},
                                      'total_cost': 0.000259},
```
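The cost figure can be checked by hand. The sketch below assumes prices of $0.50 per million prompt tokens and $1.50 per million completion tokens for gpt-3.5-turbo-0125; these values reproduce the printed numbers, but prices change over time, so treat them as an assumption rather than a reference.

```python
# Worked check of the cost printed above.
# Assumed prices (USD per token) for gpt-3.5-turbo-0125; they may change.
PROMPT_PRICE = 0.50 / 1_000_000
COMPLETION_PRICE = 1.50 / 1_000_000


def chat_cost(prompt_tokens, completion_tokens):
    """Cost of one chat computed from its token counts."""
    return prompt_tokens * PROMPT_PRICE + completion_tokens * COMPLETION_PRICE


prompt_tokens, completion_tokens = 233, 95
print(prompt_tokens + completion_tokens)                     # 328 total tokens
print(f"{chat_cost(prompt_tokens, completion_tokens):.6f}")  # 0.000259
```

Completion tokens cost three times as much as prompt tokens under these prices, so the 95 completion tokens account for more than half of the total.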

You can also look at the conversation's summary. By default, the last message of the conversation is used as its summary.

```python
pprint.pprint(chat_result.summary)
```

```text
("I'm glad you found it refreshing, Joe! But let me ask you this - why did the "
 'math book look sad? Because it had too many problems!')
```

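To get a more useful summary, you can choose a different summary method. For example, set summary_method to "reflection_with_llm" (summarization by the LLM) and pass a specific summary_prompt through summary_args; when the conversation ends, the LLM summarizes it according to that prompt.

```python
chat_result = joe.initiate_chat(
    cathy,
    message="I'm Joe. Cathy, let's keep the jokes rolling.",
    max_turns=2,
    summary_method="reflection_with_llm",
    # The custom prompt is passed inside summary_args
    summary_args={"summary_prompt": "Summarize the conversation"},
)

pprint.pprint(chat_result.summary)
```

```text
('The conversation is light-hearted and involves Joe and Cathy exchanging '
 'jokes about wind and math problems.')
```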
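The difference between the two summary methods can be sketched offline. In this toy model, "last_msg" simply returns the final message, while "reflection_with_llm" hands the history plus the summary prompt to an LLM, which is replaced here by a stub function. The default prompt text and the "system" role used for the prompt are placeholders of this sketch, not AutoGen's exact internals.

```python
# Toy model of the two summary methods (not AutoGen's implementation).

DEFAULT_PROMPT = "Summarize the takeaway from the conversation."  # placeholder default


def summarize(history, method="last_msg", summary_args=None, llm=None):
    summary_args = summary_args or {}
    if method == "last_msg":
        return history[-1]["content"]
    if method == "reflection_with_llm":
        prompt = summary_args.get("summary_prompt", DEFAULT_PROMPT)
        # Assumption: the prompt is appended as one extra message.
        return llm(history + [{"role": "system", "content": prompt}])
    raise ValueError(f"unknown summary method: {method!r}")


history = [
    {"role": "assistant", "content": "I'm Joe. Cathy, let's keep the jokes rolling."},
    {"role": "user", "content": "Why did the math book look sad? Because it had too many problems!"},
]


def fake_llm(messages):
    # Stub: report the prompt it received and how many chat messages it saw.
    return f"{messages[-1]['content']} ({len(messages) - 1} messages)"


print(summarize(history))
print(summarize(history, "reflection_with_llm",
                {"summary_prompt": "Summarize the conversation"}, llm=fake_llm))
```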

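1.2.4 Set a Termination Condition

If you cannot predict in which turn the conversation should end, you can set a termination condition instead. Here we pass a Boolean function as is_termination_msg that checks whether a message contains the termination phrase "I gotta go" and ends the conversation if it does. We also change Cathy's and Joe's system messages to tell them to say "I gotta go" when they want to end the conversation. As soon as either agent receives a message containing "I gotta go", the conversation ends automatically (Joe's check also accepts "Goodbye").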

```python
cathy = ConversableAgent(
    name="cathy",
    system_message=(
        "Your name is Cathy and you are a stand-up comedian. "
        "When you're ready to end the conversation, say 'I gotta go'."
    ),
    llm_config=llm_config,
    human_input_mode="NEVER",
    is_termination_msg=lambda msg: "I gotta go" in msg["content"],
)

joe = ConversableAgent(
    name="joe",
    system_message=(
        "Your name is Joe and you are a stand-up comedian. "
        "When you're ready to end the conversation, say 'I gotta go'."
    ),
    llm_config=llm_config,
    human_input_mode="NEVER",
    is_termination_msg=lambda msg: "I gotta go" in msg["content"] or "Goodbye" in msg["content"],
)

# No max_turns: the chat ends when a received message satisfies is_termination_msg
chat_result = joe.initiate_chat(
    recipient=cathy,
    message="I'm Joe. Cathy, let's keep the jokes rolling.",
)
```

```text
joe (to cathy):

I'm Joe. Cathy, let's keep the jokes rolling.

--------------------------------------------------------------------------------
cathy (to joe):

Hey there, Joe! Glad to hear you're ready for some laughs. So, did you hear about the claustrophobic astronaut? He just needed a little space!

--------------------------------------------------------------------------------
joe (to cathy):

Haha, good one! Did you hear about the mathematician who’s afraid of negative numbers? He’ll stop at nothing to avoid them!

--------------------------------------------------------------------------------
cathy (to joe):

Haha, that's a classic! How about this one: Why did the scarecrow win an award? Because he was outstanding in his field!

--------------------------------------------------------------------------------
joe (to cathy):

Haha, that's a good one too! Here's another one: Why couldn't the bicycle stand up by itself? Because it was two tired!

--------------------------------------------------------------------------------
cathy (to joe):

Haha, nice one, Joe! It seems like we're on a roll with these jokes. But hey, I gotta make sure we leave the audience wanting more, so I gotta go. Thanks for the laughs! Talk to you later!

--------------------------------------------------------------------------------
```
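How a termination predicate cuts a chat short can be sketched as a loop in which the receiver checks every incoming message before replying. This is a simplified model, not AutoGen's code; deliver and its max_messages safety cap are hypothetical. The predicate adds an `or ""` guard so that a message whose content is None does not raise.

```python
# Sketch of a termination check (not AutoGen's implementation).

def is_termination_msg(msg):
    # `or ""` guards against messages whose content is None.
    content = msg.get("content") or ""
    return "I gotta go" in content or "Goodbye" in content


def deliver(messages, max_messages=100):
    """Deliver scripted messages in order until one triggers termination.

    max_messages is a hypothetical safety cap for this sketch.
    """
    delivered = []
    for text in messages:
        msg = {"content": text}
        delivered.append(msg)
        if is_termination_msg(msg) or len(delivered) >= max_messages:
            break
    return delivered


script = [
    "I'm Joe. Cathy, let's keep the jokes rolling.",
    "Did you hear about the claustrophobic astronaut?",
    "Thanks for the laughs, so I gotta go.",
    "This line is never delivered.",
]
print(len(deliver(script)))  # 3
```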

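Finally, let's check whether the agents remember the conversation state. Have Cathy send another message, "What's the last joke we talked about?", with Joe as the recipient. This time Joe remembers the last joke, and the exchange again ends with the termination phrase.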

```python
cathy.send(message="What's last joke we talked about?", recipient=joe)
```

```text
cathy (to joe):

What's last joke we talked about?

--------------------------------------------------------------------------------
joe (to cathy):

The last joke we talked about was: Why couldn't the bicycle stand up by itself? Because it was two tired!

--------------------------------------------------------------------------------
cathy (to joe):

Thanks, Joe! That was a good one. I gotta go now. Have a great day!

--------------------------------------------------------------------------------
```
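Joe can answer because the messages from the earlier chat are still stored: send does not clear them, whereas initiate_chat clears the history by default (clear_history=True). The sketch below is a hypothetical, simplified model of per-partner message storage; AutoGen agents keep a similar record for each conversation partner, but ToyAgent is not their implementation.

```python
from collections import defaultdict


class ToyAgent:
    """Hypothetical agent that keeps one message list per partner
    (not AutoGen's ConversableAgent)."""

    def __init__(self, name):
        self.name = name
        self.chat_messages = defaultdict(list)  # partner name -> messages

    def send(self, content, recipient):
        self.chat_messages[recipient.name].append({"role": "assistant", "content": content})
        recipient.receive(content, self)

    def receive(self, content, sender):
        self.chat_messages[sender.name].append({"role": "user", "content": content})

    def last_said_to(self, partner_name):
        """Most recent message this agent sent to the given partner."""
        for msg in reversed(self.chat_messages[partner_name]):
            if msg["role"] == "assistant":
                return msg["content"]
        return None


joe, cathy = ToyAgent("joe"), ToyAgent("cathy")
joe.send("Why couldn't the bicycle stand up by itself? Because it was two tired!", cathy)
cathy.send("What's the last joke we talked about?", joe)
# Joe's stored history with Cathy still holds the joke, so it can be recalled.
print(joe.last_said_to("cathy"))
```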

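This was only a basic example of building a conversation with agents. In the following lessons, we will learn other conversation patterns and several agent design patterns, including tool use, reflection, planning, and code execution.

II. Sequential Chats: Customer Onboarding

In this lesson, you will learn how to use a multi-agent design to complete a complex task that involves multiple steps. We will build a sequence of conversations between several agents that work together to give customers a good product onboarding experience. You will also see how a human can be brought into the AI system seamlessly. Let's write the code.

Customer onboarding is the process of bringing a new customer on board. It usually includes introducing the product or service, collecting customer information, and completing any required setup, so that the new customer is successfully brought into the company's systems.

A typical onboarding flow first collects some customer information, then surveys the customer's interests, and finally engages with the customer based on the information collected. We therefore split the onboarding task into three subtasks: information collection, interest survey, and customer engagement.

2.1 Create the Agents

First, we implement these agents with AutoGen's ConversableAgent class.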

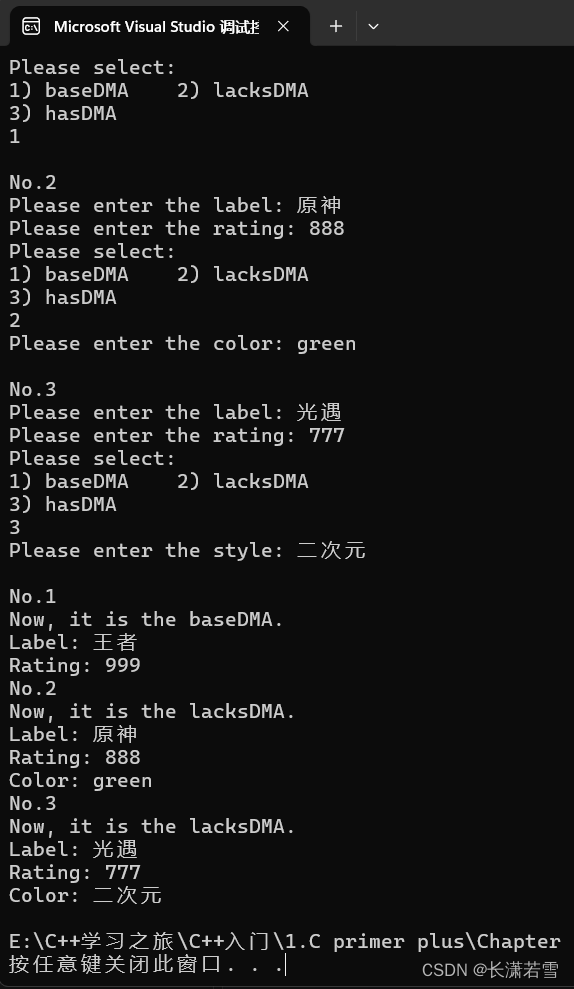

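- Personal information agent: collects the customer's personal information, which we set up through its system message. We tell the agent that it is "a helpful customer onboarding agent" and give it detailed instructions. human_input_mode is set to NEVER because this agent generates its replies with the LLM.
- Topic preference agent: set up the same way, with a system message that asks about the customer's interests or preferences; human_input_mode is also NEVER.
- Customer engagement agent: engages the customer based on the collected personal information and interests, for example with fun facts, jokes, or stories. This is also set through the system message, and the agent gets a termination condition.
- Customer proxy agent: represents the real customer. human_input_mode is set to ALWAYS so that this agent always takes its input from the real customer.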

```python
from autogen import ConversableAgent

llm_config = {"model": "gpt-3.5-turbo"}

onboarding_personal_information_agent = ConversableAgent(
    name="Onboarding Personal Information Agent",
    system_message='''You are a helpful customer onboarding agent,
    you are here to help new customers get started with our product.
    Your job is to gather customer's name and location.
    Do not ask for other information. Return 'TERMINATE'
    when you have gathered all the information.''',
    llm_config=llm_config,
    code_execution_config=False,
    human_input_mode="NEVER",
)

onboarding_topic_preference_agent = ConversableAgent(
    name="Onboarding Topic preference Agent",
    system_message='''You are a helpful customer onboarding agent,
    you are here to help new customers get started with our product.
    Your job is to gather customer's preferences on news topics.
    Do not ask for other information.
    Return 'TERMINATE' when you have gathered all the information.''',
    llm_config=llm_config,
    code_execution_config=False,
    human_input_mode="NEVER",
)

customer_engagement_agent = ConversableAgent(
    name="Customer Engagement Agent",
    system_message='''You are a helpful customer service agent
    here to provide fun for the customer based on the user's
    personal information and topic preferences.
    This could include fun facts, jokes, or interesting stories.
    Make sure to make it engaging and fun!
    Return 'TERMINATE' when you are done.''',
    llm_config=llm_config,
    code_execution_config=False,
    human_input_mode="NEVER",
    # `or ""` guards against messages whose content is None
    is_termination_msg=lambda msg: "terminate" in (msg.get("content") or "").lower(),
)

customer_proxy_agent = ConversableAgent(
    name="customer_proxy_agent",
    llm_config=False,           # no LLM: replies come from the human
    code_execution_config=False,
    human_input_mode="ALWAYS",  # always ask the real customer for input
    is_termination_msg=lambda msg: "terminate" in (msg.get("content") or "").lower(),
)
```

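2.2 Build the Sequential Chat Flow

Now we can create a sequence of chats to carry out the onboarding process. In this example, each chat is a two-agent conversation between one onboarding agent and the customer proxy agent, and for each chat we set the sender, the recipient, and the opening message.

In a sequential chat, each chat depends on what happened in the previous one, so the previous chat has to be summarized. Here we use reflection_with_llm as the summary method and set a specific summary_prompt that tells the model how to summarize and in what format to answer. This way, the customer's personal information from the first chat is summarized and passed on to the second chat.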

```python
chats = [
    {
        "sender": onboarding_personal_information_agent,
        "recipient": customer_proxy_agent,
        "message":
            "Hello, I'm here to help you get started with our product. "
            "Could you tell me your name and location?",
        "summary_method": "reflection_with_llm",
        "summary_args": {
            "summary_prompt": "Return the customer information "
                              "as a JSON object only: "
                              "{'name': '', 'location': ''}",
        },
        "max_turns": 2,
        "clear_history": True,   # start this chat with an empty history
    },
    {
        "sender": onboarding_topic_preference_agent,
        "recipient": customer_proxy_agent,
        "message":
            "Great! Could you tell me what topics you are "
            "interested in reading about?",
        "summary_method": "reflection_with_llm",
        "max_turns": 1,
        "clear_history": False,  # keep the earlier messages
    },
    {
        "sender": customer_proxy_agent,
        "recipient": customer_engagement_agent,
        "message": "Let's find something fun to read.",
        "max_turns": 1,
        "summary_method": "reflection_with_llm",
    },
]
```

The sequential chat flow is now complete. Let's start it; you will play the role of the real customer.

```python
from autogen import initiate_chats

chat_results = initiate_chats(chats)
```
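The hand-off between chats can be pictured with a toy pipeline. As in the flow above, each chat's summary becomes context for every chat after it; AutoGen passes this context along as carryover. The three functions below are hypothetical stubs standing in for the onboarding chats, and run_sequential is a sketch, not AutoGen's initiate_chats.

```python
# Toy model of a sequential chat pipeline (not AutoGen's initiate_chats).
# Assumption: each chat is a function that receives its opening message plus
# the summaries of all earlier chats ("carryover") and returns a summary.

def run_sequential(chats):
    summaries = []
    for chat in chats:
        carryover = list(summaries)  # summaries of every earlier chat
        summaries.append(chat["run"](chat["message"], carryover))
    return summaries


def collect_info(message, carryover):
    return '{"name": "Alex", "location": "China"}'


def collect_topics(message, carryover):
    return f"Customer {carryover[0]} likes reading about dogs."


def engage(message, carryover):
    return f"Fun fact chosen using {len(carryover)} earlier summaries."


results = run_sequential([
    {"message": "Could you tell me your name and location?", "run": collect_info},
    {"message": "What topics are you interested in?", "run": collect_topics},
    {"message": "Let's find something fun to read.", "run": engage},
])
for summary in results:
    print(summary)
```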

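2.3 Inspect Chat Results and Costs

After the chats finish, you can inspect the result of each chat session, for example the customer's personal information, the summary of their interests, and the engagement message. You can also check the cost of each chat, including the total cost and the prompt and completion token counts.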

```python
for chat_result in chat_results:
    print(chat_result.summary)
    print("\n")
```

```text
{
    "name": "Alex",
    "location": "China"
}

Alex from China is interested in reading about topics related to dogs.

Alex from China is interested in reading about topics related to dogs. The world's oldest known breed of domesticated dog is the Saluki, dating back to ancient Egypt. The Saluki is known for its speed and endurance, often kept as hunting dogs by pharaohs and nobles.
```
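The first summary follows the JSON format requested by summary_prompt, so it can be turned into a dict for later use. Because the template inside that prompt uses single quotes, a model might also answer with a Python-style dict, which json.loads rejects; the hypothetical helper below falls back to ast.literal_eval in that case. It is a defensive sketch, not part of AutoGen.

```python
import ast
import json


def parse_customer_info(summary):
    """Parse a summary like {"name": ..., "location": ...} into a dict."""
    text = summary.strip()
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        # Fallback for single-quoted, Python-style dicts.
        data = ast.literal_eval(text)
    if not isinstance(data, dict):
        raise ValueError("summary is not a JSON object")
    return {"name": data.get("name", ""), "location": data.get("location", "")}


print(parse_customer_info('{\n    "name": "Alex",\n    "location": "China"\n}'))
print(parse_customer_info("{'name': 'Alex', 'location': 'China'}"))
```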

```python
for chat_result in chat_results:
    print(chat_result.cost)
    print("\n")
```

```text
{'usage_including_cached_inference': {'total_cost': 0.00013250000000000002, 'gpt-3.5-turbo-0125': {'cost': 0.00013250000000000002, 'prompt_tokens': 178, 'completion_tokens': 29, 'total_tokens': 207}}

{'usage_including_cached_inference': {'total_cost': 5.25e-05, 'gpt-3.5-turbo-0125': {'cost': 5.25e-05, 'prompt_tokens': 66, 'completion_tokens': 13, 'total_tokens': 79}}

{'usage_including_cached_inference': {'total_cost': 0.000416, 'gpt-3.5-turbo-0125': {'cost': 0.000416, 'prompt_tokens': 304, 'completion_tokens': 176, 'total_tokens': 480}},
```
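To see what the whole onboarding flow cost, the per-chat figures can be summed. The dicts below copy the parts of the three cost entries printed above; with the real results, the same function would presumably be fed [r.cost for r in chat_results]. summarize_costs is a hypothetical helper.

```python
# Sum per-chat costs and token counts from cost dicts shaped like the output above.
MODEL = "gpt-3.5-turbo-0125"
KEY = "usage_including_cached_inference"

costs = [
    {KEY: {"total_cost": 0.00013250000000000002,
           MODEL: {"cost": 0.00013250000000000002, "prompt_tokens": 178,
                   "completion_tokens": 29, "total_tokens": 207}}},
    {KEY: {"total_cost": 5.25e-05,
           MODEL: {"cost": 5.25e-05, "prompt_tokens": 66,
                   "completion_tokens": 13, "total_tokens": 79}}},
    {KEY: {"total_cost": 0.000416,
           MODEL: {"cost": 0.000416, "prompt_tokens": 304,
                   "completion_tokens": 176, "total_tokens": 480}}},
]


def summarize_costs(cost_dicts, key=KEY):
    total_cost = sum(c[key]["total_cost"] for c in cost_dicts)
    # Every entry except "total_cost" is a per-model usage dict.
    total_tokens = sum(
        usage["total_tokens"]
        for c in cost_dicts
        for name, usage in c[key].items()
        if name != "total_cost"
    )
    return total_cost, total_tokens


total_cost, total_tokens = summarize_costs(costs)
print(f"{total_cost:.6f} USD, {total_tokens} tokens")  # 0.000601 USD, 766 tokens
```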

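In this lesson, we learned how to use sequential chats to complete a series of interdependent tasks. In the next lesson, you will learn how to implement the reflection agent design pattern with nested chats, where one chat or a sequence of chats is nested inside another, like an agent's inner monologue.

III. Agent Reflection: Blog Writing

In this lesson, you will learn the agent reflection framework and how to use the nested chat conversation pattern to implement a sophisticated reflection process, putting it to work to create a high-quality blog post. You will build a system in which a group of reviewer agents is nested inside a critic agent as its inner monologue, reflecting on the blog post written by a writer agent.

In other words, this is a multi-level reflection and review process. The critic agent is responsible for the overall reflection, and inside it sits a group of reviewer agents. These reviewers act as the critic's inner monologue and handle the more detailed reviews; together, their feedback forms the critic's internal thinking.

Suppose we want to write a concise but engaging blog post about DeepLearning.AI, no longer than 100 words. We start by creating a writer agent for this task.

3.1 Create the Writer Agent

Use AutoGen to build an LLM-backed assistant agent as the writer. You can then call its generate_reply method with the task as input, and the agent writes the blog post directly.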

```python
import autogen

llm_config = {"model": "gpt-3.5-turbo"}

task = """
Write a concise but engaging blogpost about
DeepLearning.AI. Make sure the blogpost is
within 100 words.
"""

writer = autogen.AssistantAgent(
    name="Writer",
    system_message=(
        "You are a writer. You write engaging and concise "
        "blogpost (with title) on given topics. You must polish your "
        "writing based on the feedback you receive and give a refined "
        "version. Only return your final work without additional comments."
    ),
    llm_config=llm_config,
)

reply = writer.generate_reply(messages=[{"content": task, "role": "user"}])
print(reply)
```

```text
Title: "Unleashing the Power of AI with DeepLearning.AI"

Discover the incredible world of artificial intelligence with DeepLearning.AI. This innovative platform offers a gateway to mastering deep learning concepts and cutting-edge technology. Whether you are a beginner or an expert, DeepLearning.AI provides comprehensive courses designed to elevate your skills. With engaging content and practical insights, you can delve into the realms of machine learning, neural networks, and much more. Embrace the future of AI and unlock endless possibilities with DeepLearning.AI by your side. Start your journey today and empower yourself with the knowledge to shape tomorrow's technology landscape.
```

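3.2 Create a Critic Agent for Reflection and Improvement

To improve the blog post, we can use reflection, an effective agent design pattern. One way to implement reflection is to bring another agent into the task, for example a critic agent that reflects on the writer's work and gives feedback.

- Use AutoGen to create a critic agent whose prompt tells it to review the writer's work and give feedback.
- Start a conversation between the critic and the writer and let them go back and forth, with the writer revising the post based on the critic's feedback.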

```python
critic = autogen.AssistantAgent(
    name="Critic",
    is_termination_msg=lambda x: x.get("content", "").find("TERMINATE") >= 0,
    llm_config=llm_config,
    system_message=(
        "You are a critic. You review the work of "
        "the writer and provide constructive "
        "feedback to help improve the quality of the content."
    ),
)

res = critic.initiate_chat(
    recipient=writer,
    message=task,
    max_turns=2,
    summary_method="last_msg",
)
```

```text
Critic (to Writer):

Write a concise but engaging blogpost about
DeepLearning.AI. Make sure the blogpost is
within 100 words.

--------------------------------------------------------------------------------
Writer (to Critic):

Title: "Unleashing the Power of AI with DeepLearning.AI"

Discover the incredible world of artificial intelligence with DeepLearning.AI. This innovative platform offers a gateway to mastering deep learning concepts and cutting-edge technology. Whether you are a beginner or an expert, DeepLearning.AI provides comprehensive courses designed to elevate your skills. With engaging content and practical insights, you can delve into the realms of machine learning, neural networks, and much more. Embrace the future of AI and unlock endless possibilities with DeepLearning.AI by your side. Start your journey today and empower yourself with the knowledge to shape tomorrow's technology landscape.

--------------------------------------------------------------------------------
Critic (to Writer):

This blogpost on DeepLearning.AI delivers an engaging and informative introduction to the platform, capturing the essence of its offerings concisely. The use of compelling language and a call-to-action is commendable in drawing readers in. However, to enhance the review, consider briefly mentioning some key features of DeepLearning.AI that set it apart from other platforms in the AI education space. Providing a specific example of a successful outcome or a testimonial from a learner could also add credibility to the post. Overall, solid groundwork, but adding concrete details could elevate the impact of the review further.

--------------------------------------------------------------------------------
Writer (to Critic):

Title: "Unleashing the Power of AI: Explore DeepLearning.AI"

Dive into the world of artificial intelligence with DeepLearning.AI, a transformative platform reshaping AI education. Offering a blend of theory and hands-on experience, it sets itself apart by providing industry insights and real-world applications. From interactive projects to expert-led tutorials, DeepLearning.AI equips learners with practical skills essential in today's AI landscape. Imagine unlocking the potential to innovate, create, and lead in AI through this platform. Join a community of passionate learners and experts shaping the future. Embrace the AI revolution with DeepLearning.AI and chart your path to success in this dynamic field.

--------------------------------------------------------------------------------
```
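The writer/critic exchange above can be reduced to a small loop: the critic opens with the task, and in each later turn it critiques the latest draft before the writer revises it. With max_turns=2 the critic therefore comments once and the writer drafts twice, and the "last_msg" summary is the writer's final draft. reflect, write, and critique are hypothetical stubs, not AutoGen code.

```python
# Toy writer-critic reflection loop (not AutoGen code).

def reflect(task, write, critique, max_turns=2):
    message, draft = task, None
    for turn in range(max_turns):
        if turn > 0:
            message = critique(draft)  # feedback on the latest draft
        draft = write(message, draft)
    return draft  # like summary_method="last_msg": the final draft


calls = []


def write(message, draft):
    calls.append("write")
    if draft is None:
        return "draft v1"
    return draft.replace("v1", "v2") + " (with concrete examples)"


def critique(draft):
    calls.append("critique")
    return f"Feedback on '{draft}': add concrete details."


final = reflect("Write a blog post about DeepLearning.AI.", write, critique)
print(final)  # draft v2 (with concrete examples)
print(calls)  # ['write', 'critique', 'write']
```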

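3.3 Create Reviewer Agents in a Nested Chat for Complex Reflection

In the previous section, the critic gave the writer feedback that helped improve the post, but the feedback was still rather generic. To build a more sophisticated reflection workflow, we nest several reviewer agents inside the critic, each reviewing a different aspect of the blog post.

3.3.1 Create the Reviewer Agents

Each reviewer is configured through its system message:

- SEO_reviewer: optimizes the content so that it ranks well in search engines and attracts traffic.
- legal_reviewer: makes sure the content is legally compliant.
- ethics_reviewer: makes sure the content is ethically sound and free of potential ethical issues.
- meta_reviewer: aggregates the feedback of all the other reviewers and gives the final suggestions.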

```python
SEO_reviewer = autogen.AssistantAgent(
    name="SEO Reviewer",
    llm_config=llm_config,
    system_message=(
        "You are an SEO reviewer, known for "
        "your ability to optimize content for search engines, "
        "ensuring that it ranks well and attracts organic traffic. "
        "Make sure your suggestion is concise (within 3 bullet points), "
        "concrete and to the point. "
        "Begin the review by stating your role."
    ),
)

legal_reviewer = autogen.AssistantAgent(
    name="Legal Reviewer",
    llm_config=llm_config,
    system_message=(
        "You are a legal reviewer, known for "
        "your ability to ensure that content is legally compliant "
        "and free from any potential legal issues. "
        "Make sure your suggestion is concise (within 3 bullet points), "
        "concrete and to the point. "
        "Begin the review by stating your role."
    ),
)

ethics_reviewer = autogen.AssistantAgent(
    name="Ethics Reviewer",
    llm_config=llm_config,
    system_message=(
        "You are an ethics reviewer, known for "
        "your ability to ensure that content is ethically sound "
        "and free from any potential ethical issues. "
        "Make sure your suggestion is concise (within 3 bullet points), "
        "concrete and to the point. "
        "Begin the review by stating your role."
    ),
)

meta_reviewer = autogen.AssistantAgent(
    name="Meta Reviewer",
    llm_config=llm_config,
    system_message=(
        "You are a meta reviewer, you aggregate and review "
        "the work of other reviewers and give a final suggestion on the content."
    ),
)
```
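The SEO, legal, and ethics system messages share the same structure and the same closing instructions. A small helper, which is hypothetical and only a refactoring suggestion, can build them from a role and an area of expertise; the check below confirms it reproduces the SEO reviewer's message exactly.

```python
# Hypothetical helper for building reviewer prompts with a shared suffix.
REVIEW_SUFFIX = (
    "Make sure your suggestion is concise (within 3 bullet points), "
    "concrete and to the point. "
    "Begin the review by stating your role."
)


def reviewer_system_message(role, expertise):
    """Build a reviewer prompt shaped like the SEO/legal/ethics messages."""
    return f"You are {role}, known for your ability to {expertise}. {REVIEW_SUFFIX}"


seo_message = reviewer_system_message(
    "an SEO reviewer",
    "optimize content for search engines, "
    "ensuring that it ranks well and attracts organic traffic",
)
print(seo_message)
```

A reviewer could then be created with system_message=reviewer_system_message(...), which keeps the shared instructions in one place if they need to change.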

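3.3.2 Configure the Review Chats

- Define a message function, reflection_message, that asks the given reviewer to review the content and give feedback according to its role.
- Set up review_chats, a list with one chat configuration per reviewer. The SEO, legal, and ethics reviewers each receive the message generated by reflection_message, give short, concrete feedback in a single turn, and have their feedback summarized as a JSON object. The meta reviewer then aggregates this feedback in a final single-turn chat.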

```python
def reflection_message(recipient, messages, sender, config):
    # recipient is the critic (the agent the nested chats are registered on)
    # and sender is the writer: return the writer's latest message for review.
    return f'''Review the following content.
            \n\n {recipient.chat_messages_for_summary(sender)[-1]['content']}'''


review_chats = [
    {
        "recipient": SEO_reviewer,
        "message": reflection_message,
        "summary_method": "reflection_with_llm",
        "summary_args": {
            "summary_prompt": "Return review as a JSON object only: "
                              "{'Reviewer': '', 'Review': ''}. Here Reviewer should be your role",
        },
        "max_turns": 1,
    },
    {
        "recipient": legal_reviewer,
        "message": reflection_message,
        "summary_method": "reflection_with_llm",
        "summary_args": {
            "summary_prompt": "Return review as a JSON object only: "
                              "{'Reviewer': '', 'Review': ''}.",
        },
        "max_turns": 1,
    },
    {
        "recipient": ethics_reviewer,
        "message": reflection_message,
        "summary_method": "reflection_with_llm",
        "summary_args": {
            "summary_prompt": "Return review as a JSON object only: "
                              "{'Reviewer': '', 'Review': ''}",
        },
        "max_turns": 1,
    },
    {
        "recipient": meta_reviewer,
        "message": "Aggregate feedback from all reviewers and give final suggestions on the writing.",
        "max_turns": 1,
    },
]
```

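Each chat in the list names a reviewer as its recipient but no sender. That is because we will register this chat list on the critic later, and the critic then acts as the sender by default, so there is no need to specify it explicitly.

We also need the right opening message so that the nested reviewers can see the content to review. A common approach is to pull the content automatically from the chat messages, which is why we defined the reflection_message function: it takes the last message the critic received from the writer (the latest draft) and uses it as the opening message of each review chat. The meta reviewer's fixed message is enough because the summaries of the earlier review chats are carried over to it.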
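How the review chats get their input can be modelled offline. The sketch assumes, as the signature of reflection_message suggests, that a callable message is invoked with (recipient, messages, sender, config), where recipient is the critic and sender is the writer; each review chat returns a summary, and later chats receive the earlier summaries as carryover. ToyCritic, run_review_chats, make_reviewer, and this simplified reflection_message are hypothetical, not AutoGen's implementation.

```python
# Toy model of how the nested review chats are fed (not AutoGen's code).

class ToyCritic:
    def __init__(self):
        self.history = {}  # partner name -> list of messages

    def chat_messages_for_summary(self, partner):
        return self.history[partner]


def reflection_message(recipient, messages, sender, config):
    # Simplified copy of the function above: review the writer's latest message.
    return "Review the following content.\n\n" + recipient.chat_messages_for_summary(sender)[-1]["content"]


def run_review_chats(queue, critic, writer, messages):
    summaries = []
    for chat in queue:
        message = chat["message"]
        if callable(message):
            message = message(critic, messages, writer, None)
        # Each reviewer sees its opening message plus earlier summaries.
        summaries.append(chat["reviewer"](message, list(summaries)))
    return summaries


def make_reviewer(role):
    return lambda message, carryover: f"{role}: reviewed -> {message.splitlines()[-1]}"


critic = ToyCritic()
critic.history["Writer"] = [{"role": "user", "content": "Title: Unleashing the Power of AI"}]

queue = [
    {"reviewer": make_reviewer("SEO Reviewer"), "message": reflection_message},
    {"reviewer": make_reviewer("Legal Reviewer"), "message": reflection_message},
    {"reviewer": make_reviewer("Ethics Reviewer"), "message": reflection_message},
    {"reviewer": lambda message, carryover: f"Final suggestions based on {len(carryover)} reviews",
     "message": "Aggregate feedback from all reviewers and give final suggestions on the writing."},
]
results = run_review_chats(queue, critic, "Writer", critic.history["Writer"])
for line in results:
    print(line)
```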

3.3.3 Register the Nested Chats and Start the Chat

```python
critic.register_nested_chats(
    review_chats,
    trigger=writer,
)

res = critic.initiate_chat(
    recipient=writer,
    message=task,
    max_turns=2,
    summary_method="last_msg",
)
```

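- critic.register_nested_chats registers the review chat configurations on the critic, with writer as the trigger: whenever the critic receives a message from the writer, it automatically runs these nested review chats.
- critic.initiate_chat starts the conversation between the critic and the writer with the writing task, allowing at most 2 turns. The summary method "last_msg" uses the content of the last message as the summary.

With this multi-level agent system, the writer first drafts a blog post, then the critic triggers the nested review process covering SEO, legal, and ethical aspects. Finally, the meta reviewer combines all the feedback and gives the final suggestions for improvement. This structured review process helps improve both the quality and the compliance of the content.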
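The trigger mechanism can be pictured with a toy dispatcher. The point it models is that the critic's reply to the writer is the result of the nested chats (here, the last nested chat's output, playing the meta reviewer's role) rather than a reply from the critic's own LLM, while messages from other senders fall back to the normal reply. ToyNestedAgent and its register_nested method are hypothetical and simplified; they are not AutoGen's register_nested_chats implementation.

```python
# Hypothetical, minimal model of a trigger-based nested chat (not AutoGen code).

class ToyNestedAgent:
    def __init__(self, default_reply):
        self.default_reply = default_reply
        self.nested = []  # (trigger name, function running the nested chats)

    def register_nested(self, run_nested, trigger):
        self.nested.append((trigger, run_nested))

    def reply(self, message, sender):
        for trigger, run_nested in self.nested:
            if trigger == sender:
                summaries = run_nested(message)
                return summaries[-1]  # output of the last nested chat
        return self.default_reply(message)


critic = ToyNestedAgent(default_reply=lambda msg: "generic feedback")
critic.register_nested(
    lambda draft: ["SEO notes", "legal notes", "ethics notes", f"meta review of: {draft}"],
    trigger="Writer",
)
print(critic.reply("draft v1", sender="Writer"))        # meta review of: draft v1
print(critic.reply("hello", sender="Someone else"))     # generic feedback
```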

```python
print(res.summary)  # the final, revised blog post
```

```text
Title: "Empower Your Future: Exploring DeepLearning.AI"

Unleash the potential of artificial intelligence through DeepLearning.AI, your gateway to mastering cutting-edge technology. From beginners to experts, discover comprehensive courses on deep learning, machine learning, and more. Shape tomorrow's technology landscape with engaging content and practical insights at your fingertips. Kickstart your journey today with DeepLearning.AI and empower yourself with the knowledge to drive innovation. Optimize your AI skills, enhance user engagement, and stay transparent with disclosed affiliations. Embrace the future with confidence, supported by accurate data and real-world examples. Dive into DeepLearning.AI and unlock endless possibilities for growth.
```

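In this lesson, we learned how to implement the reflection agent design pattern with nested chats, in particular by using a dedicated workflow for detailed reflection. In the next lesson, we will go further and use nested chats to enhance agent design patterns.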
