〇、前言

相比YOLOv5/v7,除了YOLOv5n外,YOLOv7tiny的参数量较小,效果往往也相较YOLOv5n高上不少,又近来博主打算改进yolov7-tiny文件,但苦于其池化层部位是直接写在yaml中的,修改极为不便,因此对池化层做简化处理。

本次修改的目标是将yaml中池化层的代码修改为单层代码形式来代表,便于以后做替换、改进工作,因此,需要保证改进前后的参数量、GFLOPS相同。

此外,可能有小伙伴会疑问,为何博主不推荐把所有部位都简化?这是因为某些修改常常会修改于1x1的卷积,而不修改3x3的卷积;同理,亦有修改3x3的卷积,而不修改1x1的卷积的,除此之外,一篇结构中,并不是所有部位都用一种激活函数就好,很多时候需要不同部位采用不同激活函数,既然YOLOv7-tiny原版也保留该函数,那么博主也进行保留。同时,这么做更适合初学者理解,更为直观。所以博主更推荐大家只修改池化层的代码,这样也便于后续更细化地进行改进网络结构的工作。

接下来博主将会更新YOLOv5/v7的池化层改进代码,预计每篇修改1-2个池化层,尽量更新好目前常见且有效的代码,小伙伴们可关注博主后,以此篇代码为基础,进行进一步修改工作。

一、导言

池化层(Pooling Layer)是卷积神经网络(Convolutional Neural Networks, CNNs)中的一个重要组成部分,主要用于减少输入数据的空间尺寸(例如,图像的宽度和高度),同时保持其最重要的信息。这一过程称为下采样(downsampling)或者降维。池化操作通过提取特征图(feature maps)的摘要信息来实现,这些摘要信息通常是原特征的统计量,如最大值、平均值或其他聚合方式的结果。

池化层在目标检测中的作用至关重要,主要体现在以下几个方面:

-

位置不变性:池化操作(如最大池化或平均池化)可以降低网络对输入图像中特征位置的敏感度。这意味着即使目标在图像中的位置有所变动,池化层依然能够帮助网络捕捉到这些目标,增强模型的空间不变性。

-

特征降维:通过将输入特征图划分成多个小区域并对其进行聚合(如取最大值或平均值),池化层能够显著减少数据的维度,从而减少计算量和内存需求,加快训练速度,同时也降低了过拟合的风险。

-

特征选择:特别是在最大池化中,网络会选取每个区域中最显著的特征,这有助于提取图像中的关键信息,忽略不重要的细节,使得模型更加关注目标的显著特征。

-

固定大小的输出:在某些目标检测框架中,如Fast R-CNN中使用的ROI Pooling,池化层能够将不同大小和形状的候选区域(Regions of Interest, RoIs)转化为固定大小的特征图,这对于后续的全连接层处理是非常必要的,因为全连接层通常需要固定尺寸的输入。

-

多尺度特征:空间金字塔池化(Spatial Pyramid Pooling, SPP)等技术可以在不同尺度上进行池化,从而在单个模型中整合多尺度特征,这对于检测不同大小的目标尤为重要。

综上所述,池化层不仅提高了模型的效率和泛化能力,也为处理目标检测任务中的核心挑战提供了有效的解决方案。

二、准备工作

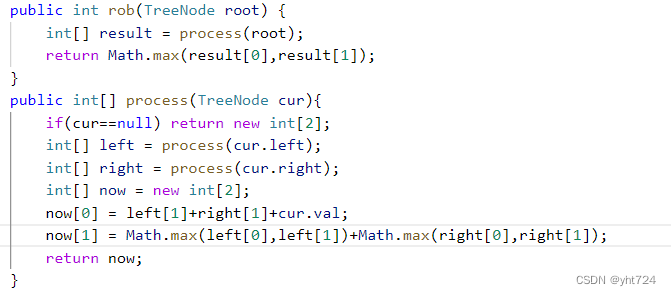

首先在YOLOv7的models文件夹下导入如下代码

##### yolov7-tiny-simplify mode #####

class v7tiny_SPP(nn.Module):

def __init__(self, c1, c2, n=1, shortcut=False, g=1, e=0.5, k=(5, 9, 13), act=True):

super(v7tiny_SPP, self).__init__()

c_ = int(2 * c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c1, c_, 1, 1)

self.m = nn.ModuleList([nn.MaxPool2d(kernel_size=x, stride=1, padding=x // 2) for x in k])

self.cv3 = Conv(4 * c_, c_, 1, 1)

self.cv4 = Conv(2 * c_, c2, 1, 1)

#self.act = nn.LeakyReLU() if act is True else (act if isinstance(act, nn.Module) else nn.Identity())

def forward(self, x):

x1 = self.cv1(x)

x2 = self.cv2(x)

x3 = torch.cat([x2] + [m(x2) for m in self.m], 1)

x4 = self.cv3(x3)

x5 = torch.cat((x1, x4), 1)

return self.cv4(x5)

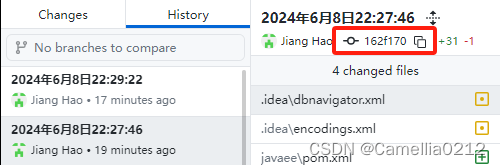

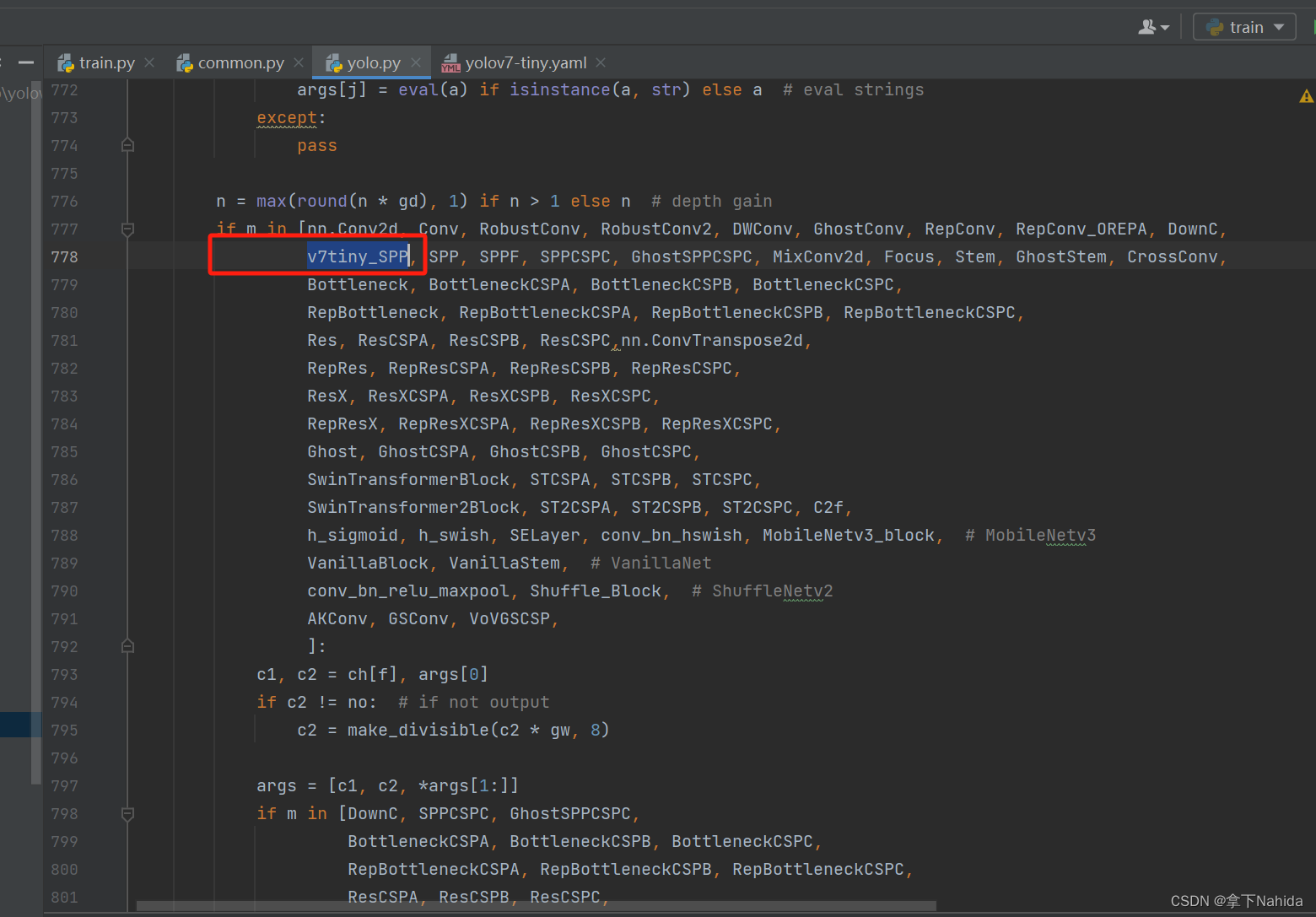

##### yolov7-tiny-simplify mode end #####其次在在YOLOv7项目文件下的models/yolo.py中搜索def parse_model(d, ch)

定位到如下行添加以下代码

v7tiny_SPP

三、YOLOv7-tiny改进工作

完成二后,在YOLOv7项目文件下的models文件夹下创建新的文件yolov7-tinynew.yaml,导入如下代码。

# parameters

nc: 80 # number of classes

depth_multiple: 1.0 # model depth multiple

width_multiple: 1.0 # layer channel multiple

# anchors

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# yolov7-tiny backbone

backbone:

# [from, number, module, args] c2, k=1, s=1, p=None, g=1, act=True

[[-1, 1, Conv, [32, 3, 2, None, 1, nn.LeakyReLU(0.1)]], # 0-P1/2

[-1, 1, Conv, [64, 3, 2, None, 1, nn.LeakyReLU(0.1)]], # 1-P2/4

[-1, 1, Conv, [32, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-2, 1, Conv, [32, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, Conv, [32, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, Conv, [32, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[[-1, -2, -3, -4], 1, Concat, [1]],

[-1, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # 7

[-1, 1, MP, []], # 8-P3/8

[-1, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-2, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[[-1, -2, -3, -4], 1, Concat, [1]],

[-1, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # 14

[-1, 1, MP, []], # 15-P4/16

[-1, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-2, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, Conv, [128, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, Conv, [128, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[[-1, -2, -3, -4], 1, Concat, [1]],

[-1, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # 21

[-1, 1, MP, []], # 22-P5/32

[-1, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-2, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, Conv, [256, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, Conv, [256, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[[-1, -2, -3, -4], 1, Concat, [1]],

[-1, 1, Conv, [512, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # 28

]

# yolov7-tiny head

head:

[[-1, 1, v7tiny_SPP, [256]], # 29

[-1, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[21, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # route backbone P4

[[-1, -2], 1, Concat, [1]],

[-1, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-2, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[[-1, -2, -3, -4], 1, Concat, [1]],

[-1, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # 39

[-1, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[14, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # route backbone P3

[[-1, -2], 1, Concat, [1]],

[-1, 1, Conv, [32, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-2, 1, Conv, [32, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, Conv, [32, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, Conv, [32, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[[-1, -2, -3, -4], 1, Concat, [1]],

[-1, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # 49

[-1, 1, Conv, [128, 3, 2, None, 1, nn.LeakyReLU(0.1)]],

[[-1, 39], 1, Concat, [1]],

[-1, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-2, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[[-1, -2, -3, -4], 1, Concat, [1]],

[-1, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # 57

[-1, 1, Conv, [256, 3, 2, None, 1, nn.LeakyReLU(0.1)]],

[[-1, 29], 1, Concat, [1]],

[-1, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-2, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, Conv, [128, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[-1, 1, Conv, [128, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[[-1, -2, -3, -4], 1, Concat, [1]],

[-1, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]], # 65

[49, 1, Conv, [128, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[57, 1, Conv, [256, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[65, 1, Conv, [512, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

[[66, 67, 68], 1, IDetect, [nc, anchors]], # Detect(P3, P4, P5)

]

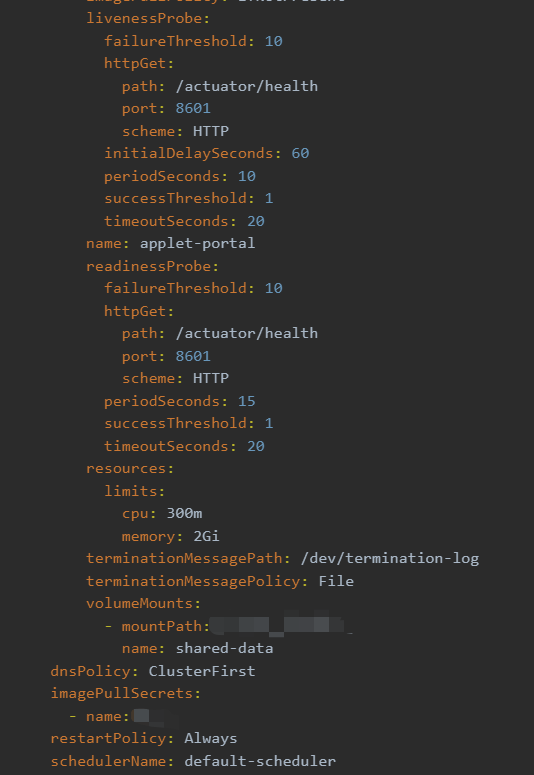

运行后,打印如下代码

from n params module arguments

0 -1 1 928 models.common.Conv [3, 32, 3, 2, None, 1, LeakyReLU(negative_slope=0.1)]

1 -1 1 18560 models.common.Conv [32, 64, 3, 2, None, 1, LeakyReLU(negative_slope=0.1)]

2 -1 1 2112 models.common.Conv [64, 32, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

3 -2 1 2112 models.common.Conv [64, 32, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

4 -1 1 9280 models.common.Conv [32, 32, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

5 -1 1 9280 models.common.Conv [32, 32, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

6 [-1, -2, -3, -4] 1 0 models.common.Concat [1]

7 -1 1 8320 models.common.Conv [128, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

8 -1 1 0 models.common.MP []

9 -1 1 4224 models.common.Conv [64, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

10 -2 1 4224 models.common.Conv [64, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

11 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

12 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

13 [-1, -2, -3, -4] 1 0 models.common.Concat [1]

14 -1 1 33024 models.common.Conv [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

15 -1 1 0 models.common.MP []

16 -1 1 16640 models.common.Conv [128, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

17 -2 1 16640 models.common.Conv [128, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

18 -1 1 147712 models.common.Conv [128, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

19 -1 1 147712 models.common.Conv [128, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

20 [-1, -2, -3, -4] 1 0 models.common.Concat [1]

21 -1 1 131584 models.common.Conv [512, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

22 -1 1 0 models.common.MP []

23 -1 1 66048 models.common.Conv [256, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

24 -2 1 66048 models.common.Conv [256, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

25 -1 1 590336 models.common.Conv [256, 256, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

26 -1 1 590336 models.common.Conv [256, 256, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

27 [-1, -2, -3, -4] 1 0 models.common.Concat [1]

28 -1 1 525312 models.common.Conv [1024, 512, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

29 -1 1 657408 models.common.v7tiny_SPP [512, 256]

30 -1 1 33024 models.common.Conv [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

31 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

32 21 1 33024 models.common.Conv [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

33 [-1, -2] 1 0 models.common.Concat [1]

34 -1 1 16512 models.common.Conv [256, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

35 -2 1 16512 models.common.Conv [256, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

36 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

37 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

38 [-1, -2, -3, -4] 1 0 models.common.Concat [1]

39 -1 1 33024 models.common.Conv [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

40 -1 1 8320 models.common.Conv [128, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

41 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

42 14 1 8320 models.common.Conv [128, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

43 [-1, -2] 1 0 models.common.Concat [1]

44 -1 1 4160 models.common.Conv [128, 32, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

45 -2 1 4160 models.common.Conv [128, 32, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

46 -1 1 9280 models.common.Conv [32, 32, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

47 -1 1 9280 models.common.Conv [32, 32, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

48 [-1, -2, -3, -4] 1 0 models.common.Concat [1]

49 -1 1 8320 models.common.Conv [128, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

50 -1 1 73984 models.common.Conv [64, 128, 3, 2, None, 1, LeakyReLU(negative_slope=0.1)]

51 [-1, 39] 1 0 models.common.Concat [1]

52 -1 1 16512 models.common.Conv [256, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

53 -2 1 16512 models.common.Conv [256, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

54 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

55 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

56 [-1, -2, -3, -4] 1 0 models.common.Concat [1]

57 -1 1 33024 models.common.Conv [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

58 -1 1 295424 models.common.Conv [128, 256, 3, 2, None, 1, LeakyReLU(negative_slope=0.1)]

59 [-1, 29] 1 0 models.common.Concat [1]

60 -1 1 65792 models.common.Conv [512, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

61 -2 1 65792 models.common.Conv [512, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

62 -1 1 147712 models.common.Conv [128, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

63 -1 1 147712 models.common.Conv [128, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

64 [-1, -2, -3, -4] 1 0 models.common.Concat [1]

65 -1 1 131584 models.common.Conv [512, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

66 49 1 73984 models.common.Conv [64, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

67 57 1 295424 models.common.Conv [128, 256, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

68 65 1 1180672 models.common.Conv [256, 512, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

69 [66, 67, 68] 1 17132 models.yolo.IDetect [1, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]], [128, 256, 512]]

Model Summary: 261 layers, 6014988 parameters, 6014988 gradients, 13.2 GFLOPS再和原版打印的结构对比

from n params module arguments

0 -1 1 928 models.common.Conv [3, 32, 3, 2, None, 1, LeakyReLU(negative_slope=0.1)]

1 -1 1 18560 models.common.Conv [32, 64, 3, 2, None, 1, LeakyReLU(negative_slope=0.1)]

2 -1 1 2112 models.common.Conv [64, 32, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

3 -2 1 2112 models.common.Conv [64, 32, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

4 -1 1 9280 models.common.Conv [32, 32, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

5 -1 1 9280 models.common.Conv [32, 32, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

6 [-1, -2, -3, -4] 1 0 models.common.Concat [1]

7 -1 1 8320 models.common.Conv [128, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

8 -1 1 0 models.common.MP []

9 -1 1 4224 models.common.Conv [64, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

10 -2 1 4224 models.common.Conv [64, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

11 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

12 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

13 [-1, -2, -3, -4] 1 0 models.common.Concat [1]

14 -1 1 33024 models.common.Conv [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

15 -1 1 0 models.common.MP []

16 -1 1 16640 models.common.Conv [128, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

17 -2 1 16640 models.common.Conv [128, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

18 -1 1 147712 models.common.Conv [128, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

19 -1 1 147712 models.common.Conv [128, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

20 [-1, -2, -3, -4] 1 0 models.common.Concat [1]

21 -1 1 131584 models.common.Conv [512, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

22 -1 1 0 models.common.MP []

23 -1 1 66048 models.common.Conv [256, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

24 -2 1 66048 models.common.Conv [256, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

25 -1 1 590336 models.common.Conv [256, 256, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

26 -1 1 590336 models.common.Conv [256, 256, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

27 [-1, -2, -3, -4] 1 0 models.common.Concat [1]

28 -1 1 525312 models.common.Conv [1024, 512, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

29 -1 1 131584 models.common.Conv [512, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

30 -2 1 131584 models.common.Conv [512, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

31 -1 1 0 models.common.SP [5]

32 -2 1 0 models.common.SP [9]

33 -3 1 0 models.common.SP [13]

34 [-1, -2, -3, -4] 1 0 models.common.Concat [1]

35 -1 1 262656 models.common.Conv [1024, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

36 [-1, -7] 1 0 models.common.Concat [1]

37 -1 1 131584 models.common.Conv [512, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

38 -1 1 33024 models.common.Conv [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

39 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

40 21 1 33024 models.common.Conv [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

41 [-1, -2] 1 0 models.common.Concat [1]

42 -1 1 16512 models.common.Conv [256, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

43 -2 1 16512 models.common.Conv [256, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

44 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

45 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

46 [-1, -2, -3, -4] 1 0 models.common.Concat [1]

47 -1 1 33024 models.common.Conv [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

48 -1 1 8320 models.common.Conv [128, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

49 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

50 14 1 8320 models.common.Conv [128, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

51 [-1, -2] 1 0 models.common.Concat [1]

52 -1 1 4160 models.common.Conv [128, 32, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

53 -2 1 4160 models.common.Conv [128, 32, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

54 -1 1 9280 models.common.Conv [32, 32, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

55 -1 1 9280 models.common.Conv [32, 32, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

56 [-1, -2, -3, -4] 1 0 models.common.Concat [1]

57 -1 1 8320 models.common.Conv [128, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

58 -1 1 73984 models.common.Conv [64, 128, 3, 2, None, 1, LeakyReLU(negative_slope=0.1)]

59 [-1, 47] 1 0 models.common.Concat [1]

60 -1 1 16512 models.common.Conv [256, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

61 -2 1 16512 models.common.Conv [256, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

62 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

63 -1 1 36992 models.common.Conv [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

64 [-1, -2, -3, -4] 1 0 models.common.Concat [1]

65 -1 1 33024 models.common.Conv [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

66 -1 1 295424 models.common.Conv [128, 256, 3, 2, None, 1, LeakyReLU(negative_slope=0.1)]

67 [-1, 37] 1 0 models.common.Concat [1]

68 -1 1 65792 models.common.Conv [512, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

69 -2 1 65792 models.common.Conv [512, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

70 -1 1 147712 models.common.Conv [128, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

71 -1 1 147712 models.common.Conv [128, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

72 [-1, -2, -3, -4] 1 0 models.common.Concat [1]

73 -1 1 131584 models.common.Conv [512, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]

74 57 1 73984 models.common.Conv [64, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

75 65 1 295424 models.common.Conv [128, 256, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

76 73 1 1180672 models.common.Conv [256, 512, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]

77 [74, 75, 76] 1 17132 models.yolo.IDetect [1, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]], [128, 256, 512]]

Model Summary: 263 layers, 6014988 parameters, 6014988 gradients, 13.2 GFLOPS代码参数量一致,简化改进成功。

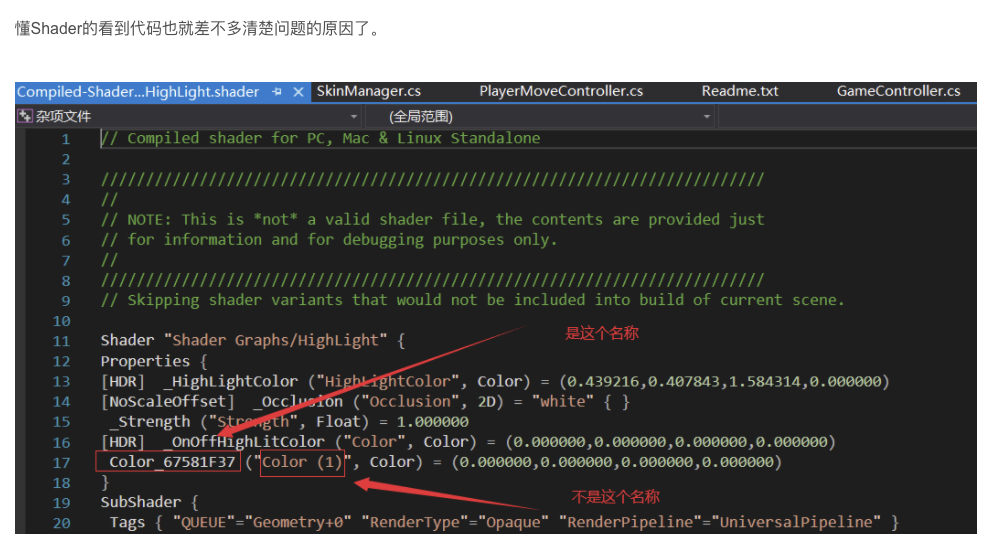

四、注意

若要改进你的网络结构的激活函数部分,请注意第二点中common修改的 v7tiny_SPP 代码部分的激活函数是根据本地文件common.py的Conv的激活函数。

具体如何修改激活函数部分详见我的博客:

【零基础保姆级教程】零基础如何快速进入YOLOv5/v7的科研状态?-CSDN博客

的二、2、部分!!!

同时,接下来我的博客的池化层修改大多以此为准,望周知。

更多文章产出中,主打简洁和准确,欢迎关注我,共同探讨!