Contents

💥1 Overview

📚2 Results

🌈3 Matlab Implementation

🎉4 References

💥1 Overview

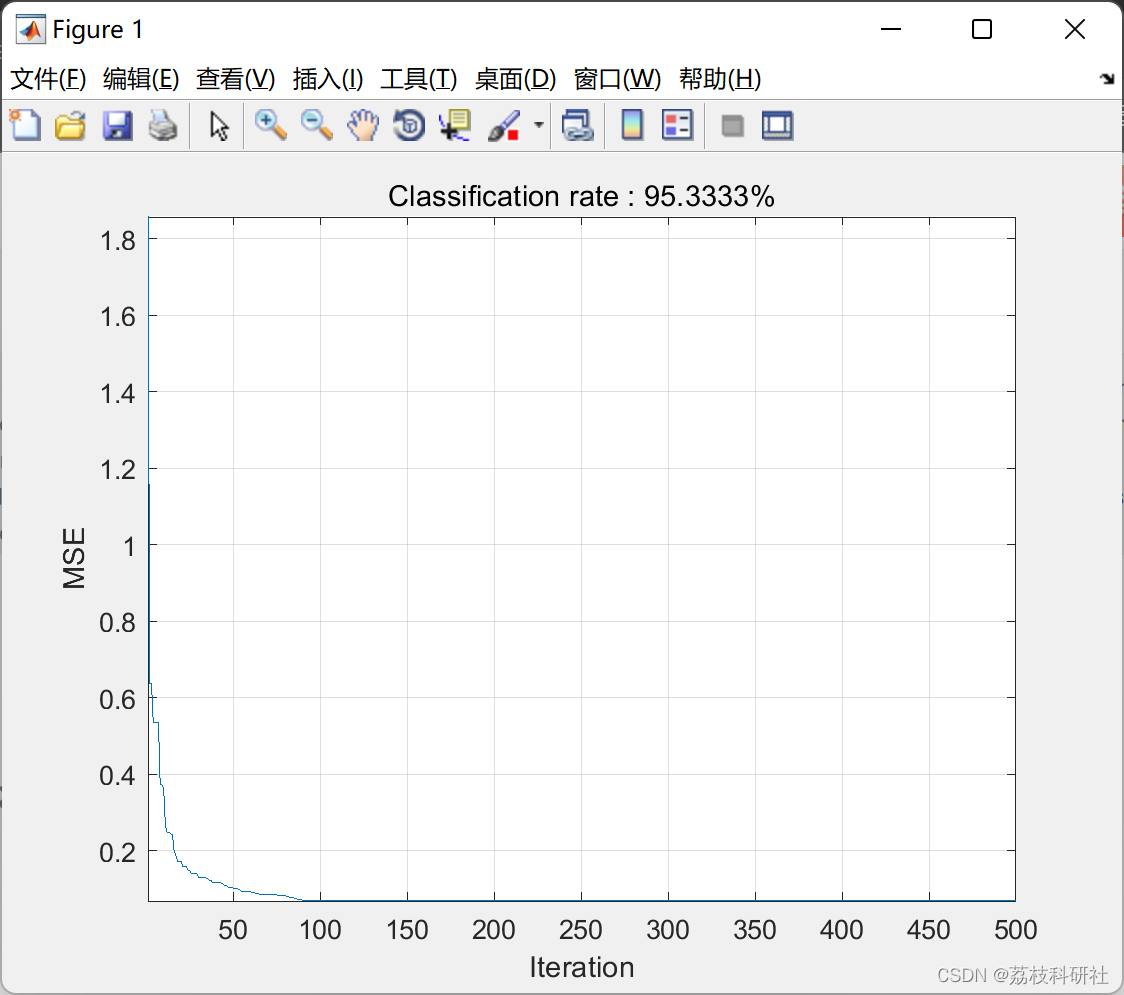

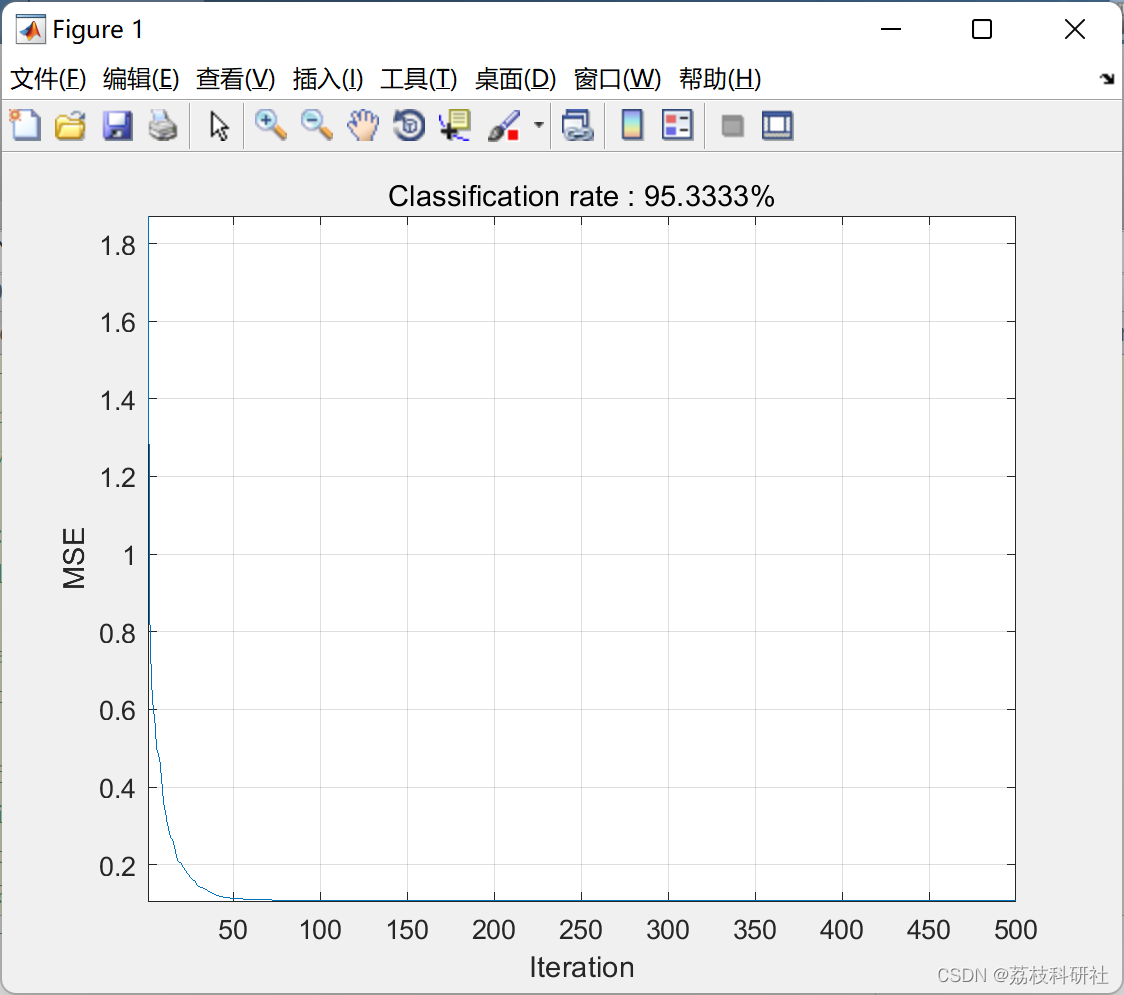

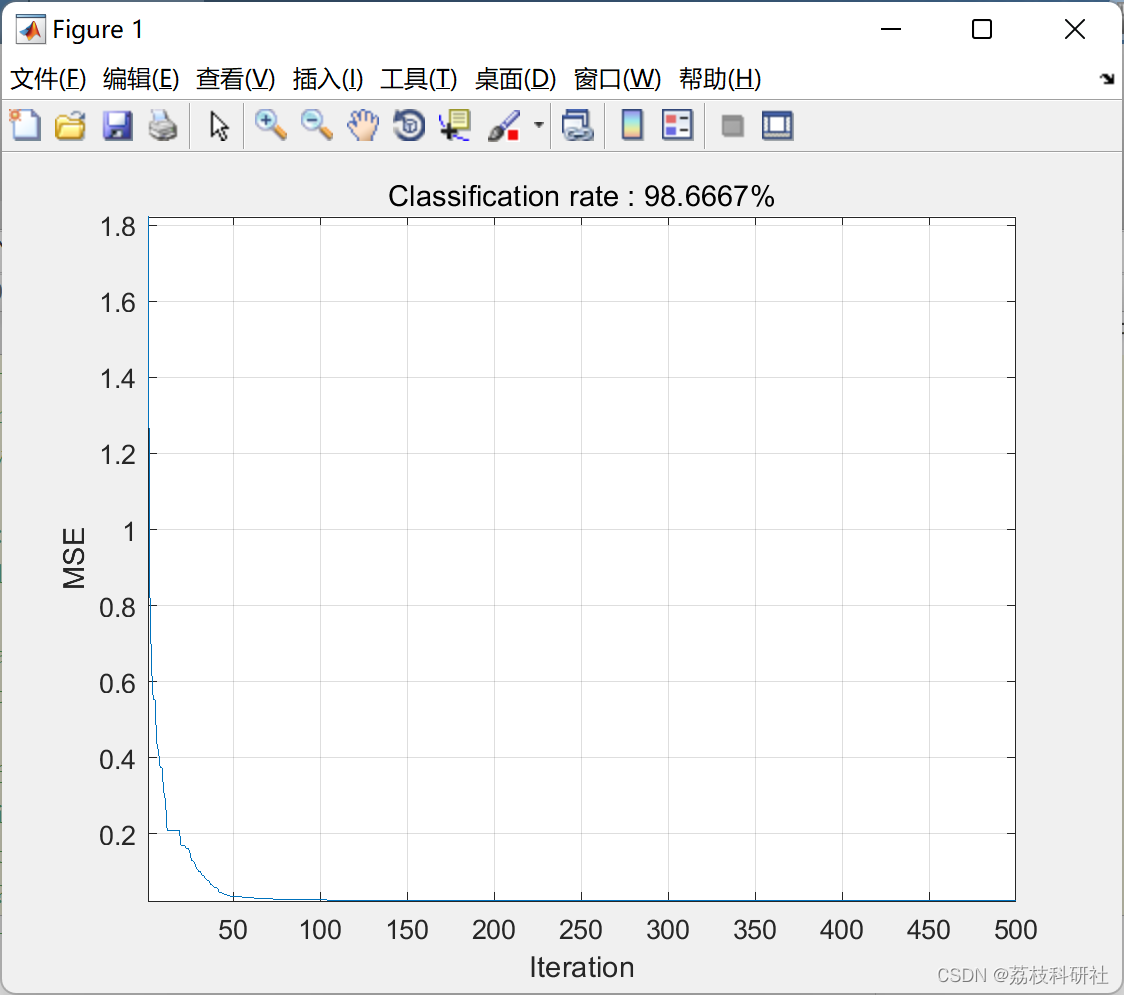

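This post uses PSOGSA, a hybrid of particle swarm optimization (PSO) and the gravitational search algorithm (GSA), to train a feedforward neural network (FNN). The trained network is applied to the well-known Iris dataset.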
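To make the hybrid idea concrete, here is a minimal sketch of a PSOGSA-style update as it is commonly described: the GSA step supplies an acceleration for each agent, and a PSO-style term pulls every agent toward the best position found so far. This is an illustration under assumptions, not the code used in this post. The names `w`, `c1`, `c2`, `posDemo`, `velDemo`, `accDemo` and `gBest`, and every numeric value, are hypothetical.

```matlab
% Illustrative PSOGSA-style update for a tiny swarm (names and values are assumptions).
noAgents = 3;  nDims = 2;
w  = 0.5;                                  % inertia weight (hypothetical value)
c1 = 0.5;  c2 = 1.5;                       % weighting coefficients (hypothetical values)

posDemo = [0 0; 1 1; 2 0];                 % current positions, one agent per row
velDemo = zeros(noAgents, nDims);          % current velocities
accDemo = [0.1 0.2; -0.1 0.0; 0.0 0.3];    % accelerations that a GSA step would supply
gBest   = [1 2];                           % best position found so far

r1 = rand(noAgents, nDims);                % fresh random factors in (0,1)
r2 = rand(noAgents, nDims);

% GSA contributes the acceleration term, PSO the attraction toward gBest.
velDemo = w*velDemo + c1*r1.*accDemo + c2*r2.*(repmat(gBest, noAgents, 1) - posDemo);
posDemo = posDemo + velDemo;
disp(posDemo)
```

The acceleration term keeps the local interaction between agents, while the `gBest` term adds the global memory that plain GSA lacks.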

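Source literature:

Skin-color recognition is increasingly used in content-based analysis and human-computer interaction, so an effective method for segmenting skin pixels helps address these problems. The paper proposes a hybrid PSOGSA-ANN as a new training method for feedforward neural networks (FNNs) and examines its efficiency on the skin classification problem. The proposed color segmentation algorithm works directly in the RGB color space, with no color space conversion. The experimental results indicate that the proposed method can significantly improve the performance of the MLP on skin-color recognition.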

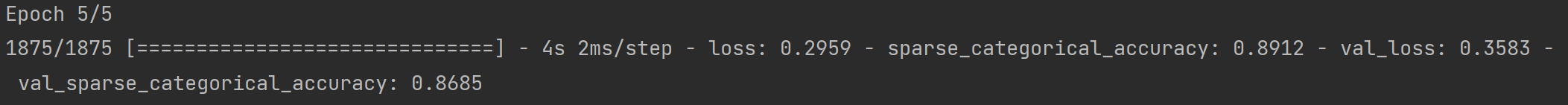

📚2 Results

Partial code:

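% Assumes iris is a 150x5 matrix already in the workspace: four features plus a class label in column 5

x=sortrows(iris,2); % Sort the samples by the second column

H2=x(1:150,1); % Feature columns

H3=x(1:150,2);

H4=x(1:150,3);

H5=x(1:150,4);

T=x(1:150,5); % Class labels

% Scale each feature to [-1,1]; mapminmax works on rows, hence the transposes

H2=H2';

[xf,PS] = mapminmax(H2);

I2(:,1)=xf;

H3=H3';

[xf,PS2] = mapminmax(H3);

I2(:,2)=xf;

H4=H4';

[xf,PS3] = mapminmax(H4);

I2(:,3)=xf;

H5=H5';

[xf,PS4] = mapminmax(H5);

I2(:,4)=xf;

Thelp=T; % Keep a copy of the unscaled labels

T=T';

[yf,PS5]= mapminmax(T); % Scale the labels to [-1,1]

T=yf;

T=T';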
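The preprocessing above relies on `mapminmax`, which by default rescales each row linearly to [-1, 1]. The sketch below reproduces that rule with base functions only, which also shows why the fitness loop later compares `T(pp)` with -1, 0 and 1. It assumes the class labels are coded 1, 2, 3. The variable names and sample values are hypothetical.

```matlab
% Min-max scaling of one feature row to [-1, 1] without toolbox functions.
featDemo = [4.3 5.8 7.9];                        % hypothetical raw feature values
lo = min(featDemo);  hi = max(featDemo);
scaledDemo = 2*(featDemo - lo)/(hi - lo) - 1;    % smallest value -> -1, largest -> 1

% The same rule maps class labels 1, 2, 3 onto -1, 0, 1 (assumed label coding).
labelDemo   = [1 2 3];
labelScaled = 2*(labelDemo - min(labelDemo))/(max(labelDemo) - min(labelDemo)) - 1;
disp(labelScaled)
```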

%% FNN initial parameters

HiddenNodes=15; %Number of hidden nodes

Dim=8*HiddenNodes+3; %Dimension of masses in GSA

TrainingNO=150; %Number of training samples
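The value `8*HiddenNodes+3` follows from counting the parameters of a network with 4 inputs, `HiddenNodes` hidden units and 3 outputs. The sketch below works through that arithmetic and splits one candidate vector the same way the `ww` and `bb` loops in the listing do. The names ending in `Demo` are hypothetical, and the ordering of individual weights inside the weight block depends on `My_FNN`, which is not shown here.

```matlab
% How a position vector of length 8*H+3 splits for a 4-H-3 network (sketch).
nIn = 4;  nHid = 15;  nOut = 3;
nWeights = nIn*nHid + nHid*nOut;           % 60 + 45 = 105 = 7*nHid
nBiases  = nHid + nOut;                    % 15 + 3  = 18  = nHid + 3
dimCheck = nWeights + nBiases;             % 123 = 8*nHid + 3

agentDemo   = rand(1, dimCheck);           % one candidate solution (hypothetical)
weightsDemo = agentDemo(1:nWeights);       % first 7*nHid entries
biasesDemo  = agentDemo(nWeights+1:end);   % remaining nHid+3 entries
fprintf('%d weights, %d biases, Dim = %d\n', nWeights, numel(biasesDemo), dimCheck);
```

Slicing with index ranges does the same job as the element-by-element copy loops and avoids growing `Weights` and `Biases` one entry at a time.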

%% GSA

%Configurations and initializations

noP = 30; %Number of masses

Max_iteration = 500; %Maximum number of iterations

CurrentFitness =zeros(noP,1);

G0=1; %Gravitational constant

CurrentPosition = rand(noP,Dim); %Position vector

velocity = .3*randn(noP,Dim) ; %Velocity vector

acceleration=zeros(noP,Dim); %Acceleration vector

mass=zeros(1,noP); %Mass vector

force=zeros(noP,Dim);%Force vector

%Vectors for saving the location and MSE of the best mass

BestMSE=inf;

BestMass=zeros(1,Dim);

ConvergenceCurve=zeros(1,Max_iteration); %Convergence vector

%Main loop

Iteration = 0 ;

while ( Iteration < Max_iteration )

Iteration = Iteration + 1;

G=G0*exp(-20*Iteration/Max_iteration);

force=zeros(noP,Dim);

mass=zeros(1,noP);

acceleration=zeros(noP,Dim);

%Calculate MSEs

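for i = 1:noP

% Unpack mass i: the first 7*HiddenNodes entries are weights, the remaining HiddenNodes+3 are biases

for ww=1:(7*HiddenNodes)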

Weights(ww)=CurrentPosition(i,ww);

end

for bb=7*HiddenNodes+1:Dim

Biases(bb-(7*HiddenNodes))=CurrentPosition(i,bb);

end

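fitness=0;

for pp=1:TrainingNO

% Forward pass through the 4-input, 3-output network; My_FNN is not shown in this excerpt

actualvalue=My_FNN(4,HiddenNodes,3,Weights,Biases,I2(pp,1),I2(pp,2), I2(pp,3),I2(pp,4));

% Targets are one-hot: the scaled label -1, 0 or 1 selects output 1, 2 or 3

if(T(pp)==-1)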

fitness=fitness+(1-actualvalue(1))^2;

fitness=fitness+(0-actualvalue(2))^2;

fitness=fitness+(0-actualvalue(3))^2;

end

if(T(pp)==0)

fitness=fitness+(0-actualvalue(1))^2;

fitness=fitness+(1-actualvalue(2))^2;

fitness=fitness+(0-actualvalue(3))^2;

end

if(T(pp)==1)

fitness=fitness+(0-actualvalue(1))^2;

fitness=fitness+(0-actualvalue(2))^2;

fitness=fitness+(1-actualvalue(3))^2;

end

end

fitness=fitness/TrainingNO;

CurrentFitness(i) = fitness;

end

best=min(CurrentFitness);

worst=max(CurrentFitness);
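The excerpt stops right after `best` and `worst` are computed. In textbook GSA these two values are used to turn each agent's fitness into a normalised mass, so that better agents attract more strongly. The following is a sketch of that standard step, not the remainder of the original listing. The fitness values and all names ending in `Demo` are hypothetical.

```matlab
% Textbook GSA mass computation for a minimisation problem (sketch only).
fitDemo   = [0.40 0.10 0.25 0.10];         % hypothetical MSE of four agents
bestDemo  = min(fitDemo);
worstDemo = max(fitDemo);

if bestDemo == worstDemo
    rawMass = ones(size(fitDemo));         % all agents equal: avoid 0/0
else
    rawMass = (fitDemo - worstDemo) ./ (bestDemo - worstDemo);
end
massDemo = rawMass ./ sum(rawMass);        % normalise so the masses sum to 1

% Gravitational constant decay as written in the listing: G = G0*exp(-20*t/T).
G0Demo = 1;  tDemo = 250;  TmaxDemo = 500;
GDemo  = G0Demo * exp(-20*tDemo/TmaxDemo); % equals exp(-10) halfway through
disp(massDemo)
```

The worst agent ends up with zero mass and the agents with the lowest MSE get the largest share, while the decaying `G` shrinks all forces as the iterations proceed.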

🌈3 Matlab Implementation

🎉4 References

Some of the theory comes from online sources; please contact me for removal in case of infringement.

@inproceedings{Mohseni2013TrainingFN, title={Training feedforward neural networks using hybrid particle swarm optimization and gravitational search}, author={Mahmood Mohseni and Mehdi Ramezani}, year={2013} }
