2023.1.18

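Having studied computational graphs, the chain rule, and the backward passes of the addition, multiplication, activation, Affine, and Softmax layers, today I implement the full error backpropagation algorithm as a summary.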

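Implementation outline (the steps of "learning"):

1. Premise

A neural network has adjustable weights and biases. "Learning" is the process of adjusting these weights and biases so that the network fits the training data.

Step 1: Randomly select a subset of the training data (a mini-batch). The goal is to reduce the value of the loss function on this mini-batch.

Step 2: To reduce the mini-batch loss, compute the gradient of the loss with respect to each weight parameter. The negative gradient is the direction in which the loss decreases fastest.

Step 3: Update the weight parameters by a small step against the gradient (parameter -= learning rate * gradient).

Step 4: Repeat steps 1, 2, and 3.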
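The four steps above can be sketched on a toy problem. This is only an illustration under stated assumptions: the linear model, the squared-error loss, and the synthetic data are hypothetical and are not part of the network in this post. Only the loop structure mirrors the steps.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data (assumption for illustration): y = 3x + 1, no noise
x_all = rng.uniform(-1.0, 1.0, size=200)
y_all = 3.0 * x_all + 1.0

w, b = 0.0, 0.0            # parameters to learn
learning_rate = 0.1
batch_size = 20

def loss_fn(w, b, x, y):
    """Mean squared error of the linear model w * x + b."""
    return np.mean((w * x + b - y) ** 2)

initial_loss = loss_fn(w, b, x_all, y_all)

for step in range(500):                        # step 4: repeat
    idx = rng.choice(x_all.size, batch_size)   # step 1: pick a mini-batch
    x, y = x_all[idx], y_all[idx]
    err = w * x + b - y
    grad_w = np.mean(2 * err * x)              # step 2: gradient of the loss
    grad_b = np.mean(2 * err)
    w -= learning_rate * grad_w                # step 3: small step against the gradient
    b -= learning_rate * grad_b

final_loss = loss_fn(w, b, x_all, y_all)
```

In the real network, step 2 is the part that backpropagation replaces: the gradients come from the layers' backward passes instead of a hand-written formula.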

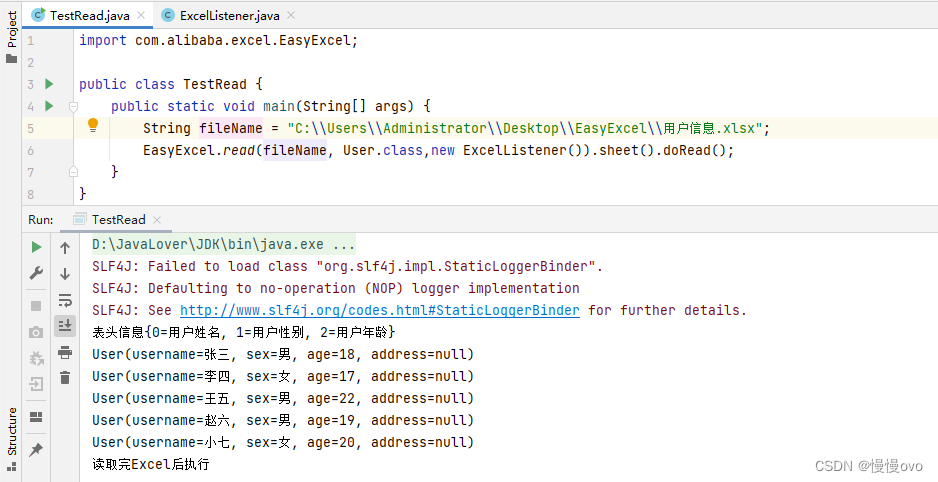

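Step 2 is where error backpropagation comes in: it computes the gradients efficiently. Here is the code.

A two-layer neural network is used for the implementation. First, the structure of the network: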

class TwoLayerNet:

    def __init__(self, input, hidden, output, weight__init__std=0.01):
        # Initialize the weights with small random values and the biases with zeros
        self.params = {}
        self.params['w1'] = weight__init__std * np.random.randn(input, hidden)
        self.params['b1'] = np.zeros(hidden)
        self.params['w2'] = weight__init__std * np.random.randn(hidden, output)
        self.params['b2'] = np.zeros(output)

        # Create the layers
        self.layers = OrderedDict()
        self.layers['Affine1'] = Affine(self.params['w1'], self.params['b1'])
        self.layers['ReLU1'] = ReLU()
        self.layers['Affine2'] = Affine(self.params['w2'], self.params['b2'])
        self.lastlayer = SoftmaxWithLoss()

    def predict(self, x):
        for layer in self.layers.values():
            x = layer.forward(x)
        return x

    def loss(self, x, t):  # x: input data; t: teacher labels
        y = self.predict(x)
        return self.lastlayer.forward(y, t)

    def accuracy(self, x, t):
        y = self.predict(x)
        y = np.argmax(y, axis=1)  # predicted label
        if t.ndim != 1:
            t = np.argmax(t, axis=1)  # correct label (from one-hot)
        accuracy = np.sum(y == t) / float(x.shape[0])
        return accuracy

    def numerical_grandient(self, x, t):  # x: input data; t: teacher labels
        loss_w = lambda w: self.loss(x, t)
        grads = {}
        grads['w1'] = numerical_gradient(loss_w, self.params['w1'])
        grads['b1'] = numerical_gradient(loss_w, self.params['b1'])
        grads['w2'] = numerical_gradient(loss_w, self.params['w2'])
        grads['b2'] = numerical_gradient(loss_w, self.params['b2'])
        return grads

    def gradient(self, x, t):
        # forward
        self.loss(x, t)

        # backward
        dout = 1
        dout = self.lastlayer.backward(dout)
        layers = list(self.layers.values())
        # list.reverse() reverses the list in place. (The built-in reversed() would
        # instead return an iterator over the reversed sequence.)
        layers.reverse()
        for layer in layers:
            dout = layer.backward(dout)

        # Collect the gradients stored by the Affine layers
        grads = {}
        grads['w1'] = self.layers['Affine1'].dw
        grads['b1'] = self.layers['Affine1'].db
        grads['w2'] = self.layers['Affine2'].dw
        grads['b2'] = self.layers['Affine2'].db
        return grads

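OrderedDict is an ordered dictionary:

It remembers the order in which elements were added. The forward pass can therefore call the layers in insertion order, and the backward pass can call the same layers in reverse order.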
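A small sketch of that ordering behaviour, using plain strings as stand-ins for the layer objects (the string values are placeholders for illustration only):

```python
from collections import OrderedDict

layers = OrderedDict()
layers['Affine1'] = 'affine-1'   # placeholder values, not real layers
layers['ReLU1'] = 'relu-1'
layers['Affine2'] = 'affine-2'

# Iteration follows insertion order: this is the forward order
forward_order = list(layers.keys())

# Reversing that list gives the order needed for the backward pass
backward_order = forward_order[::-1]
```

Here `forward_order` is `['Affine1', 'ReLU1', 'Affine2']` and `backward_order` is the same list reversed.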

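The reversed() function:

reversed() is a Python built-in. Given a sequence (a list, tuple, string, or range(n)), it returns an iterator that walks the sequence in reverse order. The gradient() method above uses list.reverse() instead, which reverses the list in place and returns None.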
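The difference between the two can be seen in a short sketch (the layer names are just strings here):

```python
layers = ['Affine1', 'ReLU1', 'Affine2']

# reversed() returns an iterator and leaves the original list untouched
backward = list(reversed(layers))

# list.reverse() modifies the list in place and returns None
layers_copy = list(layers)
result = layers_copy.reverse()
```

Either form works for the backward pass. `reversed()` avoids mutating the list, while `list.reverse()` needs a copy if the original order is still required.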

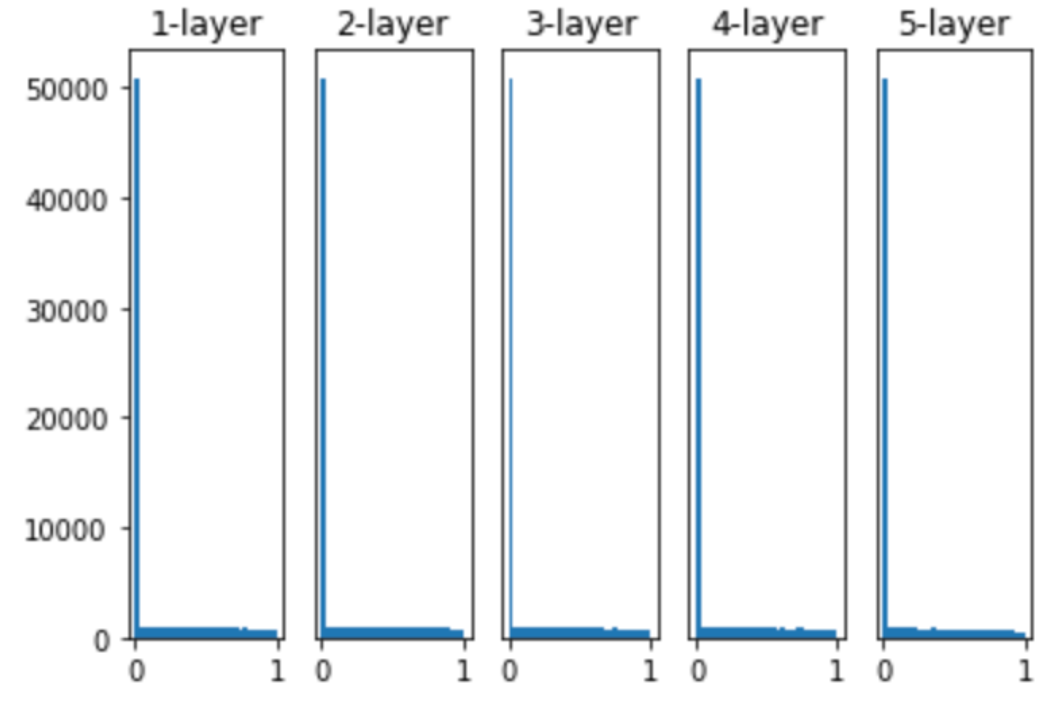

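Gradient check: comparing numerical differentiation with error backpropagation:

Numerical differentiation is simple to implement and hard to get wrong, but slow. Error backpropagation is fast, but more complex and easier to get wrong. A gradient check computes the gradients both ways and confirms that the results agree (that is, that their difference is very close to zero).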
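The idea can be tried on a small function before applying it to the network. The sketch below assumes a hypothetical test function `f` with a known analytic gradient; it is not taken from the network code.

```python
import numpy as np

def f(x):
    """Hypothetical test function: sum(x^2) + sum(sin(x))."""
    return np.sum(x ** 2) + np.sum(np.sin(x))

def analytic_grad(x):
    """Gradient of f derived by hand: 2x + cos(x)."""
    return 2 * x + np.cos(x)

def numerical_grad(f, x, h=1e-4):
    """Central-difference approximation of the gradient of f at x."""
    grad = np.zeros_like(x)
    for i in range(x.size):
        orig = x.flat[i]
        x.flat[i] = orig + h
        f_plus = f(x)                 # f(x + h)
        x.flat[i] = orig - h
        f_minus = f(x)                # f(x - h)
        grad.flat[i] = (f_plus - f_minus) / (2 * h)
        x.flat[i] = orig              # restore the original value
    return grad

x = np.array([0.5, -1.2, 2.0])
diff = np.average(np.abs(analytic_grad(x) - numerical_grad(f, x)))
```

When the hand-derived gradient is correct, `diff` comes out very small. A large value points to a mistake in one of the two computations.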

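# Load a few samples and run the gradient check
x_batch = x_train[:3]  # 3 images (3 digits); numerical differentiation is slow, so only 3 are used
t_batch = t_train[:3]

grad_numberical = networks.numerical_grandient(x_batch, t_batch)  # numerical differentiation
grad_backprop = networks.gradient(x_batch, t_batch)  # error backpropagation

# Mean absolute difference between the two gradients, per parameter
print("gradient check:", '\n')
for key in grad_numberical.keys():
    diff = np.average(np.abs(grad_backprop[key] - grad_numberical[key]))
    print(key + ":" + str(diff), file=outputfile)

# Recorded output:
# w1:0.0008062789370314258
# b1:0.007470903158435932
# w2:0.007911547556927193
# b2:0.4162550575209752
#
# These differences are far from zero. A passing gradient check gives values very
# close to zero, so differences this large suggest that the two gradients disagree,
# for example if the loss is summed over the batch while the backward pass of
# SoftmaxWithLoss divides by batch_size.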

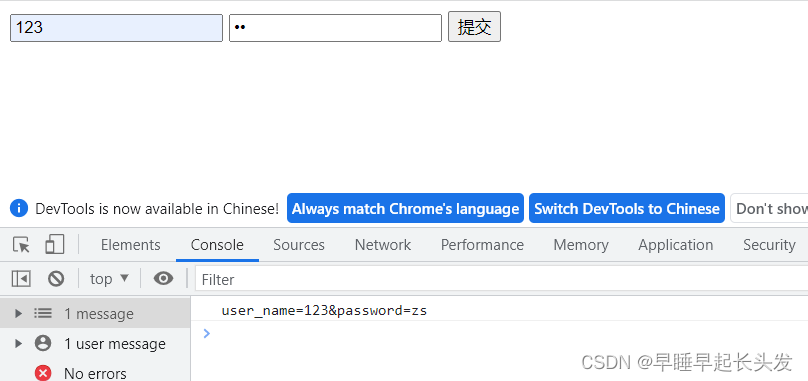

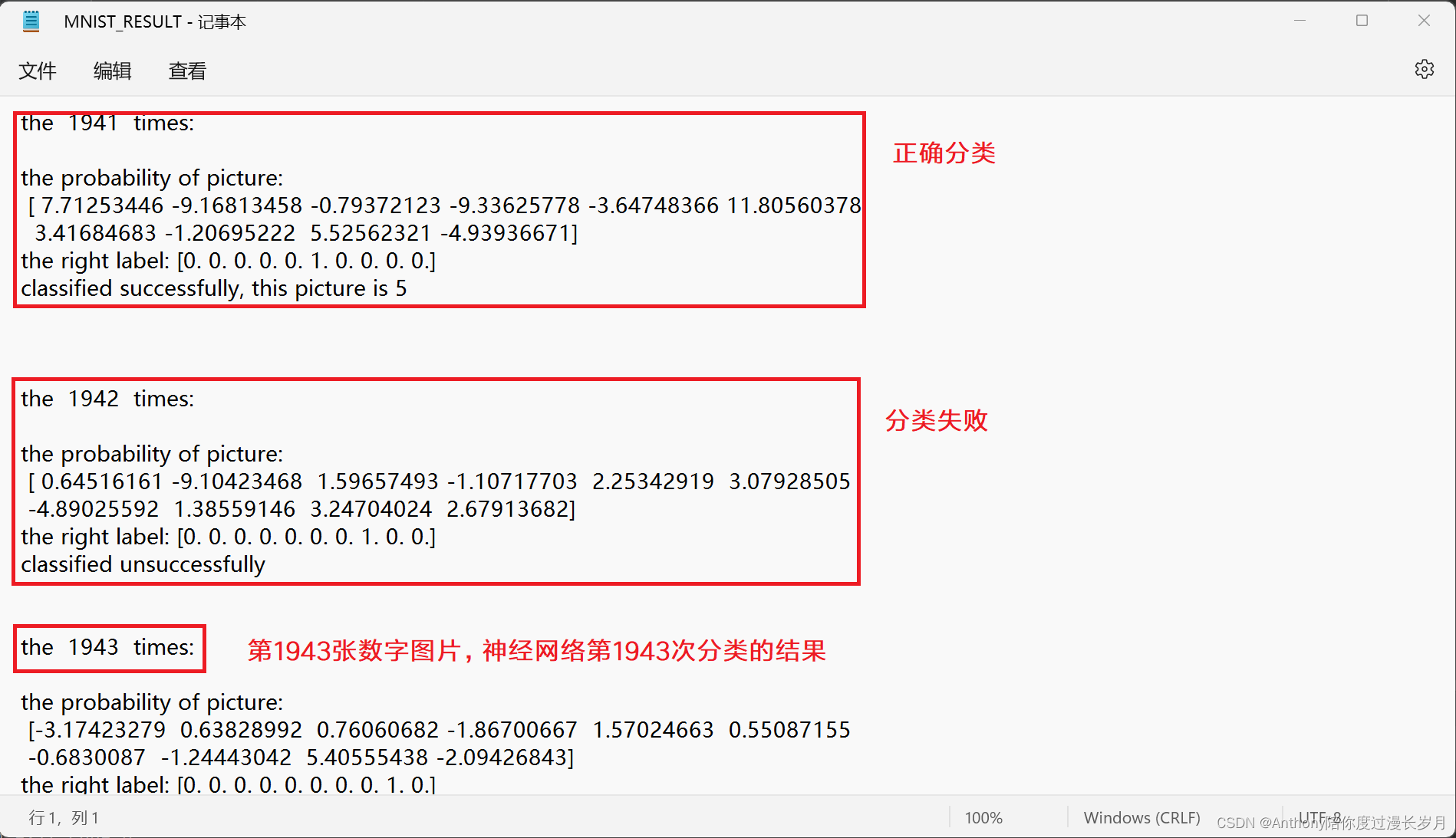

Saving the run log:

A run produces a lot of output. Saving it makes it easier to compare runs and improve the network.

# Save the output
def Result_save(name):
    path = "C:\\Users\\zzh\\Deshtop\\"  # set the save path as needed
    full_path = path + name + '.txt'  # another extension such as .doc can also be used
    file = open(full_path, 'w')
    return file

outputfile = Result_save('MNIST_RESULT')

# ... the code being run ...

outputfile.close()

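Here the results are saved to a .txt file:

Add file=outputfile to every print() call whose output should be saved, and call outputfile.close() at the very end. Without the close, buffered output may never be written to the file.

For example: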

print("the Accuracy:" + str(float(accuracy_cnt) / x_test.shape[0]), file=outputfile)
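An alternative sketch uses a `with` block, so the file is closed even if the code in between raises an exception. The helper name `save_results`, the temporary directory, and the example lines are assumptions for illustration and do not appear in the original code.

```python
import os
import tempfile

def save_results(lines, path):
    """Write each line to a text file; the with-block closes the file automatically."""
    with open(path, 'w', encoding='utf-8') as f:
        for line in lines:
            print(line, file=f)

# Hypothetical output location: a temporary directory instead of a fixed path
tmp_dir = tempfile.mkdtemp()
result_path = os.path.join(tmp_dir, 'MNIST_RESULT.txt')

save_results(['first example line', 'second example line'], result_path)

with open(result_path, encoding='utf-8') as f:
    saved = f.read().splitlines()
```

With this pattern there is no separate `close()` call to forget.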

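Compared with the earlier code, implementing each layer as a module means layers can be assembled freely, which makes it easy to build whatever network you like.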
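To illustrate the point about modularity, here is a minimal sketch with two hypothetical toy layers, `Scale` and `Square`, which are not part of the network in this post. Any object with `forward` and `backward` methods can be placed in the list.

```python
import numpy as np

class Scale:
    """Hypothetical toy layer: y = k * x."""
    def __init__(self, k):
        self.k = k

    def forward(self, x):
        return self.k * x

    def backward(self, dout):
        return self.k * dout

class Square:
    """Hypothetical toy layer: y = x ** 2."""
    def __init__(self):
        self.x = None

    def forward(self, x):
        self.x = x                    # remember the input for the backward pass
        return x ** 2

    def backward(self, dout):
        return 2 * self.x * dout

def forward_all(layers, x):
    for layer in layers:              # forward: first layer to last
        x = layer.forward(x)
    return x

def backward_all(layers, dout):
    for layer in reversed(layers):    # backward: last layer to first
        dout = layer.backward(dout)
    return dout

layers = [Scale(3.0), Square()]       # computes (3x)^2
x = np.array([2.0])
y = forward_all(layers, x)
dx = backward_all(layers, np.ones_like(y))   # derivative of (3x)^2 is 18x
```

Swapping, adding, or removing entries in `layers` changes the network without touching `forward_all` or `backward_all`.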

The complete code follows:

import sys, os
sys.path.append(os.pardir)  # add the parent directory to the path before importing dataset.mnist
import numpy as np
from collections import OrderedDict  # ordered dictionary: remembers insertion order
from dataset.mnist import load_mnist

# Numerical differentiation
def numerical_gradient(f, x):
    h = 1e-4  # 0.0001
    grad = np.zeros_like(x)

    # np.nditer() iterates over every element of a multi-dimensional array
    it = np.nditer(x, flags=['multi_index'], op_flags=['readwrite'])
    while not it.finished:
        idx = it.multi_index
        tmp_val = x[idx]

        x[idx] = float(tmp_val) + h
        fxh1 = f(x)  # f(x+h)

        x[idx] = tmp_val - h
        fxh2 = f(x)  # f(x-h)

        grad[idx] = (fxh1 - fxh2) / (2 * h)
        x[idx] = tmp_val  # restore the original value
        it.iternext()

    return grad

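# Loss function
def cross_entropy_error(y, t):
    if y.ndim == 1:  # treat a single sample as a batch of size 1
        t = t.reshape(1, t.size)
        y = y.reshape(1, y.size)
    batch_size = y.shape[0]
    delta = 1e-7  # avoids log(0)
    # Average over the batch so that the loss is consistent with
    # SoftmaxWithLoss.backward, which divides by batch_size
    return -np.sum(t * np.log(y + delta)) / batch_size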

# Activation functions
def softmax(x):
    if x.ndim == 2:
        x = x.T
        x = x - np.max(x, axis=0)  # subtract the max to avoid overflow
        y = np.exp(x) / np.sum(np.exp(x), axis=0)
        return y.T

    x = x - np.max(x)  # subtract the max to avoid overflow
    return np.exp(x) / np.sum(np.exp(x))

def sigmoid(x1):
    return 1 / (1 + np.exp(-x1))

# Addition layer, multiplication layer, activation layer, Affine layer, Softmax layer
class AddLayer:  # addition node
    def __init__(self):
        pass

    def forward(self, x, y):
        out = x + y
        return out

    def backward(self, dout):
        dx = dout * 1
        dy = dout * 1
        return dx, dy

class MulLayer:  # multiplication node
    def __init__(self):  # __init__() initializes the instance variables
        self.x = None
        self.y = None

    def forward(self, x, y):
        self.x = x
        self.y = y
        out = x * y
        return out

    def backward(self, dout):
        dx = dout * self.y  # the gradient for x uses the other input, y
        dy = dout * self.x  # the gradient for y uses the other input, x
        return dx, dy

class ReLU:
    def __init__(self):
        self.mask = None

    def forward(self, x):
        self.mask = (x <= 0)  # True where the input is not positive
        out = x.copy()
        out[self.mask] = 0
        return out

    def backward(self, dout):
        dout[self.mask] = 0  # no gradient flows where the input was not positive
        dx = dout
        return dx

class SoftmaxWithLoss:
    def __init__(self):
        self.loss = None
        self.y = None  # output of softmax
        self.t = None  # teacher labels (one-hot)

    def forward(self, x, t):
        self.t = t
        self.y = softmax(x)
        self.loss = cross_entropy_error(self.y, self.t)
        return self.loss

    def backward(self, dout=1):
        batch_size = self.t.shape[0]
        dx = (self.y - self.t) / batch_size
        return dx

class Affine:
    def __init__(self, w, b):
        self.w = w
        self.b = b
        self.x = None
        self.dw = None
        self.db = None

    def forward(self, x):
        self.x = x
        out = np.dot(x, self.w) + self.b
        return out

    def backward(self, dout):
        dx = np.dot(dout, self.w.T)
        self.dw = np.dot(self.x.T, dout)
        self.db = np.sum(dout, axis=0)
        return dx

class TwoLayerNet:

    def __init__(self, input, hidden, output, weight__init__std=0.01):
        # Initialize the weights with small random values and the biases with zeros
        self.params = {}
        self.params['w1'] = weight__init__std * np.random.randn(input, hidden)
        self.params['b1'] = np.zeros(hidden)
        self.params['w2'] = weight__init__std * np.random.randn(hidden, output)
        self.params['b2'] = np.zeros(output)

        # Create the layers
        self.layers = OrderedDict()
        self.layers['Affine1'] = Affine(self.params['w1'], self.params['b1'])
        self.layers['ReLU1'] = ReLU()
        self.layers['Affine2'] = Affine(self.params['w2'], self.params['b2'])
        self.lastlayer = SoftmaxWithLoss()

    def predict(self, x):
        for layer in self.layers.values():
            x = layer.forward(x)
        return x

    def loss(self, x, t):  # x: input data; t: teacher labels
        y = self.predict(x)
        return self.lastlayer.forward(y, t)

    def accuracy(self, x, t):
        y = self.predict(x)
        y = np.argmax(y, axis=1)  # predicted label
        if t.ndim != 1:
            t = np.argmax(t, axis=1)  # correct label (from one-hot)
        accuracy = np.sum(y == t) / float(x.shape[0])
        return accuracy

    def numerical_grandient(self, x, t):  # x: input data; t: teacher labels
        loss_w = lambda w: self.loss(x, t)
        grads = {}
        grads['w1'] = numerical_gradient(loss_w, self.params['w1'])
        grads['b1'] = numerical_gradient(loss_w, self.params['b1'])
        grads['w2'] = numerical_gradient(loss_w, self.params['w2'])
        grads['b2'] = numerical_gradient(loss_w, self.params['b2'])
        return grads

    def gradient(self, x, t):
        # forward
        self.loss(x, t)

        # backward
        dout = 1
        dout = self.lastlayer.backward(dout)
        layers = list(self.layers.values())
        # list.reverse() reverses the list in place. (The built-in reversed() would
        # instead return an iterator over the reversed sequence.)
        layers.reverse()
        for layer in layers:
            dout = layer.backward(dout)

        # Collect the gradients stored by the Affine layers
        grads = {}
        grads['w1'] = self.layers['Affine1'].dw
        grads['b1'] = self.layers['Affine1'].db
        grads['w2'] = self.layers['Affine2'].dw
        grads['b2'] = self.layers['Affine2'].db
        return grads

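# Save the output
def Result_save(name):
    path = "C:\\Users\\zzh\\Deshtop\\"  # set the save path as needed
    full_path = path + name + '.txt'  # another extension such as .doc can also be used
    file = open(full_path, 'w')
    return file

filename = 'MNIST_RESULT'
outputfile = Result_save(filename)  # use the returned handle instead of opening the file a second time
output = sys.stdout  # keep a reference to the original stdout
sys.stdout = outputfile  # plain print() calls now also go to the file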

# Load the data
(x_train, t_train), (x_test, t_test) = load_mnist(normalize=True, one_hot_label=True)

networks = TwoLayerNet(input=784, hidden=50, output=10)

# # Load a few samples and run the gradient check
# x_batch = x_train[:3]  # 3 images (3 digits); numerical differentiation is slow, so only 3 are used
# t_batch = t_train[:3]
#
# grad_numberical = networks.numerical_grandient(x_batch, t_batch)  # numerical differentiation
# grad_backprop = networks.gradient(x_batch, t_batch)  # error backpropagation
#
# # Mean absolute difference between the two gradients, per parameter
# for key in grad_numberical.keys():
#     diff = np.average(np.abs(grad_backprop[key] - grad_numberical[key]))
#     print(key + ":" + str(diff))
#
# # w1:0.0008062789370314258
# # b1:0.007470903158435932
# # w2:0.007911547556927193
# # b2:0.4162550575209752

# Hyperparameters
iters_num = 10000
train_size = x_train.shape[0]  # 60000
batch_size = 100  # mini-batch size
learning_rate = 0.1

train_acc_list = []
test_acc_list = []
train_loss_list = []

iter_per_epoch = max(train_size // batch_size, 1)  # iterations per epoch (integer)

print("MNIST classification", '\n', "Neural Network is learning weight and bias", file=outputfile)

for i in range(iters_num):
    batch_mask = np.random.choice(train_size, batch_size)  # pick a mini-batch
    x_batch = x_train[batch_mask]
    t_batch = t_train[batch_mask]

    grad = networks.gradient(x_batch, t_batch)  # gradients via error backpropagation

    # Update the weights and biases
    for key in ('w1', 'b1', 'w2', 'b2'):
        networks.params[key] -= learning_rate * grad[key]

    loss = networks.loss(x_batch, t_batch)
    train_loss_list.append(loss)

    # Accuracy once per epoch: this is the overall accuracy on the whole dataset,
    # not the probability for a single image
    if i % iter_per_epoch == 0:
        train_acc = networks.accuracy(x_train, t_train)
        test_acc = networks.accuracy(x_test, t_test)
        train_acc_list.append(train_acc)
        test_acc_list.append(test_acc)
        print("train acc, test acc |" + str(train_acc) + ",", str(test_acc), file=outputfile)

# Inference with the trained parameters, printing the class probabilities
print("the shape of weight and bias:", '\n', file=outputfile)
print(networks.params['w1'].shape, file=outputfile)  # (784, 50)
print(networks.params['b1'].shape, file=outputfile)  # (50,)
print(networks.params['w2'].shape, file=outputfile)  # (50, 10)
print(networks.params['b2'].shape, file=outputfile)  # (10,)

accuracy_cnt = 0
for i in range(x_test.shape[0]):
    # predict() returns raw scores; softmax turns them into probabilities
    y = softmax(networks.predict(x_test[i]))
    print("the ", i + 1, " times:", '\n', file=outputfile)
    print("the probability of picture:", '\n', y, file=outputfile)
    print("the right label:", '\n', t_test[i], file=outputfile)

    result = np.argmax(y)
    answer = np.argmax(t_test[i])
    if result == answer:
        print("classified successfully, this picture is", result, '\n', file=outputfile)
        accuracy_cnt += 1
    else:
        print("classified unsuccessfully", file=outputfile)
    print('\n', file=outputfile)

print("the Accuracy:" + str(float(accuracy_cnt) / x_test.shape[0]), file=outputfile)

sys.stdout = output  # restore the original stdout before closing the file
outputfile.close()

Code for loading the MNIST data:

# coding: utf-8
try:
    import urllib.request
except ImportError:
    raise ImportError('You should use Python 3.x')
import os.path
import gzip
import pickle
import os
import numpy as np

url_base = 'http://yann.lecun.com/exdb/mnist/'
key_file = {
    'train_img': 'train-images-idx3-ubyte.gz',
    'train_label': 'train-labels-idx1-ubyte.gz',
    'test_img': 't10k-images-idx3-ubyte.gz',
    'test_label': 't10k-labels-idx1-ubyte.gz'
}

dataset_dir = os.path.dirname(os.path.abspath(__file__))
save_file = dataset_dir + "/mnist.pkl"

train_num = 60000
test_num = 10000
img_dim = (1, 28, 28)
img_size = 784

def _download(file_name):
    file_path = dataset_dir + "/" + file_name

    if os.path.exists(file_path):
        return

    print("Downloading " + file_name + " ... ")
    urllib.request.urlretrieve(url_base + file_name, file_path)
    print("Done")

def download_mnist():
    for v in key_file.values():
        _download(v)

def _load_label(file_name):
    file_path = dataset_dir + "/" + file_name

    print("Converting " + file_name + " to NumPy Array ...")
    with gzip.open(file_path, 'rb') as f:
        labels = np.frombuffer(f.read(), np.uint8, offset=8)
    print("Done")

    return labels

def _load_img(file_name):
    file_path = dataset_dir + "/" + file_name

    print("Converting " + file_name + " to NumPy Array ...")
    with gzip.open(file_path, 'rb') as f:
        data = np.frombuffer(f.read(), np.uint8, offset=16)
    data = data.reshape(-1, img_size)
    print("Done")

    return data

def _convert_numpy():
    dataset = {}
    dataset['train_img'] = _load_img(key_file['train_img'])
    dataset['train_label'] = _load_label(key_file['train_label'])
    dataset['test_img'] = _load_img(key_file['test_img'])
    dataset['test_label'] = _load_label(key_file['test_label'])

    return dataset

def init_mnist():
    download_mnist()
    dataset = _convert_numpy()
    print("Creating pickle file ...")
    with open(save_file, 'wb') as f:
        pickle.dump(dataset, f, -1)
    print("Done!")

def _change_one_hot_label(X):
    T = np.zeros((X.size, 10))
    for idx, row in enumerate(T):
        row[X[idx]] = 1

    return T

def load_mnist(normalize=True, flatten=True, one_hot_label=False):
    """Load the MNIST dataset.

    Parameters
    ----------
    normalize : whether to scale the pixel values to the range 0.0 to 1.0
    one_hot_label :
        if True, the labels are returned as one-hot arrays,
        i.e. arrays such as [0,0,1,0,0,0,0,0,0,0]
    flatten : whether to flatten each image into a one-dimensional array

    Returns
    -------
    (training images, training labels), (test images, test labels)
    """
    if not os.path.exists(save_file):
        init_mnist()

    with open(save_file, 'rb') as f:
        dataset = pickle.load(f)

    if normalize:
        for key in ('train_img', 'test_img'):
            dataset[key] = dataset[key].astype(np.float32)
            dataset[key] /= 255.0

    if one_hot_label:
        dataset['train_label'] = _change_one_hot_label(dataset['train_label'])
        dataset['test_label'] = _change_one_hot_label(dataset['test_label'])

    if not flatten:
        for key in ('train_img', 'test_img'):
            dataset[key] = dataset[key].reshape(-1, 1, 28, 28)

    return (dataset['train_img'], dataset['train_label']), (dataset['test_img'], dataset['test_label'])

if __name__ == '__main__':
    init_mnist()
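The one-hot conversion in the loader uses a Python loop over the rows. As a side note, the same result can be obtained with NumPy integer-array indexing. The function name `one_hot` and the sample labels below are assumptions for illustration and are not part of the loader.

```python
import numpy as np

def one_hot(labels, num_classes=10):
    """Return a (len(labels), num_classes) array with a single 1 per row."""
    t = np.zeros((labels.size, num_classes))
    t[np.arange(labels.size), labels] = 1   # row i gets a 1 in column labels[i]
    return t

labels = np.array([2, 0, 9], dtype=np.uint8)  # hypothetical sample labels
t = one_hot(labels)
recovered = np.argmax(t, axis=1)              # argmax undoes the encoding
```

`np.argmax(t, axis=1)` recovers the original labels, which is the same conversion `accuracy()` applies when the labels are one-hot.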