下载HF AutoTrain 模型的配置文件

- 一.在huggingface上创建AutoTrain项目

- 二.通过HF用户名和autotrain项目名,拼接以下url,下载模型列表(json格式)到指定目录

- 三.解析上面的json文件、去重、批量下载模型配置文件(权重以外的文件)

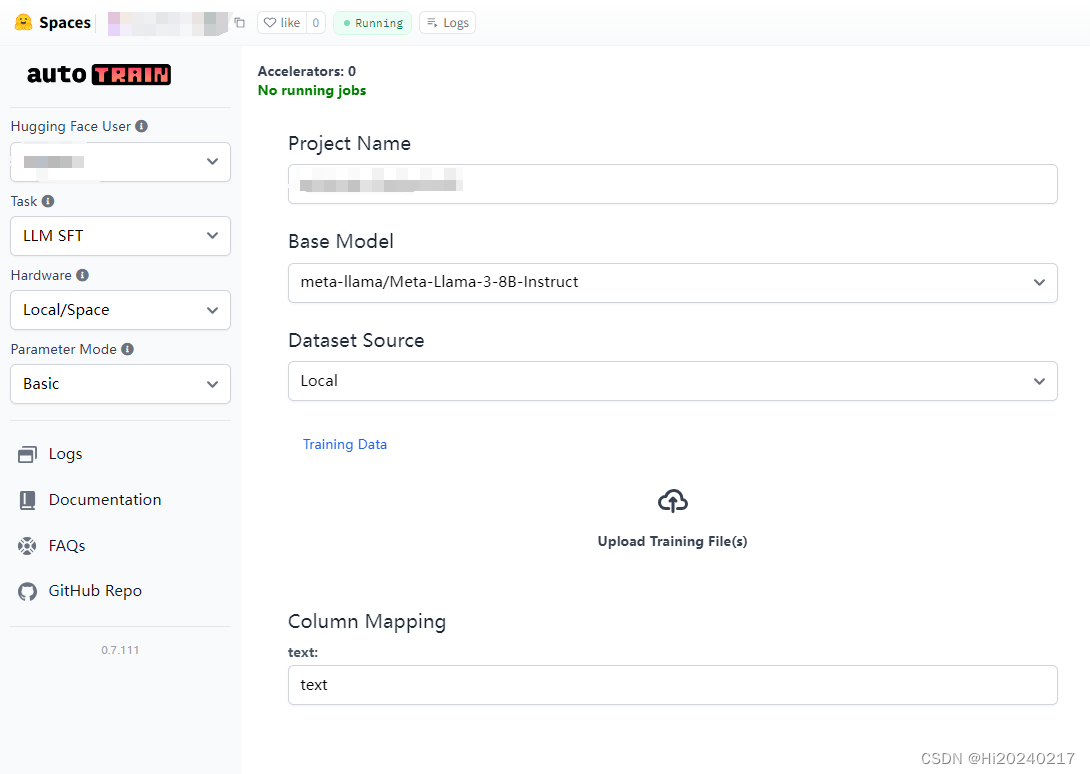

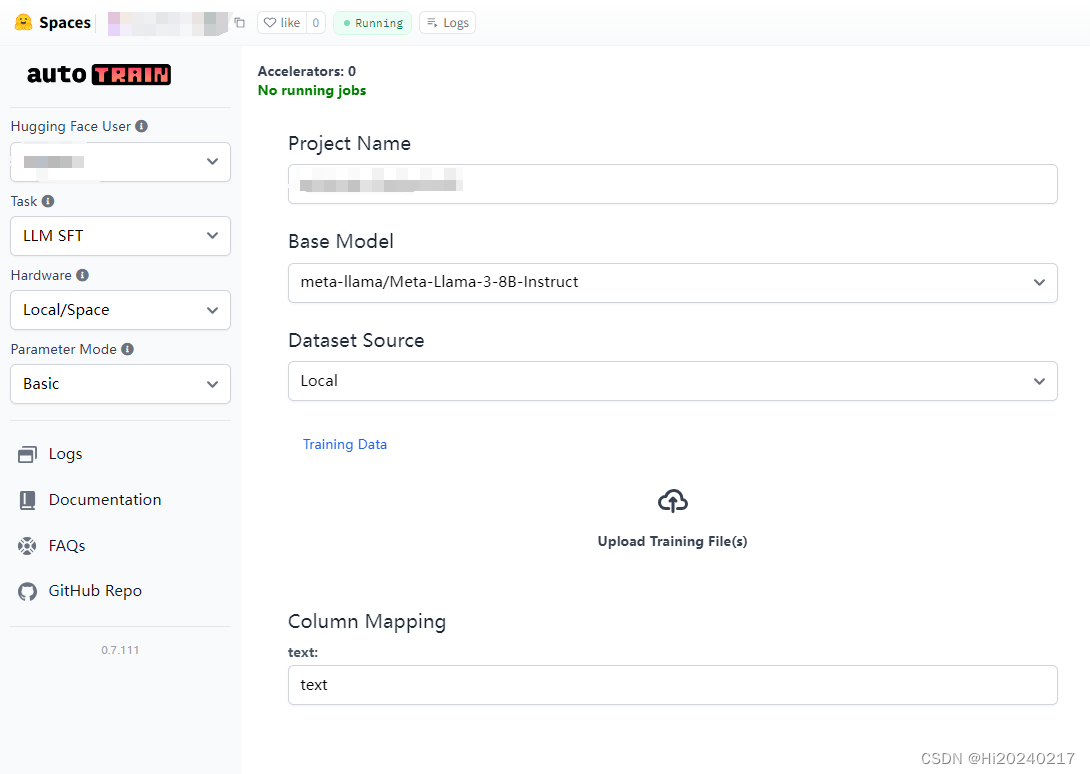

一.在huggingface上创建AutoTrain项目

二.通过HF用户名和autotrain项目名,拼接以下url,下载模型列表(json格式)到指定目录

mkdir model_names

cd model_names

wget https://username-projectname.hf.space/ui/model_choices/llm:sft -O llm_sft.txt

wget https://username-projectname.hf.space/ui/model_choices/llm:orpo -O llm_orpo.txt

wget https://username-projectname.hf.space/ui/model_choices/llm:generic -O llm_generic.txt

wget https://username-projectname.hf.space/ui/model_choices/llm:dpo -O llm_dpo.txt

wget https://username-projectname.hf.space/ui/model_choices/llm:reward -O llm_reward.txt

wget https://username-projectname.hf.space/ui/model_choices/text-classification -O text_classification.txt

wget https://username-projectname.hf.space/ui/model_choices/text-regression -O text_regression.txt

wget https://username-projectname.hf.space/ui/model_choices/seq2seq -O seq2seq.txt

wget https://username-projectname.hf.space/ui/model_choices/token-classification -O token_classification.txt

wget https://username-projectname.hf.space/ui/model_choices/dreambooth -O dreambooth.txt

wget https://username-projectname.hf.space/ui/model_choices/image-classification -O image_classification.txt

wget https://username-projectname.hf.space/ui/model_choices/image-object-detection -O image_object_detection.txt

三.解析上面的json文件、去重、批量下载模型配置文件(权重以外的文件)

from huggingface_hub import snapshot_download

from pathlib import Path

import os

import glob

import json

import tqdm

def download_model(repo_id):

models_path = Path.cwd().joinpath("models",repo_id)

models_path.mkdir(parents=True, exist_ok=True)

if len(glob.glob(os.path.join(models_path, "*.json")))>0:

return

snapshot_download(repo_id=repo_id,

allow_patterns=["*.json", "tokenizer*","README.md"],

local_dir=models_path,

resume_download=True,

token="hf_YOUR_TOKEN")

def load_meta_info():

file_path="meta.txt"

if os.path.exists(file_path):

repo_ids=[]

with open(file_path, "r") as f:

lines=f.readlines()

for line in lines:

items=line.strip().split(",")

repo_ids.append(items[0])

return repo_ids

repo_ids=set()

repo_id_model_type_map=dict()

for file in sorted(glob.glob("model_names/*.txt")):

model_type=os.path.basename(file).split(".")[0]

with open(file, "r") as f:

for item in json.loads(f.read().strip()):

repo_id=item["id"]

repo_ids.add(repo_id)

if repo_id not in repo_id_model_type_map:

repo_id_model_type_map[repo_id]=set()

repo_id_model_type_map[repo_id].add(model_type)

with open(file_path, "w") as f:

for repo_id in repo_ids:

model_types=repo_id_model_type_map[repo_id]

f.write(f"{repo_id}, {model_types}\n")

return repo_ids

for repo_id in tqdm.tqdm(load_meta_info()):

print(repo_id)

if repo_id in ["Corcelio/mobius","briaai/BRIA-2.3"]:

continue

download_model(repo_id)