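The results scraped with BeautifulSoup and requests in the previous post were wrong. TapTap renders its pages with JavaScript, so the reviews are not in the plain HTML that requests receives. The review list also has no "next page" link: more reviews load only after you scroll to the bottom and wait. We therefore need to imitate what a browser does, which is why this post scrapes the page with Selenium.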

Download ChromeDriver

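ChromeDriver lets a Python script launch and control a real Chrome browser. Through it, Selenium can perform mouse and keyboard actions and read the page's actual source after the JavaScript has run.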

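Official download page: https://sites.google.com/a/chromium.org/chromedriver/downloads (this page covers Chrome 114 and earlier; drivers for Chrome 115 and later are published through the Chrome for Testing availability dashboard).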

Note: the driver version must match the version of Chrome installed on your machine, and you must download the file for your operating system.
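To confirm the versions match, compare the major version numbers: Chrome shows its version at chrome://version, and running `chromedriver --version` prints something like `ChromeDriver 120.0.6099.109 (...)`. When the majors differ, ChromeDriver refuses to start a session. The sketch below assumes that output format; the function names are hypothetical.

```python
import re


def chromedriver_major(version_output: str) -> int:
    """Extract the major version from `chromedriver --version` output."""
    match = re.search(r"ChromeDriver (\d+)\.", version_output)
    if match is None:
        raise ValueError(f"unrecognized version string: {version_output!r}")
    return int(match.group(1))


def driver_matches_chrome(version_output: str, chrome_version: str) -> bool:
    """True if ChromeDriver and Chrome share the same major version.

    chrome_version is copied by hand from chrome://version, e.g. '120.0.6099.110'.
    """
    return chromedriver_major(version_output) == int(chrome_version.split(".")[0])


# Usage (assumes chromedriver is on PATH):
#   import subprocess
#   out = subprocess.run(["chromedriver", "--version"],
#                        capture_output=True, text=True, check=True).stdout
#   print(driver_matches_chrome(out, "120.0.6099.110"))
```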

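Taking Windows as an example, unzip the downloaded chromedriver_win64.zip to get an .exe file, then copy it into the Scripts folder under your Python installation directory. That folder is on PATH if you chose to add Python to PATH during installation, so Selenium can find the driver there. Since Selenium 4.6, Selenium Manager can also download a matching driver automatically when none is found.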
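To check that the copied driver is actually discoverable, you can ask Python whether `chromedriver` resolves on PATH. A minimal sketch using the standard library's `shutil.which`; the helper name `find_chromedriver` is hypothetical. If it returns `None`, the Scripts folder is probably not on PATH.

```python
import shutil


def find_chromedriver(search_path=None):
    """Return the full path of the chromedriver executable, or None if not found.

    search_path defaults to the PATH environment variable; on Windows,
    shutil.which also tries the extensions listed in PATHEXT (such as .exe).
    """
    return shutil.which("chromedriver", path=search_path)


# Usage:
#   location = find_chromedriver()
#   print(location or "chromedriver not found on PATH")
```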

Scraping

First, import the required libraries:

```python
import pandas as pd
import time
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
```

Scrolling to the bottom of the page

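```python
def scroll_to_bottom(driver):
    # Use JavaScript to scroll to the bottom of the page,
    # which makes TapTap load the next batch of reviews
    driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
```

Scraping the reviews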

```python
def get_taptap_reviews(url, max_reviews=50):
    reviews = []
    # Requires ChromeDriver on the system PATH
    # (Selenium 4.6+ can also download it automatically)
    driver = webdriver.Chrome()
    driver.get(url)
    try:
        # Wait up to 10 seconds for the first reviews to render
        WebDriverWait(driver, 10).until(
            EC.presence_of_element_located((By.CLASS_NAME, "text-box__content"))
        )
        last_review_count = 0
        while len(reviews) < max_reviews:
            review_divs = driver.find_elements(By.CLASS_NAME, 'text-box__content')
            # Read only the reviews that appeared since the previous round
            for review_div in review_divs[last_review_count:]:
                reviews.append(review_div.text.strip())
                if len(reviews) >= max_reviews:
                    break
            if len(reviews) >= max_reviews:
                break
            last_review_count = len(review_divs)

            # Scroll down to trigger loading of more reviews
            scroll_to_bottom(driver)
            # Wait for new reviews to load; adjust to your network speed
            time.sleep(10)

            # Stop if scrolling produced no new reviews
            new_review_divs = driver.find_elements(By.CLASS_NAME, 'text-box__content')
            if len(new_review_divs) == len(review_divs):
                break
    finally:
        # Always close the browser, even if an exception was raised
        driver.quit()
    return reviews[:max_reviews]
```

Saving the reviews to Excel

```python
def save_reviews_to_excel(reviews, filename='taptap.xlsx'):
    # Writing .xlsx files with pandas requires the openpyxl package
    df = pd.DataFrame(reviews, columns=['comment'])
    df.to_excel(filename, index=False)
```

Main program

if __name__ == "__main__":

url = "https://www.taptap.cn/app/247283/review"

max_reviews = 50

reviews = get_taptap_reviews(url, max_reviews)

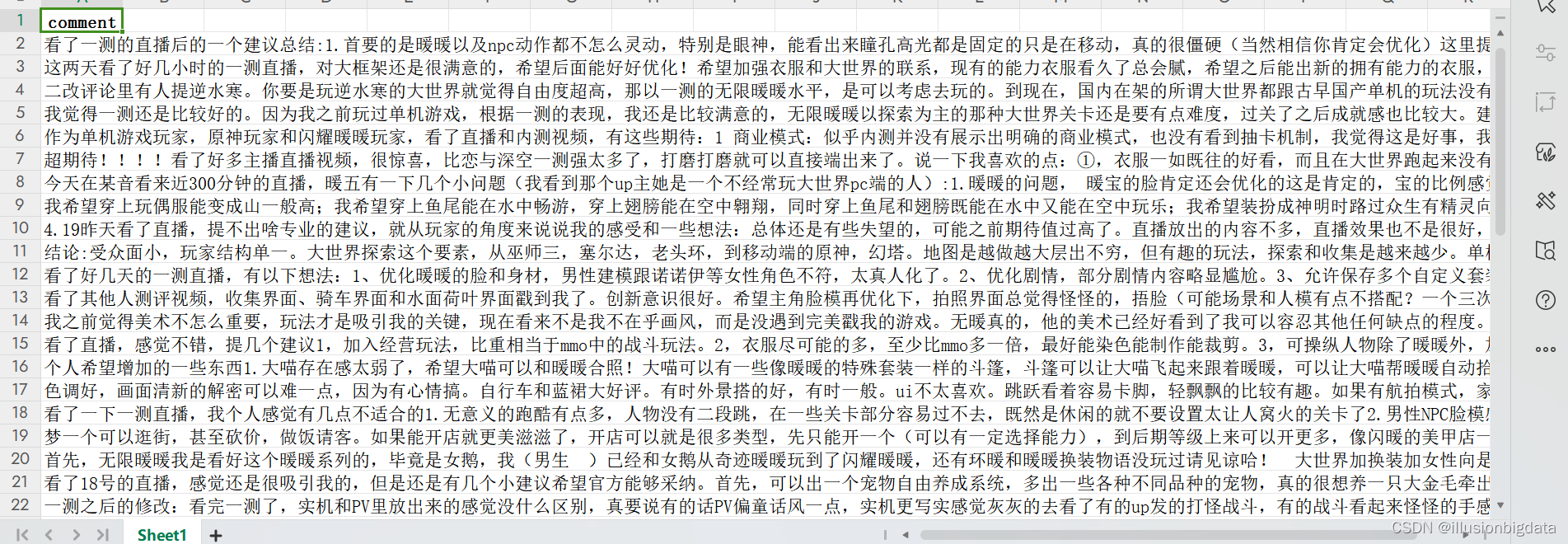

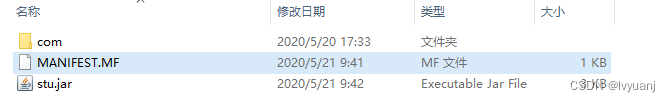

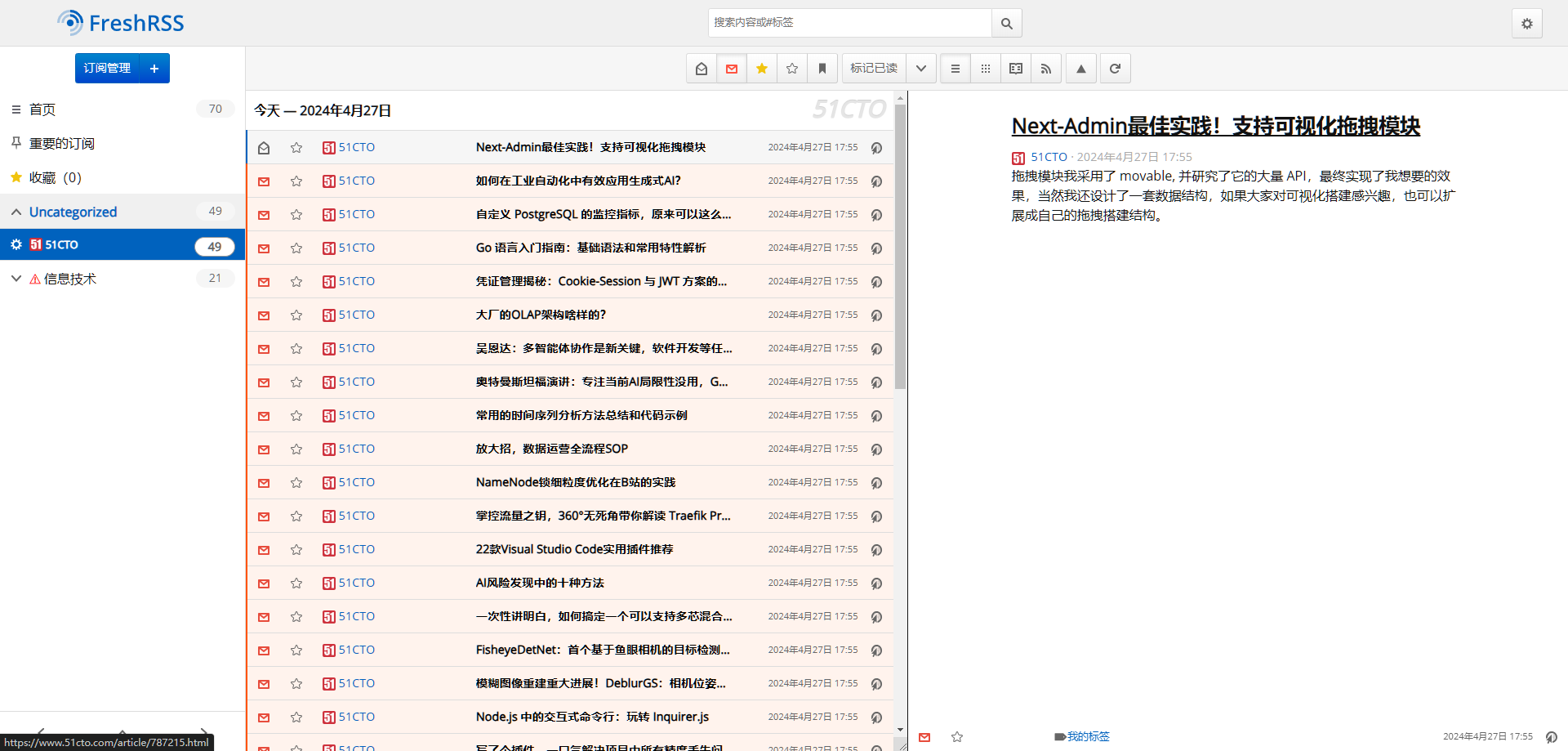

save_reviews_to_excel(reviews)查看输出的结果
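In `get_taptap_reviews`, every scroll costs a fixed `time.sleep(10)`, even when the next batch arrives within a second. One alternative is to poll the review count and continue as soon as it grows, giving up after a timeout. Below is a minimal, framework-free sketch; `wait_for_more` is a hypothetical helper, and in the scraper `count_fn` would be something like `lambda: len(driver.find_elements(By.CLASS_NAME, 'text-box__content'))`. Selenium's `WebDriverWait(driver, 10).until(...)` also accepts a callable and can express the same idea, raising `TimeoutException` instead of returning `None`.

```python
import time


def wait_for_more(count_fn, old_count, timeout=10.0, poll=0.5):
    """Poll count_fn() until it exceeds old_count or `timeout` seconds pass.

    Returns the new count, or None if nothing new appeared in time.
    """
    deadline = time.monotonic() + timeout
    while True:
        current = count_fn()
        if current > old_count:
            return current
        if time.monotonic() >= deadline:
            return None
        time.sleep(poll)


# Possible use inside the scraping loop, replacing time.sleep(10) and the count check:
#   scroll_to_bottom(driver)
#   count = lambda: len(driver.find_elements(By.CLASS_NAME, 'text-box__content'))
#   if wait_for_more(count, len(review_divs)) is None:
#       break  # no new reviews within the timeout
```

Returning as soon as the count grows is safe here because the loop slices from `last_review_count`, so any reviews that finish loading a moment later are picked up in the next round.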

Full code

```python
import pandas as pd
import time
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC


def scroll_to_bottom(driver):
    # Use JavaScript to scroll to the bottom of the page,
    # which makes TapTap load the next batch of reviews
    driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")


def get_taptap_reviews(url, max_reviews=50):
    reviews = []
    # Requires ChromeDriver on the system PATH
    # (Selenium 4.6+ can also download it automatically)
    driver = webdriver.Chrome()
    driver.get(url)
    try:
        # Wait up to 10 seconds for the first reviews to render
        WebDriverWait(driver, 10).until(
            EC.presence_of_element_located((By.CLASS_NAME, "text-box__content"))
        )
        last_review_count = 0
        while len(reviews) < max_reviews:
            review_divs = driver.find_elements(By.CLASS_NAME, 'text-box__content')
            # Read only the reviews that appeared since the previous round
            for review_div in review_divs[last_review_count:]:
                reviews.append(review_div.text.strip())
                if len(reviews) >= max_reviews:
                    break
            if len(reviews) >= max_reviews:
                break
            last_review_count = len(review_divs)

            # Scroll down to trigger loading of more reviews
            scroll_to_bottom(driver)
            # Wait for new reviews to load; adjust to your network speed
            time.sleep(10)

            # Stop if scrolling produced no new reviews
            new_review_divs = driver.find_elements(By.CLASS_NAME, 'text-box__content')
            if len(new_review_divs) == len(review_divs):
                break
    finally:
        # Always close the browser, even if an exception was raised
        driver.quit()
    return reviews[:max_reviews]


def save_reviews_to_excel(reviews, filename='taptap.xlsx'):
    # Writing .xlsx files with pandas requires the openpyxl package
    df = pd.DataFrame(reviews, columns=['comment'])
    df.to_excel(filename, index=False)


if __name__ == "__main__":
    url = "https://www.taptap.cn/app/247283/review"
    max_reviews = 50
    reviews = get_taptap_reviews(url, max_reviews)
    save_reviews_to_excel(reviews)
```
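If openpyxl is not installed, or a plain file that Excel can open is enough, `save_reviews_to_excel` could be swapped for a CSV writer from the standard library. Encoding the file as `utf-8-sig` adds a byte-order mark so that Excel detects UTF-8 and displays the Chinese review text correctly. This is a sketch; `save_reviews_to_csv` and the default filename `taptap.csv` are hypothetical. The pandas equivalent would be `df.to_csv(filename, index=False, encoding='utf-8-sig')`.

```python
import csv


def save_reviews_to_csv(reviews, filename="taptap.csv"):
    """Write one review per row under a 'comment' header.

    utf-8-sig prepends a BOM so Excel recognizes the file as UTF-8;
    newline="" lets the csv module quote reviews that contain line breaks.
    """
    with open(filename, "w", encoding="utf-8-sig", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["comment"])
        for review in reviews:
            writer.writerow([review])
```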