Source

[CVPR 23] PIDNet: A Real-time Semantic Segmentation Network Inspired by PID Controllers

Module name

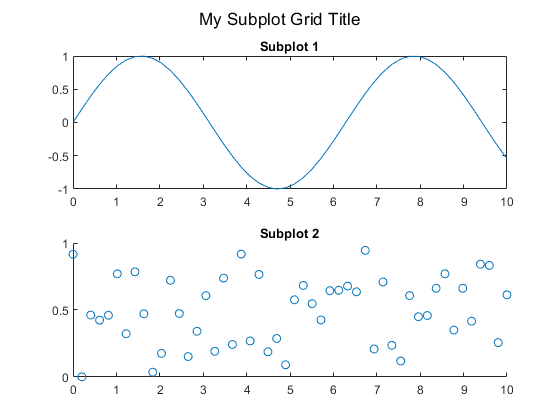

Parallel Aggregation Pyramid Pooling Module (PAPPM)

Module purpose

Multi-scale feature extraction with a larger receptive field.
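A minimal sketch of what "multi-scale" means here. It applies the four pooling settings used by the PAPPM branches `scale1` to `scale4` to an 8×8 input (an assumption chosen to match the usage example in the code) and records the pooled resolution of each. Each pooled value averages a 5×5, 9×9 or 17×17 window, or the whole map, which is where the larger receptive field comes from. The channel count of 4 is arbitrary because pooling acts on each channel independently.

```python
import torch
import torch.nn as nn

# Pooling layers copied from the PAPPM branches scale1..scale4.
# Assumption: 8x8 spatial input, as in the usage example; 4 channels is arbitrary.
pools = {
    "scale1 (Avg 5,2)": nn.AvgPool2d(kernel_size=5, stride=2, padding=2),
    "scale2 (Avg 9,4)": nn.AvgPool2d(kernel_size=9, stride=4, padding=4),
    "scale3 (Avg 17,8)": nn.AvgPool2d(kernel_size=17, stride=8, padding=8),
    "scale4 (global)": nn.AdaptiveAvgPool2d((1, 1)),
}

x = torch.randn(1, 4, 8, 8)
pooled_sizes = {}
for name, pool in pools.items():
    # Spatial size of the pooled map before it is upsampled back in forward()
    pooled_sizes[name] = tuple(pool(x).shape[-2:])
    print(f"{name}: (8, 8) -> {pooled_sizes[name]}")
```

For this input the pooled sizes are 4×4, 2×2, 1×1 and 1×1. In `forward`, each pooled map is then bilinearly upsampled back to 8×8 before being combined with the full-resolution branch.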

Module structure
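The data flow in `PAPPM.forward` from the code below can be summarized as follows. This notation is introduced here and is not taken from the paper. $P_1, P_2, P_3$ are the average pools (kernel, stride) = (5, 2), (9, 4), (17, 8), and $P_4$ is global average pooling. Each $\phi$ is a BN → ReLU → 1×1 conv stack: $\phi_0$ is `scale0`, $\phi_1, \dots, \phi_4$ are the tails of `scale1` to `scale4`, $\phi_c$ is `compression` and $\phi_s$ is `shortcut`. $U$ is bilinear upsampling to the input's $H \times W$. $\psi$ is `scale_process` (BN → ReLU → 3×3 conv with `groups=4`). $[\cdot]$ denotes channel concatenation.

```latex
\begin{aligned}
y_0 &= \phi_0(x), \\
y_k &= U\big(\phi_k(P_k(x))\big) + y_0, \qquad k = 1, \dots, 4, \\
z &= \psi\big([\,y_1, y_2, y_3, y_4\,]\big), \\
\mathrm{out} &= \phi_c\big([\,y_0, z\,]\big) + \phi_s(x).
\end{aligned}
```

Every $y_k$ depends only on $x$ and $y_0$, so the four branches can be computed in parallel. Because the concatenation keeps each branch's channels contiguous, the grouped conv in $\psi$ assigns exactly one group per branch. Each $y_k$ has `branch_planes` channels and $z$ has `4 * branch_planes`. The concatenation $[y_0, z]$ has `5 * branch_planes` channels, which `compression` maps to `outplanes`.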

Module code

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class PAPPM(nn.Module):
    def __init__(self, inplanes, branch_planes, outplanes, BatchNorm=nn.BatchNorm2d):
        super(PAPPM, self).__init__()
        bn_mom = 0.1
        # Avg 5,2 + Conv
        self.scale1 = nn.Sequential(nn.AvgPool2d(kernel_size=5, stride=2, padding=2),
                                    BatchNorm(inplanes, momentum=bn_mom),
                                    nn.ReLU(inplace=True),
                                    nn.Conv2d(inplanes, branch_planes, kernel_size=1, bias=False),
                                    )
        # Avg 9,4 + Conv
        self.scale2 = nn.Sequential(nn.AvgPool2d(kernel_size=9, stride=4, padding=4),
                                    BatchNorm(inplanes, momentum=bn_mom),
                                    nn.ReLU(inplace=True),
                                    nn.Conv2d(inplanes, branch_planes, kernel_size=1, bias=False),
                                    )
        # Avg 17,8 + Conv
        self.scale3 = nn.Sequential(nn.AvgPool2d(kernel_size=17, stride=8, padding=8),
                                    BatchNorm(inplanes, momentum=bn_mom),
                                    nn.ReLU(inplace=True),
                                    nn.Conv2d(inplanes, branch_planes, kernel_size=1, bias=False),
                                    )
        # Avg Global + Conv
        self.scale4 = nn.Sequential(nn.AdaptiveAvgPool2d((1, 1)),
                                    BatchNorm(inplanes, momentum=bn_mom),
                                    nn.ReLU(inplace=True),
                                    nn.Conv2d(inplanes, branch_planes, kernel_size=1, bias=False),
                                    )
        # Conv (full-resolution branch, no pooling)
        self.scale0 = nn.Sequential(
            BatchNorm(inplanes, momentum=bn_mom),
            nn.ReLU(inplace=True),
            nn.Conv2d(inplanes, branch_planes, kernel_size=1, bias=False),
        )
        # 3x3 conv over the 4 concatenated pooled branches; groups=4 gives one group per branch
        self.scale_process = nn.Sequential(
            BatchNorm(branch_planes * 4, momentum=bn_mom),
            nn.ReLU(inplace=True),
            nn.Conv2d(branch_planes * 4, branch_planes * 4, kernel_size=3,
                      padding=1, groups=4, bias=False),
        )
        # Fuse the full-resolution branch with the processed branches: 5 * branch_planes -> outplanes
        self.compression = nn.Sequential(
            BatchNorm(branch_planes * 5, momentum=bn_mom),
            nn.ReLU(inplace=True),
            nn.Conv2d(branch_planes * 5, outplanes, kernel_size=1, bias=False),
        )
        # Residual projection of the input: inplanes -> outplanes
        self.shortcut = nn.Sequential(
            BatchNorm(inplanes, momentum=bn_mom),
            nn.ReLU(inplace=True),
            nn.Conv2d(inplanes, outplanes, kernel_size=1, bias=False),
        )

    def forward(self, x):
        algc = False  # align_corners setting for bilinear upsampling
        width = x.shape[-1]
        height = x.shape[-2]
        scale_list = []

        x_ = self.scale0(x)
        # Each pooled branch is upsampled to the input size and added to x_.
        # The branches do not depend on one another, so they can run in parallel.
        scale_list.append(F.interpolate(self.scale1(x), size=[height, width],
                                        mode='bilinear', align_corners=algc) + x_)
        scale_list.append(F.interpolate(self.scale2(x), size=[height, width],
                                        mode='bilinear', align_corners=algc) + x_)
        scale_list.append(F.interpolate(self.scale3(x), size=[height, width],
                                        mode='bilinear', align_corners=algc) + x_)
        scale_list.append(F.interpolate(self.scale4(x), size=[height, width],
                                        mode='bilinear', align_corners=algc) + x_)

        scale_out = self.scale_process(torch.cat(scale_list, 1))
        out = self.compression(torch.cat([x_, scale_out], 1)) + self.shortcut(x)
        return out


if __name__ == '__main__':
    pappm = PAPPM(inplanes=1024, branch_planes=96, outplanes=256)
    x = torch.randn([3, 1024, 8, 8])
    out = pappm(x)
    print(out.shape)  # torch.Size([3, 256, 8, 8])
```

Original text (from the paper)

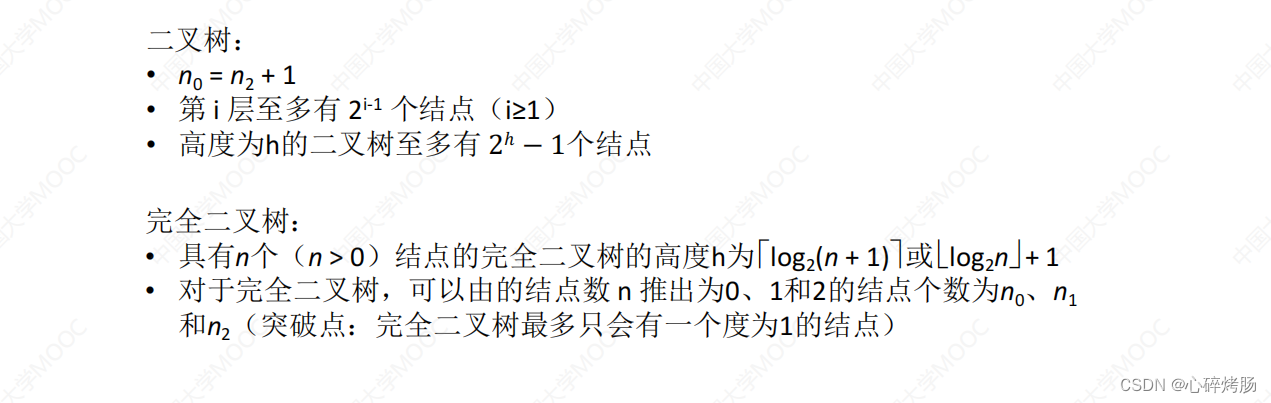

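To better construct global scene priors, PSPNet introduced the Pyramid Pooling Module (PPM), which concatenates multi-scale pooled maps before a convolution layer to form both local and global context representations. The Deep Aggregation PPM (DAPPM) proposed in [20] further improves the context-embedding capability of PPM and shows superior performance. However, DAPPM's computation cannot be parallelized along its depth, which makes it time-consuming. DAPPM also has too many channels per scale, which may exceed the representation capacity of lightweight models. We therefore modify the connections in DAPPM to make it parallelizable, as shown in Fig. 6, and reduce the number of channels per scale from 128 to 96. This new context-harvesting module is called Parallel Aggregation PPM (PAPPM). It is applied to PIDNet-M and PIDNet-S to guarantee their speed. Note that for the larger variant, PIDNet-L, we use DAPPM instead, given its depth.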
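The passage states that DAPPM cannot be parallelized along its depth, whereas PAPPM can. The sketch below contrasts the two connection patterns in simplified form, under these assumptions:

- BN and ReLU are dropped, and each "process" step is a single 3×3 conv.
- The DAPPM-style chain adds each upsampled branch to the previous stage's processed output. This is an illustrative simplification, not the reference DAPPM code.
- `sequential_aggregate`, `parallel_aggregate`, `upsample` and the channel count `C` are hypothetical names introduced here.

`parallel_aggregate` mirrors the PAPPM forward pass. The sketch also checks that a `groups=4` 3×3 conv matches four independent per-branch convs.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

C = 8  # hypothetical per-branch channel count


def upsample(t, size):
    # Bilinear upsampling back to full resolution, as in PAPPM.forward
    return F.interpolate(t, size=size, mode="bilinear", align_corners=False)


def sequential_aggregate(y0, branches, process_convs):
    # DAPPM-style (assumed, simplified): stage k consumes stage k-1's processed
    # output, so the 3x3 convs must run one after another.
    size = tuple(y0.shape[-2:])
    outs = [y0]
    for b, conv in zip(branches, process_convs):
        outs.append(conv(upsample(b, size) + outs[-1]))
    return torch.cat(outs[1:], dim=1)


def parallel_aggregate(y0, branches, grouped_conv):
    # PAPPM-style: every branch only needs y0, so the four 3x3 convs
    # collapse into one grouped conv over the concatenation.
    size = tuple(y0.shape[-2:])
    summed = [upsample(b, size) + y0 for b in branches]
    return grouped_conv(torch.cat(summed, dim=1))


torch.manual_seed(0)
y0 = torch.randn(1, C, 8, 8)
# Pooled resolutions of scale1..scale4 for an 8x8 input
branches = [torch.randn(1, C, s, s) for s in (4, 2, 1, 1)]

grouped = nn.Conv2d(4 * C, 4 * C, kernel_size=3, padding=1, groups=4, bias=False)
separate = [nn.Conv2d(C, C, kernel_size=3, padding=1, bias=False) for _ in range(4)]

with torch.no_grad():
    # Give each separate conv the weights of the matching group (weight shape: 4C x C x 3 x 3)
    for g, conv in enumerate(separate):
        conv.weight.copy_(grouped.weight[g * C:(g + 1) * C])

    par = parallel_aggregate(y0, branches, grouped)
    seq = sequential_aggregate(y0, branches, separate)
    # The same per-branch convs, each fed only y0 plus its own branch
    per_branch = torch.cat(
        [conv(upsample(b, (8, 8)) + y0) for conv, b in zip(separate, branches)], dim=1
    )

max_diff = (par - per_branch).abs().max().item()
print(par.shape, seq.shape, max_diff)
```

`max_diff` is at float32 rounding level, so the `groups=4` conv in `scale_process` behaves as four independent per-branch convs evaluated in one call. In `sequential_aggregate`, by contrast, no stage can start before the previous one has finished.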