文章目录

- 一、前言

- 二、配置APIServer和安装Metrics

- 2.1 APIServer开启Aggregator

- 2.2 安装Metrics Server (需要用到metris.yaml)

- 安装metrics Server之前

- 安装metrics Server之中

- 全部命令

- 实践演示

- 安装metrics Server之后

- 三、使用HPA测试 (需要使用到test.yaml,里面包括 deploy-service-hpa)

- 3.1 实践

- 四、HPA架构和原理

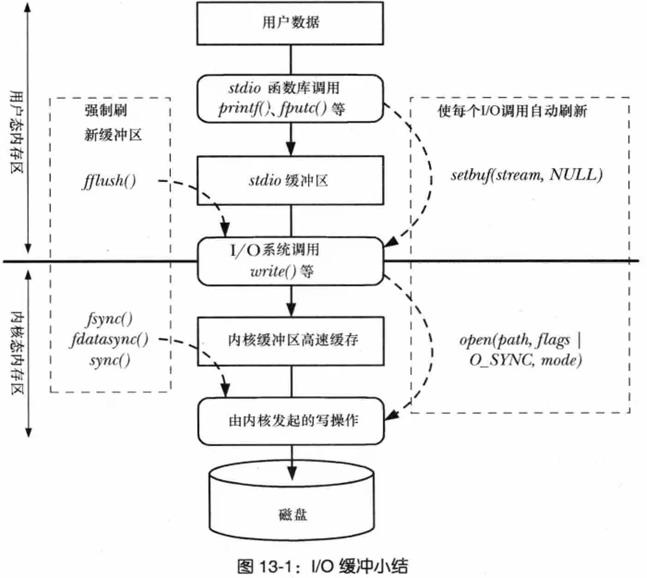

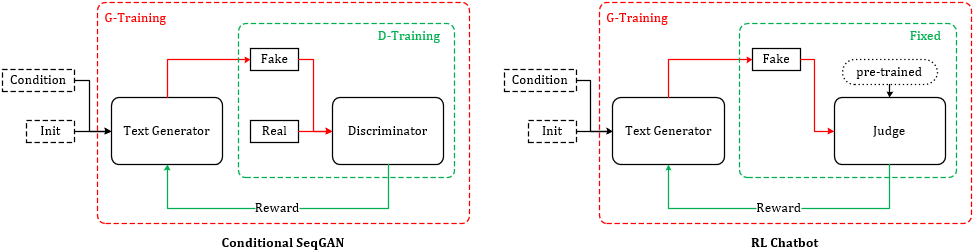

- 1)原理架构图

- 2)HPA扩缩容算法

- 3)HPA 对象定义

- 五、尾声

1. Introduction

Reference: https://liugp.blog.csdn.net/article/details/126675958

2. Configuring the API Server and installing Metrics Server

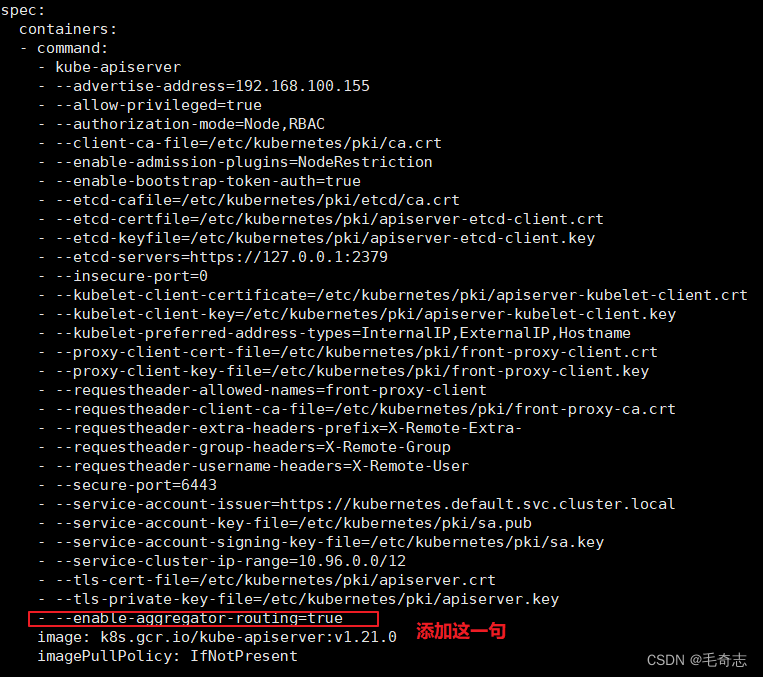

2.1 Enabling the Aggregator on the API Server

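```shell
# On every API Server node, enable Aggregator Routing in kube-apiserver.yaml.
# The API Server runs as a static Pod, so it restarts automatically once the manifest changes.
vi /etc/kubernetes/manifests/kube-apiserver.yaml

# Add this line to the kube-apiserver command arguments:
#   - --enable-aggregator-routing=true

# Save with :wq; the API Server restarts automatically and the change takes effect.

# Verify that the API Server container is running again
# (on containerd-based nodes, use: crictl ps | grep apiserver)
docker ps | grep apiserver
```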

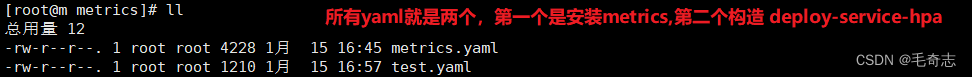
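Before waiting for the restart, it can help to confirm that the flag actually landed in the manifest. The sketch below is a hypothetical helper, `check_aggregator_flag`, that only greps the file path you pass in (defaulting to the kubeadm location used above); it does not check whether the running API Server picked the flag up.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a kube-apiserver manifest contains the flag.
check_aggregator_flag() {
  local manifest=${1:-/etc/kubernetes/manifests/kube-apiserver.yaml}
  # -q: quiet, -F: fixed string, --: the pattern itself starts with "--"
  if grep -qF -- '--enable-aggregator-routing=true' "$manifest"; then
    echo "aggregator routing enabled in $manifest"
  else
    echo "flag missing in $manifest" >&2
    return 1
  fi
}

# Usage on a control-plane node:
#   check_aggregator_flag /etc/kubernetes/manifests/kube-apiserver.yaml
```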

2.2 Installing Metrics Server (uses metrics.yaml)

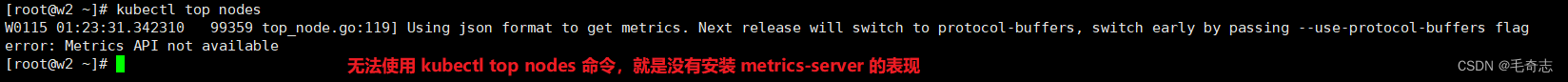

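Question: why is Metrics Server needed?

Answer: HPA requires a cluster with Metrics Server deployed and configured. The Kubernetes Metrics Server collects resource metrics from the kubelets in the cluster and exposes them through the Kubernetes API, using an APIService to add new resource types that represent metric readings.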
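Because Metrics Server registers itself through an APIService, its data can also be read directly from the aggregated API path, which is what `kubectl top` relies on. The sketch below wraps the real `kubectl get --raw` command around the `metrics.k8s.io/v1beta1` paths; the function name `metrics_raw` is hypothetical, and the calls only succeed once Metrics Server is installed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: query the metrics.k8s.io API registered by Metrics Server.
#   metrics_raw nodes            -> usage of every node
#   metrics_raw pods <namespace> -> usage of the Pods in one namespace
metrics_raw() {
  local kind=$1 ns=${2:-default}
  local base=/apis/metrics.k8s.io/v1beta1
  case "$kind" in
    nodes) kubectl get --raw "$base/nodes" ;;
    pods)  kubectl get --raw "$base/namespaces/$ns/pods" ;;
    *)     echo "usage: metrics_raw nodes|pods [namespace]" >&2; return 2 ;;
  esac
}
```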

Before installing Metrics Server

During installation

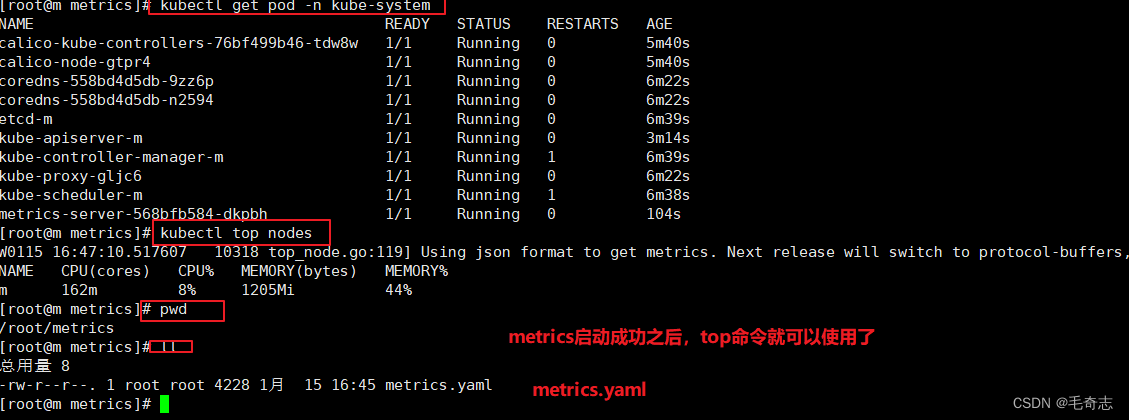

All commands

```shell
# Download the manifest
wget https://github.com/kubernetes-sigs/metrics-server/releases/download/metrics-server-helm-chart-3.8.2/components.yaml

# Apply it (after making the two changes described below)
kubectl apply -f components.yaml

# Check that the metrics-server Pod is running
kubectl get pod -n kube-system | grep metrics-server

# Show node and Pod resource usage
kubectl top nodes
kubectl top pods
```
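`kubectl top` only works once the `v1beta1.metrics.k8s.io` APIService reports the `Available` condition, which can take a little while after the Pod starts. A small polling loop, sketched below, waits for that condition; `wait_for_metrics_api` is a hypothetical name, the 5-second interval is an arbitrary choice, and the filter relies on kubectl's standard JSONPath support.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll until the metrics.k8s.io APIService is Available.
# $1 = number of checks (default 30), one check every 5 seconds.
wait_for_metrics_api() {
  local tries=${1:-30} i status
  for (( i = 1; i <= tries; i++ )); do
    # Read the status of the "Available" condition on the APIService
    status=$(kubectl get apiservice v1beta1.metrics.k8s.io \
      -o jsonpath='{.status.conditions[?(@.type=="Available")].status}' 2>/dev/null)
    if [ "$status" = "True" ]; then
      echo "metrics.k8s.io is available"
      return 0
    fi
    sleep 5
  done
  echo "metrics.k8s.io still unavailable after $tries checks" >&2
  return 1
}

# Usage: wait_for_metrics_api && kubectl top nodes
```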

Walkthrough

Download: wget https://github.com/kubernetes-sigs/metrics-server/releases/download/metrics-server-helm-chart-3.8.2/components.yaml

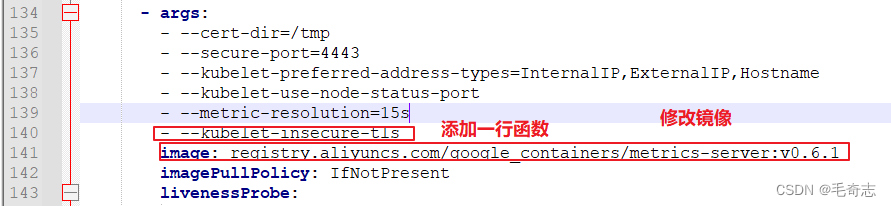

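Two changes are needed in the downloaded components.yaml:

1. Skip kubelet certificate verification. Without it, the metrics-server Pod fails to start properly and logs `unable to fully collect metrics: … x509: cannot validate certificate for because … it doesn’t contain any IP SANs …`. The fix is to add the `--kubelet-insecure-tls` argument to metrics-server so that it skips certificate verification (the metrics-server README describes this flag as intended for testing).
2. Switch the image to a mirror that can be pulled from mainland China: `registry.aliyuncs.com/google_containers/metrics-server:v0.6.1`.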
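Both changes can also be scripted instead of edited by hand. This is a sketch that assumes the downloaded file keeps the upstream layout (a `- --metric-resolution=...` argument line and an `image: ...metrics-server:v0.6.1` line). `patch_components` is a hypothetical helper. It uses plain awk so that it does not depend on GNU sed extensions, and it skips the flag insertion if the flag is already present.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply both edits to a downloaded components.yaml in place.
#  1. add "- --kubelet-insecure-tls" right after the --metric-resolution argument,
#     reusing that line's indentation (skipped if the flag is already there)
#  2. point the metrics-server v0.6.1 image at the Aliyun mirror
patch_components() {
  local file=$1 tmp has_flag
  has_flag=$(grep -c -- '--kubelet-insecure-tls' "$file")
  tmp=$(mktemp) || return 1
  awk -v has_flag="$has_flag" '
    /^[ \t]*image: .*metrics-server:v0\.6\.1/ {
      sub(/image: .*/, "image: registry.aliyuncs.com/google_containers/metrics-server:v0.6.1")
    }
    { print }
    has_flag + 0 == 0 && /^[ \t]*- --metric-resolution=/ {
      match($0, /^[ \t]*/)
      print substr($0, 1, RLENGTH) "- --kubelet-insecure-tls"
    }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Usage: patch_components components.yaml && kubectl apply -f components.yaml
```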

The modified metrics.yaml:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls  # change 1: skip kubelet TLS verification
        image: registry.aliyuncs.com/google_containers/metrics-server:v0.6.1  # change 2: mirror reachable from mainland China
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          allowPrivilegeEscalation: false
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100
```

After installing Metrics Server

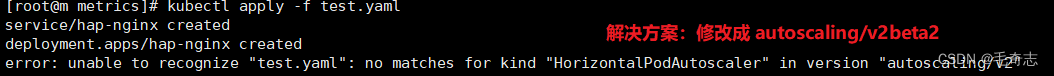

3. Testing HPA (uses test.yaml, which contains a Deployment, a Service, and an HPA)

3.1 Hands-on

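```shell
# Create the HPA, Service, and Deployment defined in test.yaml
kubectl apply -f test.yaml

# Install httpd, which pulls in the httpd-tools package that provides ab (ApacheBench)
yum install httpd -y
```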

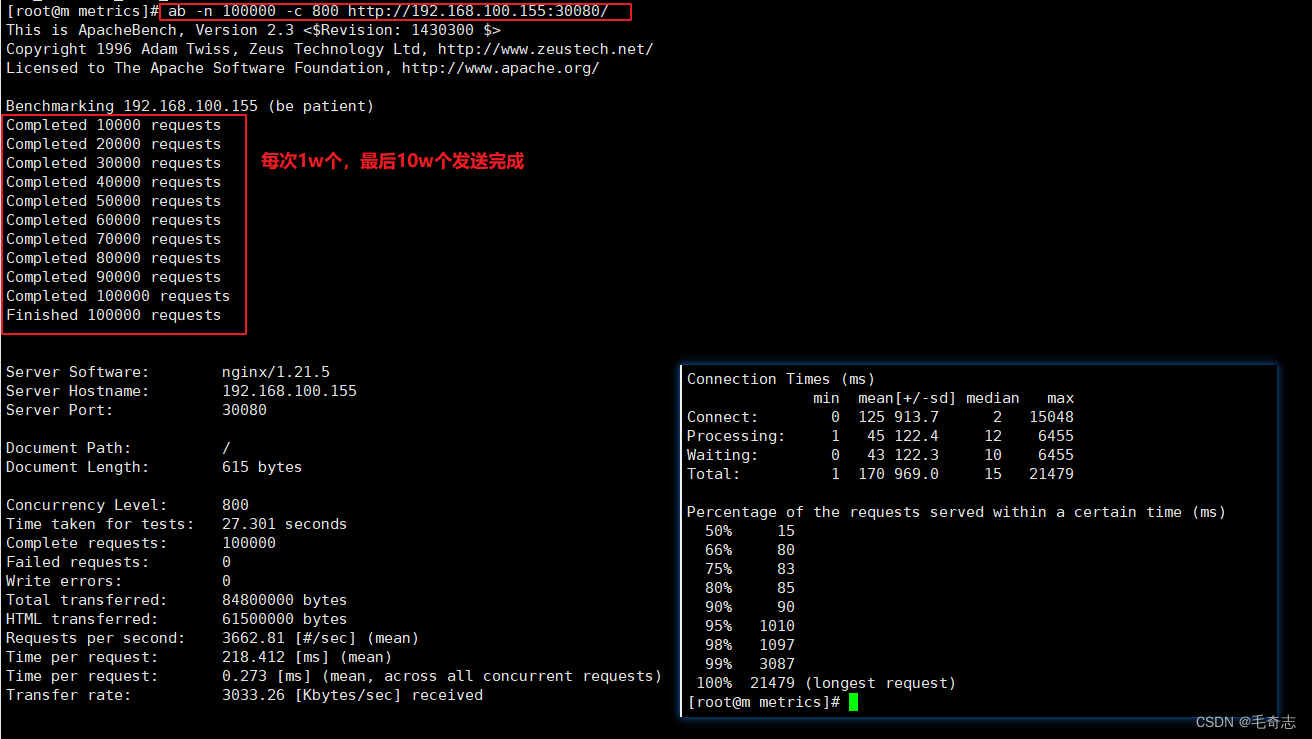

```shell
# Load-test the NodePort Service: -n = total requests, -c = concurrency
ab -n 100000 -c 800 http://192.168.100.155:30080/
```

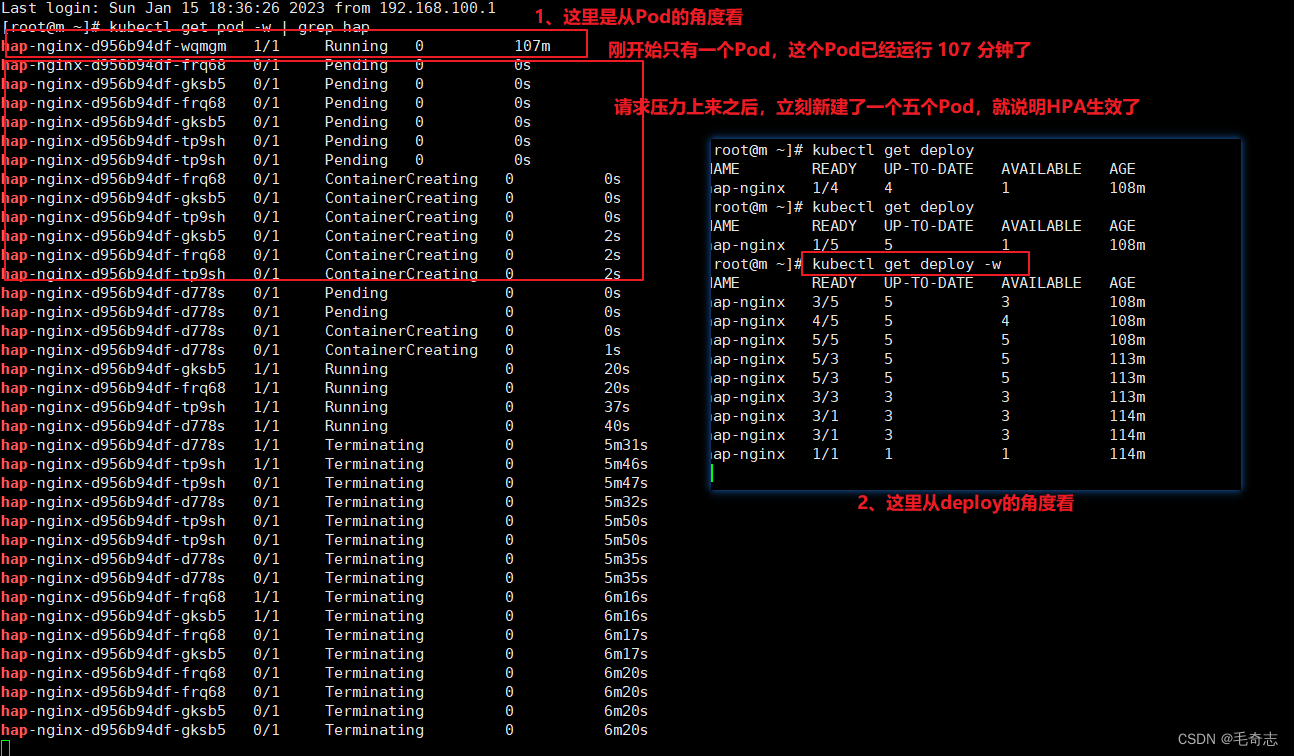
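If ab is not available on a machine that can reach the NodePort, load can also be generated from inside the cluster. The sketch below is modeled on the busybox load generator in the official Kubernetes HPA walkthrough and is a swapped-in technique, not the author's method. `start_load_generator` is a hypothetical name, and the default URL assumes the `hap-nginx` Service lives in the `default` namespace with the standard `cluster.local` domain.

```shell
#!/usr/bin/env bash
# Hypothetical helper: hammer a URL from a throwaway busybox Pod.
# Default target assumes the hap-nginx Service in the "default" namespace.
start_load_generator() {
  local url=${1:-http://hap-nginx.default.svc.cluster.local}
  kubectl run load-generator --rm -i --tty --restart=Never \
    --image=busybox:1.28 -- \
    /bin/sh -c "while sleep 0.01; do wget -q -O- $url > /dev/null; done"
}

# Stop with Ctrl+C; --rm deletes the Pod afterwards.
```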

Contents of test.yaml (HPA, Service, and Deployment):

```yaml
# Note: autoscaling/v2beta2 is used here. Clusters older than 1.23 do not serve
# autoscaling/v2, while 1.26 and later no longer serve autoscaling/v2beta2.
apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  name: hap-nginx
spec:
  maxReplicas: 10  # scale out to at most 10 Pods
  minReplicas: 1   # scale in to no fewer than 1 Pod
  metrics:
  - resource:
      name: cpu
      target:
        # Scale out when average CPU utilization (relative to requests) exceeds 40%,
        # scale in when it falls below
        averageUtilization: 40
        # To target memory instead: name: memory, type: AverageValue, averageValue: <quantity>
        type: Utilization
    type: Resource
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: hap-nginx
---
apiVersion: v1
kind: Service
metadata:
  name: hap-nginx
spec:
  type: NodePort
  ports:
  - name: "http"
    port: 80
    targetPort: 80
    nodePort: 30080
  selector:
    service: hap-nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hap-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      service: hap-nginx
  template:
    metadata:
      labels:
        service: hap-nginx
    spec:
      containers:
      - name: hap-nginx
        image: nginx:latest
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
          limits:
            cpu: 200m
            memory: 200Mi
```
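Because the HPA apiVersion depends on the cluster version, a small check against `kubectl api-versions` (a real kubectl command) can pick the right one. `hpa_api_version` is a hypothetical helper. The sed-based usage line is only an illustration and relies on the HPA spec fields in test.yaml having the same shape in autoscaling/v2 and autoscaling/v2beta2.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the newest HPA API version the cluster serves.
# autoscaling/v2 is served from Kubernetes 1.23; autoscaling/v2beta2 was removed in 1.26.
hpa_api_version() {
  local versions
  versions=$(kubectl api-versions) || return 1
  if grep -qx 'autoscaling/v2' <<<"$versions"; then
    echo autoscaling/v2
  elif grep -qx 'autoscaling/v2beta2' <<<"$versions"; then
    echo autoscaling/v2beta2
  else
    echo "neither autoscaling/v2 nor autoscaling/v2beta2 is served" >&2
    return 1
  fi
}

# Usage (illustrative):
#   v=$(hpa_api_version) && sed "s#autoscaling/v2beta2#$v#" test.yaml | kubectl apply -f -
```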

```shell
# Watch the replica count change from the Pod side
kubectl get pod -w | grep hap

# Watch the replica count change from the Deployment side
kubectl get deploy -w | grep hap
```
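The HPA object itself also records what it is doing. Besides the built-in `kubectl get hpa hap-nginx -w`, the current and desired replica counts can be read from the HPA status fields. The sketch below shows one way to do that; `hpa_replicas` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an HPA's current and desired replica counts.
hpa_replicas() {
  local name=${1:-hap-nginx} out current desired
  out=$(kubectl get hpa "$name" \
    -o jsonpath='{.status.currentReplicas} {.status.desiredReplicas}') || return 1
  read -r current desired <<<"$out"
  echo "current=${current:-?} desired=${desired:-?}"
}

# Usage while ab is running: hpa_replicas hap-nginx
```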

The screenshots above show that the Deployment now scales dynamically based on CPU usage.

4. HPA architecture and principles

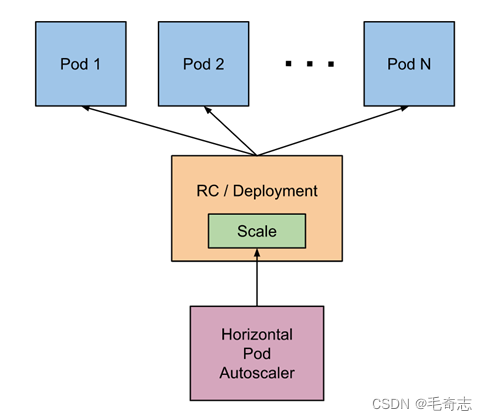

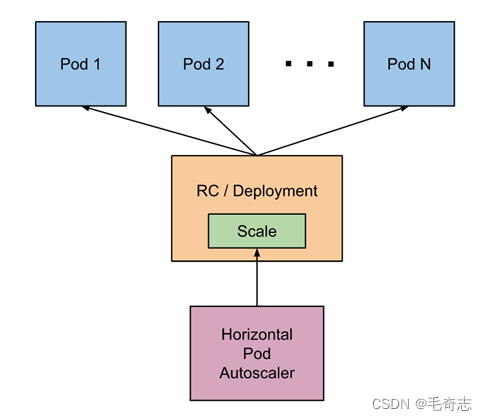

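1) Architecture diagram

- The evaluation period is set by the kube-controller-manager flag `--horizontal-pod-autoscaler-sync-period` (default: 15 seconds).

- metrics-server serves the `metrics.k8s.io` API, which supplies Pod resource usage.

- Every period (15 s): query the `metrics.k8s.io` API -> run the scaling algorithm -> call the target's scale subresource -> apply the configured scaling policies.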

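2) HPA scaling algorithm

At its most basic, the HorizontalPodAutoscaler controller computes a scaling ratio from the current metric value and the desired metric value: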

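$$\text{desiredReplicas} = \left\lceil \text{currentReplicas} \times \frac{\text{currentMetricValue}}{\text{desiredMetricValue}} \right\rceil$$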
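The formula can be checked with integer arithmetic alone. The sketch below is a hypothetical helper, `desired_replicas`, not the controller's code. It assumes both metric values are integers in the same unit, for example utilization percent.

```shell
#!/usr/bin/env bash
# desiredReplicas = ceil(currentReplicas * currentMetric / desiredMetric)
# Metrics are integers in the same unit (e.g. utilization percent).
desired_replicas() {
  local current=$1 current_metric=$2 desired_metric=$3
  # ceil(a / b) for positive integers is (a + b - 1) / b
  echo $(( (current * current_metric + desired_metric - 1) / desired_metric ))
}
```

With the test.yaml above (request 100m, limit 200m, target 40%), a single Pod pinned at its 200m limit runs at 200% utilization, so `desired_replicas 1 200 40` asks for ceil(1 × 200 / 40) = 5 replicas.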

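1. Scaling up

If the computed ratio (current metric / desired metric) is close enough to 1.0, this scaling round is skipped. How close counts as "close enough" is set by the kube-controller-manager flag `--horizontal-pod-autoscaler-tolerance` (default: 0.1).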
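The tolerance check can be sketched as follows. `within_tolerance` is a hypothetical helper that expresses the tolerance in percent (10 means 0.1) so the comparison stays in integer arithmetic. It returns success when the HPA would skip scaling.

```shell
#!/usr/bin/env bash
# Succeeds (skip scaling) when |currentMetric / desiredMetric - 1| <= tolerance.
# Tolerance is given in percent (default 10, i.e. 0.1) to stay in integer math.
within_tolerance() {
  local current_metric=$1 desired_metric=$2 tolerance_pct=${3:-10}
  local diff=$(( current_metric - desired_metric ))
  (( diff < 0 )) && diff=$(( -diff ))
  # |c/d - 1| <= t/100  <=>  100 * |c - d| <= t * d   (d > 0)
  (( 100 * diff <= tolerance_pct * desired_metric ))
}
```

For a 40% target, 43% utilization (ratio 1.075) is inside the default tolerance, while 45% (ratio 1.125) triggers a scaling calculation.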

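2. Scaling down

Cooldown (stabilization): if the stabilization window is set too short, the replica count may keep fluctuating (thrashing) as before. The default is 5 minutes (5m0s), set with `--horizontal-pod-autoscaler-downscale-stabilization`.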
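During scale-down, the HPA looks at all replica recommendations made within the stabilization window and acts on the highest one, so a short dip in load does not remove Pods immediately. The sketch below models only that selection step. `stabilized_recommendation` is a hypothetical helper that takes the recommendations from the window as arguments.

```shell
#!/usr/bin/env bash
# Scale-down stabilization sketch: given the replica recommendations made during
# the window (default 300 s), act on the highest one.
stabilized_recommendation() {
  local max=$1 r
  shift
  for r in "$@"; do
    (( r > max )) && max=$r
  done
  echo "$max"
}
```

If the recommendations over the last five minutes were 6, 5, 3, and 2, the HPA keeps 6 replicas until the 6 ages out of the window.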

3. Special cases

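- Missing metrics: when scaling down, Pods without metrics are assumed to consume 100% of the target value; when scaling up, they are assumed to consume 0%. This dampens the size of the scaling step.

- Unready Pods: when scaling up, Pods that are not yet ready are conservatively assumed to consume 0% of the desired metric, which further dampens the scale-up.

- The usage ratio is then recomputed with the unready Pods and the Pods missing metrics included. If the new ratio reverses the scaling direction or falls within the tolerance, scaling is skipped; otherwise the new ratio is used.

- When multiple metrics are specified, a desired replica count is computed for each metric and the largest one is used.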
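The multi-metric rule, combined with the `minReplicas`/`maxReplicas` bounds from the HPA spec, can be sketched as follows. `combine_metrics` is a hypothetical helper that receives one proposed replica count per metric.

```shell
#!/usr/bin/env bash
# Several metrics: take the largest per-metric proposal,
# then clamp it to [minReplicas, maxReplicas].
combine_metrics() {
  local min=$1 max=$2 best=0 r
  shift 2
  for r in "$@"; do
    (( r > best )) && best=$r
  done
  (( best < min )) && best=$min
  (( best > max )) && best=$max
  echo "$best"
}
```

For example, `combine_metrics 1 10 3 7` returns 7 (CPU asks for 3, memory for 7), and a proposal of 12 is capped at `maxReplicas` 10.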

3) HPA object definition

```yaml
apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  name: nginx
spec:
  behavior:
    scaleDown:
      policies:
      - type: Pods
        value: 4
        periodSeconds: 60
      - type: Percent
        value: 10
        periodSeconds: 60
      stabilizationWindowSeconds: 300
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50
```
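The two `scaleDown` policies above (Pods 4 and Percent 10 per 60 s) combine through `selectPolicy`, which defaults to `Max`, so the policy that allows the larger change wins. The sketch below is a simplified model under stated assumptions: it ignores scaling events already made within the period and the final clamp to `minReplicas`. `max_scale_down` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Simplified: Pods removable in one 60 s period under "Pods 4" and "Percent 10"
# with selectPolicy Max. Ignores earlier events in the period and the minReplicas clamp.
max_scale_down() {
  local replicas=$1 pods=${2:-4} percent=${3:-10}
  # Percent policy allows ceil(replicas * percent / 100) Pods to be removed
  local by_percent=$(( (replicas * percent + 99) / 100 ))
  echo $(( by_percent > pods ? by_percent : pods ))
}
```

At 80 replicas the percent policy wins (8 Pods per period). At 72 replicas, 10% is 7.2, which rounds up to 8. Below 40 replicas, the fixed 4-Pod policy allows more.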

Default HPA behavior:

```yaml
behavior:
  scaleDown:
    stabilizationWindowSeconds: 300
    policies:
    - type: Percent
      value: 100
      periodSeconds: 15
  scaleUp:
    stabilizationWindowSeconds: 0
    policies:
    - type: Percent
      value: 100
      periodSeconds: 15
    - type: Pods
      value: 4
      periodSeconds: 15
    selectPolicy: Max
```
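With these defaults, scale-up takes the larger of "double the replicas" (Percent 100) and "add 4 Pods" (Pods 4) in each 15-second period. The sketch below covers only that per-period upper bound. `max_scale_up` is a hypothetical helper, and the result is still capped by `maxReplicas`.

```shell
#!/usr/bin/env bash
# Default scaleUp: Percent 100 and Pods 4 per 15 s with selectPolicy Max,
# so one period allows at most max(2 * replicas, replicas + 4).
max_scale_up() {
  local replicas=$1
  local doubled=$(( replicas * 2 )) plus_four=$(( replicas + 4 ))
  echo $(( doubled > plus_four ? doubled : plus_four ))
}
```

Starting from 1 replica, the first period allows up to 5 Pods; from 10 replicas, up to 20.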

5. Closing remarks

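In short: install Metrics Server first, then apply the Deployment, Service, and HPA (test.yaml), install ab with `yum install httpd -y`, and run a load test with `ab -n 100000 -c 800 http://192.168.100.155:30080/` (-c: concurrency, -n: total requests). If the Deployment keeps adding Pods, HPA scaling is working.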