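LeNet

- Architecture diagram

- Design rationale

  - Convolutional layers first learn the spatial information in the image

  - Pooling layers reduce sensitivity to position

  - Fully connected layers map the features to the class space

- Code implementation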

import torch
from torch import nn
from d2l import torch as d2l

class Reshape(nn.Module):
    def forward(self, x):
        # view reshapes the tensor: -1 lets PyTorch infer that dimension,
        # so the batch size is preserved; 1 is the number of channels;
        # the last two dimensions are the image height and width
        return x.view(-1, 1, 28, 28)

net = nn.Sequential(
    Reshape(), nn.Conv2d(1, 6, kernel_size=5, padding=2), nn.Sigmoid(),
    nn.AvgPool2d(kernel_size=2, stride=2),
    nn.Conv2d(6, 16, kernel_size=5), nn.Sigmoid(),
    nn.AvgPool2d(kernel_size=2, stride=2),
    nn.Flatten(),
    nn.Linear(16 * 5 * 5, 120), nn.Sigmoid(),
    nn.Linear(120, 84), nn.Sigmoid(),
    nn.Linear(84, 10)
)

# Input shape is (batch size, channels, height, width)
X = torch.rand(size=(1, 1, 28, 28), dtype=torch.float32)
# Print the output shape of every layer
for layer in net:
    X = layer(X)
    print(layer.__class__.__name__, 'output shape: \t', X.shape)
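The `16 * 5 * 5` input size of the first linear layer follows from the usual output-size formula for convolution and pooling, floor((n + 2p - k) / s) + 1. The sketch below traces a 28×28 input through the layers defined above using plain arithmetic; `out_size` is a helper name introduced only for this illustration.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Spatial size after a convolution or pooling layer (illustrative helper)."""
    return (n + 2 * padding - kernel) // stride + 1

n = 28                                  # Fashion-MNIST images are 28x28
n = out_size(n, kernel=5, padding=2)    # Conv2d(1, 6, kernel_size=5, padding=2)
n = out_size(n, kernel=2, stride=2)     # AvgPool2d(kernel_size=2, stride=2)
n = out_size(n, kernel=5)               # Conv2d(6, 16, kernel_size=5)
n = out_size(n, kernel=2, stride=2)     # AvgPool2d(kernel_size=2, stride=2)
flat_features = 16 * n * n              # 16 channels, each n x n
print(n, flat_features)
```

The same helper can be reused to check the flattened size of any of the later networks before writing their first `nn.Linear` layer.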

- Training and evaluating the network on the Fashion-MNIST dataset

batch_size = 256
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size=batch_size)

# Train and evaluate the model on the dataset using the GPU
def evaluate_accuracy_gpu(net, data_iter, device=None):
    """Compute the accuracy of a model on a dataset using a GPU."""
    if isinstance(net, torch.nn.Module):
        net.eval()  # switch to evaluation mode
        if not device:
            device = next(iter(net.parameters())).device
    # Number of correct predictions, number of predictions
    metric = d2l.Accumulator(2)
    with torch.no_grad():  # no gradients are needed for evaluation
        for X, y in data_iter:
            if isinstance(X, list):
                X = [x.to(device) for x in X]
            else:
                X = X.to(device)
            y = y.to(device)
            metric.add(d2l.accuracy(net(X), y), y.numel())
    return metric[0] / metric[1]

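# All later training runs rely on this function
def train_ch6(net, train_iter, test_iter, num_epochs, lr, device):
    """Train a model with a GPU (defined in Chapter 6)."""
    def init_weights(m):
        if type(m) == nn.Linear or type(m) == nn.Conv2d:
            nn.init.xavier_uniform_(m.weight)
    net.apply(init_weights)
    print('training on', device)
    net.to(device)  # move the model to the GPU
    optimizer = torch.optim.SGD(net.parameters(), lr=lr)
    loss = nn.CrossEntropyLoss()
    animator = d2l.Animator(xlabel='epoch', xlim=[1, num_epochs],
                            legend=['train loss', 'train acc', 'test acc'])
    timer, num_batches = d2l.Timer(), len(train_iter)
    for epoch in range(num_epochs):
        # Sum of training loss, number of correct predictions, number of examples
        metric = d2l.Accumulator(3)
        net.train()
        for i, (X, y) in enumerate(train_iter):
            timer.start()
            optimizer.zero_grad()
            X, y = X.to(device), y.to(device)  # move inputs and labels to the GPU
            y_hat = net(X)
            l = loss(y_hat, y)
            l.backward()  # compute gradients
            optimizer.step()  # update parameters
            with torch.no_grad():
                metric.add(l * X.shape[0], d2l.accuracy(y_hat, y), X.shape[0])
            timer.stop()
        train_l = metric[0] / metric[2]
        # Assumed to mirror d2l.train_ch6: also report training accuracy and
        # evaluate on the test set once per epoch
        train_acc = metric[1] / metric[2]
        test_acc = evaluate_accuracy_gpu(net, test_iter)
        animator.add(epoch + 1, (train_l, train_acc, test_acc))
    print(f'loss {train_l:.3f}, train acc {train_acc:.3f}, '
          f'test acc {test_acc:.3f}')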

# Train and evaluate
lr, num_epochs = 0.9, 10
train_ch6(net, train_iter, test_iter, num_epochs, lr, d2l.try_gpu())
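The training loop multiplies each batch loss by the batch size before accumulating it, because `nn.CrossEntropyLoss` returns the batch mean by default. Dividing the accumulated sum by the number of examples then gives a per-example average even when the last batch is smaller. The following is a minimal stand-in for that bookkeeping with made-up batch statistics; `RunningSums` is a hypothetical name, not the `d2l.Accumulator` class itself.

```python
class RunningSums:
    """Keeps n running totals (a simplified stand-in for an accumulator)."""
    def __init__(self, n):
        self.data = [0.0] * n

    def add(self, *args):
        self.data = [a + float(b) for a, b in zip(self.data, args)]

    def __getitem__(self, idx):
        return self.data[idx]

# (mean loss, number correct, batch size) for three made-up batches
batches = [(0.9, 150, 256), (0.7, 180, 256), (0.5, 40, 48)]

metric = RunningSums(3)
for mean_loss, num_correct, batch_size in batches:
    # Weight the batch mean by the batch size to recover the summed loss
    metric.add(mean_loss * batch_size, num_correct, batch_size)

train_loss = metric[0] / metric[2]
train_acc = metric[1] / metric[2]
print(f'loss {train_loss:.3f}, acc {train_acc:.3f}')
```

Averaging the three batch means directly would give 0.7, which overweights the small final batch; the weighted version is what the loop above reports.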

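AlexNet: a deep convolutional neural network

- Relationship to LeNet

  - Essentially a deeper and larger LeNet

  - Introduces dropout

  - Introduces max pooling

  - Provides an end-to-end approach (from raw pixels to class predictions)

  - The activation function changes from sigmoid to ReLU (mitigates vanishing gradients)

  - Dropout layers are added after the hidden fully connected layers

  - Data augmentation

  - In effect, more hyperparameters of the network structure are tuned

- Code implementation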

import torch
from torch import nn
from d2l import torch as d2l

net = nn.Sequential(
    nn.Conv2d(1, 96, kernel_size=11, stride=4, padding=1), nn.ReLU(),
    nn.MaxPool2d(kernel_size=3, stride=2),
    nn.Conv2d(96, 256, kernel_size=5, padding=2), nn.ReLU(),
    nn.MaxPool2d(kernel_size=3, stride=2),
    nn.Conv2d(256, 384, kernel_size=3, padding=1), nn.ReLU(),
    nn.Conv2d(384, 384, kernel_size=3, padding=1), nn.ReLU(),
    nn.Conv2d(384, 256, kernel_size=3, padding=1), nn.ReLU(),
    nn.MaxPool2d(kernel_size=3, stride=2), nn.Flatten(),
    nn.Linear(6400, 4096), nn.ReLU(), nn.Dropout(p=0.5),
    nn.Linear(4096, 4096), nn.ReLU(), nn.Dropout(p=0.5),
    nn.Linear(4096, 10))

# Build a single-channel input and inspect the output shape of each layer
X = torch.randn(1, 1, 224, 224)
for layer in net:
    X = layer(X)
    print(layer.__class__.__name__, 'Output shape:\t', X.shape)

# Validate on a dataset.
# Fashion-MNIST images have a lower resolution than ImageNet images,
# so they are upsampled to 224×224
batch_size = 128
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size, resize=224)

lr, num_epochs = 0.01, 10
d2l.train_ch6(net, train_iter, test_iter, num_epochs, lr, d2l.try_gpu())
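The dropout layers after the two hidden fully connected layers zero each activation with probability p during training and rescale the survivors so that the expected value stays the same. A minimal pure-Python sketch of this "inverted dropout" idea follows; the `dropout` function here is written for this note only and is not the `nn.Dropout` implementation.

```python
import random

def dropout(values, p, rng):
    """Zero each value with probability p and scale the rest by 1 / (1 - p)."""
    if p >= 1.0:
        return [0.0 for _ in values]
    keep_scale = 1.0 / (1.0 - p)
    return [v * keep_scale if rng.random() >= p else 0.0 for v in values]

rng = random.Random(0)           # fixed seed so the run is repeatable
activations = [1.0] * 10000
dropped = dropout(activations, p=0.5, rng=rng)

kept_fraction = sum(1 for v in dropped if v != 0.0) / len(dropped)
mean_after = sum(dropped) / len(dropped)
print(f'kept {kept_fraction:.2f}, mean after dropout {mean_after:.2f}')
```

At evaluation time (after `net.eval()`), dropout is switched off and activations pass through unchanged, which is why the rescaling is done during training.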

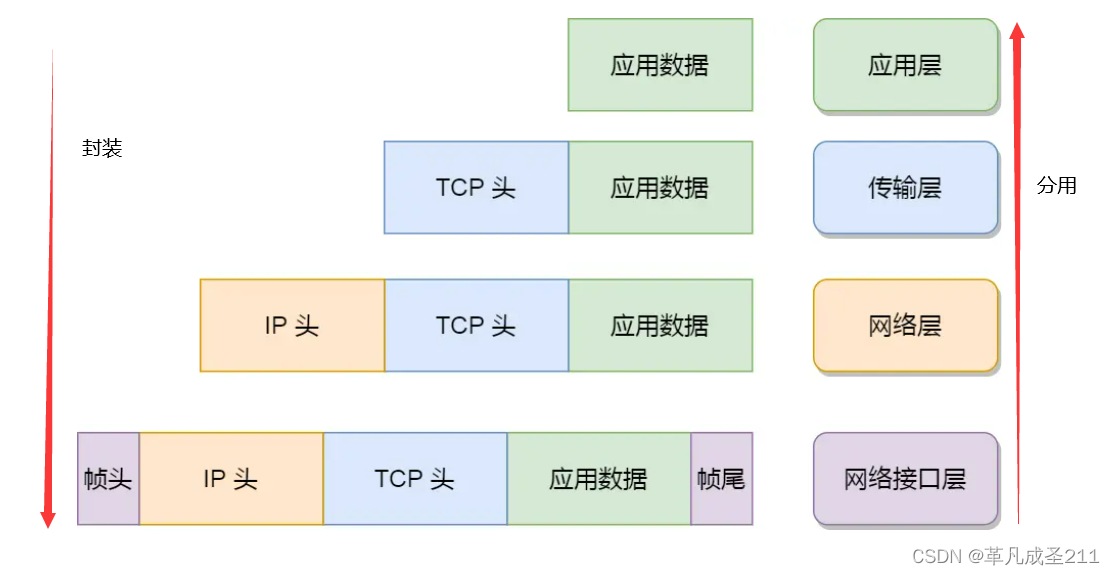

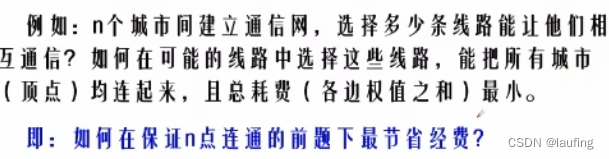
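To see why moving from sigmoid to ReLU mitigates vanishing gradients: the derivative of the sigmoid never exceeds 0.25, so the activation derivatives multiplied along a chain of sigmoid layers shrink geometrically, whereas the ReLU derivative is exactly 1 for positive inputs. The sketch below compares only these activation-derivative factors and ignores the weights, which is a simplifying assumption.

```python
import math

def sigmoid_grad(x):
    """Derivative of the sigmoid: s(x) * (1 - s(x)), at most 0.25."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)

def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0

depth = 8   # number of stacked activations
x = 0.5     # an arbitrary positive pre-activation, reused at every layer

sigmoid_factor = sigmoid_grad(x) ** depth   # bounded by 0.25 ** depth
relu_factor = relu_grad(x) ** depth         # stays 1 for positive inputs
print(f'sigmoid: {sigmoid_factor:.2e}, relu: {relu_factor:.1f}')
```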

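Building networks from VGG blocks

- Basic architecture: several VGG blocks followed by fully connected layers

- Each VGG variant is named after its number of layers, which depends on how many times the layers are repeated

- Architecture diagram comparison

- Comparison of the three networks so far

  - LeNet

    - 2 convolution + pooling layers

    - 2 hidden fully connected layers

  - AlexNet

    - Larger and deeper

    - ReLU, dropout, data augmentation

  - VGG

    - A larger and deeper AlexNet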

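- Summary

  - VGG uses repeatable convolutional blocks to build deeper convolutional neural networks

  - Different numbers of blocks and different hyperparameters give variants of different complexity

- Implementing the VGG network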

import torch
from torch import nn
from d2l import torch as d2l

def vgg_block(num_convs, in_channels, out_channels):
    layers = []
    for _ in range(num_convs):
        layers.append(
            nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1))
        layers.append(nn.ReLU())
        in_channels = out_channels
    layers.append(nn.MaxPool2d(kernel_size=2, stride=2))
    return nn.Sequential(*layers)  # * unpacks the list into separate arguments

# 5 blocks; the number of convolutions and the output channels
# of each block are design choices
conv_arch = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))

def vgg(conv_arch):
    conv_blks = []
    in_channels = 1
    for (num_convs, out_channels) in conv_arch:
        conv_blks.append(vgg_block(num_convs, in_channels, out_channels))
        in_channels = out_channels
    return nn.Sequential(*conv_blks, nn.Flatten(),
                         nn.Linear(out_channels * 7 * 7, 4096), nn.ReLU(),
                         nn.Dropout(0.5), nn.Linear(4096, 4096), nn.ReLU(),
                         nn.Dropout(0.5), nn.Linear(4096, 10))

net = vgg(conv_arch)

# Inspect the output shape of each block
X = torch.randn(size=(1, 1, 224, 224))
for blk in net:
    X = blk(X)
    print(blk.__class__.__name__, 'output shape:\t', X.shape)
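The `conv_arch` tuple can be checked by hand: every block halves the height and width, and the number of weight layers gives the variant its name. The sketch below is plain arithmetic on the same tuple; counting the three fully connected layers as weight layers follows the usual VGG naming convention.

```python
conv_arch = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))

num_conv_layers = sum(num_convs for num_convs, _ in conv_arch)
num_fc_layers = 3                      # the three nn.Linear layers in vgg()
num_weight_layers = num_conv_layers + num_fc_layers

size = 224
for _ in conv_arch:
    size //= 2                         # each block ends with MaxPool2d(2, stride=2)

flat_features = conv_arch[-1][1] * size * size
print(f'VGG-{num_weight_layers}: final map {size}x{size}, '
      f'{flat_features} flattened features')
```

This is where the `out_channels * 7 * 7` in the first linear layer comes from; a different input resolution would require a different factor.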

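Network in Network (NiN)

- Core idea: remove the fully connected layers and replace them entirely with convolutions

- The 1×1 convolution kernel is the key

- Network architecture diagram

- The problem with fully connected layers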

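  - A convolutional layer needs relatively few parameters: $c_i \times c_o \times k^2$, where $k$ is the kernel size

  - Parameters needed by a fully connected layer

    - The first fully connected layer after the convolutions: $\text{input channels} \times \text{input height} \times \text{input width} \times \text{number of neurons in this layer}$

    - A fully connected layer that follows another fully connected layer: $\text{outputs of the previous layer} \times \text{number of neurons in this layer}$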
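Plugging in the layer sizes of the networks in these notes shows how lopsided the two counts are. The sketch counts weights only (biases are ignored for simplicity), and the function names are introduced just for this illustration.

```python
def conv_params(c_in, c_out, k):
    """Weights in a k x k convolution: c_i * c_o * k^2."""
    return c_in * c_out * k * k

def first_fc_params(channels, height, width, neurons):
    """Weights in the first fully connected layer after the convolutions."""
    return channels * height * width * neurons

# Last convolution of each network vs. its first fully connected layer
rows = [
    ('LeNet', conv_params(6, 16, 5), first_fc_params(16, 5, 5, 120)),
    ('AlexNet', conv_params(384, 256, 3), first_fc_params(256, 5, 5, 4096)),
    ('VGG', conv_params(512, 512, 3), first_fc_params(512, 7, 7, 4096)),
]
for name, conv, fc in rows:
    print(f'{name:8s} conv {conv:>11,d}   first FC {fc:>13,d}')
```

In each case the first fully connected layer holds far more weights than the convolution feeding it, which is the cost NiN sets out to avoid.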

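- NiN block structure

- Architecture summary

  - No fully connected layers

  - Alternates NiN blocks with max-pooling layers of stride 2

  - Global average pooling produces the final output; the number of channels it receives equals the number of classes

- Summary

  - A 1×1 convolutional layer acts like a fully connected layer applied at every pixel, and the ReLU after it adds nonlinearity

  - NiN replaces the fully connected layers with a global average pooling layer

  - Less prone to overfitting, with fewer parameters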
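The claim that a 1×1 convolution behaves like a fully connected layer applied at every pixel can be checked on a tiny example. The sketch below uses nested lists and a deliberately naive convolution (no padding, stride 1, no bias); `conv2d` and `dense` are illustrative helpers, not PyTorch functions.

```python
def conv2d(x, weight):
    """Naive cross-correlation without padding or bias.

    x:      [c_in][h][w] nested lists
    weight: [c_out][c_in][k][k] nested lists
    """
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    k = len(weight[0][0])
    out = []
    for filt in weight:
        channel = []
        for i in range(h - k + 1):
            row = []
            for j in range(w - k + 1):
                row.append(sum(filt[c][di][dj] * x[c][i + di][j + dj]
                               for c in range(c_in)
                               for di in range(k) for dj in range(k)))
            channel.append(row)
        out.append(channel)
    return out

def dense(vector, matrix):
    """Fully connected layer without bias; matrix is [c_out][c_in]."""
    return [sum(wt * v for wt, v in zip(row, vector)) for row in matrix]

# 3 input channels, a 2x2 image, 2 output channels
x = [[[1.0, 2.0], [3.0, 4.0]],
     [[0.5, 0.0], [1.5, 2.5]],
     [[2.0, 1.0], [0.0, 1.0]]]
matrix = [[1.0, -1.0, 0.5],
          [0.0, 2.0, 1.0]]
# The same weights viewed as a [c_out][c_in][1][1] convolution kernel
weight = [[[[wt]] for wt in row] for row in matrix]

conv_out = conv2d(x, weight)
for i in range(2):
    for j in range(2):
        pixel = [x[c][i][j] for c in range(3)]   # channel vector at (i, j)
        assert dense(pixel, matrix) == [conv_out[o][i][j] for o in range(2)]
print(conv_out)
```

The same weight matrix is shared by all pixels, so the 1×1 convolution mixes channels without mixing spatial positions.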

import torch
from torch import nn
from d2l import torch as d2l

def nin_block(in_channels, out_channels, kernel_size, strides, padding):
    # One ordinary convolution followed by two 1×1 convolutions
    return nn.Sequential(
        nn.Conv2d(in_channels, out_channels, kernel_size, strides, padding),
        nn.ReLU(), nn.Conv2d(out_channels, out_channels, kernel_size=1),
        nn.ReLU(), nn.Conv2d(out_channels, out_channels, kernel_size=1),
        nn.ReLU())

net = nn.Sequential(
    nin_block(1, 96, kernel_size=11, strides=4, padding=0),
    nn.MaxPool2d(3, stride=2),
    nin_block(96, 256, kernel_size=5, strides=1, padding=2),
    nn.MaxPool2d(3, stride=2),
    nin_block(256, 384, kernel_size=3, strides=1, padding=1),
    nn.MaxPool2d(3, stride=2), nn.Dropout(0.5),
    # The last block outputs 10 channels, one per class
    nin_block(384, 10, kernel_size=3, strides=1, padding=1),
    nn.AdaptiveAvgPool2d((1, 1)),
    # Finally flatten the output to shape (batch size, 10)
    nn.Flatten())

# Inspect the output shape of each block
X = torch.rand(size=(1, 1, 224, 224))
for layer in net:
    X = layer(X)
    print(layer.__class__.__name__, 'output shape:\t', X.shape)
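The last two layers of the network turn a stack of 10 feature maps into 10 class scores by averaging each map to a single number. A minimal sketch of that global average pooling step on a toy input follows; `global_avg_pool` is an illustrative helper, not `nn.AdaptiveAvgPool2d`.

```python
def global_avg_pool(feature_maps):
    """Average each [h][w] channel map down to a single value."""
    return [sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
            for channel in feature_maps]

# Toy input: 10 channels (one per class) of size 5x5,
# where channel c is filled with the value c
feature_maps = [[[float(c)] * 5 for _ in range(5)] for c in range(10)]

logits = global_avg_pool(feature_maps)
predicted_class = max(range(len(logits)), key=logits.__getitem__)
print(logits, predicted_class)
```

Because this step has no weights at all, the classifier head adds no parameters, in contrast to the large fully connected layers of AlexNet and VGG.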

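GoogLeNet

- Key feature: every block contains several paths computed in parallel

  - Fewer parameters, thanks to the added 1×1 convolutional layers

- Block structure

- Overall architecture

- Summary

  - An Inception block extracts information along four paths of convolutional and pooling layers with different hyperparameters

  - The main advantage is the small number of model parameters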
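The parameter saving from the 1×1 convolutions can be made concrete with one path of an Inception block. The numbers below (192 input channels, reduced to 16 by a 1×1 convolution, then expanded to 32 by a 5×5 convolution) match the third path of the first Inception block in the code for this section; biases are ignored, and `conv_weights` is a helper introduced only for this sketch.

```python
def conv_weights(c_in, c_out, k):
    """Number of weights in a k x k convolution (biases ignored)."""
    return c_in * c_out * k * k

in_channels, mid_channels, out_channels = 192, 16, 32

# A 5x5 convolution applied directly to all input channels
direct = conv_weights(in_channels, out_channels, 5)
# A 1x1 convolution that first reduces the channels, then the 5x5 convolution
bottleneck = (conv_weights(in_channels, mid_channels, 1)
              + conv_weights(mid_channels, out_channels, 5))
print(direct, bottleneck, round(direct / bottleneck, 1))
```

Reducing the channel count before the expensive 5×5 kernel cuts the weights of this path by roughly a factor of ten.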

- Code implementation

import torch
from torch import nn
from torch.nn import functional as F
from d2l import torch as d2l

class Inception(nn.Module):
    # c1 to c4 are the output channels of the four paths
    def __init__(self, in_channels, c1, c2, c3, c4, **kwargs):
        super(Inception, self).__init__(**kwargs)
        # Path 1: a single 1×1 convolution
        self.p1_1 = nn.Conv2d(in_channels, c1, kernel_size=1)
        # Path 2: a 1×1 convolution followed by a 3×3 convolution
        self.p2_1 = nn.Conv2d(in_channels, c2[0], kernel_size=1)
        self.p2_2 = nn.Conv2d(c2[0], c2[1], kernel_size=3, padding=1)
        # Path 3: a 1×1 convolution followed by a 5×5 convolution
        self.p3_1 = nn.Conv2d(in_channels, c3[0], kernel_size=1)
        self.p3_2 = nn.Conv2d(c3[0], c3[1], kernel_size=5, padding=2)
        # Path 4: a 3×3 max pooling followed by a 1×1 convolution
        self.p4_1 = nn.MaxPool2d(kernel_size=3, stride=1, padding=1)
        self.p4_2 = nn.Conv2d(in_channels, c4, kernel_size=1)

    def forward(self, x):
        p1 = F.relu(self.p1_1(x))
        p2 = F.relu(self.p2_2(F.relu(self.p2_1(x))))
        p3 = F.relu(self.p3_2(F.relu(self.p3_1(x))))
        p4 = F.relu(self.p4_2(self.p4_1(x)))
        # Concatenate the four outputs along the channel dimension
        return torch.cat((p1, p2, p3, p4), dim=1)

b1 = nn.Sequential(nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3),
                   nn.ReLU(),
                   nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

b2 = nn.Sequential(nn.Conv2d(64, 64, kernel_size=1), nn.ReLU(),
                   nn.Conv2d(64, 192, kernel_size=3, padding=1),
                   nn.ReLU(),  # activation after the 3×3 convolution as well
                   nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

b3 = nn.Sequential(Inception(192, 64, (96, 128), (16, 32), 32),
                   Inception(256, 128, (128, 192), (32, 96), 64),
                   nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

b4 = nn.Sequential(Inception(480, 192, (96, 208), (16, 48), 64),
                   Inception(512, 160, (112, 224), (24, 64), 64),
                   Inception(512, 128, (128, 256), (24, 64), 64),
                   Inception(512, 112, (144, 288), (32, 64), 64),
                   Inception(528, 256, (160, 320), (32, 128), 128),
                   nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

b5 = nn.Sequential(Inception(832, 256, (160, 320), (32, 128), 128),
                   Inception(832, 384, (192, 384), (48, 128), 128),
                   nn.AdaptiveAvgPool2d((1, 1)), nn.Flatten())

net = nn.Sequential(b1, b2, b3, b4, b5, nn.Linear(1024, 10))

# Inspect the output shape of each block
X = torch.rand(size=(1, 1, 96, 96))
for layer in net:
    X = layer(X)
    print(layer.__class__.__name__, 'output shape:\t', X.shape)
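Because the four paths are concatenated along the channel dimension, the output channels of an Inception block are `c1 + c2[1] + c3[1] + c4`, and each block's `in_channels` must equal the previous block's output. The sketch below checks this chain for the nine blocks above with plain arithmetic; `inception_out_channels` is a helper introduced only for this check.

```python
def inception_out_channels(c1, c2, c3, c4):
    """Channels after concatenating the four paths along dim=1."""
    return c1 + c2[1] + c3[1] + c4

# (in_channels, c1, c2, c3, c4) for the Inception blocks in b3, b4 and b5
blocks = [
    (192, 64, (96, 128), (16, 32), 32),
    (256, 128, (128, 192), (32, 96), 64),
    (480, 192, (96, 208), (16, 48), 64),
    (512, 160, (112, 224), (24, 64), 64),
    (512, 128, (128, 256), (24, 64), 64),
    (512, 112, (144, 288), (32, 64), 64),
    (528, 256, (160, 320), (32, 128), 128),
    (832, 256, (160, 320), (32, 128), 128),
    (832, 384, (192, 384), (48, 128), 128),
]

channels = blocks[0][0]
for in_channels, c1, c2, c3, c4 in blocks:
    assert in_channels == channels, 'input must match the previous output'
    channels = inception_out_channels(c1, c2, c3, c4)
print(channels)  # 1024, the input size of the final nn.Linear layer
```

The max-pooling layers between the blocks change only the height and width, so they do not affect this channel bookkeeping.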