1. Using CephFS with K8s

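CephFS is Ceph's file system, built on RADOS (Reliable Autonomic Distributed Object Store). It splits file data into objects and distributes them across multiple storage nodes in the cluster to achieve high availability and scalability.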

First, install the ceph-common tools on every k8s node:

yum -y install epel-release ceph-common
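
After the install, it can help to confirm that the client tools are actually reachable on each node. The following is a minimal sketch, assuming ceph-common puts the ceph CLI and the mount.ceph helper on PATH (on some systems mount.ceph lives in /usr/sbin, which may not be on a non-root PATH). The function name missing_tools is made up for illustration.

```shell
# Print the name of every given tool that is NOT found on PATH.
# Prints nothing when all of the tools are present.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Example (run on each k8s node):
# missing_tools ceph mount.ceph
```

An empty result means both tools were found; any printed name points at a node where the install did not complete.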

2. Static provisioning

Static provisioning requires creating the CephFS file system in advance and then handing it to K8s.

2.1 Create the FS and an authorized user in Ceph

Create the storage pools:

# Data pool
ceph osd pool create cephfs_data_pool 16

# Metadata pool
ceph osd pool create ceph_metadata_pool 8

Create the FS (ceph fs new expects the metadata pool first, then the data pool):

ceph fs new k8s-cephfs ceph_metadata_pool cephfs_data_pool

Create the user fs-user and grant it access to the cephfs_data_pool pool:

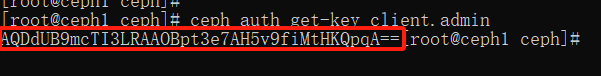
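
The command for this step only appears in the screenshot. One plausible way to create such a user is ceph auth get-or-create. In the sketch below, the capability strings (read on mon, read/write on mds, read/write on the data pool) are an assumption about what the author granted, and the create_fs_user helper and the CEPH override variable are hypothetical names used for illustration.

```shell
# Create (or fetch) a Ceph client with caps limited to one data pool.
# CEPH can be overridden, e.g. CEPH=echo for a dry run that only prints
# the arguments instead of contacting the cluster.
create_fs_user() {
  # $1 = user name without the "client." prefix, $2 = data pool name
  "${CEPH:-ceph}" auth get-or-create "client.$1" \
    mon 'allow r' \
    mds 'allow rw' \
    osd "allow rw pool=$2"
}

# Dry run:  CEPH=echo create_fs_user fs-user cephfs_data_pool
# Real run: create_fs_user fs-user cephfs_data_pool
```

The dry run is a cheap way to review the exact caps before they are written to the cluster.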

View the admin user's key:

ceph auth get-key client.admin

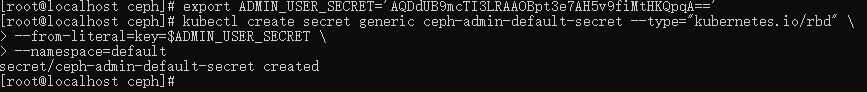

2.2 Create a secret in k8s

export ADMIN_USER_SECRET='AQDdUB9mcTI3LRAAOBpt3e7AH5v9fiMtHKQpqA=='

kubectl create secret generic ceph-admin-default-secret --type="kubernetes.io/rbd" \
  --from-literal=key="$ADMIN_USER_SECRET" \
  --namespace=default
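
The same secret can also be kept as a manifest. The sketch below renders an equivalent Secret, relying on the fact that values under data must be base64-encoded (which is what --from-literal does behind the scenes). The render_ceph_secret helper is a hypothetical name, not part of kubectl.

```shell
# Render a Secret manifest equivalent to the kubectl create secret command.
render_ceph_secret() {
  # $1 = secret name, $2 = namespace, $3 = raw Ceph key
  encoded=$(printf '%s' "$3" | base64 | tr -d '\n')
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: $1
  namespace: $2
type: kubernetes.io/rbd
data:
  key: $encoded
EOF
}

# Example:
# render_ceph_secret ceph-admin-default-secret default "$ADMIN_USER_SECRET" | kubectl apply -f -
```

Writing the manifest to a file instead of piping it makes the secret reviewable, at the cost of having the encoded key on disk.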

2.3 Use CephFS storage directly in a pod

vi cephfs-test-pod.yml

apiVersion: v1
kind: Pod
metadata:
  name: cephfs-test-pod
spec:
  containers:
    - name: nginx
      image: nginx
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: data-volume
          mountPath: /usr/share/nginx/html/
  volumes:
    - name: data-volume
      cephfs:
        monitors: ["11.0.1.140:6789"]
        path: /
        user: admin
        secretRef:
          name: ceph-admin-default-secret

kubectl apply -f cephfs-test-pod.yml

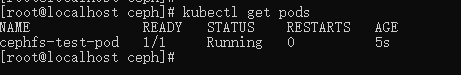

Check the pod:

kubectl get pods

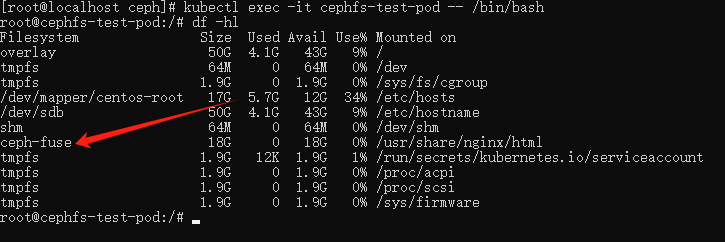

You can exec into the pod and check the mounted file systems:

kubectl exec -it cephfs-test-pod -- /bin/bash

df -hl
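
To confirm from outside the pod that the path is really backed by CephFS, the mount table can be filtered by file system type. This is a rough sketch that assumes the kernel CephFS client is in use, in which case the type column in /proc/mounts reads ceph (a ceph-fuse mount would show a different type). The ceph_mount_points helper is a hypothetical name.

```shell
# Read /proc/mounts-style lines on stdin and print the mount point of
# every entry whose file system type (third field) is "ceph".
ceph_mount_points() {
  awk '$3 == "ceph" { print $2 }'
}

# Example:
# kubectl exec cephfs-test-pod -- cat /proc/mounts | ceph_mount_points
```

For the pod above, the nginx html directory should appear in the result if the volume mounted as intended.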

2.4 Create a PV backed by CephFS

vi cephfs-test-pv.yml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: cephfs-test-pv
spec:
  accessModes: ["ReadWriteOnce"]
  capacity:
    storage: 2Gi
  persistentVolumeReclaimPolicy: Retain
  cephfs:
    monitors: ["11.0.1.140:6789"]
    path: /
    user: admin
    secretRef:
      name: ceph-admin-default-secret

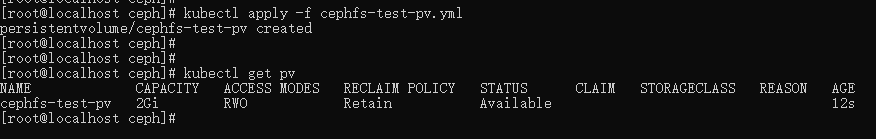

kubectl apply -f cephfs-test-pv.yml

Create a PVC that binds to the PV:

vi cephfs-test-pvc.yml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cephfs-test-pvc
spec:
  accessModes: ["ReadWriteOnce"]
  resources:
    requests:
      storage: 2Gi

kubectl apply -f cephfs-test-pvc.yml

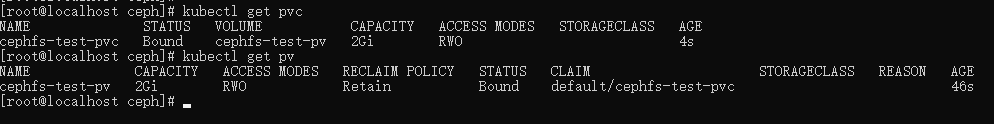

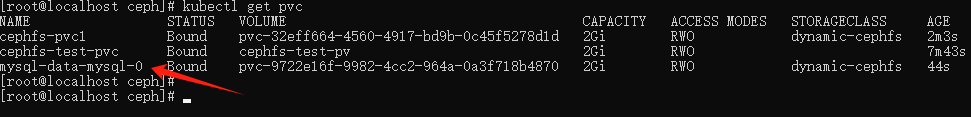

Check the pvc and pv:

kubectl get pvc

kubectl get pv
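
Rather than re-running the two commands until the status changes, the binding can be awaited. The sketch below uses kubectl wait with a jsonpath condition, which assumes a reasonably recent kubectl (the jsonpath form of --for arrived in v1.23). The wait_pvc_bound helper and the KUBECTL override variable are hypothetical names.

```shell
# Block until the given PVC reports phase Bound, or until the timeout.
# KUBECTL can be overridden (e.g. KUBECTL=echo) to print the arguments
# instead of talking to a cluster.
wait_pvc_bound() {
  # $1 = PVC name, $2 = optional timeout (default 60s)
  "${KUBECTL:-kubectl}" wait --for=jsonpath='{.status.phase}'=Bound \
    "pvc/$1" --timeout="${2:-60s}"
}

# Example:
# wait_pvc_bound cephfs-test-pvc
```

If the wait times out, comparing the access modes and requested capacity of the PVC with those of the PV is usually the first thing to check.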

Test mounting the pvc in a pod:

vi cephfs-test-pod1.yml

apiVersion: v1
kind: Pod
metadata:
  name: cephfs-test-pod1
spec:
  containers:
    - name: nginx
      image: nginx
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: data-volume
          mountPath: /usr/share/nginx/html/
  volumes:
    - name: data-volume
      persistentVolumeClaim:
        claimName: cephfs-test-pvc
        readOnly: false

kubectl apply -f cephfs-test-pod1.yml

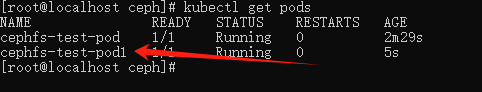

Check the pod:

kubectl get pods

3. Dynamic provisioning

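Kubernetes does not ship a built-in dynamic provisioner for CephFS, so this section uses the community cephfs-provisioner plugin to implement dynamic provisioning: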

vi external-storage-cephfs-provisioner.yml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: cephfs-provisioner
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cephfs-provisioner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["create", "get", "delete"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cephfs-provisioner
subjects:
  - kind: ServiceAccount
    name: cephfs-provisioner
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cephfs-provisioner
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: cephfs-provisioner
  namespace: kube-system
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["create", "get", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: cephfs-provisioner
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: cephfs-provisioner
subjects:
  - kind: ServiceAccount
    name: cephfs-provisioner
    namespace: kube-system
---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: cephfs-provisioner
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: cephfs-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: cephfs-provisioner
    spec:
      containers:
        - name: cephfs-provisioner
          image: "registry.cn-chengdu.aliyuncs.com/ives/cephfs-provisioner:latest"
          env:
            - name: PROVISIONER_NAME
              value: ceph.com/cephfs
          command:
            - "/usr/local/bin/cephfs-provisioner"
          args:
            - "-id=cephfs-provisioner-1"
      # serviceAccountName replaces the deprecated serviceAccount field
      serviceAccountName: cephfs-provisioner

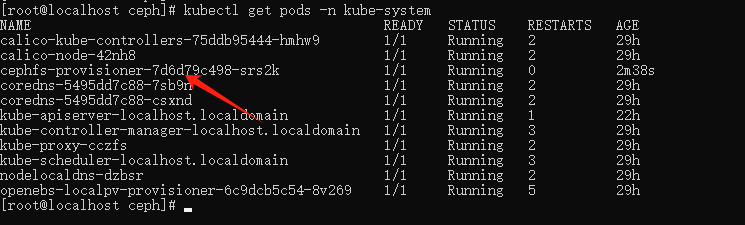

kubectl apply -f external-storage-cephfs-provisioner.yml

Check the pod:

kubectl get pods -n kube-system
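
Because later steps depend on the provisioner being up, it may be worth waiting for the rollout instead of eyeballing the pod list. This is a small sketch built on kubectl rollout status. The wait_provisioner_ready helper and the KUBECTL override variable are hypothetical names, and the 120s default timeout is an arbitrary choice.

```shell
# Wait until the cephfs-provisioner Deployment has finished rolling out.
# KUBECTL can be overridden (e.g. KUBECTL=echo) for a dry run.
wait_provisioner_ready() {
  # $1 = optional timeout (default 120s)
  "${KUBECTL:-kubectl}" rollout status deployment/cephfs-provisioner \
    --namespace kube-system --timeout="${1:-120s}"
}

# Example:
# wait_provisioner_ready
```

A non-zero exit status means the Deployment did not become ready in time, in which case the pod's events and logs are the next place to look.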

3.1 Create the StorageClass

vi cephfs-sc.yml

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: dynamic-cephfs
provisioner: ceph.com/cephfs
parameters:
  monitors: 11.0.1.140:6789
  adminId: admin
  adminSecretName: ceph-admin-default-secret
  adminSecretNamespace: default
  claimRoot: /volumes/kubernetes

kubectl apply -f cephfs-sc.yml

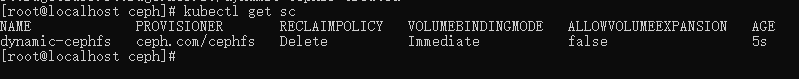

Check the SC:

kubectl get sc

3.2 Test creating a PVC

vi cephfs-pvc1.yml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: cephfs-pvc1
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: dynamic-cephfs
  resources:
    requests:
      storage: 2Gi

kubectl apply -f cephfs-pvc1.yml

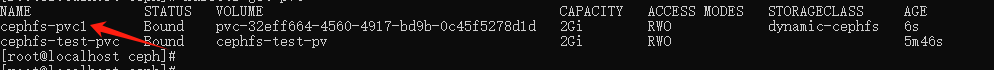

Check the pvc:

kubectl get pvc
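
With dynamic provisioning the PV is created for you, so its name is not known in advance. One way to find it is to read spec.volumeName from the bound PVC, as sketched below. The pvc_volume_name helper and the KUBECTL override variable are hypothetical names.

```shell
# Print the name of the PV that a PVC is bound to (empty if still Pending).
# KUBECTL can be overridden (e.g. KUBECTL=echo) for a dry run.
pvc_volume_name() {
  # $1 = PVC name, $2 = optional namespace (default: default)
  "${KUBECTL:-kubectl}" get pvc "$1" --namespace "${2:-default}" \
    -o jsonpath='{.spec.volumeName}'
}

# Example: inspect the PV that the provisioner created for cephfs-pvc1.
# kubectl get pv "$(pvc_volume_name cephfs-pvc1)"
```

An empty result while the PVC stays Pending usually points back at the provisioner pod or the StorageClass parameters.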

Create a pod that uses the pvc above:

vi cephfs-test-pod2.yml

apiVersion: v1
kind: Pod
metadata:
  name: cephfs-test-pod2
spec:
  containers:
    - name: nginx
      image: nginx
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: data-volume
          mountPath: /usr/share/nginx/html/
  volumes:
    - name: data-volume
      persistentVolumeClaim:
        claimName: cephfs-pvc1
        readOnly: false

kubectl apply -f cephfs-test-pod2.yml

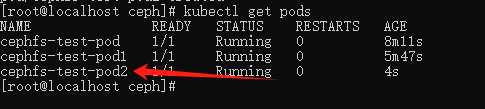

Check the pod:

kubectl get pods

3.3 Test dynamic creation of pv and pvc with volumeClaimTemplates

vi mysql.yml

# Assumes the mysql namespace already exists (kubectl create namespace mysql)
# headless service
apiVersion: v1
kind: Service
metadata:
  name: mysql-hl
  namespace: mysql
  labels:
    app: mysql-hl
spec:
  clusterIP: None
  ports:
    - name: mysql-port
      port: 3306
  selector:
    app: mysql
---
# NodePort service
apiVersion: v1
kind: Service
metadata:
  name: mysql-np
  namespace: mysql
  labels:
    app: mysql-np
spec:
  ports:
    - name: master-port
      port: 3306
      nodePort: 31306
      targetPort: 3306
  selector:
    app: mysql
  type: NodePort
  externalTrafficPolicy: Cluster
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
  # Same namespace as the two services, so serviceName and the selectors resolve
  namespace: mysql
spec:
  serviceName: "mysql-hl"
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - name: mysql
          image: mysql:8.0.20
          ports:
            - containerPort: 3306
              name: master-port
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: "root"
            - name: TZ
              value: "Asia/Shanghai"
          volumeMounts:
            - name: mysql-data
              mountPath: /var/lib/mysql
  volumeClaimTemplates:
    - metadata:
        name: mysql-data
      spec:
        accessModes: ["ReadWriteOnce"]
        storageClassName: dynamic-cephfs
        resources:
          requests:
            storage: 2Gi

kubectl apply -f mysql.yml

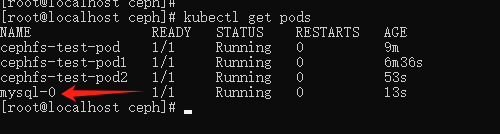

Finally, check the resulting pod, pvc, and pv.
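
The commands for these last checks are not shown in the text. A sketch of what they might look like follows; it searches all namespaces so that it works regardless of which namespace the StatefulSet ended up in. The show_mysql_storage helper and the KUBECTL override variable are hypothetical names. For a StatefulSet named mysql with a claim template named mysql-data, the PVC of the first replica is named mysql-data-mysql-0.

```shell
# List the MySQL pod plus the PVCs and PVs in the cluster.
# KUBECTL can be overridden (e.g. KUBECTL=echo) for a dry run.
show_mysql_storage() {
  k="${KUBECTL:-kubectl}"
  "$k" get pods --all-namespaces -l app=mysql
  "$k" get pvc --all-namespaces
  "$k" get pv
}

# Example:
# show_mysql_storage
```

The PVC should report Bound with the dynamic-cephfs storage class, and a matching PV should appear in the last listing.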
