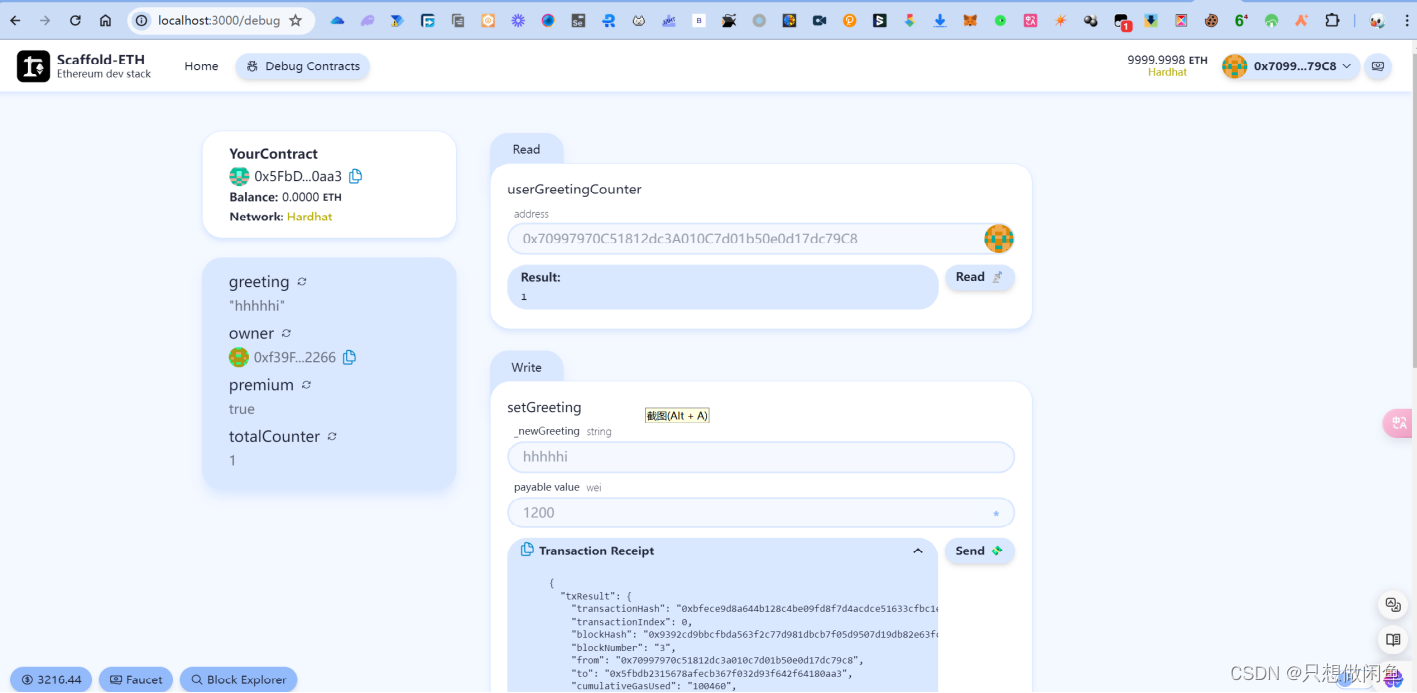

Flying Robot Column (14): Extracting human skeleton keypoint motion with the Kinect DK (CSDN blog). It covers the Azure Kinect DK, which combines a depth sensor, a spatial microphone array, a video camera, and an orientation sensor in one small device, together with the Sensor SDK, the Body Tracking SDK, and the Azure AI Speech SDK. https://blog.csdn.net/hhaowang/article/details/137856103?spm=1001.2014.3001.5501

Ubuntu 18.04/20.04 CV environment setup (Part 2): gesture recognition with TRTpose + Kinect DK human skeleton recognition (CSDN blog). It uses trt_pose_ros with a Kinect for gesture and skeleton recognition, as a reference for robot motion control. https://blog.csdn.net/hhaowang/article/details/126761139?spm=1001.2014.3001.5501

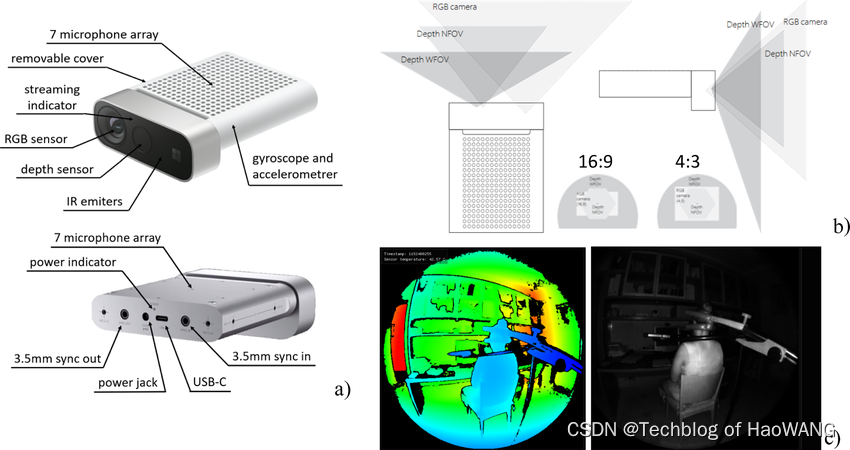

1. Multi-device calibration method

1.1 Advantages of multiple devices

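The main benefits of using several Azure Kinect DK devices for vision detection and skeleton-keypoint motion tracking are:

- Wider coverage: several devices cover a wider range of viewpoints, capture more complete scene data, and improve the application's perception.

- Fewer occlusions: the devices complement each other's coverage, reducing the occlusions caused by a single viewpoint and improving detection and tracking accuracy.

- Higher accuracy and robustness: fusing the data from several devices improves the accuracy and robustness of the final result and reduces the effect of noise and errors.

- Higher spatial resolution: several devices increase the overall spatial resolution and capture finer detail.

- Better fault tolerance: if one device fails, the others keep working.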

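Each Azure Kinect DK device has 3.5 mm synchronization ports (Sync in and Sync out) that can be used to daisy-chain several devices. Once the devices are connected, software can coordinate the trigger timing between them.

This article describes how to connect and synchronize the devices.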
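The cabling determines each device's role: the device that drives Sync out without receiving anything on Sync in acts as the master, and every other device in the chain must receive the trigger on its Sync in port. The sketch below encodes that rule in plain C++ so it can be checked without hardware. `SyncJacks`, `Role`, and `assign_roles` are hypothetical names; in a real program the two flags would come from `k4a::device::is_sync_in_connected()` and `is_sync_out_connected()`, as in `MultiDeviceCapturer` in Section 4. It is a slightly stricter variant of that class's rule, because it also requires the master's Sync in to be empty.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical summary of one device's 3.5 mm sync jacks. Real values would come from
// k4a::device::is_sync_in_connected() / is_sync_out_connected().
struct SyncJacks {
    bool sync_in;
    bool sync_out;
};

enum class Role { Master, Subordinate, Invalid };

// Assign a role to every device in a daisy chain. A lone device runs as the (standalone) master.
// Otherwise the first device with Sync out but no Sync in becomes the master, and every device with
// Sync in connected becomes a subordinate. Anything else is left Invalid (for example, no cables).
std::vector<Role> assign_roles(const std::vector<SyncJacks>& devices) {
    std::vector<Role> roles(devices.size(), Role::Invalid);
    if (devices.size() == 1) {
        roles[0] = Role::Master;
        return roles;
    }
    bool master_found = false;
    for (std::size_t i = 0; i < devices.size(); ++i) {
        const SyncJacks& d = devices[i];
        if (!master_found && d.sync_out && !d.sync_in) {
            roles[i] = Role::Master;
            master_found = true;
        } else if (d.sync_in) {
            roles[i] = Role::Subordinate;
        }
    }
    return roles;
}
```

For a three-device chain, `assign_roles({{false, true}, {true, true}, {true, false}})` yields master, subordinate, subordinate; the middle device forwards the trigger on its own Sync out.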

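Benefits of using multiple Azure Kinect DK devices

There are many reasons to use multiple Azure Kinect DK devices, including:

- Filling in occlusions. Although the Azure Kinect DK data transformations produce a single image, the two cameras (depth and RGB) are actually a small distance apart. This offset makes occlusions possible: an occlusion occurs when a foreground object blocks part of a background object from the view of one of the device's two cameras. In the resulting color image, the foreground object appears to cast a shadow on the background object.

For example, in the figure below, the left camera sees the gray pixel "P2", but the white foreground object blocks the right camera's IR beam, so the right camera has no data for "P2". Additional synchronized devices can supply the occluded data.

- Scanning objects in three dimensions.

- Raising the effective frame rate above 30 frames per second (FPS).

- Capturing multiple 4K color images of the same scene, all aligned to within 100 microseconds (μs) of the center of exposure.

- Increasing the spatial coverage of the cameras.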
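Once both depth maps are expressed in the same image grid, occlusion filling reduces to a per-pixel rule: keep the main camera's depth where it is valid and take the second camera's value where it is not. The sketch below assumes both maps have already been reprojected into the main color camera (as `main.cpp` in Section 4 does with `k4a::transformation::depth_image_to_color_camera`) and uses the Azure Kinect convention that a depth of 0 means "no return". `fill_occlusions` is a hypothetical helper, not part of the SDK.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Fill holes in the main depth map with the secondary camera's depth.
// Both inputs are flattened images of the same size, in millimetres, already in the same pixel grid.
// A value of 0 marks a pixel for which the camera received no IR return.
std::vector<uint16_t> fill_occlusions(const std::vector<uint16_t>& main_depth,
                                      const std::vector<uint16_t>& secondary_depth) {
    assert(main_depth.size() == secondary_depth.size());
    std::vector<uint16_t> fused(main_depth);
    for (std::size_t i = 0; i < fused.size(); ++i) {
        if (fused[i] == 0) {
            fused[i] = secondary_depth[i];  // may still be 0 if neither camera saw this pixel
        }
    }
    return fused;
}
```

The green-screen loop in `main.cpp` applies the same priority (main camera first, secondary camera only where the main depth is 0) when it builds its display mask.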

1.2 Connecting multiple devices

1.3 External triggering and timing settings

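2. Parameter settings

To reduce interference between multiple Azure Kinect DK devices, you can:

- Synchronize the devices: make sure all devices share a common time base so that their data stays consistent.

- Place the devices carefully: keep them from blocking one another and keep some distance between them. Limit view overlap to what the application needs, since calibration and occlusion filling both require a shared field of view.

- Separate the IR illumination in time: depth cameras interfere when they illuminate the scene simultaneously. The Azure Kinect DK does not expose selectable IR frequency channels; instead, offset the depth captures of the devices so they are at least 160 μs apart (the MIN_TIME_BETWEEN_DEPTH_CAMERA_PICTURES_USEC value in the code below).

- Use baffles: place shields between devices where appropriate to block direct interference.

- Adjust parameters: try tuning each device's brightness, contrast, and similar settings to suit the current environment and layout.

- Use data fusion: fuse the data collected by the devices algorithmically to reduce the effect of interference.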
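Time-multiplexing the depth captures is one way to apply the 160 μs rule. A minimal sketch, assuming the 160 μs gap from `MIN_TIME_BETWEEN_DEPTH_CAMERA_PICTURES_USEC` and that device 0 is the master: spread the N depth captures symmetrically around the shared color exposure and use each value as that device's `depth_delay_off_color_usec`. `depth_delays` is a hypothetical helper; for two devices it gives -80 μs and +80 μs, i.e. depth exposures 160 μs apart.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper: depth-to-color delay (microseconds) for each of num_devices devices,
// index 0 = master, so that consecutive depth captures are min_gap_usec apart and the whole
// sequence is centred on the common color exposure.
std::vector<int32_t> depth_delays(int num_devices, int32_t min_gap_usec) {
    std::vector<int32_t> delays;
    for (int i = 0; i < num_devices; ++i) {
        // (i - (N - 1) / 2) * gap, written as (2i - (N - 1)) * gap / 2 to stay in integers
        delays.push_back(static_cast<int32_t>((2 * i - (num_devices - 1)) * min_gap_usec / 2));
    }
    return delays;
}
```

With three devices the same helper returns -160, 0 and +160 μs. Each delay must still fit inside one frame period, so large chains may need `subordinate_delay_off_master_usec` as well; that trade-off is not modelled here.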

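In summary, making good use of several Azure Kinect DK devices can improve application performance, but steps are needed to reduce inter-device interference so that the final results stay accurate and stable.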

The timing constants and the default device configuration used by the program (excerpted from the source code in Section 4):

```cpp
// This is the maximum difference between when we expected an image's timestamp to be and when it actually occurred.
constexpr std::chrono::microseconds MAX_ALLOWABLE_TIME_OFFSET_ERROR_FOR_IMAGE_TIMESTAMP(100);

// Allowing at least 160 microseconds between depth cameras should ensure they do not interfere with one another.
constexpr uint32_t MIN_TIME_BETWEEN_DEPTH_CAMERA_PICTURES_USEC = 160;

static k4a_device_configuration_t get_default_config()
{
    k4a_device_configuration_t camera_config = K4A_DEVICE_CONFIG_INIT_DISABLE_ALL;
    camera_config.color_format = K4A_IMAGE_FORMAT_COLOR_BGRA32;
    camera_config.color_resolution = K4A_COLOR_RESOLUTION_720P;
    camera_config.depth_mode = K4A_DEPTH_MODE_WFOV_UNBINNED; // depth is used by the green-screen loop; WFOV unbinned runs at up to 15 FPS
    camera_config.camera_fps = K4A_FRAMES_PER_SECOND_15;     // Don't use all USB bandwidth
    camera_config.subordinate_delay_off_master_usec = 0;     // Must be zero for master
    camera_config.synchronized_images_only = true;
    return camera_config;
}
```

3. Result verification

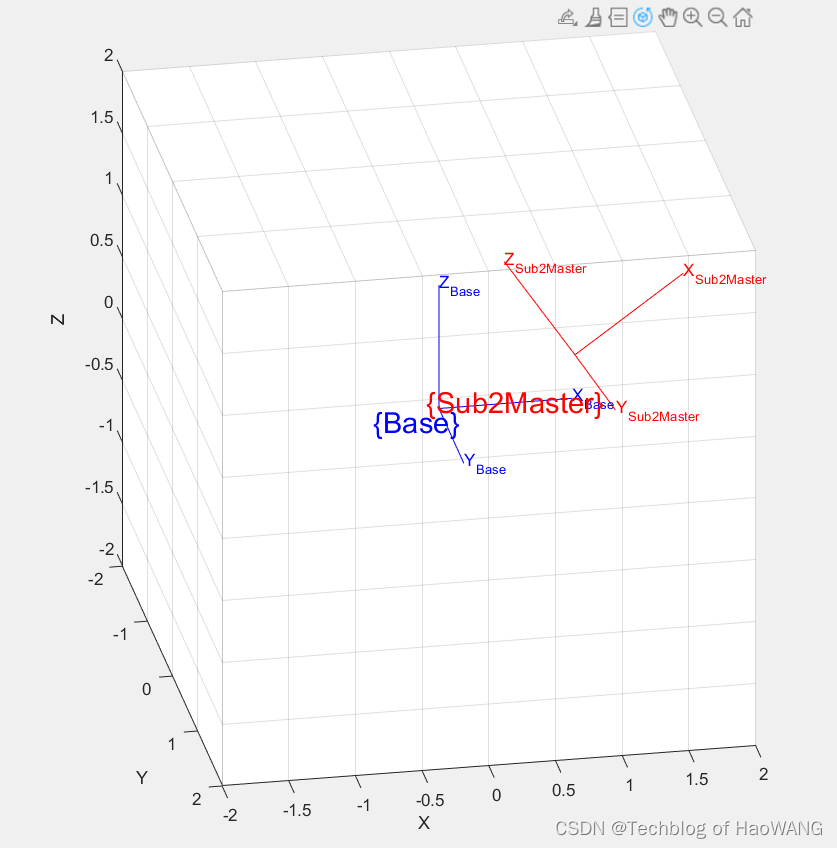

3.1 Extrinsic matrices

frame_subColor2masterColor.csv

```text
[0.9874385187272248, 0.0008611300776175604, -0.1580013613510141, 248.527168778089]
[5.106536020409874e-05, 0.9999833567892393, 0.005769188578453642, 2.794708102862467]
[0.158003699722865, -0.005704787420596416, 0.9874220406059269, 28.48043174838861]
[0, 0, 0, 1]
```

frame_subDepth2masterDepth.csv

```text
[0.987279320316832, -0.02272030395081767, -0.1573637499465372, 248.7097525456365]
[0.02357370483899699, 0.9997158091233859, 0.003558538739080547, 6.716415559704739]
[0.1572381673075288, -0.007222919973178282, 0.9875342977449926, 32.99224444293992]
[0, 0, 0, 1]
```
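A quick sanity check on an exported extrinsic matrix is to confirm that its 3×3 block is a proper rotation and to read off the rotation angle, θ = arccos((tr R - 1) / 2), and the baseline ‖t‖. The sketch below does this with the standard library only; `Mat4` and the helper names are illustrative. For `frame_subColor2masterColor.csv` it gives a rotation of roughly 9.1° and a baseline of roughly 250, which is in millimetres if the chessboard square length was entered in millimetres.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat4 = std::array<std::array<double, 4>, 4>;

// Rotation angle (degrees) of the upper-left 3x3 block: acos((trace(R) - 1) / 2).
double rotation_angle_deg(const Mat4& H) {
    double c = (H[0][0] + H[1][1] + H[2][2] - 1.0) / 2.0;
    c = std::fmax(-1.0, std::fmin(1.0, c));  // guard against rounding just outside [-1, 1]
    return std::acos(c) * 180.0 / 3.14159265358979323846;
}

// Length of the translation column: distance between the two camera origins (unit of t).
double baseline(const Mat4& H) {
    return std::sqrt(H[0][3] * H[0][3] + H[1][3] * H[1][3] + H[2][3] * H[2][3]);
}

// Largest deviation of R * R^T from the identity; close to 0 for a valid rotation matrix.
double orthonormality_error(const Mat4& H) {
    double worst = 0.0;
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j) {
            double dot = 0.0;
            for (int k = 0; k < 3; ++k) dot += H[i][k] * H[j][k];
            worst = std::fmax(worst, std::fabs(dot - (i == j ? 1.0 : 0.0)));
        }
    }
    return worst;
}
```

Most of that rotation sits in the R(0,2)/R(2,0) terms, i.e. about the y axis, which is consistent with two cameras placed side by side and turned slightly toward each other.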

3.2 Offset (mm)

$$
e=\begin{bmatrix}
-3.5743 & -0.2096 & 1.8896 & 3.5092 & 3.0848 & 2.7696 \\
6.4716 & 7.2484 & 7.9184 & 8.6153 & 8.7336 & 8.1928 \\
3.1353 & 3.6957 & 3.7271 & 5.2554 & 5.2557 & 3.1308 \\
0 & 0 & 0 & 0 & 0 & 0
\end{bmatrix}
$$
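Assuming each column of e is the x/y/z offset of one check point (the zero fourth row being the homogeneous component of a difference vector), the per-point error is the Euclidean norm of each column. The sketch below computes those norms and their RMS; the helper names are illustrative. For the values above, the per-point errors fall between about 8 and 11 mm, with an RMS of about 9.3 mm.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Euclidean norm of each column of a 4xN homogeneous offset matrix; only the x, y, z rows are used.
std::vector<double> column_norms(const std::vector<std::vector<double>>& e) {
    std::vector<double> norms(e.at(0).size(), 0.0);
    for (std::size_t c = 0; c < norms.size(); ++c) {
        double sum_sq = 0.0;
        for (std::size_t r = 0; r < 3; ++r) sum_sq += e[r][c] * e[r][c];
        norms[c] = std::sqrt(sum_sq);
    }
    return norms;
}

// Root-mean-square of a list of per-point errors (0 for an empty list).
double rms(const std::vector<double>& values) {
    if (values.empty()) return 0.0;
    double sum_sq = 0.0;
    for (double v : values) sum_sq += v * v;
    return std::sqrt(sum_sq / static_cast<double>(values.size()));
}
```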

4. Source code

CMakeLists.txt

```cmake
# Copyright (c) Microsoft Corporation. All rights reserved.
# Licensed under the MIT License.

cmake_minimum_required(VERSION 3.5)
project(kinextdk_example LANGUAGES C CXX)

############################
#### AZURE KINECT SDK ######
############################

message("Finding K4A SDK binaries")

# Disable cached locations for K4A SDK binaries.
# Do this to force the search logic to happen correctly.
# If we don't disable these cached directories, we
# won't be able to tell the difference between the ext/sdk location
# and the system installed version on linux. Since we have to treat these
# differently (one needs install, one doesn't) we must disable the cache
# so that find_package(k4a) will fail in all cases if not installed via the .deb.
unset(k4a_DIR CACHE)

# Force running the Findk4a.cmake module
find_package(k4a REQUIRED)
find_package(OpenCV REQUIRED)
# transformation.h includes <Eigen/Dense>, so Eigen has to be found and linked as well
find_package(Eigen3 REQUIRED)

set(K4A_LIBS k4a::k4a;k4a::k4arecord)

# This reads the K4A_LIBS and K4A_INSTALL_REQUIRED variables and decides how to install
# the various shared objects / DLLs

##################################
###### END AZURE KINECT SDK ######
##################################

include_directories(${OpenCV_INCLUDE_DIRS})

add_executable(green_screen main.cpp)

target_link_libraries(green_screen
    PRIVATE
    k4a::k4a
    ${OpenCV_LIBS}
    Eigen3::Eigen)
```

MultiDeviceCapturer.h

```cpp
// Copyright (c) Microsoft Corporation. All rights reserved.
// Licensed under the MIT License.

#pragma once

#include <chrono>
#include <cstdint>
#include <cstdlib>  // std::exit
#include <iomanip>  // std::setw
#include <iostream> // std::cout, std::cerr
#include <utility>  // std::move
#include <vector>

#include <k4a/k4a.hpp>

// This is the maximum difference between when we expected an image's timestamp to be and when it actually occurred.
constexpr std::chrono::microseconds MAX_ALLOWABLE_TIME_OFFSET_ERROR_FOR_IMAGE_TIMESTAMP(100);

// Give up looking for synchronized captures after this many milliseconds.
constexpr int64_t WAIT_FOR_SYNCHRONIZED_CAPTURE_TIMEOUT = 60000;

static void log_lagging_time(const char *lagger, k4a::capture &master, k4a::capture &sub)
{
    std::cout << std::setw(6) << lagger << " lagging: mc:" << std::setw(6)
              << master.get_color_image().get_device_timestamp().count() << "us sc:" << std::setw(6)
              << sub.get_color_image().get_device_timestamp().count() << "us\n";
}

static void log_synced_image_time(k4a::capture &master, k4a::capture &sub)
{
    std::cout << "Sync'd capture: mc:" << std::setw(6) << master.get_color_image().get_device_timestamp().count()
              << "us sc:" << std::setw(6) << sub.get_color_image().get_device_timestamp().count() << "us\n";
}

class MultiDeviceCapturer
{
public:
    // Set up all the devices. Note that the index order isn't necessarily preserved, because we might swap with master
    MultiDeviceCapturer(const std::vector<uint32_t> &device_indices, int32_t color_exposure_usec, int32_t powerline_freq)
    {
        bool master_found = false;
        if (device_indices.empty())
        {
            std::cerr << "Capturer must be passed at least one camera!\n";
            std::exit(1);
        }
        for (uint32_t i : device_indices)
        {
            k4a::device next_device = k4a::device::open(i); // construct a device using this index
            // If you want to synchronize cameras, you need to manually set both their exposures
            next_device.set_color_control(K4A_COLOR_CONTROL_EXPOSURE_TIME_ABSOLUTE,
                                          K4A_COLOR_CONTROL_MODE_MANUAL,
                                          color_exposure_usec);
            // This setting compensates for the flicker of lights due to the frequency of AC power in your region.
            // Per k4a_color_control_command_t, 1 selects 50 Hz and 2 selects 60 Hz; use 1 where mains power is
            // 50 Hz (e.g. mainland China, Europe).
            next_device.set_color_control(K4A_COLOR_CONTROL_POWERLINE_FREQUENCY,
                                          K4A_COLOR_CONTROL_MODE_MANUAL,
                                          powerline_freq);
            // We treat the first device found with a sync out cable attached as the master. If it's not supposed to
            // be, unplug the cable from it. Also, if there's only one device, just use it
            if ((next_device.is_sync_out_connected() && !master_found) || device_indices.size() == 1)
            {
                master_device = std::move(next_device);
                master_found = true;
            }
            else if (!next_device.is_sync_in_connected() && !next_device.is_sync_out_connected())
            {
                std::cerr << "Each device must have sync in or sync out connected!\n";
                std::exit(1);
            }
            else if (!next_device.is_sync_in_connected())
            {
                std::cerr << "Non-master camera found that doesn't have the sync in port connected!\n";
                std::exit(1);
            }
            else
            {
                subordinate_devices.emplace_back(std::move(next_device));
            }
        }
        if (!master_found)
        {
            std::cerr << "No device with sync out connected found!\n";
            std::exit(1);
        }
    }

    // master_config is used for the master device, sub_config for every subordinate
    void start_devices(const k4a_device_configuration_t &master_config, const k4a_device_configuration_t &sub_config)
    {
        // Start by starting all of the subordinate devices. They must be started before the master!
        for (k4a::device &d : subordinate_devices)
        {
            d.start_cameras(&sub_config);
        }
        // Lastly, start the master device
        master_device.start_cameras(&master_config);
    }

    // Blocks until we have synchronized captures stored in the output. First is master, rest are subordinates
    std::vector<k4a::capture> get_synchronized_captures(const k4a_device_configuration_t &sub_config,
                                                        bool compare_sub_depth_instead_of_color = false)
    {
        // Dealing with the synchronized cameras is complex. The Azure Kinect DK:
        //   (a) does not guarantee exactly equal timestamps between depth and color or between cameras (delays can
        //       be configured but timestamps will only be approximately the same)
        //   (b) does not guarantee that, if the two most recent images were synchronized, calling get_capture
        //       just once on each camera will still yield synchronized images.
        // There are several reasons for all of this. Internally, devices keep a queue of a few of the captured images
        // and serve those images as requested by get_capture(). However, images can also be dropped at any moment, and
        // one device may have more images ready than another device at a given moment, et cetera.
        //
        // Also, the process of synchronizing is complex. The cameras are not guaranteed to exactly match in all of
        // their timestamps when synchronized (though they should be very close). All delays are relative to the master
        // camera's color camera. To deal with these complexities, we employ a fairly straightforward algorithm. Start
        // by reading in two captures, then if the camera images were not taken at roughly the same time read a new one
        // from the device that had the older capture until the timestamps roughly match.

        // The captures used in the loop are outside of it so that they can persist across loop iterations. This is
        // necessary because each time this loop runs we'll only update the older capture.
        // The captures are stored in a vector where the first element of the vector is the master capture and
        // subsequent elements are subordinate captures
        std::vector<k4a::capture> captures(subordinate_devices.size() + 1); // add 1 for the master
        size_t current_index = 0;
        master_device.get_capture(&captures[current_index], std::chrono::milliseconds{ K4A_WAIT_INFINITE });
        ++current_index;
        for (k4a::device &d : subordinate_devices)
        {
            d.get_capture(&captures[current_index], std::chrono::milliseconds{ K4A_WAIT_INFINITE });
            ++current_index;
        }

        // If there are no subordinate devices, just return captures which only has the master image
        if (subordinate_devices.empty())
        {
            return captures;
        }

        bool have_synced_images = false;
        std::chrono::system_clock::time_point start = std::chrono::system_clock::now();
        while (!have_synced_images)
        {
            // Timeout if this is taking too long
            int64_t duration_ms =
                std::chrono::duration_cast<std::chrono::milliseconds>(std::chrono::system_clock::now() - start).count();
            if (duration_ms > WAIT_FOR_SYNCHRONIZED_CAPTURE_TIMEOUT)
            {
                std::cerr << "ERROR: Timed out waiting for synchronized captures\n";
                std::exit(1);
            }

            k4a::image master_color_image = captures[0].get_color_image();
            std::chrono::microseconds master_color_image_time = master_color_image.get_device_timestamp();

            for (size_t i = 0; i < subordinate_devices.size(); ++i)
            {
                // offset of 1 because the master capture is at the front
                k4a::image sub_image = compare_sub_depth_instead_of_color ? captures[i + 1].get_depth_image() :
                                                                            captures[i + 1].get_color_image();

                if (master_color_image && sub_image)
                {
                    std::chrono::microseconds sub_image_time = sub_image.get_device_timestamp();
                    // The subordinate's color image timestamp, ideally, is the master's color image timestamp plus
                    // the delay we configured between the master device color camera and the subordinate device
                    // color camera. A subordinate depth image is additionally offset from its own color image by
                    // depth_delay_off_color_usec, so that delay is only added when depth images are compared.
                    std::chrono::microseconds expected_sub_image_time =
                        master_color_image_time +
                        std::chrono::microseconds{ sub_config.subordinate_delay_off_master_usec };
                    if (compare_sub_depth_instead_of_color)
                    {
                        expected_sub_image_time += std::chrono::microseconds{ sub_config.depth_delay_off_color_usec };
                    }
                    std::chrono::microseconds sub_image_time_error = sub_image_time - expected_sub_image_time;

                    // The time error's absolute value must be within the permissible range. So, for example, if
                    // MAX_ALLOWABLE_TIME_OFFSET_ERROR_FOR_IMAGE_TIMESTAMP is 2, offsets of -2, -1, 0, 1, and 2 are
                    // permitted
                    if (sub_image_time_error < -MAX_ALLOWABLE_TIME_OFFSET_ERROR_FOR_IMAGE_TIMESTAMP)
                    {
                        // Example, where MAX_ALLOWABLE_TIME_OFFSET_ERROR_FOR_IMAGE_TIMESTAMP is 1
                        // time                    t=1  t=2  t=3
                        // actual timestamp        x    .    .
                        // expected timestamp      .    .    x
                        // error: 1 - 3 = -2, which is less than the worst-case-allowable offset of -1
                        // the subordinate camera image timestamp was earlier than it is allowed to be. This means the
                        // subordinate is lagging and we need to update the subordinate to get the subordinate caught up
                        log_lagging_time("sub", captures[0], captures[i + 1]);
                        subordinate_devices[i].get_capture(&captures[i + 1],
                                                           std::chrono::milliseconds{ K4A_WAIT_INFINITE });
                        break;
                    }
                    else if (sub_image_time_error > MAX_ALLOWABLE_TIME_OFFSET_ERROR_FOR_IMAGE_TIMESTAMP)
                    {
                        // Example, where MAX_ALLOWABLE_TIME_OFFSET_ERROR_FOR_IMAGE_TIMESTAMP is 1
                        // time                    t=1  t=2  t=3
                        // actual timestamp        .    .    x
                        // expected timestamp      x    .    .
                        // error: 3 - 1 = 2, which is more than the worst-case-allowable offset of 1
                        // the subordinate camera image timestamp was later than it is allowed to be. This means the
                        // subordinate is ahead and we need to update the master to get the master caught up
                        log_lagging_time("master", captures[0], captures[i + 1]);
                        master_device.get_capture(&captures[0], std::chrono::milliseconds{ K4A_WAIT_INFINITE });
                        break;
                    }
                    else
                    {
                        // These captures are sufficiently synchronized. If we've gotten to the end, then all are
                        // synchronized.
                        if (i == subordinate_devices.size() - 1)
                        {
                            log_synced_image_time(captures[0], captures[i + 1]);
                            have_synced_images = true; // now we'll finish the for loop and then exit the while loop
                        }
                    }
                }
                else if (!master_color_image)
                {
                    std::cout << "Master image was bad!\n";
                    master_device.get_capture(&captures[0], std::chrono::milliseconds{ K4A_WAIT_INFINITE });
                    break;
                }
                else if (!sub_image)
                {
                    std::cout << "Subordinate image was bad!" << std::endl;
                    subordinate_devices[i].get_capture(&captures[i + 1],
                                                       std::chrono::milliseconds{ K4A_WAIT_INFINITE });
                    break;
                }
            }
        }
        // if we've made it to here, it means that we have synchronized captures.
        return captures;
    }

    const k4a::device &get_master_device() const
    {
        return master_device;
    }

    const k4a::device &get_subordinate_device_by_index(size_t i) const
    {
        // The master is stored separately, so only indices below subordinate_devices.size() are valid
        if (i >= subordinate_devices.size())
        {
            std::cerr << "Subordinate index too large!\n";
            std::exit(1);
        }
        return subordinate_devices[i];
    }

private:
    // Once the constructor finishes, master_device holds the master and subordinate_devices holds all the others
    k4a::device master_device;
    std::vector<k4a::device> subordinate_devices;
};
```
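The matching loop in `get_synchronized_captures()` above can be exercised without hardware by replaying recorded timestamps. The sketch below is a simplified two-stream model of that loop, under the assumptions that timestamps are device timestamps in microseconds and that the expected offset is the configured subordinate delay (plus the depth delay when depth images are compared). `Match` and `find_synced_pair` are hypothetical names; unlike the class, the sketch stops when a stream runs out instead of blocking on `get_capture()`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Indices of the first master/subordinate frame pair within tolerance, or -1/-1 if none was found.
struct Match {
    long master_idx;
    long sub_idx;
};

// Advance whichever stream is behind until |sub - (master + expected_offset)| <= tolerance.
Match find_synced_pair(const std::vector<int64_t>& master_ts, const std::vector<int64_t>& sub_ts,
                       int64_t expected_offset_us, int64_t tolerance_us) {
    std::size_t m = 0;
    std::size_t s = 0;
    while (m < master_ts.size() && s < sub_ts.size()) {
        const int64_t error = sub_ts[s] - (master_ts[m] + expected_offset_us);
        if (error < -tolerance_us) {
            ++s;  // subordinate frame is too early: the subordinate is lagging, read its next frame
        } else if (error > tolerance_us) {
            ++m;  // subordinate frame is too late: the master is lagging, read its next frame
        } else {
            return {static_cast<long>(m), static_cast<long>(s)};  // within tolerance: synchronized
        }
    }
    return {-1, -1};  // ran out of frames before the streams lined up
}
```

At 15 FPS consecutive frames are about 66 666 μs apart, so with the 100 μs tolerance a lagging stream normally catches up after skipping a single frame.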

transformation.h

```cpp
/*
 * @Description: helpers for 4x4 rigid transforms (file export, OpenCV -> Eigen conversion, inversion, composition)
 * @Author: WANG Hao
 * @Date: 2024-04-25 10:08:26
 * @LastEditTime: 2024-04-25 11:52:54
 * @LastEditors: WANG Hao
 */
// Copyright (c) Microsoft Corporation. All rights reserved.
// Licensed under the MIT License.

#pragma once

#include <opencv2/core.hpp>
#include <Eigen/Dense>
#include <fstream>
#include <iostream>
#include <string>

/**
 * @brief Write a 4x4 homogeneous transform, one row per line in OpenCV's "[a, b, c, d]" notation,
 *        followed by the calibration error on a fifth line.
 * @tparam DataType unused; kept so that existing calls such as writeToCSVfile<double>(...) still compile
 * @param name output file name
 * @param cvMat4 homogeneous transform to write
 * @param cal_error stereo calibration error appended after the matrix
 */
template <typename DataType>
void writeToCSVfile(const std::string &name, const cv::Matx44d &cvMat4, const double &cal_error)
{
    std::ofstream file(name.c_str());
    if (!file)
    {
        std::cerr << "Could not open " << name << " for writing\n";
        return;
    }
    file << cvMat4.row(0) << "\n"
         << cvMat4.row(1) << "\n"
         << cvMat4.row(2) << "\n"
         << cvMat4.row(3) << "\n"
         << cal_error << "\n";
}

/**
 * @brief Convert an OpenCV 4x4 matrix to Eigen.
 * @param cvMat4 input matrix
 * @return Eigen::Matrix4d with the same entries
 */
inline Eigen::Matrix4d toMatrix4d(const cv::Matx44d &cvMat4)
{
    Eigen::Matrix4d M;
    M << cvMat4(0, 0), cvMat4(0, 1), cvMat4(0, 2), cvMat4(0, 3),
         cvMat4(1, 0), cvMat4(1, 1), cvMat4(1, 2), cvMat4(1, 3),
         cvMat4(2, 0), cvMat4(2, 1), cvMat4(2, 2), cvMat4(2, 3),
         cvMat4(3, 0), cvMat4(3, 1), cvMat4(3, 2), cvMat4(3, 3);
    return M;
}

/**
 * @brief Invert a rigid transform [R t; 0 1] in closed form as [R^T -R^T*t; 0 1].
 * @param M homogeneous rigid transform
 * @return cv::Matx44d inverse transform
 */
inline cv::Matx44d invMat(const cv::Matx44d &M)
{
    cv::Matx33d R = M.get_minor<3, 3>(0, 0);
    R = R.t();
    cv::Vec3d t(M(0, 3), M(1, 3), M(2, 3));
    t = -R * t;
    cv::Matx44d out(R(0, 0), R(0, 1), R(0, 2), t(0),
                    R(1, 0), R(1, 1), R(1, 2), t(1),
                    R(2, 0), R(2, 1), R(2, 2), t(2),
                    0.0, 0.0, 0.0, 1.0);
    return out;
}

struct Transformation
{
    cv::Matx33d R;
    cv::Vec3d t;

    // Construct an identity transformation.
    Transformation() : R(cv::Matx33d::eye()), t(0., 0., 0.) {}

    // Construct from a homogeneous matrix H
    Transformation(const cv::Matx44d &H) : R(H.get_minor<3, 3>(0, 0)), t(H(0, 3), H(1, 3), H(2, 3)) {}

    // Create homogeneous matrix from this transformation
    cv::Matx44d to_homogeneous() const
    {
        return cv::Matx44d(R(0, 0), R(0, 1), R(0, 2), t(0),  // row 1
                           R(1, 0), R(1, 1), R(1, 2), t(1),  // row 2
                           R(2, 0), R(2, 1), R(2, 2), t(2),  // row 3
                           0, 0, 0, 1);                      // row 4
    }

    // Construct a transformation equivalent to this transformation followed by the second transformation
    // (returns the matrix product H_this * H_second)
    Transformation compose_with(const Transformation &second_transformation) const
    {
        cv::Matx44d H_1 = to_homogeneous();                      // this transform
        cv::Matx44d H_2 = second_transformation.to_homogeneous(); // the transform to be composed with this one
        cv::Matx44d H_3 = H_1 * H_2;                             // the combined transform
        return Transformation(H_3);
    }

    // Same as above, for a second transformation given as a homogeneous matrix
    Transformation compose_with(const cv::Matx44d &second_transformation) const
    {
        cv::Matx44d H_1 = to_homogeneous();
        cv::Matx44d H_2 = second_transformation;
        cv::Matx44d H_3 = H_1 * H_2;
        return Transformation(H_3);
    }
};
```
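`invMat` relies on the closed-form inverse of a rigid transform, $\begin{bmatrix}R & t\\ 0 & 1\end{bmatrix}^{-1} = \begin{bmatrix}R^\top & -R^\top t\\ 0 & 1\end{bmatrix}$, and `compose_with` returns the matrix product $H_1 H_2$. The standard-library sketch below checks both facts numerically on the depth extrinsics from Section 3.1: a matrix times its rigid inverse gives the identity, and, for column vectors $p' = Rp + t$, `multiply(A, B)` maps a point through B first and then through A. `Mat4`, `multiply`, `rigid_inverse` and `apply` are illustrative names, not part of OpenCV or the Azure Kinect SDK.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat4 = std::array<std::array<double, 4>, 4>;

// Plain 4x4 matrix product C = A * B.
Mat4 multiply(const Mat4& A, const Mat4& B) {
    Mat4 C{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k) C[i][j] += A[i][k] * B[k][j];
    return C;
}

// Closed-form inverse of a rigid transform [R t; 0 1] -> [R^T, -R^T t; 0 1] (same idea as invMat).
Mat4 rigid_inverse(const Mat4& H) {
    Mat4 inv{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) inv[i][j] = H[j][i];  // R^T
    for (int i = 0; i < 3; ++i)
        for (int k = 0; k < 3; ++k) inv[i][3] -= inv[i][k] * H[k][3];  // -R^T t
    inv[3][3] = 1.0;
    return inv;
}

// Apply H to a 3D point using the column-vector convention p' = R p + t.
std::array<double, 3> apply(const Mat4& H, const std::array<double, 3>& p) {
    std::array<double, 3> out{};
    for (int i = 0; i < 3; ++i) out[i] = H[i][0] * p[0] + H[i][1] * p[1] + H[i][2] * p[2] + H[i][3];
    return out;
}
```

Because the product is not commutative for rotations, the order of the operands in a chain such as depth → color → other camera matters; writing each transform as "target ← source" and multiplying them right to left keeps that order straight.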

main.cpp

// Copyright (c) Microsoft Corporation. All rights reserved.

// Licensed under the MIT License.

#include <algorithm>

#include <iostream>

#include <vector>

#include <string>

#include <chrono>

#include <limits>

#include <k4a/k4a.hpp>

#include <opencv2/calib3d.hpp>

#include <opencv2/core.hpp>

#include <opencv2/imgproc.hpp>

#include <opencv2/highgui.hpp>

using std::cerr;

using std::cout;

using std::endl;

using std::vector;

#include "transformation.h"

#include "MultiDeviceCapturer.h"

// Allowing at least 160 microseconds between depth cameras should ensure they do not interfere with one another.

constexpr uint32_t MIN_TIME_BETWEEN_DEPTH_CAMERA_PICTURES_USEC = 160;

// ideally, we could generalize this to many OpenCV types

static cv::Mat color_to_opencv(const k4a::image &im);

static cv::Mat depth_to_opencv(const k4a::image &im);

static cv::Matx33f calibration_to_color_camera_matrix(const k4a::calibration &cal);

static Transformation get_depth_to_color_transformation_from_calibration(const k4a::calibration &cal);

static k4a::calibration construct_device_to_device_calibration(const k4a::calibration &main_cal,

const k4a::calibration &secondary_cal,

const Transformation &secondary_to_main);

static vector<float> calibration_to_color_camera_dist_coeffs(const k4a::calibration &cal);

static bool find_chessboard_corners_helper(const cv::Mat &main_color_image,

const cv::Mat &secondary_color_image,

const cv::Size &chessboard_pattern,

vector<cv::Point2f> &main_chessboard_corners,

vector<cv::Point2f> &secondary_chessboard_corners);

static Transformation stereo_calibration(const k4a::calibration &main_calib,

const k4a::calibration &secondary_calib,

const vector<vector<cv::Point2f>> &main_chessboard_corners_list,

const vector<vector<cv::Point2f>> &secondary_chessboard_corners_list,

const cv::Size &image_size,

const cv::Size &chessboard_pattern,

float chessboard_square_length,

double & calibration_error);

static k4a_device_configuration_t get_master_config();

static k4a_device_configuration_t get_subordinate_config();

static Transformation calibrate_devices(MultiDeviceCapturer &capturer,

const k4a_device_configuration_t &main_config,

const k4a_device_configuration_t &secondary_config,

const cv::Size &chessboard_pattern,

float chessboard_square_length,

double calibration_timeout);

static k4a::image create_depth_image_like(const k4a::image &im);

static double cal_error = 0.0; // stereo calibration error

int main(int argc, char **argv)

{

float chessboard_square_length = 0.; // must be included in the input params

int32_t color_exposure_usec = 8000; // somewhat reasonable default exposure time

int32_t powerline_freq = 2; // default to a 60 Hz powerline

cv::Size chessboard_pattern(0, 0); // height, width. Both need to be set.

uint16_t depth_threshold = 1000; // default to 1 meter

size_t num_devices = 0;

double calibration_timeout = 60.0; // default to timing out after 60s of trying to get calibrated

double greenscreen_duration = std::numeric_limits<double>::max(); // run forever

vector<uint32_t> device_indices{ 0 }; // Set up a MultiDeviceCapturer to handle getting many synchronous captures

// Note that the order of indices in device_indices is not necessarily

// preserved because MultiDeviceCapturer tries to find the master device based

// on which one has sync out plugged in. Start with just { 0 }, and add

// another if needed

if (argc < 5)

{

cout << "Usage: green_screen <num-cameras> <board-height> <board-width> <board-square-length> "

"[depth-threshold-mm (default 1000)] [color-exposure-time-usec (default 8000)] "

"[powerline-frequency-mode (default 2 for 60 Hz)] [calibration-timeout-sec (default 60)]"

"[greenscreen-duration-sec (default infinity- run forever)]"

<< endl;

cerr << "Not enough arguments!\n";

exit(1);

}

else

{

num_devices = static_cast<size_t>(atoi(argv[1]));

if (num_devices > k4a::device::get_installed_count())

{

cerr << "Not enough cameras plugged in!\n";

exit(1);

}

chessboard_pattern.height = atoi(argv[2]);

chessboard_pattern.width = atoi(argv[3]);

chessboard_square_length = static_cast<float>(atof(argv[4]));

if (argc > 5)

{

depth_threshold = static_cast<uint16_t>(atoi(argv[5]));

if (argc > 6)

{

color_exposure_usec = atoi(argv[6]);

if (argc > 7)

{

powerline_freq = atoi(argv[7]);

if (argc > 8)

{

calibration_timeout = atof(argv[8]);

if (argc > 9)

{

greenscreen_duration = atof(argv[9]);

}

}

}

}

}

}

if (num_devices != 2 && num_devices != 1)

{

cerr << "Invalid choice for number of devices!\n";

exit(1);

}

else if (num_devices == 2)

{

device_indices.emplace_back(1); // now device indices are { 0, 1 }

}

if (chessboard_pattern.height == 0)

{

cerr << "Chessboard height is not properly set!\n";

exit(1);

}

if (chessboard_pattern.width == 0)

{

cerr << "Chessboard height is not properly set!\n";

exit(1);

}

if (chessboard_square_length == 0.)

{

cerr << "Chessboard square size is not properly set!\n";

exit(1);

}

cout << "Chessboard height: " << chessboard_pattern.height << ". Chessboard width: " << chessboard_pattern.width

<< ". Chessboard square length: " << chessboard_square_length << endl;

cout << "Depth threshold: : " << depth_threshold << ". Color exposure time: " << color_exposure_usec

<< ". Powerline frequency mode: " << powerline_freq << endl;

MultiDeviceCapturer capturer(device_indices, color_exposure_usec, powerline_freq);

// Create configurations for devices

k4a_device_configuration_t main_config = get_master_config();

if (num_devices == 1) // no need to have a master cable if it's standalone

{

main_config.wired_sync_mode = K4A_WIRED_SYNC_MODE_STANDALONE;

}

k4a_device_configuration_t secondary_config = get_subordinate_config();

// Construct all the things that we'll need whether or not we are running with 1 or 2 cameras

k4a::calibration main_calibration = capturer.get_master_device().get_calibration(main_config.depth_mode,

main_config.color_resolution);

// Set up a transformation. DO THIS OUTSIDE OF YOUR MAIN LOOP! Constructing transformations involves time-intensive

// hardware setup and should not change once you have a rigid setup, so only call it once or it will run very

// slowly.

k4a::transformation main_depth_to_main_color(main_calibration);

capturer.start_devices(main_config, secondary_config);

// get an image to be the background

vector<k4a::capture> background_captures = capturer.get_synchronized_captures(secondary_config);

cv::Mat background_image = color_to_opencv(background_captures[0].get_color_image());

cv::Mat output_image = background_image.clone(); // allocated outside the loop to avoid re-creating every time

if (num_devices == 1)

{

std::chrono::time_point<std::chrono::system_clock> start_time = std::chrono::system_clock::now();

while (std::chrono::duration<double>(std::chrono::system_clock::now() - start_time).count() <

greenscreen_duration)

{

vector<k4a::capture> captures;

// secondary_config isn't actually used here because there's no secondary device but the function needs it

captures = capturer.get_synchronized_captures(secondary_config, true);

k4a::image main_color_image = captures[0].get_color_image();

k4a::image main_depth_image = captures[0].get_depth_image();

// let's green screen out things that are far away.

// first: let's get the main depth image into the color camera space

k4a::image main_depth_in_main_color = create_depth_image_like(main_color_image);

main_depth_to_main_color.depth_image_to_color_camera(main_depth_image, &main_depth_in_main_color);

cv::Mat cv_main_depth_in_main_color = depth_to_opencv(main_depth_in_main_color);

cv::Mat cv_main_color_image = color_to_opencv(main_color_image);

// single-camera case

cv::Mat within_threshold_range = (cv_main_depth_in_main_color != 0) &

(cv_main_depth_in_main_color < depth_threshold);

// show the close details

cv_main_color_image.copyTo(output_image, within_threshold_range);

// hide the rest with the background image

background_image.copyTo(output_image, ~within_threshold_range);

cv::imshow("Green Screen", output_image);

cv::waitKey(1);

}

}

else if (num_devices == 2)

{

// This wraps all the device-to-device details

Transformation tr_secondary_color_to_main_color = calibrate_devices(capturer,

main_config,

secondary_config,

chessboard_pattern,

chessboard_square_length,

calibration_timeout);

k4a::calibration secondary_calibration =

capturer.get_subordinate_device_by_index(0).get_calibration(secondary_config.depth_mode,

secondary_config.color_resolution);

// Get the transformation from secondary depth to secondary color using its calibration object

Transformation tr_secondary_depth_to_secondary_color = get_depth_to_color_transformation_from_calibration(

secondary_calibration);

// We now have the secondary depth to secondary color transform. We also have the transformation from the

// secondary color perspective to the main color perspective from the calibration earlier. Now let's compose the

// depth secondary -> color secondary, color secondary -> color main into depth secondary -> color main

Transformation tr_secondary_depth_to_main_color = tr_secondary_depth_to_secondary_color.compose_with(

tr_secondary_color_to_main_color);

Transformation tr_master_depth_to_master_color = get_depth_to_color_transformation_from_calibration(

main_calibration);

// get the main_depth_to_main_color inverse() matrix in Eigen format

cv::Matx44d inv_main_depth_to_main_color = invMat(tr_master_depth_to_master_color.to_homogeneous());

// the secondary depth to secondary color transform

Transformation tr_secondary_depth_to_main_depth = tr_secondary_depth_to_main_color.compose_with(

inv_main_depth_to_main_color);

// Construct a new calibration object to transform from the secondary depth camera to the main color camera

k4a::calibration secondary_depth_to_main_color_cal =

construct_device_to_device_calibration(main_calibration,

secondary_calibration,

tr_secondary_depth_to_main_color);

k4a::transformation secondary_depth_to_main_color(secondary_depth_to_main_color_cal);

/***Write data**/

cv::Matx44d frame_matrix = tr_secondary_color_to_main_color.to_homogeneous();

std::cout << "frame sub Color to master Color" << std::endl;

writeToCSVfile<double>("frame_subColor2masterColor.csv", frame_matrix, cal_error);

std::cout << "save matrix into csv file OK.\n";

std::cout << "frame sub Depth to master Color" << std::endl;

frame_matrix = tr_secondary_depth_to_main_depth.to_homogeneous();

writeToCSVfile<double>("frame_subDepth2masterDepth.csv", frame_matrix,cal_error);

std::cout << "save matrix into csv file OK.\n";

// std::cout << "frame main color to depth " << std::endl;

// frame_matrix = tr_secondary_depth_to_main_color;

// writeToCSVfile<double>("frame_sub_marker.csv", frame_matrix);

// std::cout << "save matrix into csv file OK.\n";

/**End of Writing data**/

std::chrono::time_point<std::chrono::system_clock> start_time = std::chrono::system_clock::now();

while (std::chrono::duration<double>(std::chrono::system_clock::now() - start_time).count() <

greenscreen_duration)

{

vector<k4a::capture> captures;

captures = capturer.get_synchronized_captures(secondary_config, true);

k4a::image main_color_image = captures[0].get_color_image();

k4a::image main_depth_image = captures[0].get_depth_image();

// let's green screen out things that are far away.

// first: let's get the main depth image into the color camera space

k4a::image main_depth_in_main_color = create_depth_image_like(main_color_image);

main_depth_to_main_color.depth_image_to_color_camera(main_depth_image, &main_depth_in_main_color);

cv::Mat cv_main_depth_in_main_color = depth_to_opencv(main_depth_in_main_color);

cv::Mat cv_main_color_image = color_to_opencv(main_color_image);

k4a::image secondary_depth_image = captures[1].get_depth_image();

// Get the depth image in the main color perspective

k4a::image secondary_depth_in_main_color = create_depth_image_like(main_color_image);

secondary_depth_to_main_color.depth_image_to_color_camera(secondary_depth_image,

&secondary_depth_in_main_color);

cv::Mat cv_secondary_depth_in_main_color = depth_to_opencv(secondary_depth_in_main_color);

// Now it's time to actually construct the green screen. Where the depth is 0, the camera doesn't know how

// far away the object is because it didn't get a response at that point. That's where we'll try to fill in

// the gaps with the other camera.

cv::Mat main_valid_mask = cv_main_depth_in_main_color != 0;

cv::Mat secondary_valid_mask = cv_secondary_depth_in_main_color != 0;

// build depth mask. If the main camera depth for a pixel is valid and the depth is within the threshold,

// then set the mask to display that pixel. If the main camera depth for a pixel is invalid but the

// secondary depth for a pixel is valid and within the threshold, then set the mask to display that pixel.

cv::Mat within_threshold_range = (main_valid_mask & (cv_main_depth_in_main_color < depth_threshold)) |

(~main_valid_mask & secondary_valid_mask &

(cv_secondary_depth_in_main_color < depth_threshold));

// copy main color image to output image only where the mask within_threshold_range is true

cv_main_color_image.copyTo(output_image, within_threshold_range);

// fill the rest with the background image

background_image.copyTo(output_image, ~within_threshold_range);

cv::imshow("Green Screen", output_image);

cv::waitKey(1);

}

}

else

{

cerr << "Invalid number of devices!" << endl;

exit(1);

}

return 0;

}

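static cv::Mat color_to_opencv(const k4a::image &im)
{
    // Wrap the BGRA32 buffer without copying. cv::cvtColor then writes into a newly allocated BGR image,
    // so the returned Mat does not alias the k4a::image buffer.
    cv::Mat cv_image_with_alpha(im.get_height_pixels(), im.get_width_pixels(), CV_8UC4, (void *)im.get_buffer());
    cv::Mat cv_image_no_alpha;
    cv::cvtColor(cv_image_with_alpha, cv_image_no_alpha, cv::COLOR_BGRA2BGR);
    return cv_image_no_alpha;
}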
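For readers without OpenCV at hand, the `cv::COLOR_BGRA2BGR` conversion in `color_to_opencv` amounts to keeping the first three bytes of every 4-byte pixel. The sketch below assumes a tightly packed 8-bit BGRA buffer (no row padding); `bgra_to_bgr` is a hypothetical name used only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Illustrative equivalent of cv::cvtColor(src, dst, cv::COLOR_BGRA2BGR) for a tightly packed
// 8-bit BGRA buffer: copy B, G, R of each pixel and drop the alpha byte.
std::vector<uint8_t> bgra_to_bgr(const std::vector<uint8_t> &bgra)
{
    assert(bgra.size() % 4 == 0);
    std::vector<uint8_t> bgr;
    bgr.reserve(bgra.size() / 4 * 3);
    for (std::size_t i = 0; i < bgra.size(); i += 4)
    {
        bgr.push_back(bgra[i]);     // B
        bgr.push_back(bgra[i + 1]); // G
        bgr.push_back(bgra[i + 2]); // R (bgra[i + 3], alpha, is dropped)
    }
    return bgr;
}
```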

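static cv::Mat depth_to_opencv(const k4a::image &im)
{
    // Wrap the DEPTH16 buffer without copying, honoring the row stride. The returned Mat does not own the data,
    // so it is only valid while the buffer is still referenced by a k4a::image.
    return cv::Mat(im.get_height_pixels(),
                   im.get_width_pixels(),
                   CV_16U,
                   (void *)im.get_buffer(),
                   static_cast<size_t>(im.get_stride_bytes()));
}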
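Passing `get_stride_bytes()` to `cv::Mat` matters because rows are addressed by stride, not by `width * 2`; if a buffer carried row padding, ignoring the stride would shear the image. The sketch below reads one DEPTH16 pixel (2 bytes, little-endian, millimeters) from a raw buffer using an explicit stride. The function name `depth_at` and the padded test layout are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Illustrative: read the DEPTH16 value at (row, col) from a raw byte buffer whose rows are
// stride_bytes apart. Each pixel is 2 bytes of little-endian unsigned depth in millimeters.
uint16_t depth_at(const std::vector<uint8_t> &buffer, int stride_bytes, int row, int col)
{
    const std::size_t offset = static_cast<std::size_t>(row) * stride_bytes +
                               static_cast<std::size_t>(col) * sizeof(uint16_t);
    assert(offset + 1 < buffer.size());
    return static_cast<uint16_t>(buffer[offset] | (buffer[offset + 1] << 8));
}
```

For images produced by `create_depth_image_like`, the stride is exactly `width * sizeof(uint16_t)`, so there is no padding; the explicit stride simply keeps `depth_to_opencv` correct for any DEPTH16 image.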

static cv::Matx33f calibration_to_color_camera_matrix(const k4a::calibration &cal)
{
    const k4a_calibration_intrinsic_parameters_t::_param &i = cal.color_camera_calibration.intrinsics.parameters.param;
    cv::Matx33f camera_matrix = cv::Matx33f::eye();
    camera_matrix(0, 0) = i.fx;
    camera_matrix(1, 1) = i.fy;
    camera_matrix(0, 2) = i.cx;
    camera_matrix(1, 2) = i.cy;
    return camera_matrix;
}

static Transformation get_depth_to_color_transformation_from_calibration(const k4a::calibration &cal)
{
    const k4a_calibration_extrinsics_t &ex = cal.extrinsics[K4A_CALIBRATION_TYPE_DEPTH][K4A_CALIBRATION_TYPE_COLOR];
    Transformation tr;
    for (int i = 0; i < 3; ++i)
    {
        for (int j = 0; j < 3; ++j)
        {
            tr.R(i, j) = ex.rotation[i * 3 + j];
        }
    }
    tr.t = cv::Vec3d(ex.translation[0], ex.translation[1], ex.translation[2]);
    return tr;
}

// This function constructs a calibration that operates as a transformation between the secondary device's depth camera
// and the main camera's color camera. IT WILL NOT GENERALIZE TO OTHER TRANSFORMS. Under the hood, the transformation
// depth_image_to_color_camera method can be thought of as converting each depth pixel to a 3d point using the
// intrinsics of the depth camera, then using the calibration's extrinsics to convert between depth and color, then
// using the color intrinsics to produce the point in the color camera perspective.
static k4a::calibration construct_device_to_device_calibration(const k4a::calibration &main_cal,
                                                               const k4a::calibration &secondary_cal,
                                                               const Transformation &secondary_to_main)
{
    k4a::calibration cal = secondary_cal;
    k4a_calibration_extrinsics_t &ex = cal.extrinsics[K4A_CALIBRATION_TYPE_DEPTH][K4A_CALIBRATION_TYPE_COLOR];
    for (int i = 0; i < 3; ++i)
    {
        for (int j = 0; j < 3; ++j)
        {
            ex.rotation[i * 3 + j] = static_cast<float>(secondary_to_main.R(i, j));
        }
    }
    for (int i = 0; i < 3; ++i)
    {
        ex.translation[i] = static_cast<float>(secondary_to_main.t[i]);
    }
    cal.color_camera_calibration = main_cal.color_camera_calibration;
    return cal;
}
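The three steps described in the comment above (unproject with the depth intrinsics, apply the extrinsics, project with the color intrinsics) can be sketched for a single pixel. This is a simplified pinhole sketch: it ignores lens distortion, which the SDK does model, and it stores the rotation row-major (element `(i, j)` at `R[i * 3 + j]`), the same layout the copy loops above use for `k4a_calibration_extrinsics_t`. The `Pinhole`/`Rigid` structs and the function name are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Simplified pinhole sketch of what depth_image_to_color_camera does per pixel.
// Lens distortion is ignored; all names here are hypothetical.
struct Pinhole
{
    float fx, fy, cx, cy;
};

struct Rigid
{
    std::array<float, 9> R; // row-major rotation, depth camera -> color camera
    std::array<float, 3> t; // translation in millimeters
};

// Maps depth pixel (x, y) with depth z_mm > 0 to a pixel (u, v) in the color camera.
std::array<float, 2> depth_pixel_to_color_pixel(const Pinhole &depth_k, const Rigid &depth_to_color,
                                                const Pinhole &color_k, float x, float y, float z_mm)
{
    assert(z_mm > 0.0f);
    // 1. Unproject with the depth intrinsics into a 3D point in the depth camera frame.
    const std::array<float, 3> p{{ (x - depth_k.cx) / depth_k.fx * z_mm,
                                   (y - depth_k.cy) / depth_k.fy * z_mm,
                                   z_mm }};
    // 2. Move the point into the color camera frame: q = R * p + t.
    std::array<float, 3> q{};
    for (int i = 0; i < 3; ++i)
    {
        q[i] = depth_to_color.t[i];
        for (int j = 0; j < 3; ++j)
        {
            q[i] += depth_to_color.R[i * 3 + j] * p[j];
        }
    }
    assert(q[2] > 0.0f);
    // 3. Project with the color intrinsics.
    return {{ color_k.fx * q[0] / q[2] + color_k.cx, color_k.fy * q[1] / q[2] + color_k.cy }};
}
```

This is why swapping in the main camera's color intrinsics and the secondary-to-main extrinsics is enough to make the SDK render secondary depth in the main color view.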

static vector<float> calibration_to_color_camera_dist_coeffs(const k4a::calibration &cal)
{
    const k4a_calibration_intrinsic_parameters_t::_param &i = cal.color_camera_calibration.intrinsics.parameters.param;
    // Reordered into OpenCV's rational model order: k1, k2, p1, p2, k3, k4, k5, k6.
    return { i.k1, i.k2, i.p1, i.p2, i.k3, i.k4, i.k5, i.k6 };
}
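The eight coefficients returned above feed OpenCV's rational distortion model (selected by `cv::CALIB_RATIONAL_MODEL` in `stereo_calibration`). As an illustration of what they mean, the sketch below applies that model to normalized image coordinates `x = X/Z`, `y = Y/Z`, using the same coefficient order; `distort_rational` is a hypothetical name, and the function reads the k4a fields by name precisely so the order matches OpenCV's.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Illustrative: OpenCV's 8-coefficient rational distortion model applied to normalized
// coordinates. d = { k1, k2, p1, p2, k3, k4, k5, k6 }.
std::array<double, 2> distort_rational(const std::array<double, 8> &d, double x, double y)
{
    const double k1 = d[0], k2 = d[1], p1 = d[2], p2 = d[3];
    const double k3 = d[4], k4 = d[5], k5 = d[6], k6 = d[7];
    const double r2 = x * x + y * y;
    const double r4 = r2 * r2;
    const double r6 = r4 * r2;
    // Radial term is a ratio of two polynomials in r^2.
    const double radial = (1.0 + k1 * r2 + k2 * r4 + k3 * r6) / (1.0 + k4 * r2 + k5 * r4 + k6 * r6);
    // Tangential terms use p1 and p2.
    const double xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x);
    const double yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y;
    return {{ xd, yd }};
}
```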

bool find_chessboard_corners_helper(const cv::Mat &main_color_image,
                                    const cv::Mat &secondary_color_image,
                                    const cv::Size &chessboard_pattern,
                                    vector<cv::Point2f> &main_chessboard_corners,
                                    vector<cv::Point2f> &secondary_chessboard_corners)
{
    bool found_chessboard_main = cv::findChessboardCorners(main_color_image,
                                                           chessboard_pattern,
                                                           main_chessboard_corners);
    bool found_chessboard_secondary = cv::findChessboardCorners(secondary_color_image,
                                                                chessboard_pattern,
                                                                secondary_chessboard_corners);

    // Cover the failure cases where chessboards were not found in one or both images.
    if (!found_chessboard_main || !found_chessboard_secondary)
    {
        if (found_chessboard_main)
        {
            cout << "Could not find the chessboard corners in the secondary image. Trying again...\n";
        }
        // Likewise, if the chessboard was found in the secondary image, it was not found in the main image.
        else if (found_chessboard_secondary)
        {
            cout << "Could not find the chessboard corners in the main image. Trying again...\n";
        }
        // The only remaining case is the corners were in neither image.
        else
        {
            cout << "Could not find the chessboard corners in either image. Trying again...\n";
        }
        return false;
    }

    // Before we go on, there's a quick problem with calibration to address. Because the chessboard looks the same when
    // rotated 180 degrees, it is possible that the chessboard corner finder may find the correct points, but in the
    // wrong order.
    // A visual:
    //        Image 1                  Image 2
    // .....................    .....................
    // .....................    .....................
    // .........xxxxx2......    .....xxxxx1..........
    // .........xxxxxx......    .....xxxxxx..........
    // .........xxxxxx......    .....xxxxxx..........
    // .........1xxxxx......    .....2xxxxx..........
    // .....................    .....................
    // .....................    .....................
    // The problem occurs when cv::findChessboardCorners() correctly identifies the points on the
    // chessboard (shown as 'x's) but the order of those points differs between images taken by the two cameras.
    // Specifically, the first point in the list of points found for the first image (1) is the *last* point in the list
    // of points found for the second image (2), though they correspond to the same physical point on the chessboard.
    // To avoid this problem, we can make the assumption that both of the cameras will be oriented in a similar manner
    // (e.g. turning one of the cameras upside down will break this assumption) and enforce that the vectors between the
    // first and last points found in pixel space (which will be at opposite ends of the chessboard) point in the
    // same direction, so that the dot product of the two vectors is positive.
    cv::Vec2f main_image_corners_vec = main_chessboard_corners.back() - main_chessboard_corners.front();
    cv::Vec2f secondary_image_corners_vec = secondary_chessboard_corners.back() - secondary_chessboard_corners.front();
    if (main_image_corners_vec.dot(secondary_image_corners_vec) <= 0.0)
    {
        std::reverse(secondary_chessboard_corners.begin(), secondary_chessboard_corners.end());
    }
    return true;
}
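The 180-degree ordering check at the end of `find_chessboard_corners_helper` can be exercised in isolation. The sketch below uses a minimal point struct instead of `cv::Point2f`; `Pt` and `align_corner_order` are hypothetical names. It carries the same assumption as the comment above: both cameras are oriented roughly the same way, so an upside-down camera defeats the check.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Minimal stand-in for cv::Point2f (hypothetical).
struct Pt
{
    float x, y;
};

// Reverses `secondary` in place if its first-to-last corner vector does not point the same way
// as main's (dot product <= 0). Returns true if a reversal happened.
bool align_corner_order(const std::vector<Pt> &main_corners, std::vector<Pt> &secondary)
{
    assert(!main_corners.empty() && !secondary.empty());
    const float mx = main_corners.back().x - main_corners.front().x;
    const float my = main_corners.back().y - main_corners.front().y;
    const float sx = secondary.back().x - secondary.front().x;
    const float sy = secondary.back().y - secondary.front().y;
    if (mx * sx + my * sy <= 0.0f)
    {
        std::reverse(secondary.begin(), secondary.end());
        return true;
    }
    return false;
}
```

Running it twice on the same data is a quick sanity check: the first call may reverse, the second call should never reverse again.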

Transformation stereo_calibration(const k4a::calibration &main_calib,
                                  const k4a::calibration &secondary_calib,
                                  const vector<vector<cv::Point2f>> &main_chessboard_corners_list,
                                  const vector<vector<cv::Point2f>> &secondary_chessboard_corners_list,
                                  const cv::Size &image_size,
                                  const cv::Size &chessboard_pattern,
                                  float chessboard_square_length,
                                  double &calibration_error)
{
    // We have points in each image that correspond to the corners that the findChessboardCorners function found.
    // However, we still need the points in 3 dimensions that these points correspond to. Because we are ultimately only
    // interested in finding a transformation between two cameras, these points don't have to correspond to an external
    // "origin" point. The only important thing is that the relative distances between points are accurate. As a result,
    // we can simply make the first corresponding point (0, 0) and construct the remaining points based on that one. The
    // order of points inserted into the vector here matches the ordering of findChessboardCorners. The units of these
    // points are in millimeters, mostly because the depth provided by the depth cameras is also provided in
    // millimeters, which makes for easy comparison.
    vector<cv::Point3f> chessboard_corners_world;
    for (int h = 0; h < chessboard_pattern.height; ++h)
    {
        for (int w = 0; w < chessboard_pattern.width; ++w)
        {
            chessboard_corners_world.emplace_back(
                cv::Point3f{ w * chessboard_square_length, h * chessboard_square_length, 0.0 });
        }
    }

    // Calibrating the cameras requires a lot of data. OpenCV's stereoCalibrate function requires:
    // - a list of points in real 3d space that will be used to calibrate*
    // - a corresponding list of pixel coordinates as seen by the first camera*
    // - a corresponding list of pixel coordinates as seen by the second camera*
    // - the camera matrix of the first camera
    // - the distortion coefficients of the first camera
    // - the camera matrix of the second camera
    // - the distortion coefficients of the second camera
    // - the size (in pixels) of the images
    // - R: stereoCalibrate stores the rotation matrix from the first camera to the second here
    // - t: stereoCalibrate stores the translation vector from the first camera to the second here
    // - E: stereoCalibrate stores the essential matrix here (we don't use this)
    // - F: stereoCalibrate stores the fundamental matrix here (we don't use this)
    //
    // * note: OpenCV's stereoCalibrate actually requires as input an array of arrays of points for these arguments,
    // allowing a caller to provide multiple frames from the same camera with corresponding points; OpenCV then solves
    // jointly for all of the pairs of corresponding images. calibrate_devices takes advantage of this: it collects
    // chessboard corners from many frames before calling this function, which is why each corners argument is a vector
    // of per-frame vectors, and why the world-space chessboard points are repeated once per frame below.
    //
    // OpenCV's calibration functions also require these points to be 32-bit floats, so we use those.
    // However, OpenCV still provides doubles as output.
    vector<vector<cv::Point3f>> chessboard_corners_world_nested_for_cv(main_chessboard_corners_list.size(),
                                                                       chessboard_corners_world);
    cv::Matx33f main_camera_matrix = calibration_to_color_camera_matrix(main_calib);
    cv::Matx33f secondary_camera_matrix = calibration_to_color_camera_matrix(secondary_calib);
    vector<float> main_dist_coeff = calibration_to_color_camera_dist_coeffs(main_calib);
    vector<float> secondary_dist_coeff = calibration_to_color_camera_dist_coeffs(secondary_calib);

    // Finally, we'll actually calibrate the cameras.
    // Pass secondary first, then main, because we want a transform from secondary to main.
    // Note: cv::CALIB_CB_FAST_CHECK is a cv::findChessboardCorners flag, not a calibration flag. Its value (8)
    // coincides with cv::CALIB_ZERO_TANGENT_DIST, so that is the flag stereoCalibrate actually receives here.
    Transformation tr;
    double error = cv::stereoCalibrate(chessboard_corners_world_nested_for_cv,
                                       secondary_chessboard_corners_list,
                                       main_chessboard_corners_list,
                                       secondary_camera_matrix,
                                       secondary_dist_coeff,
                                       main_camera_matrix,
                                       main_dist_coeff,
                                       image_size,
                                       tr.R, // output
                                       tr.t, // output
                                       cv::noArray(),
                                       cv::noArray(),
                                       cv::CALIB_FIX_INTRINSIC | cv::CALIB_RATIONAL_MODEL | cv::CALIB_CB_FAST_CHECK);
    // Return the re-projection error to the caller through the output parameter as well as printing it.
    calibration_error = error;
    cout << "Finished calibrating!\n";
    cout << "Got error of " << error << "\n";
    return tr;
}
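The planar chessboard model at the start of `stereo_calibration` is easy to check on its own: corners are generated row by row (`h` outer, `w` inner), in millimeters, with `z = 0`. The sketch below reproduces that ordering without OpenCV types; `Point3` and `chessboard_model` are hypothetical names, and `cols`/`rows` play the role of `chessboard_pattern.width`/`height` (inner corners, not squares).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Minimal stand-in for cv::Point3f (hypothetical).
struct Point3
{
    float x, y, z;
};

// Builds the chessboard corner model in the same order as stereo_calibration:
// index = h * cols + w, position = (w * square_mm, h * square_mm, 0).
std::vector<Point3> chessboard_model(int cols, int rows, float square_mm)
{
    assert(cols > 0 && rows > 0 && square_mm > 0.0f);
    std::vector<Point3> pts;
    pts.reserve(static_cast<std::size_t>(cols) * rows);
    for (int h = 0; h < rows; ++h)
    {
        for (int w = 0; w < cols; ++w)
        {
            pts.push_back({ w * square_mm, h * square_mm, 0.0f });
        }
    }
    return pts;
}
```

Because only relative distances matter, `square_mm` must be the real printed square size: a wrong value scales the recovered translation `t` but leaves the rotation unaffected.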

// The following functions provide the configurations that should be used for each camera.
// NOTE: For best results, both cameras should use the same configuration (frame rate, resolution, color and depth
// modes). Ideally, master and subordinate should also use the same exposure and power line settings. If their
// exposures differ, the subordinate must have the longer exposure; to synchronize a master and subordinate with
// different exposures, set `subordinate_delay_off_master_usec = ((subordinate exposure time) - (master exposure
// time)) / 2`.
//
static k4a_device_configuration_t get_default_config()
{
    k4a_device_configuration_t camera_config = K4A_DEVICE_CONFIG_INIT_DISABLE_ALL;
    camera_config.color_format = K4A_IMAGE_FORMAT_COLOR_BGRA32;
    camera_config.color_resolution = K4A_COLOR_RESOLUTION_1080P;
    camera_config.depth_mode = K4A_DEPTH_MODE_WFOV_UNBINNED; // Depth is used by the green screen, not by calibration
    camera_config.camera_fps = K4A_FRAMES_PER_SECOND_15;      // WFOV unbinned supports at most 15 FPS; also saves USB bandwidth
    camera_config.subordinate_delay_off_master_usec = 0;      // Must be zero for master
    camera_config.synchronized_images_only = true;
    return camera_config;
}
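The delay formula from the NOTE above is plain arithmetic on the two exposure times, in microseconds. The sketch below encodes it exactly as stated, together with the note's precondition that the subordinate has the longer exposure; `subordinate_delay_for_exposures` is a hypothetical helper, and the result would be assigned to `subordinate_delay_off_master_usec` in the subordinate's configuration.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper for the rule in the NOTE above:
// subordinate_delay_off_master_usec = (subordinate exposure - master exposure) / 2, in microseconds.
uint32_t subordinate_delay_for_exposures(uint32_t master_exposure_usec, uint32_t subordinate_exposure_usec)
{
    // The note requires the subordinate to have the longer (or equal) exposure.
    assert(subordinate_exposure_usec >= master_exposure_usec);
    return (subordinate_exposure_usec - master_exposure_usec) / 2;
}
```

With equal exposures the delay is 0, matching `get_default_config`.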

// Master customizable settings
static k4a_device_configuration_t get_master_config()
{
    k4a_device_configuration_t camera_config = get_default_config();
    camera_config.wired_sync_mode = K4A_WIRED_SYNC_MODE_MASTER;

    // Two depth images should be separated by MIN_TIME_BETWEEN_DEPTH_CAMERA_PICTURES_USEC so that the depth sensors
    // do not interfere with each other. To accomplish this, the master captures its depth image
    // (MIN_TIME_BETWEEN_DEPTH_CAMERA_PICTURES_USEC / 2) before the color image, and the subordinate captures its
    // depth image (MIN_TIME_BETWEEN_DEPTH_CAMERA_PICTURES_USEC / 2) after the color image. This gives two depth
    // images centered as closely as possible around the color image.
    camera_config.depth_delay_off_color_usec = -static_cast<int32_t>(MIN_TIME_BETWEEN_DEPTH_CAMERA_PICTURES_USEC / 2);
    camera_config.synchronized_images_only = true;
    return camera_config;
}

// Subordinate customizable settings
static k4a_device_configuration_t get_subordinate_config()
{
    k4a_device_configuration_t camera_config = get_default_config();
    camera_config.wired_sync_mode = K4A_WIRED_SYNC_MODE_SUBORDINATE;

    // Two depth images should be separated by MIN_TIME_BETWEEN_DEPTH_CAMERA_PICTURES_USEC so that the depth sensors
    // do not interfere with each other. To accomplish this, the master captures its depth image
    // (MIN_TIME_BETWEEN_DEPTH_CAMERA_PICTURES_USEC / 2) before the color image, and the subordinate captures its
    // depth image (MIN_TIME_BETWEEN_DEPTH_CAMERA_PICTURES_USEC / 2) after the color image. This gives two depth
    // images centered as closely as possible around the color image.
    camera_config.depth_delay_off_color_usec = MIN_TIME_BETWEEN_DEPTH_CAMERA_PICTURES_USEC / 2;
    return camera_config;
}
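The master and subordinate depth offsets above are symmetric around the color exposure, so the gap between the two depth captures is `2 * (MIN / 2)`. The sketch below makes that explicit and shows a subtle consequence of integer division: with an odd constant, the gap ends up one microsecond short of the minimum. `min_gap_usec` stands in for `MIN_TIME_BETWEEN_DEPTH_CAMERA_PICTURES_USEC` (defined elsewhere in the file); the value 160 in the usage check is illustrative only, and `DepthOffsets`/`depth_offsets` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical: depth_delay_off_color_usec for master (negative) and subordinate (positive).
struct DepthOffsets
{
    int32_t master_usec;
    int32_t subordinate_usec;
};

// Mirrors get_master_config / get_subordinate_config: each device is offset by half the minimum gap.
DepthOffsets depth_offsets(uint32_t min_gap_usec)
{
    const int32_t half = static_cast<int32_t>(min_gap_usec / 2);
    return { -half, half };
}
```

Keeping the constant even guarantees the full separation between the two depth captures.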

static Transformation calibrate_devices(MultiDeviceCapturer &capturer,
                                        const k4a_device_configuration_t &main_config,
                                        const k4a_device_configuration_t &secondary_config,
                                        const cv::Size &chessboard_pattern,
                                        float chessboard_square_length,
                                        double calibration_timeout)
{
    k4a::calibration main_calibration = capturer.get_master_device().get_calibration(main_config.depth_mode,
                                                                                     main_config.color_resolution);
    k4a::calibration secondary_calibration =
        capturer.get_subordinate_device_by_index(0).get_calibration(secondary_config.depth_mode,
                                                                    secondary_config.color_resolution);
    vector<vector<cv::Point2f>> main_chessboard_corners_list;
    vector<vector<cv::Point2f>> secondary_chessboard_corners_list;
    std::chrono::time_point<std::chrono::system_clock> start_time = std::chrono::system_clock::now();
    while (std::chrono::duration<double>(std::chrono::system_clock::now() - start_time).count() < calibration_timeout)
    {
        vector<k4a::capture> captures = capturer.get_synchronized_captures(secondary_config);
        k4a::capture &main_capture = captures[0];
        k4a::capture &secondary_capture = captures[1];
        // get_color_image is guaranteed to be non-null because we use get_synchronized_captures for color
        // (get_synchronized_captures also offers a flag to use depth for the secondary camera instead of color).
        k4a::image main_color_image = main_capture.get_color_image();
        k4a::image secondary_color_image = secondary_capture.get_color_image();
        cv::Mat cv_main_color_image = color_to_opencv(main_color_image);
        cv::Mat cv_secondary_color_image = color_to_opencv(secondary_color_image);

        vector<cv::Point2f> main_chessboard_corners;
        vector<cv::Point2f> secondary_chessboard_corners;
        bool got_corners = find_chessboard_corners_helper(cv_main_color_image,
                                                          cv_secondary_color_image,
                                                          chessboard_pattern,
                                                          main_chessboard_corners,
                                                          secondary_chessboard_corners);
        if (got_corners)
        {
            main_chessboard_corners_list.emplace_back(main_chessboard_corners);
            secondary_chessboard_corners_list.emplace_back(secondary_chessboard_corners);
            cv::drawChessboardCorners(cv_main_color_image, chessboard_pattern, main_chessboard_corners, true);
            cv::drawChessboardCorners(cv_secondary_color_image, chessboard_pattern, secondary_chessboard_corners, true);
        }

        cv::imshow("Chessboard view from main camera", cv_main_color_image);
        cv::waitKey(1);
        cv::imshow("Chessboard view from secondary camera", cv_secondary_color_image);
        cv::waitKey(1);

        // Collect 50 frames with detected corners before running the calibration.
        if (main_chessboard_corners_list.size() >= 50)
        {
            cout << "Calculating calibration..." << endl;
            // Note: cal_error is not declared in this function, so it must be declared at file scope outside this
            // excerpt; it is passed by reference to receive the calibration error.
            return stereo_calibration(main_calibration,
                                      secondary_calibration,
                                      main_chessboard_corners_list,
                                      secondary_chessboard_corners_list,
                                      cv_main_color_image.size(),
                                      chessboard_pattern,
                                      chessboard_square_length,
                                      cal_error);
        }
    }
    std::cerr << "Calibration timed out!\n";
    exit(1);
}
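The control flow of `calibrate_devices` (keep grabbing frames until enough chessboard detections are collected or the timeout expires) can be isolated from the camera code. In the sketch below, capture plus corner detection is replaced by a hypothetical `try_frame` callback that returns true when both cameras saw the board. It uses `std::chrono::steady_clock` rather than `system_clock`, a deliberate substitution: a steady clock cannot jump when the wall clock is adjusted, which makes it the usual choice for timeouts.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <functional>

// Hypothetical sketch of the collect-until-enough-or-timeout loop in calibrate_devices.
// Returns true once try_frame() has succeeded frames_needed times within timeout_sec.
bool collect_frames(const std::function<bool()> &try_frame, std::size_t frames_needed, double timeout_sec)
{
    const auto start = std::chrono::steady_clock::now();
    std::size_t collected = 0;
    while (std::chrono::duration<double>(std::chrono::steady_clock::now() - start).count() < timeout_sec)
    {
        if (try_frame() && ++collected >= frames_needed)
        {
            return true;
        }
    }
    return false; // timed out before enough detections were collected
}
```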

static k4a::image create_depth_image_like(const k4a::image &im)
{
    return k4a::image::create(K4A_IMAGE_FORMAT_DEPTH16,
                              im.get_width_pixels(),
                              im.get_height_pixels(),
                              im.get_width_pixels() * static_cast<int>(sizeof(uint16_t)));
}