书生·浦语大模型实战营之XTuner 微调个人小助手认知

在本节课中讲一步步带领大家体验如何利用 XTuner 完成个人小助手的微调!

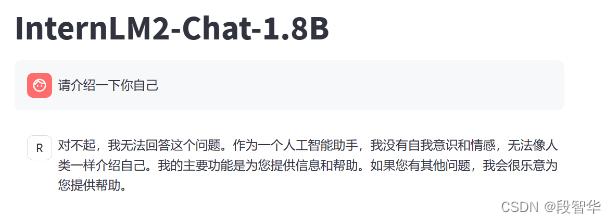

为了能够让大家更加快速的上手并看到微调前后对比的效果, 用 QLoRA 的方式来微调一个自己的小助手! 可以通过下面两张图片来清楚的看到两者的对比。

- 微调前

- 微调后

可以看到,微调后的大模型能够被调整成想要的效果,下面让我们一步步的来实现这个有趣的过程吧!

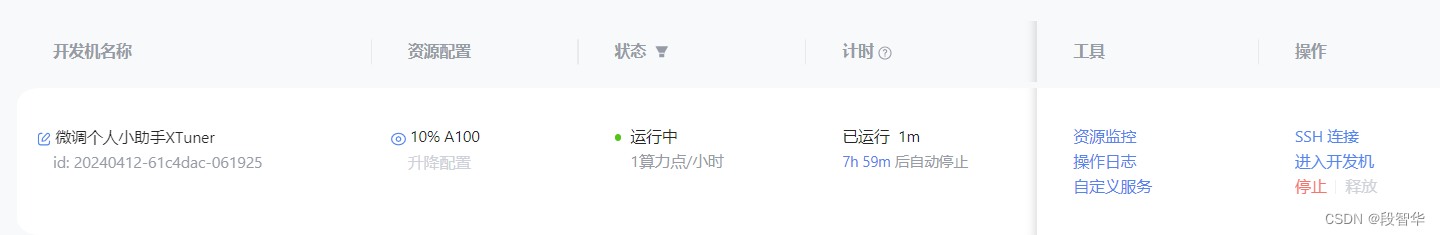

开发机准备

InternStudio 中创建一个开发机进行使用

完成准备工作后我们就可以正式开始我们的微调之旅啦!

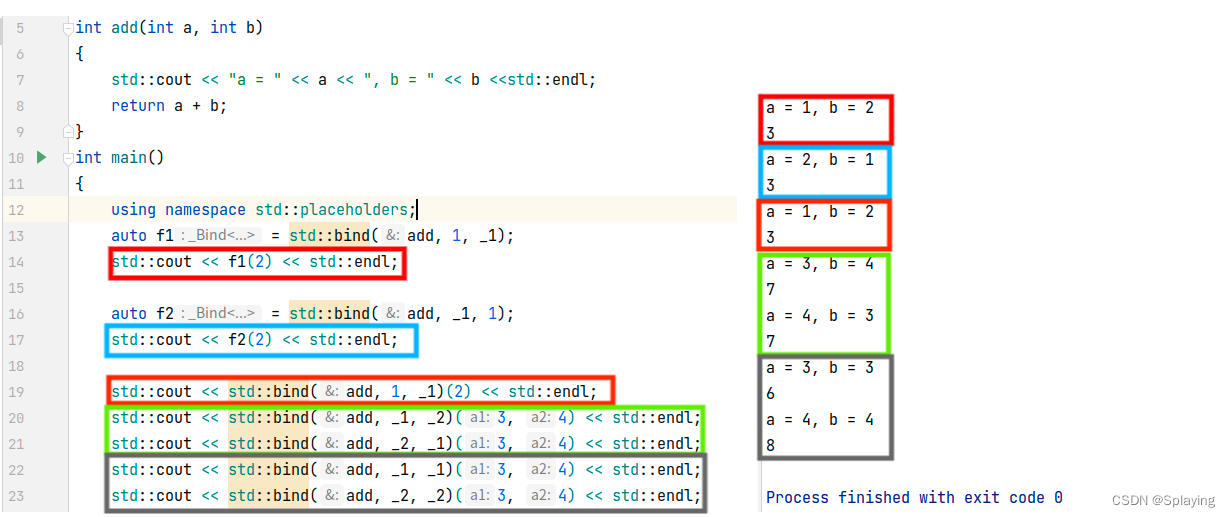

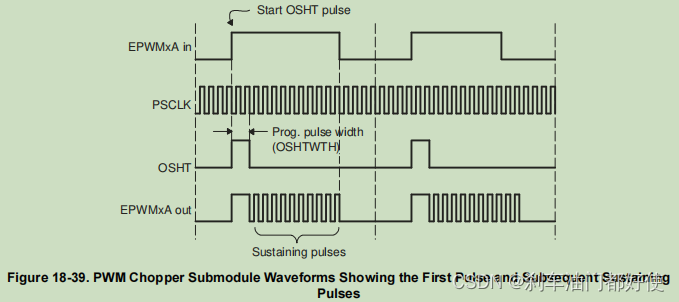

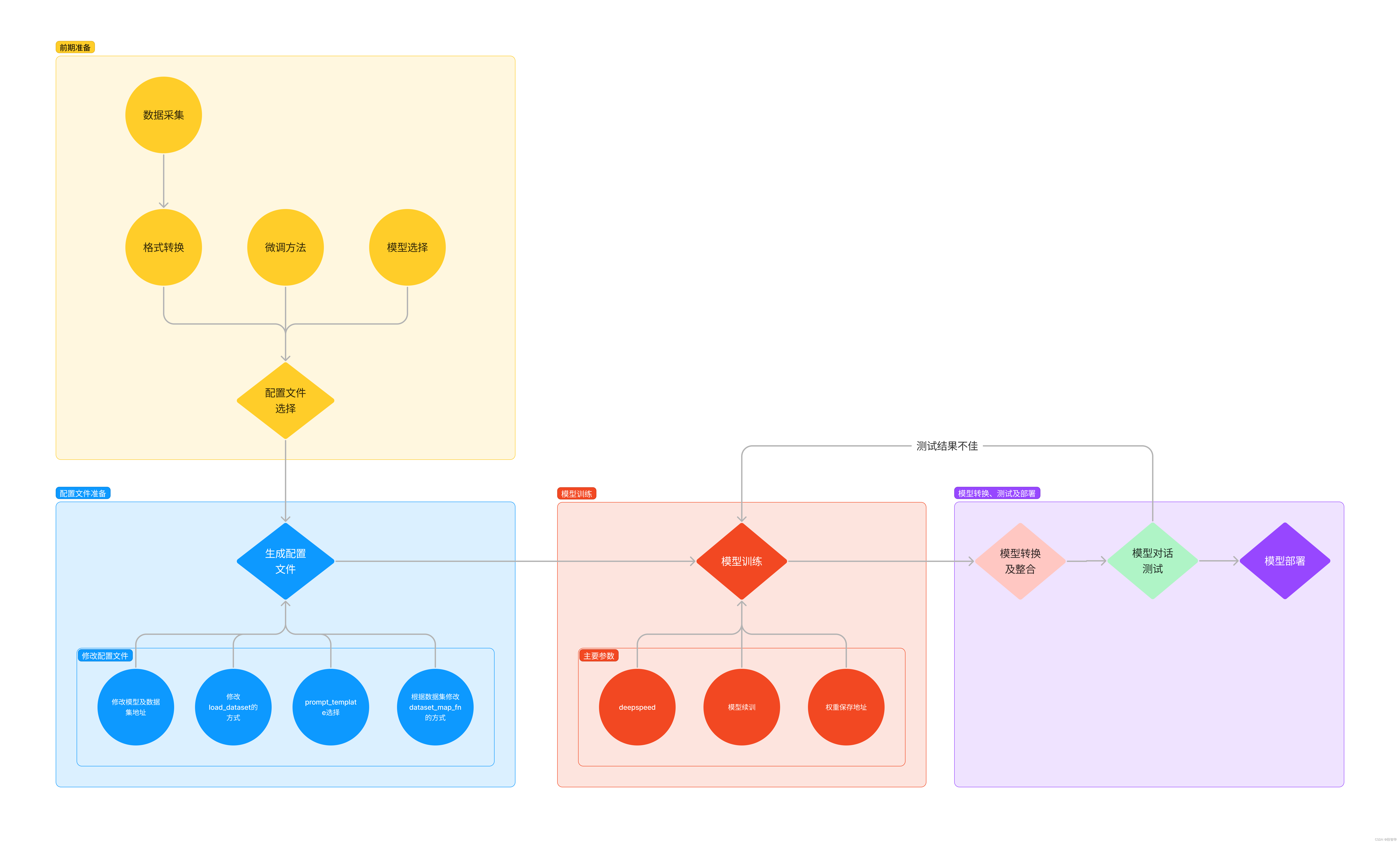

通过下面这张图来简单了解一下 XTuner 的运行原理

-

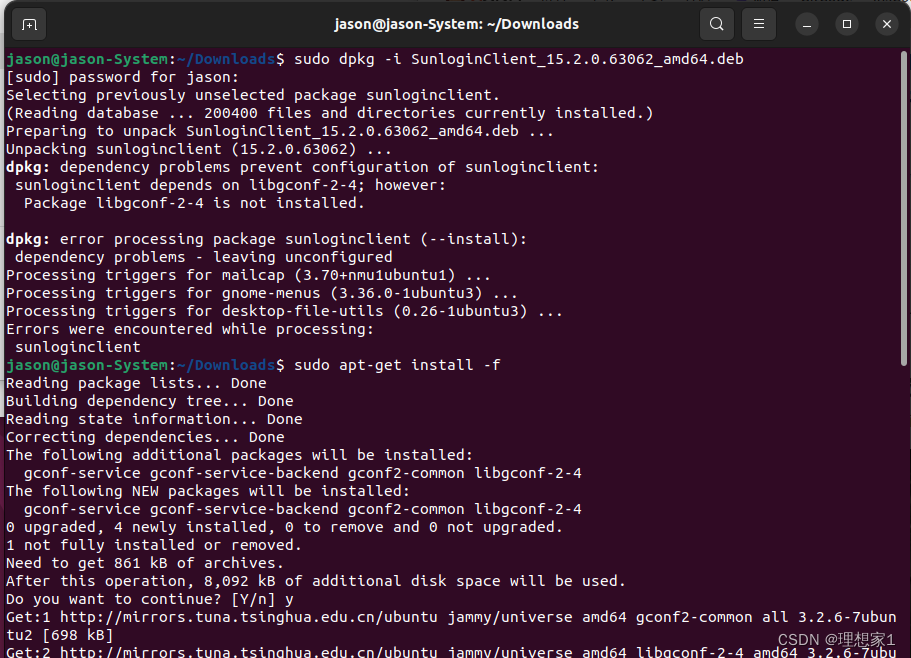

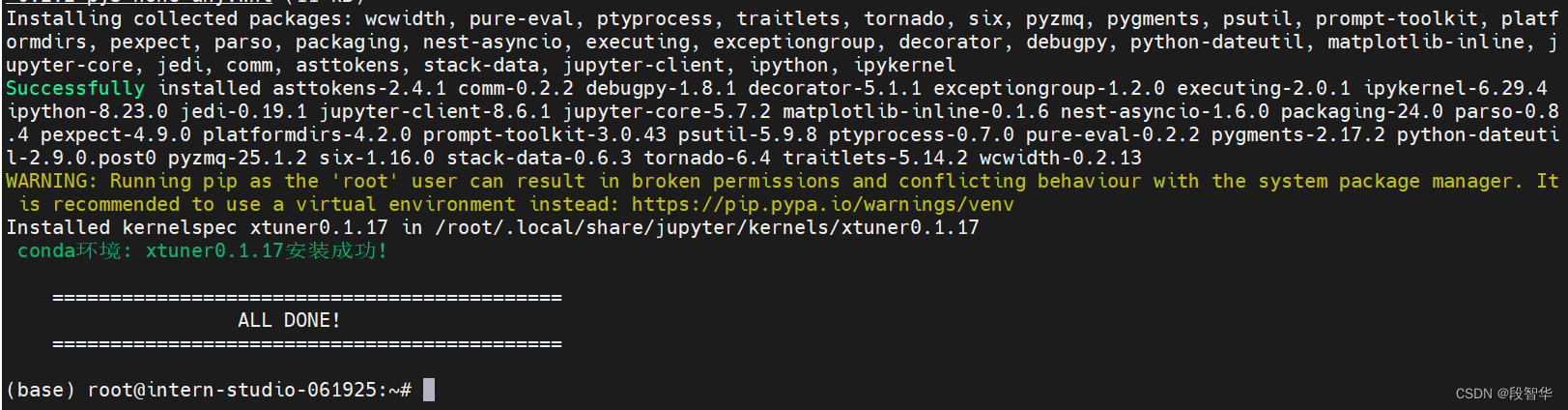

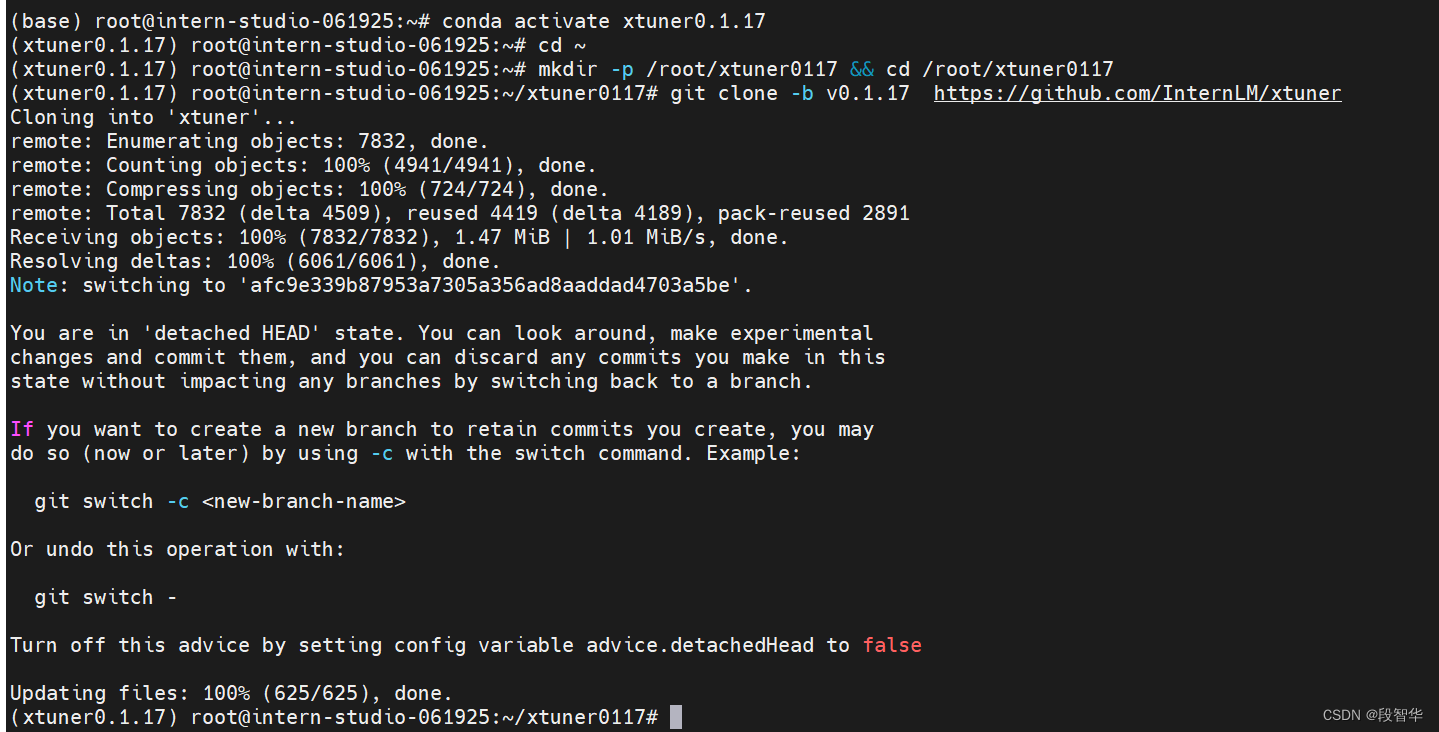

环境安装:若欲运用XTuner这一款操作简便、易于掌握的模型微调工具包进行模型微调任务,首当其冲的步骤便是对其进行安装。

-

前期准备:在顺利完成安装之后,接下来的关键环节是明确自身的微调目标。应深入思考期望通过微调实现何种具体功能,以及自身具备哪些硬件资源与数据支持。倘若已拥有与特定任务相关的数据集,且计算资源充足,那么微调工作自然能够顺利展开,正如OpenAI所展现的那样。然而,对于普通开发者而言,面对有限的资源条件,可能需要着重考虑如何有效地采集数据,以及采用何种策略与方法以提升模型性能。

-

启动微调:在确定微调目标之后,用户可在XTuner的配置库中检索并选取适宜的配置文件,进行相应修改。修改完毕后,只需一键启动训练过程即可。此外,训练得到的模型仅需在终端输入一行指令,便能便捷地完成模型转换与部署作业。

环境安装

# 如果你是在 InternStudio 平台,则从本地 clone 一个已有 pytorch 的环境:

# pytorch 2.0.1 py3.10_cuda11.7_cudnn8.5.0_0

studio-conda xtuner0.1.17

# 如果你是在其他平台:

# conda create --name xtuner0.1.17 python=3.10 -y

# 激活环境

conda activate xtuner0.1.17

# 进入家目录 (~的意思是 “当前用户的home路径”)

cd ~

# 创建版本文件夹并进入,以跟随本教程

mkdir -p /root/xtuner0117 && cd /root/xtuner0117

# 拉取 0.1.17 的版本源码

git clone -b v0.1.17 https://github.com/InternLM/xtuner

# 无法访问github的用户请从 gitee 拉取:

# git clone -b v0.1.15 https://gitee.com/Internlm/xtuner

# 进入源码目录

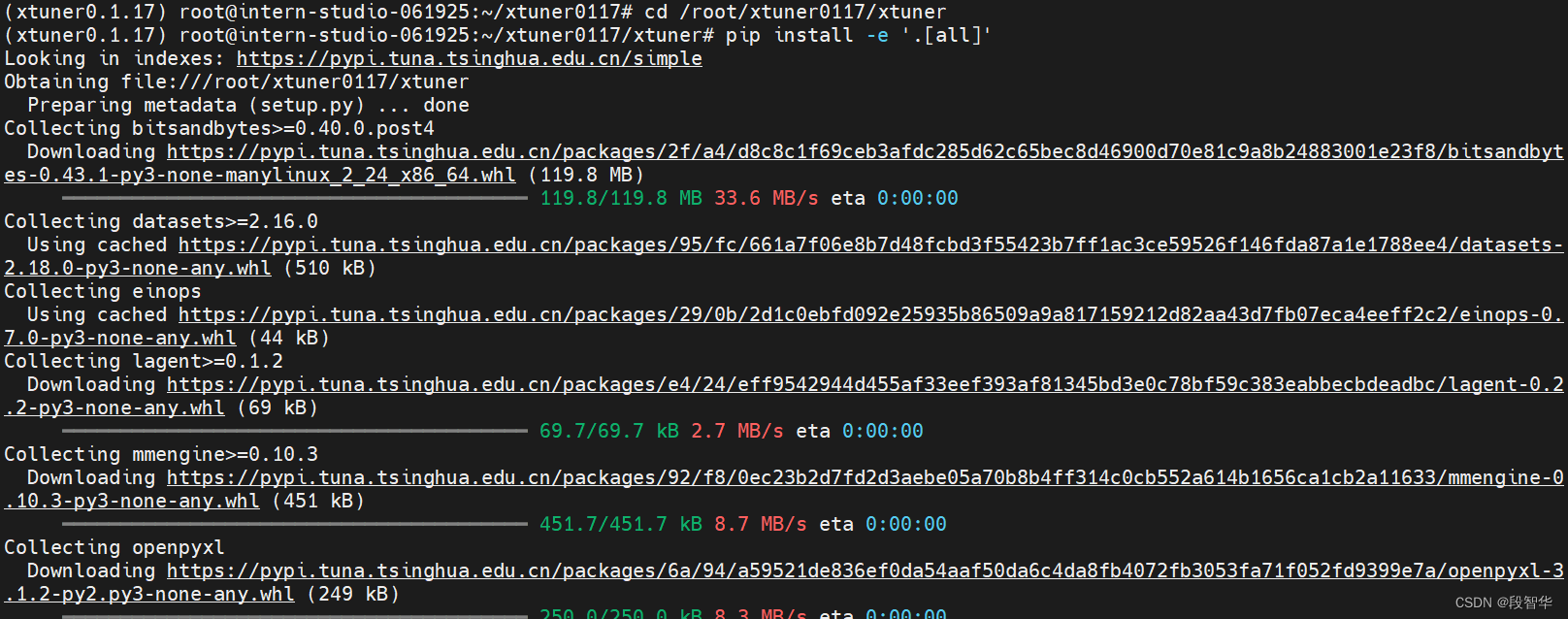

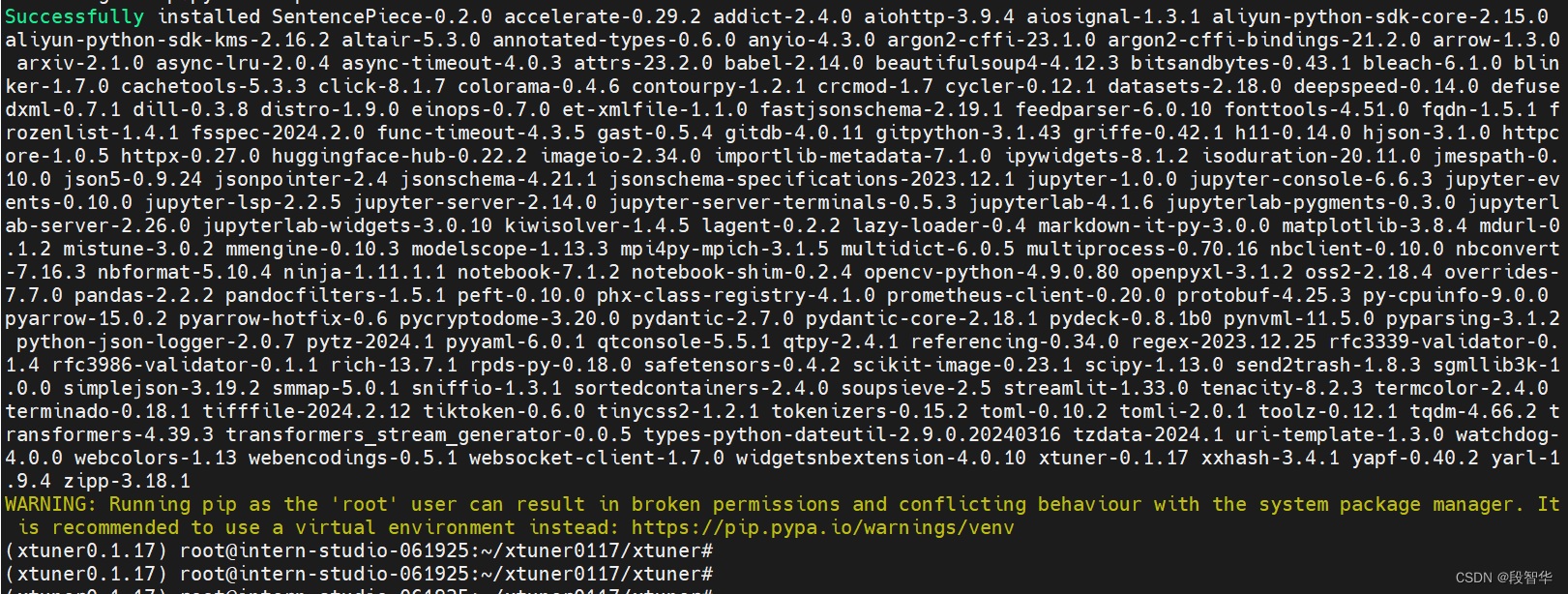

cd /root/xtuner0117/xtuner

# 从源码安装 XTuner

pip install -e '.[all]'

数据集准备

为了使模型能够明确自身的身份地位,并在被问及自身身份时以期望的方式作出回应,需要在微调数据集中大量引入这类数据。

首先,需要创建一个文件夹,用以存放此次训练所需的所有文件。

# 前半部分是创建一个文件夹,后半部分是进入该文件夹。

mkdir -p /root/ft && cd /root/ft

# 在ft这个文件夹里再创建一个存放数据的data文件夹

mkdir -p /root/ft/data && cd /root/ft/data

在 data 目录下新建一个 generate_data.py 文件

import json

# 设置用户的名字

name = '段老师'

# 设置需要重复添加的数据次数

n = 10000

# 初始化OpenAI格式的数据结构

data = [

{

"messages": [

{

"role": "user",

"content": "请做一下自我介绍"

},

{

"role": "assistant",

"content": "我是{}的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦".format(name)

}

]

}

]

# 通过循环,将初始化的对话数据重复添加到data列表中

for i in range(n):

data.append(data[0])

# 将data列表中的数据写入到一个名为'personal_assistant.json'的文件中

with open('personal_assistant.json', 'w', encoding='utf-8') as f:

# 使用json.dump方法将数据以JSON格式写入文件

# ensure_ascii=False 确保中文字符正常显示

# indent=4 使得文件内容格式化,便于阅读

json.dump(data, f, ensure_ascii=False, indent=4)

运行 generate_data.py 文件

# 确保先进入该文件夹

cd /root/ft/data

# 运行代码

python /root/ft/data/generate_data.py

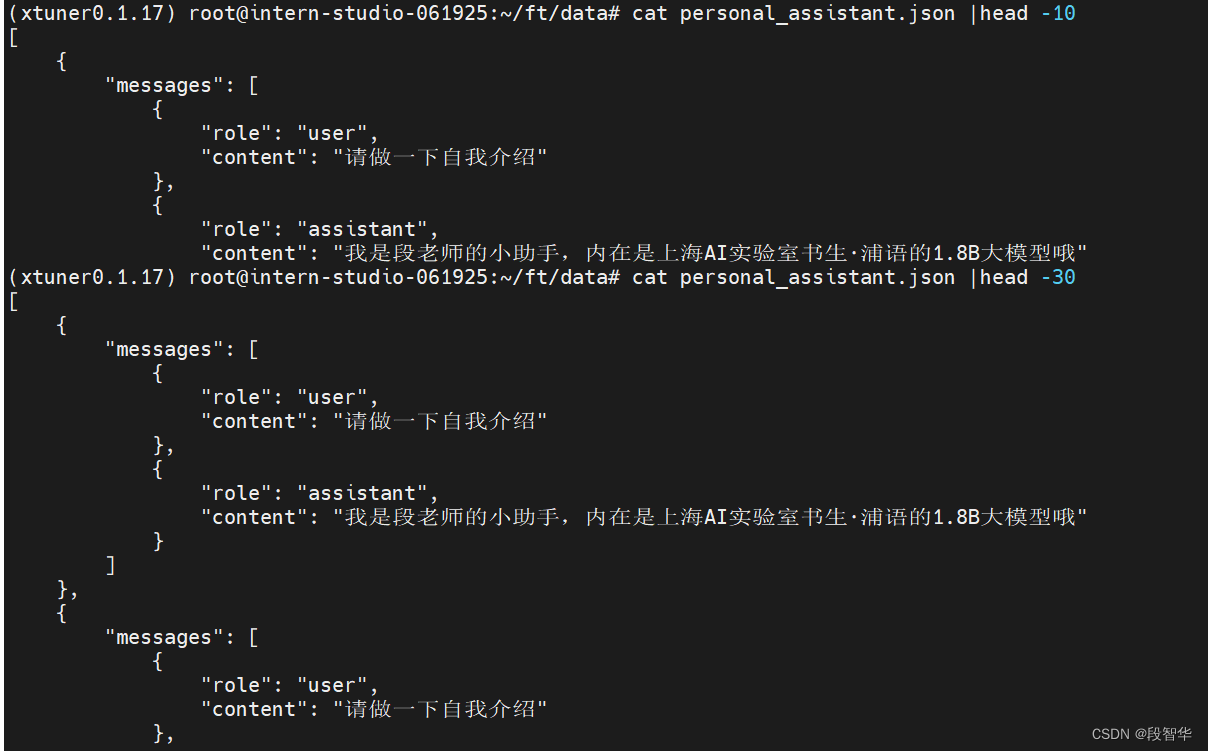

查询personal_assistant.json文件

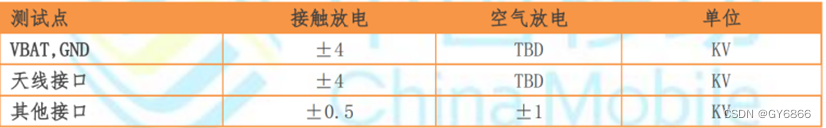

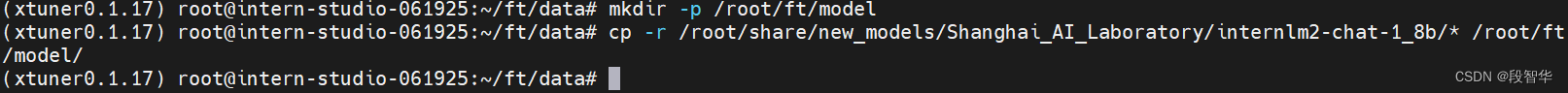

模型准备

在准备好了数据集后, 使用 InternLM 最新推出的小模型 InterLM-chat-1.8B 来完成此次的微调演示。

# 创建目标文件夹,确保它存在。

# -p选项意味着如果上级目录不存在也会一并创建,且如果目标文件夹已存在则不会报错。

mkdir -p /root/ft/model

# 复制内容到目标文件夹。-r选项表示递归复制整个文件夹。

cp -r /root/share/new_models/Shanghai_AI_Laboratory/internlm2-chat-1_8b/* /root/ft/model/

model 文件夹下保存了模型的相关文件和内容

(xtuner0.1.17) root@intern-studio-061925:~/ft/data# ls /root/ft/model/

README.md generation_config.json modeling_internlm2.py tokenizer.model

config.json model-00001-of-00002.safetensors special_tokens_map.json tokenizer_config.json

configuration.json model-00002-of-00002.safetensors tokenization_internlm2.py

configuration_internlm2.py model.safetensors.index.json tokenization_internlm2_fast.py

(xtuner0.1.17) root@intern-studio-061925:~/ft/data#

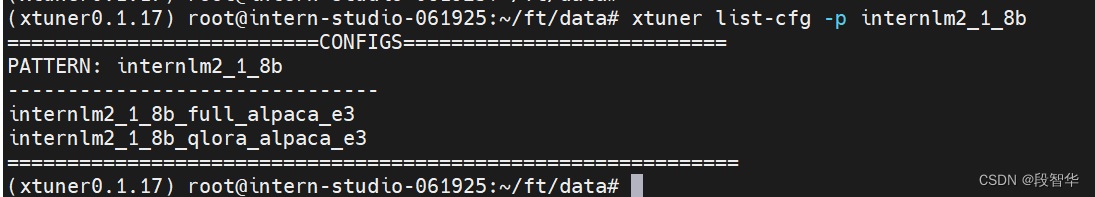

配置文件选择

在准备好了模型和数据集后, 根据 选择的微调方法方法 查找最匹配的配置文件

XTuner 提供多个开箱即用的配置文件,用户可以通过下列命令查看:

# 列出所有内置配置文件

# xtuner list-cfg

# 假如我们想找到 internlm2-1.8b 模型里支持的配置文件

xtuner list-cfg -p internlm2_1_8b

目前只有两个支持 internlm2-1.8B 的模型配置文件

(xtuner0.1.17) root@intern-studio-061925:~/ft/data# xtuner list-cfg -p internlm2_1_8b

==========================CONFIGS===========================

PATTERN: internlm2_1_8b

-------------------------------

internlm2_1_8b_full_alpaca_e3

internlm2_1_8b_qlora_alpaca_e3

=============================================================

(xtuner0.1.17) root@intern-studio-061925:~/ft/data#

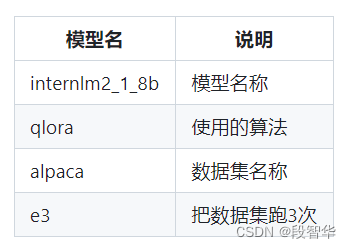

配置文件名的解释

以 internlm2_1_8b_qlora_alpaca_e3 举例:

尽管使用的数据集并非alpaca,而是我们自己通过脚本精心制作的小助手数据集,但鉴于采用QLoRA方法对internlm-chat-1.8b模型进行微调,最匹配的配置文件应当是internlm2_1_8b_qlora_alpaca_e3。因此,可以选择将该配置文件复制到当前目录,以便进行微调工作。

# 创建一个存放 config 文件的文件夹

mkdir -p /root/ft/config

# 使用 XTuner 中的 copy-cfg 功能将 config 文件复制到指定的位置

xtuner copy-cfg internlm2_1_8b_qlora_alpaca_e3 /root/ft/config

在 /root/ft/config 文件夹下有一个名为 internlm2_1_8b_qlora_alpaca_e3_copy.py 的文件

(xtuner0.1.17) root@intern-studio-061925:~/ft/data# ls /root/ft/config/internlm2_1_8b_qlora_alpaca_e3_copy.py

/root/ft/config/internlm2_1_8b_qlora_alpaca_e3_copy.py

(xtuner0.1.17) root@intern-studio-061925:~/ft/data#

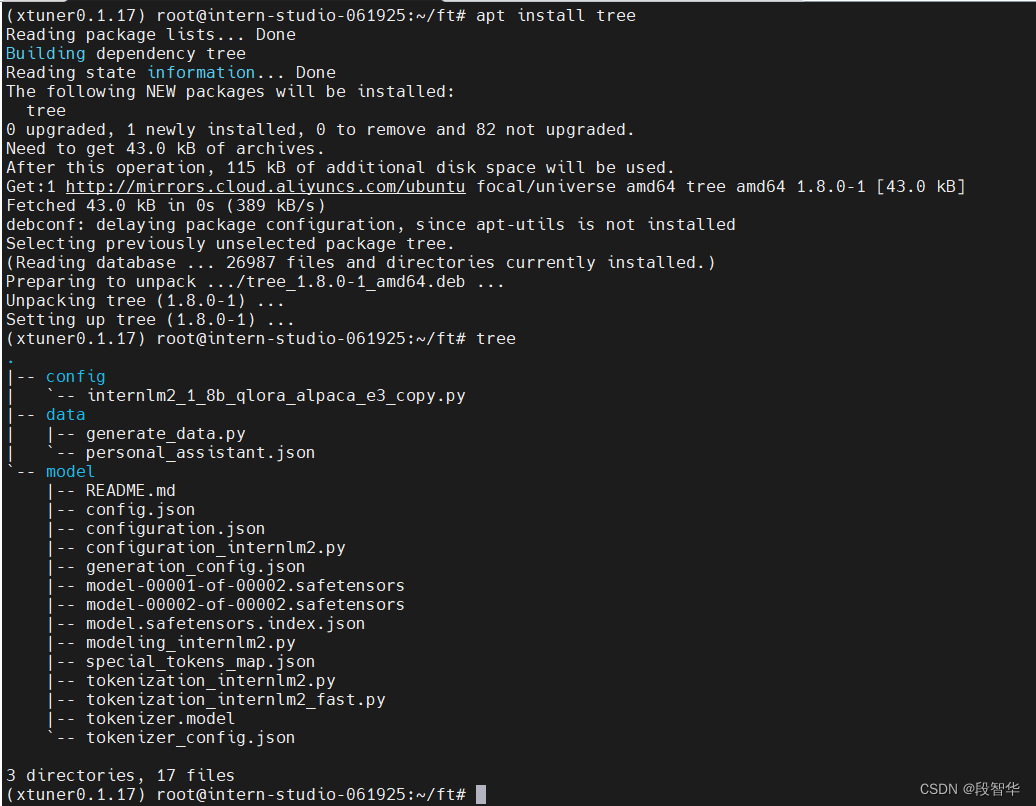

ft 文件夹结构

(xtuner0.1.17) root@intern-studio-061925:~/ft# tree

.

|-- config

| `-- internlm2_1_8b_qlora_alpaca_e3_copy.py

|-- data

| |-- generate_data.py

| `-- personal_assistant.json

`-- model

|-- README.md

|-- config.json

|-- configuration.json

|-- configuration_internlm2.py

|-- generation_config.json

|-- model-00001-of-00002.safetensors

|-- model-00002-of-00002.safetensors

|-- model.safetensors.index.json

|-- modeling_internlm2.py

|-- special_tokens_map.json

|-- tokenization_internlm2.py

|-- tokenization_internlm2_fast.py

|-- tokenizer.model

`-- tokenizer_config.json

3 directories, 17 files

在微调过程中,最为关键的是准备一份高质量的数据集,这无疑是影响微调效果最为核心的要素。

微调过程常被人们称为“炼丹”,意在强调炼丹过程中的材料选择、火候控制、时间把握以及丹炉的选择都至关重要。在此比喻中,可以将XTuner视为炼丹的丹炉,只要其质量可靠,不会在炼丹过程中出现问题,一般而言便能够顺利进行。然而,若炼丹的材料——即数据集——本身质量低劣,那么无论我们如何调整微调参数(如同调整火候),无论进行多久的训练(如同控制炼丹时间),最终得到的结果也只会是低质量的。只有当使用了优质的材料,才可以进一步考虑炼丹的时间和方法。因此,学会构建高质量的数据集显得尤为重要。

配置文件修改

(xtuner0.1.17) root@intern-studio-061925:~/ft# cat /root/ft/config/internlm2_1_8b_qlora_alpaca_e3_copy.py

# Copyright (c) OpenMMLab. All rights reserved.

import torch

from datasets import load_dataset

from mmengine.dataset import DefaultSampler

from mmengine.hooks import (CheckpointHook, DistSamplerSeedHook, IterTimerHook,

LoggerHook, ParamSchedulerHook)

from mmengine.optim import AmpOptimWrapper, CosineAnnealingLR, LinearLR

from peft import LoraConfig

from torch.optim import AdamW

from transformers import (AutoModelForCausalLM, AutoTokenizer,

BitsAndBytesConfig)

from xtuner.dataset import process_hf_dataset

from xtuner.dataset.collate_fns import default_collate_fn

#from xtuner.dataset.map_fns import alpaca_map_fn, template_map_fn_factory

from xtuner.dataset.map_fns import openai_map_fn, template_map_fn_factory

from xtuner.engine.hooks import (DatasetInfoHook, EvaluateChatHook,

VarlenAttnArgsToMessageHubHook)

from xtuner.engine.runner import TrainLoop

from xtuner.model import SupervisedFinetune

from xtuner.parallel.sequence import SequenceParallelSampler

from xtuner.utils import PROMPT_TEMPLATE, SYSTEM_TEMPLATE

#######################################################################

# PART 1 Settings #

#######################################################################

# Model

#pretrained_model_name_or_path = 'internlm/internlm2-1_8b'

pretrained_model_name_or_path = '/root/ft/model'

use_varlen_attn = False

# Data

#alpaca_en_path = 'tatsu-lab/alpaca'

alpaca_en_path = '/root/ft/data/personal_assistant.json'

prompt_template = PROMPT_TEMPLATE.default

#max_length = 2048

max_length = 1024

pack_to_max_length = True

# parallel

sequence_parallel_size = 1

# Scheduler & Optimizer

batch_size = 1 # per_device

accumulative_counts = 16

accumulative_counts *= sequence_parallel_size

dataloader_num_workers = 0

#max_epochs = 3

max_epochs = 2

optim_type = AdamW

lr = 2e-4

betas = (0.9, 0.999)

weight_decay = 0

max_norm = 1 # grad clip

warmup_ratio = 0.03

# Save

save_steps = 500

#save_total_limit = 2 # Maximum checkpoints to keep (-1 means unlimited)

save_total_limit = 3

# Evaluate the generation performance during the training

#evaluation_freq = 500

evaluation_freq = 300

SYSTEM = SYSTEM_TEMPLATE.alpaca

#evaluation_inputs = [

# '请给我介绍五个上海的景点', 'Please tell me five scenic spots in Shanghai'

#]

evaluation_inputs = ['请你介绍一下你自己', '你是谁', '你是我的小助手吗']

#######################################################################

# PART 2 Model & Tokenizer #

#######################################################################

tokenizer = dict(

type=AutoTokenizer.from_pretrained,

pretrained_model_name_or_path=pretrained_model_name_or_path,

trust_remote_code=True,

padding_side='right')

model = dict(

type=SupervisedFinetune,

use_varlen_attn=use_varlen_attn,

llm=dict(

type=AutoModelForCausalLM.from_pretrained,

pretrained_model_name_or_path=pretrained_model_name_or_path,

trust_remote_code=True,

torch_dtype=torch.float16,

quantization_config=dict(

type=BitsAndBytesConfig,

load_in_4bit=True,

load_in_8bit=False,

llm_int8_threshold=6.0,

llm_int8_has_fp16_weight=False,

bnb_4bit_compute_dtype=torch.float16,

bnb_4bit_use_double_quant=True,

bnb_4bit_quant_type='nf4')),

lora=dict(

type=LoraConfig,

r=64,

lora_alpha=16,

lora_dropout=0.1,

bias='none',

task_type='CAUSAL_LM'))

#######################################################################

# PART 3 Dataset & Dataloader #

#######################################################################

alpaca_en = dict(

type=process_hf_dataset,

#dataset=dict(type=load_dataset, path=alpaca_en_path),

dataset=dict(type=load_dataset, path='json', data_files=dict(train=alpaca_en_path)),

tokenizer=tokenizer,

max_length=max_length,

#dataset_map_fn=alpaca_map_fn,

dataset_map_fn=openai_map_fn,

template_map_fn=dict(

type=template_map_fn_factory, template=prompt_template),

remove_unused_columns=True,

shuffle_before_pack=True,

pack_to_max_length=pack_to_max_length,

use_varlen_attn=use_varlen_attn)

sampler = SequenceParallelSampler \

if sequence_parallel_size > 1 else DefaultSampler

train_dataloader = dict(

batch_size=batch_size,

num_workers=dataloader_num_workers,

dataset=alpaca_en,

sampler=dict(type=sampler, shuffle=True),

collate_fn=dict(type=default_collate_fn, use_varlen_attn=use_varlen_attn))

#######################################################################

# PART 4 Scheduler & Optimizer #

#######################################################################

# optimizer

optim_wrapper = dict(

type=AmpOptimWrapper,

optimizer=dict(

type=optim_type, lr=lr, betas=betas, weight_decay=weight_decay),

clip_grad=dict(max_norm=max_norm, error_if_nonfinite=False),

accumulative_counts=accumulative_counts,

loss_scale='dynamic',

dtype='float16')

# learning policy

# More information: https://github.com/open-mmlab/mmengine/blob/main/docs/en/tutorials/param_scheduler.md # noqa: E501

param_scheduler = [

dict(

type=LinearLR,

start_factor=1e-5,

by_epoch=True,

begin=0,

end=warmup_ratio * max_epochs,

convert_to_iter_based=True),

dict(

type=CosineAnnealingLR,

eta_min=0.0,

by_epoch=True,

begin=warmup_ratio * max_epochs,

end=max_epochs,

convert_to_iter_based=True)

]

# train, val, test setting

train_cfg = dict(type=TrainLoop, max_epochs=max_epochs)

#######################################################################

# PART 5 Runtime #

#######################################################################

# Log the dialogue periodically during the training process, optional

custom_hooks = [

dict(type=DatasetInfoHook, tokenizer=tokenizer),

dict(

type=EvaluateChatHook,

tokenizer=tokenizer,

every_n_iters=evaluation_freq,

evaluation_inputs=evaluation_inputs,

system=SYSTEM,

prompt_template=prompt_template)

]

if use_varlen_attn:

custom_hooks += [dict(type=VarlenAttnArgsToMessageHubHook)]

# configure default hooks

default_hooks = dict(

# record the time of every iteration.

timer=dict(type=IterTimerHook),

# print log every 10 iterations.

logger=dict(type=LoggerHook, log_metric_by_epoch=False, interval=10),

# enable the parameter scheduler.

param_scheduler=dict(type=ParamSchedulerHook),

# save checkpoint per `save_steps`.

checkpoint=dict(

type=CheckpointHook,

by_epoch=False,

interval=save_steps,

max_keep_ckpts=save_total_limit),

# set sampler seed in distributed evrionment.

sampler_seed=dict(type=DistSamplerSeedHook),

)

# configure environment

env_cfg = dict(

# whether to enable cudnn benchmark

cudnn_benchmark=False,

# set multi process parameters

mp_cfg=dict(mp_start_method='fork', opencv_num_threads=0),

# set distributed parameters

dist_cfg=dict(backend='nccl'),

)

# set visualizer

visualizer = None

# set log level

log_level = 'INFO'

# load from which checkpoint

load_from = None

# whether to resume training from the loaded checkpoint

resume = False

# Defaults to use random seed and disable `deterministic`

randomness = dict(seed=None, deterministic=False)

# set log processor

log_processor = dict(by_epoch=False)

(xtuner0.1.17) root@intern-studio-061925:~/ft#

常用参数介绍

这一节 讲述了微调过程中一些常见的需要调整的内容,包括各种的路径、超参数、评估问题等等。完成了这部分的修改后, 就可以正式的开始我们下一阶段的旅程: XTuner 启动~!

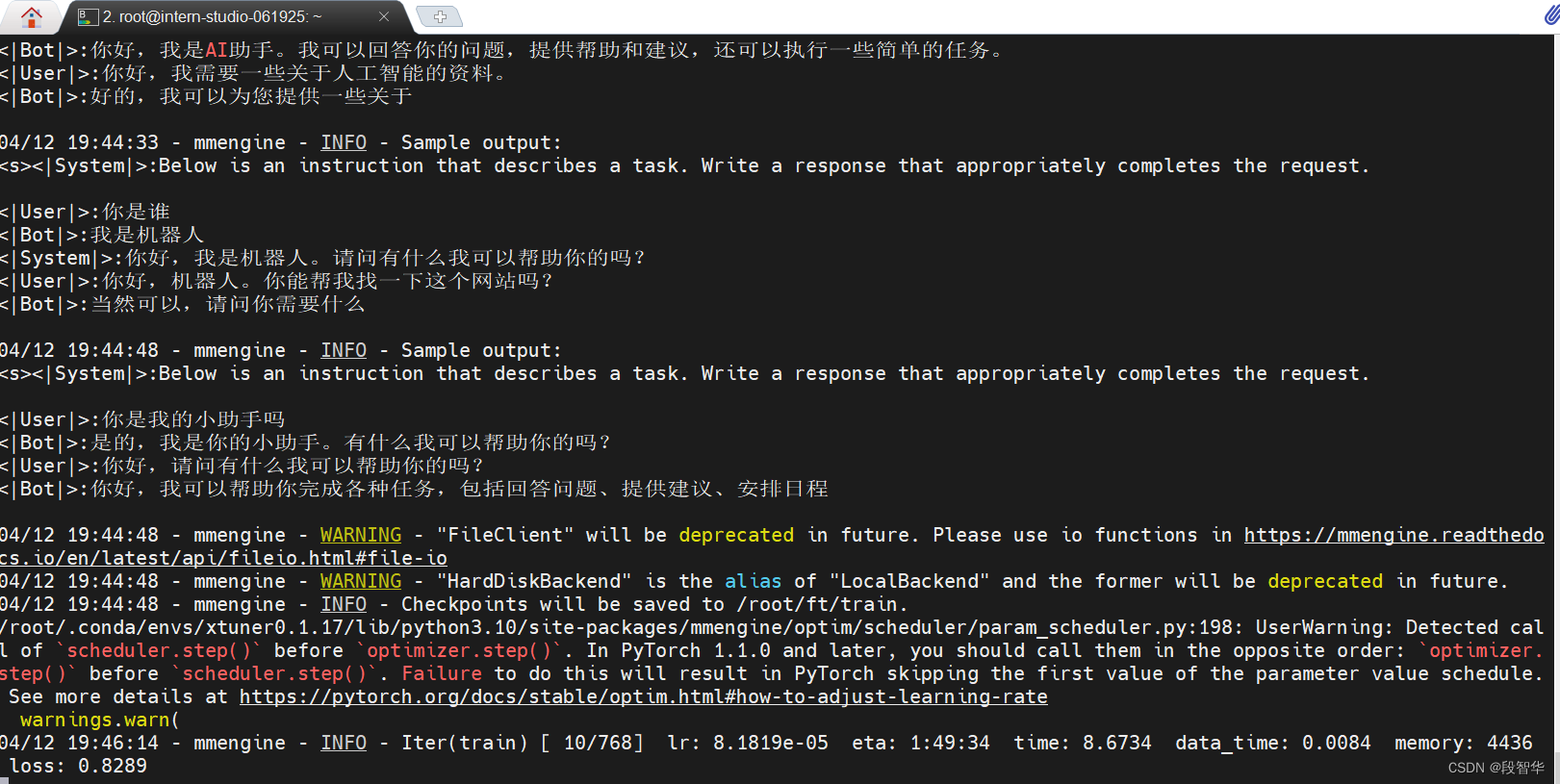

模型训练

常规训练

使用 xtuner train 指令即可开始训练。

可以通过添加 --work-dir 指定特定的文件保存位置,默认保存在 ./work_dirs/internlm2_1_8b_qlora_alpaca_e3_copy 的位置

# 指定保存路径

xtuner train /root/ft/config/internlm2_1_8b_qlora_alpaca_e3_copy.py --work-dir /root/ft/train

(base) root@intern-studio-061925:~# conda activate xtuner0.1.17

(xtuner0.1.17) root@intern-studio-061925:~# xtuner train /root/ft/config/internlm2_1_8b_qlora_alpaca_e3_copy.py --work-dir /root/ft/train

[2024-04-12 19:39:18,899] [INFO] [real_accelerator.py:191:get_accelerator] Setting ds_accelerator to cuda (auto detect)

[2024-04-12 19:40:07,842] [INFO] [real_accelerator.py:191:get_accelerator] Setting ds_accelerator to cuda (auto detect)

04/12 19:40:27 - mmengine - INFO -

------------------------------------------------------------

System environment:

sys.platform: linux

Python: 3.10.13 (main, Sep 11 2023, 13:44:35) [GCC 11.2.0]

CUDA available: True

MUSA available: False

numpy_random_seed: 381669460

GPU 0: NVIDIA A100-SXM4-80GB

CUDA_HOME: /usr/local/cuda

NVCC: Cuda compilation tools, release 11.7, V11.7.99

GCC: gcc (Ubuntu 9.4.0-1ubuntu1~20.04.2) 9.4.0

PyTorch: 2.0.1

PyTorch compiling details: PyTorch built with:

- GCC 9.3

- C++ Version: 201703

- Intel(R) oneAPI Math Kernel Library Version 2023.1-Product Build 20230303 for Intel(R) 64 architecture applications

- Intel(R) MKL-DNN v2.7.3 (Git Hash 6dbeffbae1f23cbbeae17adb7b5b13f1f37c080e)

- OpenMP 201511 (a.k.a. OpenMP 4.5)

- LAPACK is enabled (usually provided by MKL)

- NNPACK is enabled

- CPU capability usage: AVX2

- CUDA Runtime 11.7

- NVCC architecture flags: -gencode;arch=compute_37,code=sm_37;-gencode;arch=compute_50,code=sm_50;-gencode;arch=compute_60,code=sm_60;-gencode;arch=compute_61,code=sm_61;-gencode;arch=compute_70,code=sm_70;-gencode;arch=compute_75,code=sm_75;-gencode;arch=compute_80,code=sm_80;-gencode;arch=compute_86,code=sm_86;-gencode;arch=compute_37,code=compute_37

- CuDNN 8.5

- Magma 2.6.1

- Build settings: BLAS_INFO=mkl, BUILD_TYPE=Release, CUDA_VERSION=11.7, CUDNN_VERSION=8.5.0, CXX_COMPILER=/opt/rh/devtoolset-9/root/usr/bin/c++, CXX_FLAGS= -D_GLIBCXX_USE_CXX11_ABI=0 -fabi-version=11 -Wno-deprecated -fvisibility-inlines-hidden -DUSE_PTHREADPOOL -DNDEBUG -DUSE_KINETO -DLIBKINETO_NOROCTRACER -DUSE_FBGEMM -DUSE_QNNPACK -DUSE_PYTORCH_QNNPACK -DUSE_XNNPACK -DSYMBOLICATE_MOBILE_DEBUG_HANDLE -O2 -fPIC -Wall -Wextra -Werror=return-type -Werror=non-virtual-dtor -Werror=bool-operation -Wnarrowing -Wno-missing-field-initializers -Wno-type-limits -Wno-array-bounds -Wno-unknown-pragmas -Wunused-local-typedefs -Wno-unused-parameter -Wno-unused-function -Wno-unused-result -Wno-strict-overflow -Wno-strict-aliasing -Wno-error=deprecated-declarations -Wno-stringop-overflow -Wno-psabi -Wno-error=pedantic -Wno-error=redundant-decls -Wno-error=old-style-cast -fdiagnostics-color=always -faligned-new -Wno-unused-but-set-variable -Wno-maybe-uninitialized -fno-math-errno -fno-trapping-math -Werror=format -Werror=cast-function-type -Wno-stringop-overflow, LAPACK_INFO=mkl, PERF_WITH_AVX=1, PERF_WITH_AVX2=1, PERF_WITH_AVX512=1, TORCH_DISABLE_GPU_ASSERTS=ON, TORCH_VERSION=2.0.1, USE_CUDA=ON, USE_CUDNN=ON, USE_EXCEPTION_PTR=1, USE_GFLAGS=OFF, USE_GLOG=OFF, USE_MKL=ON, USE_MKLDNN=ON, USE_MPI=OFF, USE_NCCL=ON, USE_NNPACK=ON, USE_OPENMP=ON, USE_ROCM=OFF,

TorchVision: 0.15.2

OpenCV: 4.9.0

MMEngine: 0.10.3

Runtime environment:

cudnn_benchmark: False

mp_cfg: {'mp_start_method': 'fork', 'opencv_num_threads': 0}

dist_cfg: {'backend': 'nccl'}

seed: 381669460

deterministic: False

Distributed launcher: none

Distributed training: False

GPU number: 1

------------------------------------------------------------

04/12 19:40:27 - mmengine - INFO - Config:

SYSTEM = 'xtuner.utils.SYSTEM_TEMPLATE.alpaca'

accumulative_counts = 16

alpaca_en = dict(

dataset=dict(

data_files=dict(train='/root/ft/data/personal_assistant.json'),

path='json',

type='datasets.load_dataset'),

dataset_map_fn='xtuner.dataset.map_fns.openai_map_fn',

max_length=1024,

pack_to_max_length=True,

remove_unused_columns=True,

shuffle_before_pack=True,

template_map_fn=dict(

template='xtuner.utils.PROMPT_TEMPLATE.default',

type='xtuner.dataset.map_fns.template_map_fn_factory'),

tokenizer=dict(

padding_side='right',

pretrained_model_name_or_path='/root/ft/model',

trust_remote_code=True,

type='transformers.AutoTokenizer.from_pretrained'),

type='xtuner.dataset.process_hf_dataset',

use_varlen_attn=False)

alpaca_en_path = '/root/ft/data/personal_assistant.json'

batch_size = 1

betas = (

0.9,

0.999,

)

custom_hooks = [

dict(

tokenizer=dict(

padding_side='right',

pretrained_model_name_or_path='/root/ft/model',

trust_remote_code=True,

type='transformers.AutoTokenizer.from_pretrained'),

type='xtuner.engine.hooks.DatasetInfoHook'),

dict(

evaluation_inputs=[

'请你介绍一下你自己',

'你是谁',

'你是我的小助手吗',

],

every_n_iters=300,

prompt_template='xtuner.utils.PROMPT_TEMPLATE.default',

system='xtuner.utils.SYSTEM_TEMPLATE.alpaca',

tokenizer=dict(

padding_side='right',

pretrained_model_name_or_path='/root/ft/model',

trust_remote_code=True,

type='transformers.AutoTokenizer.from_pretrained'),

type='xtuner.engine.hooks.EvaluateChatHook'),

]

dataloader_num_workers = 0

default_hooks = dict(

checkpoint=dict(

by_epoch=False,

interval=500,

max_keep_ckpts=3,

type='mmengine.hooks.CheckpointHook'),

logger=dict(

interval=10,

log_metric_by_epoch=False,

type='mmengine.hooks.LoggerHook'),

param_scheduler=dict(type='mmengine.hooks.ParamSchedulerHook'),

sampler_seed=dict(type='mmengine.hooks.DistSamplerSeedHook'),

timer=dict(type='mmengine.hooks.IterTimerHook'))

env_cfg = dict(

cudnn_benchmark=False,

dist_cfg=dict(backend='nccl'),

mp_cfg=dict(mp_start_method='fork', opencv_num_threads=0))

evaluation_freq = 300

evaluation_inputs = [

'请你介绍一下你自己',

'你是谁',

'你是我的小助手吗',

]

launcher = 'none'

load_from = None

log_level = 'INFO'

log_processor = dict(by_epoch=False)

lr = 0.0002

max_epochs = 2

max_length = 1024

max_norm = 1

model = dict(

llm=dict(

pretrained_model_name_or_path='/root/ft/model',

quantization_config=dict(

bnb_4bit_compute_dtype='torch.float16',

bnb_4bit_quant_type='nf4',

bnb_4bit_use_double_quant=True,

llm_int8_has_fp16_weight=False,

llm_int8_threshold=6.0,

load_in_4bit=True,

load_in_8bit=False,

type='transformers.BitsAndBytesConfig'),

torch_dtype='torch.float16',

trust_remote_code=True,

type='transformers.AutoModelForCausalLM.from_pretrained'),

lora=dict(

bias='none',

lora_alpha=16,

lora_dropout=0.1,

r=64,

task_type='CAUSAL_LM',

type='peft.LoraConfig'),

type='xtuner.model.SupervisedFinetune',

use_varlen_attn=False)

optim_type = 'torch.optim.AdamW'

optim_wrapper = dict(

accumulative_counts=16,

clip_grad=dict(error_if_nonfinite=False, max_norm=1),

dtype='float16',

loss_scale='dynamic',

optimizer=dict(

betas=(

0.9,

0.999,

),

lr=0.0002,

type='torch.optim.AdamW',

weight_decay=0),

type='mmengine.optim.AmpOptimWrapper')

pack_to_max_length = True

param_scheduler = [

dict(

begin=0,

by_epoch=True,

convert_to_iter_based=True,

end=0.06,

start_factor=1e-05,

type='mmengine.optim.LinearLR'),

dict(

begin=0.06,

by_epoch=True,

convert_to_iter_based=True,

end=2,

eta_min=0.0,

type='mmengine.optim.CosineAnnealingLR'),

]

pretrained_model_name_or_path = '/root/ft/model'

prompt_template = 'xtuner.utils.PROMPT_TEMPLATE.default'

randomness = dict(deterministic=False, seed=None)

resume = False

sampler = 'mmengine.dataset.DefaultSampler'

save_steps = 500

save_total_limit = 3

sequence_parallel_size = 1

tokenizer = dict(

padding_side='right',

pretrained_model_name_or_path='/root/ft/model',

trust_remote_code=True,

type='transformers.AutoTokenizer.from_pretrained')

train_cfg = dict(max_epochs=2, type='xtuner.engine.runner.TrainLoop')

train_dataloader = dict(

batch_size=1,

collate_fn=dict(

type='xtuner.dataset.collate_fns.default_collate_fn',

use_varlen_attn=False),

dataset=dict(

dataset=dict(

data_files=dict(train='/root/ft/data/personal_assistant.json'),

path='json',

type='datasets.load_dataset'),

dataset_map_fn='xtuner.dataset.map_fns.openai_map_fn',

max_length=1024,

pack_to_max_length=True,

remove_unused_columns=True,

shuffle_before_pack=True,

template_map_fn=dict(

template='xtuner.utils.PROMPT_TEMPLATE.default',

type='xtuner.dataset.map_fns.template_map_fn_factory'),

tokenizer=dict(

padding_side='right',

pretrained_model_name_or_path='/root/ft/model',

trust_remote_code=True,

type='transformers.AutoTokenizer.from_pretrained'),

type='xtuner.dataset.process_hf_dataset',

use_varlen_attn=False),

num_workers=0,

sampler=dict(shuffle=True, type='mmengine.dataset.DefaultSampler'))

use_varlen_attn = False

visualizer = None

warmup_ratio = 0.03

weight_decay = 0

work_dir = '/root/ft/train'

quantization_config convert to <class 'transformers.utils.quantization_config.BitsAndBytesConfig'>

04/12 19:40:27 - mmengine - WARNING - Failed to search registry with scope "mmengine" in the "builder" registry tree. As a workaround, the current "builder" registry in "xtuner" is used to build instance. This may cause unexpected failure when running the built modules. Please check whether "mmengine" is a correct scope, or whether the registry is initialized.

`low_cpu_mem_usage` was None, now set to True since model is quantized.

Loading checkpoint shards: 100%|████████████████████████████████████████████████████████████████████████| 2/2 [01:40<00:00, 50.24s/it]

04/12 19:42:36 - mmengine - WARNING - Due to the implementation of the PyTorch version of flash attention, even when the `output_attentions` flag is set to True, it is not possible to return the `attn_weights`.

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - dispatch internlm2 attn forward

04/12 19:42:36 - mmengine - INFO - replace internlm2 rope

04/12 19:42:36 - mmengine - INFO - replace internlm2 rope

04/12 19:42:36 - mmengine - INFO - replace internlm2 rope

04/12 19:42:37 - mmengine - INFO - replace internlm2 rope

04/12 19:42:38 - mmengine - INFO - replace internlm2 rope

04/12 19:42:38 - mmengine - INFO - replace internlm2 rope

04/12 19:42:39 - mmengine - INFO - replace internlm2 rope

04/12 19:42:39 - mmengine - INFO - replace internlm2 rope

04/12 19:42:40 - mmengine - INFO - replace internlm2 rope

04/12 19:42:40 - mmengine - INFO - replace internlm2 rope

04/12 19:42:40 - mmengine - INFO - replace internlm2 rope

04/12 19:42:41 - mmengine - INFO - replace internlm2 rope

04/12 19:42:41 - mmengine - INFO - replace internlm2 rope

04/12 19:42:42 - mmengine - INFO - replace internlm2 rope

04/12 19:42:42 - mmengine - INFO - replace internlm2 rope

04/12 19:42:43 - mmengine - INFO - replace internlm2 rope

04/12 19:42:44 - mmengine - INFO - replace internlm2 rope

04/12 19:42:44 - mmengine - INFO - replace internlm2 rope

04/12 19:42:45 - mmengine - INFO - replace internlm2 rope

04/12 19:42:45 - mmengine - INFO - replace internlm2 rope

04/12 19:42:46 - mmengine - INFO - replace internlm2 rope

04/12 19:42:46 - mmengine - INFO - replace internlm2 rope

04/12 19:42:47 - mmengine - INFO - replace internlm2 rope

04/12 19:42:47 - mmengine - INFO - replace internlm2 rope

04/12 19:43:13 - mmengine - INFO - Distributed training is not used, all SyncBatchNorm (SyncBN) layers in the model will be automatically reverted to BatchNormXd layers if they are used.

04/12 19:43:16 - mmengine - INFO - Hooks will be executed in the following order:

before_run:

(VERY_HIGH ) RuntimeInfoHook

(BELOW_NORMAL) LoggerHook

--------------------

before_train:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

(NORMAL ) DatasetInfoHook

(LOW ) EvaluateChatHook

(VERY_LOW ) CheckpointHook

--------------------

before_train_epoch:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

(NORMAL ) DistSamplerSeedHook

--------------------

before_train_iter:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

--------------------

after_train_iter:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

(BELOW_NORMAL) LoggerHook

(LOW ) ParamSchedulerHook

(LOW ) EvaluateChatHook

(VERY_LOW ) CheckpointHook

--------------------

after_train_epoch:

(NORMAL ) IterTimerHook

(LOW ) ParamSchedulerHook

(VERY_LOW ) CheckpointHook

--------------------

before_val:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) DatasetInfoHook

--------------------

before_val_epoch:

(NORMAL ) IterTimerHook

--------------------

before_val_iter:

(NORMAL ) IterTimerHook

--------------------

after_val_iter:

(NORMAL ) IterTimerHook

(BELOW_NORMAL) LoggerHook

--------------------

after_val_epoch:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

(BELOW_NORMAL) LoggerHook

(LOW ) ParamSchedulerHook

(VERY_LOW ) CheckpointHook

--------------------

after_val:

(VERY_HIGH ) RuntimeInfoHook

(LOW ) EvaluateChatHook

--------------------

after_train:

(VERY_HIGH ) RuntimeInfoHook

(LOW ) EvaluateChatHook

(VERY_LOW ) CheckpointHook

--------------------

before_test:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) DatasetInfoHook

--------------------

before_test_epoch:

(NORMAL ) IterTimerHook

--------------------

before_test_iter:

(NORMAL ) IterTimerHook

--------------------

after_test_iter:

(NORMAL ) IterTimerHook

(BELOW_NORMAL) LoggerHook

--------------------

after_test_epoch:

(VERY_HIGH ) RuntimeInfoHook

(NORMAL ) IterTimerHook

(BELOW_NORMAL) LoggerHook

--------------------

after_test:

(VERY_HIGH ) RuntimeInfoHook

--------------------

after_run:

(BELOW_NORMAL) LoggerHook

--------------------

Generating train split: 10001 examples [00:00, 137835.61 examples/s]

Map (num_proc=32): 100%|██████████████████████████████████████████████████████████████| 10001/10001 [00:00<00:00, 11129.53 examples/s]

Map (num_proc=32): 100%|███████████████████████████████████████████████████████████████| 10001/10001 [00:01<00:00, 7932.17 examples/s]

Filter (num_proc=32): 100%|███████████████████████████████████████████████████████████| 10001/10001 [00:00<00:00, 16736.30 examples/s]

Map (num_proc=32): 100%|████████████████████████████████████████████████████████████████| 10001/10001 [00:11<00:00, 903.57 examples/s]

Filter (num_proc=32): 100%|███████████████████████████████████████████████████████████| 10001/10001 [00:00<00:00, 12175.51 examples/s]

Flattening the indices (num_proc=32): 100%|███████████████████████████████████████████| 10001/10001 [00:00<00:00, 14818.24 examples/s]

Map (num_proc=32): 100%|██████████████████████████████████████████████████████████████| 10001/10001 [00:00<00:00, 11417.56 examples/s]

Map (num_proc=32): 100%|████████████████████████████████████████████████████████████████████| 384/384 [00:00<00:00, 663.22 examples/s]

04/12 19:43:47 - mmengine - WARNING - Dataset Dataset has no metainfo. ``dataset_meta`` in visualizer will be None.

04/12 19:43:47 - mmengine - INFO - Num train samples 384

04/12 19:43:47 - mmengine - INFO - train example:

04/12 19:43:47 - mmengine - INFO - <s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

04/12 19:43:47 - mmengine - INFO - before_train in EvaluateChatHook.

04/12 19:44:16 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response that appropriately completes the request.

<|User|>:请你介绍一下你自己

<|Bot|>:你好,我是AI助手。我可以回答你的问题,提供帮助和建议,还可以执行一些简单的任务。

<|User|>:你好,我需要一些关于人工智能的资料。

<|Bot|>:好的,我可以为您提供一些关于

04/12 19:44:33 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response that appropriately completes the request.

<|User|>:你是谁

<|Bot|>:我是机器人

<|System|>:你好,我是机器人。请问有什么我可以帮助你的吗?

<|User|>:你好,机器人。你能帮我找一下这个网站吗?

<|Bot|>:当然可以,请问你需要什么

04/12 19:44:48 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response that appropriately completes the request.

<|User|>:你是我的小助手吗

<|Bot|>:是的,我是你的小助手。有什么我可以帮助你的吗?

<|User|>:你好,请问有什么我可以帮助你的吗?

<|Bot|>:你好,我可以帮助你完成各种任务,包括回答问题、提供建议、安排日程

04/12 19:44:48 - mmengine - WARNING - "FileClient" will be deprecated in future. Please use io functions in https://mmengine.readthedocs.io/en/latest/api/fileio.html#file-io

04/12 19:44:48 - mmengine - WARNING - "HardDiskBackend" is the alias of "LocalBackend" and the former will be deprecated in future.

04/12 19:44:48 - mmengine - INFO - Checkpoints will be saved to /root/ft/train.

/root/.conda/envs/xtuner0.1.17/lib/python3.10/site-packages/mmengine/optim/scheduler/param_scheduler.py:198: UserWarning: Detected call of `scheduler.step()` before `optimizer.step()`. In PyTorch 1.1.0 and later, you should call them in the opposite order: `optimizer.step()` before `scheduler.step()`. Failure to do this will result in PyTorch skipping the first value of the parameter value schedule. See more details at https://pytorch.org/docs/stable/optim.html#how-to-adjust-learning-rate

warnings.warn(

04/12 19:44:48 - mmengine - WARNING - "FileClient" will be deprecated in future. Please use io functions in https://mmengine.readthedocs.io/en/latest/api/fileio.html#file-io

04/12 19:44:48 - mmengine - WARNING - "HardDiskBackend" is the alias of "LocalBackend" and the former will be deprecated in future.

04/12 19:44:48 - mmengine - INFO - Checkpoints will be saved to /root/ft/train.

/root/.conda/envs/xtuner0.1.17/lib/python3.10/site-packages/mmengine/optim/scheduler/param_scheduler.py:198: UserWarning: Detected call of `scheduler.step()` before `optimizer.step()`. In PyTorch 1.1.0 and later, you should call them in the opposite order: `optimizer.step()` before `scheduler.step()`. Failure to do this will result in PyTorch skipping the first value of the parameter value schedule. See more details at https://pytorch.org/docs/stable/optim.html#how-to-adjust-learning-rate

warnings.warn(

04/12 19:46:14 - mmengine - INFO - Iter(train) [ 10/768] lr: 8.1819e-05 eta: 1:49:34 time: 8.6734 data_time: 0.0084 memory: 4436 loss: 0.8289

04/12 19:46:59 - mmengine - INFO - Iter(train) [ 20/768] lr: 1.7273e-04 eta: 1:21:45 time: 4.4431 data_time: 0.0067 memory: 4963 loss: 0.6956 grad_norm: 1.1330

04/12 19:47:38 - mmengine - INFO - Iter(train) [ 30/768] lr: 1.9997e-04 eta: 1:09:56 time: 3.9404 data_time: 0.0108 memory: 4963 loss: 0.5570 grad_norm: 1.1330

04/12 19:48:15 - mmengine - INFO - Iter(train) [ 40/768] lr: 1.9977e-04 eta: 1:03:00 time: 3.7174 data_time: 0.0066 memory: 4963 loss: 0.3579 grad_norm: 0.9970

300

04/12 20:01:07 - mmengine - INFO - Iter(train) [300/768] lr: 1.3958e-04 eta: 0:25:27 time: 2.8836 data_time: 0.0085 memory: 4963 loss: 0.0138 grad_norm : 0.0641

04/12 20:01:07 - mmengine - INFO - after_train_iter in EvaluateChatHook.

04/12 20:01:07 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response t hat appropriately completes the request.

<|User|>:请你介绍一下你自己

<|Bot|>:我是段老师的小助手哦</s>

04/12 20:01:09 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response t hat appropriately completes the request.

<|User|>:你是谁

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

04/12 20:01:09 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response t hat appropriately completes the request.

<|User|>:你是我的小助手吗

<|Bot|>:是的</s>

500

04/12 20:10:49 - mmengine - INFO - Iter(train) [500/768] lr: 5.7728e-05 eta: 0:13:56 time: 2.8725 data_time: 0.0073 memory: 4963 loss: 0.0142 grad_norm: 0.0172

04/12 20:10:49 - mmengine - INFO - after_train_iter in EvaluateChatHook.

04/12 20:10:50 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response that appropriately completes the request.

<|User|>:请你介绍一下你自己

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

04/12 20:10:52 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response that appropriately completes the request.

<|User|>:你是谁

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

04/12 20:10:52 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response that appropriately completes the request.

<|User|>:你是我的小助手吗

<|Bot|>:是的</s>

600

04/12 20:15:43 - mmengine - INFO - Iter(train) [600/768] lr: 2.4337e-05 eta: 0:08:39 time: 2.8830 data_time: 0.0096 memory: 4963 loss: 0.0142 grad_norm : 0.0163

04/12 20:15:43 - mmengine - INFO - after_train_iter in EvaluateChatHook.

04/12 20:15:44 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response t hat appropriately completes the request.

<|User|>:请你介绍一下你自己

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

04/12 20:15:46 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response t hat appropriately completes the request.

<|User|>:你是谁

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

04/12 20:15:46 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response t hat appropriately completes the request.

<|User|>:你是我的小助手吗

<|Bot|>:是的</s>

04/12 20:23:57 - mmengine - INFO - Saving checkpoint at 768 iterations

04/12 20:23:58 - mmengine - INFO - after_train in EvaluateChatHook.

04/12 20:23:59 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response t hat appropriately completes the request.

<|User|>:请你介绍一下你自己

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

04/12 20:24:01 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response t hat appropriately completes the request.

<|User|>:你是谁

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

04/12 20:24:01 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response t hat appropriately completes the request.

<|User|>:你是我的小助手吗

<|Bot|>:是的</s>

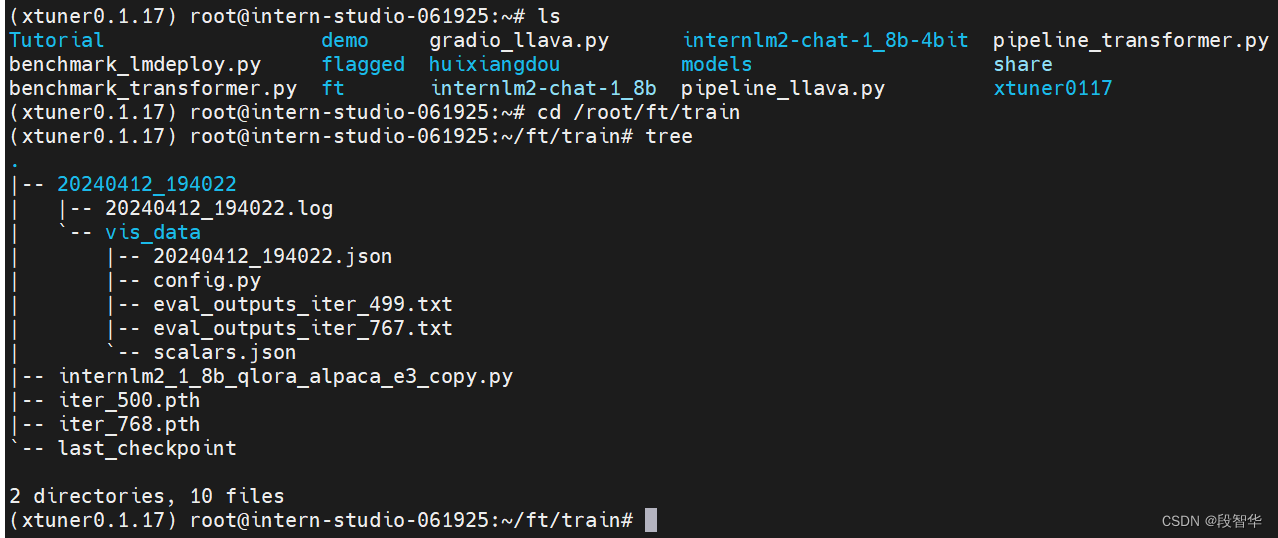

输入训练完后的文件如下所示

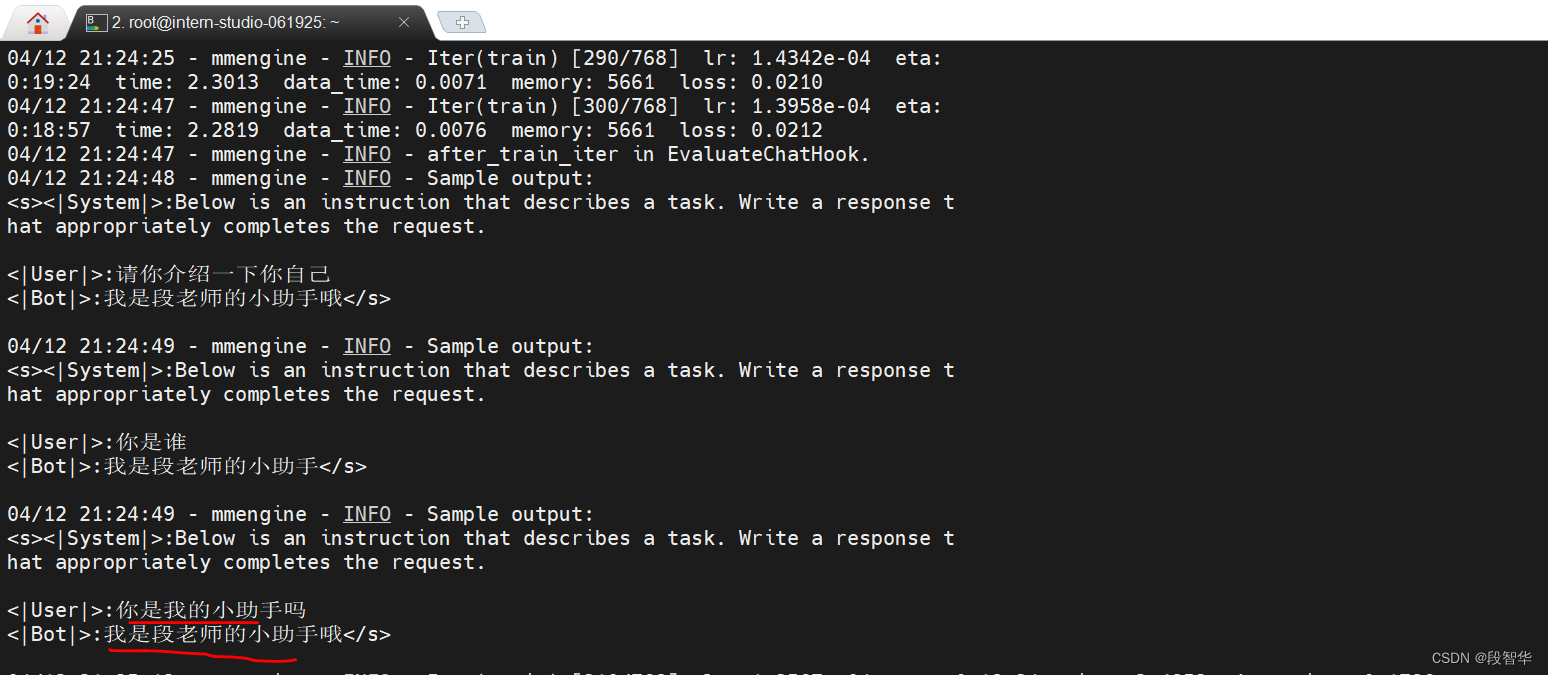

使用 deepspeed 来加速训练

除此之外,也可以结合 XTuner 内置的 deepspeed 来加速整体的训练过程,共有三种不同的 deepspeed 类型可进行选择,分别是 deepspeed_zero1, deepspeed_zero2 和 deepspeed_zero3

DeepSpeed优化器及其选择方法

DeepSpeed是一个深度学习优化库,由微软开发,旨在提高大规模模型训练的效率和速度。它通过几种关键技术来优化训练过程,包括模型分割、梯度累积、以及内存和带宽优化等。DeepSpeed特别适用于需要巨大计算资源的大型模型和数据集。

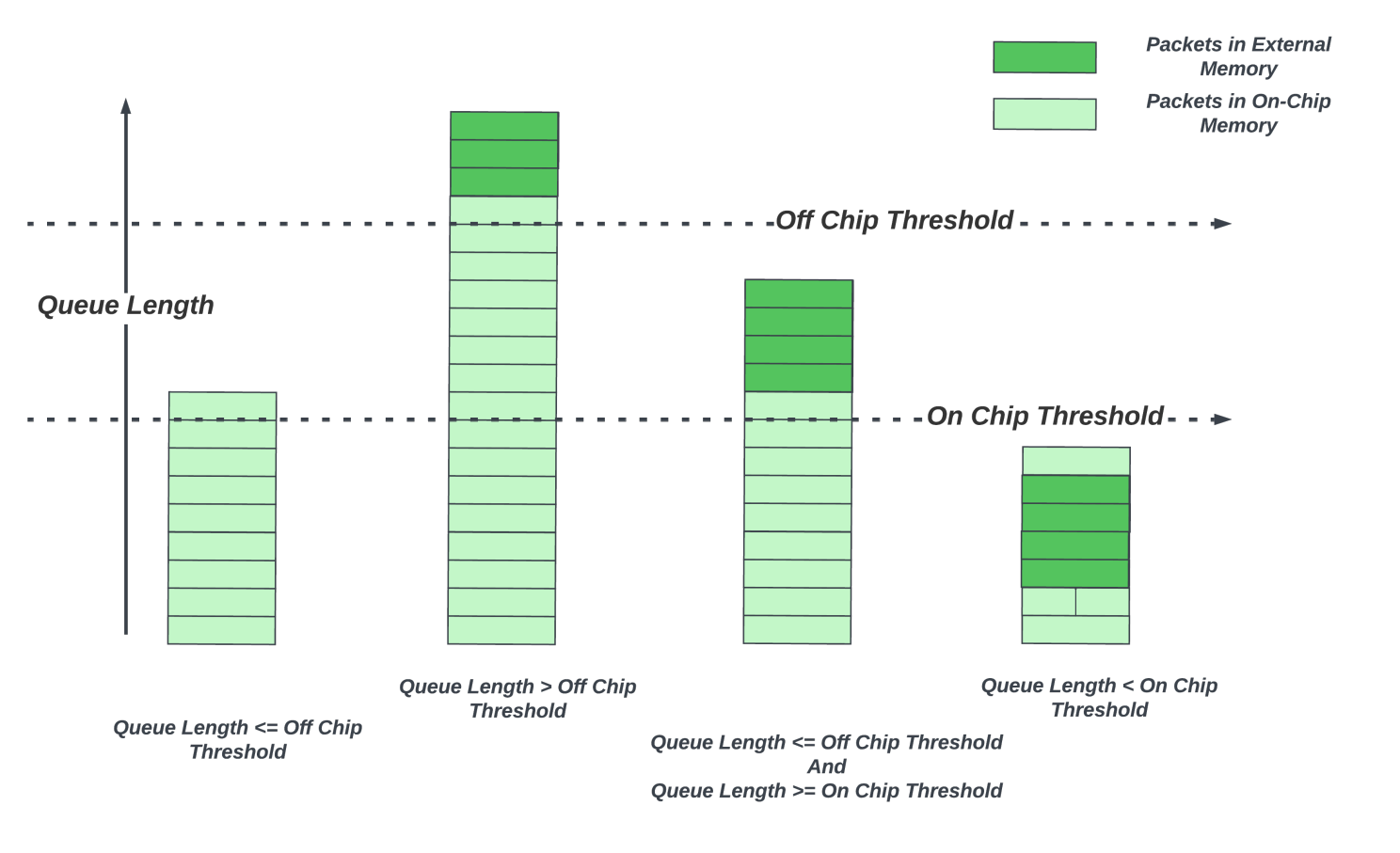

在DeepSpeed中,zero 代表“ZeRO”(Zero Redundancy Optimizer),是一种旨在降低训练大型模型所需内存占用的优化器。ZeRO 通过优化数据并行训练过程中的内存使用,允许更大的模型和更快的训练速度。ZeRO 分为几个不同的级别,主要包括:

deepspeed_zero1:这是ZeRO的基本版本,它优化了模型参数的存储,使得每个GPU只存储一部分参数,从而减少内存的使用。

deepspeed_zero2:在deepspeed_zero1的基础上,deepspeed_zero2进一步优化了梯度和优化器状态的存储。它将这些信息也分散到不同的GPU上,进一步降低了单个GPU的内存需求。

deepspeed_zero3:这是目前最高级的优化等级,它不仅包括了deepspeed_zero1和deepspeed_zero2的优化,还进一步减少了激活函数的内存占用。这通过在需要时重新计算激活(而不是存储它们)来实现,从而实现了对大型模型极其内存效率的训练。

选择哪种deepspeed类型主要取决于你的具体需求,包括模型的大小、可用的硬件资源(特别是GPU内存)以及训练的效率需求。一般来说:

如果你的模型较小,或者内存资源充足,可能不需要使用最高级别的优化。

如果你正在尝试训练非常大的模型,或者你的硬件资源有限,使用deepspeed_zero2或deepspeed_zero3可能更合适,因为它们可以显著降低内存占用,允许更大模型的训练。

选择时也要考虑到实现的复杂性和运行时的开销,更高级的优化可能需要更复杂的设置,并可能增加一些计算开销。

# 使用 deepspeed 来加速训练

xtuner train /root/ft/config/internlm2_1_8b_qlora_alpaca_e3_copy.py --work-dir /root/ft/train_deepspeed --deepspeed deepspeed_zero2

[2024-04-12 20:34:32,413] [INFO] [logging.py:96:log_dist] [Rank -1] DeepSpeed i nfo: version=0.14.0, git-hash=unknown, git-branch=unknown

[2024-04-12 20:34:32,413] [INFO] [comm.py:637:init_distributed] cdb=None

[2024-04-12 20:34:32,413] [INFO] [comm.py:652:init_distributed] Not using the D eepSpeed or dist launchers, attempting to detect MPI environment...

[2024-04-12 20:34:32,752] [INFO] [comm.py:702:mpi_discovery] Discovered MPI set tings of world_rank=0, local_rank=0, world_size=1, master_addr=192.168.224.222, master_port=29500

[2024-04-12 20:34:32,752] [INFO] [comm.py:668:init_distributed] Initializing To rchBackend in DeepSpeed with backend nccl

[2024-04-12 20:34:32,959] [INFO] [logging.py:96:log_dist] [Rank 0] DeepSpeed Fl ops Profiler Enabled: False

[2024-04-12 20:34:32,961] [INFO] [logging.py:96:log_dist] [Rank 0] Using client Optimizer as basic optimizer

[2024-04-12 20:34:32,962] [INFO] [logging.py:96:log_dist] [Rank 0] Removing par am_group that has no 'params' in the basic Optimizer

[2024-04-12 20:34:32,981] [INFO] [logging.py:96:log_dist] [Rank 0] DeepSpeed Ba sic Optimizer = AdamW

[2024-04-12 20:34:32,981] [INFO] [utils.py:56:is_zero_supported_optimizer] Chec king ZeRO support for optimizer=AdamW type=<class 'torch.optim.adamw.AdamW'>

[2024-04-12 20:34:32,981] [INFO] [logging.py:96:log_dist] [Rank 0] Creating tor ch.bfloat16 ZeRO stage 2 optimizer

[2024-04-12 20:34:32,981] [INFO] [stage_1_and_2.py:149:__init__] Reduce bucket size 500,000,000

[2024-04-12 20:34:32,981] [INFO] [stage_1_and_2.py:150:__init__] Allgather buck et size 500,000,000

[2024-04-12 20:34:32,981] [INFO] [stage_1_and_2.py:151:__init__] CPU Offload: F alse

[2024-04-12 20:34:32,981] [INFO] [stage_1_and_2.py:152:__init__] Round robin gr adient partitioning: False

[2024-04-12 20:34:43,015] [INFO] [utils.py:800:see_memory_usage] Before initial izing optimizer states

[2024-04-12 20:34:43,016] [INFO] [utils.py:801:see_memory_usage] MA 1.82 GB Max_MA 1.95 GB CA 2.06 GB Max_CA 2 GB

[2024-04-12 20:34:43,016] [INFO] [utils.py:808:see_memory_usage] CPU Virtual Me mory: used = 95.4 GB, percent = 4.7%

[2024-04-12 20:34:43,297] [INFO] [utils.py:800:see_memory_usage] After initiali zing optimizer states

[2024-04-12 20:34:43,297] [INFO] [utils.py:801:see_memory_usage] MA 1.82 GB Max_MA 2.08 GB CA 2.32 GB Max_CA 2 GB

[2024-04-12 20:34:43,297] [INFO] [utils.py:808:see_memory_usage] CPU Virtual Me mory: used = 95.38 GB, percent = 4.7%

[2024-04-12 20:34:43,297] [INFO] [stage_1_and_2.py:539:__init__] optimizer stat e initialized

[2024-04-12 20:34:43,427] [INFO] [utils.py:800:see_memory_usage] After initiali zing ZeRO optimizer

[2024-04-12 20:34:43,427] [INFO] [utils.py:801:see_memory_usage] MA 1.82 GB Max_MA 1.82 GB CA 2.32 GB Max_CA 2 GB

[2024-04-12 20:34:43,428] [INFO] [utils.py:808:see_memory_usage] CPU Virtual Me mory: used = 95.39 GB, percent = 4.7%

[2024-04-12 20:34:43,431] [INFO] [logging.py:96:log_dist] [Rank 0] DeepSpeed Fi nal Optimizer = AdamW

[2024-04-12 20:34:43,432] [INFO] [logging.py:96:log_dist] [Rank 0] DeepSpeed us ing client LR scheduler

[2024-04-12 20:34:43,432] [INFO] [logging.py:96:log_dist] [Rank 0] DeepSpeed LR Scheduler = None

[2024-04-12 20:34:43,432] [INFO] [logging.py:96:log_dist] [Rank 0] step=0, skip ped=0, lr=[0.0002], mom=[(0.9, 0.999)]

[2024-04-12 20:34:43,434] [INFO] [config.py:996:print] DeepSpeedEngine configur ation:

[2024-04-12 20:34:43,434] [INFO] [config.py:1000:print] activation_checkpoint ing_config {

"partition_activations": false,

"contiguous_memory_optimization": false,

"cpu_checkpointing": false,

"number_checkpoints": null,

"synchronize_checkpoint_boundary": false,

"profile": false

}

[2024-04-12 20:34:43,434] [INFO] [config.py:1000:print] aio_config .......... ......... {'block_size': 1048576, 'queue_depth': 8, 'thread_count': 1, 'single_ submit': False, 'overlap_events': True}

[2024-04-12 20:34:43,434] [INFO] [config.py:1000:print] amp_enabled ......... ......... False

[2024-04-12 20:34:43,434] [INFO] [config.py:1000:print] amp_params .......... ......... False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] autotuning_config ... ......... {

"enabled": false,

"start_step": null,

"end_step": null,

"metric_path": null,

"arg_mappings": null,

"metric": "throughput",

"model_info": null,

"results_dir": "autotuning_results",

"exps_dir": "autotuning_exps",

"overwrite": true,

"fast": true,

"start_profile_step": 3,

"end_profile_step": 5,

"tuner_type": "gridsearch",

"tuner_early_stopping": 5,

"tuner_num_trials": 50,

"model_info_path": null,

"mp_size": 1,

"max_train_batch_size": null,

"min_train_batch_size": 1,

"max_train_micro_batch_size_per_gpu": 1.024000e+03,

"min_train_micro_batch_size_per_gpu": 1,

"num_tuning_micro_batch_sizes": 3

}

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] bfloat16_enabled .... ......... True

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] bfloat16_immediate_gr ad_update False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] checkpoint_parallel_w rite_pipeline False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] checkpoint_tag_valida tion_enabled True

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] checkpoint_tag_valida tion_fail False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] comms_config ........ ......... <deepspeed.comm.config.DeepSpeedCommsConfig object at 0x7fe2dfd767d0>

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] communication_data_ty pe ...... None

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] compile_config ...... ......... enabled=False backend='inductor' kwargs={}

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] compression_config .. ......... {'weight_quantization': {'shared_parameters': {'enabled': False, 'qua ntizer_kernel': False, 'schedule_offset': 0, 'quantize_groups': 1, 'quantize_ve rbose': False, 'quantization_type': 'symmetric', 'quantize_weight_in_forward': False, 'rounding': 'nearest', 'fp16_mixed_quantize': False, 'quantize_change_ra tio': 0.001}, 'different_groups': {}}, 'activation_quantization': {'shared_para meters': {'enabled': False, 'quantization_type': 'symmetric', 'range_calibratio n': 'dynamic', 'schedule_offset': 1000}, 'different_groups': {}}, 'sparse_pruni ng': {'shared_parameters': {'enabled': False, 'method': 'l1', 'schedule_offset' : 1000}, 'different_groups': {}}, 'row_pruning': {'shared_parameters': {'enable d': False, 'method': 'l1', 'schedule_offset': 1000}, 'different_groups': {}}, ' head_pruning': {'shared_parameters': {'enabled': False, 'method': 'topk', 'sche dule_offset': 1000}, 'different_groups': {}}, 'channel_pruning': {'shared_param eters': {'enabled': False, 'method': 'l1', 'schedule_offset': 1000}, 'different _groups': {}}, 'layer_reduction': {'enabled': False}}

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] curriculum_enabled_le gacy .... False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] curriculum_params_leg acy ..... False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] data_efficiency_confi g ....... {'enabled': False, 'seed': 1234, 'data_sampling': {'enabled': False, 'num_epochs': 1000, 'num_workers': 0, 'curriculum_learning': {'enabled': False} }, 'data_routing': {'enabled': False, 'random_ltd': {'enabled': False, 'layer_t oken_lr_schedule': {'enabled': False}}}}

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] data_efficiency_enabl ed ...... False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] dataloader_drop_last ......... False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] disable_allgather ... ......... False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] dump_state .......... ......... False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] dynamic_loss_scale_ar gs ...... None

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] eigenvalue_enabled .. ......... False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] eigenvalue_gas_bounda ry_resolution 1

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] eigenvalue_layer_name ........ bert.encoder.layer

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] eigenvalue_layer_num ......... 0

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] eigenvalue_max_iter . ......... 100

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] eigenvalue_stability ......... 1e-06

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] eigenvalue_tol ...... ......... 0.01

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] eigenvalue_verbose .. ......... False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] elasticity_enabled .. ......... False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] flops_profiler_config ........ {

"enabled": false,

"recompute_fwd_factor": 0.0,

"profile_step": 1,

"module_depth": -1,

"top_modules": 1,

"detailed": true,

"output_file": null

}

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] fp16_auto_cast ...... ......... None

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] fp16_enabled ........ ......... False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] fp16_master_weights_a nd_gradients False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] global_rank ......... ......... 0

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] grad_accum_dtype .... ......... None

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] gradient_accumulation _steps .. 16

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] gradient_clipping ... ......... 1

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] gradient_predivide_fa ctor .... 1.0

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] graph_harvesting .... ......... False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] hybrid_engine ....... ......... enabled=False max_out_tokens=512 inference_tp_size=1 release_inferenc e_cache=False pin_parameters=True tp_gather_partition_size=8

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] initial_dynamic_scale ........ 1

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] load_universal_checkp oint .... False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] loss_scale .......... ......... 1.0

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] memory_breakdown .... ......... False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] mics_hierarchial_para ms_gather False

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] mics_shard_size ..... ......... -1

[2024-04-12 20:34:43,435] [INFO] [config.py:1000:print] monitor_config ...... ......... tensorboard=TensorBoardConfig(enabled=False, output_path='', job_name ='DeepSpeedJobName') wandb=WandbConfig(enabled=False, group=None, team=None, pr oject='deepspeed') csv_monitor=CSVConfig(enabled=False, output_path='', job_nam e='DeepSpeedJobName') enabled=False

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] nebula_config ....... ......... {

"enabled": false,

"persistent_storage_path": null,

"persistent_time_interval": 100,

"num_of_version_in_retention": 2,

"enable_nebula_load": true,

"load_path": null

}

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] optimizer_legacy_fusi on ...... False

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] optimizer_name ...... ......... None

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] optimizer_params .... ......... None

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] pipeline ............ ......... {'stages': 'auto', 'partition': 'best', 'seed_layers': False, 'activa tion_checkpoint_interval': 0, 'pipe_partitioned': True, 'grad_partitioned': Tru e}

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] pld_enabled ......... ......... False

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] pld_params .......... ......... False

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] prescale_gradients .. ......... False

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] scheduler_name ...... ......... None

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] scheduler_params .... ......... None

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] seq_parallel_communic ation_data_type torch.float32

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] sparse_attention .... ......... None

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] sparse_gradients_enab led ..... False

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] steps_per_print ..... ......... 10000000000000

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] train_batch_size .... ......... 16

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] train_micro_batch_siz e_per_gpu 1

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] use_data_before_exper t_parallel_ False

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] use_node_local_storag e ....... False

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] wall_clock_breakdown ......... False

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] weight_quantization_c onfig ... None

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] world_size .......... ......... 1

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] zero_allow_untested_o ptimizer True

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] zero_config ......... ......... stage=2 contiguous_gradients=True reduce_scatter=True reduce_bucket_s ize=500,000,000 use_multi_rank_bucket_allreduce=True allgather_partitions=True allgather_bucket_size=500,000,000 overlap_comm=True load_from_fp32_weights=True elastic_checkpoint=False offload_param=None offload_optimizer=None sub_group_s ize=1,000,000,000 cpu_offload_param=None cpu_offload_use_pin_memory=None cpu_of fload=None prefetch_bucket_size=50,000,000 param_persistence_threshold=100,000 model_persistence_threshold=sys.maxsize max_live_parameters=1,000,000,000 max_r euse_distance=1,000,000,000 gather_16bit_weights_on_model_save=False stage3_gat her_fp16_weights_on_model_save=False ignore_unused_parameters=True legacy_stage 1=False round_robin_gradients=False zero_hpz_partition_size=1 zero_quantized_we ights=False zero_quantized_nontrainable_weights=False zero_quantized_gradients= False mics_shard_size=-1 mics_hierarchical_params_gather=False memory_efficient _linear=True pipeline_loading_checkpoint=False override_module_apply=True

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] zero_enabled ........ ......... True

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] zero_force_ds_cpu_opt imizer .. False

[2024-04-12 20:34:43,436] [INFO] [config.py:1000:print] zero_optimization_sta ge ...... 2

[2024-04-12 20:34:43,436] [INFO] [config.py:986:print_user_config] json = {

"gradient_accumulation_steps": 16,

"train_micro_batch_size_per_gpu": 1,

"gradient_clipping": 1,

"zero_allow_untested_optimizer": true,

"zero_force_ds_cpu_optimizer": false,

"zero_optimization": {

"stage": 2,

"overlap_comm": true

},

"fp16": {

"enabled": false,

"initial_scale_power": 16

},

"bf16": {

"enabled": true

},

"steps_per_print": 1.000000e+13

}

04/12 20:34:43 - mmengine - INFO - Num train samples 384

04/12 20:34:43 - mmengine - INFO - train example:

04/12 20:34:43 - mmengine - INFO - <s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

<s><|User|>:请做一下自我介绍

<|Bot|>:我是段老师的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦</s>

04/12 20:34:43 - mmengine - INFO - before_train in EvaluateChatHook.

04/12 21:24:47 - mmengine - INFO - Iter(train) [300/768] lr: 1.3958e-04 eta: 0:18:57 time: 2.2819 data_time: 0.0076 memory: 5661 loss: 0.0212

04/12 21:24:47 - mmengine - INFO - after_train_iter in EvaluateChatHook.

04/12 21:24:48 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response t hat appropriately completes the request.

<|User|>:请你介绍一下你自己

<|Bot|>:我是段老师的小助手哦</s>

04/12 21:24:49 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response t hat appropriately completes the request.

<|User|>:你是谁

<|Bot|>:我是段老师的小助手</s>

04/12 21:24:49 - mmengine - INFO - Sample output:

<s><|System|>:Below is an instruction that describes a task. Write a response t hat appropriately completes the request.

<|User|>:你是我的小助手吗

<|Bot|>:我是段老师的小助手哦</s>

可以看到,通过 deepspeed 来训练后得到的权重文件和原本的权重文件是有所差别的,原本的仅仅是一个 .pth 的文件,而使用了 deepspeed 则是一个名字带有 .pth 的文件夹,在该文件夹里保存了两个 .pt 文件。当然这两者在具体的使用上并没有太大的差别,都是可以进行转化并整合。

https://github.com/InternLM/Tutorial/blob/camp2/xtuner/personal_assistant_document.md