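Table of Contents

- Preface
- I. Loading the Dataset
- II. TensorFlow 1.x
  - 1. Single Hidden Layer
  - 2. Saving and Restoring the Model
- III. TensorFlow 2.x
  - 1. A Fully Connected Layer Class
  - 2. Building the Model with Keras
- Summary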

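Preface

This post builds on the previous note, "TensorFlow Notes: Multi-class Classification with a Single Neuron". It replaces the single neuron with neural networks and classifies handwritten digits (MNIST) in both TensorFlow 1.x and TensorFlow 2.x.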

I. Loading the Dataset

Loading and preprocessing the dataset are exactly the same as in the previous post.

```python
# Load the dataset with TensorFlow 2.x
import tensorflow as tf2
import matplotlib.pyplot as plt
import numpy as np

mnist = tf2.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Flatten the images, normalize grey levels to [0, 1], one-hot encode the labels
x_train = x_train.reshape((-1, 784))
x_train = tf2.cast(x_train/255.0, tf2.float32)
x_test = x_test.reshape((-1, 784))
x_test = tf2.cast(x_test/255.0, tf2.float32)
y_train = tf2.one_hot(y_train, depth=10)
y_test = tf2.one_hot(y_test, depth=10)

# Train on the training set, tune hyperparameters on the validation set,
# and measure the final performance on the test set.
# 60000 training samples, the last 5000 of which become the validation set; 10000 test samples
x_valid, y_valid = x_train[55000:], y_train[55000:]
x_train, y_train = x_train[:55000], y_train[:55000]
```
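The following numpy-only sketch makes the shapes concrete by running the same preprocessing on synthetic data. Random 28x28 arrays stand in for MNIST, so no download is needed. The 20-sample validation split mirrors the article's 5000-sample split on a smaller scale, and `np.eye(10)[labels]` plays the role of `tf.one_hot(labels, depth=10)`.

```python
import numpy as np

# Synthetic stand-in for MNIST: 100 random 28x28 uint8 images and integer labels 0-9
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(100, 28, 28), dtype=np.uint8)
labels = rng.integers(0, 10, size=100)

# Flatten each image to a 784-vector and scale grey levels from 0-255 to 0-1
x = images.reshape((-1, 784)).astype(np.float32) / 255.0
# One-hot encode: row k of the 10x10 identity matrix encodes digit k
y = np.eye(10, dtype=np.float32)[labels]

# Hold out the last 20 samples for validation (the article holds out the last 5000)
n_valid = 20
x_valid, y_valid = x[-n_valid:], y[-n_valid:]
x_train, y_train = x[:-n_valid], y[:-n_valid]

print(x_train.shape, y_train.shape, x_valid.shape)  # (80, 784) (80, 10) (20, 784)
```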

```python
# Show 16 images with their true labels and predictions (labels/preds are one-hot or probabilities)
def show(images, labels, preds):
    fig1 = plt.figure(1, figsize=(12, 12))
    for i in range(16):
        ax = fig1.add_subplot(4, 4, i+1)
        ax.imshow(images[i].reshape(28, 28), cmap='binary')
        label = np.argmax(labels[i])
        pred = np.argmax(preds[i])
        title = 'label:%d,pred:%d' % (label, pred)
        ax.set_title(title)
        ax.set_xticks([])
        ax.set_yticks([])
```

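II. TensorFlow 1.x

1. Single Hidden Layer

Defining the model

- Add a second set of weights as the hidden-layer parameters.
- Do not apply softmax in the output layer. It outputs raw logits.
- Initialize the weights from a truncated normal distribution. This limits how far the random initial weights can stray from the mean.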

```python
import tensorflow.compat.v1 as tf
from sklearn.utils import shuffle
from time import time

# Switch to graph mode. Call this before running the Section I data code, so that
# x_train, y_train, etc. are graph tensors that sess.run() can evaluate later
tf.disable_eager_execution()

with tf.name_scope('Model'):
    x = tf.placeholder(tf.float32, [None, 784], name='X')
    y = tf.placeholder(tf.float32, [None, 10], name='Y')

    # Hidden layer
    with tf.name_scope('Hide'):
        h1_nn = 256
        # Truncated normal initialization
        w1 = tf.Variable(
            tf.truncated_normal((784, h1_nn), stddev=0.1), name='W1')
        b1 = tf.Variable(tf.zeros((h1_nn)), name='B1')
        y1 = tf.nn.relu(tf.matmul(x, w1) + b1)

    # Output layer: logits only, softmax is applied inside the loss
    with tf.name_scope('Output'):
        w2 = tf.Variable(
            tf.truncated_normal((h1_nn, 10), stddev=0.1), name='W2')
        b2 = tf.Variable(tf.zeros((10)), name='B2')
        pred = tf.matmul(y1, w2) + b2
```
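The numpy sketch below mimics the truncated-normal initialization and the forward pass, to show what the graph computes. It assumes the rule documented for `tf.truncated_normal`: any sample more than two standard deviations from the mean is discarded and re-drawn. `truncated_normal` and `forward` are illustrative helper names, not TensorFlow functions.

```python
import numpy as np

def truncated_normal(shape, stddev, rng):
    """Sample N(0, stddev^2), re-drawing any value farther than 2*stddev from 0."""
    out = rng.normal(0.0, stddev, size=shape)
    bad = np.abs(out) > 2 * stddev
    while bad.any():
        out[bad] = rng.normal(0.0, stddev, size=bad.sum())
        bad = np.abs(out) > 2 * stddev
    return out.astype(np.float32)

rng = np.random.default_rng(0)
h1_nn = 256
w1 = truncated_normal((784, h1_nn), 0.1, rng)
b1 = np.zeros(h1_nn, dtype=np.float32)
w2 = truncated_normal((h1_nn, 10), 0.1, rng)
b2 = np.zeros(10, dtype=np.float32)

def forward(x):
    y1 = np.maximum(x @ w1 + b1, 0.0)   # hidden layer with ReLU
    return y1 @ w2 + b2                  # logits: no softmax, as in the TF1 model

x = rng.random((5, 784), dtype=np.float32)
logits = forward(x)
print(logits.shape)  # (5, 10)
```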

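Training the model

Use the loss function that combines softmax with cross-entropy. Computing softmax first and then taking the log produces log(0), and an infinite loss, when a probability underflows to zero. The fused version avoids this.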
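A small pure-Python sketch shows why the fused loss matters. Taking the log of an already computed softmax fails once a probability underflows to 0, while the log-sum-exp form stays finite. `naive_ce` and `stable_ce` are hypothetical helper names; they illustrate the idea only and are not TensorFlow's actual kernel.

```python
import math

def naive_ce(logits, label):
    """Softmax first, then log: breaks when a probability underflows to 0."""
    exps = [math.exp(z) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    return -math.log(probs[label])

def stable_ce(logits, label):
    """Cross-entropy computed directly from logits via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]

try:
    naive_ce([0.0, -1000.0], 1)
except ValueError as err:              # math.log(0.0) raises "math domain error"
    print('naive:', err)
print('stable:', stable_ce([0.0, -1000.0], 1))  # stable: 1000.0
```

For moderate logits such as `[2.0, 1.0, 0.1]` both functions return the same value. They only diverge at the extremes.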

```python
# Training parameters
train_epoch = 10
learning_rate = 0.1
batch_size = 1000
batch_num = x_train.shape[0] // batch_size

# Loss/accuracy logging
step = 0
display_step = 5
loss_list = []
acc_list = []

# Loss that fuses softmax with cross-entropy (takes logits as input)
loss_function = tf.reduce_mean(
    tf.nn.softmax_cross_entropy_with_logits(logits=pred, labels=y))
equal = tf.equal(tf.argmax(y, axis=1), tf.argmax(pred, axis=1))
accuracy = tf.reduce_mean(tf.cast(equal, tf.float32))

# Optimizer
optimizer = tf.train.AdamOptimizer(learning_rate).minimize(loss_function)

# Variable initialization
init = tf.global_variables_initializer()
```

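```python
# Keep a single session open so the following code blocks can reuse it
sess = tf.Session()
sess.run(init)

# Evaluate the preprocessed tensors into numpy arrays
x_train = sess.run(x_train)
x_valid = sess.run(x_valid)
x_test = sess.run(x_test)
y_train = sess.run(y_train)
y_valid = sess.run(y_valid)
y_test = sess.run(y_test)
```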

Iterative training

Use time() to record the training time.

```python
start_time = time()
for epoch in range(train_epoch):
    print('epoch:%d' % epoch)
    for batch in range(batch_num):
        xi = x_train[batch*batch_size:(batch+1)*batch_size]
        yi = y_train[batch*batch_size:(batch+1)*batch_size]
        sess.run(optimizer, feed_dict={x: xi, y: yi})
        step = step + 1
        # Every display_step batches, record loss and accuracy on the validation set
        if step % display_step == 0:
            loss, acc = sess.run([loss_function, accuracy],
                                 feed_dict={x: x_valid, y: y_valid})
            loss_list.append(loss)
            acc_list.append(acc)
    # Shuffle the training set after each epoch
    x_train, y_train = shuffle(x_train, y_train)
```

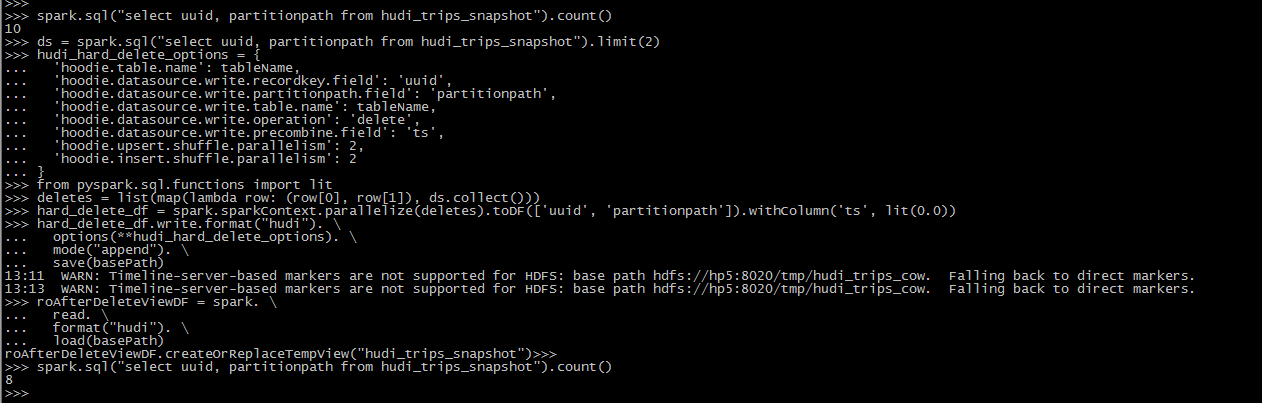
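The batching scheme above can be sketched without TensorFlow. The hypothetical generator `iterate_minibatches` shuffles x and y with one shared permutation, which is what `sklearn.utils.shuffle` guarantees for the pair. Like `batch_num = x_train.shape[0] // batch_size`, it drops a trailing partial batch. With 55000 samples and a batch size of 1000 nothing is dropped, but other sizes would skip a few samples each epoch. The sketch shuffles at the start of each epoch rather than the end, which has the same effect.

```python
import numpy as np

def iterate_minibatches(x, y, batch_size, rng):
    """Yield aligned (xi, yi) mini-batches in a new random order; a partial last batch is dropped."""
    idx = rng.permutation(len(x))        # one permutation shared by x and y keeps pairs aligned
    x, y = x[idx], y[idx]
    batch_num = len(x) // batch_size     # integer division, as in the article
    for b in range(batch_num):
        yield x[b*batch_size:(b+1)*batch_size], y[b*batch_size:(b+1)*batch_size]

rng = np.random.default_rng(0)
x = np.arange(10)
y = 2 * x                                # label = 2 * input, so alignment is easy to check
batches = list(iterate_minibatches(x, y, batch_size=3, rng=rng))
print(len(batches))  # 3 batches of 3; one sample is left out this epoch
```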

Visualizing the results

```python
end_time = time()
y_pred, equ_list, acc = sess.run([pred, equal, accuracy],
                                 feed_dict={x: x_test, y: y_test})

# Validation loss and accuracy curves
fig2 = plt.figure(2, figsize=(12, 6))
ax = fig2.add_subplot(1, 2, 1)
ax.plot(loss_list, 'r-')
ax.set_title('loss')
ax = fig2.add_subplot(1, 2, 2)
ax.plot(acc_list, 'b-')
ax.set_title('acc')

print('Time: %.1fs' % (end_time - start_time))
print('Accuracy:{:.2%}'.format(acc))

# Show misclassified test images
err_list = [not equ for equ in equ_list]
show(x_test[err_list], y_test[err_list], y_pred[err_list])
```

The accuracy is higher than with the single-neuron model.

Misclassified images:

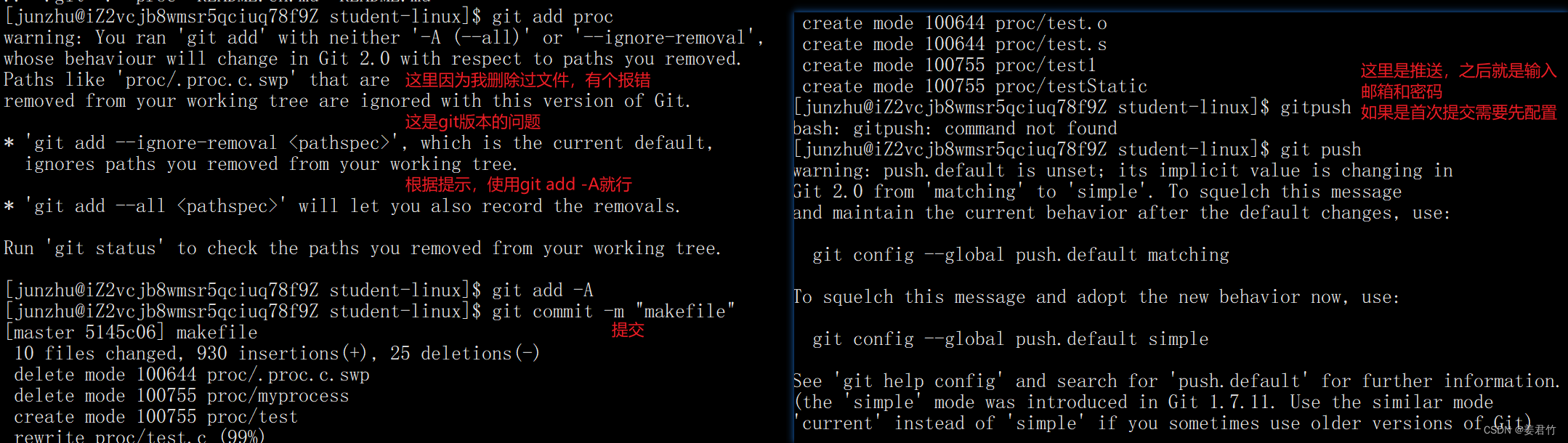
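The accuracy and the selection of misclassified images both come from one boolean vector. In this numpy sketch with made-up predictions (only their argmax matters), `equal.mean()` gives the accuracy. `~equal` is the same mask as the list comprehension `[not equ for equ in equ_list]`.

```python
import numpy as np

y_true = np.eye(10)[[3, 1, 4, 1]]          # one-hot labels: 3, 1, 4, 1
y_pred = np.eye(10)[[3, 7, 4, 2]] * 0.9    # made-up outputs; only the argmax matters

equal = np.argmax(y_true, axis=1) == np.argmax(y_pred, axis=1)
acc = equal.mean()                          # fraction of correct predictions
errors = ~equal                             # same mask as [not e for e in equ_list]
print(acc, np.flatnonzero(errors))          # 0.5 [1 3]
```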

2. Saving and Restoring the Model

Set up the checkpoint directory:

```python
import os

ckpt_dir = './ckpt_dir/'
if not os.path.exists(ckpt_dir):
    os.makedirs(ckpt_dir)
```

Leave out of the checkpoint the extra variables (slot variables) that the Adam optimizer creates:

```python
vl = [v for v in tf.global_variables() if 'Adam' not in v.name]
saver = tf.train.Saver(var_list=vl)
```
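The filter drops only variables whose names contain the substring `Adam`. The sketch below uses illustrative names, assumed for the example; print `[v.name for v in tf.global_variables()]` to see the real ones. It shows one consequence: any optimizer bookkeeping variable whose name lacks that substring (here the assumed `beta1_power`/`beta2_power`) would still be saved.

```python
# Illustrative variable names (assumed for this example; print
# [v.name for v in tf.global_variables()] to see the real ones in your graph)
names = [
    'Model/Hide/W1:0', 'Model/Hide/B1:0',
    'Model/Output/W2:0', 'Model/Output/B2:0',
    'Model/Hide/W1/Adam:0', 'Model/Hide/W1/Adam_1:0',   # Adam slot variables
    'beta1_power:0', 'beta2_power:0',                   # assumed optimizer bookkeeping names
]
# Same substring test as the var_list filter above
kept = [n for n in names if 'Adam' not in n]
print(kept)
```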

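Save the model at the end of every epoch by adding the `saver.save` call to the training loop:

```python
for epoch in range(train_epoch):
    # ... mini-batch training and shuffling as in the loop above ...
    # Save a checkpoint after each epoch
    saver.save(sess, os.path.join(ckpt_dir,
                                  'mnist_model_%d.ckpt' % (epoch+1)))
```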

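Save the model once more after training finishes:

```python
saver.save(sess, os.path.join(ckpt_dir, 'mnist_model.ckpt'))
```

By default `tf.train.Saver` keeps only the 5 most recent checkpoints (`max_to_keep=5`). The files are large, and saving takes a noticeable amount of time.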
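A `collections.deque` can picture the "keep only the newest N" rotation. This illustrates the behaviour only; it is not TensorFlow's implementation. `max_to_keep=5` is `tf.train.Saver`'s default, and the argument can be passed explicitly to keep more or fewer files.

```python
from collections import deque

# Illustration of keeping only the newest max_to_keep checkpoints (not TensorFlow's code)
max_to_keep = 5
kept = deque()
deleted = []
for epoch in range(10):
    kept.append('mnist_model_%d.ckpt' % (epoch + 1))
    if len(kept) > max_to_keep:
        deleted.append(kept.popleft())   # the oldest checkpoint would be removed from disk

print(list(kept))  # checkpoints 6 to 10 remain
```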

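Restoring the model

```python
from tensorflow.python.tools.inspect_checkpoint import print_tensors_in_checkpoint_file

# Assumes the model graph has been rebuilt in a fresh console by re-running
# the import and model-definition code above
with tf.Session() as sess:
    ckpt_dir = './ckpt_dir/'
    # Restore the same variable set that was saved (Adam slot variables excluded)
    vl = [v for v in tf.global_variables() if 'Adam' not in v.name]
    saver = tf.train.Saver(var_list=vl)
    # Get the latest checkpoint
    ckpt = tf.train.get_checkpoint_state(ckpt_dir)
    # Print the tensors stored in the checkpoint
    print_tensors_in_checkpoint_file(ckpt.model_checkpoint_path,
                                     tensor_name=None, all_tensors=True,
                                     all_tensor_names=True)
    # Restore the weights
    saver.restore(sess, ckpt.model_checkpoint_path)
```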

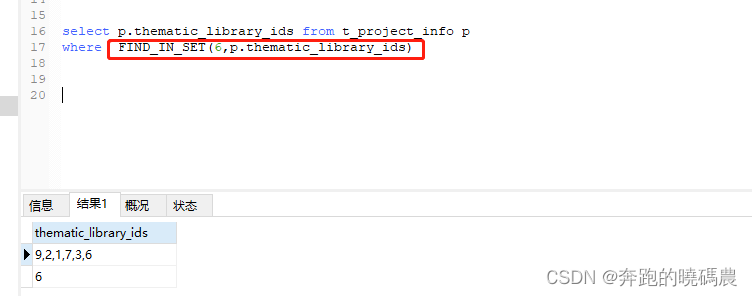

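The saved checkpoint contains two sets of weights, under the scopes Model and Model_1. One set holds the initial weights and the other the optimized weights. In this run, Model holds the trained weights and Model_1 the initial ones.

If the accuracy after restoring is very low, the model has probably loaded the initial weights. In that case, rebuild the model under the scope name Model_1. Also restart the console after saving and before restoring, otherwise errors may occur.

```python
# Rebuild the model under this scope name instead of 'Model'
with tf.name_scope('Model_1'):
    ...  # same layer definitions as before
```

The results then match those obtained right after training.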
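One likely way two copies appear is building the model twice in the same graph, for example by re-running the model-definition code without restarting the console. TF1 makes repeated name scopes unique by appending `_1`, `_2`, and so on. The hypothetical helper below imitates that rule in simplified form; the real graph also checks that a suffixed name is not already taken.

```python
def make_unique_namer():
    """Simplified imitation of TF1 scope-name de-duplication (hypothetical helper)."""
    counts = {}
    def unique_name(name):
        n = counts.get(name, 0)
        counts[name] = n + 1
        return name if n == 0 else '%s_%d' % (name, n)
    return unique_name

unique_name = make_unique_namer()
print(unique_name('Model'), unique_name('Model'), unique_name('Model'))  # Model Model_1 Model_2
```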

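III. TensorFlow 2.x

1. A Fully Connected Layer Class

Defining the model

```python
# Run in a fresh console (eager execution enabled) after the Section I data code
import tensorflow as tf
from sklearn.utils import shuffle
from time import time

# Fully connected layer
class fcn_layer():
    def __init__(self, input_dim, output_dim):
        # Truncated normal weights, zero biases
        # (dtype must be passed by keyword: the 2nd positional argument of tf.Variable is `trainable`)
        self.w = tf.Variable(tf.random.truncated_normal(
            (input_dim, output_dim), stddev=0.1), dtype=tf.float32)
        self.b = tf.Variable(tf.zeros(output_dim), dtype=tf.float32)

    def cal(self, inputs, activation=None):
        y = tf.matmul(inputs, self.w) + self.b
        if activation is not None:
            y = activation(y)
        return y
```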

A network with three hidden layers of 256, 64 and 32 neurons:

```python
hide_1 = fcn_layer(784, 256)
hide_2 = fcn_layer(256, 64)
hide_3 = fcn_layer(64, 32)
out = fcn_layer(32, 10)

def model(x):
    y1 = hide_1.cal(x, tf.nn.relu)
    y2 = hide_2.cal(y1, tf.nn.relu)
    y3 = hide_3.cal(y2, tf.nn.relu)
    y4 = out.cal(y3, tf.nn.softmax)   # output layer returns class probabilities
    return y4
```
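As a worked check of the layer sizes: each fully connected layer holds an `input_dim x output_dim` weight matrix plus `output_dim` biases. The helper `dense_params` (an illustrative name) totals them for the 784-256-64-32-10 network and for the single-hidden-layer TF1 model. `model.summary()` in the Keras section should report the same total for its identically sized Dense layers, since Flatten has no parameters.

```python
def dense_params(n_in, n_out):
    """Parameters of one fully connected layer: weight matrix plus bias vector."""
    return n_in * n_out + n_out

sizes = [784, 256, 64, 32, 10]
per_layer = [dense_params(a, b) for a, b in zip(sizes[:-1], sizes[1:])]
print(per_layer, sum(per_layer))   # [200960, 16448, 2080, 330] 219818

# The TF1 model with one hidden layer of 256 neurons, for comparison
one_hidden = dense_params(784, 256) + dense_params(256, 10)
print(one_hidden)                  # 203530
```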

Loss function and accuracy

```python
# Variables to optimize
w_list = [hide_1.w, hide_2.w, hide_3.w, out.w]
b_list = [hide_1.b, hide_2.b, hide_3.b, out.b]

# Loss function (the model outputs probabilities, not logits)
def loss_function(x, y):
    pred = model(x)
    loss = tf.keras.losses.categorical_crossentropy(
        y_true=y, y_pred=pred)
    return tf.reduce_mean(loss)

# Accuracy
def accuracy(x, y):
    pred = model(x)
    acc = tf.equal(tf.argmax(y, axis=1), tf.argmax(pred, axis=1))
    acc = tf.cast(acc, tf.float32)
    return tf.reduce_mean(acc)

# Gradients of the loss with respect to all weights and biases
def grad(x, y):
    with tf.GradientTape() as tape:
        loss = loss_function(x, y)
    return tape.gradient(loss, w_list+b_list)
```
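`tape.gradient` computes these gradients automatically. For the output layer the result has a well-known closed form. For the mean softmax cross-entropy over N samples, the gradient with respect to the pre-softmax logits z is (softmax(z) - y) / N. This holds here too, because the model applies softmax and then categorical cross-entropy. The numpy sketch below checks the formula against central finite differences. It is an independent illustration, not what GradientTape does internally.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # shift for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def mean_ce(z, y):
    """Mean categorical cross-entropy of softmax(z) against one-hot labels y."""
    return -np.mean(np.sum(y * np.log(softmax(z)), axis=1))

rng = np.random.default_rng(0)
z = rng.normal(size=(4, 10))               # logits for 4 samples, 10 classes
y = np.eye(10)[[0, 3, 3, 9]]               # one-hot labels

analytic = (softmax(z) - y) / len(z)       # closed-form gradient of mean_ce w.r.t. z

# Central finite differences, one logit at a time
eps = 1e-6
numeric = np.zeros_like(z)
for idx in np.ndindex(z.shape):
    zp, zm = z.copy(), z.copy()
    zp[idx] += eps
    zm[idx] -= eps
    numeric[idx] = (mean_ce(zp, y) - mean_ce(zm, y)) / (2 * eps)

print(np.max(np.abs(analytic - numeric)))  # should be tiny (round-off level)
```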

Training the model

```python
# Training parameters
train_epoch = 10
learning_rate = 0.01
batch_size = 1000
batch_num = x_train.shape[0] // batch_size

# Logging interval
step = 0
display_step = 5
loss_list = []
acc_list = []

# Adam optimizer
optimizer = tf.keras.optimizers.Adam(learning_rate)
```
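For reference, here is a minimal numpy sketch of the Adam update rule as published by Kingma and Ba. It keeps moving averages of the gradient and the squared gradient and applies a bias correction to each. The beta values are the usual defaults. TensorFlow's own implementations may differ in details such as where epsilon enters. `adam_minimize` is a hypothetical helper, used here only to minimize a toy quadratic.

```python
import numpy as np

def adam_minimize(grad_fn, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Plain Adam on a parameter array x, given a function returning the gradient."""
    x = np.asarray(x0, dtype=float)
    m = np.zeros_like(x)   # first-moment (mean) estimate
    v = np.zeros_like(x)   # second-moment (uncentred variance) estimate
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)   # correct the bias from zero initialization
        v_hat = v / (1 - beta2 ** t)
        x = x - lr * m_hat / (np.sqrt(v_hat) + eps)
    return x

# Minimize f(x) = (x - 3)^2, whose gradient is 2(x - 3)
x_min = adam_minimize(lambda x: 2 * (x - 3.0), x0=0.0)
print(x_min)   # close to 3
```

Early on, each step moves x by roughly `lr`, whatever the gradient's magnitude. This per-parameter normalization is why Adam is often described as adapting the step size automatically.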

Iterative training

```python
start_time = time()
for epoch in range(train_epoch):
    print('epoch:%d' % epoch)
    for batch in range(batch_num):
        xi = x_train[batch*batch_size: (batch+1)*batch_size]
        yi = y_train[batch*batch_size: (batch+1)*batch_size]
        grads = grad(xi, yi)
        optimizer.apply_gradients(zip(grads, w_list+b_list))
        step = step + 1
        if step % display_step == 0:
            loss_list.append(loss_function(x_valid, y_valid).numpy())
            acc_list.append(accuracy(x_valid, y_valid).numpy())
    # Shuffle: sklearn works on numpy arrays, so convert, shuffle, then cast back to tensors
    x_train, y_train = shuffle(x_train.numpy(), y_train.numpy())
    x_train = tf.cast(x_train, tf.float32)
    y_train = tf.cast(y_train, tf.float32)
```

Visualizing the results

```python
# Validation-set curves
end_time = time()
print('Time: %.1fs' % (end_time - start_time))
fig2 = plt.figure(2, figsize=(12, 6))
ax = fig2.add_subplot(1, 2, 1)
ax.plot(loss_list, 'r-')
ax.set_title('loss')
ax = fig2.add_subplot(1, 2, 2)
ax.plot(acc_list, 'b-')
ax.set_title('acc')

# Test-set results
acc = accuracy(x_test, y_test)
print('Accuracy:{:.2%}'.format(acc))
y_pred = model(x_test)
show(x_test.numpy(), y_test, y_pred)
```

With three hidden layers the accuracy improves further, but training also takes longer.

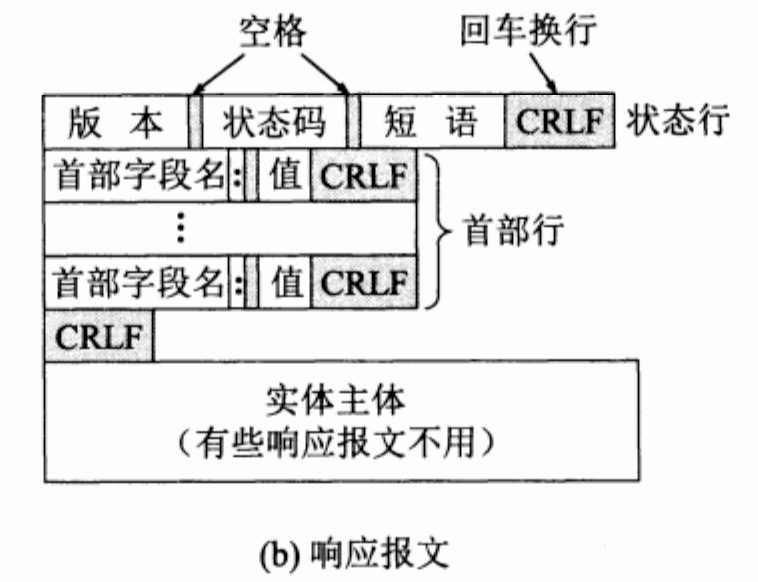

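2. Building the Model with Keras

Loading the dataset

```python
import tensorflow as tf
import matplotlib.pyplot as plt
from time import time

mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()
```

The Flatten layer handles the reshaping, and the labels stay as integers, so only the grey levels need normalizing. No separate validation set is carved out here, because `fit()` can split one off with `validation_split`.

```python
x_train = tf.cast(x_train/255.0, tf.float32)
x_test = tf.cast(x_test/255.0, tf.float32)
```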
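numpy can check both simplifications. Reshaping `(N, 28, 28)` to `(N, 784)` is what the Flatten layer does to each batch. Sparse categorical cross-entropy on integer labels, -log p[label], equals categorical cross-entropy on the matching one-hot labels. The probability values below are made up for illustration.

```python
import numpy as np

# Flatten: (N, 28, 28) -> (N, 784), keeping the batch dimension
images = np.random.default_rng(0).random((2, 28, 28))
flat = images.reshape(len(images), -1)
print(flat.shape)  # (2, 784)

# Made-up softmax outputs for 2 samples and 3 classes
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.3, 0.6]])
int_labels = np.array([0, 2])          # sparse (integer) labels
one_hot = np.eye(3)[int_labels]        # the same labels, one-hot encoded

# Sparse form: pick -log p of the true class directly
sparse_ce = -np.mean(np.log(probs[np.arange(len(probs)), int_labels]))
# Categorical form: sum over classes of -y * log p
categorical_ce = -np.mean(np.sum(one_hot * np.log(probs), axis=1))
print(sparse_ce, categorical_ce)  # the two values agree
```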

Showing 16 images

```python
def show(images, labels, preds):
    fig1 = plt.figure(1, figsize=(12, 12))
    for i in range(16):
        ax = fig1.add_subplot(4, 4, i+1)
        ax.imshow(images[i].reshape(28, 28), cmap='binary')
        # Labels and predictions are plain integers here, so no argmax is needed
        title = 'label:%d,pred:%d' % (labels[i], preds[i])
        ax.set_title(title)
        ax.set_xticks([])
        ax.set_yticks([])
```

Creating the model

```python
model = tf.keras.models.Sequential()
```

Adding layers

```python
model.add(tf.keras.layers.Flatten(input_shape=(28, 28)))
model.add(tf.keras.layers.Dense(units=256,
                                kernel_initializer='normal', activation='relu'))
model.add(tf.keras.layers.Dense(units=64,
                                kernel_initializer='normal', activation='relu'))
model.add(tf.keras.layers.Dense(units=32,
                                kernel_initializer='normal', activation='relu'))
model.add(tf.keras.layers.Dense(units=10,
                                kernel_initializer='normal', activation='softmax'))
```

Model summary

```python
model.summary()
```

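Configuring training

```python
# Integer labels, so use the sparse variant of categorical cross-entropy
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])
```

Training the model

```python
# Adam adapts its step sizes automatically; verbose=1 prints a progress-bar log
start_time = time()
history = model.fit(x_train, y_train,
                    validation_split=0.2, epochs=10, batch_size=1000,
                    verbose=1)
end_time = time()
print('Time: %.1fs' % (end_time-start_time))
```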
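With `validation_split=0.2`, Keras takes the validation samples from the end of the arrays, before any shuffling. The model therefore trains on fewer samples than the 55000 used in the custom loops. The sketch below works through the arithmetic with `split_sizes`, a hypothetical helper. It assumes the split point is the floor of `num_samples * (1 - validation_split)` and that a final partial batch, if any, is still used.

```python
import math

def split_sizes(num_samples, validation_split, batch_size):
    """Hypothetical helper: sample and step counts implied by fit(validation_split=..., batch_size=...)."""
    split_at = int(math.floor(num_samples * (1.0 - validation_split)))
    n_train, n_valid = split_at, num_samples - split_at   # validation = the last samples
    steps_per_epoch = math.ceil(n_train / batch_size)     # a final partial batch still counts
    return n_train, n_valid, steps_per_epoch

print(split_sizes(60000, 0.2, 1000))  # (48000, 12000, 48)
```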

`history.history` is a dict holding the per-epoch values of `loss`, `accuracy`, `val_loss` and `val_accuracy`. The code below plots the validation curves.

```python
fig2 = plt.figure(2, figsize=(12, 6))
ax = fig2.add_subplot(1, 2, 1)
ax.plot(history.history['val_loss'], 'r-')
ax.set_title('loss')
ax = fig2.add_subplot(1, 2, 2)
ax.plot(history.history['val_accuracy'], 'b-')
ax.set_title('acc')
```

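Evaluating the model

```python
# Evaluate on the test set
test_loss, test_acc = model.evaluate(x_test, y_test, verbose=1)
print('Loss:%.2f' % test_loss)
print('Accuracy:{:.2%}'.format(test_acc))
```

Training with Keras is faster, and the model's accuracy still has room to improve.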

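Prediction

```python
import numpy as np

# Predicted class = index of the largest softmax output
# (Sequential.predict_classes is no longer available in recent TF 2.x releases)
preds = np.argmax(model.predict(x_test), axis=1)
show(x_test.numpy(), y_test, preds)
```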

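Summary

A neural-network layer contains multiple neurons. The input dimension matches the first dimension of the hidden layer's weight matrix, and the number of neurons matches its second dimension. The output layer maps the hidden-layer output to the dimension of the predictions.

When saving and loading a model, the weight names may not match. Print the weights stored in the checkpoint and compare them to find which names to change.

A fully connected layer class avoids repeating the same code for every layer of a multi-layer network. A deeper network has more parameters, trains longer and is more expressive. However, its accuracy is not necessarily higher, and the training parameters still need tuning.

Keras makes it easy to define, train and evaluate a model. It handles reshaping internally (the Flatten layer) and works directly with integer labels instead of one-hot encodings. Training is also faster.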