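Table of Contents

- 🚀🚀🚀Preface
- I. 1️⃣ Add the yolov5_GFPN.yaml File
- II. 2️⃣ Add the extra_modules.py Code
- III. 3️⃣ Modify yolo.py
  - 3.1 🎓 Add the CSPStage Module
- IV. 4️⃣ Experimental Results
  - 4.1 🎓 Results with yolov5s.pt
  - 4.2 ✨ Results with yolov5l.pt
  - 4.3 ⭐️ Analysis of the Results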

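👀🎉📜 Series Articles

Must-read: [Paper Deep Dive] DAMO-YOLO: An Efficient Object Detection Framework Balancing Speed and Accuracy (A Report on Real-Time Object Detection Design)

[YOLOv5 Improvement Series (1)] Efficient mAP Gains----Replacing CIoU with EIoU, Alpha-IoU, SIoU, and Focal-EIoU

[YOLOv5 Improvement Series (2)] Efficient mAP Gains----Wise-IoU Explained, and Replacing CIoU with Wise-IoU (WIoU)

[YOLOv5 Improvement Series (3)] Efficient mAP Gains----Optimal Transport Assignment (OTA)

[YOLOv5 Improvement Series (4)] Efficient mAP Gains----Adding Deformable Convolution DCNv2

[YOLOv5 Improvement Series (5)] Efficient mAP Gains----Adding the NWD Method for Dense Small-Object Detection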

🚀🚀🚀Preface

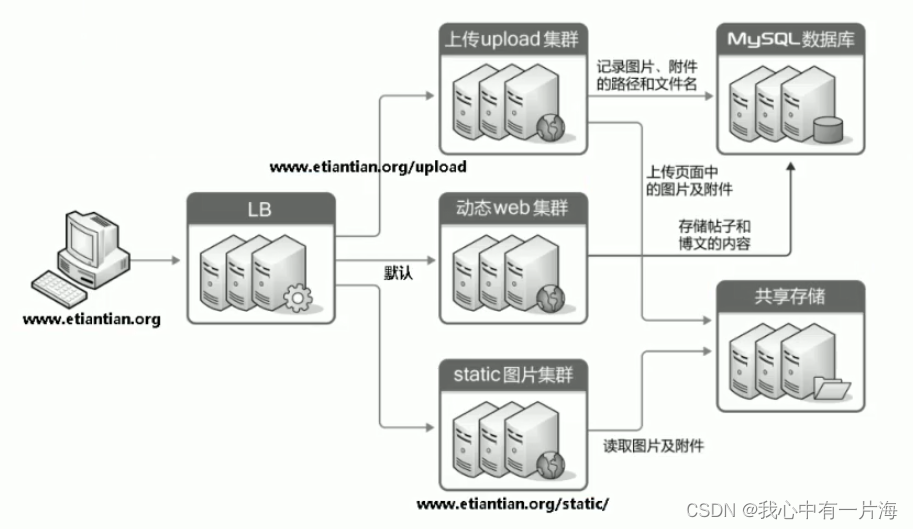

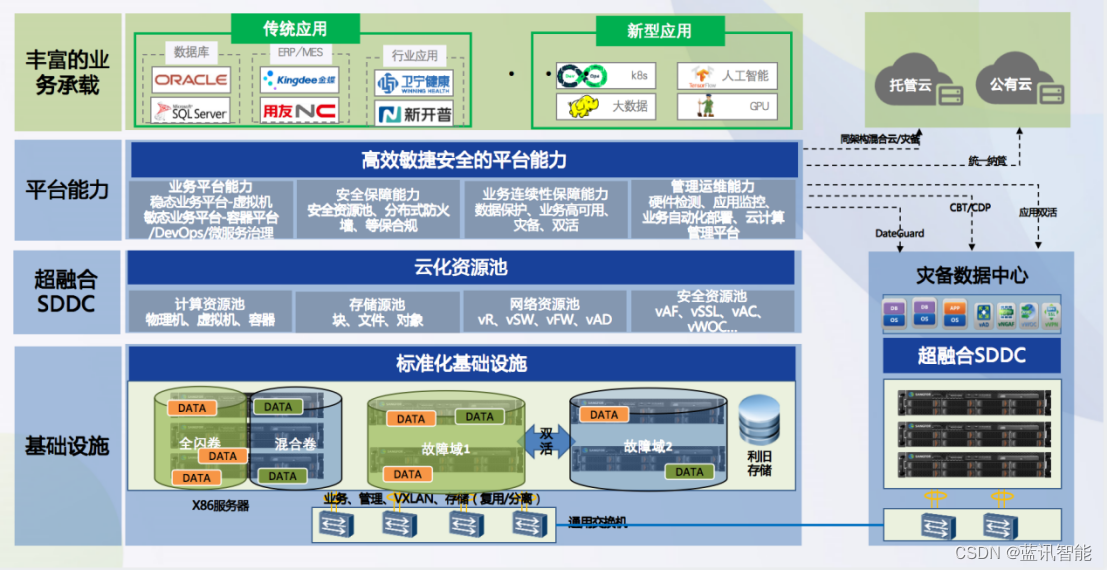

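⚡️DAMO-YOLO is a model proposed by Alibaba DAMO Academy in 2022. By then YOLOv5 and YOLOv6 had already been released, and several models already performed well at real-time detection. Even so, existing detection frameworks still had the following pain points in practical use:

- ① Model scaling is not flexible enough to fit different compute budgets. YOLO-series frameworks usually offer only 3-5 model sizes, with compute ranging from roughly ten to over a hundred GFLOPs, which cannot cover every hardware scenario.

- ② Multi-scale detection is weak, and small-object detection in particular performs poorly, which severely limits where the models can be used. In drone (UAV) detection, for example, results are often unsatisfactory.

- ③ The speed/accuracy trade-off curve is not ideal: it is hard to get both speed and accuracy at the same time.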

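☀️To address these issues, the DAMO Academy computer vision team designed and open-sourced DAMO-YOLO, which is aimed mainly at industrial deployment. Compared with other object detection frameworks it has three clear technical advantages:

- ① It integrates the team's in-house NAS technology, so users can customize models at low cost and make full use of their chip's compute.

- ② It combines Efficient RepGFPN with the HeavyNeck design paradigm, which substantially improves multi-scale detection and broadens the range of applications.

- ③ It proposes a distillation technique that works across all model scales, transferring knowledge from a large model to a small one and improving the small model without adding any inference cost.

Other details of DAMO-YOLO, such as searching the backbone with MAE-NAS and the distillation scheme, are not covered here. If you are interested, see this article: [Paper Deep Dive] DAMO-YOLO: An Efficient Object Detection Framework Balancing Speed and Accuracy (A Report on Real-Time Object Detection Design)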

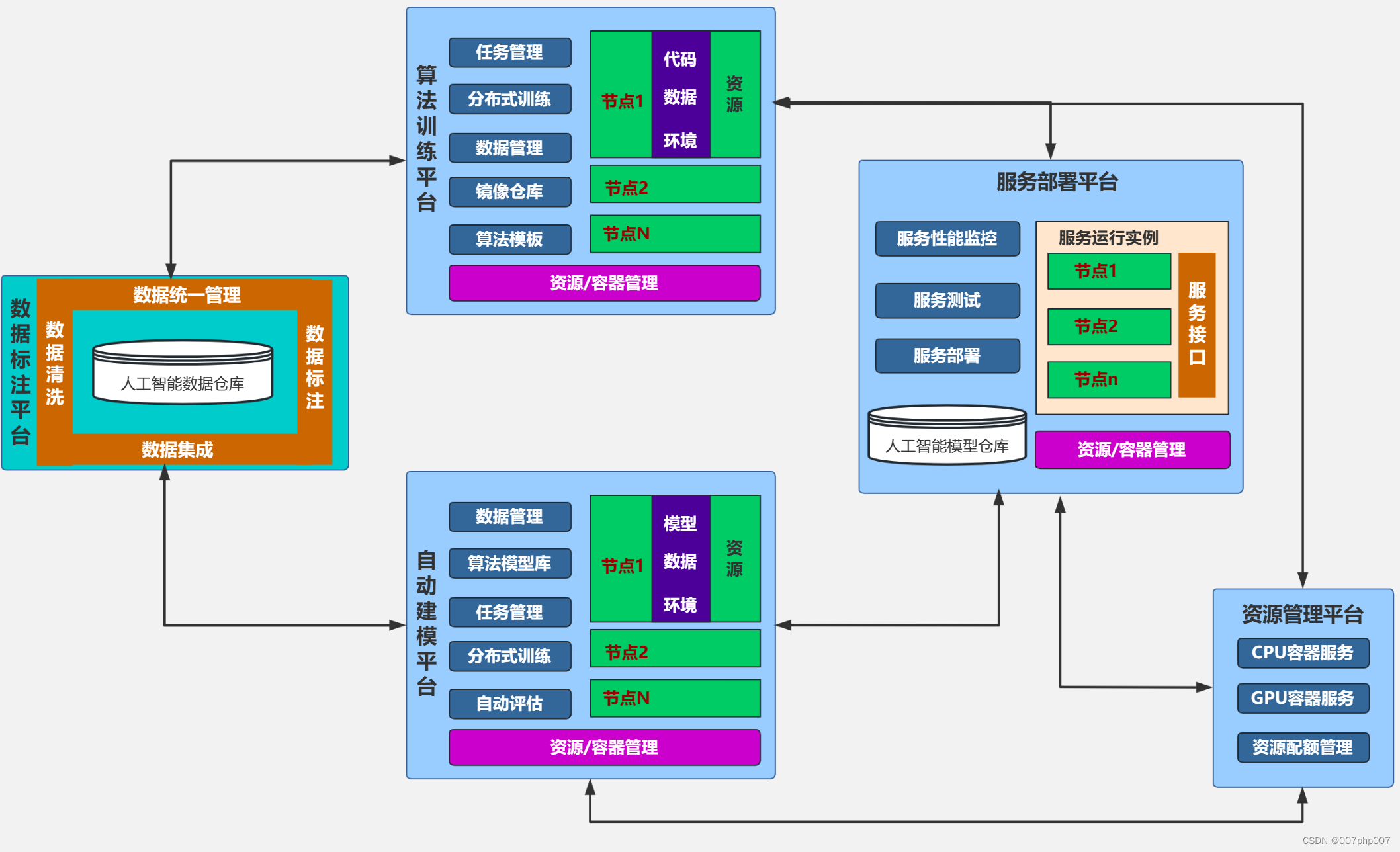

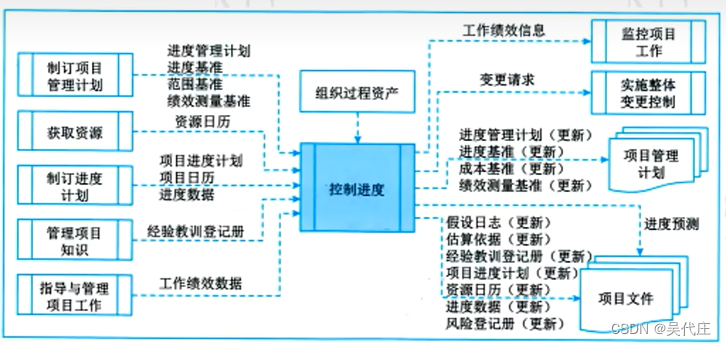

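🚀The improvement in this article uses the Efficient RepGFPN feature-fusion network from DAMO-YOLO. Efficient RepGFPN is an improved version of GFPN: GFPN contains many upsampling operations and has low parallelism, so although it is efficient in FLOPs it is inefficient in latency. I therefore replaced the PANet fusion in YOLOv5's neck with Efficient RepGFPN.

For the experiments I trained with the yolov5s.pt and yolov5l.pt weights respectively, setting depth_multiple and width_multiple in yolov5_GFPN.yaml to (0.33, 0.50) and (1.0, 1.0) to match yolov5s and yolov5l.

Results: compared with the yolov5s baseline, mAP@0.5 actually dropped after switching to Efficient RepGFPN; compared with the yolov5l baseline, however, mAP@0.5 improved by nearly 4 percentage points (0.795 → 0.833), and the F1 score also increased. I give my own explanation of why this happens in the analysis section at the end.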

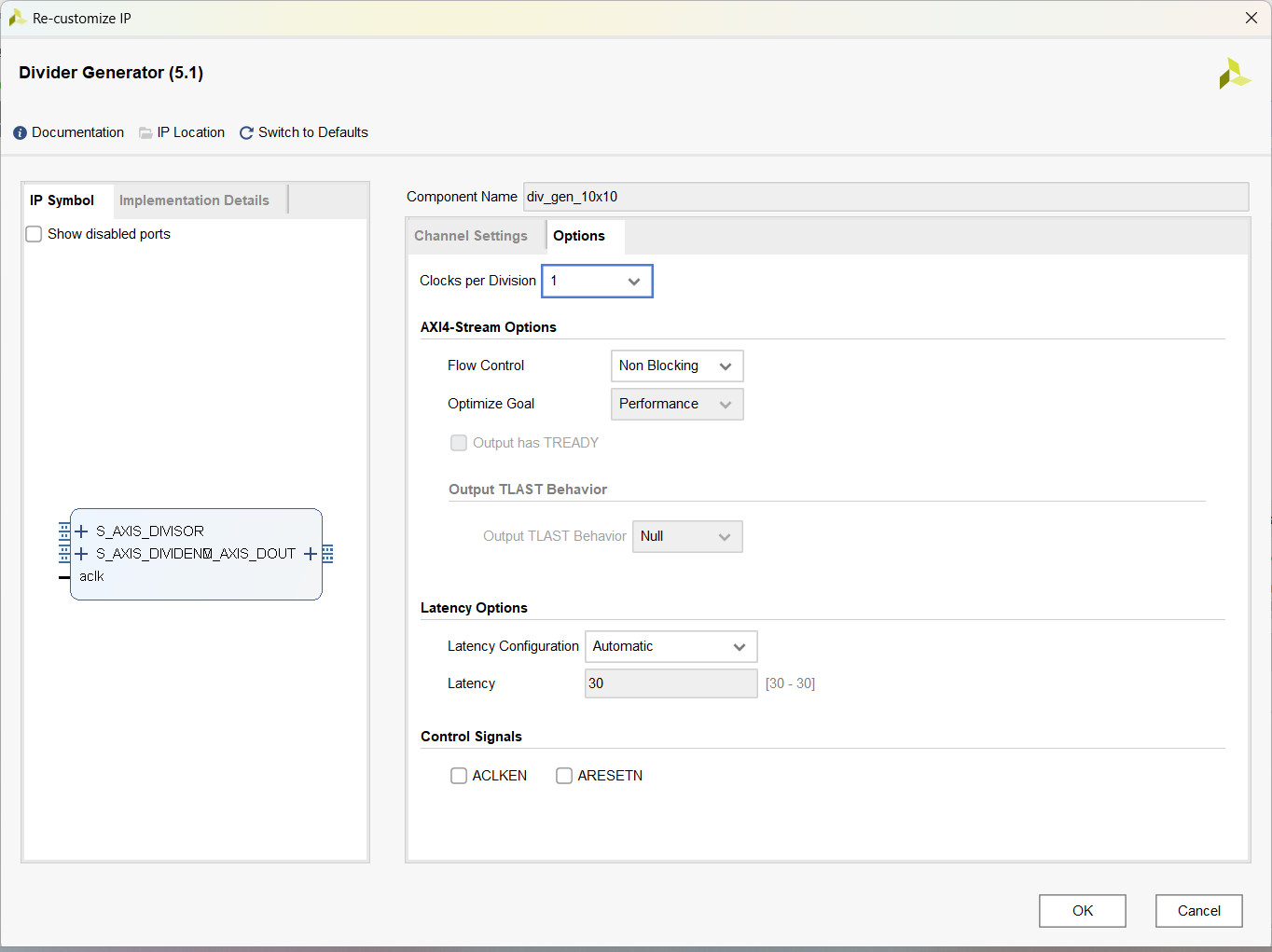

I. 1️⃣ Add the yolov5_GFPN.yaml File

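🚀Create a file named yolov5_GFPN.yaml in the models folder and copy the following into it. Here depth_multiple and width_multiple are both set to 1, which means training with the yolov5l weights. To train with the yolov5s weights instead, simply set depth_multiple and width_multiple to 0.33 and 0.5 respectively.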

```yaml
# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

# Parameters
nc: 6 # number of classes
depth_multiple: 1.00 # model depth multiple
width_multiple: 1.00 # layer channel multiple
anchors:
  - [ 10,13, 16,30, 33,23 ] # P3/8
  - [ 30,61, 62,45, 59,119 ] # P4/16
  - [ 116,90, 156,198, 373,326 ] # P5/32

# YOLOv5 v6.0 backbone
backbone:
  # [from, number, module, args]
  [ [ -1, 1, Conv, [ 64, 6, 2, 2 ] ], # 0-P1/2
    [ -1, 1, Conv, [ 128, 3, 2 ] ], # 1-P2/4
    [ -1, 3, C3, [ 128 ] ],
    [ -1, 1, Conv, [ 256, 3, 2 ] ], # 3-P3/8
    [ -1, 6, C3, [ 256 ] ],
    [ -1, 1, Conv, [ 512, 3, 2 ] ], # 5-P4/16
    [ -1, 9, C3, [ 512 ] ],
    [ -1, 1, Conv, [ 1024, 3, 2 ] ], # 7-P5/32
    [ -1, 3, C3, [ 1024 ] ],
    [ -1, 1, SPPF, [ 1024, 5 ] ], # 9
  ]

# DAMO-YOLO GFPN Head
head:
  [ [ -1, 1, Conv, [ 512, 1, 1 ] ], # 10 added to reduce the channel dimension with a 1x1 conv
    [ 6, 1, Conv, [ 512, 3, 2 ] ], # 11
    [ [ -1, 10 ], 1, Concat, [ 1 ] ], # 12
    [ -1, 3, CSPStage, [ 512 ] ], # 13
    [ -1, 1, nn.Upsample, [ None, 2, 'nearest' ] ], # 14
    [ 4, 1, Conv, [ 256, 3, 2 ] ], # 15
    [ [ 14, -1, 6 ], 1, Concat, [ 1 ] ], # 16
    [ -1, 3, CSPStage, [ 512 ] ], # 17
    [ -1, 1, nn.Upsample, [ None, 2, 'nearest' ] ], # 18
    [ [ -1, 4 ], 1, Concat, [ 1 ] ], # 19
    [ -1, 3, CSPStage, [ 256 ] ], # 20
    [ -1, 1, Conv, [ 256, 3, 2 ] ], # 21
    [ [ -1, 17 ], 1, Concat, [ 1 ] ], # 22
    [ -1, 3, CSPStage, [ 512 ] ], # 23
    [ 17, 1, Conv, [ 256, 3, 2 ] ], # 24
    [ 23, 1, Conv, [ 256, 3, 2 ] ], # 25
    [ [ 13, 24, -1 ], 1, Concat, [ 1 ] ], # 26
    [ -1, 3, CSPStage, [ 1024 ] ], # 27
    [ [ 20, 23, 27 ], 1, Detect, [ nc, anchors ] ], # Detect(P3, P4, P5)
  ]
```
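To make the effect of depth_multiple and width_multiple on this head concrete, here is a small pure-Python sketch that traces the input and output channels of every head layer. It assumes the scaling rules of YOLOv5's parse_model as I understand them (widths become `make_divisible(c * width_multiple, 8)`, repeat counts n > 1 become `max(round(n * depth_multiple), 1)`); the helpers `scale_depth`, `cspstage_concat_width` and `trace_head` are my own names, and this is an illustration rather than YOLOv5 code.

```python
import math

def make_divisible(x, divisor=8):
    # Round a channel count up to the nearest multiple of divisor
    return math.ceil(x / divisor) * divisor

def scale_depth(n, gd):
    # Repeat counts greater than 1 are scaled by depth_multiple, never below 1
    return max(round(n * gd), 1) if n > 1 else n

def cspstage_concat_width(ch_out, n):
    # Width of the tensor fed to CSPStage.conv3: ch_first + n * ch_mid
    ch_first = ch_out // 2
    ch_mid = ch_out - ch_first
    return ch_mid * n + ch_first

# Head rows of yolov5_GFPN.yaml as (from, number, module, channels); indices start at 10
HEAD = [
    (-1, 1, "Conv", 512), (6, 1, "Conv", 512), ([-1, 10], 1, "Concat", None),
    (-1, 3, "CSPStage", 512), (-1, 1, "Upsample", None), (4, 1, "Conv", 256),
    ([14, -1, 6], 1, "Concat", None), (-1, 3, "CSPStage", 512), (-1, 1, "Upsample", None),
    ([-1, 4], 1, "Concat", None), (-1, 3, "CSPStage", 256), (-1, 1, "Conv", 256),
    ([-1, 17], 1, "Concat", None), (-1, 3, "CSPStage", 512), (17, 1, "Conv", 256),
    (23, 1, "Conv", 256), ([13, 24, -1], 1, "Concat", None), (-1, 3, "CSPStage", 1024),
]

def trace_head(gd, gw):
    # Output widths of the backbone layers the head reads from: 4 (P3), 6 (P4), 9 (SPPF, P5)
    ch = {4: make_divisible(256 * gw), 6: make_divisible(512 * gw), 9: make_divisible(1024 * gw)}
    rows = []
    for i, (f, n, module, c) in enumerate(HEAD, start=10):
        srcs = [i + s if s < 0 else s for s in (f if isinstance(f, list) else [f])]
        c_in = sum(ch[s] for s in srcs)  # Concat sums its inputs; other layers have one input
        c_out = c_in if c is None else make_divisible(c * gw)
        ch[i] = c_out
        rows.append((i, module, c_in, c_out, scale_depth(n, gd)))
    return rows

if __name__ == "__main__":
    for name, gd, gw in [("yolov5s-style", 0.33, 0.50), ("yolov5l-style", 1.0, 1.0)]:
        print(name)
        for i, module, c_in, c_out, n in trace_head(gd, gw):
            extra = f", conv3 input {cspstage_concat_width(c_out, n)}" if module == "CSPStage" else ""
            print(f"  layer {i:2d} {module:8s} in={c_in:4d} out={c_out:4d} n={n}{extra}")
```

Running it shows, for example, that in the yolov5l-style config layer 16 concatenates 512 + 256 + 512 = 1280 channels, and that with depth_multiple 0.33 every CSPStage keeps only n = 1 block, so the yolov5s-style neck is far lighter than the yolov5l-style one.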

II. 2️⃣ Add the extra_modules.py Code

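📌Create a file named extra_modules.py in the models folder and copy the following code into it. This code implements Efficient RepGFPN's building blocks (RepConv, ConvBNAct, BasicBlock_3x3_Reverse, SPP, and CSPStage).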

```python
import numpy as np  # needed by RepConv._fuse_bn_tensor when an identity branch exists
import torch
import torch.nn as nn
import torch.nn.functional as F


def conv_bn(in_channels, out_channels, kernel_size, stride, padding, groups=1):
    '''Basic cell for rep-style block, including conv and bn'''
    result = nn.Sequential()
    result.add_module(
        'conv',
        nn.Conv2d(in_channels=in_channels,
                  out_channels=out_channels,
                  kernel_size=kernel_size,
                  stride=stride,
                  padding=padding,
                  groups=groups,
                  bias=False))
    result.add_module('bn', nn.BatchNorm2d(num_features=out_channels))
    return result


class RepConv(nn.Module):
    '''RepConv is a basic rep-style block, including training and deploy status
    Code is based on https://github.com/DingXiaoH/RepVGG/blob/main/repvgg.py
    '''
    def __init__(self,
                 in_channels,
                 out_channels,
                 kernel_size=3,
                 stride=1,
                 padding=1,
                 dilation=1,
                 groups=1,
                 padding_mode='zeros',
                 deploy=False,
                 act='relu',
                 norm=None):
        super(RepConv, self).__init__()
        self.deploy = deploy
        self.groups = groups
        self.in_channels = in_channels
        self.out_channels = out_channels

        assert kernel_size == 3
        assert padding == 1
        padding_11 = padding - kernel_size // 2  # 0, so the 1x1 branch keeps the same output size

        if isinstance(act, str):
            self.nonlinearity = get_activation(act)
        else:
            self.nonlinearity = act

        if deploy:
            # Inference: one fused 3x3 conv with bias
            self.rbr_reparam = nn.Conv2d(in_channels=in_channels,
                                         out_channels=out_channels,
                                         kernel_size=kernel_size,
                                         stride=stride,
                                         padding=padding,
                                         dilation=dilation,
                                         groups=groups,
                                         bias=True,
                                         padding_mode=padding_mode)
        else:
            # Training: parallel 3x3 conv+BN and 1x1 conv+BN branches (no identity branch)
            self.rbr_identity = None
            self.rbr_dense = conv_bn(in_channels=in_channels,
                                     out_channels=out_channels,
                                     kernel_size=kernel_size,
                                     stride=stride,
                                     padding=padding,
                                     groups=groups)
            self.rbr_1x1 = conv_bn(in_channels=in_channels,
                                   out_channels=out_channels,
                                   kernel_size=1,
                                   stride=stride,
                                   padding=padding_11,
                                   groups=groups)

    def forward(self, inputs):
        '''Forward process'''
        if hasattr(self, 'rbr_reparam'):
            return self.nonlinearity(self.rbr_reparam(inputs))

        if self.rbr_identity is None:
            id_out = 0
        else:
            id_out = self.rbr_identity(inputs)

        return self.nonlinearity(
            self.rbr_dense(inputs) + self.rbr_1x1(inputs) + id_out)

    def get_equivalent_kernel_bias(self):
        # Fold BN into each branch, then sum the branches into a single 3x3 kernel and bias
        kernel3x3, bias3x3 = self._fuse_bn_tensor(self.rbr_dense)
        kernel1x1, bias1x1 = self._fuse_bn_tensor(self.rbr_1x1)
        kernelid, biasid = self._fuse_bn_tensor(self.rbr_identity)
        return kernel3x3 + self._pad_1x1_to_3x3_tensor(
            kernel1x1) + kernelid, bias3x3 + bias1x1 + biasid

    def _pad_1x1_to_3x3_tensor(self, kernel1x1):
        if kernel1x1 is None:
            return 0
        else:
            return torch.nn.functional.pad(kernel1x1, [1, 1, 1, 1])

    def _fuse_bn_tensor(self, branch):
        # Return (kernel, bias) of a conv equivalent to "conv followed by BN"
        if branch is None:
            return 0, 0
        if isinstance(branch, nn.Sequential):
            kernel = branch.conv.weight
            running_mean = branch.bn.running_mean
            running_var = branch.bn.running_var
            gamma = branch.bn.weight
            beta = branch.bn.bias
            eps = branch.bn.eps
        else:
            assert isinstance(branch, nn.BatchNorm2d)
            if not hasattr(self, 'id_tensor'):
                input_dim = self.in_channels // self.groups
                kernel_value = np.zeros((self.in_channels, input_dim, 3, 3),
                                        dtype=np.float32)
                for i in range(self.in_channels):
                    kernel_value[i, i % input_dim, 1, 1] = 1
                self.id_tensor = torch.from_numpy(kernel_value).to(
                    branch.weight.device)
            kernel = self.id_tensor
            running_mean = branch.running_mean
            running_var = branch.running_var
            gamma = branch.weight
            beta = branch.bias
            eps = branch.eps
        std = (running_var + eps).sqrt()
        t = (gamma / std).reshape(-1, 1, 1, 1)
        return kernel * t, beta - running_mean * gamma / std

    def switch_to_deploy(self):
        if hasattr(self, 'rbr_reparam'):
            return
        kernel, bias = self.get_equivalent_kernel_bias()
        self.rbr_reparam = nn.Conv2d(
            in_channels=self.rbr_dense.conv.in_channels,
            out_channels=self.rbr_dense.conv.out_channels,
            kernel_size=self.rbr_dense.conv.kernel_size,
            stride=self.rbr_dense.conv.stride,
            padding=self.rbr_dense.conv.padding,
            dilation=self.rbr_dense.conv.dilation,
            groups=self.rbr_dense.conv.groups,
            bias=True)
        self.rbr_reparam.weight.data = kernel
        self.rbr_reparam.bias.data = bias
        for para in self.parameters():
            para.detach_()
        self.__delattr__('rbr_dense')
        self.__delattr__('rbr_1x1')
        if hasattr(self, 'rbr_identity'):
            self.__delattr__('rbr_identity')
        if hasattr(self, 'id_tensor'):
            self.__delattr__('id_tensor')
        self.deploy = True


class Swish(nn.Module):
    def __init__(self, inplace=True):
        super(Swish, self).__init__()
        self.inplace = inplace

    def forward(self, x):
        if self.inplace:
            x.mul_(torch.sigmoid(x))
            return x
        else:
            return x * torch.sigmoid(x)


def get_activation(name='silu', inplace=True):
    if name is None:
        return nn.Identity()

    if isinstance(name, str):
        if name == 'silu':
            module = nn.SiLU(inplace=inplace)
        elif name == 'relu':
            module = nn.ReLU(inplace=inplace)
        elif name == 'lrelu':
            module = nn.LeakyReLU(0.1, inplace=inplace)
        elif name == 'swish':
            module = Swish(inplace=inplace)
        elif name == 'hardsigmoid':
            module = nn.Hardsigmoid(inplace=inplace)
        elif name == 'identity':
            module = nn.Identity()
        else:
            raise AttributeError('Unsupported act type: {}'.format(name))
        return module
    elif isinstance(name, nn.Module):
        return name
    else:
        raise AttributeError('Unsupported act type: {}'.format(name))


def get_norm(name, out_channels, inplace=True):
    if name == 'bn':
        module = nn.BatchNorm2d(out_channels)
    else:
        raise NotImplementedError
    return module


class ConvBNAct(nn.Module):
    """A Conv2d -> Batchnorm -> silu/leaky relu block"""
    def __init__(
        self,
        in_channels,
        out_channels,
        ksize,
        stride=1,
        groups=1,
        bias=False,
        act='silu',
        norm='bn',
        reparam=False,
    ):
        super().__init__()
        # same padding
        pad = (ksize - 1) // 2
        self.conv = nn.Conv2d(
            in_channels,
            out_channels,
            kernel_size=ksize,
            stride=stride,
            padding=pad,
            groups=groups,
            bias=bias,
        )
        if norm is not None:
            self.bn = get_norm(norm, out_channels, inplace=True)
        if act is not None:
            self.act = get_activation(act, inplace=True)
        self.with_norm = norm is not None
        self.with_act = act is not None

    def forward(self, x):
        x = self.conv(x)
        if self.with_norm:
            x = self.bn(x)
        if self.with_act:
            x = self.act(x)
        return x

    def fuseforward(self, x):
        return self.act(self.conv(x))


class BasicBlock_3x3_Reverse(nn.Module):
    # RepConv (ch_in -> ch_hidden) followed by ConvBNAct (ch_hidden -> ch_out), with a residual
    def __init__(self,
                 ch_in,
                 ch_hidden_ratio,
                 ch_out,
                 act='relu',
                 shortcut=True):
        super(BasicBlock_3x3_Reverse, self).__init__()
        assert ch_in == ch_out
        ch_hidden = int(ch_in * ch_hidden_ratio)
        self.conv1 = ConvBNAct(ch_hidden, ch_out, 3, stride=1, act=act)
        self.conv2 = RepConv(ch_in, ch_hidden, 3, stride=1, act=act)
        self.shortcut = shortcut

    def forward(self, x):
        y = self.conv2(x)
        y = self.conv1(y)
        if self.shortcut:
            return x + y
        else:
            return y


class SPP(nn.Module):
    def __init__(
        self,
        ch_in,
        ch_out,
        k,
        pool_size,
        act='swish',
    ):
        super(SPP, self).__init__()
        self.pool = []
        for i, size in enumerate(pool_size):
            pool = nn.MaxPool2d(kernel_size=size,
                                stride=1,
                                padding=size // 2,
                                ceil_mode=False)
            self.add_module('pool{}'.format(i), pool)
            self.pool.append(pool)
        self.conv = ConvBNAct(ch_in, ch_out, k, act=act)

    def forward(self, x):
        outs = [x]
        for pool in self.pool:
            outs.append(pool(x))
        y = torch.cat(outs, dim=1)
        y = self.conv(y)
        return y


class CSPStage(nn.Module):
    # Two 1x1 branches; the second goes through n blocks, and every intermediate
    # output is concatenated with the first branch before the final 1x1 conv
    def __init__(self,
                 ch_in,
                 ch_out,
                 n,
                 block_fn='BasicBlock_3x3_Reverse',
                 ch_hidden_ratio=1.0,
                 act='silu',
                 spp=False):
        super(CSPStage, self).__init__()

        split_ratio = 2
        ch_first = int(ch_out // split_ratio)
        ch_mid = int(ch_out - ch_first)
        self.conv1 = ConvBNAct(ch_in, ch_first, 1, act=act)
        self.conv2 = ConvBNAct(ch_in, ch_mid, 1, act=act)
        self.convs = nn.Sequential()

        next_ch_in = ch_mid
        for i in range(n):
            if block_fn == 'BasicBlock_3x3_Reverse':
                self.convs.add_module(
                    str(i),
                    BasicBlock_3x3_Reverse(next_ch_in,
                                           ch_hidden_ratio,
                                           ch_mid,
                                           act=act,
                                           shortcut=True))
            else:
                raise NotImplementedError
            if i == (n - 1) // 2 and spp:
                self.convs.add_module(
                    'spp', SPP(ch_mid * 4, ch_mid, 1, [5, 9, 13], act=act))
            next_ch_in = ch_mid
        self.conv3 = ConvBNAct(ch_mid * n + ch_first, ch_out, 1, act=act)

    def forward(self, x):
        y1 = self.conv1(x)
        y2 = self.conv2(x)

        mid_out = [y1]
        for conv in self.convs:
            y2 = conv(y2)
            mid_out.append(y2)
        y = torch.cat(mid_out, dim=1)
        y = self.conv3(y)
        return y
```
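RepConv trains with two parallel branches (3x3 conv + BN and 1x1 conv + BN), and `switch_to_deploy` folds them into a single 3x3 conv with a bias. The pure-Python sketch below checks that algebra on a toy single-channel input: `fuse_bn` mirrors `_fuse_bn_tensor` (kernel scaled by gamma / std, bias = beta - mean * gamma / std) and `pad_1x1` mirrors `_pad_1x1_to_3x3_tensor`. The weights and BN statistics are arbitrary made-up values, and the code deliberately avoids PyTorch, so it checks the math rather than the module itself.

```python
import math

def conv3x3(img, k, bias=0.0):
    # Single-channel 3x3 cross-correlation, stride 1, zero padding 1 (like nn.Conv2d)
    h, w = len(img), len(img[0])

    def px(r, c):
        return img[r][c] if 0 <= r < h and 0 <= c < w else 0.0

    return [[bias + sum(k[i][j] * px(r + i - 1, c + j - 1) for i in range(3) for j in range(3))
             for c in range(w)] for r in range(h)]

def batchnorm(x, gamma, beta, mean, var, eps=1e-5):
    # Inference-mode BN with fixed running statistics
    std = math.sqrt(var + eps)
    return [[gamma * (v - mean) / std + beta for v in row] for row in x]

def fuse_bn(kernel, gamma, beta, mean, var, eps=1e-5):
    # Same algebra as RepConv._fuse_bn_tensor
    t = gamma / math.sqrt(var + eps)
    return [[v * t for v in row] for row in kernel], beta - mean * t

def pad_1x1(w):
    # Same idea as _pad_1x1_to_3x3_tensor: the 1x1 weight becomes the centre tap
    return [[0.0, 0.0, 0.0], [0.0, w, 0.0], [0.0, 0.0, 0.0]]

# Arbitrary toy parameters; BN tuples are (gamma, beta, running_mean, running_var)
IMG = [[1.0, 2.0, 0.5], [0.0, -1.0, 3.0], [2.0, 1.0, -0.5]]
K3 = [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1], [0.2, 0.1, -0.3]]
BN3 = (1.2, 0.1, 0.3, 0.8)
W1 = 0.7
BN1 = (0.9, -0.2, -0.1, 1.5)

def train_forward(img):
    # Two branches summed before the activation (the identity branch is None here)
    dense = batchnorm(conv3x3(img, K3), *BN3)
    one = batchnorm([[W1 * v for v in row] for row in img], *BN1)
    return [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(dense, one)]

def deploy_forward(img):
    # One fused 3x3 conv with bias, as switch_to_deploy builds it
    k3, b3 = fuse_bn(K3, *BN3)
    k1, b1 = fuse_bn([[W1]], *BN1)
    kernel = [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(k3, pad_1x1(k1[0][0]))]
    return conv3x3(img, kernel, b3 + b1)

def max_abs_diff(a, b):
    return max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))

if __name__ == "__main__":
    print("max |train - deploy| =", max_abs_diff(train_forward(IMG), deploy_forward(IMG)))
```

The printed difference is at floating-point rounding level, which is why the deployed RepConv can stand in for its two training branches without changing the output before the activation.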

III. 3️⃣ Modify yolo.py

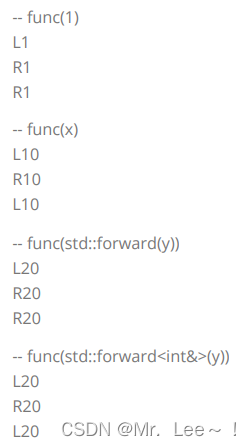

3.1 🎓 Add the CSPStage Module

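📌Open yolo.py in the models folder and, at the top of the file, import the CSPStage module from extra_modules.py:

```python
from models.extra_modules import CSPStage
```

📌Then find the parse_model function (the network parser) in yolo.py and add CSPStage in two places: the set of modules for which `c1, c2 = ch[f], args[0]` is computed (so the input channels are filled in and width_multiple is applied to the output channels), and the set for which `args.insert(2, n)` inserts the repeat count (so n is passed to CSPStage as its third argument).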
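To show why CSPStage has to appear in both places, here is a simplified, hypothetical re-implementation of the argument-building step of parse_model. It assumes the logic of YOLOv5 v6.x/v7.0; the set names `CHANNEL_MODULES` and `REPEAT_MODULES` and the function `build_args` are my own, not YOLOv5's. The first place turns `[512]` into `[c1, c2]`; the second inserts the repeat count so the call becomes `CSPStage(ch_in, ch_out, n)`, matching the constructor in extra_modules.py.

```python
import math

def make_divisible(x, divisor=8):
    # Round a channel count up to the nearest multiple of divisor
    return math.ceil(x / divisor) * divisor

# Hypothetical stand-ins for the two module sets edited in parse_model
CHANNEL_MODULES = {"Conv", "C3", "SPPF", "CSPStage"}  # place 1: args become [c1, c2, *rest]
REPEAT_MODULES = {"C3", "CSPStage"}                   # place 2: n is inserted as args[2]

def build_args(module, f, n, args, ch, gd, gw,
               channel_modules=CHANNEL_MODULES, repeat_modules=REPEAT_MODULES):
    """Return (constructor args, number of copies) for one YAML row."""
    n = max(round(n * gd), 1) if n > 1 else n  # depth_multiple
    if module in channel_modules:
        c1, c2 = ch[f], make_divisible(args[0] * gw)  # width_multiple
        args = [c1, c2, *args[1:]]
        if module in repeat_modules:
            args.insert(2, n)  # the block repeats internally...
            n = 1              # ...so it is instantiated only once
    return args, n

if __name__ == "__main__":
    # Layer 13 of the yaml: [-1, 3, CSPStage, [512]] after a Concat with 1024 output channels
    print(build_args("CSPStage", -1, 3, [512], ch=[1024], gd=1.0, gw=1.0))
    print(build_args("CSPStage", -1, 3, [512], ch=[512], gd=0.33, gw=0.5))
    print(build_args("CSPStage", -1, 3, [512], ch=[1024], gd=1.0, gw=1.0, repeat_modules={"C3"}))
```

The three calls return `([1024, 512, 3], 1)`, `([512, 256, 1], 1)` and `([1024, 512], 3)`. The last case is what happens if CSPStage is only added to the first set: YOLOv5 would then try to build `CSPStage(1024, 512)` three times inside an `nn.Sequential`, and the call fails because `n` is a required argument.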

IV. 4️⃣ Experimental Results

4.1 🎓 Results with yolov5s.pt

YOLOv5 baseline: F1 score 0.71, mAP@0.5 = 0.78.

With the Efficient RepGFPN module: F1 score 0.76, mAP@0.5 = 0.763.

4.2 ✨ Results with yolov5l.pt

YOLOv5 baseline: F1 score 0.80, mAP@0.5 = 0.795.

With the Efficient RepGFPN module: F1 score 0.82, mAP@0.5 = 0.833.
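For a quick sanity check of the gains quoted in this article, the snippet below computes the absolute change (in percentage points) and the relative change from the four pairs of numbers reported in 4.1 and 4.2. Only the reported values are used; the `deltas` helper is my own.

```python
# (baseline, with Efficient RepGFPN) values as reported in sections 4.1 and 4.2
RESULTS = {
    "yolov5s": {"mAP@0.5": (0.78, 0.763), "F1": (0.71, 0.76)},
    "yolov5l": {"mAP@0.5": (0.795, 0.833), "F1": (0.80, 0.82)},
}

def deltas(results):
    """Map (model, metric) -> (absolute change in points, relative change in %)."""
    out = {}
    for model, metrics in results.items():
        for metric, (base, new) in metrics.items():
            out[(model, metric)] = (round((new - base) * 100, 1),
                                    round((new - base) / base * 100, 1))
    return out

if __name__ == "__main__":
    for (model, metric), (pts, rel) in deltas(RESULTS).items():
        print(f"{model:8s} {metric:8s} {pts:+.1f} points ({rel:+.1f}%)")
```

So the yolov5l-size gain is 3.8 mAP@0.5 points (about 4.8% relative), while the yolov5s-size model loses 1.7 points even though its F1 score rises by 5 points.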

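4.3 ⭐️ Analysis of the Results

🚀The two comparisons show that, when training from yolov5s.pt, replacing the original PANet with Efficient RepGFPN actually lowered mAP@0.5 (0.78 → 0.763), even though the F1 score rose (0.71 → 0.76). With yolov5l.pt, by contrast, both mAP@0.5 and the F1 score increased clearly over the baseline (0.795 → 0.833 and 0.80 → 0.82). This may be related to Efficient RepGFPN's HeavyNeck design: DAMO-YOLO puts only a small share of the parameters and computation in the backbone and most of it in the neck (the feature-fusion part). When the network is deeper and wider, the neck can therefore capture more feature information, so it may handle features better than PANet. Discussion is welcome!!!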