1. Video demo link

2. Project: go-llama.cpp

Download and build:

git clone --recurse-submodules https://github.com/go-skynet/go-llama.cpp

cd go-llama.cpp

make libbinding.a

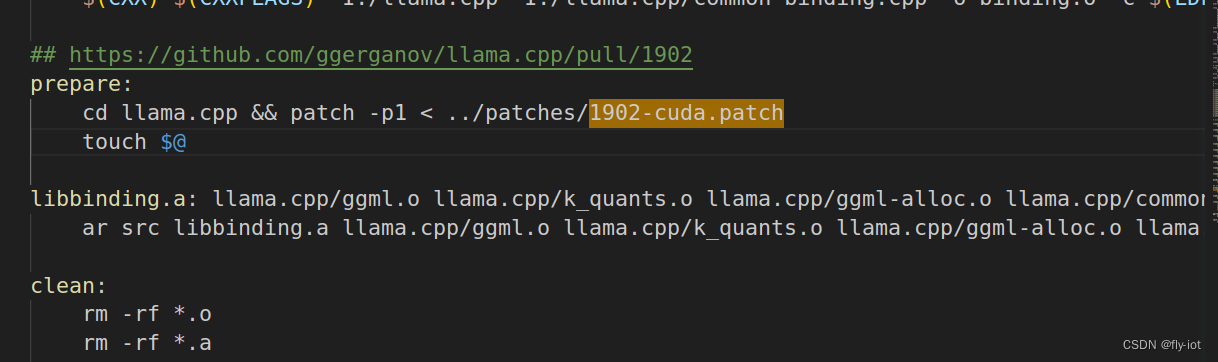

The project also applies a patch:

The build succeeds. It prints a few compiler warnings, but they are not a problem.

3. Next, run the llama-2-7b-chat model, then a Qwen1.5-0.5B chat model

LIBRARY_PATH=$PWD C_INCLUDE_PATH=$PWD go run ./examples -m "/data/home/test/hf_cache/llama-2-7b-chat.Q2_K.gguf" -t 14

LIBRARY_PATH=$PWD C_INCLUDE_PATH=$PWD go run ./examples -m "/data/home/test/hf_cache/qwen1_5-0_5b-chat-q6_k.gguf" -t 14

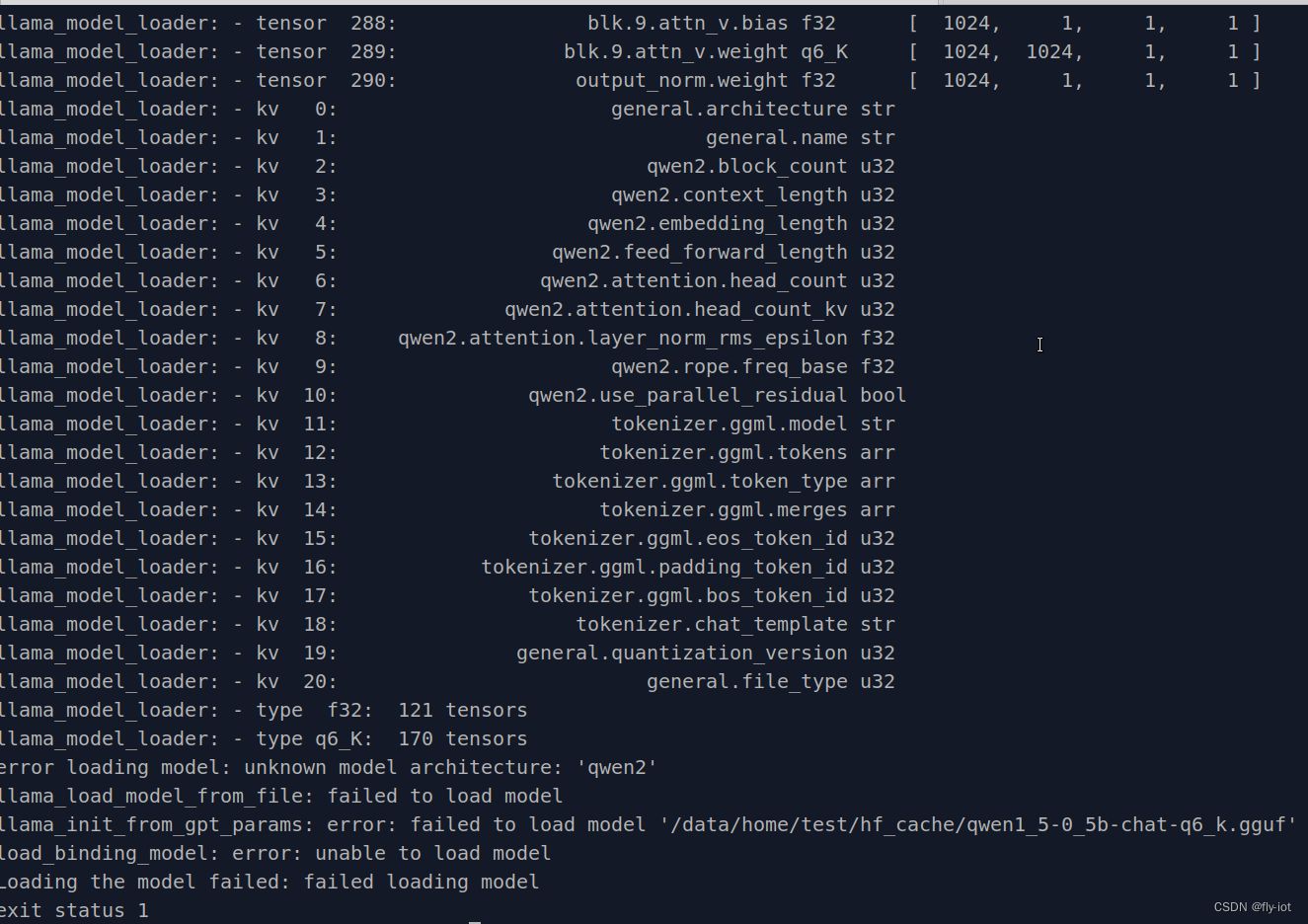

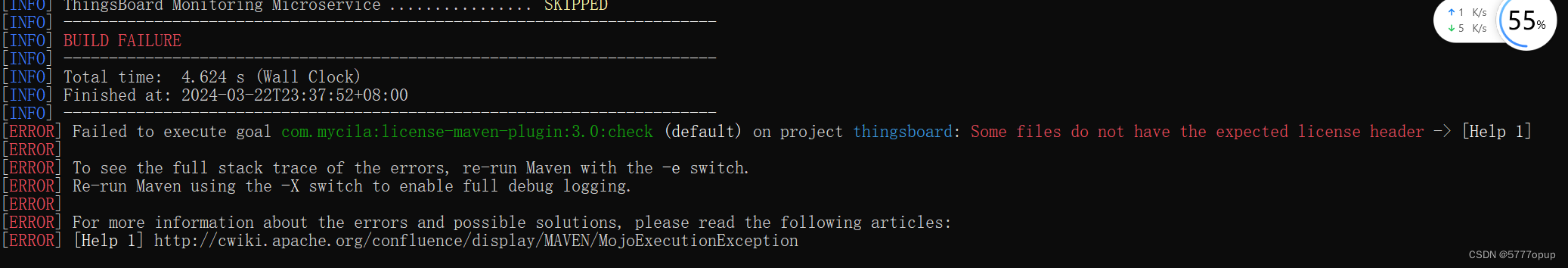

error loading model: unknown model architecture: 'qwen2'

llama_load_model_from_file: failed to load model

llama_init_from_gpt_params: error: failed to load model '/data/home/test/hf_cache/qwen1_5-0_5b-chat-q6_k.gguf'

load_binding_model: error: unable to load model

Loading the model failed: failed loading model