Paper link: https://arxiv.org/abs/2403.09040

GitHub link: neulab/ragged (Retrieval Augmented Generation Generalized Evaluation Dataset)

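RAG (retrieval-augmented generation) is really hot lately, and a group at CMU has just published a paper that more or less lays out how to design a RAG system. As a quick recap, RAG gives an LLM more relevant context so that it can do domain question answering. The paper's authors argue that getting RAG right depends heavily on configuration, so: what is the optimal RAG configuration? Let's start with some conclusions from the abstract: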

Through RAGGED, we uncover that different models suit substantially varied RAG setups. While encoder-decoder models monotonically improve with more documents, we find decoder-only models can only effectively use < 5 documents, despite often having a longer context window. RAGGED offers further insights into LMs’ context utilization habits, where we find that encoder-decoder models rely more on contexts and are thus more sensitive to retrieval quality, while decoder-only models tend to rely on knowledge memorized during training.

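The authors even made a figure for these insights, and I have to show it here because it is just too cute! One look tells you LLaMA models were involved, hahaha. Fun 🦙...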

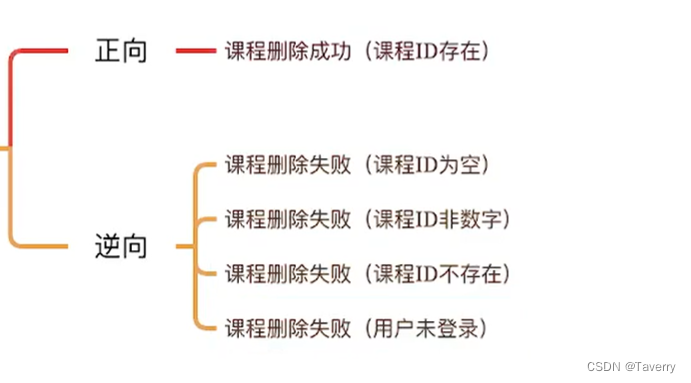

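Judging from these conclusions, the "RAG configuration" at stake when feeding retrieval results to a model comes down to: how many passages are fed (does more help?), whether they go to an encoder-decoder or a decoder-only model, how good the passages are (do irrelevant ones hurt?), and roughly how much text is appropriate to feed.

Reading the paper itself, the authors frame these configuration choices as several research questions.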

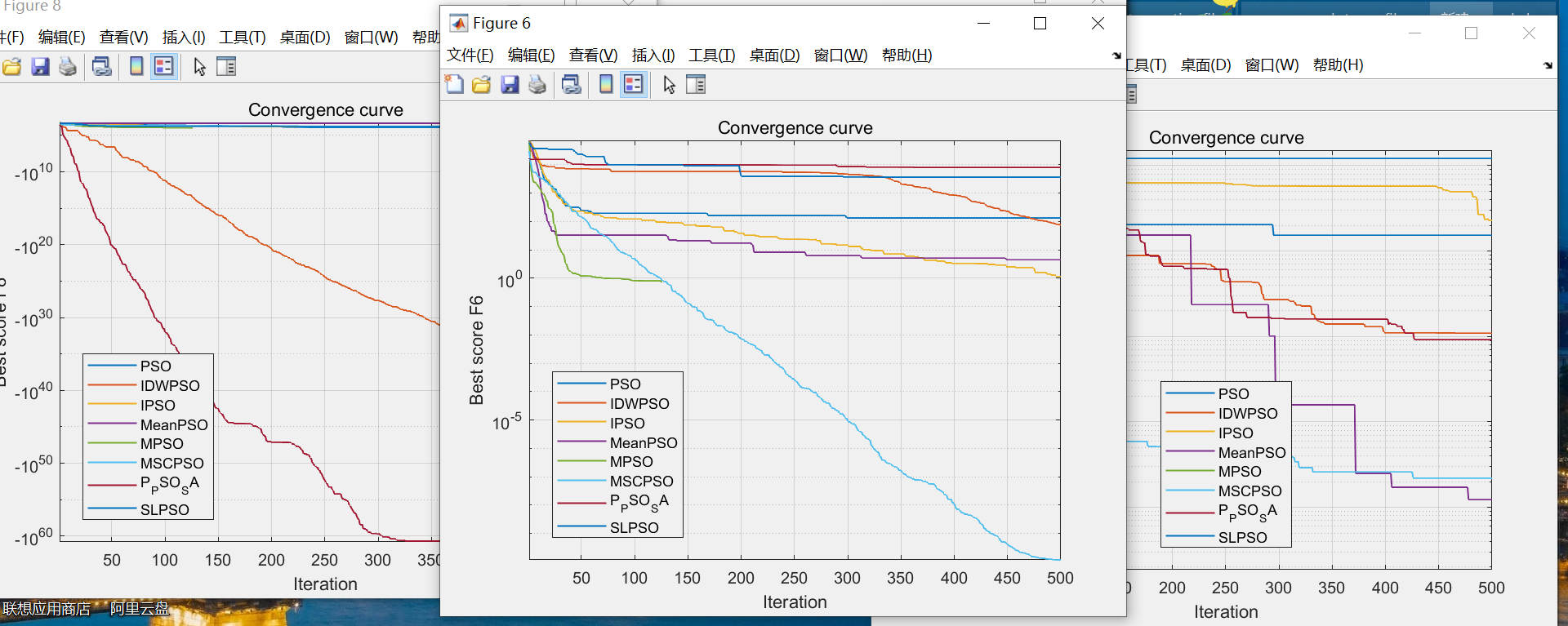

Question 1: How many contexts can readers benefit from?

Answer: We find that encoder-decoder models can effectively utilize up to 30 passages within their 2k-token limit, whereas decoder-only models’ performance declines beyond 5 passages, despite having twice the size of the context limit (4k).
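To make the "number of passages vs. context limit" trade-off concrete, here is a minimal sketch of assembling a reader's context from the top-k retrieved passages under a token budget. It is not the paper's code: `build_context`, the sample passages, and the whitespace-based token count are all assumptions for illustration (a real setup would use the reader model's own tokenizer).

```python
def build_context(passages, k, max_tokens):
    """Concatenate the top-k passages, stopping before the token budget is exceeded.

    `passages` is assumed to be sorted by retrieval rank, best first.
    """
    selected = []
    used = 0
    for passage in passages[:k]:
        # Assumption: whitespace tokens stand in for a real tokenizer.
        n_tokens = len(passage.split())
        if used + n_tokens > max_tokens:
            break
        selected.append(passage)
        used += n_tokens
    return "\n".join(selected), len(selected)


# Hypothetical ranked passages, used only to exercise the function.
ranked = ["alpha beta gamma", "delta epsilon", "zeta eta theta iota", "kappa"]
for k in (1, 2, 4):
    context, n_used = build_context(ranked, k, max_tokens=6)
    print(f"k={k}: {n_used} passages fit in the budget")
```

With a fixed budget, raising k beyond some point adds no passages at all, which is one reason the context limit and k have to be considered together.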

Question 2: How reliant are models on provided contexts?

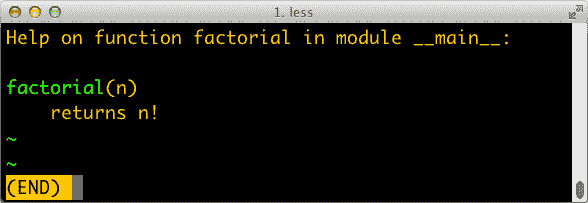

Answer: We find that decoder-only models, which memorize more during training, exhibit comparatively less reliance on additional, test-time contexts. In contrast, encoder-decoder models, which memorize less during training, are more faithful to the provided contexts. This suggests that providing passages for context-reliant encoder-decoder models is beneficial, whereas it is less so for memory-reliant decoder-only models.

To unpack this: decoder-only models such as LLaMA do not benefit much from context fed at test time; they lean on what they memorized, so roughly speaking the answer has to be given to them during training. Encoder-decoder models such as Flan, on the other hand, make better use of the provided context.

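Question 3: How does the retriever quality affect readers’ contextualization behavior?

Answer: Our analysis considers two aspects: a retriever’s ability to identify high-quality passages and a reader’s response to varying passage quality. While dense, neural retrievers perform better on open-domain questions, sparse, lexical retrievers readily achieve comparable accuracy on special domains, with much less computation. Neural retrievers’ advantage readily benefits encoder-decoder models, especially for single-hop questions. However, the benefits are much less pronounced for decoder-only models and multi-hop questions.

To unpack this: on open-domain data a dense retriever naturally works better, but on domain-specific data a lexical retriever is enough, reaching comparable accuracy at lower cost. In addition, for encoder-decoder models such as Flan, better retrieval translates into better answers on single-hop questions (single-fact cases that, as I understand it, need no reasoning; for example, a single knowledge-graph triple is enough to answer them). For decoder-only models or multi-hop questions, however, this advantage in retrieval quality does not noticeably improve the quality of the model's answers. I think this difference in sensitivity to retrieval quality between decoder-only and encoder-decoder models is related to the previous conclusion about whether feeding context helps at all.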

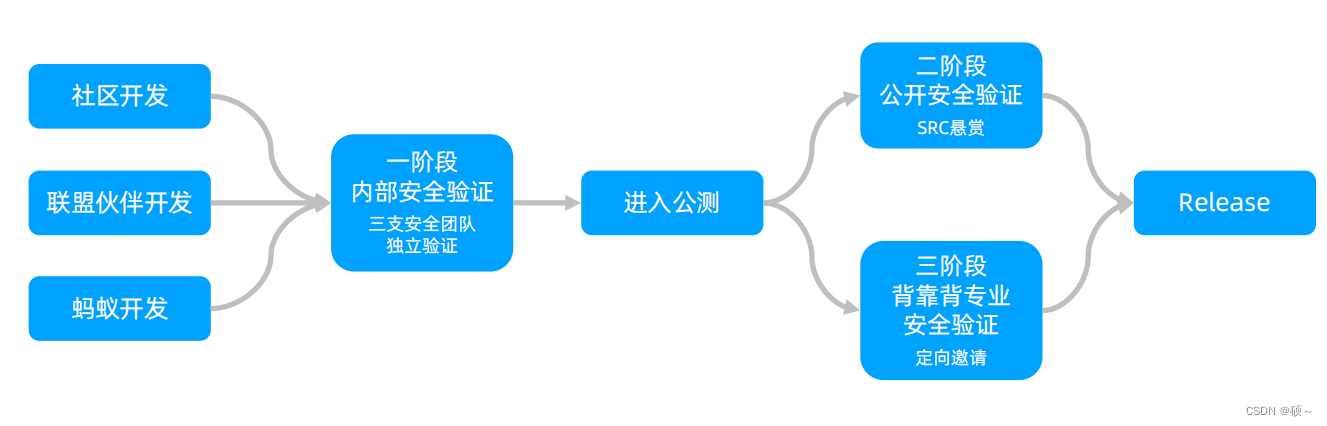

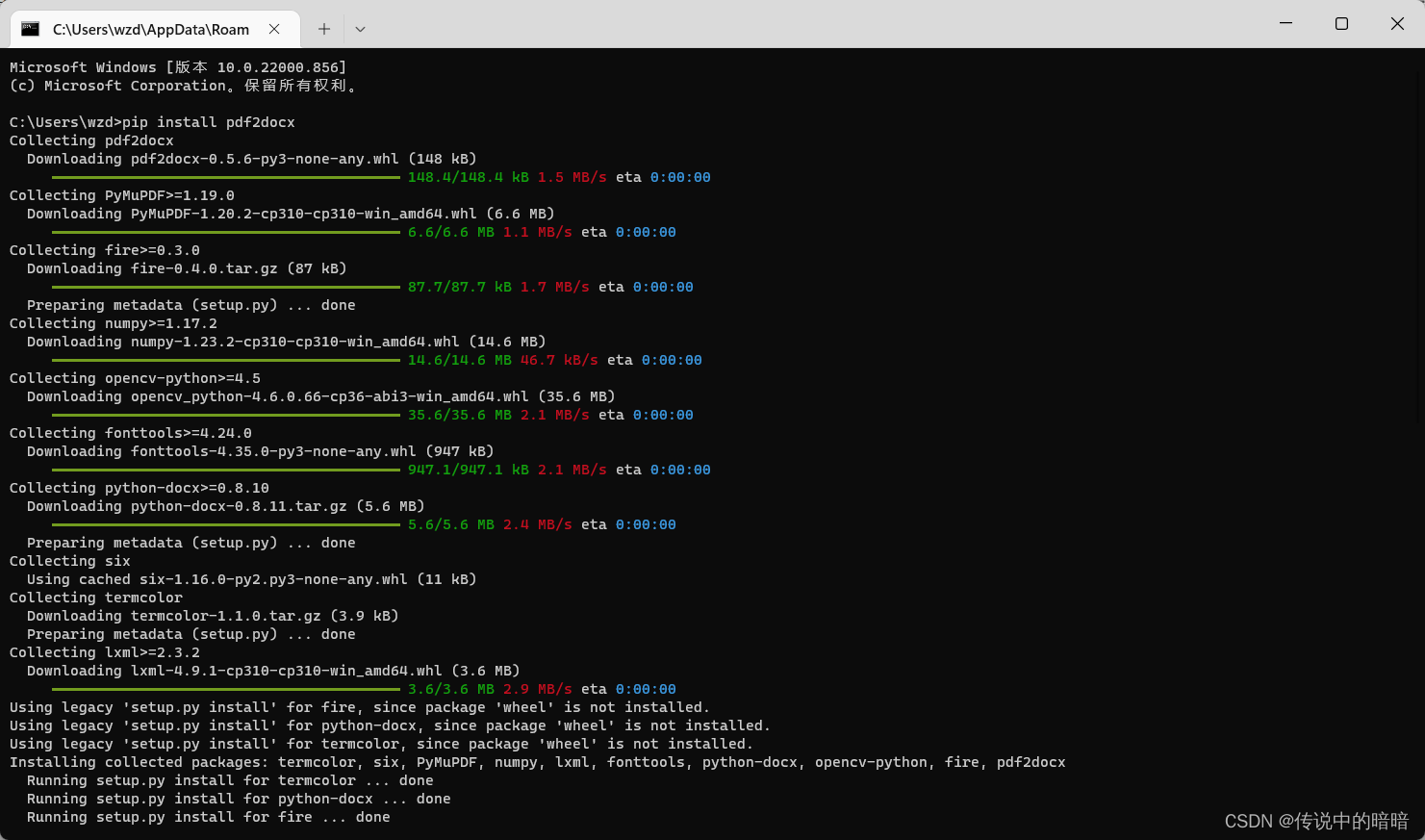

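With the conclusions covered, let's look at the experimental setup:

First, to test all this, the paper pairs two classic retrievers, one sparse and one dense (BM25 and ColBERT), with four strong LMs drawn from the FLAN and LLaMA families.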
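For readers unfamiliar with what a sparse, lexical retriever like BM25 computes, below is a small sketch of the standard Okapi BM25 scoring formula. It is a from-scratch illustration, not the implementation used in the paper; the function name `bm25_scores`, the toy documents, the whitespace tokenization, and the parameter values `k1=1.5` and `b=0.75` are assumptions chosen for the example.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score every document against the query with Okapi BM25."""
    # Assumption: lowercase whitespace tokenization instead of a real analyzer.
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(d) for d in tokenized) / n_docs

    # Document frequency: in how many documents each term appears.
    doc_freq = Counter()
    for d in tokenized:
        doc_freq.update(set(d))

    scores = []
    for d in tokenized:
        tf = Counter(d)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log((n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1)
            # Length normalization: longer-than-average documents are penalized.
            norm = tf[term] + k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


# Toy corpus, invented for this example.
docs = [
    "the llama is a south american camelid",
    "bm25 is a sparse lexical ranking function",
    "colbert is a dense neural retriever",
]
scores = bm25_scores("sparse lexical retriever", docs)
best = max(range(len(docs)), key=scores.__getitem__)
print(best, docs[best])
```

Everything here is term counting and arithmetic with no neural network involved, which is why lexical retrieval is so much cheaper than a dense retriever such as ColBERT.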

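The number of retrieved passages k was tested from 1 to 100 (that is a lot of sub-experiments!), and the most telling changes turned out to occur before k = 30. The top-k results were also sliced into two groups for testing: one where the retrieved passages include gold passages, and one where they do not.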
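The with-gold / without-gold slicing described above can be sketched as follows. This is only an illustration of the idea: the function `split_by_gold`, the field names `retrieved_ids` and `gold_ids`, and the sample records are hypothetical and are not taken from the paper's code or data format.

```python
def split_by_gold(examples, k):
    """Split examples by whether their top-k retrieved passages contain a gold passage."""
    with_gold, without_gold = [], []
    for ex in examples:
        top_k = ex["retrieved_ids"][:k]
        if any(pid in ex["gold_ids"] for pid in top_k):
            with_gold.append(ex)
        else:
            without_gold.append(ex)
    return with_gold, without_gold


# Hypothetical records: ranked passage ids plus the ids of the gold passages.
examples = [
    {"question": "q1", "retrieved_ids": ["p3", "p7", "p1"], "gold_ids": {"p1"}},
    {"question": "q2", "retrieved_ids": ["p9", "p4", "p2"], "gold_ids": {"p5"}},
]

for k in (2, 3):
    with_gold, without_gold = split_by_gold(examples, k)
    print(f"k={k}: {len(with_gold)} with gold, {len(without_gold)} without gold")
```

Note that the split depends on k: an example can move from the "without gold" slice to the "with gold" slice once k is large enough to reach its gold passage.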

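Datasets: three DBQA (document-based question answering) datasets, namely Natural Questions (open-domain, single-hop), HotpotQA (multi-hop, requiring at least two passages to answer), and BioASQ (domain-specific QA, including list and yes/no questions). For NQ and HotpotQA, the authors retrieve from Wikipedia (KILT benchmark). For BioASQ, they retrieve from the PubMed Annual Baseline Repository.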

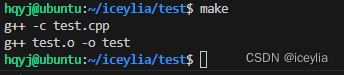

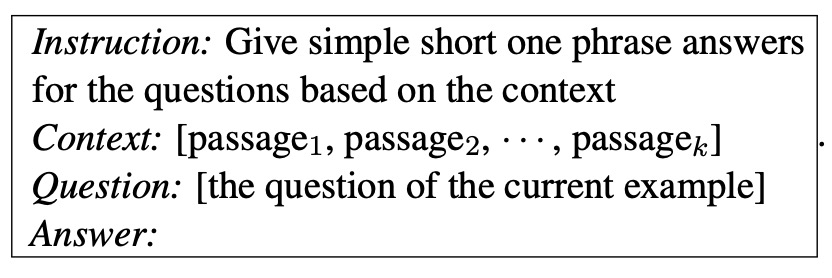

For all experiments, the prompt is written as follows:

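OK, having read the paper, my main takeaway is that LLaMA is not a good fit for being fed context in the hope that it will then answer correctly; it is better for it to have been told the answer during training.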