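AI OCR web integration system based on kotti_ai

AIOCR project goals:

Build a kotti_ai-based website on Kylin + RISC-V that provides an AI OCR text-recognition web service.

Phase-two goal: building on AIOCR, add access to large models such as ChatGPT and ERNIE Bot (文心一言), creating a comprehensive large-model application platform.

Features:

1 AI text recognition, i.e. OCR inference

2 Web integration, i.e. a web content management system

Technical implementation

OCR inference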

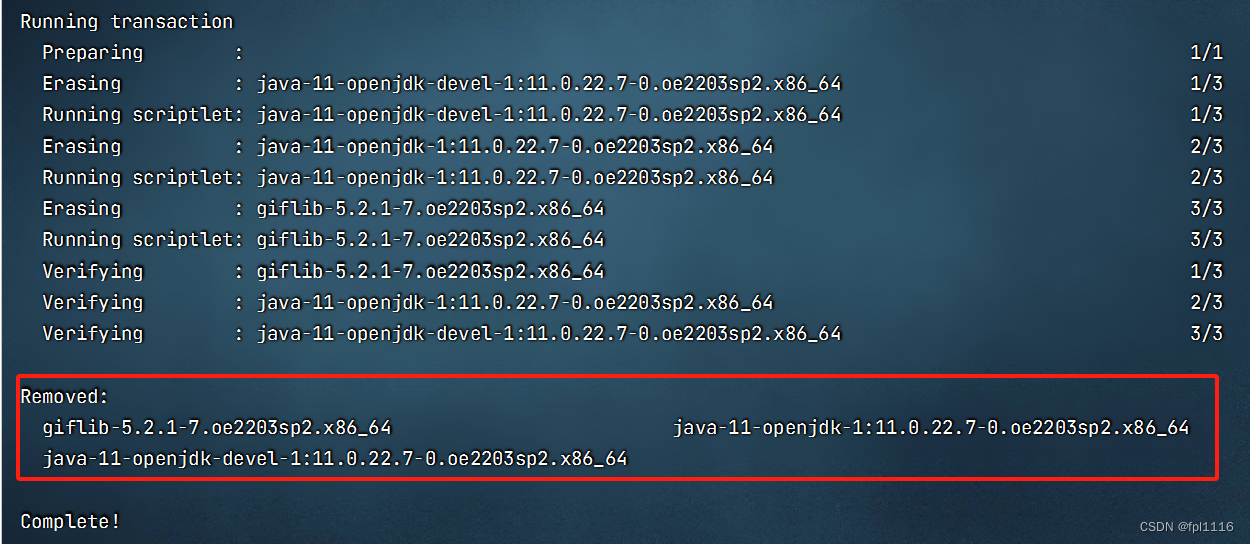

For installing the PaddlePaddle (飞桨) framework, see https://blog.csdn.net/skywalk8163/article/details/136610462

For OCR inference, see the CSDN blog post "飞桨AI应用@riscv OpenKylin".

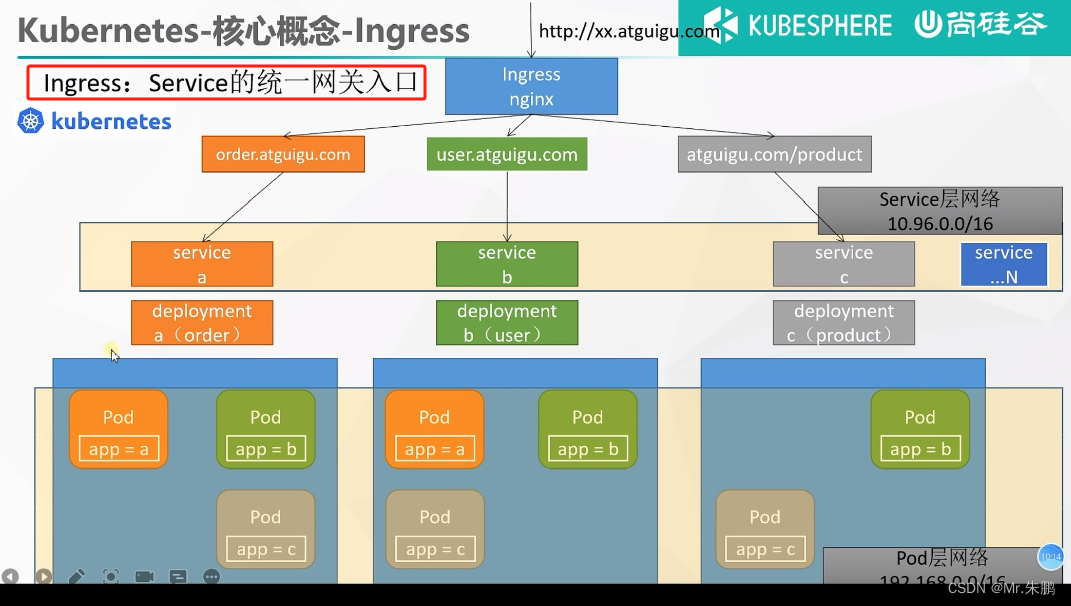

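Web framework

For getting the kotti_ai framework running, see the CSDN blog post "安装调试kotti_ai:AI+互联网企业级部署应用软件包@riscv+OpenKylin".

Adding OCR text recognition to kotti_ai is described below.

Implementation difficulties:

1 Compiling and installing the PaddlePaddle framework on the RISC-V platform raised many problems; fortunately, all of them were resolved one by one.

2 OCR inference: ONNX inference, which was expected to go smoothly, could not be made to work, so PaddlePaddle inference was used instead.

3 Installing kotti_ai: because of Kotti's own complexity, installation required repeated changes and fixes.

4 Connecting OCR to kotti_ai

Connecting OCR to kotti_ai

Getting OCR working on its own

Based on the infer.py source in Paddle2ONNX, rewrite the OCR part as functions that are easy to call, for example in aiocr.py, and test it.

The process is omitted here.

Adding OCR calls to kotti_ai

Writing and placing aiocr.py

Place aiocr.py, with the working OCR functions, in the kotti_ai/kotti_ai directory: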

import os
import sys
import cv2
import copy
import numpy as np

# import sys
# sys.path.insert(0, '.')
print("ok1")  # debug marker: shows that the module has started importing
from .utils import predict_rec
from .utils import predict_det
from .utils import predict_cls
#import .utils.predict_rec as predict_rec
#import .utils.predict_det as predict_det
#import .utils.predict_cls as predict_cls
import argparse


def str2bool(v):
    return v.lower() in ("true", "t", "1")


def init_args():
    parser = argparse.ArgumentParser()
    # params for text detector
    parser.add_argument("--image_path", type=str)
    parser.add_argument("--det_algorithm", type=str, default='DB')
    parser.add_argument("--det_model_dir", type=str)
    parser.add_argument("--det_limit_side_len", type=float, default=960)
    parser.add_argument("--det_limit_type", type=str, default='max')

    # DB params
    parser.add_argument("--det_db_thresh", type=float, default=0.3)
    parser.add_argument("--det_db_box_thresh", type=float, default=0.6)
    parser.add_argument("--det_db_unclip_ratio", type=float, default=1.5)
    parser.add_argument("--max_batch_size", type=int, default=10)
    parser.add_argument("--use_dilation", type=str2bool, default=False)
    parser.add_argument("--det_db_score_mode", type=str, default="fast")

    # params for text recognizer
    parser.add_argument("--rec_algorithm", type=str, default='CRNN')
    parser.add_argument("--rec_model_dir", type=str)
    parser.add_argument("--rec_image_shape", type=str, default="3, 32, 320")
    parser.add_argument("--rec_batch_num", type=int, default=6)
    parser.add_argument("--max_text_length", type=int, default=25)
    parser.add_argument(
        "--rec_char_dict_path",
        type=str,
        default="./utils/doc/ppocr_keys_v1.txt")
    parser.add_argument("--use_space_char", type=str2bool, default=True)
    parser.add_argument(
        "--vis_font_path", type=str, default="./utils/doc/fonts/simfang.ttf")
    parser.add_argument("--drop_score", type=float, default=0.5)

    # params for text classifier
    parser.add_argument("--use_angle_cls", type=str2bool, default=True)
    parser.add_argument("--cls_model_dir", type=str)
    parser.add_argument("--cls_image_shape", type=str, default="3, 48, 192")
    parser.add_argument("--label_list", type=list, default=['0', '180'])
    parser.add_argument("--cls_batch_num", type=int, default=6)
    parser.add_argument("--cls_thresh", type=float, default=0.9)

    parser.add_argument("--use_paddle_predict", type=str2bool, default=False)
    parser.add_argument("--seed", type=int, default=42)
    # Parse a fixed argument list instead of the command line
    return parser.parse_args(["--seed", "42"])


def preprocess_boxes(dt_boxes, ori_im, FLAGS=None):
    def get_rotate_crop_image(img, points):
        # Crop the quadrilateral text region and warp it to an upright rectangle
        assert len(points) == 4, "shape of points must be 4*2"
        img_crop_width = int(
            max(
                np.linalg.norm(points[0] - points[1]),
                np.linalg.norm(points[2] - points[3])))
        img_crop_height = int(
            max(
                np.linalg.norm(points[0] - points[3]),
                np.linalg.norm(points[1] - points[2])))
        pts_std = np.float32([[0, 0], [img_crop_width, 0],
                              [img_crop_width, img_crop_height],
                              [0, img_crop_height]])
        M = cv2.getPerspectiveTransform(points, pts_std)
        dst_img = cv2.warpPerspective(
            img,
            M, (img_crop_width, img_crop_height),
            borderMode=cv2.BORDER_REPLICATE,
            flags=cv2.INTER_CUBIC)
        dst_img_height, dst_img_width = dst_img.shape[0:2]
        # Tall, narrow crops are rotated so the text runs horizontally
        if dst_img_height * 1.0 / dst_img_width >= 1.5:
            dst_img = np.rot90(dst_img)
        return dst_img

    def sorted_boxes(dt_boxes):
        # Order boxes top to bottom, then left to right within a row
        num_boxes = dt_boxes.shape[0]
        sorted_boxes = sorted(dt_boxes, key=lambda x: (x[0][1], x[0][0]))
        _boxes = list(sorted_boxes)

        for i in range(num_boxes - 1):
            if abs(_boxes[i + 1][0][1] - _boxes[i][0][1]) < 10 and \
                    (_boxes[i + 1][0][0] < _boxes[i][0][0]):
                tmp = _boxes[i]
                _boxes[i] = _boxes[i + 1]
                _boxes[i + 1] = tmp
        return _boxes

    img_crop_list = []
    dt_boxes = sorted_boxes(dt_boxes)
    for bno in range(len(dt_boxes)):
        tmp_box = copy.deepcopy(dt_boxes[bno])
        img_crop = get_rotate_crop_image(ori_im, tmp_box)
        img_crop_list.append(img_crop)
    return dt_boxes, img_crop_list


def postprocess(dt_boxes, rec_res, FLAGS=None):
    # Keep only results whose score reaches FLAGS.drop_score
    filter_boxes, filter_rec_res = [], []
    for box, rec_result in zip(dt_boxes, rec_res):
        text, score = rec_result
        if score >= FLAGS.drop_score:
            filter_boxes.append(box)
            filter_rec_res.append(rec_result)
    return filter_boxes, filter_rec_res


def ai_ocr(FLAGS=None, img=None):
    # local test
    img = cv2.imread(FLAGS.image_path)
    # if img == None:
    #     return "None"
    # img = base64.b64encode(img)
    ori_im = img.copy()

    # text detect
    text_detector = predict_det.TextDetector(FLAGS)
    dt_boxes = text_detector(img)
    dt_boxes, img_crop_list = preprocess_boxes(dt_boxes, ori_im, FLAGS=FLAGS)

    # text classifier
    if FLAGS.use_angle_cls:
        text_classifier = predict_cls.TextClassifier(FLAGS)
        img_crop_list, angle_list = text_classifier(img_crop_list)

    # text recognize
    text_recognizer = predict_rec.TextRecognizer(FLAGS)
    rec_res = text_recognizer(img_crop_list)
    _, filter_rec_res = postprocess(dt_boxes, rec_res, FLAGS=FLAGS)

    ocrtext = " "
    for text, score in filter_rec_res:
        ocrtext += text
        print("{}, {:.3f}".format(text, score))
    print("Finish!")
    print(ocrtext)
    return ocrtext


if __name__ == "__main__":
    FLAGS = init_args()
    FLAGS.cls_model_dir="./inference/ch_ppocr_mobile_v2.0_cls_infer"
    FLAGS.rec_model_dir="./inference/ch_PP-OCRv2_rec_infer"
    FLAGS.det_model_dir="./inference/ch_PP-OCRv2_det_infer"
    FLAGS.image_path="./images/wahaha.jpg"
    # FLAGS.image_path="./images/lite_demo.png"
    FLAGS.use_paddle_predict="True"
    ai_ocr(FLAGS=FLAGS)
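The reading-order sort inside preprocess_boxes is easy to try on its own. The following is a minimal sketch, not the project's code: it uses plain Python lists instead of NumPy arrays, and the name sort_reading_order and the row_tolerance parameter are made up for illustration (the value 10 mirrors the threshold in sorted_boxes).

```python
def sort_reading_order(boxes, row_tolerance=10):
    """Sort quadrilateral boxes top to bottom, then left to right.

    Each box is a list of four (x, y) points whose first point is the
    top-left corner, as in the detector output used by aiocr.py.
    """
    # First pass: order by the y, then the x, of the top-left corner.
    ordered = sorted(boxes, key=lambda b: (b[0][1], b[0][0]))
    # Second pass: neighbours whose tops differ by less than row_tolerance
    # pixels are treated as one text row and swapped into left-to-right order.
    for i in range(len(ordered) - 1):
        same_row = abs(ordered[i + 1][0][1] - ordered[i][0][1]) < row_tolerance
        if same_row and ordered[i + 1][0][0] < ordered[i][0][0]:
            ordered[i], ordered[i + 1] = ordered[i + 1], ordered[i]
    return ordered


# Three hypothetical boxes: a and b share a row, c lies on the next row.
a = [(100, 12), (180, 12), (180, 40), (100, 40)]
b = [(10, 15), (90, 15), (90, 43), (10, 43)]
c = [(10, 60), (90, 60), (90, 88), (10, 88)]
result = sort_reading_order([a, c, b])
```

Box a starts slightly higher than b, so a pure sort by y would read it first; the second pass moves b, which lies further left on the same row, in front of it.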

Installing the new kotti_ai package

Go to the kotti_ai root directory and run python setup.py develop to install it.

The develop option installs the package in development mode: changes to the source code take effect immediately, with no need to reinstall.

Testing

Start python and run from kotti_ai.aiocr import ai_ocr. If the import succeeds, aiocr.py is written correctly.

The final test code is:

from kotti_ai.aiocr import ai_ocr
from kotti_ai.aiocr import init_args

FLAGS = init_args()
FLAGS.cls_model_dir="./inference/ch_ppocr_mobile_v2.0_cls_infer"
FLAGS.rec_model_dir="./inference/ch_PP-OCRv2_rec_infer"
FLAGS.det_model_dir="./inference/ch_PP-OCRv2_det_infer"
FLAGS.image_path="./images/lite_demo.png"
FLAGS.use_paddle_predict="True"
ai_ocr(FLAGS=FLAGS)

Adding the aiocr web handling to kotti_ai

In aiocr.py, change the ai_ocr function to:

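def ai_ocr(FLAGS=None, img=None):
    # local test: fall back to the image on disk when no image is passed in
    # (this comparison is what triggers the ValueError covered in the debugging section)
    if img == None:
        # return "None"
        img = cv2.imread(FLAGS.image_path)
    # img = base64.b64encode(img)

Changes to edit.py: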

# out = ppshitu(im=im)
from kotti_ai.aiocr import ai_ocr
from kotti_ai.aiocr import init_args

FLAGS = init_args()
FLAGS.cls_model_dir="./inference/ch_ppocr_mobile_v2.0_cls_infer"
FLAGS.rec_model_dir="./inference/ch_PP-OCRv2_rec_infer"
FLAGS.det_model_dir="./inference/ch_PP-OCRv2_det_infer"
FLAGS.image_path="./images/lite_demo.png"
FLAGS.use_paddle_predict="True"
out = ai_ocr(FLAGS=FLAGS, img=im)
print("====out:", out)
context.out = str(out)
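Note that init_args ends with parser.parse_args(["--seed", "42"]) rather than a bare parser.parse_args(). The source does not say why, but one plausible reason is that edit.py calls init_args() inside the web server process, where sys.argv holds the server's own arguments. The sketch below, with the hypothetical name init_args_sketch and only three of the options, shows that an explicit list keeps argparse away from sys.argv:

```python
import argparse
import sys


def init_args_sketch(argv=None):
    """Build a small parser and parse an explicit list, never sys.argv."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--image_path", type=str)
    parser.add_argument("--drop_score", type=float, default=0.5)
    parser.add_argument("--seed", type=int, default=42)
    # Passing a list makes argparse ignore the process command line.
    return parser.parse_args([] if argv is None else argv)


# Simulate running inside the server process (assumed command line).
sys.argv = ["pserve", "development.ini"]
flags = init_args_sketch()
print(flags.seed, flags.drop_score, flags.image_path)
```

With a bare parse_args(), the same simulated command line would make argparse exit with an "unrecognized arguments" error, because development.ini is not an option the parser knows.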

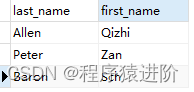

Starting kotti_ai

pserve development.ini

The service listens on port 5000.

Log in with user name admin and password qwerty.
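Before opening the browser, a quick check that something is listening on the port can save a round of guessing. This is a small sketch using only the standard library; the helper name port_is_open and the host 127.0.0.1 are assumptions, and only the port number 5000 comes from the setup above.

```python
import socket


def port_is_open(host, port, timeout=1.0):
    """Return True if a TCP connection to (host, port) succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timeout, unreachable host, and so on.
        return False


if __name__ == "__main__":
    # Assumes pserve runs on the local machine.
    print("kotti_ai reachable:", port_is_open("127.0.0.1", 5000))
```

A True result only shows that the port accepts connections; it does not confirm that the Kotti site itself renders correctly.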

Uploading an image to test text recognition produced several errors, which were fixed one by one.

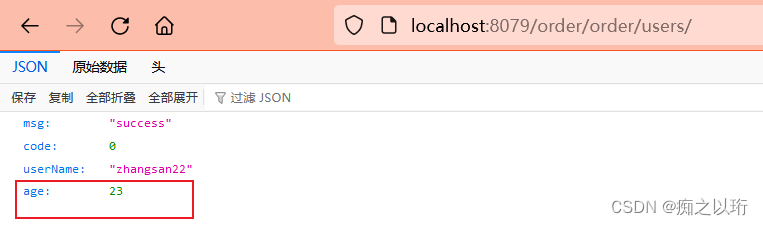

The project runs successfully

After some intense debugging, the project finally runs!

Debugging

Error: AttributeError: 'str' object has no attribute 'decode'

File "/usr/lib/python3.8/site-packages/Kotti-2.0.9-py3.8.egg/kotti/security.py", line 103, in __init__

password = get_principals().hash_password(password)

File "/usr/lib/python3.8/site-packages/Kotti-2.0.9-py3.8.egg/kotti/security.py", line 564, in hash_password

return bcrypt.hashpw(password, salt).decode("utf-8")

AttributeError: 'str' object has no attribute 'decode'

Editing the code fixes it:

sudo vi /usr/lib/python3.8/site-packages/Kotti-2.0.9-py3.8.egg/kotti/security.py

return bcrypt.hashpw(password, salt)
# return bcrypt.hashpw(password, salt).decode("utf-8")
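The traceback shows that hashpw returned a str in this environment, while the Kotti code expects bytes. Removing .decode works here but would break again on an installation where hashpw returns bytes. A more tolerant variant is sketched below; to_text is a hypothetical helper, not part of Kotti or bcrypt.

```python
def to_text(value, encoding="utf-8"):
    """Return value as str, decoding only when it is bytes."""
    if isinstance(value, bytes):
        return value.decode(encoding)
    return value


# Both possible return types end up as the same str.
print(to_text(b"$2b$12$example"))
print(to_text("$2b$12$example"))
```

With such a helper, the line in security.py could read return to_text(bcrypt.hashpw(password, salt)), which behaves the same with either return type.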

Error when adding an image

ValueError: The truth value of an array with more than one element is ambiguous. Use a.any() or a.all()

- Expression: "link(context, request)"

- Filename: ... /Kotti-2.0.9-py3.8.egg/kotti/templates/edit/el-parent.pt

- Location: (line 10: col 8)

- Source: ${link(context, request)}

^^^^^^^^^^^^^^^^^^^^^^

- Expression: "link(context, request)"

- Filename: ... ages/Kotti-2.0.9-py3.8.egg/kotti/templates/editor-bar.pt

- Location: (line 24: col 14)

- Source: ${link(context, request)}

^^^^^^^^^^^^^^^^^^^^^^

- Expression: "api.render_template('kotti:templates/editor-bar.pt')"

- Filename: ... ges/Kotti-2.0.9-py3.8.egg/kotti/templates/view/master.pt

- Location: (line 37: col 24)

- Source: ... ce="api.render_template('kotti:templates/editor-bar.pt')"></ ...

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

- Expression: "api.macro('kotti:templates/view/master.pt')"

- Filename: /home/skywalk/kotti_ai/kotti_ai/templates/image.pt

- Location: (line 3: col 23)

- Source: ... :use-macro="api.macro('kotti:templates/view/master.pt')">

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

- Arguments: view: <NoneType ('None',) at 0x3cfc48>

renderer_name: kotti:templates/edit/el-parent.pt

renderer_info: <RendererHelper ('None',) at 0x3e13e06400>

context: <AImage ('test',) at 0x3e13fd3580>

request: <Request ('None',) at 0x3e2005a790>

req: <Request ('None',) at 0x3e2005a790>

get_csrf_token: <partial ('None',) at 0x3e13f02310>

api: <TemplateAPI ('None',) at 0x3e13fd30d0>

link: <LinkRenderer ('None',) at 0x3f8848fc40>

target_language: <NoneType ('None',) at 0x3cfc48>

repeat: <RepeatDict ('None',) at 0x3e13fc1c70>

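The first guess was that the return value had not been converted to str. It turned out that img is a list, so the check in aiocr.py was changed to img == []. The ValueError itself comes from the if img == None: test: on a NumPy array, == compares element by element, and the resulting array has no single truth value. Testing with img is None avoids the problem regardless of what type img has.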
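The identity check can be sketched as follows. The names resolve_image and load_fallback are hypothetical; in aiocr.py the fallback would be cv2.imread(FLAGS.image_path), replaced here by a stand-in so that the example runs without OpenCV.

```python
def resolve_image(img, load_fallback):
    """Return img unless it is None, in which case load the fallback image."""
    # "is None" compares identity, so it never triggers element-wise
    # comparison on arrays or lists.
    if img is None:
        return load_fallback()
    return img


def fake_imread():
    # Stand-in for cv2.imread(FLAGS.image_path): a 2x2 "image" as nested lists.
    return [[0, 0], [0, 0]]


uploaded = [[255, 255], [255, 255]]
print(resolve_image(uploaded, fake_imread))  # the uploaded image is kept
print(resolve_image(None, fake_imread))      # the fallback is loaded
```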

Error: model files not found

Change the model paths in the code to paths relative to the framework root, i.e.:

```
FLAGS = init_args()
FLAGS.cls_model_dir="./kotti_ai/inference/ch_ppocr_mobile_v2.0_cls_infer"
FLAGS.rec_model_dir="./kotti_ai/inference/ch_PP-OCRv2_rec_infer"
FLAGS.det_model_dir="./kotti_ai/inference/ch_PP-OCRv2_det_infer"
FLAGS.image_path="./kotti_ai/images/lite_demo.png"
FLAGS.use_paddle_predict="True"
```
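Paths starting with ./ depend on the directory from which pserve is started. An alternative, sketched here under the assumption that the inference and utils directories sit next to aiocr.py, is to build the paths from the package directory. The function name model_paths is made up for illustration.

```python
from pathlib import Path


def model_paths(package_dir):
    """Build model and dictionary paths below the given package directory."""
    base = Path(package_dir)
    return {
        "cls_model_dir": str(base / "inference" / "ch_ppocr_mobile_v2.0_cls_infer"),
        "rec_model_dir": str(base / "inference" / "ch_PP-OCRv2_rec_infer"),
        "det_model_dir": str(base / "inference" / "ch_PP-OCRv2_det_infer"),
        "rec_char_dict_path": str(base / "utils" / "doc" / "ppocr_keys_v1.txt"),
    }


# Inside aiocr.py the package directory could be obtained with
# Path(__file__).resolve().parent; a literal path is used here for the demo.
paths = model_paths("/home/skywalk/kotti_ai/kotti_ai")
for name, value in paths.items():
    print(name, "=", value)
```

Each entry can then be assigned to the matching FLAGS attribute, and the result no longer depends on the current working directory.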

Error: the rec_char_dict_path txt file is not found

In the code, change it to a path relative to the framework root:

parser.add_argument(
    "--rec_char_dict_path",
    type=str,
    default="./kotti_ai/utils/doc/ppocr_keys_v1.txt")

Error: __iterator = get('eval', eval)(_lookup_attr(getname('context'), 'out'))

File "/usr/local/lib/python3.8/dist-packages/chameleon/template.py", line 200, in render

self._render(

File "/tmp/tmpv4xn_ul5/image_bbceef85f35e624757730558bb775d8e.py", line 375, in render

__m(__stream, econtext.copy(), rcontext, __i18n_domain, __i18n_context, target_language)

File "/tmp/tmp5dtqmvp7/master_21123e986705ec1c4189aca762f798cd.py", line 773, in render_main

__slot_content(__stream, econtext.copy(), rcontext)

File "/tmp/tmpv4xn_ul5/image_bbceef85f35e624757730558bb775d8e.py", line 248, in __fill_content

__iterator = get('eval', eval)(_lookup_attr(getname('context'), 'out'))

File "<string>", line None

SyntaxError: <no detail available>

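The trace output shows that the text had already been recognized correctly. The error comes from the template file: /home/skywalk/kotti_ai/kotti_ai/templates/image.pt

Edit that file, remove the original loop that drew a table, and display the result directly: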

<p><h2>OCR result:</h2>${context.out}</p>
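The eval call in the traceback suggests (this is an assumption, since the old template is not shown) that the template evaluated context.out as a Python expression in order to loop over it. Plain OCR text is not valid Python, hence the SyntaxError. If a view ever needs to accept both a literal list and plain text, a tolerant parser could look like this sketch; parse_out is a hypothetical helper.

```python
import ast


def parse_out(text):
    """Return the Python literal encoded in text, or text itself if it is not one."""
    try:
        # literal_eval only accepts literals (lists, tuples, numbers, strings...).
        return ast.literal_eval(text)
    except (ValueError, SyntaxError):
        # Ordinary OCR text lands here and is passed through unchanged.
        return text


print(parse_out("[('label', 0.9)]"))
print(parse_out("OCR result text"))
```

For the OCR page, showing the string directly as in the template line above is the simpler choice.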

The website is finally up and running!