channel

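Channels and goroutines are the lifeblood of Go. This post gives a brief introduction to how Go's chan works. The source is not easy to read and is best understood together with the GMP scheduling model; that part will be added later.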

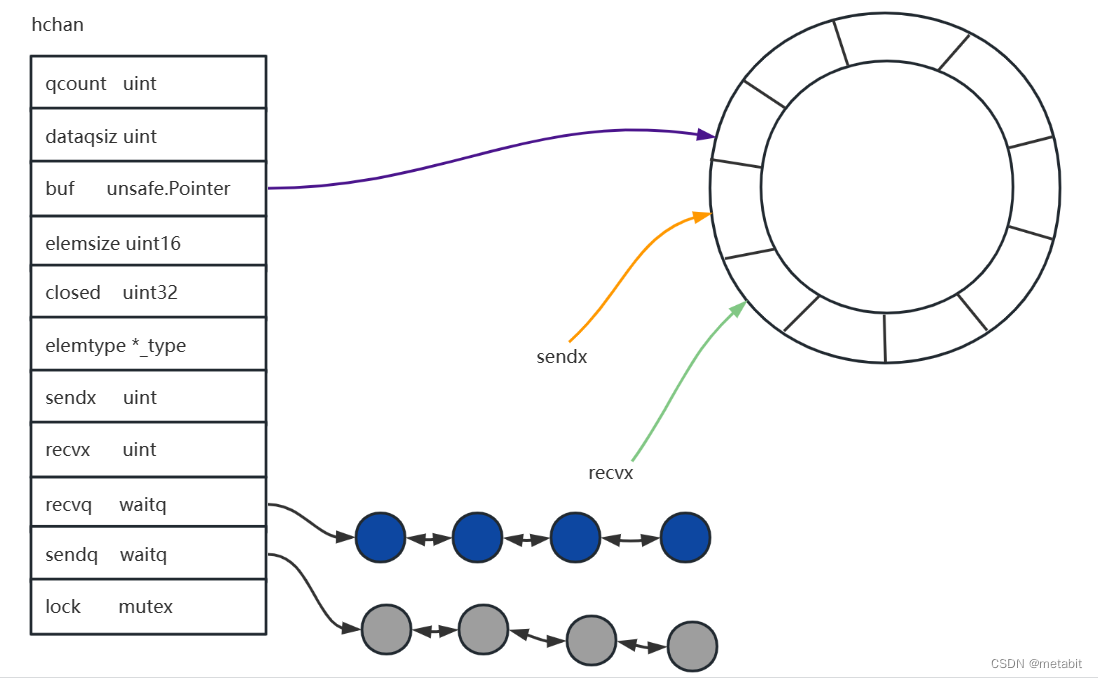

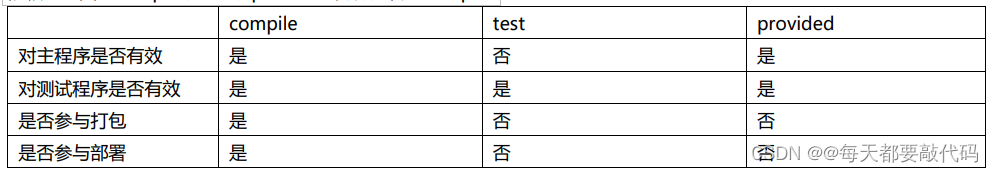

As the figures above show, a chan is backed by an hchan struct whose buf field points to a circular array buffer. An unbuffered channel has no such buffer.

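When the channel is unbuffered, sends and receives are managed only through the two doubly linked lists recvq and sendq. Every operation works at the head of these queues, which guarantees first-in, first-out order. For example, when a sender meets a waiting receiver, the g at the head of the opposite queue is dequeued and the sender's value is copied straight to the receiver, which completes one send/receive on the channel.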
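
The rendezvous described above can be observed from user code. The following is a minimal sketch, not runtime code; the helper name `rendezvous` is made up for illustration. The sender and the receiver meet on an unbuffered channel, and whichever arrives first waits in a queue until the other shows up.

```go
package main

import "fmt"

// rendezvous (hypothetical helper) sends v on an unbuffered channel from a
// second goroutine and returns the value the current goroutine receives.
func rendezvous(v int) int {
	ch := make(chan int) // unbuffered: there is no ring buffer behind it
	go func() {
		ch <- v // waits until a receiver is ready
	}()
	return <-ch // waits until a sender is ready
}

func main() {
	fmt.Println(rendezvous(42))
}
```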

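When the channel is buffered, recvx and sendx point to the next slot to read and the next slot to write in the circular array, and qcount tracks how many elements the buffer currently holds. When qcount equals dataqsiz, a goroutine that tries to send is appended to the tail of the sendq doubly linked list and waits to send. Likewise, when qcount equals 0, a goroutine that tries to receive is appended to the tail of the recvq doubly linked list and waits to receive. This keeps first-in, first-out order. If the buffer has room (for a send) or holds a value (for a receive), the value is written or taken directly.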
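
The buffered behaviour can be checked with the built-in `len` and `cap`, which report qcount and dataqsiz. This is a small sketch under the assumption that only one goroutine touches the channel; `trySend` and `fillAndDrain` are illustrative names, not part of the runtime.

```go
package main

import "fmt"

// trySend (hypothetical helper) attempts a non-blocking send and reports
// whether the value was accepted.
func trySend(ch chan int, v int) bool {
	select {
	case ch <- v:
		return true
	default:
		return false
	}
}

// fillAndDrain fills a buffered channel to capacity, tries one extra
// non-blocking send, then drains the buffer and returns the values in the
// order they came out.
func fillAndDrain(capacity int) (sentExtra bool, got []int) {
	ch := make(chan int, capacity)
	for i := 0; i < capacity; i++ {
		ch <- i // never blocks here: the buffer still has room
	}
	sentExtra = trySend(ch, -1) // the buffer is full, so this is refused
	for len(ch) > 0 {
		got = append(got, <-ch)
	}
	return sentExtra, got
}

func main() {
	extra, got := fillAndDrain(3)
	fmt.Println(extra, got)
}
```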

const (

maxAlign = 8 // maximum alignment

hchanSize = unsafe.Sizeof(hchan{}) + uintptr(-int(unsafe.Sizeof(hchan{}))&(maxAlign-1)) // size of hchan, rounded up to a multiple of maxAlign

debugChan = false // debug flag

)
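
The hchanSize expression is a round-up-to-alignment trick: `-n & (maxAlign-1)` is the padding needed to reach the next multiple of maxAlign. A minimal sketch, with `roundUp` as an illustrative name and arbitrary sample sizes rather than the real size of hchan:

```go
package main

import "fmt"

const maxAlign = 8

// roundUp (hypothetical helper) uses the same expression as hchanSize:
// it adds the padding needed to reach the next multiple of maxAlign.
func roundUp(n uintptr) uintptr {
	return n + uintptr(-int(n)&(maxAlign-1))
}

func main() {
	for _, n := range []uintptr{1, 8, 90, 96} {
		fmt.Println(n, "->", roundUp(n))
	}
}
```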

hchan: the underlying structure of chan

type hchan struct {

qcount uint // total data in the queue (number of elements currently in the circular buffer)

dataqsiz uint // size of the circular queue (capacity of the circular buffer)

buf unsafe.Pointer // points to an array of dataqsiz elements (the circular buffer)

elemsize uint16 // size of one element

closed uint32 // whether the channel is closed; 0 means open

elemtype *_type // element type

sendx uint // send index (next slot to write in the circular buffer)

recvx uint // receive index (next slot to read in the circular buffer)

recvq waitq // list of recv waiters (goroutines blocked on receive)

sendq waitq // list of send waiters (goroutines blocked on send)

// lock protects all fields in hchan, as well as several

// fields in sudogs blocked on this channel.

//

// Do not change another G's status while holding this lock

// (in particular, do not ready a G), as this can deadlock

// with stack shrinking.

lock mutex // lock protecting the fields above

}
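
To make the roles of qcount, sendx and recvx concrete, here is a simplified single-goroutine model of the ring buffer. It is a sketch only: the type `ring` and its methods are invented for illustration, it stores ints instead of raw memory, and it has no lock and no wait queues.

```go
package main

import "fmt"

// ring is a toy model of the buffer part of hchan.
type ring struct {
	buf    []int // plays the role of buf; len(buf) plays dataqsiz
	qcount int   // elements currently stored
	sendx  int   // next slot to write
	recvx  int   // next slot to read
}

func newRing(size int) *ring { return &ring{buf: make([]int, size)} }

// push stores v at sendx and reports false when the buffer is full.
func (r *ring) push(v int) bool {
	if r.qcount == len(r.buf) {
		return false
	}
	r.buf[r.sendx] = v
	r.sendx++
	if r.sendx == len(r.buf) {
		r.sendx = 0 // wrap around to form the ring
	}
	r.qcount++
	return true
}

// pop takes the value at recvx and reports false when the buffer is empty.
func (r *ring) pop() (int, bool) {
	if r.qcount == 0 {
		return 0, false
	}
	v := r.buf[r.recvx]
	r.buf[r.recvx] = 0 // clear the slot, as the runtime does
	r.recvx++
	if r.recvx == len(r.buf) {
		r.recvx = 0
	}
	r.qcount--
	return v, true
}

func main() {
	r := newRing(2)
	fmt.Println(r.push(1), r.push(2), r.push(3))
	v, _ := r.pop()
	fmt.Println(v, r.push(3))
}
```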

waitq: a doubly linked list of waiters

type waitq struct {

first *sudog // head of the list

last *sudog // tail of the list

}

makechan: the runtime function behind make(chan T, size)

func makechan(t *chantype, size int) *hchan {

elem := t.elem // element type of the channel being created

// compiler checks this but be safe.

if elem.size >= 1<<16 { // element size too large: throw

throw("makechan: invalid channel element type")

}

if hchanSize%maxAlign != 0 || elem.align > maxAlign { // hchanSize is not a multiple of maxAlign, or the element alignment exceeds maxAlign: throw

throw("makechan: bad alignment")

}

mem, overflow := math.MulUintptr(elem.size, uintptr(size)) // compute the buffer size in bytes and check for overflow

if overflow || mem > maxAlloc-hchanSize || size < 0 { // overflow, too much memory, or a negative size: panic

panic(plainError("makechan: size out of range"))

}

// Hchan does not contain pointers interesting for GC when elements stored in buf do not contain pointers.

// buf points into the same allocation, elemtype is persistent.

// SudoG's are referenced from their owning thread so they can't be collected.

// TODO(dvyukov,rlh): Rethink when collector can move allocated objects.

var c *hchan

switch {

case mem == 0: // the buffer needs no memory

// Queue or element size is zero.

c = (*hchan)(mallocgc(hchanSize, nil, true))

// Race detector uses this location for synchronization.

c.buf = c.raceaddr()

case elem.ptrdata == 0: // elements contain no pointers

// Elements do not contain pointers.

// Allocate hchan and buf in one call.

c = (*hchan)(mallocgc(hchanSize+mem, nil, true))

c.buf = add(unsafe.Pointer(c), hchanSize) // buf starts right after the hchan header

default: // elements contain pointers

// Elements contain pointers.

c = new(hchan)

c.buf = mallocgc(mem, elem, true)

}

c.elemsize = uint16(elem.size) // initialize the remaining fields

c.elemtype = elem

c.dataqsiz = uint(size)

lockInit(&c.lock, lockRankHchan) // initialize the lock

if debugChan { // print debug info in debug mode

print("makechan: chan=", c, "; elemsize=", elem.size, "; dataqsiz=", size, "\n")

}

return c // return the *hchan

}
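
The size check in makechan is visible from ordinary code: a negative size that is only known at run time makes `make` panic. A small sketch, assuming the size is not a compile-time constant (a constant negative size is rejected by the compiler instead); `makeWithSize` is an illustrative name.

```go
package main

import "fmt"

// makeWithSize (hypothetical helper) calls make with a run-time size and
// reports the resulting capacity, or that make panicked.
func makeWithSize(n int) (c int, panicked bool) {
	defer func() {
		if recover() != nil {
			c, panicked = 0, true
		}
	}()
	return cap(make(chan int, n)), false
}

func main() {
	fmt.Println(makeWithSize(3))  // a normal buffered channel
	fmt.Println(makeWithSize(0))  // unbuffered: no buffer memory is needed
	fmt.Println(makeWithSize(-1)) // rejected by the size check
}
```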

reflect_makechan and makechan64: wrappers around makechan

//go:linkname reflect_makechan reflect.makechan

func reflect_makechan(t *chantype, size int) *hchan {

return makechan(t, size)

}

func makechan64(t *chantype, size int64) *hchan {

if int64(int(size)) != size {

panic(plainError("makechan: size out of range"))

}

return makechan(t, int(size))

}

chanbuf: returns an unsafe pointer to the i'th slot of the channel buffer

// chanbuf(c, i) is pointer to the i'th slot in the buffer.

func chanbuf(c *hchan, i uint) unsafe.Pointer {

return add(c.buf, uintptr(i)*uintptr(c.elemsize))

}
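
chanbuf is plain pointer arithmetic: base address plus index times element size. The same idea can be sketched in user code with `unsafe.Add` (available since Go 1.17). The helper names `slot` and `third` are illustrative, and the array stands in for the channel buffer.

```go
package main

import (
	"fmt"
	"unsafe"
)

// slot (hypothetical helper) returns a pointer to the i'th element of a
// buffer of fixed-size elements, like chanbuf does for c.buf.
func slot(buf unsafe.Pointer, i uint, elemsize uintptr) unsafe.Pointer {
	return unsafe.Add(buf, uintptr(i)*elemsize)
}

// third reads element 2 of a small array through slot.
func third() int32 {
	arr := [4]int32{10, 20, 30, 40}
	p := slot(unsafe.Pointer(&arr[0]), 2, unsafe.Sizeof(arr[0]))
	return *(*int32)(p)
}

func main() {
	fmt.Println(third())
}
```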

full: reports whether the channel is full, that is, whether a send on it would block

// full reports whether a send on c would block (that is, the channel is full).

// It uses a single word-sized read of mutable state, so although

// the answer is instantaneously true, the correct answer may have changed

// by the time the calling function receives the return value.

func full(c *hchan) bool {

// c.dataqsiz is immutable (never written after the channel is created)

// so it is safe to read at any time during channel operation.

if c.dataqsiz == 0 { // unbuffered channel: full when no receiver is waiting

// Assumes that a pointer read is relaxed-atomic.

return c.recvq.first == nil

}

// Assumes that a uint read is relaxed-atomic.

return c.qcount == c.dataqsiz // buffered channel: full when the buffer is full

}

chansend1: a wrapper around chansend

// entry point for c <- x from compiled code

//

//go:nosplit

func chansend1(c *hchan, elem unsafe.Pointer) {

chansend(c, elem, true, getcallerpc())

}

chansend: implements chan <- x, sending a value to the channel

/*

* generic single channel send/recv

* If block is not nil,

* then the protocol will not

* sleep but return if it could

* not complete.

*

* sleep can wake up with g.param == nil

* when a channel involved in the sleep has

* been closed. it is easiest to loop and re-run

* the operation; we'll see that it's now closed.

*/

func chansend(c *hchan, ep unsafe.Pointer, block bool, callerpc uintptr) bool {

if c == nil { // nil channel

if !block { // non-blocking: return false, the send did not happen

return false

} // blocking: the sender parks forever, so the throw below is never reached

gopark(nil, nil, waitReasonChanSendNilChan, traceEvGoStop, 2)

throw("unreachable")

}

if debugChan { // print state in debug mode

print("chansend: chan=", c, "\n")

}

if raceenabled { // race detection

racereadpc(c.raceaddr(), callerpc, abi.FuncPCABIInternal(chansend))

}

// Fast path: check for failed non-blocking operation without acquiring the lock.

//

// After observing that the channel is not closed, we observe that the channel is

// not ready for sending. Each of these observations is a single word-sized read

// (first c.closed and second full()).

// Because a closed channel cannot transition from 'ready for sending' to

// 'not ready for sending', even if the channel is closed between the two observations,

// they imply a moment between the two when the channel was both not yet closed

// and not ready for sending. We behave as if we observed the channel at that moment,

// and report that the send cannot proceed.

//

// It is okay if the reads are reordered here: if we observe that the channel is not

// ready for sending and then observe that it is not closed, that implies that the

// channel wasn't closed during the first observation. However, nothing here

// guarantees forward progress. We rely on the side effects of lock release in

// chanrecv() and closechan() to update this thread's view of c.closed and full().

if !block && c.closed == 0 && full(c) { // non-blocking, not closed, and full: return false

return false

}

var t0 int64

if blockprofilerate > 0 {

t0 = cputicks()

}

lock(&c.lock) // acquire the lock

if c.closed != 0 { // channel is closed: unlock and panic

unlock(&c.lock)

panic(plainError("send on closed channel"))

}

if sg := c.recvq.dequeue(); sg != nil { // a receiver is waiting: bypass the buffer and hand the value to it directly

// Found a waiting receiver. We pass the value we want to send

// directly to the receiver, bypassing the channel buffer (if any).

send(c, sg, ep, func() { unlock(&c.lock) }, 3) // copy the value to the waiting receiver and wake it

return true // the send succeeded

}

if c.qcount < c.dataqsiz { // no receiver is waiting, and the buffer has free space

// Space is available in the channel buffer. Enqueue the element to send.

qp := chanbuf(c, c.sendx)

if raceenabled {

racenotify(c, c.sendx, nil)

}

typedmemmove(c.elemtype, qp, ep) // copy the value into slot sendx of buf

c.sendx++ // advance sendx

if c.sendx == c.dataqsiz { // wrap around to 0, forming the ring

c.sendx = 0

}

c.qcount++ // one more element in the buffer

unlock(&c.lock) // unlock and return true

return true

}

if !block { // non-blocking

unlock(&c.lock) // unlock and return false

return false

}

// The send has to block: park the current g until a receiver (or close) wakes it.

// Block on the channel. Some receiver will complete our operation for us.

gp := getg()

mysg := acquireSudog()

mysg.releasetime = 0

if t0 != 0 {

mysg.releasetime = -1

}

// No stack splits between assigning elem and enqueuing mysg

// on gp.waiting where copystack can find it.

mysg.elem = ep

mysg.waitlink = nil

mysg.g = gp

mysg.isSelect = false

mysg.c = c

gp.waiting = mysg

gp.param = nil

c.sendq.enqueue(mysg) // put the current g on the send queue

// Signal to anyone trying to shrink our stack that we're about

// to park on a channel. The window between when this G's status

// changes and when we set gp.activeStackChans is not safe for

// stack shrinking.

atomic.Store8(&gp.parkingOnChan, 1)

gopark(chanparkcommit, unsafe.Pointer(&c.lock), waitReasonChanSend, traceEvGoBlockSend, 2) // park the g

// Ensure the value being sent is kept alive until the

// receiver copies it out. The sudog has a pointer to the

// stack object, but sudogs aren't considered as roots of the

// stack tracer.

KeepAlive(ep) // keep ep alive until the receiver has copied it

// someone woke us up.

if mysg != gp.waiting {

throw("G waiting list is corrupted")

}

gp.waiting = nil

gp.activeStackChans = false

closed := !mysg.success

gp.param = nil

if mysg.releasetime > 0 {

blockevent(mysg.releasetime-t0, 2)

}

mysg.c = nil

releaseSudog(mysg)

if closed {

if c.closed == 0 {

throw("chansend: spurious wakeup")

}

panic(plainError("send on closed channel"))

}

return true

}
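
Both "send on closed channel" panics in chansend surface to user code as a recoverable run-time panic. The sketch below shows this from the outside; `sendRecover` is an illustrative helper, and the message it returns is the string passed to plainError in the listing above.

```go
package main

import "fmt"

// sendRecover (hypothetical helper) sends v on ch and returns "sent", or the
// panic message if the send panicked.
func sendRecover(ch chan int, v int) (msg string) {
	defer func() {
		if r := recover(); r != nil {
			msg = fmt.Sprint(r)
		}
	}()
	ch <- v
	return "sent"
}

func main() {
	open := make(chan int, 1)
	fmt.Println(sendRecover(open, 1))

	closed := make(chan int, 1)
	close(closed)
	fmt.Println(sendRecover(closed, 1))
}
```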

send: copies the value to a waiting receiver g and wakes it

// send processes a send operation on an empty channel c.

// The value ep sent by the sender is copied to the receiver sg.

// The receiver is then woken up to go on its merry way.

// Channel c must be empty and locked. send unlocks c with unlockf.

// sg must already be dequeued from c.

// ep must be non-nil and point to the heap or the caller's stack.

func send(c *hchan, sg *sudog, ep unsafe.Pointer, unlockf func(), skip int) {

if raceenabled { // race detection

if c.dataqsiz == 0 {

racesync(c, sg)

} else {

// Pretend we go through the buffer, even though

// we copy directly. Note that we need to increment

// the head/tail locations only when raceenabled.

racenotify(c, c.recvx, nil)

racenotify(c, c.recvx, sg)

c.recvx++

if c.recvx == c.dataqsiz {

c.recvx = 0

}

c.sendx = c.recvx // c.sendx = (c.sendx+1) % c.dataqsiz

}

}

if sg.elem != nil {

sendDirect(c.elemtype, sg, ep) // copy directly into the receiver's destination

sg.elem = nil

}

gp := sg.g

unlockf()

gp.param = unsafe.Pointer(sg)

sg.success = true

if sg.releasetime != 0 {

sg.releasetime = cputicks()

}

goready(gp, skip+1) // mark the receiver runnable

}

sendDirect: copies the value from src into the receiver's slot (sg.elem)

// Sends and receives on unbuffered or empty-buffered channels are the

// only operations where one running goroutine writes to the stack of

// another running goroutine. The GC assumes that stack writes only

// happen when the goroutine is running and are only done by that

// goroutine. Using a write barrier is sufficient to make up for

// violating that assumption, but the write barrier has to work.

// typedmemmove will call bulkBarrierPreWrite, but the target bytes

// are not in the heap, so that will not help. We arrange to call

// memmove and typeBitsBulkBarrier instead.

func sendDirect(t *_type, sg *sudog, src unsafe.Pointer) {

// src is on our stack, dst is a slot on another stack.

// Once we read sg.elem out of sg, it will no longer

// be updated if the destination's stack gets copied (shrunk).

// So make sure that no preemption points can happen between read & use.

dst := sg.elem

typeBitsBulkBarrier(t, uintptr(dst), uintptr(src), t.size)

// No need for cgo write barrier checks because dst is always

// Go memory.

memmove(dst, src, t.size)

}

recvDirect: copies the value from the sender's slot (sg.elem) into dst

func recvDirect(t *_type, sg *sudog, dst unsafe.Pointer) {

// dst is on our stack or the heap, src is on another stack.

// The channel is locked, so src will not move during this

// operation.

src := sg.elem

typeBitsBulkBarrier(t, uintptr(dst), uintptr(src), t.size)

memmove(dst, src, t.size)

}

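closechan: the runtime function behind close(ch). Closing wakes every goroutine waiting on the channel. Goroutines blocked on a send (and any later send) panic, while receivers get the zero value of the element type.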

func closechan(c *hchan) {

if c == nil { // closing a nil channel panics immediately

panic(plainError("close of nil channel"))

}

lock(&c.lock) // acquire the lock

if c.closed != 0 { // already closed: closing twice panics

unlock(&c.lock) // unlock

panic(plainError("close of closed channel")) // panic

}

if raceenabled { // race detection

callerpc := getcallerpc()

racewritepc(c.raceaddr(), callerpc, abi.FuncPCABIInternal(closechan))

racerelease(c.raceaddr())

}

c.closed = 1 // mark the channel as closed

var glist gList

// release all readers

for {

sg := c.recvq.dequeue() // dequeue from the receive queue

if sg == nil {

break

}

if sg.elem != nil { // zero the receiver's destination

typedmemclr(c.elemtype, sg.elem)

sg.elem = nil

}

if sg.releasetime != 0 {

sg.releasetime = cputicks()

}

gp := sg.g

gp.param = unsafe.Pointer(sg)

sg.success = false

if raceenabled {

raceacquireg(gp, c.raceaddr())

}

glist.push(gp) // collect the g in glist so it can be readied later

}

// release all writers (they will panic, because the channel is now closed)

for {

sg := c.sendq.dequeue()

if sg == nil {

break

}

sg.elem = nil

if sg.releasetime != 0 {

sg.releasetime = cputicks()

}

gp := sg.g

gp.param = unsafe.Pointer(sg)

sg.success = false

if raceenabled {

raceacquireg(gp, c.raceaddr())

}

glist.push(gp)

}

unlock(&c.lock)

// Ready all Gs now that we've dropped the channel lock.

for !glist.empty() {

gp := glist.pop()

gp.schedlink = 0

goready(gp, 3) // mark runnable so the scheduler can run it

}

}
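
The effects of closechan can be observed directly: every receiver sees the zero value with ok == false, and a second close panics. This is a sketch with invented helper names (`closeWakesAll`, `closeTwice`). Note that a receiver goroutine may start either before or after the close; both paths yield the same result.

```go
package main

import "fmt"

// closeWakesAll starts n receivers on an unbuffered channel, closes it, and
// counts how many of them observed (zero value, ok == false).
func closeWakesAll(n int) (zeros int) {
	ch := make(chan int)
	results := make(chan bool, n)
	for i := 0; i < n; i++ {
		go func() {
			v, ok := <-ch
			results <- (v == 0 && !ok)
		}()
	}
	close(ch)
	for i := 0; i < n; i++ {
		if <-results {
			zeros++
		}
	}
	return zeros
}

// closeTwice closes the same channel twice and returns the panic message.
func closeTwice() (msg string) {
	defer func() {
		if r := recover(); r != nil {
			msg = fmt.Sprint(r)
		}
	}()
	ch := make(chan int)
	close(ch)
	close(ch)
	return "no panic"
}

func main() {
	fmt.Println(closeWakesAll(5))
	fmt.Println(closeTwice())
}
```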

empty: reports whether the channel holds no values, that is, whether a receive on it would block

// empty reports whether a read from c would block (that is, the channel is

// empty). It uses a single atomic read of mutable state.

func empty(c *hchan) bool {

// c.dataqsiz is immutable.

if c.dataqsiz == 0 { // unbuffered channel: empty when no sender is waiting

return atomic.Loadp(unsafe.Pointer(&c.sendq.first)) == nil

}

return atomic.Loaduint(&c.qcount) == 0 // buffered channel: empty when the buffer holds no elements

}

chanrecv1: a wrapper around chanrecv for <-chan, reading a value from the channel

// entry points for <- c from compiled code

//

//go:nosplit

func chanrecv1(c *hchan, elem unsafe.Pointer) {

chanrecv(c, elem, true)

}

chanrecv2: a wrapper around chanrecv for the two-value form value, ok := <-chan

//go:nosplit

func chanrecv2(c *hchan, elem unsafe.Pointer) (received bool) {

_, received = chanrecv(c, elem, true)

return

}

chanrecv: the channel receive operation

// chanrecv receives on channel c and writes the received data to ep.

// ep may be nil, in which case received data is ignored.

// If block == false and no elements are available, returns (false, false).

// Otherwise, if c is closed, zeros *ep and returns (true, false).

// Otherwise, fills in *ep with an element and returns (true, true).

// A non-nil ep must point to the heap or the caller's stack.

func chanrecv(c *hchan, ep unsafe.Pointer, block bool) (selected, received bool) {

// raceenabled: don't need to check ep, as it is always on the stack

// or is new memory allocated by reflect.

if debugChan { // print info in debug mode

print("chanrecv: chan=", c, "\n")

}

if c == nil { // nil channel

if !block { // non-blocking: return immediately; otherwise the g parks forever and the throw below is never reached

return

}

gopark(nil, nil, waitReasonChanReceiveNilChan, traceEvGoStop, 2)

throw("unreachable")

}

// Fast path: check for failed non-blocking operation without acquiring the lock.

if !block && empty(c) { // non-blocking, and c is empty

// After observing that the channel is not ready for receiving, we observe whether the

// channel is closed.

//

// Reordering of these checks could lead to incorrect behavior when racing with a close.

// For example, if the channel was open and not empty, was closed, and then drained,

// reordered reads could incorrectly indicate "open and empty". To prevent reordering,

// we use atomic loads for both checks, and rely on emptying and closing to happen in

// separate critical sections under the same lock. This assumption fails when closing

// an unbuffered channel with a blocked send, but that is an error condition anyway.

if atomic.Load(&c.closed) == 0 { // not closed: return immediately

// Because a channel cannot be reopened, the later observation of the channel

// being not closed implies that it was also not closed at the moment of the

// first observation. We behave as if we observed the channel at that moment

// and report that the receive cannot proceed.

return

}

// The channel is irreversibly closed. Re-check whether the channel has any pending data

// to receive, which could have arrived between the empty and closed checks above.

// Sequential consistency is also required here, when racing with such a send.

if empty(c) { // still empty

// The channel is irreversibly closed and empty.

if raceenabled { // race detection

raceacquire(c.raceaddr())

}

if ep != nil { // zero *ep; the (true, false) returned below means selected, but no value received because the channel is closed

typedmemclr(c.elemtype, ep)

}

return true, false

}

}

var t0 int64

if blockprofilerate > 0 {

t0 = cputicks()

}

lock(&c.lock) // acquire the lock

if c.closed != 0 { // channel is closed

if c.qcount == 0 { // and the buffer is empty

if raceenabled {

raceacquire(c.raceaddr())

}

unlock(&c.lock) // unlock, then zero *ep

if ep != nil {

typedmemclr(c.elemtype, ep)

}

return true, false

}

// The channel has been closed, but the channel's buffer have data.

} else { // not closed

// Just found waiting sender with not closed.

if sg := c.sendq.dequeue(); sg != nil { // a sender is waiting: let recv complete the receive and wake it

// Found a waiting sender. If buffer is size 0, receive value

// directly from sender. Otherwise, receive from head of queue

// and add sender's value to the tail of the queue (both map to

// the same buffer slot because the queue is full).

recv(c, sg, ep, func() { unlock(&c.lock) }, 3)

return true, true

}

}

if c.qcount > 0 { // no sender is waiting, and the buffer holds values

// Receive directly from queue

qp := chanbuf(c, c.recvx) // slot at the head of the queue

if raceenabled {

racenotify(c, c.recvx, nil)

}

if ep != nil {

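typedmemmove(c.elemtype, ep, qp) // copy the value out to ep

}

typedmemclr(c.elemtype, qp) // zero the slot

c.recvx++ // advance recvx

if c.recvx == c.dataqsiz { // wrap around to 0, forming the ring

c.recvx = 0

}

c.qcount-- // one fewer element in the buffer

unlock(&c.lock) // unlock

return true, true // selected, and a real value was received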

}

if !block { // non-blocking: unlock and return

unlock(&c.lock)

return false, false

}

// no sender available: block on this channel.

gp := getg()

mysg := acquireSudog()

mysg.releasetime = 0

if t0 != 0 {

mysg.releasetime = -1

}

// No stack splits between assigning elem and enqueuing mysg

// on gp.waiting where copystack can find it.

mysg.elem = ep

mysg.waitlink = nil

gp.waiting = mysg

mysg.g = gp

mysg.isSelect = false

mysg.c = c

gp.param = nil

c.recvq.enqueue(mysg) // put the current g on the receive queue

// Signal to anyone trying to shrink our stack that we're about

// to park on a channel. The window between when this G's status

// changes and when we set gp.activeStackChans is not safe for

// stack shrinking.

atomic.Store8(&gp.parkingOnChan, 1)

gopark(chanparkcommit, unsafe.Pointer(&c.lock), waitReasonChanReceive, traceEvGoBlockRecv, 2) // park the g

// someone woke us up

if mysg != gp.waiting {

throw("G waiting list is corrupted")

}

gp.waiting = nil

gp.activeStackChans = false

if mysg.releasetime > 0 {

blockevent(mysg.releasetime-t0, 2)

}

success := mysg.success

gp.param = nil

mysg.c = nil

releaseSudog(mysg) // release the sudog; reaching this point means the g has been woken and is running again

return true, success

}
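
One consequence of the closed-channel branch in chanrecv is that values still sitting in the buffer remain receivable after close; only once qcount reaches 0 does the receive return the zero value with ok == false. A minimal sketch, with `drainClosed` as an illustrative name:

```go
package main

import "fmt"

// drainClosed buffers two values, closes the channel, and then receives
// until ok becomes false. It returns the values seen and the final ok.
func drainClosed() (vals []int, lastOK bool) {
	ch := make(chan int, 2)
	ch <- 1
	ch <- 2
	close(ch)
	for {
		v, ok := <-ch
		if !ok {
			return vals, ok // buffer drained: zero value, ok == false
		}
		vals = append(vals, v)
	}
}

func main() {
	fmt.Println(drainClosed())
}
```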

recv: handles a receive on channel c when a sender is waiting

// recv processes a receive operation on a full channel c.

// There are 2 parts:

// 1. The value sent by the sender sg is put into the channel

// and the sender is woken up to go on its merry way.

// 2. The value received by the receiver (the current G) is

// written to ep.

//

// For synchronous channels, both values are the same.

// For asynchronous channels, the receiver gets its data from

// the channel buffer and the sender's data is put in the

// channel buffer.

// Channel c must be full and locked. recv unlocks c with unlockf.

// sg must already be dequeued from c.

// A non-nil ep must point to the heap or the caller's stack.

func recv(c *hchan, sg *sudog, ep unsafe.Pointer, unlockf func(), skip int) {

if c.dataqsiz == 0 { // unbuffered

if raceenabled {

racesync(c, sg)

}

if ep != nil {

// copy data from sender

recvDirect(c.elemtype, sg, ep) // copy the value directly from the sending g to the receiver

}

} else { // buffered

// Queue is full. Take the item at the

// head of the queue. Make the sender enqueue

// its item at the tail of the queue. Since the

// queue is full, those are both the same slot.

qp := chanbuf(c, c.recvx) // slot at the head of the queue

if raceenabled {

racenotify(c, c.recvx, nil)

racenotify(c, c.recvx, sg)

}

// copy data from queue to receiver

if ep != nil {

typedmemmove(c.elemtype, ep, qp) // copy the head value to ep

}

// copy data from sender to queue

typedmemmove(c.elemtype, qp, sg.elem) // copy the sender's value into the slot that was just freed

c.recvx++ // one value taken and one added: advance the index

if c.recvx == c.dataqsiz {

c.recvx = 0

}

c.sendx = c.recvx // c.sendx = (c.sendx+1) % c.dataqsiz

}

sg.elem = nil

gp := sg.g

unlockf()

gp.param = unsafe.Pointer(sg)

sg.success = true

if sg.releasetime != 0 {

sg.releasetime = cputicks()

}

goready(gp, skip+1) // mark the sender runnable

}
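
The buffered branch of recv keeps FIFO order even when a sender is blocked on a full channel: the receiver takes the head of the buffer and the blocked sender's value goes to the tail. The sketch below checks the visible order only; `handoff` is an illustrative name, and whether the third send actually parks before the first receive depends on scheduling, which does not change the result.

```go
package main

import "fmt"

// handoff fills a channel of capacity 2, starts a third send that must wait
// for a free slot, and returns the order in which the values are received.
func handoff() []int {
	ch := make(chan int, 2)
	ch <- 1
	ch <- 2
	done := make(chan struct{})
	go func() {
		ch <- 3 // cannot complete until a slot is freed by a receive
		close(done)
	}()
	var got []int
	for i := 0; i < 3; i++ {
		got = append(got, <-ch)
	}
	<-done
	return got
}

func main() {
	fmt.Println(handoff())
}
```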

chanparkcommit: the callback passed to gopark when a g parks on a channel

func chanparkcommit(gp *g, chanLock unsafe.Pointer) bool {

// There are unlocked sudogs that point into gp's stack. Stack

// copying must lock the channels of those sudogs.

// Set activeStackChans here instead of before we try parking

// because we could self-deadlock in stack growth on the

// channel lock.

gp.activeStackChans = true

// Mark that it's safe for stack shrinking to occur now,

// because any thread acquiring this G's stack for shrinking

// is guaranteed to observe activeStackChans after this store.

atomic.Store8(&gp.parkingOnChan, 0)

// Make sure we unlock after setting activeStackChans and

// unsetting parkingOnChan. The moment we unlock chanLock

// we risk gp getting readied by a channel operation and

// so gp could continue running before everything before

// the unlock is visible (even to gp itself).

unlock((*mutex)(chanLock))

return true

}

selectnbsend and selectnbrecv: a select with a single case plus default, as lowered by the compiler

// compiler implements

//

// select {

// case c <- v:

// ... foo

// default:

// ... bar

// }

//

// as

//

// if selectnbsend(c, v) {

// ... foo

// } else {

// ... bar

// }

func selectnbsend(c *hchan, elem unsafe.Pointer) (selected bool) {

return chansend(c, elem, false, getcallerpc())

}

// compiler implements

//

// select {

// case v, ok = <-c:

// ... foo

// default:

// ... bar

// }

//

// as

//

// if selected, ok = selectnbrecv(&v, c); selected {

// ... foo

// } else {

// ... bar

// }

func selectnbrecv(elem unsafe.Pointer, c *hchan) (selected, received bool) {

return chanrecv(c, elem, false)

}
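
The (selected, received) pair returned by the non-blocking receive maps onto what a `select` with `default` observes. A small sketch covering the four situations handled in chanrecv; `tryRecv` is an illustrative helper, not a runtime function.

```go
package main

import "fmt"

// tryRecv (hypothetical helper) performs a non-blocking receive.
// selected reports whether the receive case ran; ok reports whether a real
// value was received (false for a closed, drained channel).
func tryRecv(ch chan int) (v int, ok, selected bool) {
	select {
	case v, ok = <-ch:
		return v, ok, true
	default:
		return 0, false, false
	}
}

func main() {
	var nilCh chan int
	fmt.Println(tryRecv(nilCh)) // nil channel: default case runs

	empty := make(chan int, 1)
	fmt.Println(tryRecv(empty)) // open and empty: default case runs

	full := make(chan int, 1)
	full <- 7
	fmt.Println(tryRecv(full)) // a value is available

	closed := make(chan int)
	close(closed)
	fmt.Println(tryRecv(closed)) // closed and empty: selected, but ok == false
}
```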

reflect-related functions

//go:linkname reflect_chansend reflect.chansend

func reflect_chansend(c *hchan, elem unsafe.Pointer, nb bool) (selected bool) {

return chansend(c, elem, !nb, getcallerpc())

}

//go:linkname reflect_chanrecv reflect.chanrecv

func reflect_chanrecv(c *hchan, nb bool, elem unsafe.Pointer) (selected bool, received bool) {

return chanrecv(c, elem, !nb)

}

//go:linkname reflect_chanlen reflect.chanlen

func reflect_chanlen(c *hchan) int {

if c == nil {

return 0

}

return int(c.qcount)

}

//go:linkname reflectlite_chanlen internal/reflectlite.chanlen

func reflectlite_chanlen(c *hchan) int {

if c == nil {

return 0

}

return int(c.qcount)

}

//go:linkname reflect_chancap reflect.chancap

func reflect_chancap(c *hchan) int {

if c == nil {

return 0

}

return int(c.dataqsiz)

}

//go:linkname reflect_chanclose reflect.chanclose

func reflect_chanclose(c *hchan) {

closechan(c)

}

enqueue: appends a sudog to the tail of the queue

func (q *waitq) enqueue(sgp *sudog) {

sgp.next = nil

x := q.last

if x == nil {

sgp.prev = nil

q.first = sgp

q.last = sgp

return

}

sgp.prev = x

x.next = sgp

q.last = sgp

}

dequeue: removes and returns the sudog at the head of the queue

func (q *waitq) dequeue() *sudog {

for {

sgp := q.first

if sgp == nil {

return nil

}

y := sgp.next

if y == nil {

q.first = nil

q.last = nil

} else {

y.prev = nil

q.first = y

sgp.next = nil // mark as removed (see dequeueSudoG)

}

// if a goroutine was put on this queue because of a

// select, there is a small window between the goroutine

// being woken up by a different case and it grabbing the

// channel locks. Once it has the lock

// it removes itself from the queue, so we won't see it after that.

// We use a flag in the G struct to tell us when someone

// else has won the race to signal this goroutine but the goroutine

// hasn't removed itself from the queue yet.

if sgp.isSelect && !atomic.Cas(&sgp.g.selectDone, 0, 1) {

continue

}

return sgp

}

}
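
Stripped of the select handling, enqueue and dequeue form an ordinary FIFO built on a doubly linked list. The following is a simplified model with invented types (`node`, `queue`) standing in for sudog and waitq; it leaves out the isSelect/selectDone check entirely.

```go
package main

import "fmt"

// node stands in for sudog; only the list links and an id are kept.
type node struct {
	id         int
	prev, next *node
}

// queue stands in for waitq.
type queue struct{ first, last *node }

// enqueue appends n at the tail.
func (q *queue) enqueue(n *node) {
	n.next = nil
	x := q.last
	if x == nil { // empty queue: n becomes both head and tail
		n.prev = nil
		q.first = n
		q.last = n
		return
	}
	n.prev = x
	x.next = n
	q.last = n
}

// dequeue removes and returns the head, or nil if the queue is empty.
func (q *queue) dequeue() *node {
	n := q.first
	if n == nil {
		return nil
	}
	y := n.next
	if y == nil { // n was the only element
		q.first = nil
		q.last = nil
	} else {
		y.prev = nil
		q.first = y
		n.next = nil
	}
	return n
}

// order enqueues the ids and returns them in dequeue order.
func order(ids ...int) []int {
	var q queue
	for _, id := range ids {
		q.enqueue(&node{id: id})
	}
	var out []int
	for n := q.dequeue(); n != nil; n = q.dequeue() {
		out = append(out, n.id)
	}
	return out
}

func main() {
	fmt.Println(order(3, 1, 2))
}
```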

race detector helpers

func (c *hchan) raceaddr() unsafe.Pointer {

// Treat read-like and write-like operations on the channel to

// happen at this address. Avoid using the address of qcount

// or dataqsiz, because the len() and cap() builtins read

// those addresses, and we don't want them racing with

// operations like close().

return unsafe.Pointer(&c.buf)

}

func racesync(c *hchan, sg *sudog) {

racerelease(chanbuf(c, 0))

raceacquireg(sg.g, chanbuf(c, 0))

racereleaseg(sg.g, chanbuf(c, 0))

raceacquire(chanbuf(c, 0))

}

// Notify the race detector of a send or receive involving buffer entry idx

// and a channel c or its communicating partner sg.

// This function handles the special case of c.elemsize==0.

func racenotify(c *hchan, idx uint, sg *sudog) {

// We could have passed the unsafe.Pointer corresponding to entry idx

// instead of idx itself. However, in a future version of this function,

// we can use idx to better handle the case of elemsize==0.

// A future improvement to the detector is to call TSan with c and idx:

// this way, Go will continue to not allocating buffer entries for channels

// of elemsize==0, yet the race detector can be made to handle multiple

// sync objects underneath the hood (one sync object per idx)

qp := chanbuf(c, idx)

// When elemsize==0, we don't allocate a full buffer for the channel.

// Instead of individual buffer entries, the race detector uses the

// c.buf as the only buffer entry. This simplification prevents us from

// following the memory model's happens-before rules (rules that are

// implemented in racereleaseacquire). Instead, we accumulate happens-before

// information in the synchronization object associated with c.buf.

if c.elemsize == 0 {

if sg == nil {

raceacquire(qp)

racerelease(qp)

} else {

raceacquireg(sg.g, qp)

racereleaseg(sg.g, qp)

}

} else {

if sg == nil {

racereleaseacquire(qp)

} else {

racereleaseacquireg(sg.g, qp)

}

}

}
