
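Software installed with Homebrew goes under /usr/local/Cellar/ by default (on Apple Silicon Macs the prefix is /opt/homebrew, so the path is /opt/homebrew/Cellar/).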

The Redis configuration file, redis.conf, is stored in /usr/local/etc.

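/usr/local/Cellar/redis/7.0.10 exists (the installed Redis version).

/usr/local/opt/redis/bin/redis-server exists (the server binary; /usr/local/opt/redis is Homebrew's symlink to the current version in the Cellar).

/usr/local/etc/redis.conf exists (the configuration file).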

Copy the config file, then change 6380 to 6381 in the copy so the new instance gets its own port:

cp /usr/local/etc/redis-6380.conf /usr/local/etc/redis-6381.conf
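Instead of editing each copy by hand, the per-instance settings can be rewritten with sed. This is a minimal sketch that assumes the master runs on port 6379 using /usr/local/etc/redis.conf and the other instances are its replicas (the notes don't show the config contents). make_replica_conf is a hypothetical helper. It gives the copy its own port and RDB file name, because all instances share the same data dir, and adds a replicaof line:

```shell
# Hypothetical helper: derive a replica config from a base redis.conf.
# Assumption: the master listens on 127.0.0.1:<master_port>.
make_replica_conf() (
  src=$1 dst=$2 port=$3 master_port=$4
  # Give the copy its own port and RDB file, and drop any existing replicaof line.
  sed -e "s/^port .*/port $port/" \
      -e "s/^dbfilename .*/dbfilename dump-$port.rdb/" \
      -e "/^replicaof /d" \
      "$src" > "$dst"
  # Point the new instance at the master.
  printf 'replicaof 127.0.0.1 %s\n' "$master_port" >> "$dst"
)
```

For example: make_replica_conf /usr/local/etc/redis.conf /usr/local/etc/redis-6381.conf 6381 6379. If pidfile or logfile are set, they also need per-instance values.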

Start Redis and the Sentinels. First start the three Redis servers:

redis-server /usr/local/etc/redis.conf

redis-server /usr/local/etc/redis-6380.conf

redis-server /usr/local/etc/redis-6381.conf

Then start the three Sentinels:

redis-sentinel /usr/local/etc/redis-sentinel-26379.conf

redis-sentinel /usr/local/etc/redis-sentinel-26380.conf

redis-sentinel /usr/local/etc/redis-sentinel-26381.conf
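The notes don't show the Sentinel config files. Below is a minimal sketch of one. It assumes the master is 127.0.0.1:6379, the master name is mymaster, and the quorum is 2. All three values, and the helper name make_sentinel_conf, are hypothetical. Sentinel rewrites its config file at runtime, so the file must be writable:

```shell
# Hypothetical helper: write <dir>/redis-sentinel-<port>.conf.
# Assumptions: master at 127.0.0.1:6379, name "mymaster", quorum 2.
make_sentinel_conf() (
  dir=$1 port=$2
  cat > "$dir/redis-sentinel-$port.conf" <<EOF
port $port
daemonize yes
pidfile "$dir/redis-sentinel-$port.pid"
logfile "$dir/redis-sentinel-$port.log"
sentinel monitor mymaster 127.0.0.1 6379 2
sentinel down-after-milliseconds mymaster 30000
EOF
)
```

For example: for p in 26379 26380 26381; do make_sentinel_conf /usr/local/etc "$p"; done.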

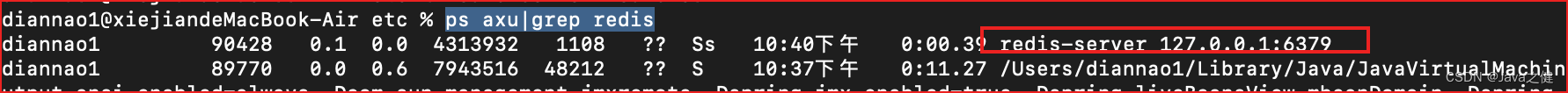

Check whether Redis started with ps aux | grep redis. A successful start looks like the screenshot.

To stop an instance, kill it by PID (90428 here is a PID taken from the ps output): kill -9 90428
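kill -9 sends SIGKILL. Redis cannot catch that signal, so it exits without a final save. A plain kill PID (SIGTERM) or redis-cli -p <port> shutdown lets it shut down cleanly. To find the PIDs, a small filter over ps aux output can help. redis_processes is a hypothetical helper. It assumes the usual ps aux layout, where COMMAND is field 11 and the listen address is the next field:

```shell
# Hypothetical helper: read `ps aux` output on stdin and print
# "PID ADDRESS" for every redis-server / redis-sentinel process.
# The grep line itself is skipped because its command is "grep".
redis_processes() {
  awk '$11 ~ /redis-(server|sentinel)$/ { print $2, $12 }'
}
```

For example: ps aux | redis_processes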

Connect to a Redis server with redis-cli:

redis-cli -h 127.0.0.1 -p 6381

This opens an interactive prompt where you can type commands. info shows server information.
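INFO replies are key:value lines ending in \r\n, grouped under # Section headers. The sketch below pulls out a single value, such as the role of the 6381 instance. info_field is a hypothetical helper:

```shell
# Hypothetical helper: read INFO output on stdin, print the value of one field.
# Only the first "key:" is stripped, so values containing ':' survive intact.
info_field() {
  tr -d '\r' | awk -F: -v key="$1" '$1 == key { sub(/^[^:]*:/, ""); print; exit }'
}
```

For example, redis-cli -h 127.0.0.1 -p 6381 info replication | info_field role prints master or slave.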

The data directory (the dir setting) is /usr/local/var/db/redis/. The RDB and AOF files are stored there.

cd /usr/local/var/db/redis/

ls -l shows when each RDB file was last written.
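When several instances share this directory, sorting by modification time shows which RDB file was written most recently. latest_rdb is a hypothetical helper. Separately, redis-cli lastsave returns the UNIX time of an instance's last successful save:

```shell
# Hypothetical helper: print the most recently modified *.rdb file in a directory.
# Prints nothing if the directory has no RDB files.
latest_rdb() (
  cd "$1" || exit 1
  ls -t -- *.rdb 2>/dev/null | head -n 1
)
```

For example: latest_rdb /usr/local/var/db/redis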

Redis cluster setup

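Create a directory for each additional node (8002 to 8006), copy node 8001's config into it, and change 8001 to the node's own port in each copy:

sudo mkdir -p /usr/local/redis-cluster/8002

sudo cp /usr/local/redis-cluster/8001/redis.conf /usr/local/redis-cluster/8002/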
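The notes don't list the contents of 8001/redis.conf. In the minimal sketch below, the password zhuge comes from the -a zhuge used later, and every other directive is an assumption. write_node_conf writes one node's config. clone_node_confs copies node 8001's config to 8002 through 8006 and replaces 8001 with each port, which mirrors copying the file and editing the port by hand. Both helper names are hypothetical. masterauth lets replicas authenticate to a master that has requirepass set:

```shell
# Hypothetical helper: write <root>/<port>/redis.conf for one cluster node.
# Assumed directives; only the password "zhuge" comes from the notes.
write_node_conf() (
  root=$1 port=$2
  mkdir -p "$root/$port"
  cat > "$root/$port/redis.conf" <<EOF
port $port
daemonize yes
dir $root/$port/
pidfile $root/$port/redis_$port.pid
cluster-enabled yes
cluster-config-file nodes-$port.conf
cluster-node-timeout 10000
appendonly yes
requirepass zhuge
masterauth zhuge
EOF
)

# Hypothetical helper: clone node 8001's config to 8002..8006,
# replacing every occurrence of 8001 with the target port.
clone_node_confs() (
  root=$1
  for port in 8002 8003 8004 8005 8006; do
    mkdir -p "$root/$port"
    sed "s/8001/$port/g" "$root/8001/redis.conf" > "$root/$port/redis.conf"
  done
)
```

For example, as a user that can write to /usr/local: write_node_conf /usr/local/redis-cluster 8001, then clone_node_confs /usr/local/redis-cluster.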

Once everything is configured, start all nodes:

sudo redis-server /usr/local/redis-cluster/8001/redis.conf

sudo redis-server /usr/local/redis-cluster/8002/redis.conf

redis-server /usr/local/redis-cluster/8003/redis.conf

redis-server /usr/local/redis-cluster/8004/redis.conf

redis-server /usr/local/redis-cluster/8005/redis.conf

redis-server /usr/local/redis-cluster/8006/redis.conf
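The six start commands differ only in the port, so a loop can issue them. In this sketch, start_cluster_nodes is a hypothetical helper. Setting RUN=sudo prefixes each command with sudo, and RUN=echo prints the commands instead of running them:

```shell
# Hypothetical helper: start redis-server for ports 8001..8006.
# RUN is an optional command prefix (e.g. sudo, or echo for a dry run).
start_cluster_nodes() (
  root=${1:-/usr/local/redis-cluster}
  for port in 8001 8002 8003 8004 8005 8006; do
    ${RUN:-} redis-server "$root/$port/redis.conf"
  done
)
```

For example: RUN=sudo start_cluster_nodes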

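Create the cluster. -a zhuge supplies the password, and --cluster-replicas 1 gives each master one replica, so the six nodes become three masters and three replicas:

sudo redis-cli -a zhuge --cluster create --cluster-replicas 1 127.0.0.1:8001 127.0.0.1:8002 127.0.0.1:8003 127.0.0.1:8004 127.0.0.1:8005 127.0.0.1:8006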

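In this run, 8004 became a replica of 8003, 8005 a replica of 8001, and 8006 a replica of 8002. redis-cli chooses the pairing, so check the actual roles with cluster nodes.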
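When redis-cli proposes the layout, it divides the 16384 hash slots evenly across the masters. The sketch below computes that even split with integer shell arithmetic. slot_ranges is a hypothetical helper, and its rounding is written to reproduce the usual ranges rather than copied from redis-cli's source:

```shell
# Hypothetical helper: print the slot range "first-last" for each of N masters.
# last = round((i+1) * 16384 / N - 1), computed as floor(x + 0.5) in integers;
# the final master always ends at slot 16383.
slot_ranges() (
  masters=$1
  first=0
  i=0
  while [ "$i" -lt "$masters" ]; do
    if [ "$i" -eq $((masters - 1)) ]; then
      last=16383
    else
      last=$(( ((i + 1) * 32768 - masters) / (2 * masters) ))
    fi
    echo "$first-$last"
    first=$((last + 1))
    i=$((i + 1))
  done
)
```

For three masters, slot_ranges 3 prints 0-5460, 5461-10922 and 10923-16383.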

The redis-cli binary is in /usr/local/Cellar/redis/7.0.10/bin/.

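Connect to the cluster. -c enables cluster mode, so redis-cli follows MOVED/ASK redirects to the node that owns a key:

redis-cli -a zhuge -h 127.0.0.1 -c -p 8001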

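Java installation path (JAVA_HOME). Use a separate export for each variable, because within a single export command $JAVA_HOME in the later assignments still expands to its old value. Java reads CLASSPATH, not CLASS_PATH:

export JAVA_HOME="/Library/Java/JavaVirtualMachines/jdk1.8.0_261.jdk/Contents/Home"

export CLASSPATH="$JAVA_HOME/lib"

export PATH="$JAVA_HOME/bin:$PATH"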

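ZooKeeper

Start it (run these from the ZooKeeper bin directory, /usr/local/zookeeper/zookeeper-3.5.1-alpha/bin):

sudo ./zkServer.sh start /usr/local/zookeeper/zookeeper-3.5.1-alpha/conf/zoo.cfg

Check the status:

sudo ./zkServer.sh status

Connect a client:

./zkCli.sh -server 127.0.0.1:2181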

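ZooKeeper cluster deployment

zoo1.cfg:

clientPort=2181

dataDir=/usr/local/zookeeper/zookeeper-3.5.1-alpha/zkCluster/zk1/data

dataLogDir=/usr/local/zookeeper/zookeeper-3.5.1-alpha/zkCluster/zk1/log

server.1=127.0.0.1:2887:3887

server.2=127.0.0.1:2888:3888

server.3=127.0.0.1:2889:3889

Each server line has the form server.N=host:peerPort:electionPort, and every node lists all three servers. Each node's dataDir also needs a myid file containing that node's own N.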

zoo2.cfg:

clientPort=2182

dataDir=/usr/local/zookeeper/zookeeper-3.5.1-alpha/zkCluster/zk2/data

dataLogDir=/usr/local/zookeeper/zookeeper-3.5.1-alpha/zkCluster/zk2/log

server.1=127.0.0.1:2887:3887

server.2=127.0.0.1:2888:3888

server.3=127.0.0.1:2889:3889

zoo3.cfg:

clientPort=2183

dataDir=/usr/local/zookeeper/zookeeper-3.5.1-alpha/zkCluster/zk3/data

dataLogDir=/usr/local/zookeeper/zookeeper-3.5.1-alpha/zkCluster/zk3/log

server.1=127.0.0.1:2887:3887

server.2=127.0.0.1:2888:3888

server.3=127.0.0.1:2889:3889
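The three node configs differ only in the node id, so they can be generated together with each node's myid file. This is a minimal sketch. tickTime, initLimit and syncLimit are assumptions taken from the values in ZooKeeper's sample config, and write_zk_node is a hypothetical helper:

```shell
# Hypothetical helper: create <root>/zk<id>/zoo<id>.cfg plus data/myid and log/.
# tickTime/initLimit/syncLimit are assumed values; ports follow the notes.
write_zk_node() (
  root=$1 id=$2
  node="$root/zk$id"
  mkdir -p "$node/data" "$node/log"
  # ZooKeeper reads the node's own server id from dataDir/myid.
  echo "$id" > "$node/data/myid"
  cat > "$node/zoo$id.cfg" <<EOF
tickTime=2000
initLimit=10
syncLimit=5
clientPort=$((2180 + id))
dataDir=$node/data
dataLogDir=$node/log
server.1=127.0.0.1:2887:3887
server.2=127.0.0.1:2888:3888
server.3=127.0.0.1:2889:3889
EOF
)
```

For example: for id in 1 2 3; do write_zk_node /usr/local/zookeeper/zookeeper-3.5.1-alpha/zkCluster "$id"; done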

Start each node:

sudo ./zkServer.sh start /usr/local/zookeeper/zookeeper-3.5.1-alpha/zkCluster/zk1/zoo1.cfg

sudo ./zkServer.sh start /usr/local/zookeeper/zookeeper-3.5.1-alpha/zkCluster/zk2/zoo2.cfg

sudo ./zkServer.sh start /usr/local/zookeeper/zookeeper-3.5.1-alpha/zkCluster/zk3/zoo3.cfg

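Connect a client to the first node:

./zkCli.sh -server 127.0.0.1:2181

Then run get /zookeeper/config to view the ensemble configuration. Check each node's status (the output shows Mode: leader or follower) and connect to the other nodes: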

./zkServer.sh status /usr/local/zookeeper/zookeeper-3.5.1-alpha/zkCluster/zk1/zoo1.cfg

./zkCli.sh -server 127.0.0.1:2182

./zkServer.sh status /usr/local/zookeeper/zookeeper-3.5.1-alpha/zkCluster/zk2/zoo2.cfg

./zkCli.sh -server 127.0.0.1:2183

./zkServer.sh status /usr/local/zookeeper/zookeeper-3.5.1-alpha/zkCluster/zk3/zoo3.cfg
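zkServer.sh status prints a Mode: line (leader, follower, or standalone for a single server). The sketch below checks all three nodes in one pass. zk_mode and zk_cluster_status are hypothetical helpers, and the default paths are the ones used in these notes:

```shell
# Hypothetical helper: extract the role from zkServer.sh status output.
zk_mode() {
  sed -n 's/^Mode: *//p' | head -n 1
}

# Hypothetical helper: report the role of each of the three nodes.
# ZK_BIN and ZK_CLUSTER default to the paths used in these notes.
zk_cluster_status() (
  zk_bin=${ZK_BIN:-/usr/local/zookeeper/zookeeper-3.5.1-alpha/bin}
  cluster=${ZK_CLUSTER:-/usr/local/zookeeper/zookeeper-3.5.1-alpha/zkCluster}
  for id in 1 2 3; do
    mode=$("$zk_bin/zkServer.sh" status "$cluster/zk$id/zoo$id.cfg" 2>&1 | zk_mode)
    echo "zk$id: ${mode:-unknown}"
  done
)
```

In a healthy three-node ensemble, one node reports leader and the other two report follower.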

Where a startup script checks JAVA_HOME like this:

[ ! -e "$JAVA_HOME/bin/java" ] && JAVA_HOME=$HOME/jdk/java

[ ! -e "$JAVA_HOME/bin/java" ] && JAVA_HOME=/usr/java

[ ! -e "$JAVA_HOME/bin/java" ] && error_exit "Please set the JAVA_HOME variable in your environment, We need java(x64)!"

change it as follows. In the first line, replace $HOME/jdk/java with your configured JAVA_HOME path, and comment out the last two lines:

[ ! -e "$JAVA_HOME/bin/java" ] && JAVA_HOME=/Library/Java/JavaVirtualMachines/zulu-8.jdk/Contents/Home

#[ ! -e "$JAVA_HOME/bin/java" ] && JAVA_HOME=/usr/java

#[ ! -e "$JAVA_HOME/bin/java" ] && error_exit "Please set the JAVA_HOME variable in your environment, We need java(x64)!"
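The check falls back through a list of candidate JDK paths. The same idea can be written as a reusable function, sketched here under the assumption that a JDK is valid when its bin/java is executable. resolve_java_home is a hypothetical name. On macOS, /usr/libexec/java_home -v 1.8 prints the installed JDK 8 home and can supply one of the candidates:

```shell
# Hypothetical helper: print the first candidate directory that contains an
# executable bin/java; fail with a message if none does.
resolve_java_home() (
  for candidate in "$@"; do
    if [ -x "$candidate/bin/java" ]; then
      echo "$candidate"
      exit 0
    fi
  done
  echo "Please set the JAVA_HOME variable in your environment" >&2
  exit 1
)
```

For example: JAVA_HOME=$(resolve_java_home "$JAVA_HOME" /Library/Java/JavaVirtualMachines/zulu-8.jdk/Contents/Home) || exit 1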