nano GPT

跟着youtube上AndrejKarpathy大佬复现一个简单GPT

1.数据集准备

很小的莎士比亚数据集

wget https://raw.githubusercontent.com/karpathy/char-rnn/master/data/tinyshakespeare/input.txt

1.1简单的tokenize

数据和等下的模型较简单,所以这里用了个很简单的直接按照字母去分割的tokenize。

复杂些的可以用**tiktoken**: openai在gpt2上用的。

with open('input.txt', 'r', encoding='utf-8') as f:

text = f.read()

print(len(text))

#>>> 1115394

chars = sorted(list(set(text)))

vocab_size = len(chars)

stoi = {ch: i for i, ch in enumerate(chars)}

itos = {i: ch for i, ch in enumerate(chars)}

string = 'hii there'

decode(encode(string)) == string

#>>> True

1.2切分训练集

import torch

data = torch.tensor(encode(text), dtype=torch.long)

print(data.shape, data.dtype)

#>>> torch.Size([1115394]) torch.int64

n = int(0.9 * len(data))

train_data = data[:n]

val_data = data[n:]

1.3获取小批量

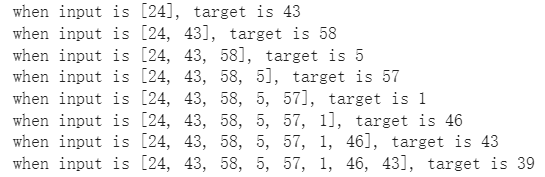

注意,target在切分的时候错开了一个位置。原因是如果原串是[1,2,3,4,5]。当我们的input是[1,2,3]的时候应该生成一个[1,2,3,4]。

实现如下

torch.manual_seed(1337)

batch_size, block_size = 4, 8

def get_batch(split):

data = train_data if split == 'train' else val_data

ix = torch.randint(len(data) - block_size, (batch_size, ))

x = torch.stack([data[i: i+block_size] for i in ix])

y = torch.stack([data[i+1:i+1+block_size] for i in ix])

return x, y

xb, yb = get_batch('train')

print(xb.shape)

print(xb)

print('target:')

print(yb.shape)

print(yb)

for b in range(batch_size):

for t in range(block_size):

# print(xb[b: :t+1])

context = xb[b, :t+1].tolist()

target = yb[b, t]

print(f'when input is {context}, target is {target}')

2.模型定义

2.1模型代码

这里具体解释一下为什么inputs, target送入模型前要做reshape。因为F.cross_entropy规定了 input 的shape必须是 [N, C] 其中N是样本数C是类别数这里也就是我们的vocab_size。与之对应,我们的 target 的shape就应该是[N]。input 送入模型后我们会得到input中每一个位置的下一个位置的预测,如果原文本是 [1,2,3],input : [1,2] ,target : [2,3]。那么送入 input 后我们可能会得到[2, 2.7]然后用这个和target计算损失。

import torch

import torch.nn as nn

from torch.nn import functional as F

torch.manual_seed(1337)

class BigramLanguageModel(nn.Module):

def __init__(self, vocab_size):

super().__init__()

self.token_embedding = nn.Embedding(vocab_size, vocab_size)

def forward(self, inputs, target=None):

# inputs: [B,L], target: [B,1]

logits = self.token_embedding(inputs) #[B,L,C]

if target is None:

loss = None

else:

B, T, C = logits.shape

logits = logits.reshape(B*T, C)

target = target.reshape(-1)

loss = F.cross_entropy(logits, target)

return logits, loss

def generate(self, idx, max_new_tokens):

# idx is [B, T] array of indices in the current context

for _ in range(max_new_tokens):

logits, loss = self(idx)

# 关注最后一个位置

logits = logits[:, -1, :] # [B, C]

probs = F.softmax(logits, dim=-1) # [B, C]

idx_next = torch.multinomial(probs, num_samples=1) # [B, 1]

idx = torch.cat([idx, idx_next], dim=1)

return idx

m = BigramLanguageModel(vocab_size)

logits, loss = m(xb, yb)

print(logits.shape)

print(loss)

idx = torch.zeros((1, 1), dtype=torch.long)

print(decode(m.generate(idx, max_new_tokens=100)[0].tolist()))

2.2优化器及训练

optimizer = torch.optim.AdamW(m.parameters(), lr=1e-3)

batch_size = 32

for steps in range(1000):

xb, yb = get_batch('train')

logits, loss = m(xb, yb)

optimizer.zero_grad(set_to_none=True)

loss.backward()

optimizer.step()

print(loss.item())

2.3 生成

print(decode(m.generate(idx, max_new_tokens=300)[0].tolist()))

3.加入注意力机制

3.1单头注意力

class Head(nn.Module):

def __init__(self, head_size):

super().__init__()

self.key = nn.Linear(n_embd, head_size)

self.query = nn.Linear(n_embd, head_size)

self.value = nn.Linear(n_embd, head_size)

self.register_buffer('tril', torch.tril(torch.ones(block_size, block_size)))

self.dropout = nn.Dropout(dropout)

def forward(self, x):

B, T, C = x.shape

k = self.key(x)

q = self.query(x)

v = self.value(x)

wei = q @ k.transpose(-2, -1) * C ** -0.5

wei = wei.masked_fill(self.tril[:T, :T] == 0, float('-inf'))

wei = F.softmax(wei, dim=-1)

wei = self.dropout(wei)

out = wei @ v

return out

3.2多头注意力

class MultiHeadAttention(nn.Module):

def __init__(self, num_heads, head_size):

super().__init__()

self.heads = nn.ModuleList(

[Head(head_size) for _ in range(num_heads)]

)

self.proj = nn.Linear(n_embd, n_embd)

self.dropout = nn.Dropout(dropout)

def forward(self, x):

out = torch.cat([head(x) for head in self.heads], dim=-1)

out = self.dropout(self.proj(out))

return out

3.3前馈神经网络

class FeedForward(nn.Module):

def __init__(self, n_embed):

super().__init__()

self.net = nn.Sequential(

nn.Linear(n_embed, 4 * n_embed),

nn.ReLU(),

nn.Linear(4 * n_embed, n_embed)

)

self.dropout = nn.Dropout(dropout)

def forward(self, x):

return self.dropout(self.net(x))

3.4transformerBlock

这里实现的是一个简易版的,如果n_embd是32, n_head=4, 那么每个单独的头只会产生 [B, T, 8] 这个尺寸的信息,然后将4个头的信息在dim=-1这个维度拼接起来即可。

class Block(nn.Module):

def __init__(self, n_embd, n_head):

super().__init__()

head_size = n_embd // n_head

self.sa = MultiHeadAttention(n_head, head_size)

self.ffwd = FeedForward(n_embd)

self.ln1 = nn.LayerNorm(n_embd)

self.ln2 = nn.LayerNorm(n_embd)

def forward(self, x):

x = x + self.sa(self.ln1(x))

x = x + self.ffwd(self.ln2(x))

return x

4.最终训练

加入注意力机制和扩大模型后我们得到了这样的模型以及超参数

参数

batch_size = 64

block_size = 256

max_iters = 5000

eval_interval = 500

lr = 3e-4

device = 'cuda' if torch.cuda.is_available() else 'cpu'

eval_iters = 200

n_embd = 384

n_head = 6

n_layer = 6

dropout = 0.2

模型

class BigramLanguageModel(nn.Module):

def __init__(self, n_embd):

super().__init__()

self.token_embedding = nn.Embedding(vocab_size, n_embd)

self.lm_head = nn.Linear(n_embd, vocab_size)

self.position_embedding_table = nn.Embedding(block_size, n_embd)

self.blocks = nn.Sequential(

Block(n_embd, n_head=n_head),

Block(n_embd, n_head=n_head),

Block(n_embd, n_head=n_head),

Block(n_embd, n_head=n_head),

Block(n_embd, n_head=n_head),

Block(n_embd, n_head=n_head),

nn.LayerNorm(n_embd)

)

self.ffwd = FeedForward(n_embd)

def forward(self, inputs, target=None):

# inputs: [B,L], target: [B,L]

B, T = inputs.shape

tok_emb = self.token_embedding(inputs) # [B,T,C]

pos_emb = self.position_embedding_table(torch.arange(T, device=device)) # [T, C]

x = tok_emb + pos_emb

x = self.blocks(x)

x = self.ffwd(x)

logits = self.lm_head(x) # [B, T, C] C = vocab_size

if target is None:

loss = None

else:

B, T, C = logits.shape

logits = logits.reshape(B * T, C)

target = target.reshape(-1)

loss = F.cross_entropy(logits, target)

return logits, loss

def generate(self, idx, max_new_tokens):

# idx is [B, T] array of indices in the current context

for _ in range(max_new_tokens):

idx_cond = idx[:, -block_size:]

logits, loss = self(idx_cond)

# 关注最后一个位置

logits = logits[:, -1, :] # [B, C]

probs = F.softmax(logits, dim=-1) # [B, C]

idx_next = torch.multinomial(probs, num_samples=1) # [B, 1]

idx = torch.cat([idx, idx_next], dim=1)

return idx

在A800上训练可以得到如下结果

可以看到loss已经降的不错了,只不过说出来的话还不太合理hhh

step 0: train loss 4.1744, val loss 4.1743

step 500: train loss 1.9218, val loss 2.0212

step 1000: train loss 1.5678, val loss 1.7493

step 1500: train loss 1.4277, val loss 1.6303

step 2000: train loss 1.3384, val loss 1.5647

step 2500: train loss 1.2810, val loss 1.5380

step 3000: train loss 1.2325, val loss 1.5121

step 3500: train loss 1.1924, val loss 1.5010

step 4000: train loss 1.1506, val loss 1.4956

step 4500: train loss 1.1204, val loss 1.5051

Havingly made me been's wife.

Thy father's name be heard he will not say

Your undoubter'd prift, that's that sympirate.

KING RICHARD III:

Those palasion most pallars, these measures

Shame laceling may be invenged by my breast.

DUKE VINCENTIO:

Then, I think it, is approach'd lip.

PRINCENTIUS:

The