1: Prepare an Ubuntu Linux x86_64 system

a. Install Anaconda

b. Create a virtual environment with python=3.8

2: Download rknn-toolkit2

Link

Extract the downloaded archive with unzip

3: Convert the .pt model to an ONNX model
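This step is not spelled out here, so the following is only a sketch under assumptions: it assumes the model was trained with the ultralytics/yolov5 repository and that its export.py script is run from the repository root. The file name best.pt and the opset value 12 are hypothetical placeholders, not values taken from this post. The helper only builds the command line; it does not run the export.

```python
import shlex


def yolov5_export_command(weights, imgsz=640, opset=12):
    """Build the argv list for YOLOv5's export.py (assumed to be in the current directory)."""
    return [
        "python", "export.py",
        "--weights", str(weights),   # trained .pt checkpoint (placeholder name)
        "--imgsz", str(imgsz),       # square input size used for the export
        "--include", "onnx",         # export to ONNX only
        "--opset", str(opset),       # ONNX opset; 12 is an assumption, adjust as needed
    ]


if __name__ == "__main__":
    # Print the command so it can be reviewed before running it by hand.
    print(shlex.join(yolov5_export_command("best.pt")))
```

Check the exported model's input size and output layout against what the later RKNN steps expect before moving on.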

4: Convert the ONNX model to an RKNN model

a: cd into the rknn-toolkit2-master/rknn-toolkit2/packages directory

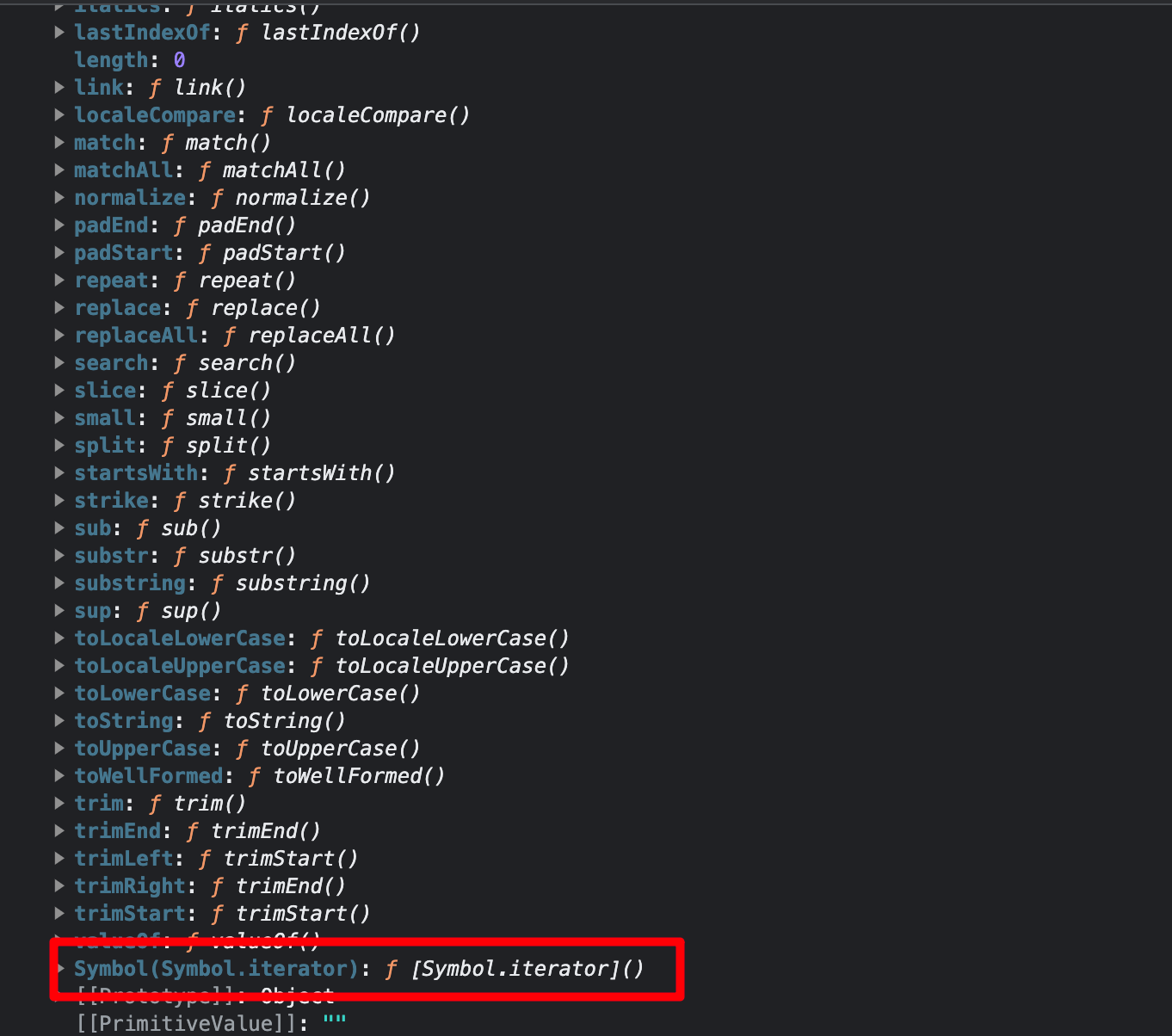

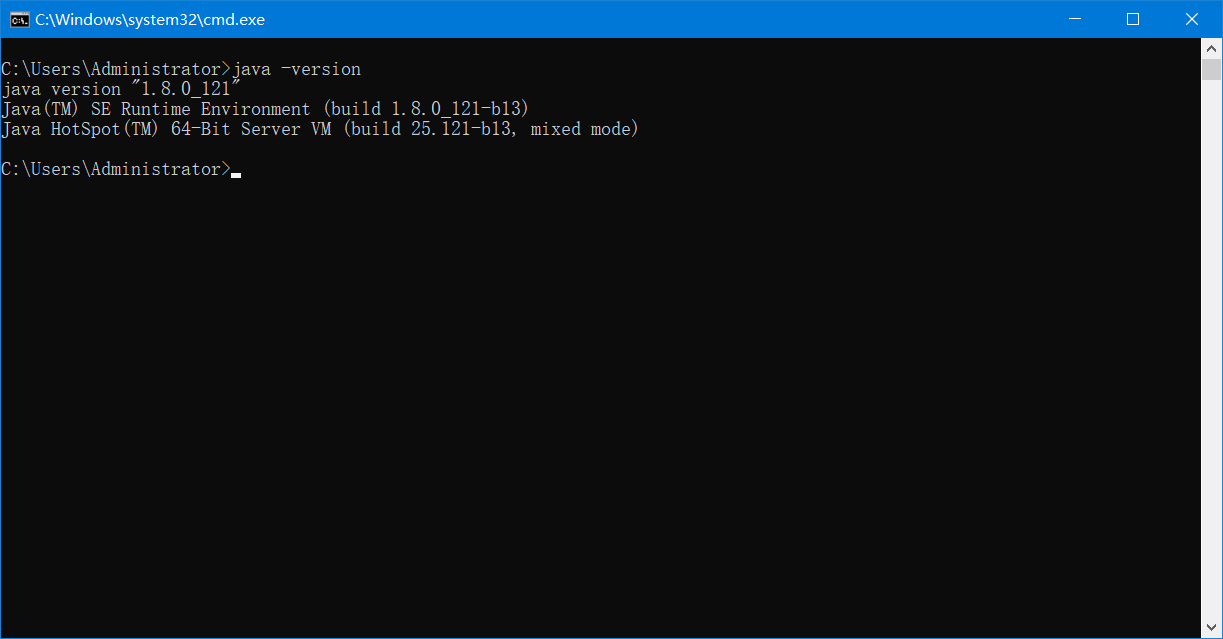

cp denotes the Python version (cp38 means CPython 3.8). If you don't know your version, run python --version
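As a small illustration of how the cp tag relates to the interpreter version, here is a minimal sketch. The helper name cp_tag is made up for this example; it simply joins the major and minor version numbers the way wheel file names do.

```python
import sys


def cp_tag(version_info=None):
    """Return the CPython wheel tag (e.g. 'cp38') for a (major, minor, ...) tuple."""
    if version_info is None:
        # Default to the interpreter that is running this script.
        version_info = sys.version_info
    major, minor = version_info[0], version_info[1]
    return f"cp{major}{minor}"


if __name__ == "__main__":
    # Pick the requirements file and wheel whose name contains this tag.
    print(cp_tag())
```

Run it inside the activated virtual environment so the tag reflects the interpreter that pip will install into.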

b: Run pip install -r requirements_cp38-1.6.0.txt to install the dependencies

c: pip install rknn_toolkit2-1.4.0_22dcfef4-cp38-cp38-linux_x86_64.whl

d: Run from rknn.api import RKNN in Python; if no error is raised, the installation succeeded
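The import check can also be wrapped in a short script, which is handy when setting up several machines. This is a sketch only; the function name rknn_toolkit_available is invented for the example, and it checks nothing beyond whether the module imports and exposes an RKNN attribute.

```python
import importlib


def rknn_toolkit_available(module_name="rknn.api"):
    """Return True if module_name imports without error and exposes an RKNN attribute."""
    try:
        module = importlib.import_module(module_name)
    except Exception:
        # Any failure here (missing package, missing shared library) counts as "not installed".
        return False
    return hasattr(module, "RKNN")


if __name__ == "__main__":
    if rknn_toolkit_available():
        print("rknn-toolkit2 import OK")
    else:
        print("rknn-toolkit2 is not importable in this environment")
```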

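e: Go to the rknn-toolkit2-master/rknn-toolkit2/examples/onnx/yolov5 directory and edit test.py

Change the settings in it to your own

f: After editing, run python test.py; a .rknn model and result.jpg are generated in the root directory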
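For orientation, the conversion part of such a script can be sketched roughly as follows. This is not the contents of test.py; it is a minimal sketch that uses the RKNN calls config, load_onnx, build, export_rknn and release. The file names, the mean/std values and the rk3588 target are assumptions to replace with your own, and the rknn_cls parameter is a hypothetical hook added only so the flow can be exercised without the toolkit installed.

```python
def convert_onnx_to_rknn(onnx_path, rknn_path, dataset_path,
                         target_platform="rk3588", rknn_cls=None):
    """Convert an ONNX model to a quantized .rknn file and return the output path."""
    if rknn_cls is None:
        # Requires rknn-toolkit2 to be installed in the active environment.
        from rknn.api import RKNN as rknn_cls

    rknn = rknn_cls(verbose=True)
    try:
        # Normalization values are an assumption (inputs scaled from 0..255 to 0..1).
        rknn.config(mean_values=[[0, 0, 0]], std_values=[[255, 255, 255]],
                    target_platform=target_platform)
        if rknn.load_onnx(model=onnx_path) != 0:
            raise RuntimeError("load_onnx failed")
        # dataset_path is a text file listing calibration images for quantization.
        if rknn.build(do_quantization=True, dataset=dataset_path) != 0:
            raise RuntimeError("build failed")
        if rknn.export_rknn(rknn_path) != 0:
            raise RuntimeError("export_rknn failed")
    finally:
        # Always free the toolkit's resources, even if a step failed.
        rknn.release()
    return rknn_path


if __name__ == "__main__":
    # Placeholder file names; substitute your own model and dataset list.
    convert_onnx_to_rknn("yolov5n.onnx", "yolov5n_result.rknn", "dataset.txt")
```

The target platform matters here: a model built for a different chip may not load on the 3588 board.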

5: Deployment on the RK3588

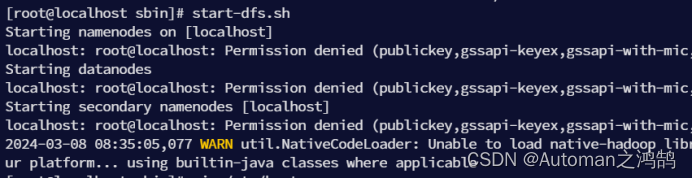

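Get rknpu from the official Rockchip site

Link

Put the video to run inference on into the model directory, in H.264 or H.265 format

Run ./rknn_yolov5_video_demo ./model/RK3588/yolov5n_result.rknn ./model/a.mp4 264 (use 265 for an H.265 video). This completes inference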

==================================Update 24.3.7============================

Modified rknn_yolov5_demo so that it reads its input from a video

// Copyright (c) 2021 by Rockchip Electronics Co., Ltd. All Rights Reserved.

//

// Licensed under the Apache License, Version 2.0 (the "License");

// you may not use this file except in compliance with the License.

// You may obtain a copy of the License at

//

// http://www.apache.org/licenses/LICENSE-2.0

//

// Unless required by applicable law or agreed to in writing, software

// distributed under the License is distributed on an "AS IS" BASIS,

// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

// See the License for the specific language governing permissions and

// limitations under the License.

/*-------------------------------------------

Includes

-------------------------------------------*/

#include <dlfcn.h>

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <sys/time.h>

#define _BASETSD_H

#include "RgaUtils.h"

#include "postprocess.h"

#include "rknn_api.h"

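#include "preprocess.h"

// cv::VideoCapture and cv::imshow/cv::waitKey need these OpenCV modules

#include "opencv2/videoio.hpp"

#include "opencv2/highgui.hpp"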

#define PERF_WITH_POST 1

/*-------------------------------------------

Functions

-------------------------------------------*/

static void dump_tensor_attr(rknn_tensor_attr *attr)

{

std::string shape_str = attr->n_dims < 1 ? "" : std::to_string(attr->dims[0]);

for (int i = 1; i < attr->n_dims; ++i)

{

shape_str += ", " + std::to_string(attr->dims[i]);

}

printf(" index=%d, name=%s, n_dims=%d, dims=[%s], n_elems=%d, size=%d, w_stride = %d, size_with_stride=%d, fmt=%s, "

"type=%s, qnt_type=%s, "

"zp=%d, scale=%f\n",

attr->index, attr->name, attr->n_dims, shape_str.c_str(), attr->n_elems, attr->size, attr->w_stride,

attr->size_with_stride, get_format_string(attr->fmt), get_type_string(attr->type),

get_qnt_type_string(attr->qnt_type), attr->zp, attr->scale);

}

double __get_us(struct timeval t) { return (t.tv_sec * 1000000 + t.tv_usec); }

static unsigned char *load_data(FILE *fp, size_t ofst, size_t sz)

{

unsigned char *data;

int ret;

data = NULL;

if (NULL == fp)

{

return NULL;

}

ret = fseek(fp, ofst, SEEK_SET);

if (ret != 0)

{

printf("blob seek failure.\n");

return NULL;

}

data = (unsigned char *)malloc(sz);

if (data == NULL)

{

printf("buffer malloc failure.\n");

return NULL;

}

ret = fread(data, 1, sz, fp);

return data;

}

static unsigned char *load_model(const char *filename, int *model_size)

{

FILE *fp;

unsigned char *data;

fp = fopen(filename, "rb");

if (NULL == fp)

{

printf("Open file %s failed.\n", filename);

return NULL;

}

fseek(fp, 0, SEEK_END);

int size = ftell(fp);

data = load_data(fp, 0, size);

fclose(fp);

*model_size = size;

return data;

}

static int saveFloat(const char *file_name, float *output, int element_size)

{

FILE *fp;

fp = fopen(file_name, "w");

for (int i = 0; i < element_size; i++)

{

fprintf(fp, "%.6f\n", output[i]);

}

fclose(fp);

return 0;

}

/*-------------------------------------------

Main Functions

-------------------------------------------*/

int main(int argc, char **argv)

{

if (argc < 2)

{

printf("Usage: %s <rknn model> <input_image_path> <resize/letterbox> <output_image_path>\n", argv[0]);

return -1;

}

int ret;

rknn_context ctx;

size_t actual_size = 0;

int img_width = 0;

int img_height = 0;

int img_channel = 0;

const float nms_threshold = NMS_THRESH; // default NMS threshold

const float box_conf_threshold = BOX_THRESH; // default confidence threshold

struct timeval start_time, stop_time;

char *model_name = (char *)argv[1];

//char *input_path = argv[2];

std::string option = "letterbox";

std::string out_path = "./out.jpg";

if (argc >= 4)

{

option = argv[3];

}

if (argc >= 5)

{

out_path = argv[4];

}

// init rga context

rga_buffer_t src;

rga_buffer_t dst;

memset(&src, 0, sizeof(src));

memset(&dst, 0, sizeof(dst));

printf("post process config: box_conf_threshold = %.2f, nms_threshold = %.2f\n", box_conf_threshold, nms_threshold);

/* Create the neural network */

printf("Loading model...\n");

int model_data_size = 0;

unsigned char *model_data = load_model(model_name, &model_data_size);

ret = rknn_init(&ctx, model_data, model_data_size, 0, NULL);

if (ret < 0)

{

printf("rknn_init error ret=%d\n", ret);

return -1;

}

rknn_sdk_version version;

ret = rknn_query(ctx, RKNN_QUERY_SDK_VERSION, &version, sizeof(rknn_sdk_version));

if (ret < 0)

{

printf("rknn_query error ret=%d\n", ret);

return -1;

}

printf("sdk version: %s driver version: %s\n", version.api_version, version.drv_version);

rknn_input_output_num io_num;

ret = rknn_query(ctx, RKNN_QUERY_IN_OUT_NUM, &io_num, sizeof(io_num));

if (ret < 0)

{

printf("rknn_query error ret=%d\n", ret);

return -1;

}

printf("model input num: %d, output num: %d\n", io_num.n_input, io_num.n_output);

rknn_tensor_attr input_attrs[io_num.n_input];

memset(input_attrs, 0, sizeof(input_attrs));

for (int i = 0; i < io_num.n_input; i++)

{

input_attrs[i].index = i;

ret = rknn_query(ctx, RKNN_QUERY_INPUT_ATTR, &(input_attrs[i]), sizeof(rknn_tensor_attr));

if (ret < 0)

{

printf("rknn_query error ret=%d\n", ret);

return -1;

}

dump_tensor_attr(&(input_attrs[i]));

}

rknn_tensor_attr output_attrs[io_num.n_output];

memset(output_attrs, 0, sizeof(output_attrs));

for (int i = 0; i < io_num.n_output; i++)

{

output_attrs[i].index = i;

ret = rknn_query(ctx, RKNN_QUERY_OUTPUT_ATTR, &(output_attrs[i]), sizeof(rknn_tensor_attr));

dump_tensor_attr(&(output_attrs[i]));

}

int channel = 3;

int width = 0;

int height = 0;

if (input_attrs[0].fmt == RKNN_TENSOR_NCHW)

{

printf("model is NCHW input fmt\n");

channel = input_attrs[0].dims[1];

height = input_attrs[0].dims[2];

width = input_attrs[0].dims[3];

}

else

{

printf("model is NHWC input fmt\n");

height = input_attrs[0].dims[1];

width = input_attrs[0].dims[2];

channel = input_attrs[0].dims[3];

}

printf("model input height=%d, width=%d, channel=%d\n", height, width, channel);

rknn_input inputs[1];

memset(inputs, 0, sizeof(inputs));

inputs[0].index = 0;

inputs[0].type = RKNN_TENSOR_UINT8;

inputs[0].size = width * height * channel;

inputs[0].fmt = RKNN_TENSOR_NHWC;

inputs[0].pass_through = 0;

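// Read frames from a video file instead of a single image

//printf("Read %s ...\n", input_path);

cv::VideoCapture cap("/home/pi/rknpu2-master/examples/rknn_yolov5_demo/model/sample.mp4");

// Stop early if the video cannot be opened

if (!cap.isOpened())

{

printf("cv::VideoCapture open fail!\n");

return -1;

}

cv::Mat frame;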

while(cap.read(frame)){

//cv::imshow("frame",frame);

cv::Mat orig_img = frame.clone();

//cv::Mat orig_img = cv::imread(input_path, 1);

if (!orig_img.data)

{

printf("read video frame fail!\n");

return -1;

}

cv::Mat img;

cv::cvtColor(orig_img, img, cv::COLOR_BGR2RGB);

img_width = img.cols;

img_height = img.rows;

printf("img width = %d, img height = %d\n", img_width, img_height);

// Specify the target size and preprocessing method; LetterBox preprocessing is used by default

BOX_RECT pads;

memset(&pads, 0, sizeof(BOX_RECT));

cv::Size target_size(width, height);

cv::Mat resized_img(target_size.height, target_size.width, CV_8UC3);

// Compute the scale factors

float scale_w = (float)target_size.width / img.cols;

float scale_h = (float)target_size.height / img.rows;

if (img_width != width || img_height != height)

{

// Direct resize, accelerated with RGA

if (option == "resize")

{

printf("resize image by rga\n");

ret = resize_rga(src, dst, img, resized_img, target_size);

if (ret != 0)

{

fprintf(stderr, "resize with rga error\n");

return -1;

}

// Save the preprocessed image

cv::imwrite("resize_input.jpg", resized_img);

}

else if (option == "letterbox")

{

printf("resize image with letterbox\n");

float min_scale = std::min(scale_w, scale_h);

scale_w = min_scale;

scale_h = min_scale;

letterbox(img, resized_img, pads, min_scale, target_size);

// Save the preprocessed image

cv::imwrite("letterbox_input.jpg", resized_img);

}

else

{

fprintf(stderr, "Invalid resize option. Use 'resize' or 'letterbox'.\n");

return -1;

}

inputs[0].buf = resized_img.data;

}

else

{

inputs[0].buf = img.data;

}

gettimeofday(&start_time, NULL);

rknn_inputs_set(ctx, io_num.n_input, inputs);

rknn_output outputs[io_num.n_output];

memset(outputs, 0, sizeof(outputs));

for (int i = 0; i < io_num.n_output; i++)

{

outputs[i].want_float = 0;

}

// Run inference

ret = rknn_run(ctx, NULL);

ret = rknn_outputs_get(ctx, io_num.n_output, outputs, NULL);

gettimeofday(&stop_time, NULL);

printf("once run use %f ms\n", (__get_us(stop_time) - __get_us(start_time)) / 1000);

// Post-processing

detect_result_group_t detect_result_group;

std::vector<float> out_scales;

std::vector<int32_t> out_zps;

for (int i = 0; i < io_num.n_output; ++i)

{

out_scales.push_back(output_attrs[i].scale);

out_zps.push_back(output_attrs[i].zp);

}

post_process((int8_t *)outputs[0].buf, (int8_t *)outputs[1].buf, (int8_t *)outputs[2].buf, height, width,

box_conf_threshold, nms_threshold, pads, scale_w, scale_h, out_zps, out_scales, &detect_result_group);

// Draw the boxes and confidence scores

char text[256];

for (int i = 0; i < detect_result_group.count; i++)

{

detect_result_t *det_result = &(detect_result_group.results[i]);

sprintf(text, "%s %.1f%%", det_result->name, det_result->prop * 100);

printf("%s @ (%d %d %d %d) %f\n", det_result->name, det_result->box.left, det_result->box.top,

det_result->box.right, det_result->box.bottom, det_result->prop);

int x1 = det_result->box.left;

int y1 = det_result->box.top;

int x2 = det_result->box.right;

int y2 = det_result->box.bottom;

rectangle(orig_img, cv::Point(x1, y1), cv::Point(x2, y2), cv::Scalar(255, 0, 0, 255), 3);

putText(orig_img, text, cv::Point(x1, y1 + 12), cv::FONT_HERSHEY_SIMPLEX, 0.4, cv::Scalar(255, 255, 255));

}

printf("save detect result to %s\n", out_path.c_str());

imwrite(out_path, orig_img);

imshow("aaa",orig_img);

cv::waitKey(1);

ret = rknn_outputs_release(ctx, io_num.n_output, outputs);

// Timing statistics

//int test_count = 10;

//gettimeofday(&start_time, NULL);

//for (int i = 0; i < test_count; ++i)

//{

//rknn_inputs_set(ctx, io_num.n_input, inputs);

// ret = rknn_run(ctx, NULL);

// ret = rknn_outputs_get(ctx, io_num.n_output, outputs, NULL);

//#if PERF_WITH_POST

// post_process((int8_t *)outputs[0].buf, (int8_t *)outputs[1].buf, (int8_t *)outputs[2].buf, height, width,

// box_conf_threshold, nms_threshold, pads, scale_w, scale_h, out_zps, out_scales, &detect_result_group);

//#endif

// ret = rknn_outputs_release(ctx, io_num.n_output, outputs);

//}

//gettimeofday(&stop_time, NULL);

//printf("loop count = %d , average run %f ms\n", test_count,

// (__get_us(stop_time) - __get_us(start_time)) / 1000.0 / test_count);

}

// Release resources once, after the whole video has been processed

deinitPostProcess();

ret = rknn_destroy(ctx);

if (model_data)

{

free(model_data);

}

return 0;

}
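The letterbox branch in the loop above takes the smaller of the two scale factors so the frame keeps its aspect ratio, then pads the rest of the model input. The geometry can be sketched in a few lines of Python. This is an illustration only: the function names are made up for the example, and splitting the padding evenly between both sides is an assumption, since the letterbox() implementation itself is not shown in this post.

```python
def letterbox_geometry(img_w, img_h, target_w, target_h):
    """Return (scale, new_w, new_h, pad_left, pad_top) for an aspect-preserving resize."""
    # Same idea as min(scale_w, scale_h) in the C++ loop.
    scale = min(target_w / img_w, target_h / img_h)
    new_w = int(round(img_w * scale))
    new_h = int(round(img_h * scale))
    # Assumption: the leftover space is split evenly, with any odd pixel on the right/bottom.
    pad_left = (target_w - new_w) // 2
    pad_top = (target_h - new_h) // 2
    return scale, new_w, new_h, pad_left, pad_top


def to_original(x, y, scale, pad_left, pad_top):
    """Map a point from model-input coordinates back to the original frame."""
    return (x - pad_left) / scale, (y - pad_top) / scale


if __name__ == "__main__":
    # A 1920x1080 frame fed to a 640x640 model input.
    scale, new_w, new_h, pad_left, pad_top = letterbox_geometry(1920, 1080, 640, 640)
    print(scale, new_w, new_h, pad_left, pad_top)
    print(to_original(320, 320, scale, pad_left, pad_top))
```

Mapping boxes back with the same scale and padding is what lets the rectangles be drawn on the original frame rather than on the resized input.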