Recurrent Neural Networks (Advanced): RNN Classifier

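This post implements a recurrent neural network classifier (RNN Classifier).

The dataset consists of (Name, Country) pairs: several thousand names from 18 different countries. The goal is to train a model that takes a name as input and predicts which country it comes from. The training and test sets are listed below.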

Download links

names_test.csv.gz

names_train.csv.gz

We simplify the RNN network from the previous post.

When a Name is fed in, it arrives as a sequence in which each character is one element, $X_{1}, X_{2}, \cdots, X_{n}$. The sequence length varies from name to name.

In the model, the input Name is x and the output Country is o.

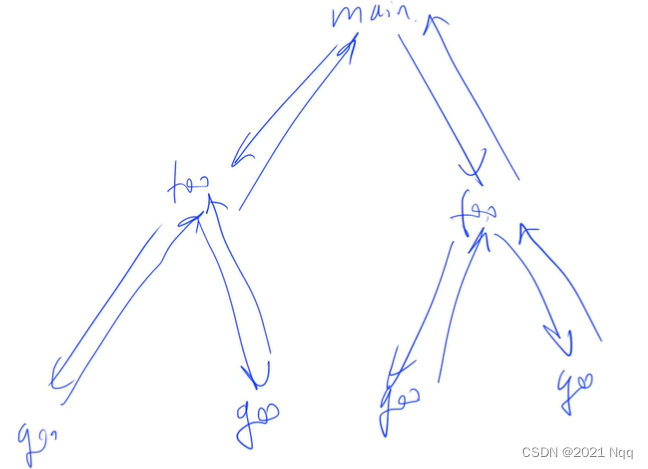

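Main loop

RNNClassifier is the custom classifier model. N_CHARS is the size of the character vocabulary (the number of entries in the one-hot alphabet), HIDDEN_SIZE is the dimension of the hidden state, N_COUNTRY is the number of classes, and N_LAYER sets how many GRU layers are used.

start = time.time() records the start time so that the elapsed training time can be reported.

The loop then runs one training pass and one test pass per epoch. The accuracy returned by each test pass is appended to acc_list, which is later used to plot accuracy against epoch.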
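The loop structure can be sketched in isolation. This is a minimal sketch, not the post's training code: format_elapsed and run_epochs are hypothetical helper names, and the two lambdas are stubs standing in for the real training and evaluation functions.

```python
import math
import time


def format_elapsed(seconds):
    """Format a duration in seconds as '<minutes>m<seconds>s'."""
    minutes = math.floor(seconds / 60)
    return '%dm%ds' % (minutes, seconds - minutes * 60)


def run_epochs(n_epochs, train_fn, test_fn):
    """Run one training pass and one test pass per epoch, collecting accuracies."""
    start = time.time()
    acc_list = []
    for epoch in range(1, n_epochs + 1):
        train_fn(epoch)
        acc_list.append(test_fn())
    return acc_list, format_elapsed(time.time() - start)


# Stub callbacks standing in for the real training and evaluation functions.
history, elapsed = run_epochs(3, train_fn=lambda epoch: None, test_fn=lambda: 0.5)
print(history, elapsed)
```

Keeping the accuracies in a plain list makes it easy to hand them to a plotting library once training has finished.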

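Preparing the data

Step one is to split each name string into a list of characters. Because the names contain only English letters, each character can be mapped to its ASCII code, which gives an ASCII vocabulary of size 128. For example, the letter 'M' has code 77, which corresponds to a one-hot vector whose entry at index 77 is 1 and whose other entries are all 0. The embedding layer only needs to know which index is 1, not the full one-hot vector.

Step two is padding: the sequences have different lengths, but a batch has to form a rectangular matrix.

Step three is to convert the list of country names into integer class labels.

Now let's look at the code.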

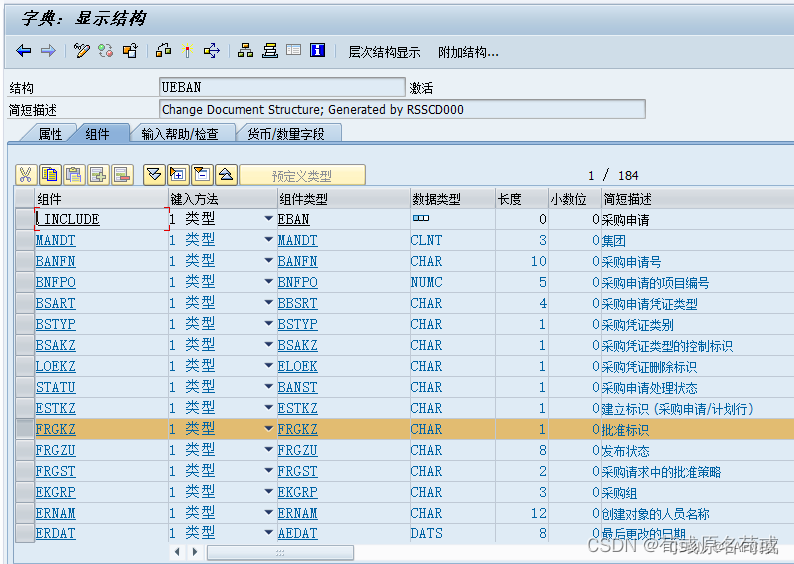

We read the data from the .gz file with gzip.open and csv.reader. Each row of the dataset is a (name, country) tuple.
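As an illustration of this reading step, the sketch below writes a tiny gzip-compressed CSV to a temporary directory and reads it back. The file name names_sample.csv.gz and the two sample rows are made up for the example; the real files are names_train.csv.gz and names_test.csv.gz.

```python
import csv
import gzip
import os
import tempfile

# Hypothetical sample rows; the real files contain thousands of (name, country) pairs.
sample_rows = [['Alice', 'France'], ['Bob', 'Germany']]

path = os.path.join(tempfile.mkdtemp(), 'names_sample.csv.gz')

# Write a tiny gzip-compressed CSV so the example is self-contained.
with gzip.open(path, 'wt', newline='') as f:
    csv.writer(f).writerows(sample_rows)

# 'rt' opens the compressed file in text mode, which is what csv.reader expects.
with gzip.open(path, 'rt', newline='') as f:
    rows = list(csv.reader(f))

names = [row[0] for row in rows]
countries = [row[1] for row in rows]
print(names, countries)
```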

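We need to keep the country labels. set(self.countries) turns the list into a set, which removes duplicates so that each country appears only once; list(sorted(set(self.countries))) then sorts the set and converts it back into a list. self.getCountryDict() builds a dictionary from that list, in which each country name is a key and its index is the value.

The name returned by __getitem__ is a string, and the country it returns is an index.

__getitem__ is a special method in Python that is called when an object is indexed with square brackets. For example, given a list lst, the third element is accessed as lst[2].

In the NameDataset class, __getitem__ returns a tuple with the name and country stored at the given index of the dataset.

The method takes an index and returns a tuple (name, country), where name is the name at that index and country is the corresponding country as an integer. The integer is found by looking up the country name in self.country_dict, which maps country names to integers.

For example, if self.names is ['Alice', 'Bob', 'Charlie'], self.countries is ['France', 'Germany', 'Italy'] and self.country_dict is {'France': 0, 'Germany': 1, 'Italy': 2}, then calling __getitem__(1) returns ('Bob', 1).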
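The indexing behaviour can be tried without any data files. TinyNameDataset below is a hypothetical stand-in for NameDataset that hard-codes the three samples from the example; it is only meant to show how square-bracket indexing reaches __getitem__.

```python
class TinyNameDataset:
    """Hypothetical stand-in for NameDataset that holds three hard-coded samples."""

    def __init__(self):
        self.names = ['Alice', 'Bob', 'Charlie']
        self.countries = ['France', 'Germany', 'Italy']
        self.country_dict = {'France': 0, 'Germany': 1, 'Italy': 2}

    def __getitem__(self, index):
        # Return the raw name and the integer label of its country.
        return self.names[index], self.country_dict[self.countries[index]]

    def __len__(self):
        return len(self.names)


dataset = TinyNameDataset()
sample = dataset[1]  # square brackets call dataset.__getitem__(1)
print(sample, len(dataset))
```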

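The getCountryDict method builds a dictionary that maps countries to integers. It iterates over the countries in self.country_list with enumerate and adds one dictionary entry per country: the key is the country name and the value is the country's index in the list.

The idx2country method takes an index and returns the corresponding country from self.country_list.

The getCountriesNum method returns self.country_num, the number of unique countries in self.country_list.

For example, if self.country_list is ['France', 'Germany', 'Italy'], then getCountryDict returns the dictionary {'France': 0, 'Germany': 1, 'Italy': 2}, idx2country(1) returns 'Germany', and getCountriesNum() returns 3.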
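The label bookkeeping can be sketched with plain Python containers. The country values below are sample data chosen for illustration, and the module-level idx2country function mirrors the method of the same name rather than reproducing the class.

```python
countries = ['Italy', 'France', 'Germany', 'France', 'Italy']

# Deduplicate with set(), then sort so the index assignment is reproducible.
country_list = sorted(set(countries))

# Map each country name to its position in the sorted list.
country_dict = {name: idx for idx, name in enumerate(country_list)}


def idx2country(index):
    """Return the country name stored at the given label index."""
    return country_list[index]


print(country_list)
print(country_dict)
print(idx2country(1), len(country_list))
```

Sorting before enumerating matters: a bare set has no guaranteed order, so without sorted() the same country could receive a different label on different runs.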

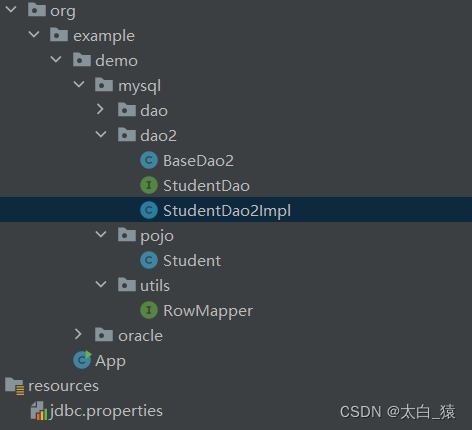

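Next we prepare the Dataset and DataLoader.

This code creates two NameDataset objects, one for training and one for testing.

The DataLoader class then wraps each dataset so that it is served in batches.

trainset is the training dataset, and trainloader is an iterator that yields training batches of size BATCH_SIZE; because shuffle=True, the samples are reshuffled at the start of every epoch. testset is the test dataset, and testloader is an iterator that yields test batches of size BATCH_SIZE; because shuffle=False, the samples keep their original order.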
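To see what one batch looks like, the sketch below runs a DataLoader over a hypothetical four-sample dataset (PairDataset and its contents are invented for the example). With the default collate function the names stay Python strings, while the integer labels are stacked into a tensor.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class PairDataset(Dataset):
    """Hypothetical toy dataset of (name, country_index) pairs."""

    def __init__(self):
        self.samples = [('Alice', 0), ('Bob', 1), ('Charlie', 2), ('Dora', 1)]

    def __getitem__(self, index):
        return self.samples[index]

    def __len__(self):
        return len(self.samples)


# shuffle=False keeps the original order, as for the test loader.
loader = DataLoader(PairDataset(), batch_size=2, shuffle=False)
names, countries = next(iter(loader))
print(names)      # the two name strings of the first batch
print(countries)  # integer labels collated into a tensor
```

This is why the names still have to be converted to a tensor by hand later on: the DataLoader only tensorizes the labels.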

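Finally, N_COUNTRY is set to the number of countries in the training dataset.

The hyperparameters initialized for this network are the constants HIDDEN_SIZE, BATCH_SIZE, N_LAYER, N_EPOCHS, N_CHARS and USE_GPU, defined at the top of the full code listing at the end of this post.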

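Model design

This code defines a PyTorch model called RNNClassifier, a classifier that assigns names to countries.

It is an RNN-based classifier that uses a GRU (Gated Recurrent Unit) as its recurrent structure.

The model defines the following layers:

- Embedding layer: converts each character of the input sequence into a vector.
- GRU layer: processes the embedded sequence with a GRU.
- Linear layer: maps the output of the GRU layer to the output of the classifier.

The model also defines a function named forward, which implements the forward pass. In it, the input batch is transposed, passed through the embedding layer and the GRU layer, and finally mapped to the output by the linear layer.

The model also defines a function named _init_hidden, which initializes the hidden state of the GRU layer.

The constructor (__init__) takes the following parameters:

- input_size: the size of the character vocabulary of the input sequences.
- hidden_size: the size of the GRU hidden state.
- output_size: the output size of the classifier (the number of countries).
- n_layers: the number of GRU layers.
- bidirectional: whether to use a bidirectional GRU (True by default).

The embedding layer is built from input_size and hidden_size.

hidden_size and n_layers are used by the GRU: both its input and its output feature size are hidden_size, and it has n_layers layers.

The GRU module is constructed with the following arguments:

- input_size: the number of features of the input.
- hidden_size: the number of features of the hidden state.
- num_layers (n_layers in this code): the number of GRU layers.
- bidirectional: whether to use a bidirectional GRU. If True the GRU is bidirectional, otherwise it is unidirectional.

In this example, input_size and hidden_size of the GRU are both set to the hidden size. This is because the input has already passed through the embedding layer, and the size of the embedding vectors equals the hidden size.

The output of the GRU layer has shape (seq_len, batch_size, hidden_size * n_directions):

- seq_len is the length of the input sequence.
- batch_size is the number of samples in the batch.
- hidden_size * n_directions is the number of output features per time step. n_directions is 2 for a bidirectional GRU and 1 otherwise.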
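These shapes are easy to confirm on random data. The sizes below (5 time steps, batch of 3, hidden size 4, 2 layers) are arbitrary values picked for the sketch, not the post's hyperparameters.

```python
import torch

seq_len, batch_size, hidden_size, n_layers = 5, 3, 4, 2
n_directions = 2  # bidirectional

gru = torch.nn.GRU(hidden_size, hidden_size, n_layers, bidirectional=True)

# Random stand-in for an embedded batch: (seq_len, batch_size, hidden_size).
x = torch.randn(seq_len, batch_size, hidden_size)
h0 = torch.zeros(n_layers * n_directions, batch_size, hidden_size)

output, hidden = gru(x, h0)
print(output.shape)  # (seq_len, batch_size, hidden_size * n_directions)
print(hidden.shape)  # (n_layers * n_directions, batch_size, hidden_size)
```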

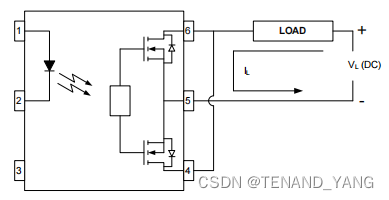

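Bidirectional recurrent neural network (RNN)

In a unidirectional recurrent neural network the structure is as follows.

The hidden states and outputs are computed along one direction, so by the time we reach $x_{N-1}$ the hidden state contains only information from the past.

In NLP, however, we sometimes also need to take future information into account.

This leads to the bidirectional recurrent neural network.

In the forward direction over the sequence we compute $h_{N}^{f}$, and in the backward direction we compute $h_{0}^{b}$. Merging the two gives $\boldsymbol{h}_{N}$, which contains the information of both $h_{N}^{f}$ and $h_{0}^{b}$.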

The final outputs of the hidden layer are $\boldsymbol{h}_{1-N}$. In the GRU, hidden consists of $h_{N}^{f}$ and $h_{N}^{b}$, and output is $\boldsymbol{h}_{1-N}$.

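With bidirectional RNNs in mind, we can see why the linear layer self.fc takes hidden_size * self.n_directions input features: for a bidirectional RNN the hidden size is multiplied by 2.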
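The relation between hidden and output in a bidirectional GRU can be checked numerically. The sketch below uses arbitrary small sizes and random input; it relies on PyTorch's documented layout in which the final hidden states are ordered layer by layer, forward direction before backward direction.

```python
import torch

torch.manual_seed(0)
seq_len, batch_size, hidden_size = 6, 2, 3

gru = torch.nn.GRU(hidden_size, hidden_size, num_layers=2, bidirectional=True)
x = torch.randn(seq_len, batch_size, hidden_size)
output, hidden = gru(x)

# The last two entries of hidden belong to the top layer.
forward_final = hidden[-2]   # top layer, forward direction, after the last time step
backward_final = hidden[-1]  # top layer, backward direction, after the first time step

# The same states appear in output: forward half at the last step, backward half at the first.
same_forward = torch.allclose(forward_final, output[-1, :, :hidden_size])
same_backward = torch.allclose(backward_final, output[0, :, hidden_size:])

# Concatenating both directions gives hidden_size * 2 features per sample.
hidden_cat = torch.cat([hidden[-1], hidden[-2]], dim=1)
print(same_forward, same_backward, hidden_cat.shape)
```

The concatenated tensor has hidden_size * 2 columns, which matches the number of input features of the linear layer.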

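Next, let's look at forward.

input.t() transposes the input matrix into a seq x batch matrix, which is the layout the embedding layer (and the GRU after it) will work with. batch_size is then read from the transposed tensor.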

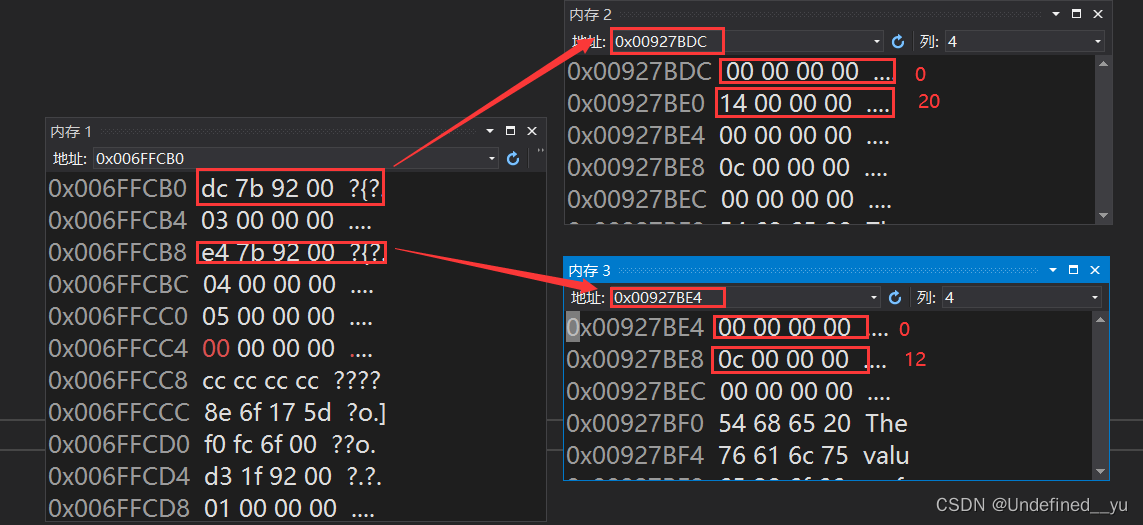

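hidden = self._init_hidden(batch_size) initializes hidden with shape (nLayer * nDirections, batchSize, hiddenSize).

The embedding has shape (seqLen, batchSize, hiddenSize).

The next line calls pack_padded_sequence, which compresses the embedded batch into a sequence that contains only the valid (non-padding) elements. The embedding passed in has shape (seqLen, batchSize, hiddenSize).

seq_lengths holds the lengths: each element is the true length of one sequence in the batch.

The sequences must first be sorted by length, in descending order.

Once the data is sorted, it goes through the embedding layer,

and pack_padded_sequence then turns the result into a PackedSequence.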

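For example, suppose you have a padded batch (written here with one sequence per row and scalar entries for readability):

embedding = [

[1, 2, 3, 0], # seq 1

[4, 5, 0, 0], # seq 2

[6, 0, 0, 0] # seq 3

]

and a list of lengths:

seq_lengths = [3, 2, 1]

Calling pack_padded_sequence(embedding, seq_lengths, batch_first=True) then returns a PackedSequence whose data holds the valid elements one time step after another:

[1, 4, 6, 2, 5, 3]

together with batch_sizes, the number of sequences that are still active at each time step:

[3, 2, 1]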
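A quick way to see how pack_padded_sequence lays out a small padded batch is to run it on the rows [1, 2, 3, 0], [4, 5, 0, 0] and [6, 0, 0, 0] with lengths 3, 2 and 1. The sketch assumes one sequence per row, hence batch_first=True; the model in this post uses the seq-first layout instead. The packed data is stored time step by time step, and batch_sizes records how many sequences are still active at each step.

```python
import torch
from torch.nn.utils.rnn import pack_padded_sequence

# One sequence per row, zero-padded on the right, sorted by decreasing length.
padded = torch.tensor([[1., 2., 3., 0.],
                       [4., 5., 0., 0.],
                       [6., 0., 0., 0.]])
lengths = torch.tensor([3, 2, 1])

packed = pack_padded_sequence(padded, lengths, batch_first=True)
print(packed.data)         # valid elements, time step by time step
print(packed.batch_sizes)  # number of active sequences per time step
```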

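We then run the GRU to obtain output and hidden. With a bidirectional RNN we get two final hidden states, one per direction, and concatenate them.

Finally, the fully connected layer produces the output.

The whole model works as follows.

forward is a module's forward-pass function. In PyTorch it runs whenever the model is called on data, as in classifier(inputs, seq_lengths).

The function takes two arguments:

- input: the input data, of shape (batch_size, seq_len).
- seq_lengths: the lengths of the input sequences, one entry per sample, so batch_size entries in total.

The comment on the first line is a reminder that the input must be converted from shape (batch_size, seq_len) to (seq_len, batch_size), because the GRU layer expects input of shape (seq_len, batch_size, input_size).

So we call input.t() to transpose the input matrix, which gives the new shape (seq_len, batch_size).

We then call self._init_hidden(batch_size) to initialize the hidden state of the GRU layer.

Next, we call self.embedding(input) to obtain the embedding vectors.

We then call pack_padded_sequence to pack the embedding vectors together with the sequence lengths, which gives the input of the GRU layer.

After that, we call self.gru(gru_input, hidden) to compute the output of the GRU layer.

If the GRU is bidirectional, the final forward and backward hidden states of the last layer (hidden[-2] and hidden[-1]) are concatenated into a new tensor, which is assigned to hidden_cat.

If the GRU is unidirectional, the hidden state of the last layer, hidden[-1], is assigned to hidden_cat.

Finally, we call self.fc(hidden_cat) to compute the output of the fully connected layer.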

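Converting names to tensors

First each Name is split into characters, and each character is converted to its ASCII value.

Then the sequences are padded.

After padding, the batch is sorted by sequence length in descending order.

The matrix is transposed later, at the start of forward.

We define a make_tensor function to do this.

It relies on the helper name2list().

This function takes a string argument name and returns two values:

- A list in which each element is the ASCII code of one character of the string.

- The length of the string.

For example, calling name2list('Alice') returns the following two values:

- [65, 108, 105, 99, 101], the list of ASCII codes.

- 5, the length of the string.

The function turns a name into a sequence of numbers so that it can be processed by an RNN or another model.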
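The helper is short enough to try directly. The sketch below repeats its definition with a local variable name (codes) chosen for readability, and runs it on the example name.

```python
def name2list(name):
    """Return the ASCII codes of the characters in name, plus the name's length."""
    codes = [ord(c) for c in name]
    return codes, len(codes)


codes, length = name2list('Alice')
print(codes, length)
```

Note that upper-case and lower-case letters have different codes ('A' is 65, 'a' is 97), so the capital initial of a name is visible to the model.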

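sequences_and_lengths is a list in which every element is a tuple that contains a list and an integer.

The list is built with a list comprehension that loops over every name in names, passes it to name2list, and collects the return values.

For example, if names is ['Alice', 'Bob', 'Charlie'], then sequences_and_lengths is this list:

[([65, 108, 105, 99, 101], 5), ([66, 111, 98], 3), ([67, 104, 97, 114, 108, 105, 101], 7)]

It says that:

- The name 'Alice' is converted to the list [65, 108, 105, 99, 101], of length 5.

- The name 'Bob' is converted to the list [66, 111, 98], of length 3.

- The name 'Charlie' is converted to the list [67, 104, 97, 114, 108, 105, 101], of length 7.

name_sequences is a list in which every element is a list of ASCII codes. It is built with a list comprehension that loops over the tuples in sequences_and_lengths and collects the first element of each tuple (the list of ASCII codes).

For example, if sequences_and_lengths is [([65, 108, 105, 99, 101], 5), ([66, 111, 98], 3), ([67, 104, 97, 114, 108, 105, 101], 7)], then name_sequences is this list:

[[65, 108, 105, 99, 101], [66, 111, 98], [67, 104, 97, 114, 108, 105, 101]]

It says that:

- The name 'Alice' is converted to the list [65, 108, 105, 99, 101].

- The name 'Bob' is converted to the list [66, 111, 98].

- The name 'Charlie' is converted to the list [67, 104, 97, 114, 108, 105, 101].

seq_lengths is a tensor of lengths. Each element is the length of the ASCII code list of one name.

The tensor is built with a list comprehension that loops over the tuples in sequences_and_lengths and collects the second element of each tuple (the length). The resulting list is then converted to a tensor.

For example, if sequences_and_lengths is [([65, 108, 105, 99, 101], 5), ([66, 111, 98], 3), ([67, 104, 97, 114, 108, 105, 101], 7)], then seq_lengths is this tensor:

tensor([5, 3, 7])

It says that:

- The ASCII code list of the name 'Alice' has length 5.

- The ASCII code list of the name 'Bob' has length 3.

- The ASCII code list of the name 'Charlie' has length 7.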

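The variable countries is converted to a long (64-bit integer) tensor.

countries arrives from the DataLoader as a tensor of country indices (the integers returned by __getitem__), and .long() makes sure its dtype is torch.int64, the type CrossEntropyLoss expects for class-index targets.

For example, if countries is this tensor:

tensor([1, 2, 3], dtype=torch.int32)

then after the conversion to long it becomes:

tensor([1, 2, 3])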

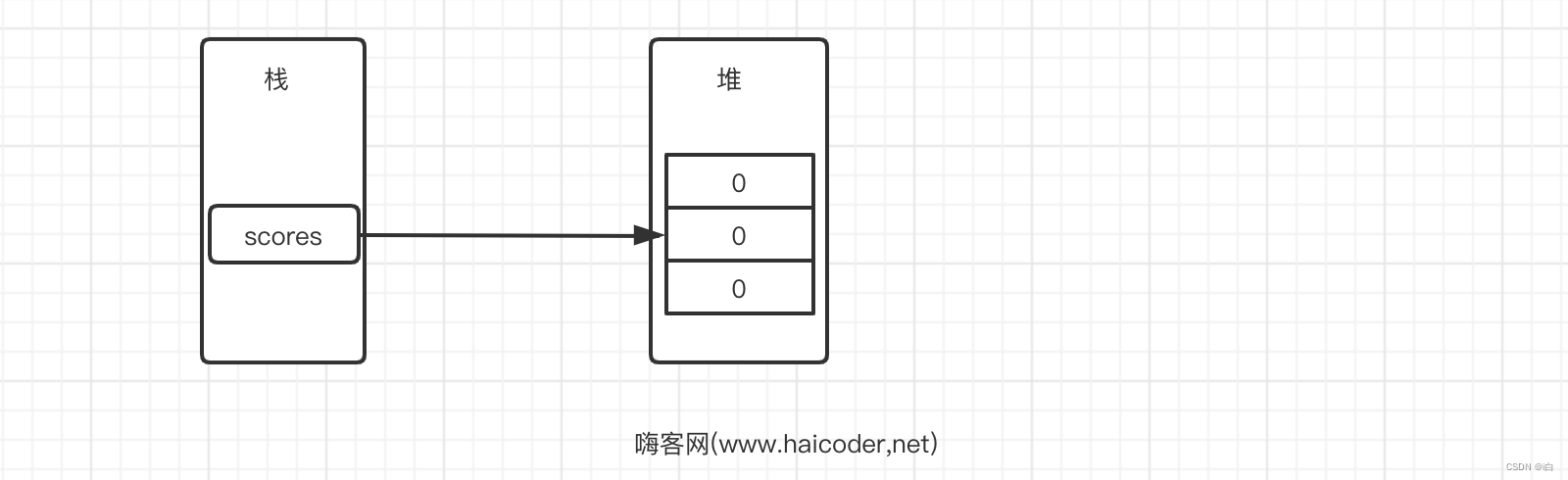

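This creates a long tensor of size (len(name_sequences), seq_lengths.max()) whose elements are all 0.

For example, if len(name_sequences) is 3 and seq_lengths.max() is 5, the tensor looks like this:

tensor([[0, 0, 0, 0, 0], [0, 0, 0, 0, 0], [0, 0, 0, 0, 0]])

The loop iterates over zip(name_sequences, seq_lengths) with enumerate. zip pairs up the two sequences name_sequences and seq_lengths and yields an iterator. At each iteration enumerate returns a tuple (idx, (seq, seq_len)), where idx is the row index and (seq, seq_len) are the sequence at that index and its length.

Each seq is converted to a tensor with torch.LongTensor and copied into the first seq_len positions of the corresponding row of seq_tensor.

The effect of this block is that every name shorter than the maximum length is padded with 0.

Next, seq_lengths.sort() sorts the seq_lengths tensor. The argument dim=0 sorts along dimension 0 (the only dimension of this 1-D tensor), and descending=True sorts in descending order.

sort() returns two tensors: the sorted values and, for each sorted position, the index that element had in the original tensor. They are assigned to seq_lengths and perm_idx.

The effect of this block is to sort seq_lengths from longest to shortest while recording the original index of every position.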

seq_tensor is then reordered according to perm_idx. perm_idx stores the original index of each position, so the $i$-th element of seq_tensor[perm_idx] is element $\text{perm\_idx}_i$ of seq_tensor.

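For example, if seq_tensor is

[ [1, 2, 3], [4, 5, 0], [6, 0, 0] ]

and perm_idx is

[2, 0, 1]

then seq_tensor[perm_idx] is

[ [6, 0, 0], [1, 2, 3], [4, 5, 0] ]

That is, element 2 of seq_tensor ([6, 0, 0]) moves to position 0, the original element 0 ([1, 2, 3]) moves to position 1, and the original element 1 ([4, 5, 0]) moves to position 2.

This reordering is needed because pack_padded_sequence() expects, by default, the sequences of a batch to be sorted by length in descending order.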
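The padding and sorting steps can be run together on a three-name batch. This is a minimal sketch of the same logic as make_tensor, applied to hard-coded ASCII lists for 'Alice', 'Bob' and 'Charlie'; the labels and the GPU transfer are left out.

```python
import torch

name_sequences = [[65, 108, 105, 99, 101],            # 'Alice'
                  [66, 111, 98],                       # 'Bob'
                  [67, 104, 97, 114, 108, 105, 101]]   # 'Charlie'
seq_lengths = torch.LongTensor([len(seq) for seq in name_sequences])

# Zero-initialized (batch_size, max_len) matrix; unused positions stay 0 (padding).
seq_tensor = torch.zeros(len(name_sequences), int(seq_lengths.max())).long()
for idx, (seq, seq_len) in enumerate(zip(name_sequences, seq_lengths)):
    seq_tensor[idx, :seq_len] = torch.LongTensor(seq)

# Sort the lengths in descending order and apply the same permutation to the rows.
seq_lengths, perm_idx = seq_lengths.sort(dim=0, descending=True)
seq_tensor = seq_tensor[perm_idx]

print(seq_lengths)
print(perm_idx)
print(seq_tensor)
```

The labels must be permuted with the same perm_idx, otherwise each name would be paired with another sample's country.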

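Training and testing

The training procedure is implemented by trainModel

and the test procedure by testModel (both appear in the full code below).

pred holds the predicted class for every input sample. The model outputs one score per class for each sample, and output.max(dim=1, keepdim=True)[1] picks the class with the highest score. dim=1 takes the maximum along dimension 1 (the class dimension), and keepdim=True keeps that dimension in the result. For example, if the model output is:

[[0.1, 0.9, 0.2], [0.2, 0.7, 0.1], [0.3, 0.1, 0.6]]

then output.max(dim=1, keepdim=True)[1] gives:

[[1], [1], [2]]

that is, the class with the highest score for each sample.

output.max(dim=1, keepdim=True) returns a pair: the first element holds the maximum value of each row of the output tensor, and the second element holds the index of that maximum in each row. [1] selects the second element, the indices of the maxima.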
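The example can be reproduced directly. The sketch below applies output.max to the three-sample score matrix used in the text and keeps both returned tensors to show what [1] selects.

```python
import torch

output = torch.tensor([[0.1, 0.9, 0.2],
                       [0.2, 0.7, 0.1],
                       [0.3, 0.1, 0.6]])

# max over dim=1 returns (values, indices); keepdim=True keeps a column shape (3, 1).
values, indices = output.max(dim=1, keepdim=True)
pred = output.max(dim=1, keepdim=True)[1]

print(values)
print(pred)
```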

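The line correct += pred.eq(target.view_as(pred)).sum().item() counts the correct predictions, from which the accuracy on the test set is computed.

First, pred is the class predicted by the model for each test sample.

target is the true class of each test sample.

view_as() changes the shape of a tensor; here it reshapes target to the same shape as pred.

eq() compares two tensors element by element and returns a tensor of the same shape whose entries are True where the two are equal and False otherwise.

sum() adds up the entries of a tensor (True counts as 1), and item() converts a one-element tensor to a Python number.

Finally, the variable correct stores the number of samples the model predicted correctly.

So this line adds the number of correct predictions in the current batch to the running total correct.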
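The counting step can be illustrated with a hand-made batch. The predictions and targets below are invented values chosen so that two of the three predictions are correct.

```python
import torch

pred = torch.tensor([[1], [1], [2]])  # predicted classes, shape (3, 1)
target = torch.tensor([1, 0, 2])      # true classes, shape (3,)

# Reshape target to (3, 1) and compare element by element.
matches = pred.eq(target.view_as(pred))

correct = 0
correct += matches.sum().item()       # number of correct predictions in this batch
print(matches)
print(correct)
```

Dividing the final value of correct by the number of test samples gives the accuracy reported after each epoch.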

Full code

import csv
import gzip
import math
import time

import matplotlib.pyplot as plt
import numpy as np
import torch
from torch.nn.utils.rnn import pack_padded_sequence
from torch.utils.data import DataLoader, Dataset

# ------------- Hyperparameters ---------------
HIDDEN_SIZE = 100
BATCH_SIZE = 256
N_LAYER = 2
N_EPOCHS = 100
N_CHARS = 128
USE_GPU = False

# --------------- Data preparation -----------------
class NameDataset(Dataset):
    def __init__(self, is_train_set=True):
        # Load names_train.csv.gz or names_test.csv.gz depending on is_train_set
        filename = 'names_train.csv.gz' if is_train_set else 'names_test.csv.gz'
        with gzip.open(filename, 'rt') as f:  # 'rt' opens the file for reading as text
            reader = csv.reader(f)
            rows = list(reader)
        self.names = [row[0] for row in rows]  # first column of each row: the name
        self.len = len(self.names)
        self.countries = [row[1] for row in rows]  # second column of each row: the country
        # Unique countries in self.countries, sorted alphabetically
        self.country_list = list(sorted(set(self.countries)))
        self.country_dict = self.getCountryDict()
        self.country_num = len(self.country_list)

    # Return the tuple (name, country index) stored at the given index.
    # Example: with names ['Alice', 'Bob', 'Charlie'], countries ['France', 'Germany', 'Italy']
    # and country_dict {'France': 0, 'Germany': 1, 'Italy': 2}, __getitem__(1) returns ('Bob', 1).
    def __getitem__(self, index):
        return self.names[index], self.country_dict[self.countries[index]]

    def __len__(self):
        return self.len

    # Build a dictionary that maps each country name (key) to its index in
    # self.country_list (value).
    def getCountryDict(self):
        country_dict = dict()  # start from an empty dictionary
        # enumerate yields (idx, country_name) pairs, counting from 0
        for idx, country_name in enumerate(self.country_list, 0):
            country_dict[country_name] = idx  # e.g. country_dict['France'] = 0
        return country_dict

    # Return the country name stored at the given index of self.country_list.
    def idx2country(self, index):
        return self.country_list[index]

    # Return the number of unique countries.
    def getCountriesNum(self):
        return self.country_num

    # Example: if self.country_list is ['France', 'Germany', 'Italy'],
    # getCountryDict() returns {'France': 0, 'Germany': 1, 'Italy': 2},
    # idx2country(1) returns 'Germany' and getCountriesNum() returns 3.


trainset = NameDataset(is_train_set=True)  # training set
trainloader = DataLoader(trainset, batch_size=BATCH_SIZE, shuffle=True)
testset = NameDataset(is_train_set=False)  # test set
testloader = DataLoader(testset, batch_size=BATCH_SIZE, shuffle=False)
N_COUNTRY = trainset.getCountriesNum()  # number of countries in the training set

# ------------- Model design ----------------
class RNNClassifier(torch.nn.Module):
    '''
    GRU-based classifier that assigns a name to a country.

    Layers:
        Embedding: converts each character of the input sequence into a vector.
        GRU: processes the embedded sequence.
        Linear: maps the final GRU hidden state to the classifier output.

    Constructor parameters:
        input_size: size of the character vocabulary.
        hidden_size: size of the GRU hidden state.
        output_size: output size of the classifier (number of countries).
        n_layers: number of GRU layers.
        bidirectional: whether to use a bidirectional GRU.
    '''

    def __init__(self, input_size, hidden_size, output_size, n_layers=1, bidirectional=True):
        super(RNNClassifier, self).__init__()
        self.hidden_size = hidden_size
        self.n_layers = n_layers
        self.n_directions = 2 if bidirectional else 1
        # Embedding input: (seq_len, batch_size); output: (seq_len, batch_size, hidden_size)
        self.embedding = torch.nn.Embedding(input_size, hidden_size)
        # GRU output: (seq_len, batch_size, hidden_size * n_directions)
        self.gru = torch.nn.GRU(hidden_size, hidden_size, n_layers, bidirectional=bidirectional)
        # Linear layer
        self.fc = torch.nn.Linear(hidden_size * self.n_directions, output_size)

    # Initialize the hidden state of the GRU layer
    def _init_hidden(self, batch_size):
        # All-zero tensor of shape (n_layers * n_directions, batch_size, hidden_size)
        hidden = torch.zeros(self.n_layers * self.n_directions, batch_size, self.hidden_size)
        return create_tensor(hidden)

    def forward(self, input, seq_lengths):
        '''
        :param input: input data of shape (batch_size, seq_len).
        :param seq_lengths: lengths of the input sequences, one per sample in the batch.
        :return: fc_output
        '''
        # input shape: B x S -> S x B
        # The GRU layer expects input of shape (seq_len, batch_size, input_size)
        input = input.t()  # matrix transpose
        batch_size = input.size(1)  # input is now (seq_len, batch_size)
        # hidden has shape (n_layers * n_directions, batch_size, hidden_size)
        hidden = self._init_hidden(batch_size)
        embedding = self.embedding(input)
        # pack them up; recent PyTorch versions require the lengths to be on the CPU
        gru_input = pack_padded_sequence(embedding, seq_lengths.cpu())
        # Returns (output, hidden): output holds the per-step outputs of the last layer,
        # hidden holds the final hidden state of every layer and direction
        output, hidden = self.gru(gru_input, hidden)
        # hidden[-2] and hidden[-1] are the final forward and backward states of the
        # last layer; concatenate them along the feature dimension
        if self.n_directions == 2:
            hidden_cat = torch.cat([hidden[-1], hidden[-2]], dim=1)
        else:
            hidden_cat = hidden[-1]
        fc_output = self.fc(hidden_cat)
        return fc_output

# --------------- Converting names to tensors ------------------------
def make_tensor(names, countries):
    # List of (ASCII code list, name length) tuples, one per name
    sequences_and_lengths = [name2list(name) for name in names]
    # ASCII code list of each name
    name_sequences = [sl[0] for sl in sequences_and_lengths]
    # Tensor holding the length of each name's ASCII code list
    seq_lengths = torch.LongTensor([sl[1] for sl in sequences_and_lengths])
    countries = countries.long()

    # make tensor of name, BatchSize * SeqLen
    # All-zero long tensor of size (len(name_sequences), seq_lengths.max())
    seq_tensor = torch.zeros(len(name_sequences), seq_lengths.max()).long()
    # Copy each name into its row; positions past the name's length stay 0 (padding)
    for idx, (seq, seq_len) in enumerate(zip(name_sequences, seq_lengths), 0):
        seq_tensor[idx, :seq_len] = torch.LongTensor(seq)

    # sort by length to use pack_padded_sequence
    # Sort seq_lengths in descending order and record the original index of each position
    seq_lengths, perm_idx = seq_lengths.sort(dim=0, descending=True)
    seq_tensor = seq_tensor[perm_idx]
    countries = countries[perm_idx]

    return create_tensor(seq_tensor), create_tensor(seq_lengths), create_tensor(countries)


def name2list(name):
    arr = [ord(c) for c in name]
    return arr, len(arr)


# Move the given tensor to the GPU when USE_GPU is set
def create_tensor(tensor):
    if USE_GPU:
        device = torch.device('cuda:0')
        tensor = tensor.to(device)
    return tensor

# ---------------- Training and testing ---------------
def trainModel():
    total_loss = 0
    for i, (names, countries) in enumerate(trainloader, 1):
        # inputs -> seq_tensor, seq_lengths -> seq_lengths, target -> countries
        inputs, seq_lengths, target = make_tensor(names, countries)
        output = classifier(inputs, seq_lengths)
        loss = criterion(output, target)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # Running sum of the batch losses
        total_loss += loss.item()
        # Print a progress line every 10 batches (each batch holds BATCH_SIZE = 256 samples)
        if i % 10 == 0:
            print(f'[{time_since(start)}] Epoch {epoch} ', end='')
            print(f'[{i * len(inputs)}/{len(trainset)}]', end='')
            # Running sum of batch losses divided by the number of samples seen so far
            print(f'loss={total_loss / (i * len(inputs))}')
    return total_loss


def testModel():
    correct = 0
    total = len(testset)
    print('evaluating trained model....')
    # No gradients are needed when evaluating the model
    with torch.no_grad():
        for i, (names, countries) in enumerate(testloader, 1):
            # inputs -> seq_tensor, seq_lengths -> seq_lengths, target -> countries
            inputs, seq_lengths, target = make_tensor(names, countries)
            output = classifier(inputs, seq_lengths)
            # Index of the highest score per sample, i.e. the predicted class
            pred = output.max(dim=1, keepdim=True)[1]
            # view_as() reshapes target to the shape of pred, eq() compares the two
            # element by element (True where equal), sum() counts the matches and
            # item() converts the count to a Python number. The count for this batch
            # is added to the running total of correct predictions.
            correct += pred.eq(target.view_as(pred)).sum().item()

        percent = '%.2f' % (100 * correct / total)
        print(f'Test set :Accuracy {correct}/{total} {percent}%')
    return correct / total

# ------------ Main loop -----------------
def time_since(since):
    s = time.time() - since
    m = math.floor(s / 60)
    s -= m * 60
    return '%dm%ds' % (m, s)


if __name__ == '__main__':
    classifier = RNNClassifier(N_CHARS, HIDDEN_SIZE, N_COUNTRY, N_LAYER)
    if USE_GPU:
        device = torch.device("cuda:0")
        classifier.to(device)

    # Cross-entropy loss, with Adam updating the model parameters
    criterion = torch.nn.CrossEntropyLoss()
    optimizer = torch.optim.Adam(classifier.parameters(), lr=0.001)

    start = time.time()  # used to compute the elapsed time
    print('Training for %d epochs...' % N_EPOCHS)  # Training for 100 epochs...
    acc_list = []
    for epoch in range(1, N_EPOCHS + 1):
        # Train cycle
        trainModel()
        # Append the test accuracy of this epoch to the list
        acc = testModel()
        acc_list.append(acc)

    # -------------- Plotting -----------------
    epoch = np.arange(1, len(acc_list) + 1, 1)
    acc_list = np.array(acc_list)
    plt.plot(epoch, acc_list)
    plt.xlabel('Epoch')
    plt.ylabel('Accuracy')
    # Add grid lines to the plot
    plt.grid()
    plt.show()

Output

Training for 100 epochs...

[0m1s] Epoch 1 [2560/13374]loss=0.008795749628916383

[0m3s] Epoch 1 [5120/13374]loss=0.00749221951700747

[0m5s] Epoch 1 [7680/13374]loss=0.006772346297899882

[0m6s] Epoch 1 [10240/13374]loss=0.006305083283223212

[0m8s] Epoch 1 [12800/13374]loss=0.00595272284001112

evaluating trained model....

Test set :Accuracy 4490/6700 67.01%

[0m11s] Epoch 2 [2560/13374]loss=0.0043049277504906055

[0m13s] Epoch 2 [5120/13374]loss=0.004095416609197855

[0m15s] Epoch 2 [7680/13374]loss=0.0039768136572092775

[0m17s] Epoch 2 [10240/13374]loss=0.0038582786568440498

[0m19s] Epoch 2 [12800/13374]loss=0.0037702094856649636

evaluating trained model....

Test set :Accuracy 4988/6700 74.45%

[0m22s] Epoch 3 [2560/13374]loss=0.003022317495197058

[0m24s] Epoch 3 [5120/13374]loss=0.0031038673361763356

[0m25s] Epoch 3 [7680/13374]loss=0.003071871198092898

[0m27s] Epoch 3 [10240/13374]loss=0.0030331552785355597

[0m29s] Epoch 3 [12800/13374]loss=0.0030119305336847903

evaluating trained model....

Test set :Accuracy 5214/6700 77.82%

[0m32s] Epoch 4 [2560/13374]loss=0.0026609902502968906

[0m34s] Epoch 4 [5120/13374]loss=0.00260330936871469

[0m35s] Epoch 4 [7680/13374]loss=0.0025452626248200732

[0m37s] Epoch 4 [10240/13374]loss=0.002527727873530239

[0m38s] Epoch 4 [12800/13374]loss=0.0025296027818694712

evaluating trained model....

Test set :Accuracy 5377/6700 80.25%

[0m41s] Epoch 5 [2560/13374]loss=0.002322647930122912

[0m43s] Epoch 5 [5120/13374]loss=0.0022610334504861384

[0m45s] Epoch 5 [7680/13374]loss=0.002226858454135557

[0m46s] Epoch 5 [10240/13374]loss=0.0022156868362799287

[0m48s] Epoch 5 [12800/13374]loss=0.0022150837001390757

evaluating trained model....

Test set :Accuracy 5467/6700 81.60%

[0m51s] Epoch 6 [2560/13374]loss=0.0020402212860062717

[0m53s] Epoch 6 [5120/13374]loss=0.002028752595651895

[0m55s] Epoch 6 [7680/13374]loss=0.0020113130177681644

[0m56s] Epoch 6 [10240/13374]loss=0.001989361850428395

[0m58s] Epoch 6 [12800/13374]loss=0.0019963986263610424

evaluating trained model....

Test set :Accuracy 5504/6700 82.15%

[1m1s] Epoch 7 [2560/13374]loss=0.0017308587557636201

[1m3s] Epoch 7 [5120/13374]loss=0.001778336294228211

[1m5s] Epoch 7 [7680/13374]loss=0.0017760296701453625

[1m6s] Epoch 7 [10240/13374]loss=0.0017858314502518624

[1m8s] Epoch 7 [12800/13374]loss=0.001804346381686628

evaluating trained model....

Test set :Accuracy 5535/6700 82.61%

[1m11s] Epoch 8 [2560/13374]loss=0.0015619117766618729

[1m13s] Epoch 8 [5120/13374]loss=0.001596544065978378

[1m15s] Epoch 8 [7680/13374]loss=0.001608368125744164

[1m16s] Epoch 8 [10240/13374]loss=0.001619413893786259

[1m18s] Epoch 8 [12800/13374]loss=0.0016217814642004668

evaluating trained model....

Test set :Accuracy 5576/6700 83.22%

[1m21s] Epoch 9 [2560/13374]loss=0.0014580624061636626

[1m23s] Epoch 9 [5120/13374]loss=0.0014122298744041473

[1m25s] Epoch 9 [7680/13374]loss=0.0014337771610977749

[1m26s] Epoch 9 [10240/13374]loss=0.0014530424610711633

[1m28s] Epoch 9 [12800/13374]loss=0.001473297392949462

evaluating trained model....

Test set :Accuracy 5633/6700 84.07%

[1m31s] Epoch 10 [2560/13374]loss=0.0012395443627610803

[1m33s] Epoch 10 [5120/13374]loss=0.0012863237265264616

[1m34s] Epoch 10 [7680/13374]loss=0.0012860984910124291

[1m36s] Epoch 10 [10240/13374]loss=0.0013214423335739412

[1m38s] Epoch 10 [12800/13374]loss=0.001328199926065281

evaluating trained model....

Test set :Accuracy 5629/6700 84.01%

[1m41s] Epoch 11 [2560/13374]loss=0.0011477336811367422

[1m42s] Epoch 11 [5120/13374]loss=0.0012087051843991503

[1m44s] Epoch 11 [7680/13374]loss=0.0012027055742995193

[1m46s] Epoch 11 [10240/13374]loss=0.0012203586316900327

[1m47s] Epoch 11 [12800/13374]loss=0.0012193052982911467

evaluating trained model....

Test set :Accuracy 5616/6700 83.82%

[1m50s] Epoch 12 [2560/13374]loss=0.001016319904010743

[1m52s] Epoch 12 [5120/13374]loss=0.0010002771421568468

[1m54s] Epoch 12 [7680/13374]loss=0.0010312618183282514

[1m56s] Epoch 12 [10240/13374]loss=0.0010618593980325386

[1m57s] Epoch 12 [12800/13374]loss=0.0010661067173350603

evaluating trained model....

Test set :Accuracy 5639/6700 84.16%

[2m0s] Epoch 13 [2560/13374]loss=0.0009252744785044342

[2m2s] Epoch 13 [5120/13374]loss=0.0009171868819976226

[2m4s] Epoch 13 [7680/13374]loss=0.0009458162006922066

[2m5s] Epoch 13 [10240/13374]loss=0.0009481525426963344

[2m7s] Epoch 13 [12800/13374]loss=0.00096964645315893

evaluating trained model....

Test set :Accuracy 5636/6700 84.12%

[2m10s] Epoch 14 [2560/13374]loss=0.0007987115532159805

[2m12s] Epoch 14 [5120/13374]loss=0.0008508363331202418

[2m13s] Epoch 14 [7680/13374]loss=0.0008321050544812654

[2m15s] Epoch 14 [10240/13374]loss=0.000853200343408389

[2m17s] Epoch 14 [12800/13374]loss=0.0008577604981837794

evaluating trained model....

Test set :Accuracy 5649/6700 84.31%

[2m20s] Epoch 15 [2560/13374]loss=0.0006829311227193102

[2m21s] Epoch 15 [5120/13374]loss=0.0007436094296281226

[2m23s] Epoch 15 [7680/13374]loss=0.0007410863113667195

[2m25s] Epoch 15 [10240/13374]loss=0.0007492069220461417

[2m26s] Epoch 15 [12800/13374]loss=0.0007657129043946042

evaluating trained model....

Test set :Accuracy 5649/6700 84.31%

[2m29s] Epoch 16 [2560/13374]loss=0.0006110864778747782

[2m31s] Epoch 16 [5120/13374]loss=0.0006120361751527526

[2m33s] Epoch 16 [7680/13374]loss=0.0005922522376446674

[2m34s] Epoch 16 [10240/13374]loss=0.0006282105547143147

[2m36s] Epoch 16 [12800/13374]loss=0.0006521558249369264

evaluating trained model....

Test set :Accuracy 5626/6700 83.97%

[2m39s] Epoch 17 [2560/13374]loss=0.0006218408641871064

[2m41s] Epoch 17 [5120/13374]loss=0.0006253938772715628

[2m42s] Epoch 17 [7680/13374]loss=0.0006014973817703625

[2m44s] Epoch 17 [10240/13374]loss=0.0006094533549912739

[2m46s] Epoch 17 [12800/13374]loss=0.0005997634312370792

evaluating trained model....

Test set :Accuracy 5640/6700 84.18%

[2m49s] Epoch 18 [2560/13374]loss=0.000493335971259512

[2m51s] Epoch 18 [5120/13374]loss=0.0004845981427934021

[2m52s] Epoch 18 [7680/13374]loss=0.0005136435337287063

[2m54s] Epoch 18 [10240/13374]loss=0.0005225141023402103

[2m56s] Epoch 18 [12800/13374]loss=0.00053467103803996

evaluating trained model....

Test set :Accuracy 5648/6700 84.30%

[2m59s] Epoch 19 [2560/13374]loss=0.0004341423540608957

[3m0s] Epoch 19 [5120/13374]loss=0.0004463595512788743

[3m2s] Epoch 19 [7680/13374]loss=0.00044333215531272194

[3m4s] Epoch 19 [10240/13374]loss=0.00044942577442270705

[3m6s] Epoch 19 [12800/13374]loss=0.00045698363741394134

evaluating trained model....

Test set :Accuracy 5625/6700 83.96%

[3m9s] Epoch 20 [2560/13374]loss=0.0003655418404377997

[3m10s] Epoch 20 [5120/13374]loss=0.0003918105736374855

[3m12s] Epoch 20 [7680/13374]loss=0.0003942087971760581

[3m14s] Epoch 20 [10240/13374]loss=0.0004120477577089332

[3m15s] Epoch 20 [12800/13374]loss=0.00042110039852559565

evaluating trained model....

Test set :Accuracy 5617/6700 83.84%

[3m18s] Epoch 21 [2560/13374]loss=0.000387221178971231

[3m20s] Epoch 21 [5120/13374]loss=0.0003844902748824097

[3m22s] Epoch 21 [7680/13374]loss=0.0003781126724788919

[3m24s] Epoch 21 [10240/13374]loss=0.0003775410121306777

[3m25s] Epoch 21 [12800/13374]loss=0.00039219862315803766

evaluating trained model....

Test set :Accuracy 5625/6700 83.96%

[3m28s] Epoch 22 [2560/13374]loss=0.0002849995013093576

[3m30s] Epoch 22 [5120/13374]loss=0.0003119376400718465

[3m32s] Epoch 22 [7680/13374]loss=0.0003242912042575578

[3m34s] Epoch 22 [10240/13374]loss=0.0003423934140300844

[3m36s] Epoch 22 [12800/13374]loss=0.0003585551879950799

evaluating trained model....

Test set :Accuracy 5638/6700 84.15%

[3m39s] Epoch 23 [2560/13374]loss=0.00028107349207857625

[3m41s] Epoch 23 [5120/13374]loss=0.00029808350809616967

[3m42s] Epoch 23 [7680/13374]loss=0.0002935399480823738

[3m44s] Epoch 23 [10240/13374]loss=0.0003021313565113815

[3m46s] Epoch 23 [12800/13374]loss=0.0003206358276656829

evaluating trained model....

Test set :Accuracy 5643/6700 84.22%

[3m49s] Epoch 24 [2560/13374]loss=0.00027137492288602515

[3m51s] Epoch 24 [5120/13374]loss=0.0002959816287329886

[3m53s] Epoch 24 [7680/13374]loss=0.00029396258275179814

[3m55s] Epoch 24 [10240/13374]loss=0.0002972378639242379

[3m56s] Epoch 24 [12800/13374]loss=0.00031700621038908137

evaluating trained model....

Test set :Accuracy 5619/6700 83.87%

[3m59s] Epoch 25 [2560/13374]loss=0.00027997181168757377

[4m1s] Epoch 25 [5120/13374]loss=0.0002923572086729109

[4m3s] Epoch 25 [7680/13374]loss=0.0002928619486435006

[4m5s] Epoch 25 [10240/13374]loss=0.00029255779954837633

[4m7s] Epoch 25 [12800/13374]loss=0.0002898621273925528

evaluating trained model....

Test set :Accuracy 5642/6700 84.21%

[4m10s] Epoch 26 [2560/13374]loss=0.00026015249313786625

[4m12s] Epoch 26 [5120/13374]loss=0.0002594431287434418

[4m13s] Epoch 26 [7680/13374]loss=0.00026898673046768333

[4m15s] Epoch 26 [10240/13374]loss=0.00026851299626287075

[4m17s] Epoch 26 [12800/13374]loss=0.00027890532481251285

evaluating trained model....

Test set :Accuracy 5640/6700 84.18%

[4m20s] Epoch 27 [2560/13374]loss=0.00021040047140559183

[4m22s] Epoch 27 [5120/13374]loss=0.00021781289906357414

[4m24s] Epoch 27 [7680/13374]loss=0.00024041350407060235

[4m25s] Epoch 27 [10240/13374]loss=0.00025324106245534496

[4m27s] Epoch 27 [12800/13374]loss=0.0002668957394780591

evaluating trained model....

Test set :Accuracy 5599/6700 83.57%

[4m30s] Epoch 28 [2560/13374]loss=0.00023289845557883382

[4m32s] Epoch 28 [5120/13374]loss=0.00022903442149981856

[4m34s] Epoch 28 [7680/13374]loss=0.0002411791948058332

[4m36s] Epoch 28 [10240/13374]loss=0.00024657296708028296

[4m37s] Epoch 28 [12800/13374]loss=0.0002601210316061042

evaluating trained model....

Test set :Accuracy 5623/6700 83.93%

[4m41s] Epoch 29 [2560/13374]loss=0.00019408977968851104

[4m43s] Epoch 29 [5120/13374]loss=0.00021489283026312477

[4m44s] Epoch 29 [7680/13374]loss=0.0002242573376861401

[4m46s] Epoch 29 [10240/13374]loss=0.00024065984107437543

[4m48s] Epoch 29 [12800/13374]loss=0.0002547513897297904

evaluating trained model....

Test set :Accuracy 5606/6700 83.67%

[4m51s] Epoch 30 [2560/13374]loss=0.0001975414081243798

[4m53s] Epoch 30 [5120/13374]loss=0.00020308266721258406

[4m54s] Epoch 30 [7680/13374]loss=0.00021712742285065663

[4m56s] Epoch 30 [10240/13374]loss=0.00022762639873690204

[4m58s] Epoch 30 [12800/13374]loss=0.00024602484234492294

evaluating trained model....

Test set :Accuracy 5615/6700 83.81%

[5m1s] Epoch 31 [2560/13374]loss=0.00021107152715558187

[5m3s] Epoch 31 [5120/13374]loss=0.00023626213951501995

[5m5s] Epoch 31 [7680/13374]loss=0.0002421482514667635

[5m6s] Epoch 31 [10240/13374]loss=0.00024352707296202425

[5m8s] Epoch 31 [12800/13374]loss=0.0002536543316091411

evaluating trained model....

Test set :Accuracy 5609/6700 83.72%

[5m12s] Epoch 32 [2560/13374]loss=0.00022415582061512395

[5m13s] Epoch 32 [5120/13374]loss=0.00023612705190316773

[5m15s] Epoch 32 [7680/13374]loss=0.00023674616628947356

[5m17s] Epoch 32 [10240/13374]loss=0.00024407133423665073

[5m19s] Epoch 32 [12800/13374]loss=0.00025094811921007933

evaluating trained model....

Test set :Accuracy 5621/6700 83.90%

[5m22s] Epoch 33 [2560/13374]loss=0.00021448586921906098

[5m24s] Epoch 33 [5120/13374]loss=0.00021445927195600233

[5m25s] Epoch 33 [7680/13374]loss=0.00021367742253157

[5m27s] Epoch 33 [10240/13374]loss=0.00020967845048289747

[5m29s] Epoch 33 [12800/13374]loss=0.00022781318344641477

evaluating trained model....

Test set :Accuracy 5619/6700 83.87%

[5m32s] Epoch 34 [2560/13374]loss=0.00016398878797190263

[5m34s] Epoch 34 [5120/13374]loss=0.00017998322873609142

[5m36s] Epoch 34 [7680/13374]loss=0.00020074226486030967

[5m38s] Epoch 34 [10240/13374]loss=0.00020557176030706615

[5m39s] Epoch 34 [12800/13374]loss=0.00021911551215453073

evaluating trained model....

Test set :Accuracy 5625/6700 83.96%

[5m42s] Epoch 35 [2560/13374]loss=0.0001811121212085709

[5m44s] Epoch 35 [5120/13374]loss=0.0002039257771684788

[5m46s] Epoch 35 [7680/13374]loss=0.00021211462590144947

[5m48s] Epoch 35 [10240/13374]loss=0.00022183595101523678

[5m49s] Epoch 35 [12800/13374]loss=0.00022463828092440962

evaluating trained model....

Test set :Accuracy 5606/6700 83.67%

[5m53s] Epoch 36 [2560/13374]loss=0.0001615686938748695

[5m54s] Epoch 36 [5120/13374]loss=0.00020534135692287236

[5m56s] Epoch 36 [7680/13374]loss=0.0002117527993201899

[5m58s] Epoch 36 [10240/13374]loss=0.00020913015123369405

[6m0s] Epoch 36 [12800/13374]loss=0.00021531204576604067

evaluating trained model....

Test set :Accuracy 5638/6700 84.15%

[6m3s] Epoch 37 [2560/13374]loss=0.00018287628117832355

[6m5s] Epoch 37 [5120/13374]loss=0.0001738826456858078

[6m6s] Epoch 37 [7680/13374]loss=0.00018077774002449586

[6m8s] Epoch 37 [10240/13374]loss=0.00018951177153212485

[6m10s] Epoch 37 [12800/13374]loss=0.00020559900644002483

evaluating trained model....

Test set :Accuracy 5609/6700 83.72%

[6m13s] Epoch 38 [2560/13374]loss=0.0001690790981228929

[6m15s] Epoch 38 [5120/13374]loss=0.0001841513643739745

[6m17s] Epoch 38 [7680/13374]loss=0.00018440504500176756

[6m18s] Epoch 38 [10240/13374]loss=0.00018956128042191267

[6m20s] Epoch 38 [12800/13374]loss=0.00020320528419688345

evaluating trained model....

Test set :Accuracy 5618/6700 83.85%

[6m23s] Epoch 39 [2560/13374]loss=0.00016461342747788876

[6m25s] Epoch 39 [5120/13374]loss=0.00015234841557685286

[6m27s] Epoch 39 [7680/13374]loss=0.00016345222053738931

[6m29s] Epoch 39 [10240/13374]loss=0.0001764529575666529

[6m30s] Epoch 39 [12800/13374]loss=0.00019520843183272517

evaluating trained model....

Test set :Accuracy 5624/6700 83.94%

[6m34s] Epoch 40 [2560/13374]loss=0.0001546779356431216

[6m35s] Epoch 40 [5120/13374]loss=0.00015428868391609285

[6m37s] Epoch 40 [7680/13374]loss=0.0001795129605549543

[6m39s] Epoch 40 [10240/13374]loss=0.00018854692843888187

[6m40s] Epoch 40 [12800/13374]loss=0.0001973887266649399

evaluating trained model....

Test set :Accuracy 5622/6700 83.91%

[6m44s] Epoch 41 [2560/13374]loss=0.0001705992777715437

[6m45s] Epoch 41 [5120/13374]loss=0.00017570517738931812

[6m47s] Epoch 41 [7680/13374]loss=0.00018302313813668055

[6m49s] Epoch 41 [10240/13374]loss=0.0001966240446563461

[6m50s] Epoch 41 [12800/13374]loss=0.00019570997494156473

evaluating trained model....

Test set :Accuracy 5631/6700 84.04%

[6m54s] Epoch 42 [2560/13374]loss=0.00015811599078006112

[6m55s] Epoch 42 [5120/13374]loss=0.0001745999568811385

[6m57s] Epoch 42 [7680/13374]loss=0.00018485856247328533

[6m59s] Epoch 42 [10240/13374]loss=0.0001907406725877081

[7m0s] Epoch 42 [12800/13374]loss=0.00019575018071918749

evaluating trained model....

Test set :Accuracy 5605/6700 83.66%

[7m4s] Epoch 43 [2560/13374]loss=0.0001510863374278415

[7m5s] Epoch 43 [5120/13374]loss=0.0001656825730606215

[7m7s] Epoch 43 [7680/13374]loss=0.00017353364213098152

[7m9s] Epoch 43 [10240/13374]loss=0.0001848556416007341

[7m10s] Epoch 43 [12800/13374]loss=0.00019441463402472436

evaluating trained model....

Test set :Accuracy 5637/6700 84.13%

[7m14s] Epoch 44 [2560/13374]loss=0.00013900207486585713

[7m15s] Epoch 44 [5120/13374]loss=0.00015657104449928738

[7m17s] Epoch 44 [7680/13374]loss=0.00016166183340828865

[7m19s] Epoch 44 [10240/13374]loss=0.00017577846556378064

[7m20s] Epoch 44 [12800/13374]loss=0.000186349373543635

evaluating trained model....

Test set :Accuracy 5611/6700 83.75%

[7m24s] Epoch 45 [2560/13374]loss=0.00015886333567323164

[7m25s] Epoch 45 [5120/13374]loss=0.0001542387322842842

[7m27s] Epoch 45 [7680/13374]loss=0.00015916114425635898

[7m29s] Epoch 45 [10240/13374]loss=0.00016787303102319128

[7m30s] Epoch 45 [12800/13374]loss=0.0001832488866057247

evaluating trained model....

Test set :Accuracy 5618/6700 83.85%

[7m34s] Epoch 46 [2560/13374]loss=0.00016251378037850371

[7m35s] Epoch 46 [5120/13374]loss=0.00015612078168487643

[7m37s] Epoch 46 [7680/13374]loss=0.0001644015251561844

[7m39s] Epoch 46 [10240/13374]loss=0.00017556095153850037

[7m40s] Epoch 46 [12800/13374]loss=0.00018076322201522998

evaluating trained model....

Test set :Accuracy 5631/6700 84.04%

[7m44s] Epoch 47 [2560/13374]loss=0.00018637215544003993

[7m45s] Epoch 47 [5120/13374]loss=0.00016775977783254348

[7m47s] Epoch 47 [7680/13374]loss=0.00017071904900755423

[7m49s] Epoch 47 [10240/13374]loss=0.0001759853346811724

[7m50s] Epoch 47 [12800/13374]loss=0.00018099654160323554

evaluating trained model....

Test set :Accuracy 5627/6700 83.99%

[7m54s] Epoch 48 [2560/13374]loss=0.00014327409444376827

[7m55s] Epoch 48 [5120/13374]loss=0.00015061996891745365

[7m57s] Epoch 48 [7680/13374]loss=0.00016759194259066134

[7m59s] Epoch 48 [10240/13374]loss=0.00017730008403304964

[8m1s] Epoch 48 [12800/13374]loss=0.00018372942984569817

evaluating trained model....

Test set :Accuracy 5617/6700 83.84%

[8m4s] Epoch 49 [2560/13374]loss=0.00015277279671863653

[8m5s] Epoch 49 [5120/13374]loss=0.00015521488203376065

[8m7s] Epoch 49 [7680/13374]loss=0.00016506803804077207

[8m9s] Epoch 49 [10240/13374]loss=0.00017389924296367097

[8m11s] Epoch 49 [12800/13374]loss=0.00017558973129780498

evaluating trained model....

Test set :Accuracy 5624/6700 83.94%

[8m14s] Epoch 50 [2560/13374]loss=0.00011323324361001141

[8m16s] Epoch 50 [5120/13374]loss=0.00014765587002329994

[8m17s] Epoch 50 [7680/13374]loss=0.00015888109992374667

[8m19s] Epoch 50 [10240/13374]loss=0.00017072273185476662

[8m21s] Epoch 50 [12800/13374]loss=0.00018013035092735664

evaluating trained model....

Test set :Accuracy 5628/6700 84.00%

[8m24s] Epoch 51 [2560/13374]loss=0.00016151035088114442

[8m26s] Epoch 51 [5120/13374]loss=0.0001611633113498101

[8m27s] Epoch 51 [7680/13374]loss=0.00016899344118428416

[8m29s] Epoch 51 [10240/13374]loss=0.0001740327581501333

[8m31s] Epoch 51 [12800/13374]loss=0.00018140272266464307

evaluating trained model....

Test set :Accuracy 5641/6700 84.19%

[8m34s] Epoch 52 [2560/13374]loss=0.00014056754880584777

[8m35s] Epoch 52 [5120/13374]loss=0.0001548161022583372

[8m37s] Epoch 52 [7680/13374]loss=0.00016478231373184826

[8m39s] Epoch 52 [10240/13374]loss=0.00017180772838401026

[8m41s] Epoch 52 [12800/13374]loss=0.0001835115858557401

evaluating trained model....

Test set :Accuracy 5639/6700 84.16%

[8m44s] Epoch 53 [2560/13374]loss=0.00015064685430843383

[8m45s] Epoch 53 [5120/13374]loss=0.00016132084820128512

[8m47s] Epoch 53 [7680/13374]loss=0.00017351559436065144

[8m49s] Epoch 53 [10240/13374]loss=0.00017333728137600702

[8m51s] Epoch 53 [12800/13374]loss=0.000183434422215214

evaluating trained model....

Test set :Accuracy 5624/6700 83.94%

[8m54s] Epoch 54 [2560/13374]loss=0.0001317853144428227

[8m55s] Epoch 54 [5120/13374]loss=0.00012837100694014226

[8m57s] Epoch 54 [7680/13374]loss=0.00014664399811105492

[8m59s] Epoch 54 [10240/13374]loss=0.00015743264502816602

[9m1s] Epoch 54 [12800/13374]loss=0.0001717309195373673

evaluating trained model....

Test set :Accuracy 5621/6700 83.90%

[9m4s] Epoch 55 [2560/13374]loss=0.00012865873941336758

[9m5s] Epoch 55 [5120/13374]loss=0.00013132112835592124

[9m7s] Epoch 55 [7680/13374]loss=0.000156606427966229

[9m9s] Epoch 55 [10240/13374]loss=0.0001598045779246604

[9m11s] Epoch 55 [12800/13374]loss=0.00016757403835072183

evaluating trained model....

Test set :Accuracy 5624/6700 83.94%

[9m14s] Epoch 56 [2560/13374]loss=0.0001521973499620799

[9m16s] Epoch 56 [5120/13374]loss=0.00014971187738410663

[9m17s] Epoch 56 [7680/13374]loss=0.00015539889063802547

[9m19s] Epoch 56 [10240/13374]loss=0.00016088718530227197

[9m21s] Epoch 56 [12800/13374]loss=0.0001679533538117539

evaluating trained model....

Test set :Accuracy 5634/6700 84.09%

[9m24s] Epoch 57 [2560/13374]loss=0.0001408174866810441

[9m26s] Epoch 57 [5120/13374]loss=0.00014105156187724788

[9m27s] Epoch 57 [7680/13374]loss=0.00015969821130662847

[9m29s] Epoch 57 [10240/13374]loss=0.0001665821595452144

[9m31s] Epoch 57 [12800/13374]loss=0.0001765154227905441

evaluating trained model....

Test set :Accuracy 5619/6700 83.87%

[9m34s] Epoch 58 [2560/13374]loss=0.0001159722545708064

[9m36s] Epoch 58 [5120/13374]loss=0.00013082438381388782

[9m37s] Epoch 58 [7680/13374]loss=0.00015389772233902476

[9m39s] Epoch 58 [10240/13374]loss=0.00015763100327603752

[9m41s] Epoch 58 [12800/13374]loss=0.00016609600352239794

evaluating trained model....

Test set :Accuracy 5620/6700 83.88%

[9m44s] Epoch 59 [2560/13374]loss=0.00012572470150189473

[9m46s] Epoch 59 [5120/13374]loss=0.0001351951663309592

[9m47s] Epoch 59 [7680/13374]loss=0.00015341093518751828

[9m49s] Epoch 59 [10240/13374]loss=0.0001611297774616105

[9m51s] Epoch 59 [12800/13374]loss=0.000172754032901139

evaluating trained model....

Test set :Accuracy 5619/6700 83.87%

[9m54s] Epoch 60 [2560/13374]loss=0.0001572394699905999

[9m56s] Epoch 60 [5120/13374]loss=0.00016103157722682226

[9m58s] Epoch 60 [7680/13374]loss=0.00014411584876749354

[9m59s] Epoch 60 [10240/13374]loss=0.0001569970887430827

[10m1s] Epoch 60 [12800/13374]loss=0.00016543303427170032

evaluating trained model....

Test set :Accuracy 5624/6700 83.94%

[10m4s] Epoch 61 [2560/13374]loss=0.0001519184996141121

[10m6s] Epoch 61 [5120/13374]loss=0.00014532622444676235

[10m7s] Epoch 61 [7680/13374]loss=0.00015301352662694018

[10m9s] Epoch 61 [10240/13374]loss=0.0001627094849027344

[10m11s] Epoch 61 [12800/13374]loss=0.0001694941941241268

evaluating trained model....

Test set :Accuracy 5609/6700 83.72%

[10m14s] Epoch 62 [2560/13374]loss=0.00012481046942411922

[10m16s] Epoch 62 [5120/13374]loss=0.0001365885869745398

[10m18s] Epoch 62 [7680/13374]loss=0.0001334240873499463

[10m19s] Epoch 62 [10240/13374]loss=0.0001510697045887355

[10m21s] Epoch 62 [12800/13374]loss=0.00016342910603270867

evaluating trained model....

Test set :Accuracy 5636/6700 84.12%

[10m24s] Epoch 63 [2560/13374]loss=0.0001497945180744864

[10m26s] Epoch 63 [5120/13374]loss=0.00015301292223739437

[10m28s] Epoch 63 [7680/13374]loss=0.00014763502549612894

[10m29s] Epoch 63 [10240/13374]loss=0.00015617315930285258

[10m31s] Epoch 63 [12800/13374]loss=0.00016804048529593275

evaluating trained model....

Test set :Accuracy 5625/6700 83.96%

[10m34s] Epoch 64 [2560/13374]loss=0.00012523390323622153

[10m36s] Epoch 64 [5120/13374]loss=0.00013184264535084366

[10m38s] Epoch 64 [7680/13374]loss=0.00013768771411074945

[10m39s] Epoch 64 [10240/13374]loss=0.00014848649261693935

[10m41s] Epoch 64 [12800/13374]loss=0.0001612380365259014

evaluating trained model....

Test set :Accuracy 5622/6700 83.91%

[10m44s] Epoch 65 [2560/13374]loss=0.00013996066045365296

[10m46s] Epoch 65 [5120/13374]loss=0.00015102715624379926

[10m48s] Epoch 65 [7680/13374]loss=0.00014981613409569642

[10m49s] Epoch 65 [10240/13374]loss=0.00015806634810360264

[10m51s] Epoch 65 [12800/13374]loss=0.00016178309575479944

evaluating trained model....

Test set :Accuracy 5611/6700 83.75%

[10m54s] Epoch 66 [2560/13374]loss=0.00015132858243305236

[10m56s] Epoch 66 [5120/13374]loss=0.00015365178187494167

[10m58s] Epoch 66 [7680/13374]loss=0.00015351198914383227

[10m59s] Epoch 66 [10240/13374]loss=0.0001633736457733903

[11m1s] Epoch 66 [12800/13374]loss=0.0001653348852414638

evaluating trained model....

Test set :Accuracy 5633/6700 84.07%

[11m5s] Epoch 67 [2560/13374]loss=0.00016389744560001417

[11m6s] Epoch 67 [5120/13374]loss=0.00013684426030522446

[11m8s] Epoch 67 [7680/13374]loss=0.0001536815496365307

[11m10s] Epoch 67 [10240/13374]loss=0.00016131461970871896

[11m11s] Epoch 67 [12800/13374]loss=0.00016271487118501681

evaluating trained model....

Test set :Accuracy 5621/6700 83.90%

[11m15s] Epoch 68 [2560/13374]loss=0.00013072111942165067

[11m16s] Epoch 68 [5120/13374]loss=0.00012556828223750927

[11m18s] Epoch 68 [7680/13374]loss=0.00014398710845853202

[11m20s] Epoch 68 [10240/13374]loss=0.0001523779255876434

[11m21s] Epoch 68 [12800/13374]loss=0.00016215457377256827

evaluating trained model....

Test set :Accuracy 5623/6700 83.93%

[11m25s] Epoch 69 [2560/13374]loss=0.00010159311495954171

[11m26s] Epoch 69 [5120/13374]loss=0.0001278994601307204

[11m28s] Epoch 69 [7680/13374]loss=0.00013482464285819636

[11m30s] Epoch 69 [10240/13374]loss=0.00014870266868456384

[11m31s] Epoch 69 [12800/13374]loss=0.00015448125552211422

evaluating trained model....

Test set :Accuracy 5609/6700 83.72%

[11m35s] Epoch 70 [2560/13374]loss=0.0001233295086422004

[11m36s] Epoch 70 [5120/13374]loss=0.0001226161861268338

[11m38s] Epoch 70 [7680/13374]loss=0.00014428681740052222

[11m40s] Epoch 70 [10240/13374]loss=0.000151535566237726

[11m41s] Epoch 70 [12800/13374]loss=0.0001626667297023232

evaluating trained model....

Test set :Accuracy 5629/6700 84.01%

[11m45s] Epoch 71 [2560/13374]loss=0.00013054470837232658

[11m46s] Epoch 71 [5120/13374]loss=0.00014469808957073838

[11m48s] Epoch 71 [7680/13374]loss=0.00014023057932111745

[11m50s] Epoch 71 [10240/13374]loss=0.00015273510180122685

[11m51s] Epoch 71 [12800/13374]loss=0.00015601934530423022

evaluating trained model....

Test set :Accuracy 5627/6700 83.99%

[11m55s] Epoch 72 [2560/13374]loss=0.0001451513911888469

[11m56s] Epoch 72 [5120/13374]loss=0.0001421030505298404

[11m58s] Epoch 72 [7680/13374]loss=0.00014573983353329822

[12m0s] Epoch 72 [10240/13374]loss=0.00015338097709900468

[12m1s] Epoch 72 [12800/13374]loss=0.00016022806012188083

evaluating trained model....

Test set :Accuracy 5635/6700 84.10%

[12m4s] Epoch 73 [2560/13374]loss=9.918293944792822e-05

[12m6s] Epoch 73 [5120/13374]loss=0.0001242855749296723

[12m8s] Epoch 73 [7680/13374]loss=0.0001367441436741501

[12m10s] Epoch 73 [10240/13374]loss=0.0001470206563681131

[12m11s] Epoch 73 [12800/13374]loss=0.0001562797449878417

evaluating trained model....

Test set :Accuracy 5627/6700 83.99%

[12m15s] Epoch 74 [2560/13374]loss=0.00014721532606927213

[12m16s] Epoch 74 [5120/13374]loss=0.0001444981493477826

[12m18s] Epoch 74 [7680/13374]loss=0.00014881258308984495

[12m20s] Epoch 74 [10240/13374]loss=0.00015772726610521203

[12m21s] Epoch 74 [12800/13374]loss=0.0001592241138132522

evaluating trained model....

Test set :Accuracy 5634/6700 84.09%

[12m24s] Epoch 75 [2560/13374]loss=0.00012615556333912538

[12m26s] Epoch 75 [5120/13374]loss=0.00013949296262580902

[12m28s] Epoch 75 [7680/13374]loss=0.00014796604179233934

[12m30s] Epoch 75 [10240/13374]loss=0.00015019661932456075

[12m31s] Epoch 75 [12800/13374]loss=0.0001550688548013568

evaluating trained model....

Test set :Accuracy 5619/6700 83.87%

[12m34s] Epoch 76 [2560/13374]loss=0.00012396966194501148

[12m36s] Epoch 76 [5120/13374]loss=0.00012158954523329157

[12m38s] Epoch 76 [7680/13374]loss=0.00014312214213229406

[12m40s] Epoch 76 [10240/13374]loss=0.00015489414017793023

[12m41s] Epoch 76 [12800/13374]loss=0.00015657570045732427

evaluating trained model....

Test set :Accuracy 5613/6700 83.78%

[12m45s] Epoch 77 [2560/13374]loss=0.00013770114601356908

[12m46s] Epoch 77 [5120/13374]loss=0.00013504474627552555

[12m48s] Epoch 77 [7680/13374]loss=0.00014157133676538555

[12m50s] Epoch 77 [10240/13374]loss=0.00014691516571474494

[12m51s] Epoch 77 [12800/13374]loss=0.0001539474562741816

evaluating trained model....

Test set :Accuracy 5630/6700 84.03%

[12m54s] Epoch 78 [2560/13374]loss=0.0001347062941931654

[12m56s] Epoch 78 [5120/13374]loss=0.00014553138389601373

[12m58s] Epoch 78 [7680/13374]loss=0.00015422393529055018

[13m0s] Epoch 78 [10240/13374]loss=0.00016098981395771262

[13m1s] Epoch 78 [12800/13374]loss=0.00015929476780002005

evaluating trained model....

Test set :Accuracy 5632/6700 84.06%

[13m5s] Epoch 79 [2560/13374]loss=0.00014270798856159673

[13m6s] Epoch 79 [5120/13374]loss=0.00014247241269913503

[13m8s] Epoch 79 [7680/13374]loss=0.0001474730942087869

[13m10s] Epoch 79 [10240/13374]loss=0.00014405866750166751

[13m11s] Epoch 79 [12800/13374]loss=0.00015535103186266496

evaluating trained model....

Test set :Accuracy 5598/6700 83.55%

[13m15s] Epoch 80 [2560/13374]loss=0.00013216626466601157

[13m16s] Epoch 80 [5120/13374]loss=0.00012860856149927714

[13m18s] Epoch 80 [7680/13374]loss=0.00013908431768262138

[13m20s] Epoch 80 [10240/13374]loss=0.00014890859001752687

[13m21s] Epoch 80 [12800/13374]loss=0.0001536237155960407

evaluating trained model....

Test set :Accuracy 5626/6700 83.97%

[13m24s] Epoch 81 [2560/13374]loss=0.00011747977550840006

[13m26s] Epoch 81 [5120/13374]loss=0.00013745418254984543

[13m28s] Epoch 81 [7680/13374]loss=0.00014869928345433435

[13m30s] Epoch 81 [10240/13374]loss=0.00014850849202048267

[13m31s] Epoch 81 [12800/13374]loss=0.0001555897548678331

evaluating trained model....

Test set :Accuracy 5614/6700 83.79%

[13m34s] Epoch 82 [2560/13374]loss=0.00011852576681121718

[13m36s] Epoch 82 [5120/13374]loss=0.00012785865274054232

[13m38s] Epoch 82 [7680/13374]loss=0.00013469148846828222

[13m39s] Epoch 82 [10240/13374]loss=0.0001399844194565958

[13m41s] Epoch 82 [12800/13374]loss=0.00015072476504428777

evaluating trained model....

Test set :Accuracy 5618/6700 83.85%

[13m44s] Epoch 83 [2560/13374]loss=0.00012827901009586639

[13m46s] Epoch 83 [5120/13374]loss=0.0001248548773219227

[13m48s] Epoch 83 [7680/13374]loss=0.0001405031957498674

[13m49s] Epoch 83 [10240/13374]loss=0.00014757312410438317

[13m51s] Epoch 83 [12800/13374]loss=0.00015074000802997034

evaluating trained model....

Test set :Accuracy 5617/6700 83.84%

[13m54s] Epoch 84 [2560/13374]loss=0.0001197554731334094

[13m56s] Epoch 84 [5120/13374]loss=0.00013129895451129413

[13m58s] Epoch 84 [7680/13374]loss=0.0001355650213857492

[13m59s] Epoch 84 [10240/13374]loss=0.00014124497338343644

[14m1s] Epoch 84 [12800/13374]loss=0.00015468007113668137

evaluating trained model....

Test set :Accuracy 5615/6700 83.81%

[14m4s] Epoch 85 [2560/13374]loss=0.00011679257004288956

[14m6s] Epoch 85 [5120/13374]loss=0.00013543280729209073

[14m8s] Epoch 85 [7680/13374]loss=0.00014373817830346525

[14m10s] Epoch 85 [10240/13374]loss=0.00014686988724861295

[14m12s] Epoch 85 [12800/13374]loss=0.0001501399651169777

evaluating trained model....

Test set :Accuracy 5627/6700 83.99%

[14m15s] Epoch 86 [2560/13374]loss=0.00012882882801932283

[14m16s] Epoch 86 [5120/13374]loss=0.00014252278415369802

[14m18s] Epoch 86 [7680/13374]loss=0.00014668191882568257

[14m20s] Epoch 86 [10240/13374]loss=0.00014683763847642695

[14m22s] Epoch 86 [12800/13374]loss=0.00014997392434452195

evaluating trained model....

Test set :Accuracy 5621/6700 83.90%

[14m25s] Epoch 87 [2560/13374]loss=0.0001250624336535111

[14m26s] Epoch 87 [5120/13374]loss=0.00012079182706656866

[14m28s] Epoch 87 [7680/13374]loss=0.0001293805112557796

[14m30s] Epoch 87 [10240/13374]loss=0.0001396809422658407

[14m32s] Epoch 87 [12800/13374]loss=0.0001491250333492644

evaluating trained model....

Test set :Accuracy 5615/6700 83.81%

[14m35s] Epoch 88 [2560/13374]loss=0.00012685446272371338

[14m36s] Epoch 88 [5120/13374]loss=0.00013128856699040625

[14m38s] Epoch 88 [7680/13374]loss=0.00014242062704094375

[14m40s] Epoch 88 [10240/13374]loss=0.0001496332080932916

[14m41s] Epoch 88 [12800/13374]loss=0.00015086132945725693

evaluating trained model....

Test set :Accuracy 5620/6700 83.88%

[14m45s] Epoch 89 [2560/13374]loss=0.0001088894310669275

[14m46s] Epoch 89 [5120/13374]loss=0.00011795573245763081

[14m48s] Epoch 89 [7680/13374]loss=0.00011886657169573785

[14m50s] Epoch 89 [10240/13374]loss=0.0001364844952149724

[14m51s] Epoch 89 [12800/13374]loss=0.00014904032454069238

evaluating trained model....

Test set :Accuracy 5616/6700 83.82%

[14m54s] Epoch 90 [2560/13374]loss=0.00011213706384296528

[14m56s] Epoch 90 [5120/13374]loss=0.0001261134460946778

[14m58s] Epoch 90 [7680/13374]loss=0.00013915661450785894

[15m0s] Epoch 90 [10240/13374]loss=0.00014501097466563807

[15m1s] Epoch 90 [12800/13374]loss=0.00014809413871262224

evaluating trained model....

Test set :Accuracy 5624/6700 83.94%

[15m4s] Epoch 91 [2560/13374]loss=0.00013202862937760073

[15m6s] Epoch 91 [5120/13374]loss=0.00012462883660191437

[15m8s] Epoch 91 [7680/13374]loss=0.0001260573302715784

[15m9s] Epoch 91 [10240/13374]loss=0.00013730417786064208

[15m11s] Epoch 91 [12800/13374]loss=0.00014339233697683085

evaluating trained model....

Test set :Accuracy 5623/6700 83.93%

[15m14s] Epoch 92 [2560/13374]loss=0.00010567067438387312

[15m16s] Epoch 92 [5120/13374]loss=0.00013162596915208268

[15m18s] Epoch 92 [7680/13374]loss=0.00012917811667042164

[15m19s] Epoch 92 [10240/13374]loss=0.0001438192185560183

[15m21s] Epoch 92 [12800/13374]loss=0.00014732400675711687

evaluating trained model....

Test set :Accuracy 5608/6700 83.70%

[15m24s] Epoch 93 [2560/13374]loss=0.00013329306020750664

[15m26s] Epoch 93 [5120/13374]loss=0.00012738276109303115

[15m27s] Epoch 93 [7680/13374]loss=0.0001515427360573085

[15m29s] Epoch 93 [10240/13374]loss=0.00014957837001929874

[15m31s] Epoch 93 [12800/13374]loss=0.00015246473580191378

evaluating trained model....

Test set :Accuracy 5615/6700 83.81%

[15m34s] Epoch 94 [2560/13374]loss=0.0001626451310585253

[15m36s] Epoch 94 [5120/13374]loss=0.000145539248114801

[15m37s] Epoch 94 [7680/13374]loss=0.00014784088173958783

[15m39s] Epoch 94 [10240/13374]loss=0.00014439497354032938

[15m41s] Epoch 94 [12800/13374]loss=0.0001479455725348089

evaluating trained model....

Test set :Accuracy 5608/6700 83.70%

[15m44s] Epoch 95 [2560/13374]loss=0.00013216895677032882

[15m45s] Epoch 95 [5120/13374]loss=0.00013768000644631684

[15m47s] Epoch 95 [7680/13374]loss=0.00014584583647471542

[15m49s] Epoch 95 [10240/13374]loss=0.00014710118284710916

[15m50s] Epoch 95 [12800/13374]loss=0.00015041846956592053

evaluating trained model....

Test set :Accuracy 5609/6700 83.72%

[15m54s] Epoch 96 [2560/13374]loss=0.00011901519937964621

[15m55s] Epoch 96 [5120/13374]loss=0.00012891675050923367

[15m57s] Epoch 96 [7680/13374]loss=0.0001345742579966706

[15m59s] Epoch 96 [10240/13374]loss=0.00014311605400507688

[16m0s] Epoch 96 [12800/13374]loss=0.00014662933586805592

evaluating trained model....

Test set :Accuracy 5624/6700 83.94%

[16m3s] Epoch 97 [2560/13374]loss=0.00011543923828867264

[16m5s] Epoch 97 [5120/13374]loss=0.00013209702156018465

[16m7s] Epoch 97 [7680/13374]loss=0.00013954500876328286

[16m9s] Epoch 97 [10240/13374]loss=0.00014411417487281142

[16m10s] Epoch 97 [12800/13374]loss=0.00014642644047853536

evaluating trained model....

Test set :Accuracy 5614/6700 83.79%

[16m13s] Epoch 98 [2560/13374]loss=0.0001442501168639865

[16m15s] Epoch 98 [5120/13374]loss=0.00013993365719215943

[16m16s] Epoch 98 [7680/13374]loss=0.00013036451806935171

[16m18s] Epoch 98 [10240/13374]loss=0.00013761988830083283

[16m19s] Epoch 98 [12800/13374]loss=0.00014296498557087034

evaluating trained model....

Test set :Accuracy 5629/6700 84.01%

[16m22s] Epoch 99 [2560/13374]loss=0.000125877602113178

[16m23s] Epoch 99 [5120/13374]loss=0.00013187407530494965

[16m25s] Epoch 99 [7680/13374]loss=0.0001281533745592848

[16m26s] Epoch 99 [10240/13374]loss=0.00013478287883117446

[16m28s] Epoch 99 [12800/13374]loss=0.00014337152031657752

evaluating trained model....

Test set :Accuracy 5609/6700 83.72%

[16m31s] Epoch 100 [2560/13374]loss=0.00010824350210896228

[16m33s] Epoch 100 [5120/13374]loss=0.00011661885855573928

[16m34s] Epoch 100 [7680/13374]loss=0.00012793573926804432

[16m36s] Epoch 100 [10240/13374]loss=0.00013435454793579992

[16m37s] Epoch 100 [12800/13374]loss=0.00014086940420384052

evaluating trained model....

Test set :Accuracy 5624/6700 83.94%

Without a GPU, test accuracy settles at roughly 83-84%.
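To read the accuracy trend out of a long log like the one above without scanning it by eye, the evaluation lines can be parsed into a list. This is a minimal sketch, not part of the original training script: the helper name `parse_accuracies` and the regular expression are assumptions based only on the log format shown here (`Test set :Accuracy <correct>/<total> <percent>%`).

```python
import re

# Matches evaluation lines such as "Test set :Accuracy 5624/6700 83.94%".
# The pattern is an assumption derived from the log format printed above.
ACC_LINE = re.compile(r"Test set\s*:Accuracy\s+(\d+)/(\d+)\s+([\d.]+)%")


def parse_accuracies(log_text):
    """Return one accuracy fraction (correct / total) per evaluation line."""
    return [int(correct) / int(total)
            for correct, total, _ in ACC_LINE.findall(log_text)]


# Two evaluation blocks copied from the CPU run above.
sample_log = """
[16m28s] Epoch 99 [12800/13374]loss=0.00014337152031657752
evaluating trained model....
Test set :Accuracy 5609/6700 83.72%
[16m37s] Epoch 100 [12800/13374]loss=0.00014086940420384052
evaluating trained model....
Test set :Accuracy 5624/6700 83.94%
"""

accs = parse_accuracies(sample_log)
print([f"{a:.2%}" for a in accs])  # ['83.72%', '83.94%']
```

The resulting list has the same shape as the `acc_list` collected during training, so it can be plotted the same way.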

With a GPU, the output is:

Training for 100 epochs...

[0m0s] Epoch 1 [2560/13374]loss=0.008903741557151078

[0m0s] Epoch 1 [5120/13374]loss=0.00753147944342345

[0m0s] Epoch 1 [7680/13374]loss=0.006852023163810372

[0m1s] Epoch 1 [10240/13374]loss=0.006399183883331716

[0m1s] Epoch 1 [12800/13374]loss=0.006034796191379428

evaluating trained model....

Test set :Accuracy 4478/6700 66.84%

[0m1s] Epoch 2 [2560/13374]loss=0.0041690033860504625

[0m1s] Epoch 2 [5120/13374]loss=0.004137577000074088

[0m2s] Epoch 2 [7680/13374]loss=0.004033644171431661

[0m2s] Epoch 2 [10240/13374]loss=0.003934146807296202

[0m2s] Epoch 2 [12800/13374]loss=0.00381225798279047

evaluating trained model....

Test set :Accuracy 4951/6700 73.90%

[0m3s] Epoch 3 [2560/13374]loss=0.0031840311829000713

[0m3s] Epoch 3 [5120/13374]loss=0.003177014389075339

[0m3s] Epoch 3 [7680/13374]loss=0.003106098901480436

[0m3s] Epoch 3 [10240/13374]loss=0.0030897249933332207

[0m3s] Epoch 3 [12800/13374]loss=0.003066398906521499

evaluating trained model....

Test set :Accuracy 5222/6700 77.94%

[0m4s] Epoch 4 [2560/13374]loss=0.0026156534906476734

[0m4s] Epoch 4 [5120/13374]loss=0.0025507750920951366

[0m4s] Epoch 4 [7680/13374]loss=0.002581376388358573

[0m4s] Epoch 4 [10240/13374]loss=0.0025776615540962665

[0m4s] Epoch 4 [12800/13374]loss=0.00257153136190027

evaluating trained model....

Test set :Accuracy 5389/6700 80.43%

[0m5s] Epoch 5 [2560/13374]loss=0.0022862419602461157

[0m5s] Epoch 5 [5120/13374]loss=0.002329017041483894

[0m5s] Epoch 5 [7680/13374]loss=0.002273431090482821

[0m5s] Epoch 5 [10240/13374]loss=0.0022545532614458353

[0m6s] Epoch 5 [12800/13374]loss=0.002221973850391805

evaluating trained model....

Test set :Accuracy 5433/6700 81.09%

[0m6s] Epoch 6 [2560/13374]loss=0.001966827618889511

[0m6s] Epoch 6 [5120/13374]loss=0.0019369014247786255

[0m6s] Epoch 6 [7680/13374]loss=0.0019475599091189602

[0m7s] Epoch 6 [10240/13374]loss=0.0019833654980175195

[0m7s] Epoch 6 [12800/13374]loss=0.0020023415936157108

evaluating trained model....

Test set :Accuracy 5460/6700 81.49%

[0m7s] Epoch 7 [2560/13374]loss=0.0017486114287748934

[0m7s] Epoch 7 [5120/13374]loss=0.00173266411293298

[0m8s] Epoch 7 [7680/13374]loss=0.001749523418645064

[0m8s] Epoch 7 [10240/13374]loss=0.0017777312692487611

[0m8s] Epoch 7 [12800/13374]loss=0.0017937347874976695

evaluating trained model....

Test set :Accuracy 5587/6700 83.39%

[0m8s] Epoch 8 [2560/13374]loss=0.001535434159450233

[0m9s] Epoch 8 [5120/13374]loss=0.0016079455672297627

[0m9s] Epoch 8 [7680/13374]loss=0.0016134626891774436

[0m9s] Epoch 8 [10240/13374]loss=0.0016099186905194074

[0m9s] Epoch 8 [12800/13374]loss=0.0016115451231598853

evaluating trained model....

Test set :Accuracy 5586/6700 83.37%

[0m9s] Epoch 9 [2560/13374]loss=0.0015246090129949152

[0m10s] Epoch 9 [5120/13374]loss=0.0014874590968247503

[0m10s] Epoch 9 [7680/13374]loss=0.0014712288432444135

[0m10s] Epoch 9 [10240/13374]loss=0.0014727842994034291

[0m10s] Epoch 9 [12800/13374]loss=0.0014706578734330832

evaluating trained model....

Test set :Accuracy 5612/6700 83.76%

[0m11s] Epoch 10 [2560/13374]loss=0.0013044387218542398

[0m11s] Epoch 10 [5120/13374]loss=0.001293457020074129

[0m11s] Epoch 10 [7680/13374]loss=0.0012720226969880363

[0m11s] Epoch 10 [10240/13374]loss=0.001289598102448508

[0m11s] Epoch 10 [12800/13374]loss=0.0013152152264956384

evaluating trained model....

Test set :Accuracy 5609/6700 83.72%

[0m12s] Epoch 11 [2560/13374]loss=0.0012514282134361565

[0m12s] Epoch 11 [5120/13374]loss=0.0011971033265581355

[0m12s] Epoch 11 [7680/13374]loss=0.0012117810353326302

[0m12s] Epoch 11 [10240/13374]loss=0.0012070848926668987

[0m12s] Epoch 11 [12800/13374]loss=0.0011964122671633958

evaluating trained model....

Test set :Accuracy 5648/6700 84.30%

[0m13s] Epoch 12 [2560/13374]loss=0.0010432401322759688

[0m13s] Epoch 12 [5120/13374]loss=0.001013617528951727

[0m13s] Epoch 12 [7680/13374]loss=0.0010387218945349256

[0m13s] Epoch 12 [10240/13374]loss=0.001028305693762377

[0m14s] Epoch 12 [12800/13374]loss=0.0010516084311529995

evaluating trained model....

Test set :Accuracy 5630/6700 84.03%

[0m14s] Epoch 13 [2560/13374]loss=0.000939148711040616

[0m14s] Epoch 13 [5120/13374]loss=0.0009055397356860339

[0m14s] Epoch 13 [7680/13374]loss=0.0009244041187533488

[0m15s] Epoch 13 [10240/13374]loss=0.0009122181771090254

[0m15s] Epoch 13 [12800/13374]loss=0.0009265360282734036

evaluating trained model....

Test set :Accuracy 5657/6700 84.43%

[0m15s] Epoch 14 [2560/13374]loss=0.0008343156077899039

[0m15s] Epoch 14 [5120/13374]loss=0.0007962662988575175

[0m16s] Epoch 14 [7680/13374]loss=0.0008194532711058855

[0m16s] Epoch 14 [10240/13374]loss=0.0008379101753234863

[0m16s] Epoch 14 [12800/13374]loss=0.0008379516634158791

evaluating trained model....

Test set :Accuracy 5634/6700 84.09%

[0m16s] Epoch 15 [2560/13374]loss=0.0007666659308597446

[0m16s] Epoch 15 [5120/13374]loss=0.0007579184079077095

[0m17s] Epoch 15 [7680/13374]loss=0.0007324354781303554

[0m17s] Epoch 15 [10240/13374]loss=0.0007251499511767179

[0m17s] Epoch 15 [12800/13374]loss=0.0007474861515220255

evaluating trained model....

Test set :Accuracy 5623/6700 83.93%

[0m17s] Epoch 16 [2560/13374]loss=0.0006009104690747336

[0m18s] Epoch 16 [5120/13374]loss=0.000619590475980658

[0m18s] Epoch 16 [7680/13374]loss=0.0006256056755470732

[0m18s] Epoch 16 [10240/13374]loss=0.0006388584442902356

[0m18s] Epoch 16 [12800/13374]loss=0.000652720594080165

evaluating trained model....

Test set :Accuracy 5635/6700 84.10%

[0m19s] Epoch 17 [2560/13374]loss=0.0005419307562988251

[0m19s] Epoch 17 [5120/13374]loss=0.0005506303001311608

[0m19s] Epoch 17 [7680/13374]loss=0.0005507158930413425

[0m19s] Epoch 17 [10240/13374]loss=0.0005757264938438311

[0m19s] Epoch 17 [12800/13374]loss=0.0005735846608877182

evaluating trained model....

Test set :Accuracy 5628/6700 84.00%

[0m20s] Epoch 18 [2560/13374]loss=0.0004513851017691195

[0m20s] Epoch 18 [5120/13374]loss=0.0004562299916869961

[0m20s] Epoch 18 [7680/13374]loss=0.0004645451757824048

[0m20s] Epoch 18 [10240/13374]loss=0.00048724356092861855

[0m20s] Epoch 18 [12800/13374]loss=0.0004980717250145972

evaluating trained model....

Test set :Accuracy 5644/6700 84.24%

[0m21s] Epoch 19 [2560/13374]loss=0.0004241921124048531

[0m21s] Epoch 19 [5120/13374]loss=0.00042552113154670224

[0m21s] Epoch 19 [7680/13374]loss=0.00043254434131085874

[0m21s] Epoch 19 [10240/13374]loss=0.00043901320386794394

[0m21s] Epoch 19 [12800/13374]loss=0.00044553699844982473

evaluating trained model....

Test set :Accuracy 5633/6700 84.07%

[0m22s] Epoch 20 [2560/13374]loss=0.00038923954707570374

[0m22s] Epoch 20 [5120/13374]loss=0.0003887563740136102

[0m22s] Epoch 20 [7680/13374]loss=0.00039828809385653583

[0m22s] Epoch 20 [10240/13374]loss=0.0004101082813576795

[0m23s] Epoch 20 [12800/13374]loss=0.00041255191375967116

evaluating trained model....

Test set :Accuracy 5613/6700 83.78%

[0m23s] Epoch 21 [2560/13374]loss=0.00034745674347504976

[0m23s] Epoch 21 [5120/13374]loss=0.0003689420540467836

[0m23s] Epoch 21 [7680/13374]loss=0.00037924563027142235

[0m24s] Epoch 21 [10240/13374]loss=0.00038082225000835024

[0m24s] Epoch 21 [12800/13374]loss=0.0003816572640789673

evaluating trained model....

Test set :Accuracy 5637/6700 84.13%

[0m24s] Epoch 22 [2560/13374]loss=0.0003355833570822142

[0m24s] Epoch 22 [5120/13374]loss=0.000330731528083561

[0m25s] Epoch 22 [7680/13374]loss=0.00032481062274503835

[0m25s] Epoch 22 [10240/13374]loss=0.00033169094494951424

[0m25s] Epoch 22 [12800/13374]loss=0.0003496117753093131

evaluating trained model....

Test set :Accuracy 5587/6700 83.39%

[0m25s] Epoch 23 [2560/13374]loss=0.0002770542458165437

[0m25s] Epoch 23 [5120/13374]loss=0.00027910433927900156

[0m26s] Epoch 23 [7680/13374]loss=0.00029357315021722266

[0m26s] Epoch 23 [10240/13374]loss=0.000304125449838466

[0m26s] Epoch 23 [12800/13374]loss=0.0003206069188308902

evaluating trained model....

Test set :Accuracy 5591/6700 83.45%

[0m26s] Epoch 24 [2560/13374]loss=0.00026160957932006566

[0m27s] Epoch 24 [5120/13374]loss=0.0002951053487777244

[0m27s] Epoch 24 [7680/13374]loss=0.00029584705189336093

[0m27s] Epoch 24 [10240/13374]loss=0.0002970322220789967

[0m27s] Epoch 24 [12800/13374]loss=0.0003125644050305709

evaluating trained model....

Test set :Accuracy 5566/6700 83.07%

[0m28s] Epoch 25 [2560/13374]loss=0.0002570196273154579

[0m28s] Epoch 25 [5120/13374]loss=0.0002689820292289369

[0m28s] Epoch 25 [7680/13374]loss=0.0002801688771190432

[0m28s] Epoch 25 [10240/13374]loss=0.0002949771547719138

[0m28s] Epoch 25 [12800/13374]loss=0.0003003898332826793

evaluating trained model....

Test set :Accuracy 5603/6700 83.63%

[0m29s] Epoch 26 [2560/13374]loss=0.00024171676632249727

[0m29s] Epoch 26 [5120/13374]loss=0.0002490489358024206

[0m29s] Epoch 26 [7680/13374]loss=0.00025696992573405925

[0m29s] Epoch 26 [10240/13374]loss=0.0002642455368913943

[0m29s] Epoch 26 [12800/13374]loss=0.0002674412378109992

evaluating trained model....

Test set :Accuracy 5597/6700 83.54%

[0m30s] Epoch 27 [2560/13374]loss=0.00024252969160443172

[0m30s] Epoch 27 [5120/13374]loss=0.00023878420834080316

[0m30s] Epoch 27 [7680/13374]loss=0.00024774402312080686

[0m30s] Epoch 27 [10240/13374]loss=0.00026160532652284017

[0m30s] Epoch 27 [12800/13374]loss=0.0002656111030955799

evaluating trained model....

Test set :Accuracy 5598/6700 83.55%

[0m31s] Epoch 28 [2560/13374]loss=0.00019403478945605456

[0m31s] Epoch 28 [5120/13374]loss=0.0002403595695795957

[0m31s] Epoch 28 [7680/13374]loss=0.00024031621384589623

[0m31s] Epoch 28 [10240/13374]loss=0.00024724315335333813

[0m32s] Epoch 28 [12800/13374]loss=0.0002531767022446729

evaluating trained model....

Test set :Accuracy 5598/6700 83.55%

[0m32s] Epoch 29 [2560/13374]loss=0.0002134321977791842

[0m32s] Epoch 29 [5120/13374]loss=0.0002278091877087718

[0m32s] Epoch 29 [7680/13374]loss=0.00022222526337524565

[0m33s] Epoch 29 [10240/13374]loss=0.00024340150830539641

[0m33s] Epoch 29 [12800/13374]loss=0.00025429620684008113

evaluating trained model....

Test set :Accuracy 5583/6700 83.33%

[0m33s] Epoch 30 [2560/13374]loss=0.00019122814992442728

[0m33s] Epoch 30 [5120/13374]loss=0.00022502463834825904

[0m34s] Epoch 30 [7680/13374]loss=0.00022874425048939883

[0m34s] Epoch 30 [10240/13374]loss=0.0002470516621542629

[0m34s] Epoch 30 [12800/13374]loss=0.00024833698495058343

evaluating trained model....

Test set :Accuracy 5599/6700 83.57%

[0m34s] Epoch 31 [2560/13374]loss=0.0001768243222613819

[0m35s] Epoch 31 [5120/13374]loss=0.00018919176327472086

[0m35s] Epoch 31 [7680/13374]loss=0.00020207777197356336

[0m35s] Epoch 31 [10240/13374]loss=0.00020924700929754182

[0m35s] Epoch 31 [12800/13374]loss=0.00023443944795872084

evaluating trained model....

Test set :Accuracy 5618/6700 83.85%

[0m36s] Epoch 32 [2560/13374]loss=0.00018557563817012124

[0m36s] Epoch 32 [5120/13374]loss=0.00020490613424044567

[0m36s] Epoch 32 [7680/13374]loss=0.0002004643082424688

[0m36s] Epoch 32 [10240/13374]loss=0.00022263992213993332

[0m36s] Epoch 32 [12800/13374]loss=0.00023168192798038944

evaluating trained model....

Test set :Accuracy 5602/6700 83.61%

[0m37s] Epoch 33 [2560/13374]loss=0.00014773697912460194

[0m37s] Epoch 33 [5120/13374]loss=0.0001799132973246742

[0m37s] Epoch 33 [7680/13374]loss=0.0001958962459563433

[0m37s] Epoch 33 [10240/13374]loss=0.0001989282796785119

[0m37s] Epoch 33 [12800/13374]loss=0.00021417427735286764

evaluating trained model....

Test set :Accuracy 5586/6700 83.37%

[0m38s] Epoch 34 [2560/13374]loss=0.00017982723584282213

[0m38s] Epoch 34 [5120/13374]loss=0.0002081723006995162

[0m38s] Epoch 34 [7680/13374]loss=0.0002243839289197543

[0m38s] Epoch 34 [10240/13374]loss=0.00022924898403289262

[0m39s] Epoch 34 [12800/13374]loss=0.00023955782264238224

evaluating trained model....

Test set :Accuracy 5602/6700 83.61%

[0m39s] Epoch 35 [2560/13374]loss=0.00015610751433996483

[0m39s] Epoch 35 [5120/13374]loss=0.00017842173147073482

[0m39s] Epoch 35 [7680/13374]loss=0.00020019117582705803

[0m40s] Epoch 35 [10240/13374]loss=0.00020771948675246676

[0m40s] Epoch 35 [12800/13374]loss=0.00021277053965604865

evaluating trained model....

Test set :Accuracy 5610/6700 83.73%

[0m40s] Epoch 36 [2560/13374]loss=0.0001811089452530723

[0m40s] Epoch 36 [5120/13374]loss=0.0001794825406250311

[0m41s] Epoch 36 [7680/13374]loss=0.00018685633040149696

[0m41s] Epoch 36 [10240/13374]loss=0.00019214530748286053

[0m41s] Epoch 36 [12800/13374]loss=0.00020475096724112518

evaluating trained model....

Test set :Accuracy 5601/6700 83.60%

[0m41s] Epoch 37 [2560/13374]loss=0.00016592514439253136

[0m41s] Epoch 37 [5120/13374]loss=0.0001770364717231132

[0m42s] Epoch 37 [7680/13374]loss=0.0001987291199232762

[0m42s] Epoch 37 [10240/13374]loss=0.000207763474463718

[0m42s] Epoch 37 [12800/13374]loss=0.00021124157603480854

evaluating trained model....

Test set :Accuracy 5585/6700 83.36%

[0m42s] Epoch 38 [2560/13374]loss=0.0001783155297744088

[0m43s] Epoch 38 [5120/13374]loss=0.00019000879401573912

[0m43s] Epoch 38 [7680/13374]loss=0.00019740900679607876

[0m43s] Epoch 38 [10240/13374]loss=0.00019866491693392162

[0m43s] Epoch 38 [12800/13374]loss=0.00020742787790368312

evaluating trained model....

Test set :Accuracy 5603/6700 83.63%

[0m44s] Epoch 39 [2560/13374]loss=0.00017442373937228694

[0m44s] Epoch 39 [5120/13374]loss=0.000183770309376996

[0m44s] Epoch 39 [7680/13374]loss=0.0002077115626889281

[0m44s] Epoch 39 [10240/13374]loss=0.0002031277863352443

[0m44s] Epoch 39 [12800/13374]loss=0.0002022141778434161

evaluating trained model....

Test set :Accuracy 5584/6700 83.34%

[0m45s] Epoch 40 [2560/13374]loss=0.0001593812608916778

[0m45s] Epoch 40 [5120/13374]loss=0.0001689576016360661

[0m45s] Epoch 40 [7680/13374]loss=0.00017404285026714205

[0m45s] Epoch 40 [10240/13374]loss=0.00018896674064308172

[0m45s] Epoch 40 [12800/13374]loss=0.00019635440388810822

evaluating trained model....

Test set :Accuracy 5619/6700 83.87%

[0m46s] Epoch 41 [2560/13374]loss=0.00016860530959093012

[0m46s] Epoch 41 [5120/13374]loss=0.0001728936353174504

[0m46s] Epoch 41 [7680/13374]loss=0.0001959022178198211

[0m46s] Epoch 41 [10240/13374]loss=0.0001952763839653926

[0m47s] Epoch 41 [12800/13374]loss=0.0002017315645935014

evaluating trained model....

Test set :Accuracy 5607/6700 83.69%

[0m47s] Epoch 42 [2560/13374]loss=0.00016435411453130655

[0m47s] Epoch 42 [5120/13374]loss=0.00017644036597630475

[0m47s] Epoch 42 [7680/13374]loss=0.00019591993550420738

[0m48s] Epoch 42 [10240/13374]loss=0.0001868714361989987

[0m48s] Epoch 42 [12800/13374]loss=0.0001949291751952842

evaluating trained model....

Test set :Accuracy 5603/6700 83.63%

[0m48s] Epoch 43 [2560/13374]loss=0.00017717829541652463

[0m48s] Epoch 43 [5120/13374]loss=0.00017531897865410428

[0m49s] Epoch 43 [7680/13374]loss=0.00017840718016183624

[0m49s] Epoch 43 [10240/13374]loss=0.0001799464293071651

[0m49s] Epoch 43 [12800/13374]loss=0.00019076924116234295

evaluating trained model....

Test set :Accuracy 5621/6700 83.90%

[0m49s] Epoch 44 [2560/13374]loss=0.00016531633809790947

[0m50s] Epoch 44 [5120/13374]loss=0.000172287825262174

[0m50s] Epoch 44 [7680/13374]loss=0.00017946926285124695

[0m50s] Epoch 44 [10240/13374]loss=0.00018492361250537214

[0m50s] Epoch 44 [12800/13374]loss=0.0001943638626835309

evaluating trained model....

Test set :Accuracy 5618/6700 83.85%

[0m51s] Epoch 45 [2560/13374]loss=0.0001361665221338626

[0m51s] Epoch 45 [5120/13374]loss=0.00015475238324142994

[0m51s] Epoch 45 [7680/13374]loss=0.0001704240278437889

[0m51s] Epoch 45 [10240/13374]loss=0.00018697583818720885

[0m51s] Epoch 45 [12800/13374]loss=0.00019171007399563679

evaluating trained model....

Test set :Accuracy 5608/6700 83.70%

[0m52s] Epoch 46 [2560/13374]loss=0.00015841058775549753

[0m52s] Epoch 46 [5120/13374]loss=0.0001654327141295653

[0m52s] Epoch 46 [7680/13374]loss=0.00016476862195607584

[0m52s] Epoch 46 [10240/13374]loss=0.00017506711210444337

[0m52s] Epoch 46 [12800/13374]loss=0.00018869986379286275

evaluating trained model....

Test set :Accuracy 5587/6700 83.39%

[0m53s] Epoch 47 [2560/13374]loss=0.00013592790492111817

[0m53s] Epoch 47 [5120/13374]loss=0.00015365287181339226

[0m53s] Epoch 47 [7680/13374]loss=0.00016054231285428006

[0m53s] Epoch 47 [10240/13374]loss=0.00016715620877221226

[0m54s] Epoch 47 [12800/13374]loss=0.00017996207185206004

evaluating trained model....

Test set :Accuracy 5605/6700 83.66%

[0m54s] Epoch 48 [2560/13374]loss=0.00015797795349499212

[0m54s] Epoch 48 [5120/13374]loss=0.0001472311902034562

[0m54s] Epoch 48 [7680/13374]loss=0.00015703178772431177

[0m55s] Epoch 48 [10240/13374]loss=0.00016835214610182446

[0m55s] Epoch 48 [12800/13374]loss=0.0001793511741561815

evaluating trained model....

Test set :Accuracy 5590/6700 83.43%

[0m55s] Epoch 49 [2560/13374]loss=0.00012804662983398885

[0m55s] Epoch 49 [5120/13374]loss=0.00014271239233494272

[0m56s] Epoch 49 [7680/13374]loss=0.00015792675088353766

[0m56s] Epoch 49 [10240/13374]loss=0.00017839588599599665

[0m56s] Epoch 49 [12800/13374]loss=0.00018214089228422382

evaluating trained model....

Test set :Accuracy 5603/6700 83.63%

[0m56s] Epoch 50 [2560/13374]loss=0.00011518407045514323

[0m57s] Epoch 50 [5120/13374]loss=0.00013085265600238928

[0m57s] Epoch 50 [7680/13374]loss=0.0001588057290064171

[0m57s] Epoch 50 [10240/13374]loss=0.00017435651116102236

[0m57s] Epoch 50 [12800/13374]loss=0.00017918572077178397

evaluating trained model....

Test set :Accuracy 5587/6700 83.39%

[0m58s] Epoch 51 [2560/13374]loss=0.00013654298163601197

[0m58s] Epoch 51 [5120/13374]loss=0.00014601262300857344

[0m58s] Epoch 51 [7680/13374]loss=0.00015973188468099882

[0m58s] Epoch 51 [10240/13374]loss=0.00017199256835738198

[0m58s] Epoch 51 [12800/13374]loss=0.0001764850087056402

evaluating trained model....

Test set :Accuracy 5600/6700 83.58%