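Normally rabbitmq_exporter is enough to collect the data, and you then configure the scrape in Prometheus. However, once a RabbitMQ node holds more than about 5,000 queues, rabbitmq_exporter tends to break down: it collects too much information, especially per-queue details, so it becomes unusable as the queue count grows. We therefore had to write our own script to probe MQ, shared below: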

First, install the dependencies: pip3 install prometheus-client requests

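import time

import prometheus_client as prom
import requests

# Management API port and credentials (placeholders)
port = '15672'
username = 'username'
password = 'password'
region = 'region'  # placeholder: the script uses `region` as a label value but never defined it

# Custom Prometheus metrics for MQ monitoring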

g0 = prom.Gauge("rabbitmq_up", 'whether the node is reachable (1 = up, 0 = down)', labelnames=['node','region'])
g1 = prom.Gauge("rabbitmq_queues", 'total queue num of the node', labelnames=['node','region'])
g2 = prom.Gauge("rabbitmq_channels", 'total channel num of the node', labelnames=['node','region'])
g3 = prom.Gauge("rabbitmq_connections", 'total connection num of the node', labelnames=['node','region'])
g4 = prom.Gauge("rabbitmq_consumers", 'total consumer num of the node', labelnames=['node','region'])
g5 = prom.Gauge("rabbitmq_exchanges", 'total exchange num of the node', labelnames=['node','region'])
g6 = prom.Gauge("rabbitmq_messages", 'total messages of the node', labelnames=['node','region'])
g7 = prom.Gauge("rabbitmq_vhosts", 'total vhost num of the node', labelnames=['node','region'])
g8 = prom.Gauge("rabbitmq_node_mem_used", 'mem used of the node', labelnames=['node','region'])
g9 = prom.Gauge("rabbitmq_node_mem_limit", 'mem limit of the node', labelnames=['node','region'])
g10 = prom.Gauge("rabbitmq_node_mem_alarm", 'mem alarm of the node', labelnames=['node','region'])
g11 = prom.Gauge("rabbitmq_node_disk_free_alarm", 'free disk alarm of the node', labelnames=['node','region'])

# Serve the metrics page on port 8086
prom.start_http_server(8086)

# MQ nodes to monitor
nodelist = ['1.1.1.1', '1.1.1.2', '1.1.1.3']

def fetch(node, path):
    """GET a management API path on the node and return the decoded JSON."""
    resp = requests.get(url=f"http://{node}:{port}/api/{path}", auth=(username, password), timeout=5)
    resp.raise_for_status()
    return resp.json()

while True:
    for node in nodelist:  # iterate over each node
        status = 1
        try:  # connectivity test; the same calls fetch the data used below
            info1 = fetch(node, "overview")
            info2 = fetch(node, "nodes")[0]  # /api/nodes returns a list; [0] is its first entry
            info3 = fetch(node, "vhosts")
        except (requests.RequestException, ValueError, IndexError):
            status = 0
            continue  # the finally block still runs, so rabbitmq_up is set to 0
        finally:
            g0.labels(node=node, region=region).set(status)
        g1.labels(node=node, region=region).set(info1.get('object_totals').get('queues'))
        g2.labels(node=node, region=region).set(info1.get('object_totals').get('channels'))
        g3.labels(node=node, region=region).set(info1.get('object_totals').get('connections'))
        g4.labels(node=node, region=region).set(info1.get('object_totals').get('consumers'))
        g5.labels(node=node, region=region).set(info1.get('object_totals').get('exchanges'))
        # Default to 0 if the field is absent from the response
        g6.labels(node=node, region=region).set(info1.get('queue_totals').get('messages', 0))
        g7.labels(node=node, region=region).set(len(info3))
        g8.labels(node=node, region=region).set(info2.get('mem_used'))
        g9.labels(node=node, region=region).set(info2.get('mem_limit'))
        # The alarm fields are booleans; the gauge stores them as 1.0 / 0.0
        g10.labels(node=node, region=region).set(info2.get('mem_alarm'))
        g11.labels(node=node, region=region).set(info2.get('disk_free_alarm'))
    time.sleep(30)
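The parsing half of the loop can also be pulled out into a pure function, which makes it possible to test without a live broker. What follows is only a minimal sketch and not part of the original script: the function name `collect_values` and the fall-back to 0 for missing fields are assumptions, and the sample payloads are made up. The JSON keys are the ones the script already reads.

```python
def collect_values(overview, node_info, vhosts):
    """Map management-API payloads to {metric name: value}.

    Hypothetical helper: missing fields fall back to 0 instead of raising.
    """
    objects = overview.get("object_totals") or {}
    queues = overview.get("queue_totals") or {}
    return {
        "rabbitmq_queues": objects.get("queues", 0),
        "rabbitmq_channels": objects.get("channels", 0),
        "rabbitmq_connections": objects.get("connections", 0),
        "rabbitmq_consumers": objects.get("consumers", 0),
        "rabbitmq_exchanges": objects.get("exchanges", 0),
        "rabbitmq_messages": queues.get("messages", 0),
        "rabbitmq_vhosts": len(vhosts),
        "rabbitmq_node_mem_used": node_info.get("mem_used", 0),
        "rabbitmq_node_mem_limit": node_info.get("mem_limit", 0),
        # Alarm fields are booleans; convert them to 0/1 for a gauge.
        "rabbitmq_node_mem_alarm": int(bool(node_info.get("mem_alarm"))),
        "rabbitmq_node_disk_free_alarm": int(bool(node_info.get("disk_free_alarm"))),
    }


# Made-up sample payloads, trimmed to the fields used above.
sample_overview = {
    "object_totals": {"queues": 6000, "channels": 12, "connections": 8,
                      "consumers": 20, "exchanges": 15},
    "queue_totals": {},  # simulates a payload without "messages"
}
sample_node = {"mem_used": 1024, "mem_limit": 4096,
               "mem_alarm": False, "disk_free_alarm": True}
sample_vhosts = [{"name": "/"}, {"name": "app"}]

values = collect_values(sample_overview, sample_node, sample_vhosts)
print(values["rabbitmq_queues"], values["rabbitmq_messages"])
```

Each value could then be passed to the `.set()` call of the matching gauge, keeping the network code and the parsing code separate.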

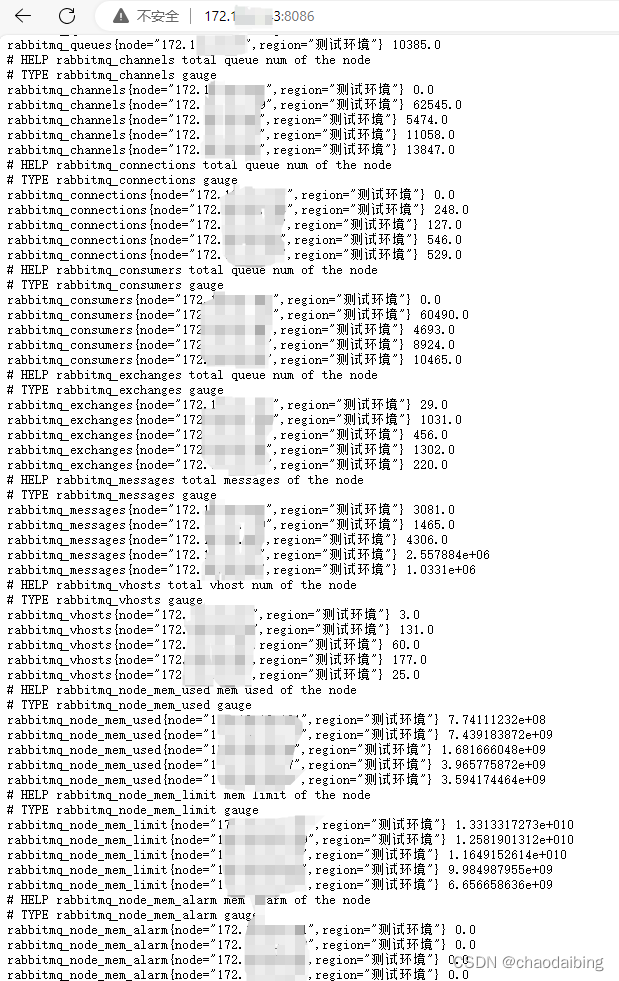

Run the script with python3 and it serves a page like the following:

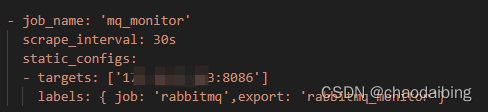

Prometheus can then scrape it.
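Before pointing Prometheus at the endpoint, it can help to check the page by hand. Here is a minimal sketch using only the standard library. It assumes the script is reachable at `http://localhost:8086/metrics` (port 8086 comes from the script, the host is an assumption), and the helper names `fetch_metrics` and `down_nodes` are hypothetical. The parser is deliberately simplistic: it only handles `rabbitmq_up` sample lines without timestamps.

```python
import re
from urllib.request import urlopen

# Matches lines such as: rabbitmq_up{node="1.1.1.1",region="region"} 1.0
UP_LINE = re.compile(r'^rabbitmq_up\{(?P<labels>[^}]*)\}\s+(?P<value>\S+)$')
NODE_LABEL = re.compile(r'node="([^"]*)"')


def fetch_metrics(url="http://localhost:8086/metrics"):
    """Download the exposition text served by the script (URL is an assumption)."""
    with urlopen(url, timeout=5) as resp:
        return resp.read().decode("utf-8")


def down_nodes(text):
    """Return the node labels whose rabbitmq_up sample is 0."""
    down = []
    for line in text.splitlines():
        m = UP_LINE.match(line.strip())
        if not m:
            continue  # skips # HELP / # TYPE comments and other metrics
        node = NODE_LABEL.search(m.group("labels"))
        if node and float(m.group("value")) == 0:
            down.append(node.group(1))
    return down


# Hand-written sample in the Prometheus text format, not captured output.
sample = """\
# HELP rabbitmq_up life of the node
# TYPE rabbitmq_up gauge
rabbitmq_up{node="1.1.1.1",region="region"} 1.0
rabbitmq_up{node="1.1.1.2",region="region"} 0.0
rabbitmq_queues{node="1.1.1.1",region="region"} 6000.0
"""
print(down_nodes(sample))
```

Against a running instance of the script, `down_nodes(fetch_metrics())` would list the nodes currently reported as unreachable.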