Preface

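Whisper is a general-purpose speech recognition model. It is trained on a large, diverse dataset of audio. It is also a multitask model that can perform multilingual speech recognition, speech translation, and language identification.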

Below I share some of my code to help you get a speech-to-text service deployed as quickly as possible.

The official usage instructions for this AI module are here:

https://github.com/openai/whisper

Impressions

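This is a solid speech recognition model. It detects the spoken language automatically and handles Chinese, English, Japanese, and many others. It can also translate speech from other languages into English and export subtitles with timestamps, among other features.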

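Overall, in my experience it can hold its own against the leading speech recognition services on the market. Just as importantly, it is open source.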

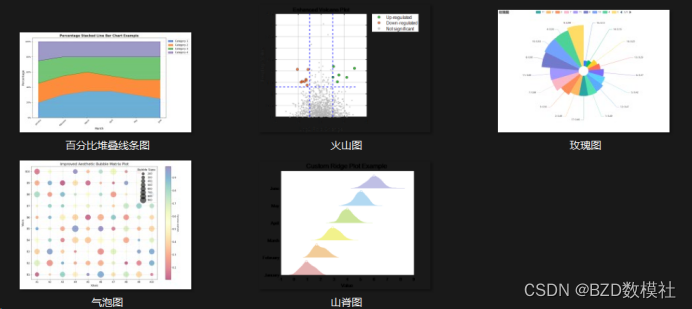

This table lists the available model sizes, the GPU memory (VRAM) each one requires, and their relative speed.
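As a rough illustration of how the table can guide your choice, here is a hypothetical helper that picks the largest multilingual model that fits in a given amount of VRAM. The figures are assumptions for this sketch, taken from the approximate requirements in the Whisper README (tiny and base about 1 GB, small about 2 GB, medium about 5 GB, large about 10 GB). Check them against the table for the version you install, since newer releases may list additional models.

```python
# Approximate VRAM requirements in GB. These are ASSUMED values taken from the
# Whisper README table; verify them for your version. Ordered smallest to largest.
MODEL_VRAM_GB = [
    ("tiny", 1.0),
    ("base", 1.0),
    ("small", 2.0),
    ("medium", 5.0),
    ("large", 10.0),
]


def pick_model(vram_gb: float) -> str:
    """Return the largest model whose approximate VRAM need fits in vram_gb.

    Hypothetical helper for illustration. If nothing fits, it falls back to
    "tiny", the cheapest option (and the most practical one on CPU).
    """
    chosen = "tiny"
    for name, need in MODEL_VRAM_GB:
        if need <= vram_gb:
            chosen = name
    return chosen


if __name__ == "__main__":
    for vram in (0.5, 2.0, 4.0, 6.0, 12.0):
        print(f"{vram} GB -> {pick_model(vram)}")
```

With these figures, a 4 GB card lands on small, which matches the experience that medium is out of reach on a smaller GPU.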

This is how it is used in command-line mode, which is all you need if you just want to give it a try.
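If you want to drive the same command line from a Python script, one option is to build the argument list and hand it to `subprocess`. The sketch below mainly assembles the arguments. `build_whisper_cmd` and `run_whisper` are hypothetical helpers that use the CLI's `--model`, `--language`, `--task`, `--output_format` and `--output_dir` options. Keep in mind that every such call starts a separate process, which loads the model again.

```python
import subprocess
from typing import List, Optional


def build_whisper_cmd(
    audio_files: List[str],
    model: str = "small",
    language: Optional[str] = None,
    task: str = "transcribe",
    output_format: str = "all",
    output_dir: str = ".",
) -> List[str]:
    """Assemble a `whisper` CLI invocation as an argument list (hypothetical helper)."""
    cmd = ["whisper", *audio_files, "--model", model, "--task", task]
    if language is not None:
        # Leave out --language to let Whisper detect the language itself.
        cmd += ["--language", language]
    cmd += ["--output_format", output_format, "--output_dir", output_dir]
    return cmd


def run_whisper(cmd: List[str]) -> None:
    # Passing a list (not a single string) avoids shell quoting problems with
    # paths that contain spaces or non-ASCII characters.
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    print(build_whisper_cmd(["japanese.wav"], language="ja", task="translate"))
```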

Tips:

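1. After you install whisper, the first command you run downloads the model you selected (small, medium, and so on). My graphics card no longer has enough memory to run medium.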

2. To install the GPU build of PyTorch, you can follow the tutorial below (the CPU build is considerably slower):

https://blog.csdn.net/G541788_/article/details/135437236
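After installing PyTorch, you can check whether it actually sees your GPU before loading a model. This is a minimal sketch that works whether or not PyTorch is installed. The helper names `pick_device` and `decode_options_for` are hypothetical. The sketch relies on `torch.cuda.is_available()`, on `whisper.load_model` accepting a `device` argument, and on `transcribe` passing an `fp16` decoding option through.

```python
def pick_device() -> str:
    """Return "cuda" if PyTorch is installed with CUDA support and sees a GPU, else "cpu"."""
    try:
        import torch
    except ImportError:
        # No PyTorch in this environment, so nothing can run on the GPU.
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


def decode_options_for(device: str) -> dict:
    """Extra keyword arguments for model.transcribe() (hypothetical helper).

    Whisper decodes in FP16 by default. On CPU it falls back to FP32 with a
    warning, so we request FP32 explicitly there.
    """
    return {"fp16": device == "cuda"}


if __name__ == "__main__":
    device = pick_device()
    print("device:", device, "options:", decode_options_for(device))
    # With whisper installed, usage would look like:
    #   model = whisper.load_model("small", device=device)
    #   result = model.transcribe("audio.wav", **decode_options_for(device))
```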

Calling from Python

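As a Python developer, I was fortunate to be able to read through how the module works. By changing how parts of its source are called, I made it possible to export subtitles in one step from Python code. The command line already offers this, but I wanted it available right after calling the model in a Python script. You may not need it, but here it is anyway.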

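The module's cli() function might seem like a better way to do this, since command-line mode is really just that function running. However, based on experience and on reading the code, calling it loads the model again, which wastes resources unnecessarily.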
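The point about not reloading the model can be made concrete with a small cache that loads each model once and hands the same object to every later caller. This is a minimal sketch. `ModelCache` is a hypothetical class, and the loader is injected so the example runs without whisper installed. In a real script you would pass `whisper.load_model` as the loader.

```python
from typing import Any, Callable, Dict


class ModelCache:
    """Load each model name once and reuse it afterwards (hypothetical helper)."""

    def __init__(self, loader: Callable[[str], Any]):
        self._loader = loader
        self._models: Dict[str, Any] = {}

    def get(self, name: str) -> Any:
        # Call the (expensive) loader only the first time a name is requested.
        if name not in self._models:
            self._models[name] = self._loader(name)
        return self._models[name]


if __name__ == "__main__":
    calls = []

    def fake_loader(name: str) -> str:
        # Stand-in for whisper.load_model that records each load.
        calls.append(name)
        return f"<model {name}>"

    cache = ModelCache(fake_loader)
    for _ in range(3):
        cache.get("small")
    print(calls)  # → ['small']
```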

1. Add `get_writer` to whisper's `__init__.py` so that you can call it through the `whisper` module:

```python
from .transcribe import get_writer
```

2. The code itself:

```python
import os
import time

import whisper

# Codes accepted by the `language` parameter; this may help you choose one.
LANGUAGES = {
    "en": "english",
    "zh": "chinese",
    "de": "german",
    "es": "spanish",
    "ru": "russian",
    "ko": "korean",
    "fr": "french",
    "ja": "japanese",
    "pt": "portuguese",
    "tr": "turkish",
    "pl": "polish",
    "ca": "catalan",
    "nl": "dutch",
    "ar": "arabic",
    "sv": "swedish",
    "it": "italian",
    "id": "indonesian",
    "hi": "hindi",
    "fi": "finnish",
    "vi": "vietnamese",
    "he": "hebrew",
    "uk": "ukrainian",
    "el": "greek",
    "ms": "malay",
    "cs": "czech",
    "ro": "romanian",
    "da": "danish",
    "hu": "hungarian",
    "ta": "tamil",
    "no": "norwegian",
    "th": "thai",
    "ur": "urdu",
    "hr": "croatian",
    "bg": "bulgarian",
    "lt": "lithuanian",
    "la": "latin",
    "mi": "maori",
    "ml": "malayalam",
    "cy": "welsh",
    "sk": "slovak",
    "te": "telugu",
    "fa": "persian",
    "lv": "latvian",
    "bn": "bengali",
    "sr": "serbian",
    "az": "azerbaijani",
    "sl": "slovenian",
    "kn": "kannada",
    "et": "estonian",
    "mk": "macedonian",
    "br": "breton",
    "eu": "basque",
    "is": "icelandic",
    "hy": "armenian",
    "ne": "nepali",
    "mn": "mongolian",
    "bs": "bosnian",
    "kk": "kazakh",
    "sq": "albanian",
    "sw": "swahili",
    "gl": "galician",
    "mr": "marathi",
    "pa": "punjabi",
    "si": "sinhala",
    "km": "khmer",
    "sn": "shona",
    "yo": "yoruba",
    "so": "somali",
    "af": "afrikaans",
    "oc": "occitan",
    "ka": "georgian",
    "be": "belarusian",
    "tg": "tajik",
    "sd": "sindhi",
    "gu": "gujarati",
    "am": "amharic",
    "yi": "yiddish",
    "lo": "lao",
    "uz": "uzbek",
    "fo": "faroese",
    "ht": "haitian creole",
    "ps": "pashto",
    "tk": "turkmen",
    "nn": "nynorsk",
    "mt": "maltese",
    "sa": "sanskrit",
    "lb": "luxembourgish",
    "my": "myanmar",
    "bo": "tibetan",
    "tl": "tagalog",
    "mg": "malagasy",
    "as": "assamese",
    "tt": "tatar",
    "haw": "hawaiian",
    "ln": "lingala",
    "ha": "hausa",
    "ba": "bashkir",
    "jw": "javanese",
    "su": "sundanese",
    "yue": "cantonese",
}

# The following command transcribes the speech in the audio files using the medium model:
#
#   whisper audio.flac audio.mp3 audio.wav --model medium
#
# The default setting (which selects the small model) works well for transcribing English.
# To transcribe audio containing non-English speech, specify the language with --language:
#
#   whisper japanese.wav --language Japanese
#
# Adding --task translate translates the speech into English:
#
#   whisper japanese.wav --language Japanese --task translate
#
# Translating another language into English:
#
#   whisper "E:\voice\恋愛サーキュレーション_(Vocals)_(Vocals).wav" --language ja --task translate

# This job exports subtitles for every file in audio_files and saves each run
# in its own timestamp-named folder under captions/.
audio_files = [r"E:\voice\恋愛サーキュレーション_(Vocals)_(Vocals).wav"]

# Load the model once and reuse it for every file.
model = whisper.load_model("small")

# "all" writes every supported format (txt, vtt, srt, tsv, json).
output_format = "all"

writer_args = {
    "highlight_words": False,
    "max_line_count": None,
    "max_line_width": None,
    "max_words_per_line": None,
}

for audio_file in audio_files:
    now_timestamp = str(int(time.time()))
    save_path = os.path.join("captions", now_timestamp)
    # makedirs also creates captions/ itself if it is missing (os.mkdir would fail).
    os.makedirs(save_path, exist_ok=True)

    # language is optional: zh = Chinese, ja = Japanese, en = English
    result = model.transcribe(audio_file, language="ja")

    # whisper.get_writer requires the __init__.py change from step 1. In recent
    # versions get_writer lives in whisper/utils.py, so
    # `from whisper.utils import get_writer` should work without editing the source.
    writer = whisper.get_writer(output_format, save_path)
    writer(result, audio_file, **writer_args)
    print("done:", audio_file)
```
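To show what ends up in the subtitle files, here is a stripped-down sketch of SRT output. It is built from the `segments` list returned by `model.transcribe()`, where each segment carries `start` and `end` in seconds plus `text`. This is not whisper's own SRT writer, which also handles options such as `max_line_width` and word highlighting. `format_srt_timestamp` and `segments_to_srt` are hypothetical names.

```python
from typing import Dict, List


def format_srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments: List[Dict]) -> str:
    """Turn Whisper-style segments into the text of an .srt file."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        start = format_srt_timestamp(seg["start"])
        end = format_srt_timestamp(seg["end"])
        # Each cue: sequence number, time range, text, then a blank separator line.
        blocks.append(f"{index}\n{start} --> {end}\n{seg['text'].strip()}\n")
    return "\n".join(blocks)


if __name__ == "__main__":
    # Made-up segments in the same shape as result["segments"].
    demo = [
        {"start": 0.0, "end": 2.5, "text": " Hello"},
        {"start": 2.5, "end": 3661.042, "text": " world"},
    ]
    print(segments_to_srt(demo))
```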