Deploying Prometheus on k8s

1. Download the kube-prometheus manifests

cd /soft/src

git clone -b release-0.5 --single-branch https://github.com/coreos/kube-prometheus.git

2. Deploy

Before deploying, it is best to edit alertmanager-alertmanager.yaml and set replicas: to 2 or 3. With a value of 1 you may run into problems later.
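The replica count can also be changed without opening an editor. The helper below is a minimal sketch, assuming GNU sed and a manifest that contains a single `replicas:` line; the function name `set_replicas` is made up for this example.

```shell
# set_replicas FILE COUNT
# Rewrites the value of every "replicas:" line in FILE, keeping its indentation.
# Assumes GNU sed (-i edits the file in place, -E enables extended regex).
set_replicas() {
  file="$1"
  count="$2"
  sed -i -E "s/^([[:space:]]*replicas:).*/\1 ${count}/" "$file"
}
```

From /soft/src, a call such as `set_replicas kube-prometheus/manifests/alertmanager-alertmanager.yaml 3` would then set the value before the manifests are created.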

cd kube-prometheus/manifests/setup

kubectl create -f .

cd ..

kubectl create -f .
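Between the two `kubectl create -f .` steps above, the CRDs created from the setup directory may need a moment to register. If the second step runs too early, it can fail with errors about unknown kinds such as ServiceMonitor. The following is a small sketch of a wait helper; the name `wait_until` and the timeout value are assumptions for illustration.

```shell
# wait_until TIMEOUT_SECONDS COMMAND [ARGS...]
# Re-runs COMMAND once per second until it succeeds or the timeout expires.
# Returns 0 on success and 1 on timeout.
wait_until() {
  timeout_s="$1"
  shift
  deadline=$(( $(date +%s) + timeout_s ))
  until "$@" >/dev/null 2>&1; do
    if [ "$(date +%s)" -ge "$deadline" ]; then
      return 1
    fi
    sleep 1
  done
}
```

Running `wait_until 120 kubectl get servicemonitors --all-namespaces` after the first create and before the second would block until the ServiceMonitor CRD is being served.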

3. Check the result

kubectl get all -n monitoring

4. Expose the three services through ingress-nginx

Deploy ingress-nginx

cd /root/k8s/yaml

mkdir ingress-nginx

cd ingress-nginx

ingress-nginx.yaml

apiVersion: v1

kind: Namespace

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

name: ingress-nginx

---

apiVersion: v1

automountServiceAccountToken: true

kind: ServiceAccount

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx

namespace: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- namespaces

verbs:

- get

- apiGroups:

- ""

resources:

- configmaps

- pods

- secrets

- endpoints

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io

resources:

- ingressclasses

verbs:

- get

- list

- watch

- apiGroups:

- ""

resourceNames:

- ingress-controller-leader

resources:

- configmaps

verbs:

- get

- update

- apiGroups:

- ""

resources:

- configmaps

verbs:

- create

- apiGroups:

- coordination.k8s.io

resourceNames:

- ingress-controller-leader

resources:

- leases

verbs:

- get

- update

- apiGroups:

- coordination.k8s.io

resources:

- leases

verbs:

- create

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission

namespace: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- secrets

verbs:

- get

- create

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

- namespaces

verbs:

- list

- watch

- apiGroups:

- coordination.k8s.io

resources:

- leases

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

- apiGroups:

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io

resources:

- ingressclasses

verbs:

- get

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission

rules:

- apiGroups:

- admissionregistration.k8s.io

resources:

- validatingwebhookconfigurations

verbs:

- get

- update

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: v1

data:

allow-snippet-annotations: "true"

kind: ConfigMap

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-controller

namespace: ingress-nginx

---

apiVersion: v1

kind: Service

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

ipFamilies:

- IPv4

ipFamilyPolicy: SingleStack

ports:

- appProtocol: http

name: http

port: 80

protocol: TCP

targetPort: http

- appProtocol: https

name: https

port: 443

protocol: TCP

targetPort: https

selector:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

type: NodePort

---

apiVersion: v1

kind: Service

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-controller-admission

namespace: ingress-nginx

spec:

ports:

- appProtocol: https

name: https-webhook

port: 443

targetPort: webhook

selector:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

type: ClusterIP

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

minReadySeconds: 0

revisionHistoryLimit: 10

selector:

matchLabels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

template:

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

spec:

hostNetwork: true

containers:

- args:

- /nginx-ingress-controller

- --election-id=ingress-controller-leader

- --controller-class=k8s.io/ingress-nginx

- --ingress-class=nginx

- --configmap=$(POD_NAMESPACE)/ingress-nginx-controller

- --validating-webhook=:8443

- --validating-webhook-certificate=/usr/local/certificates/cert

- --validating-webhook-key=/usr/local/certificates/key

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: LD_PRELOAD

value: /usr/local/lib/libmimalloc.so

image: dyrnq/ingress-nginx-controller:v1.3.1

imagePullPolicy: IfNotPresent

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

livenessProbe:

failureThreshold: 5

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

name: controller

ports:

- containerPort: 80

name: http

protocol: TCP

- containerPort: 443

name: https

protocol: TCP

- containerPort: 8443

name: webhook

protocol: TCP

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

resources:

requests:

cpu: 100m

memory: 90Mi

securityContext:

allowPrivilegeEscalation: true

capabilities:

add:

- NET_BIND_SERVICE

drop:

- ALL

runAsUser: 101

volumeMounts:

- mountPath: /usr/local/certificates/

name: webhook-cert

readOnly: true

dnsPolicy: ClusterFirst

nodeSelector:

kubernetes.io/os: linux

serviceAccountName: ingress-nginx

terminationGracePeriodSeconds: 300

volumes:

- name: webhook-cert

secret:

secretName: ingress-nginx-admission

---

apiVersion: batch/v1

kind: Job

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission-create

namespace: ingress-nginx

spec:

template:

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission-create

spec:

containers:

- args:

- create

- --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc

- --namespace=$(POD_NAMESPACE)

- --secret-name=ingress-nginx-admission

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

image: dyrnq/kube-webhook-certgen:v1.3.0

imagePullPolicy: IfNotPresent

name: create

securityContext:

allowPrivilegeEscalation: false

nodeSelector:

kubernetes.io/os: linux

restartPolicy: OnFailure

securityContext:

fsGroup: 2000

runAsNonRoot: true

runAsUser: 2000

serviceAccountName: ingress-nginx-admission

---

apiVersion: batch/v1

kind: Job

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission-patch

namespace: ingress-nginx

spec:

template:

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission-patch

spec:

containers:

- args:

- patch

- --webhook-name=ingress-nginx-admission

- --namespace=$(POD_NAMESPACE)

- --patch-mutating=false

- --secret-name=ingress-nginx-admission

- --patch-failure-policy=Fail

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

image: dyrnq/kube-webhook-certgen:v1.3.0

imagePullPolicy: IfNotPresent

name: patch

securityContext:

allowPrivilegeEscalation: false

nodeSelector:

kubernetes.io/os: linux

restartPolicy: OnFailure

securityContext:

fsGroup: 2000

runAsNonRoot: true

runAsUser: 2000

serviceAccountName: ingress-nginx-admission

---

apiVersion: networking.k8s.io/v1

kind: IngressClass

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: nginx

spec:

controller: k8s.io/ingress-nginx

---

apiVersion: admissionregistration.k8s.io/v1

kind: ValidatingWebhookConfiguration

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission

webhooks:

- admissionReviewVersions:

- v1

clientConfig:

service:

name: ingress-nginx-controller-admission

namespace: ingress-nginx

path: /networking/v1/ingresses

failurePolicy: Fail

matchPolicy: Equivalent

name: validate.nginx.ingress.kubernetes.io

rules:

- apiGroups:

- networking.k8s.io

apiVersions:

- v1

operations:

- CREATE

- UPDATE

resources:

- ingresses

sideEffects: None

Create ingress-nginx

kubectl create -f ingress-nginx.yaml

Check

kubectl get all -n ingress-nginx

5. Create an Ingress that proxies the three services to the public network

domain.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: grafana
  namespace: monitoring
spec:
  ingressClassName: nginx
  rules:
  - host: grafana.zhubaoyi.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: grafana
            port:
              number: 3000
  - host: prometheus.zhubaoyi.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: prometheus-k8s
            port:
              number: 9090
  - host: alertmanager.zhubaoyi.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: alertmanager-main
            port:
              number: 9093

Create

kubectl create -f domain.yaml

Check

[root@worker1 prometheus]# kubectl get ingress -n monitoring

NAME CLASS HOSTS ADDRESS PORTS AGE

grafana nginx grafana.zhubaoyi.com,prometheus.zhubaoyi.com,alertmanager.zhubaoyi.com 11.20.28.59 80 3m47s

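Once the three hostnames resolve to the ingress address, all three services can be opened in a browser. What remains is to configure and use them step by step.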
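If the three hostnames are not in DNS yet, they can be mapped to the ingress address locally for testing. The sketch below only prints hosts-file lines; the function name `hosts_entries` is hypothetical, and the IP in the usage note is the ADDRESS value from the `kubectl get ingress` output above.

```shell
# hosts_entries IP HOST [HOST...]
# Prints one "IP HOST" line per hostname, in /etc/hosts format.
hosts_entries() {
  ip="$1"
  shift
  for host in "$@"; do
    printf '%s %s\n' "$ip" "$host"
  done
}
```

For example, `hosts_entries 11.20.28.59 grafana.zhubaoyi.com prometheus.zhubaoyi.com alertmanager.zhubaoyi.com` prints three lines that can be appended to /etc/hosts on the client machine. A quick check without touching the hosts file is `curl -H 'Host: grafana.zhubaoyi.com' http://11.20.28.59/`.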

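Monitoring infrastructure services and business applications with Prometheus

1. The general flow for monitoring a service on k8s

Expose a metrics endpoint for the service -> create the corresponding Endpoints object (reachable directly from inside the k8s cluster) -> create the corresponding Service -> create the corresponding ServiceMonitor -> Prometheus then creates the matching scrape target automatically

There are two cases. Services that expose a metrics endpoint themselves only need the remaining objects created, and their metrics can then be scraped. Services without a metrics endpoint need an exporter deployed first, and the exporter exposes the corresponding metrics. Common services such as MySQL and Redis usually have ready-made exporters, official or community-maintained, that can be used as-is. For a company's own business applications, the developers have to provide the metrics themselves so that they can be monitored.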
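Whichever case applies, it helps to confirm what a metrics endpoint actually returns before wiring up the Service and ServiceMonitor. The sketch below lists the distinct metric names found in Prometheus text-format output read from standard input; the function name `metric_names` is made up for this example.

```shell
# metric_names
# Reads Prometheus text exposition format on stdin and prints the unique
# metric names: comment lines (# HELP, # TYPE) and blank lines are dropped,
# then everything from the first "{" or space onward is cut off.
metric_names() {
  grep -v '^#' | grep -v '^[[:space:]]*$' | sed -E 's/[{ ].*$//' | sort -u
}
```

A typical use would be `curl -s http://HOST:PORT/metrics | metric_names`, where HOST and PORT are placeholders for the service or exporter being checked.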

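2. Monitoring a service without a metrics endpoint (Kafka)

a. Install Kafka

This step is skipped here. For details, see the previous post on deploying log collection on k8s [https://blog.csdn.net/ss810540895/article/details/128476758?spm=1001.2014.3001.5501]

b. Deploy kafka-exporter-deploy, kafka-service-exporter and kafka-servicemonitor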

kafka-deploy-exporter.yaml

# Server-populated metadata (uid, resourceVersion, selfLink, creationTimestamp,
# generation, revision annotation) is omitted; kubectl create rejects objects
# that already carry a resourceVersion.
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: kafka-exporter
  name: kafka-exporter
  namespace: monitoring
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: kafka-exporter
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: kafka-exporter
    spec:
      containers:
      - args:
        # Address of the Kafka broker to scrape
        - --kafka.server=192.168.255.96:9092
        env:
        - name: TZ
          value: Asia/Shanghai
        - name: LANG
          value: C.UTF-8
        image: danielqsj/kafka-exporter:latest
        imagePullPolicy: IfNotPresent
        lifecycle: {}
        name: kafka-exporter
        ports:
        - containerPort: 9308
          name: web
          protocol: TCP
        resources:
          limits:
            cpu: 249m
            memory: 318Mi
          requests:
            cpu: 10m
            memory: 10Mi
        securityContext:
          allowPrivilegeEscalation: false
          privileged: false
          readOnlyRootFilesystem: false
          runAsNonRoot: false
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /usr/share/zoneinfo/Asia/Shanghai
          name: tz-config
        - mountPath: /etc/localtime
          name: tz-config
        - mountPath: /etc/timezone
          name: timezone
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      volumes:
      - hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai
          type: ""
        name: tz-config
      - hostPath:
          path: /etc/timezone
          type: ""
        name: timezone

kafka-service-exporter.yaml

# Server-populated metadata and the empty clusterIP field are omitted.
apiVersion: v1
kind: Service
metadata:
  labels:
    app: kafka-exporter
  name: kafka-exporter
  namespace: monitoring
spec:
  ports:
  # The ServiceMonitor refers to this port by name
  - name: container-1-web-1
    port: 9308
    protocol: TCP
    targetPort: 9308
  selector:
    app: kafka-exporter
  sessionAffinity: None
  type: ClusterIP

kafka-servicemonitor.yaml

apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    k8s-app: kafka-exporter
  name: kafka-exporter
  namespace: monitoring
spec:
  endpoints:
  - interval: 30s
    port: container-1-web-1
  namespaceSelector:
    matchNames:
    - monitoring
  selector:
    matchLabels:
      app: kafka-exporter

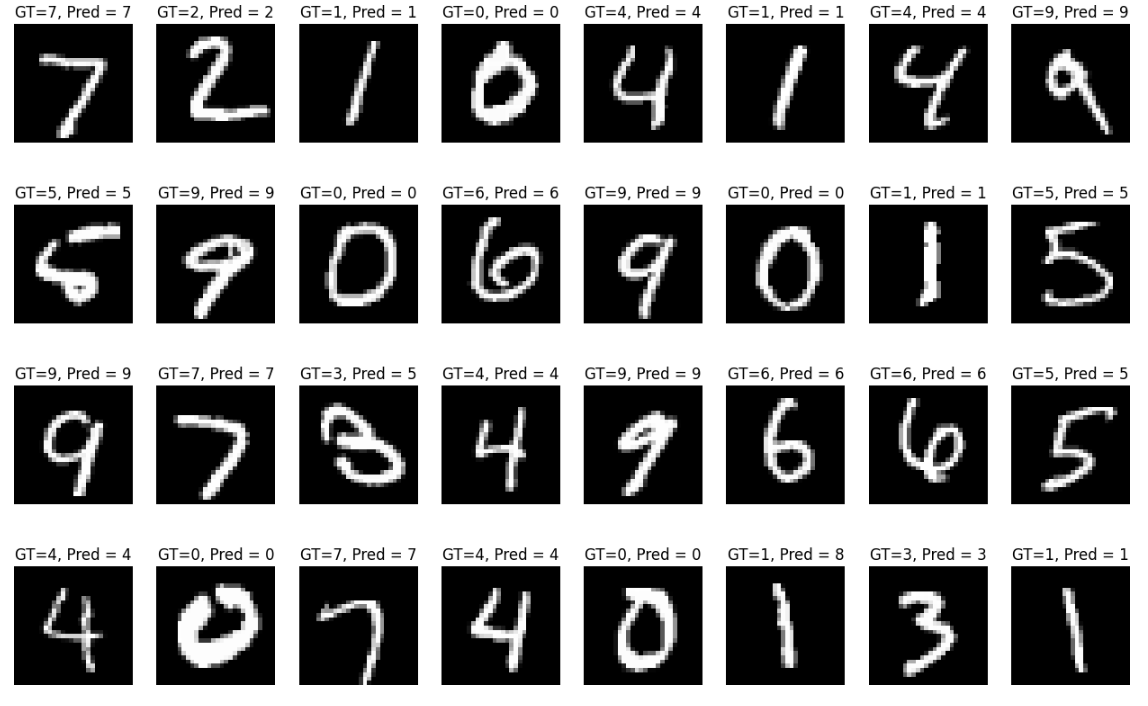

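3. Check the targets in Prometheus

The new target is already listed

Check the corresponding metrics

4. Add a dashboard in Grafana

After adding the dashboard I could not see any data. It turned out that the queries in this dashboard did not match the metric names that Prometheus actually returns.

Write the metric queries you need against the metrics that are actually available.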

Deploying blackbox monitoring

Required files

[root@worker1 blackbox_exporter]# ll

total 20

-rw-r--r-- 1 root root 755 Dec 31 13:15 additional-scrape-configs.yaml

-rw-r--r-- 1 root root 917 Dec 31 11:13 blackbox_exporter_cm.yaml

-rw-r--r-- 1 root root 2315 Dec 31 11:15 blackbox_exporter_deploy.yaml

-rw-r--r-- 1 root root 491 Dec 31 11:16 blackbox_exporter_service.yaml

-rw-r--r-- 1 root root 465 Dec 31 13:13 prometheus-additional.yaml

1. Create the secret

prometheus-additional.yaml

- job_name: 'blackbox'
  metrics_path: /probe
  params:
    module: [http_2xx]  # Look for a HTTP 200 response.
  static_configs:
  - targets:
    - https://www.baidu.com/
  relabel_configs:
  - source_labels: [__address__]
    target_label: __param_target
  - source_labels: [__param_target]
    target_label: instance
  - source_labels: [instance]
    target_label: target
  - target_label: __address__
    replacement: blackbox-exporter:9115

kubectl create secret generic additional-scrape-configs --from-file=prometheus-additional.yaml --dry-run=client -o yaml > additional-scrape-configs.yaml

kubectl create -f additional-scrape-configs.yaml -n monitoring
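The relabel rules in prometheus-additional.yaml move the listed target into the `target` URL parameter and point the scrape address at the exporter, so Prometheus ends up requesting `/probe` on blackbox-exporter:9115 rather than contacting the site directly. The sketch below builds the equivalent URL by hand so that a probe can be tried manually. The function name `probe_url` is hypothetical, and it does not URL-encode the target, which is fine only for simple URLs like the one used here.

```shell
# probe_url EXPORTER_ADDR MODULE TARGET
# Prints the blackbox exporter URL that probes TARGET with MODULE.
# Note: TARGET is inserted as-is, without URL encoding.
probe_url() {
  exporter="$1"
  module="$2"
  target="$3"
  printf 'http://%s/probe?module=%s&target=%s\n' "$exporter" "$module" "$target"
}
```

From a pod in the monitoring namespace, `curl -s "$(probe_url blackbox-exporter:9115 http_2xx https://www.baidu.com/)"` should return the probe metrics once the exporter from step 3 is running; `probe_success 1` in that output means the check passed.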

2. Modify the Prometheus custom resource

cd /soft/src/kube-prometheus/manifests/

vim prometheus-prometheus.yaml

Add the following lines at the end of the file, indented so that they sit under spec:

  additionalScrapeConfigs:
    name: additional-scrape-configs
    key: prometheus-additional.yaml

Then apply the change

kubectl replace -f prometheus-prometheus.yaml -n monitoring

3. Create the ConfigMap, Deployment and Service

blackbox_exporter_cm.yaml

apiVersion: v1
data:
  blackbox.yml: |-
    modules:
      http_2xx:
        prober: http
      http_post_2xx:
        prober: http
        http:
          method: POST
      tcp_connect:
        prober: tcp
      pop3s_banner:
        prober: tcp
        tcp:
          query_response:
          - expect: "^+OK"
          tls: true
          tls_config:
            insecure_skip_verify: false
      ssh_banner:
        prober: tcp
        tcp:
          query_response:
          - expect: "^SSH-2.0-"
      irc_banner:
        prober: tcp
        tcp:
          query_response:
          - send: "NICK prober"
          - send: "USER prober prober prober :prober"
          - expect: "PING :([^ ]+)"
            send: "PONG ${1}"
          - expect: "^:[^ ]+ 001"
      icmp:
        prober: icmp
kind: ConfigMap
metadata:
  name: blackbox-conf
  namespace: monitoring

blackbox_exporter_deploy.yaml

# Server-populated metadata (uid, resourceVersion, selfLink, creationTimestamp,
# generation, revision annotation) is omitted; kubectl create rejects objects
# that already carry a resourceVersion.
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: blackbox-exporter
  name: blackbox-exporter
  namespace: monitoring
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: blackbox-exporter
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: blackbox-exporter
    spec:
      containers:
      - args:
        # blackbox.yml comes from the blackbox-conf ConfigMap mounted at /mnt
        - --config.file=/mnt/blackbox.yml
        env:
        - name: TZ
          value: Asia/Shanghai
        - name: LANG
          value: C.UTF-8
        image: prom/blackbox-exporter:master
        imagePullPolicy: IfNotPresent
        lifecycle: {}
        name: blackbox-exporter
        ports:
        - containerPort: 9115
          name: web
          protocol: TCP
        resources:
          limits:
            cpu: 324m
            memory: 443Mi
          requests:
            cpu: 10m
            memory: 10Mi
        securityContext:
          allowPrivilegeEscalation: false
          privileged: false
          readOnlyRootFilesystem: false
          runAsNonRoot: false
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /usr/share/zoneinfo/Asia/Shanghai
          name: tz-config
        - mountPath: /etc/localtime
          name: tz-config
        - mountPath: /etc/timezone
          name: timezone
        - mountPath: /mnt
          name: config
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      volumes:
      - hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai
          type: ""
        name: tz-config
      - hostPath:
          path: /etc/timezone
          type: ""
        name: timezone
      - configMap:
          defaultMode: 420
          name: blackbox-conf
        name: config

blackbox_exporter_service.yaml

# Server-populated metadata is omitted.
apiVersion: v1
kind: Service
metadata:
  labels:
    app: blackbox-exporter
  name: blackbox-exporter
  namespace: monitoring
spec:
  ports:
  - name: container-1-web-1
    port: 9115
    protocol: TCP
    targetPort: 9115
  selector:
    app: blackbox-exporter
  sessionAffinity: None
  type: ClusterIP

Then apply them

kubectl create -f blackbox_exporter_cm.yaml

kubectl create -f blackbox_exporter_deploy.yaml

kubectl create -f blackbox_exporter_service.yaml

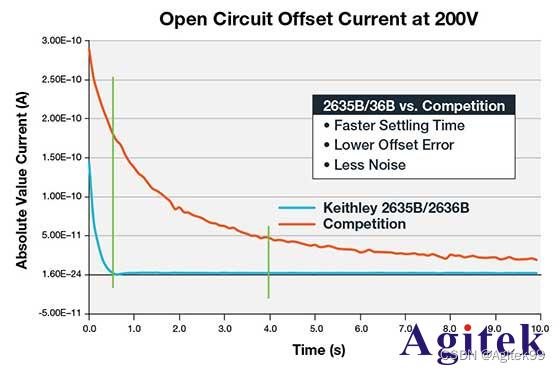

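4. Check in Prometheus

The corresponding metrics are visible

The target now shows up as well

5. Add the corresponding Grafana dashboard

Automatic discovery is still missing here; it will be added later

Adding email alerts

1. Modify alertmanager-secret.yaml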

alertmanager-secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: alertmanager-main
  namespace: monitoring
stringData:
  alertmanager.yaml: |-
    "global":
      "resolve_timeout": "5m"
      smtp_from: "8100895@qq.com"
      smtp_smarthost: "smtp.qq.com:465"
      smtp_hello: "163.com"
      smtp_auth_username: "8100895@qq.com"
      smtp_auth_password: "bydkomubmbejf"
      smtp_require_tls: false
      # wechat
      # wechat_api_url: 'https://qyapi.weixin.qq.com/cgi-bin/'
      # wechat_api_secret: 'ZZQt0Ue9mtplH9u1g8PhxR_RxEnRu512CQtmBn6R2x0'
      # wechat_api_corp_id: 'wwef86a30130f04f2b'
    "inhibit_rules":
    - "equal":
      - "namespace"
      - "alertname"
      "source_match":
        "severity": "critical"
      "target_match_re":
        "severity": "warning|info"
    - "equal":
      - "namespace"
      - "alertname"
      "source_match":
        "severity": "warning"
      "target_match_re":
        "severity": "info"
    "receivers":
    - "name": "Default"
      "email_configs":
      - to: "8100895@qq.com"
        send_resolved: true
    # - "name": "Watchdog"
    #   "email_configs":
    #   - to: "kubernetes_guide@163.com"
    #     send_resolved: true
    # - "name": "Critical"
    #   "email_configs":
    #   - to: "kubernetes_guide@163.com"
    #     send_resolved: true
    # - name: 'wechat'
    #   wechat_configs:
    #   - send_resolved: true
    #     to_tag: '1'
    #     agent_id: '1000003'
    # - "name": "Default"
    # The routes below reference Watchdog and Critical, so both receivers must
    # be defined; without notification settings they send nothing.
    - "name": "Watchdog"
    - "name": "Critical"
    "route":
      "group_by":
      - "namespace"
      "group_interval": "5m"
      "group_wait": "30s"
      "receiver": "Default"
      "repeat_interval": "12h"
      "routes":
      - "match":
          "alertname": "Watchdog"
        "receiver": "Watchdog"
      - "match":
          "severity": "critical"
        "receiver": "Critical"
type: Opaque

kubectl delete -f alertmanager-secret.yaml

kubectl create -f alertmanager-secret.yaml
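Alertmanager refuses a configuration in which a route names a receiver that is not defined, so it is worth checking the file whenever receivers are commented in or out. The following is a rough sketch of such a check using awk. It only understands the one-key-per-line style used in alertmanager-secret.yaml and does not handle trailing inline comments; the function name `undefined_receivers` is made up for this example.

```shell
# undefined_receivers FILE
# Prints every receiver name that a route references but that has no
# matching `- "name": ...` entry. Commented-out lines are ignored.
undefined_receivers() {
  awk '
    /^[ \t]*#/ { next }                       # skip commented-out lines
    {
      line = $0
      gsub(/[" \t]/, "", line)                # drop quotes and whitespace
      if (line ~ /^-name:/) {
        sub(/^-name:/, "", line); defined[line] = 1
      } else if (line ~ /^-?receiver:/) {
        sub(/^-?receiver:/, "", line); used[line] = 1
      }
    }
    END { for (r in used) if (!(r in defined)) print r }
  ' "$1" | sort
}
```

Running `undefined_receivers alertmanager-secret.yaml` before recreating the secret should print nothing; any name it prints needs a receiver entry or a corrected route.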

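2. Alerts will then start arriving

Everything else was already set up when Prometheus was deployed above

The default alert email looks rather plain, though; the alert template can be customized later

Alert templates, automatic discovery and business monitoring will be added later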