深度学习实验五:循环神经网络编程

本次实验练习使用torch.nn中的类设计一个循环神经网络进行MNIST图像分类。

在本次实验中,你要设计一个CNN,用于将 28 × 28 28 \times 28 28×28的MNIST图像转换为 M × M × D M\times M\times D M×M×D的特征图,将该特征图看作是一个长度为 M × M M\times M M×M的特征序列,序列中每一个特征向量的大小为 D D D,然后使用RNN对该序列分类。

name = '杨宇海'#填写你的姓名

sid = 'B02014152'#填写你的学号

print('姓名:%s, 学号:%s'%(name, sid))

姓名:杨宇海, 学号:B02014152

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

import numpy as np

import matplotlib.pyplot as plt

1. 准备数据

from torchvision import datasets,transforms

#定义变换

#一行代码,提示:transforms.Compose()函数

transform = transforms.Compose([

transforms.ToTensor(),

transforms.Normalize((0.5,), (0.5,))

])

data_path = '../data/'

mnist_train = datasets.MNIST(data_path,download=True,train = True,transform = transform)

mnist_test = datasets.MNIST(data_path,download=True,train = False,transform = transform)

mnist_train

Dataset MNIST

Number of datapoints: 60000

Root location: ../data/

Split: Train

StandardTransform

Transform: Compose(

ToTensor()

Normalize(mean=(0.5,), std=(0.5,))

)

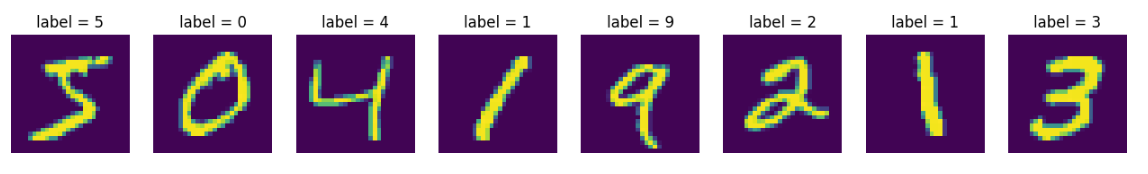

#显示图像样本

mnist_iter = iter(mnist_train)

plt.figure(figsize = [16,10])

for i in range(8):

im,label = next(mnist_iter)

im = (im.permute((1,2,0))+1)/2

plt.subplot(1,8,i+1)

plt.imshow(im)

plt.title('label = %d'%(label))

plt.axis('off')

plt.show()

2. 设计CNN类

从torch.nn.Module派生一个子类CNN,表示一个卷积神经网络;用于将 MNIST 图像转换为一个𝑀×𝑀×𝐷 的特征图

#在下面添加代码,实现一个CNN,用于提取图像特征

class CNN(torch.nn.Module):

def __init__(self):

super().__init__()

self.convd1 = nn.Conv2d(in_channels = 1, out_channels = 16, kernel_size = 2, padding = 0)

self.pool1 = torch.nn.MaxPool2d(kernel_size= 2, stride= 1)

self.convd2 = nn.Conv2d(in_channels = 16, out_channels = 32, kernel_size = 2, padding = 0)

self.pool2 = torch.nn.MaxPool2d(kernel_size= 2, stride= 2)

self.convd3 = nn.Conv2d(in_channels = 32, out_channels = 64, kernel_size = 2, padding = 0)

self.pool3 = torch.nn.MaxPool2d(kernel_size= 2, stride= 1)

self.convd4 = nn.Conv2d(in_channels = 64, out_channels = 128, kernel_size = 2, padding = 0)

self.pool4 = torch.nn.MaxPool2d(kernel_size= 2, stride= 2)

def forward(self, x):

x.cuda()

batch_size = x.size(0)

z1 = self.pool1(self.convd1(x))

a1 = F.relu(z1)

z2 = self.pool2(self.convd2(a1))

a2 = F.relu(z2)

z3 = self.pool3(self.convd3(a2))

a3 = F.relu(z3)

z4 = self.pool4(self.convd4(a3))

a4 = F.relu(z4)

return a4

#测试CNN类

X = torch.rand((5,1,28,28),dtype = torch.float32)

net = CNN()

Y = net(X)

print(Y.shape)

torch.Size([5, 128, 4, 4])

#输出模型

print(net)

CNN(

(convd1): Conv2d(1, 16, kernel_size=(2, 2), stride=(1, 1))

(pool1): MaxPool2d(kernel_size=2, stride=1, padding=0, dilation=1, ceil_mode=False)

(convd2): Conv2d(16, 32, kernel_size=(2, 2), stride=(1, 1))

(pool2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(convd3): Conv2d(32, 64, kernel_size=(2, 2), stride=(1, 1))

(pool3): MaxPool2d(kernel_size=2, stride=1, padding=0, dilation=1, ceil_mode=False)

(convd4): Conv2d(64, 128, kernel_size=(2, 2), stride=(1, 1))

(pool4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

!pip install -i https://pypi.tuna.tsinghua.edu.cn/simple torchsummary

from torchsummary import summary

# summary(net, input_size = (1,28,28))

summary(net.cuda(), input_size = (1,28,28))

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [-1, 16, 27, 27] 80

MaxPool2d-2 [-1, 16, 26, 26] 0

Conv2d-3 [-1, 32, 25, 25] 2,080

MaxPool2d-4 [-1, 32, 12, 12] 0

Conv2d-5 [-1, 64, 11, 11] 8,256

MaxPool2d-6 [-1, 64, 10, 10] 0

Conv2d-7 [-1, 128, 9, 9] 32,896

MaxPool2d-8 [-1, 128, 4, 4] 0

================================================================

Total params: 43,312

Trainable params: 43,312

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.00

Forward/backward pass size (MB): 0.56

Params size (MB): 0.17

Estimated Total Size (MB): 0.73

----------------------------------------------------------------

3. 设计分类模型

在分类模型中,使用上面定义的CNN作为特征提取器,用LSTM循环网络构造分类器。你的模型中应该包含一个CNN和一个RNN。

#在下面添加代码,实现一个Classifier类,用CNN和LSTM循环网络构造分类器

class Classifier(nn.Module):

def __init__(self):

super().__init__()

self.cnn = CNN()

print(self.cnn)

self.rnn = nn.LSTM(input_size = 128, hidden_size = 16, batch_first = True)

self.MLP = nn.Sequential(

# nn.Linear(in_features = 16, out_features = 128),

# nn.ReLU(),

# nn.Linear(in_features = 128, out_features = 10)

nn.Linear(in_features = 16, out_features = 10)

)

def forward(self, x):

batch_size = x.size(0)

# cnn

x = self.cnn(x)

# 数据维度处理

x = x.reshape([batch_size, 128, -1]) # B * 128 * 16

x = x.permute(0, 2, 1)

# print(x.shape) # B * 16 * 128

# rnn

h, c = self.rnn(x) # h [N * L * hitsize]

# print(h.squeeze().shape)

# MLP

logit = self.MLP(h[:,-1,:])

return logit

#测试上面的类

model = Classifier()#一行代码

X = torch.randn(5,1,28,28)

Y = model(X)

print(Y.shape)

CNN(

(convd1): Conv2d(1, 16, kernel_size=(2, 2), stride=(1, 1))

(pool1): MaxPool2d(kernel_size=2, stride=1, padding=0, dilation=1, ceil_mode=False)

(convd2): Conv2d(16, 32, kernel_size=(2, 2), stride=(1, 1))

(pool2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(convd3): Conv2d(32, 64, kernel_size=(2, 2), stride=(1, 1))

(pool3): MaxPool2d(kernel_size=2, stride=1, padding=0, dilation=1, ceil_mode=False)

(convd4): Conv2d(64, 128, kernel_size=(2, 2), stride=(1, 1))

(pool4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

torch.Size([5, 10])

4.训练模型

4.1 第一步,构造加载器,用于加载上面定义的数据集

train_loader = torch.utils.data.DataLoader(mnist_train, batch_size = 32, shuffle = True)

test_loader = torch.utils.data.DataLoader(mnist_test, batch_size = 32, shuffle = False)

imgs,labels = next(iter(train_loader))

imgs.shape

torch.Size([32, 1, 28, 28])

labels.shape

torch.Size([32])

4.2 第二步,训练模型

注意:训练卷积神经网络时,网络的输入是四维张量,尺寸为 N × C × H × W N\times C \times H \times W N×C×H×W,分别表示张量

#添加代码,完成下面的训练函数

def Train(model, loader, epochs, lr = 0.1):

'''

model:模型对象

loader:数据加载器

'''

epsilon = 1e-6

model.train()

optimizer = optim.SGD(params=model.parameters(), lr=lr)

loss = nn.CrossEntropyLoss() # 损失函数

loss0 = 0

for epoch in range(epochs):

for iter, data in enumerate(loader):

features, labels = data

optimizer.zero_grad() # 梯度清空

logits = model(features)

loss1 = loss(logits, labels)

if(abs(loss1.item() - loss0) < epsilon):

break

loss0 = loss1.item()

if iter%100==0:

print('epoch %d, iter %d, loss = %f\n'%(epoch,iter,loss0))

# 反向传播

loss1.backward()

# 梯度下降

optimizer.step()

return model

#构造并训练模型

model = Classifier()

model = Train(model, test_loader, 10, 0.1)

CNN(

(convd1): Conv2d(1, 16, kernel_size=(2, 2), stride=(1, 1))

(pool1): MaxPool2d(kernel_size=2, stride=1, padding=0, dilation=1, ceil_mode=False)

(convd2): Conv2d(16, 32, kernel_size=(2, 2), stride=(1, 1))

(pool2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(convd3): Conv2d(32, 64, kernel_size=(2, 2), stride=(1, 1))

(pool3): MaxPool2d(kernel_size=2, stride=1, padding=0, dilation=1, ceil_mode=False)

(convd4): Conv2d(64, 128, kernel_size=(2, 2), stride=(1, 1))

(pool4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

epoch 0, iter 0, loss = 2.301467

epoch 0, iter 100, loss = 2.279888

epoch 0, iter 200, loss = 2.084048

epoch 0, iter 300, loss = 1.706302

epoch 1, iter 0, loss = 1.520445

epoch 1, iter 100, loss = 1.137834

epoch 1, iter 200, loss = 0.864159

epoch 1, iter 300, loss = 0.567380

epoch 2, iter 0, loss = 0.723860

epoch 2, iter 100, loss = 0.599388

epoch 2, iter 200, loss = 0.428438

epoch 2, iter 300, loss = 0.232027

epoch 3, iter 0, loss = 0.509207

epoch 3, iter 100, loss = 0.344112

epoch 3, iter 200, loss = 0.306382

epoch 3, iter 300, loss = 0.150422

epoch 4, iter 0, loss = 0.357463

epoch 4, iter 100, loss = 0.193024

epoch 4, iter 200, loss = 0.130213

epoch 4, iter 300, loss = 0.079743

epoch 5, iter 0, loss = 0.460217

epoch 5, iter 100, loss = 0.201989

epoch 5, iter 200, loss = 0.095881

epoch 5, iter 300, loss = 0.049980

epoch 6, iter 0, loss = 0.552857

epoch 6, iter 100, loss = 0.206212

epoch 6, iter 200, loss = 0.068986

epoch 6, iter 300, loss = 0.038401

epoch 7, iter 0, loss = 0.481544

epoch 7, iter 100, loss = 0.123809

epoch 7, iter 200, loss = 0.069442

epoch 7, iter 300, loss = 0.030164

epoch 8, iter 0, loss = 0.306035

epoch 8, iter 100, loss = 0.083185

epoch 8, iter 200, loss = 0.052271

epoch 8, iter 300, loss = 0.038275

epoch 9, iter 0, loss = 0.275993

epoch 9, iter 100, loss = 0.108630

epoch 9, iter 200, loss = 0.052308

epoch 9, iter 300, loss = 0.026095

4.3 第三步,测试模型

#编写模型测试过程

def Evaluate(model, loader):

model.eval()

correct = 0

counts = 0

for imgs, labels in loader:

logits = model(imgs) # 各个概率

yhat = logits.argmax(dim = 1) # 最大概率, 预测结果

correct = correct + (yhat==labels).sum().item() # 预测正确

counts = counts + imgs.size(0) # 总数

accuracy = correct / counts # 精度

return accuracy

acc = Evaluate(model,test_loader)

print('Accuracy = %f'%(acc))

Accuracy = 0.961000

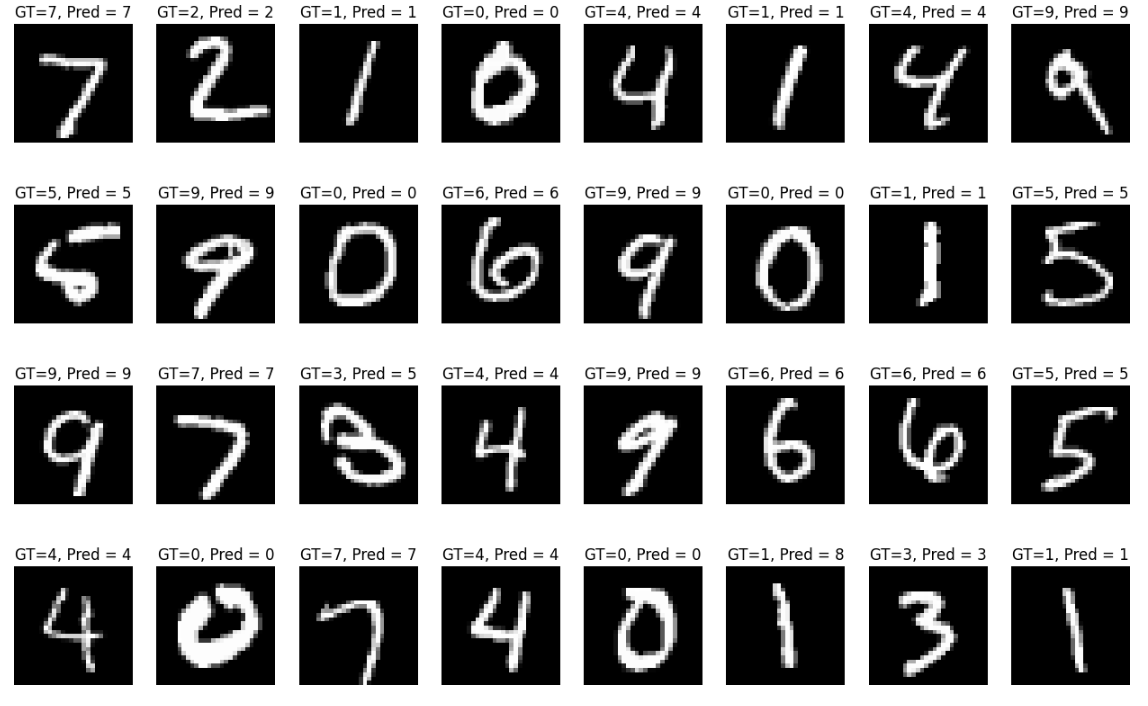

imgs,labels = next(iter(test_loader))

logits = model(imgs)

yhat = logits.argmax(dim = 1)

imgs = imgs.permute((0,2,3,1))

plt.figure(figsize = (16,10))

for i in range(imgs.size(0)):

plt.subplot(4,8,i+1)

plt.imshow(imgs[i]/2+0.5,cmap = 'gray')

plt.axis('off')

plt.title('GT=%d, Pred = %d'%(labels[i],yhat[i]))

plt.show()