1 Planning & Prerequisites

- ZooKeeper and HDFS are already deployed and running normally

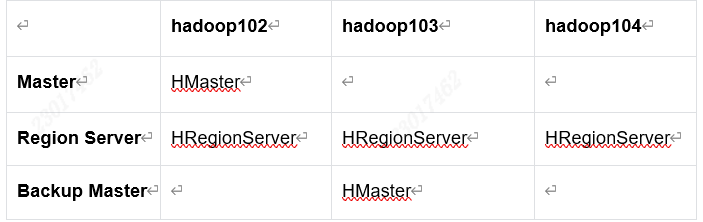

- The cluster plan is as follows

2 Extract and Rename

cd /opt/software/

tar -zxvf hbase-2.4.11-bin.tar.gz -C /opt/module/

cd /opt/module

mv hbase-2.4.11/ hbase
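
The archive is named `hbase-2.4.11-bin.tar.gz`, but it unpacks to `hbase-2.4.11`, which is why the `mv` above drops the `-bin` part. A minimal sketch of that naming rule follows; `hbase_dir_from_tarball` is a hypothetical helper, and it assumes the `<name>-bin.tar.gz` pattern seen in this post.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive the directory name an HBase binary tarball
# unpacks to. Assumes the "<name>-bin.tar.gz" naming used in this post.
hbase_dir_from_tarball() {
    local tarball base
    tarball=$(basename "$1")   # drop any leading path
    base=${tarball%.tar.gz}    # strip the archive extension
    echo "${base%-bin}"        # strip the "-bin" suffix, if present
}
```

It could be used as `mv "/opt/module/$(hbase_dir_from_tarball /opt/software/hbase-2.4.11-bin.tar.gz)" /opt/module/hbase`, so the rename keeps working if the version number changes.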

3 修改配置文件

3.1 hbase-env.sh

#!/usr/bin/env bash

#

#/**

# * Licensed to the Apache Software Foundation (ASF) under one

# * or more contributor license agreements. See the NOTICE file

# * distributed with this work for additional information

# * regarding copyright ownership. The ASF licenses this file

# * to you under the Apache License, Version 2.0 (the

# * "License"); you may not use this file except in compliance

# * with the License. You may obtain a copy of the License at

# *

# * http://www.apache.org/licenses/LICENSE-2.0

# *

# * Unless required by applicable law or agreed to in writing, software

# * distributed under the License is distributed on an "AS IS" BASIS,

# * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# * See the License for the specific language governing permissions and

# * limitations under the License.

# */

# Set environment variables here.

# This script sets variables multiple times over the course of starting an hbase process,

# so try to keep things idempotent unless you want to take an even deeper look

# into the startup scripts (bin/hbase, etc.)

# The java implementation to use. Java 1.8+ required.

# export JAVA_HOME=/usr/java/jdk1.8.0/

# Extra Java CLASSPATH elements. Optional.

# export HBASE_CLASSPATH=

# The maximum amount of heap to use. Default is left to JVM default.

# export HBASE_HEAPSIZE=1G

# Uncomment below if you intend to use off heap cache. For example, to allocate 8G of

# offheap, set the value to "8G".

# export HBASE_OFFHEAPSIZE=1G

# Extra Java runtime options.

# Default settings are applied according to the detected JVM version. Override these default

# settings by specifying a value here. For more details on possible settings,

# see http://hbase.apache.org/book.html#_jvm_tuning

# export HBASE_OPTS

# Uncomment one of the below three options to enable java garbage collection logging for the server-side processes.

# This enables basic gc logging to the .out file.

# export SERVER_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps"

# This enables basic gc logging to its own file.

# If FILE-PATH is not replaced, the log file(.gc) would still be generated in the HBASE_LOG_DIR .

# export SERVER_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:<FILE-PATH>"

# This enables basic GC logging to its own file with automatic log rolling. Only applies to jdk 1.6.0_34+ and 1.7.0_2+.

# If FILE-PATH is not replaced, the log file(.gc) would still be generated in the HBASE_LOG_DIR .

# export SERVER_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:<FILE-PATH> -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=1 -XX:GCLogFileSize=512M"

# Uncomment one of the below three options to enable java garbage collection logging for the client processes.

# This enables basic gc logging to the .out file.

# export CLIENT_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps"

# This enables basic gc logging to its own file.

# If FILE-PATH is not replaced, the log file(.gc) would still be generated in the HBASE_LOG_DIR .

# export CLIENT_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:<FILE-PATH>"

# This enables basic GC logging to its own file with automatic log rolling. Only applies to jdk 1.6.0_34+ and 1.7.0_2+.

# If FILE-PATH is not replaced, the log file(.gc) would still be generated in the HBASE_LOG_DIR .

# export CLIENT_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:<FILE-PATH> -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=1 -XX:GCLogFileSize=512M"

# See the package documentation for org.apache.hadoop.hbase.io.hfile for other configurations

# needed setting up off-heap block caching.

# Uncomment and adjust to enable JMX exporting

# See jmxremote.password and jmxremote.access in $JRE_HOME/lib/management to configure remote password access.

# More details at: http://java.sun.com/javase/6/docs/technotes/guides/management/agent.html

# NOTE: HBase provides an alternative JMX implementation to fix the random ports issue, please see JMX

# section in HBase Reference Guide for instructions.

# export HBASE_JMX_BASE="-Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.authenticate=false"

# export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10101"

# export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10102"

# export HBASE_THRIFT_OPTS="$HBASE_THRIFT_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10103"

# export HBASE_ZOOKEEPER_OPTS="$HBASE_ZOOKEEPER_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10104"

# export HBASE_REST_OPTS="$HBASE_REST_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10105"

# File naming hosts on which HRegionServers will run. $HBASE_HOME/conf/regionservers by default.

# export HBASE_REGIONSERVERS=${HBASE_HOME}/conf/regionservers

# Uncomment and adjust to keep all the Region Server pages mapped to be memory resident

#HBASE_REGIONSERVER_MLOCK=true

#HBASE_REGIONSERVER_UID="hbase"

# File naming hosts on which backup HMaster will run. $HBASE_HOME/conf/backup-masters by default.

# export HBASE_BACKUP_MASTERS=${HBASE_HOME}/conf/backup-masters

# Extra ssh options. Empty by default.

# export HBASE_SSH_OPTS="-o ConnectTimeout=1 -o SendEnv=HBASE_CONF_DIR"

# Where log files are stored. $HBASE_HOME/logs by default.

# export HBASE_LOG_DIR=${HBASE_HOME}/logs

# Enable remote JDWP debugging of major HBase processes. Meant for Core Developers

# export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8070"

# export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8071"

# export HBASE_THRIFT_OPTS="$HBASE_THRIFT_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8072"

# export HBASE_ZOOKEEPER_OPTS="$HBASE_ZOOKEEPER_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8073"

# export HBASE_REST_OPTS="$HBASE_REST_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8074"

# A string representing this instance of hbase. $USER by default.

# export HBASE_IDENT_STRING=$USER

# The scheduling priority for daemon processes. See 'man nice'.

# export HBASE_NICENESS=10

# The directory where pid files are stored. /tmp by default.

# export HBASE_PID_DIR=/var/hadoop/pids

# Seconds to sleep between slave commands. Unset by default. This

# can be useful in large clusters, where, e.g., slave rsyncs can

# otherwise arrive faster than the master can service them.

# export HBASE_SLAVE_SLEEP=0.1

# Tell HBase whether it should manage it's own instance of ZooKeeper or not.

export HBASE_MANAGES_ZK=false

# The default log rolling policy is RFA, where the log file is rolled as per the size defined for the

# RFA appender. Please refer to the log4j.properties file to see more details on this appender.

# In case one needs to do log rolling on a date change, one should set the environment property

# HBASE_ROOT_LOGGER to "<DESIRED_LOG LEVEL>,DRFA".

# For example:

# HBASE_ROOT_LOGGER=INFO,DRFA

# The reason for changing default to RFA is to avoid the boundary case of filling out disk space as

# DRFA doesn't put any cap on the log size. Please refer to HBase-5655 for more context.

# Tell HBase whether it should include Hadoop's lib when start up,

# the default value is false,means that includes Hadoop's lib.

# export HBASE_DISABLE_HADOOP_CLASSPATH_LOOKUP="true"

# Override text processing tools for use by these launch scripts.

# export GREP="${GREP-grep}"

# export SED="${SED-sed}"
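
The only active setting in the file above is `export HBASE_MANAGES_ZK=false`, which tells HBase to use the existing ZooKeeper ensemble instead of starting its own. If you would rather script the change than edit the file by hand, something like the following may work; `set_manages_zk_false` is a hypothetical helper, not part of HBase.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of HBase): make sure hbase-env.sh contains
# an active "export HBASE_MANAGES_ZK=false" line.
set_manages_zk_false() {
    local env_file=$1
    local pattern='^[#[:space:]]*export HBASE_MANAGES_ZK='
    if grep -Eq "$pattern" "$env_file"; then
        # Rewrite the existing line, whether it is commented out or not.
        sed -E "s/${pattern}.*/export HBASE_MANAGES_ZK=false/" "$env_file" > "${env_file}.tmp" &&
            mv "${env_file}.tmp" "$env_file"
    else
        # No such line yet: append one.
        echo 'export HBASE_MANAGES_ZK=false' >> "$env_file"
    fi
}
```

Example call, assuming the install path used in this post: `set_manages_zk_false /opt/module/hbase/conf/hbase-env.sh`. Running it twice leaves the file unchanged the second time.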

3.2 hbase-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

/*

* Licensed to the Apache Software Foundation (ASF) under one

* or more contributor license agreements. See the NOTICE file

* distributed with this work for additional information

* regarding copyright ownership. The ASF licenses this file

* to you under the Apache License, Version 2.0 (the

* "License"); you may not use this file except in compliance

* with the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

-->

<configuration>

<!--

The following properties are set for running HBase as a single process on a

developer workstation. With this configuration, HBase is running in

"stand-alone" mode and without a distributed file system. In this mode, and

without further configuration, HBase and ZooKeeper data are stored on the

local filesystem, in a path under the value configured for `hbase.tmp.dir`.

This value is overridden from its default value of `/tmp` because many

systems clean `/tmp` on a regular basis. Instead, it points to a path within

this HBase installation directory.

Running against the `LocalFileSystem`, as opposed to a distributed

filesystem, runs the risk of data integrity issues and data loss. Normally

HBase will refuse to run in such an environment. Setting

`hbase.unsafe.stream.capability.enforce` to `false` overrides this behavior,

permitting operation. This configuration is for the developer workstation

only and __should not be used in production!__

See also https://hbase.apache.org/book.html#standalone_dist

-->

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<!-- <property>

<name>hbase.tmp.dir</name>

<value>./tmp</value>

</property>

<property>

<name>hbase.unsafe.stream.capability.enforce</name>

<value>false</value>

</property> -->

<property>

<name>hbase.zookeeper.quorum</name>

<value>hadoop102,hadoop103,hadoop104</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>hdfs://hadoop102:8020/hbase</value>

</property>

</configuration>
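
The three active properties above switch HBase to distributed mode, point it at the ZooKeeper quorum, and place its root directory in HDFS. The host and port in `hbase.rootdir` should match the NameNode address that HDFS itself is configured with. To double-check what a file actually sets, a rough reader such as the one below can help. `get_hbase_prop` is a hypothetical helper; it does plain line matching and does not understand XML comments, so a commented-out property would be reported as well.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of the first <property> whose <name>
# matches. Plain text matching only: XML comments are NOT skipped.
get_hbase_prop() {
    local site_file=$1 prop=$2
    awk -v name="<name>${prop}</name>" '
        index($0, name) { found = 1 }      # remember that the name was seen
        found && /<value>/ {               # the next <value> belongs to it
            sub(/.*<value>/, "")
            sub(/<\/value>.*/, "")
            print
            exit
        }
    ' "$site_file"
}
```

For example, `get_hbase_prop /opt/module/hbase/conf/hbase-site.xml hbase.rootdir` should print the HDFS URL configured above.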

3.3 zoo.cfg

Change ZooKeeper's default data directory so that its files are not deleted when /tmp is cleaned.

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

# dataDir=/tmp/zookeeper

dataDir=/opt/module/zookeeper-3.5.7/zkData

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1
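
The change that matters in this file is `dataDir`. As a quick sanity check, the sketch below reads the active `dataDir` from a `zoo.cfg` and reports whether it still lives under `/tmp`. Both function names are hypothetical, and the check only looks at the path string, not at the filesystem.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking zoo.cfg.

# Print the active (uncommented) dataDir value; the last one wins.
zk_data_dir() {
    sed -n -E 's/^[[:space:]]*dataDir[[:space:]]*=[[:space:]]*//p' "$1" | tail -n 1
}

# Succeed only if dataDir is set and is not /tmp or a path below it.
zk_data_dir_is_safe() {
    case "$(zk_data_dir "$1")" in
        ""|/tmp|/tmp/*) return 1 ;;
        *) return 0 ;;
    esac
}
```

A possible use: `zk_data_dir_is_safe /opt/module/zookeeper-3.5.7/conf/zoo.cfg || echo "dataDir is missing or under /tmp"`. The `conf/zoo.cfg` location is an assumption based on the `dataDir` path shown above.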

3.4 regionservers

hadoop102

hadoop103

hadoop104

3.5 backup-masters

echo "hadoop103" > /opt/module/hbase/conf/backup-masters

4 Resolve the JAR Conflict Between Hadoop and HBase

cd /opt/module/hbase/lib/client-facing-thirdparty

rm -f slf4j-reload4j-1.7.33.jar
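
The conflict here is presumably the usual one where Hadoop and HBase each put their own SLF4J binding on the classpath. If deleting the JAR feels too final, a more cautious variant is to rename it so that it no longer ends in `.jar`. This is a sketch under the assumption that the HBase launch scripts only pick up `*.jar` files from this directory; `disable_jar` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: take a JAR off the classpath by renaming it instead
# of deleting it. Assumes only files ending in ".jar" are loaded.
disable_jar() {
    local jar=$1
    [ -f "$jar" ] || return 0    # already disabled or never present
    mv "$jar" "${jar}.bak"
}
```

Example: `disable_jar /opt/module/hbase/lib/client-facing-thirdparty/slf4j-reload4j-1.7.33.jar`. Renaming the `.bak` file back restores the original state.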

5 Distribute the Installation to the Other Servers

sh xsync /opt/module/hbase/
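
`xsync` is a user-provided distribution script that is not shown in this post. Assuming it simply copies a directory to the same path on the other nodes, a rough equivalent can be built on `rsync`. The hypothetical `sync_cmds` function below only prints the commands, so they can be reviewed before anything is copied.

```shell
#!/usr/bin/env bash
# Hypothetical stand-in for the xsync script (assumption: xsync mirrors a
# directory to the same path on each target host).
# Prints one rsync command per host instead of running it.
sync_cmds() {
    local src=${1%/} host    # normalise away a trailing slash
    shift
    for host in "$@"; do
        echo "rsync -av ${src}/ ${host}:${src}/"
    done
}
```

For example, `sync_cmds /opt/module/hbase/ hadoop103 hadoop104` prints two commands; piping the output to `sh` would run them, provided passwordless SSH is set up between the nodes.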

6 Add Environment Variables

Add the following on each of the three servers:

vim /etc/profile.d/my_env.sh

#HBASE_HOME

export HBASE_HOME=/opt/module/hbase

export PATH=$PATH:$HBASE_HOME/bin

source /etc/profile
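
Since the same lines have to be added on three machines, it can be handy to script the step so that running it twice does no harm. A minimal sketch follows; `add_hbase_env` is a hypothetical helper, and it treats any existing `export HBASE_HOME=` line as proof that the block is already there.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the HBASE_HOME block to a profile script,
# but only if no "export HBASE_HOME=" line exists yet.
add_hbase_env() {
    local profile=$1 hbase_home=$2
    grep -q '^export HBASE_HOME=' "$profile" 2>/dev/null && return 0
    {
        echo '#HBASE_HOME'
        echo "export HBASE_HOME=${hbase_home}"
        # Single quotes keep $PATH and $HBASE_HOME literal in the file.
        echo 'export PATH=$PATH:$HBASE_HOME/bin'
    } >> "$profile"
}
```

Example: `add_hbase_env /etc/profile.d/my_env.sh /opt/module/hbase`, followed by `source /etc/profile` as above.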

7 Start/Stop Script

#!/bin/bash

if [ $# -lt 1 ]; then

echo "No Args Input..."

exit 1

fi

case $1 in

"start")

{

echo "----------------- HBase start -----------------"

nohup sh /opt/module/hbase/bin/start-hbase.sh >> /opt/module/hbase/logs/start-hbase.out 2>&1 &

}

;;

"stop")

{

echo "----------------- HBase stop -----------------"

nohup sh /opt/module/hbase/bin/stop-hbase.sh >> /opt/module/hbase/logs/stop-hbase.out 2>&1 &

}

;;

*)

echo "Input Args Error..."

;;

esac

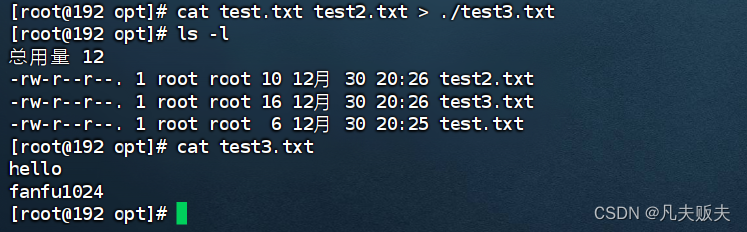

8 Web UI

Master

http://hadoop102:16010/master-status

Backup Master

http://hadoop103:16010/master-status
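
Both pages can also be probed from the command line, which is useful right after starting the cluster. The sketch below builds the status URL and asks `curl` for the HTTP status code only. The function names are hypothetical, and port 16010 is taken from the URLs above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for probing the HBase Master web UI.

# Build the status URL for a host; the port defaults to 16010.
master_status_url() {
    echo "http://$1:${2:-16010}/master-status"
}

# Print the HTTP status code returned by the page (000 if unreachable).
check_master_ui() {
    curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$(master_status_url "$1")"
}
```

For example, `check_master_ui hadoop102` and `check_master_ui hadoop103` should each print an HTTP status code once the active and backup masters are up.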
