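CKA Certification Module ② - Kubernetes Enterprise Operations and Hands-On Practice - 2

Common Kubernetes Storage Options and Their Use Cases

Kubernetes storage: emptyDir

An emptyDir volume is created when a Pod is assigned to a Node. Kubernetes allocates a directory on that Node automatically, so you do not specify a host path. The directory starts out empty, and when the Pod is removed from the Node the data in the emptyDir is deleted permanently. emptyDir volumes are mainly used for scratch directories that an application does not need to keep, and for directories shared between containers in the same Pod.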

[root@k8s-master01 ~]# mkdir storage

[root@k8s-master01 ~]# cd storage/

# Create a working directory

[root@k8s-master01 storage]# kubectl explain pod.spec.volumes.

...

# List the supported volume types

[root@k8s-master01 storage]# cat emptydir.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-empty
spec:
  containers:
  - name: container-empty
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: cache-volume
      mountPath: /cache
  volumes:
  - name: cache-volume
    emptyDir: {}

[root@k8s-master01 storage]# kubectl apply -f emptydir.yaml

pod/pod-empty created

[root@k8s-master01 storage]# kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod-empty 1/1 Running 0 4s 10.244.58.253 k8s-node02 <none> <none>

# Create the Pod

[root@k8s-master01 storage]# kubectl exec -it pod-empty -c container-empty -- /bin/bash

root@pod-empty:/# cd /cache/

root@pod-empty:/cache# touch 123

root@pod-empty:/cache# touch aa

root@pod-empty:/cache# ls

123 aa

# Create files in the directory where the emptyDir is mounted

root@pod-empty:/cache# exit

exit

[root@k8s-master01 storage]# kubectl get pods -oyaml |grep uid

uid: 8ce8fecc-dc86-4c92-8876-69ac034b6972

[root@k8s-master01 storage]# kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod-empty 1/1 Running 0 2m43s 10.244.58.253 k8s-node02 <none> <none>

# Look up the Pod's UID and the node it was scheduled to

[root@k8s-node02 ~]# yum -y install tree

[root@k8s-node02 ~]# tree /var/lib/kubelet/pods/8ce8fecc-dc86-4c92-8876-69ac034b6972

...

[root@k8s-node02 ~]# cd /var/lib/kubelet/pods/8ce8fecc-dc86-4c92-8876-69ac034b6972/

[root@k8s-node02 8ce8fecc-dc86-4c92-8876-69ac034b6972]# cd volumes/kubernetes.io~empty-dir/cache-volume/

[root@k8s-node02 cache-volume]# ls

123 aa

[root@k8s-node02 cache-volume]# pwd

/var/lib/kubelet/pods/8ce8fecc-dc86-4c92-8876-69ac034b6972/volumes/kubernetes.io~empty-dir/cache-volume

# The files are stored under the kubelet's per-Pod directory on the node
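
The node-side location seen above follows a fixed pattern, which can be sketched as a small shell helper. This is only an illustration: the function name emptydir_host_path and the KUBELET_ROOT variable are made up here, and the sketch assumes the kubelet uses its default root directory /var/lib/kubelet.

```shell
#!/bin/sh
# Build the node-side directory that backs an emptyDir volume.
# KUBELET_ROOT is a hypothetical override; the default assumes the kubelet
# runs with its default root directory (/var/lib/kubelet).
emptydir_host_path() {
    pod_uid=$1
    volume_name=$2
    printf '%s/pods/%s/volumes/kubernetes.io~empty-dir/%s\n' \
        "${KUBELET_ROOT:-/var/lib/kubelet}" "$pod_uid" "$volume_name"
}

# Values taken from the transcript above
emptydir_host_path 8ce8fecc-dc86-4c92-8876-69ac034b6972 cache-volume
```

The UID can also be read directly with kubectl get pod pod-empty -o jsonpath='{.metadata.uid}' instead of grepping the YAML output.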

[root@k8s-master01 storage]# kubectl delete pod pod-empty

pod "pod-empty" deleted

# Delete the Pod

[root@k8s-node02 cache-volume]# ls

[root@k8s-node02 cache-volume]# cd ..

cd: error retrieving current directory: getcwd: cannot access parent directories: No such file or directory

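# The whole per-Pod directory is removed along with the Pod, so the emptyDir goes with it; do not use emptyDir for data that must outlive the Pod

Kubernetes storage: hostPath

Drawbacks of hostPath volumes:

The data lives on a single node.

After a Pod is deleted, the re-created Pod must be scheduled to the same node, otherwise it will not see the earlier data.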

[root@k8s-node01 images]# ctr -n k8s.io images import tomcat.tar.gz.0

[root@k8s-node02 images]# ctr -n k8s.io images import tomcat.tar.gz.0

# An image archive with the same name had been uploaded before, so .0 was appended to the file name

[root@k8s-master01 storage]# kubectl explain pod.spec.volumes.hostPath.type

# List the supported hostPath types; see https://kubernetes.io/docs/concepts/storage/volumes#hostpath

[root@k8s-master01 storage]# kubectl explain pod.spec |grep -i nodename

# Look up the field documentation

[root@k8s-master01 storage]# cat hostpath.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-hostpath
  namespace: default
spec:
  nodeName: k8s-node01
  containers:
  - name: test-nginx
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: test-volume
      mountPath: /test-nginx
  - name: test-tomcat
    image: tomcat
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: test-volume
      mountPath: /test-tomcat
  volumes:
  - name: test-volume
    hostPath:
      path: /data1
      type: DirectoryOrCreate

[root@k8s-master01 storage]# kubectl apply -f hostpath.yaml

pod/pod-hostpath created

[root@k8s-master01 storage]# kubectl exec -it pod-hostpath -c test-nginx -- /bin/bash

root@pod-hostpath:/# cd test-nginx/

root@pod-hostpath:/test-nginx# touch nginx

root@pod-hostpath:/test-nginx# ls

nginx

root@pod-hostpath:/test-nginx# exit

exit

[root@k8s-master01 storage]# kubectl exec -it pod-hostpath -c test-tomcat -- /bin/bash

root@pod-hostpath:/usr/local/tomcat# cd /test-tomcat/

root@pod-hostpath:/test-tomcat# touch tomcat

root@pod-hostpath:/test-tomcat# ls

nginx tomcat

root@pod-hostpath:/test-tomcat# exit

exit

[root@k8s-node01 ~]# ls /data1/

nginx tomcat

# Both containers and the node see the same files, so they share one volume

[root@k8s-master01 storage]# kubectl delete -f hostpath.yaml

pod "pod-hostpath" deleted

# Delete the Pod

[root@k8s-node01 ~]# ll /data1/

total 0

-rw-r--r-- 1 root root 0 Jul 3 16:30 nginx

-rw-r--r-- 1 root root 0 Jul 3 16:31 tomcat

# The data is still there

k8s存储-NFS

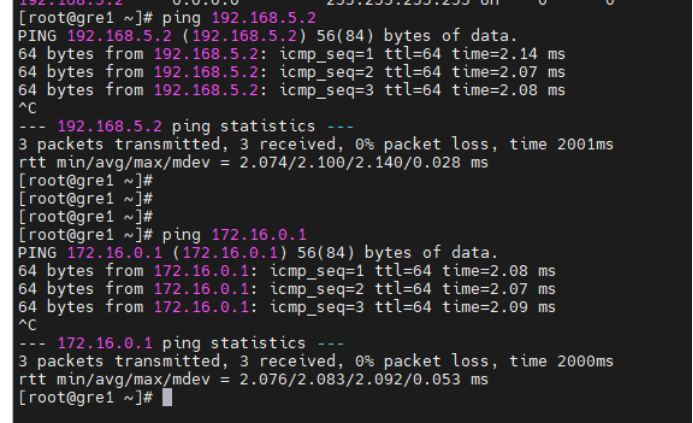

注意: NFS服务器配置白名单是node节点网段而不是pod网段

node节点网段,例如我的环境应配置: 192.168.1.0/24
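
As a minimal sketch of that rule, the helper below prints an /etc/exports entry restricted to a client network. The function name exports_line is hypothetical; the export options are the ones used elsewhere in these notes.

```shell
#!/bin/sh
# Print one /etc/exports entry that only admits clients from the given network.
# The options (rw,no_root_squash) mirror the ones used in these notes.
exports_line() {
    export_dir=$1
    client_cidr=$2
    printf '%s %s(rw,no_root_squash)\n' "$export_dir" "$client_cidr"
}

# Node network from this environment, not the Pod network (10.244.x.x)
exports_line /data/volume 192.168.1.0/24
```

After changing /etc/exports, exportfs -arv re-reads the file, as shown later in these notes.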

yum -y install nfs-utils

systemctl enable --now nfs

# Install NFS on every node

[root@k8s-master01 storage]# mkdir -pv /data/volume

mkdir: created directory ‘/data’

mkdir: created directory ‘/data/volume’

# The -v option prints each directory as it is created

[root@k8s-master01 storage]# cat /etc/exports

/data/volume *(rw,no_root_squash)

[root@k8s-master01 storage]# systemctl restart nfs

[root@k8s-master01 storage]# showmount -e localhost

Export list for localhost:

/data/volume *

# Configure the NFS export

[root@k8s-node01 ~]# mount -t nfs k8s-master01:/data/volume /mnt

[root@k8s-node01 ~]# df -Th |tail -n1

k8s-master01:/data/volume nfs4 38G 11G 27G 29% /mnt

[root@k8s-node01 ~]# umount /mnt

# Test the NFS export from a node

[root@k8s-master01 storage]# cat nfs.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-nfs-volume
  namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      storage: nfs
  template:
    metadata:
      labels:
        storage: nfs
    spec:
      containers:
      - name: test-nfs
        image: xianchao/nginx:v1
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
          protocol: TCP
        volumeMounts:
        - name: nfs-volumes
          mountPath: /usr/share/nginx/html
      volumes:
      - name: nfs-volumes
        nfs:
          server: 192.168.1.181
          # Make sure the NFS server IP is correct
          path: /data/volume

[root@k8s-master01 storage]# kubectl apply -f nfs.yaml

deployment.apps/test-nfs-volume created

# Create the Deployment

[root@k8s-master01 storage]# echo nfs-test > /data/volume/index.html

[root@k8s-master01 storage]# kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test-nfs-volume-6656574b86-66m2h 1/1 Running 0 5m37s 10.244.85.233 k8s-node01 <none> <none>

test-nfs-volume-6656574b86-6nxgw 1/1 Running 0 5m37s 10.244.85.232 k8s-node01 <none> <none>

test-nfs-volume-6656574b86-cvqmr 1/1 Running 0 5m37s 10.244.58.255 k8s-node02 <none> <none>

[root@k8s-master01 storage]# curl 10.244.85.232

nfs-test

# Test NFS through a Pod IP

[root@k8s-master01 storage]# kubectl exec -it test-nfs-volume-6656574b86-cvqmr -- /bin/bash

root@test-nfs-volume-6656574b86-cvqmr:/# ls /usr/share/nginx/html/

index.html

root@test-nfs-volume-6656574b86-cvqmr:/# cat /usr/share/nginx/html/index.html

nfs-test

root@test-nfs-volume-6656574b86-cvqmr:/# exit

exit

# Check from inside a Pod

[root@k8s-master01 storage]# kubectl delete -f nfs.yaml

deployment.apps "test-nfs-volume" deleted

# Delete the Deployment

[root@k8s-master01 storage]# ls /data/volume/

index.html

[root@k8s-master01 storage]# cat /data/volume/index.html

nfs-test

# The data is still there

Kubernetes storage: PVC

[root@k8s-master01 storage]# mkdir /data/volume-test/v{1..10} -p

[root@k8s-master01 storage]# cat /etc/exports

/data/volume *(rw,no_root_squash)

/data/volume-test/v1 *(rw,no_root_squash)

/data/volume-test/v2 *(rw,no_root_squash)

/data/volume-test/v3 *(rw,no_root_squash)

/data/volume-test/v4 *(rw,no_root_squash)

/data/volume-test/v5 *(rw,no_root_squash)

/data/volume-test/v6 *(rw,no_root_squash)

/data/volume-test/v7 *(rw,no_root_squash)

/data/volume-test/v8 *(rw,no_root_squash)

/data/volume-test/v9 *(rw,no_root_squash)

/data/volume-test/v10 *(rw,no_root_squash)

[root@k8s-master01 storage]# exportfs -arv

# Apply the export configuration

[root@k8s-master01 storage]# cat pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: v1
  labels:
    app: v1
spec:
  nfs:
    server: 192.168.1.181
    path: /data/volume-test/v1
  accessModes: ["ReadWriteOnce"]
  capacity:
    storage: 1Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: v2
  labels:
    app: v2
spec:
  nfs:
    server: 192.168.1.181
    path: /data/volume-test/v2
  accessModes: ["ReadOnlyMany"]
  capacity:
    storage: 2Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: v3
  labels:
    app: v3
spec:
  nfs:
    server: 192.168.1.181
    path: /data/volume-test/v3
  accessModes: ["ReadWriteMany"]
  capacity:
    storage: 3Gi

https://kubernetes.io/docs/concepts/storage/persistent-volumes#access-mod

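The access modes are:

- ReadWriteOnce: the volume can be mounted read-write by a single node. ReadWriteOnce still allows multiple Pods running on the same node to access the volume.

- ReadOnlyMany: the volume can be mounted read-only by many nodes.

- ReadWriteMany: the volume can be mounted read-write by many nodes.

- ReadWriteOncePod (feature state: Kubernetes v1.27 [beta]): the volume can be mounted read-write by a single Pod. Use this mode to ensure that only one Pod in the whole cluster can read or write the PVC. It is only supported for CSI volumes and requires Kubernetes 1.22 or later.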

[root@k8s-master01 storage]# kubectl apply -f pv.yaml

persistentvolume/v1 created

persistentvolume/v2 created

persistentvolume/v3 created

[root@k8s-master01 storage]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

v1 1Gi RWO Retain Available 22s

v2 2Gi ROX Retain Available 22s

v3 3Gi RWX Retain Available 22s

[root@k8s-master01 storage]# cat pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-v1
spec:
  accessModes: ["ReadWriteOnce"]
  selector:
    matchLabels:
      app: v1
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-v2
spec:
  accessModes: ["ReadOnlyMany"]
  selector:
    matchLabels:
      app: v2
  resources:
    requests:
      storage: 2Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-v3
spec:
  accessModes: ["ReadWriteMany"]
  selector:
    matchLabels:
      app: v3
  resources:
    requests:
      storage: 3Gi

[root@k8s-master01 storage]# kubectl apply -f pvc.yaml

persistentvolumeclaim/pvc-v1 created

persistentvolumeclaim/pvc-v2 created

persistentvolumeclaim/pvc-v3 created

[root@k8s-master01 storage]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-v1 Bound v1 1Gi RWO 4s

pvc-v2 Bound v2 2Gi ROX 4s

pvc-v3 Bound v3 3Gi RWX 4s

# A Bound status means the PVC has been bound to a PV

RWO: ReadWriteOnce

ROX: ReadOnlyMany

RWX: ReadWriteMany
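
The mapping between the access mode names and the abbreviations printed by kubectl can be written as a small lookup. This is an illustrative sketch; the function name access_mode_abbrev is made up, and RWOP is the abbreviation kubectl uses for ReadWriteOncePod.

```shell
#!/bin/sh
# Map a PersistentVolume access mode to the abbreviation shown by kubectl.
access_mode_abbrev() {
    case "$1" in
        ReadWriteOnce)    echo RWO ;;
        ReadOnlyMany)     echo ROX ;;
        ReadWriteMany)    echo RWX ;;
        ReadWriteOncePod) echo RWOP ;;
        *)
            # Anything else is not a valid access mode
            echo "unknown access mode: $1" >&2
            return 1 ;;
    esac
}

access_mode_abbrev ReadWriteMany
```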

[root@k8s-master01 storage]# cat deploy_pvc.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: pvc-test
spec:
  replicas: 3
  selector:
    matchLabels:
      storage: pvc
  template:
    metadata:
      labels:
        storage: pvc
    spec:
      containers:
      - name: test-pvc
        image: xianchao/nginx:v1
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
          protocol: TCP
        volumeMounts:
        - name: nginx-html
          mountPath: /usr/share/nginx/html
      volumes:
      - name: nginx-html
        persistentVolumeClaim:
          claimName: pvc-v1

[root@k8s-master01 storage]# kubectl apply -f deploy_pvc.yaml

deployment.apps/pvc-test created

# Create a Deployment that uses the PVC

[root@k8s-master01 storage]# echo pvc-test > /data/volume-test/v1/index.html

[root@k8s-master01 storage]# kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pvc-test-66c48b4c9d-dljv7 1/1 Running 0 46s 10.244.85.236 k8s-node01 <none> <none>

pvc-test-66c48b4c9d-fcttc 1/1 Running 0 46s 10.244.58.197 k8s-node02 <none> <none>

pvc-test-66c48b4c9d-kcjvr 1/1 Running 0 46s 10.244.58.198 k8s-node02 <none> <none>

[root@k8s-master01 storage]# curl 10.244.58.198

pvc-test

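# Access test succeeded

**Note:** things to keep in mind when using PVCs and PVs

1. With static provisioning, a matching PV must already exist every time you create a PVC, which can be inconvenient. The alternative is a StorageClass, which provisions a PV dynamically when the PVC is created, so no PV has to exist beforehand.

2. When a PVC is bound to a PV with the Retain reclaim policy (the default for manually created PVs), deleting the PVC leaves the PV in the Released state. To reuse it, delete the PV object with kubectl delete pv pv_name and create it again. Deleting the PV object does not delete the data behind it, so a new PVC that binds to the re-created PV sees the original data.

In this test, a PV with the Delete reclaim policy behaved the same way: deleting the PV left the data on the NFS backend untouched.

**Reclaim policy:** set through the persistentVolumeReclaimPolicy field

Steps for deleting a PVC:

First delete the Pods that use the PVC

Then delete the PVC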
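
The reclaim policy of an existing PV can also be changed in place with kubectl patch. The helper below is a sketch that only builds the patch body; the function name reclaim_policy_patch is hypothetical, and the kubectl call in the comment assumes a PV named v1 as in these notes.

```shell
#!/bin/sh
# Print the patch body that sets persistentVolumeReclaimPolicy on a PV.
# Only the three policy names defined by Kubernetes are accepted.
reclaim_policy_patch() {
    case "$1" in
        Retain|Delete|Recycle)
            printf '{"spec":{"persistentVolumeReclaimPolicy":"%s"}}\n' "$1" ;;
        *)
            echo "unsupported reclaim policy: $1" >&2
            return 1 ;;
    esac
}

# Against a live cluster (not executed here):
#   kubectl patch pv v1 -p "$(reclaim_policy_patch Retain)"
reclaim_policy_patch Retain
```

Whether a given policy actually has an effect depends on the volume plugin behind the PV.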

[root@k8s-master01 storage]# kubectl delete -f deploy_pvc.yaml

deployment.apps "pvc-test" deleted

[root@k8s-master01 storage]# kubectl delete -f pvc.yaml

persistentvolumeclaim "pvc-v1" deleted

persistentvolumeclaim "pvc-v2" deleted

persistentvolumeclaim "pvc-v3" deleted

[root@k8s-master01 storage]# kubectl delete -f pv.yaml

persistentvolume "v1" deleted

persistentvolume "v2" deleted

persistentvolume "v3" deleted

Demonstrating a PV with the Delete reclaim policy:

[root@k8s-master01 storage]# cat pv-1.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: v4
  labels:
    app: v4
spec:
  nfs:
    server: 192.168.1.181
    path: /data/volume-test/v4
  accessModes: ["ReadWriteOnce"]
  capacity:
    storage: 1Gi
  persistentVolumeReclaimPolicy: Delete

[root@k8s-master01 storage]# kubectl apply -f pv-1.yaml

persistentvolume/v4 created

# Create the PV

[root@k8s-master01 storage]# cat pvc-1.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-v4
spec:
  accessModes: ["ReadWriteOnce"]
  selector:
    matchLabels:
      app: v4
  resources:
    requests:
      storage: 1Gi

[root@k8s-master01 storage]# kubectl apply -f pvc-1.yaml

persistentvolumeclaim/pvc-v4 created

# Create the PVC

[root@k8s-master01 storage]# cat deploy_pvc-1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: pvc-test-1
spec:
  replicas: 3
  selector:
    matchLabels:
      storage: pvc-1
  template:
    metadata:
      labels:
        storage: pvc-1
    spec:
      containers:
      - name: test-pvc
        image: xianchao/nginx:v1
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
          protocol: TCP
        volumeMounts:
        - name: nginx-html
          mountPath: /usr/share/nginx/html
      volumes:
      - name: nginx-html
        persistentVolumeClaim:
          claimName: pvc-v4

[root@k8s-master01 storage]# kubectl apply -f deploy_pvc-1.yaml

deployment.apps/pvc-test-1 created

# Create a Deployment that uses the PVC

[root@k8s-master01 storage]# kubectl get pods

NAME READY STATUS RESTARTS AGE

pvc-test-1-58fc869c7c-fgl4r 1/1 Running 0 6s

pvc-test-1-58fc869c7c-h5rxb 1/1 Running 0 6s

pvc-test-1-58fc869c7c-nr7cv 1/1 Running 0 6s

[root@k8s-master01 storage]# kubectl exec -it pvc-test-1-58fc869c7c-fgl4r -- /bin/bash

root@pvc-test-1-58fc869c7c-fgl4r:/# cd /usr/share/nginx/html/

root@pvc-test-1-58fc869c7c-fgl4r:/usr/share/nginx/html# echo ReclaimPolicy-Delete_test > index.html

root@pvc-test-1-58fc869c7c-fgl4r:/usr/share/nginx/html# touch 123

# Write some content

root@pvc-test-1-58fc869c7c-fgl4r:/usr/share/nginx/html# ls

123 index.html

root@pvc-test-1-58fc869c7c-fgl4r:/usr/share/nginx/html# exit

exit

command terminated with exit code 127

# Leave the container

[root@k8s-master01 storage]# kubectl delete -f deploy_pvc-1.yaml

deployment.apps "pvc-test-1" deleted

[root@k8s-master01 storage]# kubectl delete -f pvc-1.yaml

persistentvolumeclaim "pvc-v4" deleted

[root@k8s-master01 storage]# kubectl delete -f pv-1.yaml

persistentvolume "v4" deleted

# Delete the Deployment, the PVC, and the PV

[root@k8s-master01 storage]# kubectl get pv

No resources found

[root@k8s-master01 storage]# ls /data/volume-test/v4/

123 index.html

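# The content was not deleted. The Delete policy only removes backend data when the volume plugin is able to delete volumes, which does not appear to be the case for a statically created NFS PV, so nothing was cleaned up here

StorageClass: dynamically provisioned storage

Provisioning PVs dynamically with a StorageClass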

[root@k8s-node01 images]# ctr -n k8s.io images import nfs-subdir-external-provisioner.tar.gz

[root@k8s-node02 images]# ctr -n k8s.io images import nfs-subdir-external-provisioner.tar.gz

# Import the image on the worker nodes

[root@k8s-master01 storageclass]# mkdir /data/nfs_pro -p

[root@k8s-master01 storageclass]# cat /etc/exports

/data/volume 192.168.1.0/24(rw,no_root_squash)

/data/volume-test/v1 *(rw,no_root_squash)

/data/volume-test/v2 *(rw,no_root_squash)

/data/volume-test/v3 *(rw,no_root_squash)

/data/volume-test/v4 *(rw,no_root_squash)

/data/volume-test/v5 *(rw,no_root_squash)

/data/volume-test/v6 *(rw,no_root_squash)

/data/volume-test/v7 *(rw,no_root_squash)

/data/volume-test/v8 *(rw,no_root_squash)

/data/volume-test/v9 *(rw,no_root_squash)

/data/volume-test/v10 *(rw,no_root_squash)

/data/nfs_pro *(rw,no_root_squash)

[root@k8s-master01 storageclass]# exportfs -arv

exporting 192.168.1.0/24:/data/volume

exporting *:/data/nfs_pro

exporting *:/data/volume-test/v10

exporting *:/data/volume-test/v9

exporting *:/data/volume-test/v8

exporting *:/data/volume-test/v7

exporting *:/data/volume-test/v6

exporting *:/data/volume-test/v5

exporting *:/data/volume-test/v4

exporting *:/data/volume-test/v3

exporting *:/data/volume-test/v2

exporting *:/data/volume-test/v1

# Configure the NFS export

[root@k8s-master01 ~]# mkdir storageclass

[root@k8s-master01 ~]# cd storageclass/

# Create a working directory

[root@k8s-master01 storageclass]# cat serviceaccount.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-provisioner

[root@k8s-master01 storageclass]# kubectl apply -f serviceaccount.yaml

serviceaccount/nfs-provisioner created

# Create the ServiceAccount

[root@k8s-master01 storageclass]# kubectl create clusterrolebinding nfs-provisioner-clusterrolebinding --clusterrole=cluster-admin --serviceaccount=default:nfs-provisioner

clusterrolebinding.rbac.authorization.k8s.io/nfs-provisioner-clusterrolebinding created

# Grant permissions to the ServiceAccount

[root@k8s-master01 storageclass]# cat nfs-deployment.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-provisioner
spec:
  selector:
    matchLabels:
      app: nfs-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-provisioner
    spec:
      serviceAccount: nfs-provisioner
      containers:
      - name: nfs-provisioner
        image: registry.cn-beijing.aliyuncs.com/mydlq/nfs-subdir-external-provisioner:v4.0.0
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: nfs-client-root
          mountPath: /persistentvolumes
        env:
        - name: PROVISIONER_NAME
          value: example.com/nfs
        - name: NFS_SERVER
          value: 192.168.1.181
        - name: NFS_PATH
          value: /data/nfs_pro/
      volumes:
      - name: nfs-client-root
        nfs:
          server: 192.168.1.181
          path: /data/nfs_pro/

[root@k8s-master01 storageclass]# kubectl apply -f nfs-deployment.yaml

deployment.apps/nfs-provisioner created

[root@k8s-master01 storageclass]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-provisioner-747db885fd-phwm2 1/1 Running 0 60s

# Deploy the nfs-provisioner

[root@k8s-master01 storageclass]# cat nfs-storageclass.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs
provisioner: example.com/nfs
# The provisioner value must match the PROVISIONER_NAME set when deploying the nfs-provisioner

[root@k8s-master01 storageclass]# kubectl apply -f nfs-storageclass.yaml

storageclass.storage.k8s.io/nfs created

[root@k8s-master01 storageclass]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs example.com/nfs Delete Immediate false 16s

# Create the StorageClass, which provisions PVs on demand

[root@k8s-master01 storageclass]# cat claim.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim1
spec:
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs

[root@k8s-master01 storageclass]# kubectl apply -f claim.yaml

persistentvolumeclaim/test-claim1 created

# Create the PVC

[root@k8s-master01 storageclass]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

test-claim1 Bound pvc-b334c653-bb8b-4e48-8aa1-469f8ea90fb3 1Gi RWX nfs 49s

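# The PVC is Bound: a PV was provisioned for it automatically

Summary of the steps:

1. Provisioner: deploy an NFS provisioner

2. Create a StorageClass that names that provisioner

3. Create a PVC that names the StorageClass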

[root@k8s-master01 storageclass]# cat read-pod.yaml

kind: Pod
apiVersion: v1
metadata:
  name: read-pod
spec:
  containers:
  - name: read-pod
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: nfs-pvc
      mountPath: /usr/share/nginx/html
  restartPolicy: "Never"
  volumes:
  - name: nfs-pvc
    persistentVolumeClaim:
      claimName: test-claim1

[root@k8s-master01 storageclass]# kubectl apply -f read-pod.yaml

pod/read-pod created

# Create a Pod that mounts test-claim1, the PVC whose PV was provisioned through the StorageClass

[root@k8s-master01 storageclass]# kubectl get pods | grep read

read-pod 1/1 Running 0 88s

# The Pod is running

[root@k8s-master01 storageclass]# echo nfs-provisioner > /data/nfs_pro/default-test-claim1-pvc-b334c653-bb8b-4e48-8aa1-469f8ea90fb3/index.html

# Write a file to test
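
The directory written to above is named after the namespace, the PVC, and the PV. A minimal sketch of that naming, as observed in this transcript (the provisioner may be configured to behave differently, and the function name provisioned_dir is made up):

```shell
#!/bin/sh
# Build the subdirectory the NFS provisioner created for a claim, following
# the <namespace>-<pvc-name>-<pv-name> pattern seen in this transcript.
provisioned_dir() {
    nfs_root=$1
    namespace=$2
    pvc_name=$3
    pv_name=$4
    # ${nfs_root%/} drops one trailing slash so the path has no "//"
    printf '%s/%s-%s-%s\n' "${nfs_root%/}" "$namespace" "$pvc_name" "$pv_name"
}

provisioned_dir /data/nfs_pro/ default test-claim1 pvc-b334c653-bb8b-4e48-8aa1-469f8ea90fb3
```

The PV name for a claim can be read with kubectl get pvc test-claim1 -o jsonpath='{.spec.volumeName}'.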

[root@k8s-master01 storageclass]# kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nfs-provisioner-747db885fd-phwm2 1/1 Running 0 8m20s 10.244.58.201 k8s-node02 <none> <none>

read-pod 1/1 Running 0 3m 10.244.85.238 k8s-node01 <none> <none>

[root@k8s-master01 storageclass]# curl 10.244.85.238

nfs-provisioner

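# Access succeeded

The StatefulSet Controller: From Basics to Production Use

StatefulSet resources: writing the YAML

StatefulSet is designed to manage stateful services.

Background:

What is a stateful service?

**A StatefulSet is a set of stateful Pods and manages stateful services.** The names of the Pods it manages must stay stable, and each Pod has its own dedicated persistent data directory rather than a shared one. Examples are MySQL primary/replica setups and Redis clusters.

What is a stateless service?

**RC, Deployment, and DaemonSet all manage stateless services.** The IPs, names, and start/stop order of their Pods are arbitrary, an individual Pod does not affect the whole, and all Pods share the same data volume. A Tomcat deployment is a typical stateless service: when one Tomcat instance is deleted, a new one starts and joins the cluster, regardless of its name.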

[root@k8s-master01 ~]# kubectl explain statefulset.

# Look up the field documentation

[root@k8s-master01 ~]# mkdir statefulset

[root@k8s-master01 ~]# cd statefulset/

# Create a working directory

[root@k8s-master01 statefulset]# cat statefulset.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  serviceName: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
        ports:
        - name: web
          containerPort: 80
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      accessModes: ["ReadWriteOnce"]
      storageClassName: nfs
      resources:
        requests:
          storage: 1Gi

[root@k8s-master01 statefulset]# kubectl apply -f statefulset.yaml

service/nginx created

statefulset.apps/web created

# Create the StatefulSet and its Service

[root@k8s-master01 statefulset]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-provisioner-747db885fd-phwm2 1/1 Running 4 (105m ago) 18h

web-0 1/1 Running 0 39s

web-1 1/1 Running 0 38s

[root@k8s-master01 statefulset]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

test-claim1 Bound pvc-b334c653-bb8b-4e48-8aa1-469f8ea90fb3 1Gi RWX nfs 18h

www-web-0 Bound pvc-54ce83ca-698d-4c32-a0a2-1350f8717941 1Gi RWO nfs 88s

www-web-1 Bound pvc-35c5949e-0227-4d8e-bdbb-02c1ba9ce488 1Gi RWO nfs 85s

[root@k8s-master01 statefulset]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-35c5949e-0227-4d8e-bdbb-02c1ba9ce488 1Gi RWO Delete Bound default/www-web-1 nfs 88s

pvc-54ce83ca-698d-4c32-a0a2-1350f8717941 1Gi RWO Delete Bound default/www-web-0 nfs 91s

pvc-b334c653-bb8b-4e48-8aa1-469f8ea90fb3 1Gi RWX Delete Bound default/test-claim1 nfs 18h

# The PVs and PVCs were created automatically

[root@k8s-master01 statefulset]# kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nfs-provisioner-747db885fd-phwm2 1/1 Running 4 (109m ago) 18h 10.244.58.203 k8s-node02 <none> <none>

web-0 1/1 Running 0 4m1s 10.244.58.204 k8s-node02 <none> <none>

web-1 1/1 Running 0 4m 10.244.85.242 k8s-node01 <none> <none>

[root@k8s-master01 statefulset]# echo web-test-0 > /data/nfs_pro/default-www-web-0-pvc-54ce83ca-698d-4c32-a0a2-1350f8717941/index.html

[root@k8s-master01 statefulset]# echo web-test-1 > /data/nfs_pro/default-www-web-1-pvc-35c5949e-0227-4d8e-bdbb-02c1ba9ce488/index.html

[root@k8s-master01 statefulset]# curl 10.244.58.204

web-test-0

[root@k8s-master01 statefulset]# curl 10.244.85.242

web-test-1

# Test succeeded: each Pod uses its own volume

[root@k8s-master01 statefulset]# kubectl run busybox --image docker.io/library/busybox:1.28 --image-pull-policy=IfNotPresent --restart=Never --rm -it busybox -- sh

If you don't see a command prompt, try pressing enter.

/ # nslookup nginx

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: nginx

Address 1: 10.244.58.204 web-0.nginx.default.svc.cluster.local

Address 2: 10.244.85.242 web-1.nginx.default.svc.cluster.local

/ # exit

pod "busybox" deleted

# Because clusterIP is None (a headless Service), the name resolves to the two Pod addresses

[root@k8s-master01 statefulset]# kubectl delete -f statefulset.yaml

service "nginx" deleted

statefulset.apps "web" deleted

# Delete the Service and the StatefulSet

[root@k8s-master01 statefulset]# cat statefulset.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  serviceName: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
        ports:
        - name: web
          containerPort: 80
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      accessModes: ["ReadWriteOnce"]
      storageClassName: nfs
      resources:
        requests:
          storage: 1Gi

[root@k8s-master01 statefulset]# kubectl apply -f statefulset.yaml

service/nginx created

statefulset.apps/web created

# Remove clusterIP: None so that the Service gets an IP, and see what changes

[root@k8s-master01 statefulset]# kubectl run busybox --image docker.io/library/busybox:1.28 --image-pull-policy=IfNotPresent --restart=Never --rm -it busybox -- sh

If you don't see a command prompt, try pressing enter.

/ # nslookup nginx

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: nginx

Address 1: 10.96.165.5 nginx.default.svc.cluster.local

/ # exit

pod "busybox" deleted

[root@k8s-master01 statefulset]# kubectl get svc -owide -l app=nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

nginx ClusterIP 10.96.165.5 <none> 80/TCP 48s app=nginx

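# The name now resolves to the Service IP

StatefulSet summary:

1. Pods managed by a StatefulSet have ordered names of the form <statefulset-name>-0, -1, -2, and so on.

2. A StatefulSet needs a Service created for it (referenced by serviceName). If the Service has no cluster IP (a headless Service), a DNS lookup of the Service returns the IPs of the Pods behind it; if the Service has a cluster IP, the lookup returns the Service's own IP.

3. When a Pod managed by a StatefulSet is deleted, the replacement Pod gets the same name as the deleted one.

4. A StatefulSet has the volumeClaimTemplates field, a template for volume claims. A PVC is generated for each Pod and, with a StorageClass, a PV is provisioned and bound to it automatically, so each Pod gets its own data directory.

5. Each Pod created by a StatefulSet has a DNS name of the form pod-name.svc-name.svc-namespace.svc.cluster.local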
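
The per-Pod DNS name from point 5 can be assembled mechanically. The helper below is a sketch: the function name sts_pod_fqdn and the CLUSTER_DOMAIN variable are hypothetical, and the default cluster.local is assumed because it is what the nslookup output in these notes shows.

```shell
#!/bin/sh
# Build the DNS name of one StatefulSet Pod behind a headless Service:
# <statefulset>-<ordinal>.<service>.<namespace>.svc.<cluster-domain>
sts_pod_fqdn() {
    sts=$1
    ordinal=$2
    svc=$3
    ns=${4:-default}
    printf '%s-%s.%s.%s.svc.%s\n' \
        "$sts" "$ordinal" "$svc" "$ns" "${CLUSTER_DOMAIN:-cluster.local}"
}

# StatefulSet "web" behind Service "nginx" in namespace "default"
sts_pod_fqdn web 0 nginx default
```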

StatefulSet Pod management: scaling and updates

[root@k8s-master01 statefulset]# cat statefulset.yaml |grep replicas:

replicas: 3

[root@k8s-master01 statefulset]# kubectl apply -f statefulset.yaml

service/nginx unchanged

statefulset.apps/web configured

[root@k8s-master01 statefulset]# kubectl get pods -w -l app=nginx

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 43m

web-1 1/1 Running 0 43m

web-2 0/1 Pending 0 0s

web-2 0/1 Pending 0 0s

web-2 0/1 Pending 0 1s

web-2 0/1 ContainerCreating 0 1s

web-2 0/1 ContainerCreating 0 1s

web-2 1/1 Running 0 2s

# Scale up by editing replicas in the YAML file

[root@k8s-master01 statefulset]# cat statefulset.yaml |grep replicas:

replicas: 2

[root@k8s-master01 statefulset]# kubectl apply -f statefulset.yaml

service/nginx unchanged

statefulset.apps/web configured

[root@k8s-master01 statefulset]# kubectl get pods -w -l app=nginx

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 44m

web-1 1/1 Running 0 44m

web-2 1/1 Running 0 5s

web-2 1/1 Terminating 0 13s

web-2 1/1 Terminating 0 13s

web-2 0/1 Terminating 0 14s

web-2 0/1 Terminating 0 14s

web-2 0/1 Terminating 0 14s

^C[root@k8s-master01 statefulset]# kubectl get pods -l app=nginx

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 45m

web-1 1/1 Running 0 45m

# Scale down

Updates

[root@k8s-master01 statefulset]# kubectl explain statefulset.spec.updateStrategy.

# Look up the field documentation

[root@k8s-master01 statefulset]# cat statefulset.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  replicas: 3
  updateStrategy:
    rollingUpdate:
      partition: 1
      # Only Pods with an ordinal greater than or equal to 1 are updated
      maxUnavailable: 1
      # At most one Pod may be unavailable during the update; the value cannot be 0, and the field only takes effect when the MaxUnavailableStatefulSet feature gate is enabled
  selector:
    matchLabels:
      app: nginx
  serviceName: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: ikubernetes/myapp:v1
        imagePullPolicy: IfNotPresent
        ports:
        - name: web
          containerPort: 80
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      accessModes: ["ReadWriteOnce"]
      storageClassName: nfs
      resources:
        requests:
          storage: 1Gi

[root@k8s-master01 statefulset]# kubectl apply -f statefulset.yaml

service/nginx unchanged

statefulset.apps/web configured

[root@k8s-master01 statefulset]# kubectl get pods -l app=nginx -w

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 17s

web-1 1/1 Running 0 15s

web-2 1/1 Running 0 14s

web-2 1/1 Terminating 0 24s

web-2 1/1 Terminating 0 24s

web-2 0/1 Terminating 0 25s

web-2 0/1 Terminating 0 25s

web-2 0/1 Terminating 0 25s

web-2 0/1 Pending 0 0s

web-2 0/1 Pending 0 0s

web-2 0/1 ContainerCreating 0 0s

web-2 0/1 ContainerCreating 0 0s

web-2 1/1 Running 0 1s

web-1 1/1 Terminating 0 27s

web-1 0/1 Terminating 0 28s

web-1 0/1 Terminating 0 28s

web-1 0/1 Terminating 0 28s

web-1 0/1 Pending 0 0s

web-1 0/1 Pending 0 0s

web-1 0/1 ContainerCreating 0 0s

web-1 0/1 ContainerCreating 0 0s

web-1 1/1 Running 0 2s

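# A rolling update proceeds from the highest ordinal down to the partition, one Pod at a time; web-0 is left alone because its ordinal is below the partition

Note the partition field under rollingUpdate, which is an integer.

With a value of 1, only Pods whose ordinal is greater than or equal to 1 are updated.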
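
The effect of partition can be sketched as a loop that lists the Pods a rolling update would touch, in the order observed above. This is an illustration of the rule, not the controller's code; the function name rolling_update_order is made up.

```shell
#!/bin/sh
# List the Pods a StatefulSet rolling update replaces, highest ordinal first,
# stopping at the partition. Pods with ordinal < partition are not touched.
rolling_update_order() {
    sts=$1
    replicas=$2
    partition=${3:-0}
    ordinal=$((replicas - 1))
    while [ "$ordinal" -ge "$partition" ]; do
        echo "${sts}-${ordinal}"
        ordinal=$((ordinal - 1))
    done
}

# replicas: 3, partition: 1, as in the manifest above
rolling_update_order web 3 1
```

With partition equal to replicas, the loop prints nothing, which matches a staged update where no Pod is replaced until the partition is lowered.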

Testing the OnDelete strategy

[root@k8s-master01 statefulset]# cat statefulset.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  replicas: 3
  updateStrategy:
    type: OnDelete
  selector:
    matchLabels:
      app: nginx
  serviceName: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: ikubernetes/myapp:v2
        imagePullPolicy: IfNotPresent
        ports:
        - name: web
          containerPort: 80
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      accessModes: ["ReadWriteOnce"]
      storageClassName: nfs
      resources:
        requests:
          storage: 1Gi

[root@k8s-master01 statefulset]# kubectl apply -f statefulset.yaml

service/nginx unchanged

statefulset.apps/web configured

[root@k8s-master01 statefulset]# kubectl get pods -l app=nginx -w

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 2m30s

web-1 1/1 Running 0 2m

web-2 1/1 Running 0 2m2s

# Nothing was updated

[root@k8s-master01 statefulset]# kubectl delete pod web-0

pod "web-0" deleted

[root@k8s-master01 statefulset]# kubectl delete pod web-1

pod "web-1" deleted

[root@k8s-master01 statefulset]# kubectl delete pod web-2

pod "web-2" deleted

[root@k8s-master01 statefulset]# kubectl get pods -l app=nginx

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 10s

web-1 1/1 Running 0 8s

web-2 1/1 Running 0 5s

# With OnDelete, a Pod is only updated after you delete it manually

[root@k8s-master01 statefulset]# kubectl delete -f statefulset.yaml

service "nginx" deleted

statefulset.apps "web" deleted

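# Clean up

The DaemonSet Controller: From Basics to Production Use

Introduction to the DaemonSet controller

DaemonSet overview

A DaemonSet ensures that every node in the cluster (or every node it selects) runs one copy of the same Pod. When a node is added to the cluster, a copy of the Pod is created on it automatically; when a node is removed, its Pod is deleted as well. Deleting the DaemonSet deletes the Pods it created.

How a DaemonSet works: how does it manage Pods?

The DaemonSet controller watches DaemonSet, Pod, and Node objects in Kubernetes. Any change to these objects triggers its sync loop, which moves the cluster toward the state described by the DaemonSet object.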
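
One pass of such a sync loop can be pictured as comparing two lists of nodes. The sketch below is purely conceptual and is not how the real controller is implemented; the function name reconcile_daemonset and the node k8s-node03 are made up for the example.

```shell
#!/bin/sh
# Compare the nodes that should run the daemon Pod with the nodes that
# already do, and print the actions one reconcile pass would take.
reconcile_daemonset() {
    desired_nodes=$1    # space-separated nodes eligible for the Pod
    running_nodes=$2    # space-separated nodes that currently run it
    for node in $desired_nodes; do
        case " $running_nodes " in
            *" $node "*) ;;                        # already satisfied
            *) echo "create pod on $node" ;;
        esac
    done
    for node in $running_nodes; do
        case " $desired_nodes " in
            *" $node "*) ;;                        # still wanted
            *) echo "delete pod on $node" ;;
        esac
    done
}

# k8s-node03 stands for a node that has left the cluster (hypothetical)
reconcile_daemonset "k8s-master01 k8s-node01 k8s-node02" "k8s-node01 k8s-node03"
```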

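DaemonSet use cases

Running a storage daemon on every node, such as glusterd or ceph.

Running a log collector on every node, such as fluentd, logstash, or filebeat.

Running a monitoring agent on every node, such as Prometheus Node Exporter or collectd.

Creating a DaemonSet from a YAML file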

[root@k8s-master01 ~]# kubectl explain ds.

# Look up the field documentation

DaemonSet in practice: deploying a log collector

ctr -n k8s.io images import fluentd_2_5_1.tar.gz

# Import the image on every node

[root@k8s-master01 ~]# kubectl describe node k8s-master01 |grep -i taint

Taints: node-role.kubernetes.io/control-plane:NoSchedule

# Check the taints on the master node

[root@k8s-master01 ~]# mkdir daemonset

[root@k8s-master01 ~]# cd daemonset/

# Create a working directory

[root@k8s-master01 daemonset]# cat daemonset.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-elasticsearch
  namespace: kube-system
  labels:
    k8s-app: fluentd-logging
spec:
  selector:
    matchLabels:
      name: fluentd-elasticsearch
  template:
    metadata:
      labels:
        name: fluentd-elasticsearch
    spec:
      tolerations:
      - key: node-role.kubernetes.io/control-plane
        effect: NoSchedule
      containers:
      - name: fluentd-elasticsearch
        image: xianchao/fluentd:v2.5.1
        imagePullPolicy: IfNotPresent
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
          limits:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
          readOnly: true
        - name: varlibcontainerdiocontainerdgrpcv1cricontainers
          mountPath: /var/lib/containerd/io.containerd.grpc.v1.cri/containers
          readOnly: true
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibcontainerdiocontainerdgrpcv1cricontainers
        hostPath:
          path: /var/lib/containerd/io.containerd.grpc.v1.cri/containers

# My own reading: this directory appears to hold containerd's state for running containers

[root@k8s-master01 daemonset]# kubectl apply -f daemonset.yaml

daemonset.apps/fluentd-elasticsearch created

[root@k8s-master01 daemonset]# kubectl get ds -n kube-system -l k8s-app=fluentd-logging

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

fluentd-elasticsearch 3 3 3 3 3 <none> 94s

[root@k8s-master01 daemonset]# kubectl get pods -n kube-system -l name=fluentd-elasticsearch -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

fluentd-elasticsearch-482h6 1/1 Running 0 3m18s 10.244.85.255 k8s-node01 <none> <none>

fluentd-elasticsearch-5hscz 1/1 Running 0 3m17s 10.244.58.217 k8s-node02 <none> <none>

fluentd-elasticsearch-jjf8w 1/1 Running 0 3m17s 10.244.32.161 k8s-master01 <none> <none>

# The deployment succeeded: one pod is running on each of the three nodes
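
The "one pod per node" property can also be checked mechanically. The sketch below is an illustration, not part of the original lab: the helper name nodes_with_wrong_count is hypothetical, and it assumes NODE is the seventh column, as in the -owide output above. The sample rows are copied from that output so the snippet runs without a cluster.

```shell
# Sample rows copied from the output above (NAME READY STATUS RESTARTS AGE IP NODE ...).
pods_wide='fluentd-elasticsearch-482h6 1/1 Running 0 3m18s 10.244.85.255 k8s-node01 <none> <none>
fluentd-elasticsearch-5hscz 1/1 Running 0 3m17s 10.244.58.217 k8s-node02 <none> <none>
fluentd-elasticsearch-jjf8w 1/1 Running 0 3m17s 10.244.32.161 k8s-master01 <none> <none>'

# Print "node count" for every node that does not run exactly one pod.
# Empty output means no node runs more than one pod.
nodes_with_wrong_count() {
  awk '{ count[$7]++ } END { for (n in count) if (count[n] != 1) print n, count[n] }'
}

printf '%s\n' "$pods_wide" | nodes_with_wrong_count
```

Against a live cluster the same function could be fed from kubectl get pods -n kube-system -l name=fluentd-elasticsearch -o wide --no-headers. A node with no pod at all never appears in the input, so this check cannot detect missing pods; compare DESIRED and CURRENT in kubectl get ds for that.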

Managing pods with a DaemonSet: rolling updates and rollbacks

[root@k8s-master01 statefulset]# kubectl explain ds.spec.updateStrategy.rollingUpdate.

# View the help
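
As a minimal sketch of what those fields look like in practice, the snippet below writes a patch fragment that sets the RollingUpdate strategy explicitly. The file name ds-rolling-patch.yaml and the value 1 are assumptions chosen for illustration; they do not come from the original lab.

```shell
# Write a patch fragment with an explicit rolling-update strategy (hypothetical file name).
cat > ds-rolling-patch.yaml <<'EOF'
spec:
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1   # replace the pod on at most one node at a time
EOF

cat ds-rolling-patch.yaml
```

One way to apply it would be kubectl patch daemonset fluentd-elasticsearch -n kube-system --patch-file ds-rolling-patch.yaml; the same block can equally be placed under spec in daemonset.yaml.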

[root@k8s-master01 daemonset]# kubectl set image daemonsets fluentd-elasticsearch fluentd-elasticsearch=ikubernetes/filebeat:5.6.6-alpine -n kube-system

daemonset.apps/fluentd-elasticsearch image updated

# Pods started from this image will not run correctly; the command only demonstrates how to update a DaemonSet's pods from the command line

[root@k8s-master01 daemonset]# kubectl rollout history daemonset fluentd-elasticsearch -n kube-system

daemonset.apps/fluentd-elasticsearch

REVISION CHANGE-CAUSE

1 <none>

2 <none>

[root@k8s-master01 daemonset]# kubectl -n kube-system rollout undo daemonset fluentd-elasticsearch --to-revision=1

daemonset.apps/fluentd-elasticsearch rolled back

# Roll back

[root@k8s-master01 daemonset]# kubectl get pods -n kube-system -l name=fluentd-elasticsearch -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

fluentd-elasticsearch-cm55l 1/1 Running 0 16m 10.244.58.214 k8s-node02 <none> <none>

fluentd-elasticsearch-lxmd8 1/1 Running 0 13s 10.244.32.164 k8s-master01 <none> <none>

fluentd-elasticsearch-x5jrc 1/1 Running 0 16m 10.244.85.193 k8s-node01 <none> <none>

# All pods are healthy again

[root@k8s-master01 daemonset]# kubectl delete -f daemonset.yaml

daemonset.apps "fluentd-elasticsearch" deleted

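# Clean up the environment

K8S configuration management: using ConfigMap to manage microservice configuration

ConfigMap basics

A ConfigMap is a k8s resource object for storing non-confidential configuration. Data can be stored as key/value pairs or as whole files.

- A ConfigMap is a k8s resource that plays the role of a configuration file; a cluster can have one or many ConfigMaps;

- A ConfigMap can be exposed as a volume; when a k8s pod starts, the volume is mounted at a chosen directory inside the container;

- The application in the container reads the configuration files from that directory the way it always has;

- From the container's point of view, the configuration files look as if they were packaged into that directory, so the whole process requires no changes to the application.

ConfigMap use cases

Suppose a cluster runs services such as nginx, tomcat, and mysql, and they run short of resources. Adding machines means updating the configuration, and editing it on each machine one by one is tedious. With a ConfigMap, the configuration is stored once and mounted into pods through a volume.

ConfigMap data can be injected in two ways: as a volume, or as environment variables (through configMapKeyRef under env, or through envFrom).

In a microservice architecture, several services often share the same configuration. If each service keeps its own copy, updating the configuration is painful; a ConfigMap lets them share it cleanly.

ConfigMap limitations

A ConfigMap is not designed to hold large amounts of data: the data stored in a ConfigMap cannot exceed 1 MiB. If you need to store more than that, consider mounting a storage volume or using a separate database or file service.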
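
A quick pre-flight check can catch oversized files before they are turned into a ConfigMap. The sketch below is only an approximation under stated assumptions: the helper name fits_in_configmap is hypothetical, and it checks a single file against 1 MiB, whereas the real limit applies to all the data in the ConfigMap together.

```shell
# 1 MiB expressed in bytes.
CM_MAX_BYTES=1048576

# Return 0 if the file is at most 1 MiB, 1 otherwise (hypothetical helper).
fits_in_configmap() {
  size=$(( $(wc -c < "$1") ))
  [ "$size" -le "$CM_MAX_BYTES" ]
}

# Local demonstration with a small temporary file.
tmp=$(mktemp)
printf 'server-id=1\n' > "$tmp"
if fits_in_configmap "$tmp"; then
  echo "small enough for a ConfigMap"
fi
rm -f "$tmp"
```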

Creating a ConfigMap, method 1: literal values

[root@k8s-master01 ~]# kubectl create cm --help

[root@k8s-master01 ~]# kubectl create cm --help |grep '\-\-from\-literal=\[]' -A1

--from-literal=[]:

Specify a key and literal value to insert in configmap (i.e. mykey=somevalue)

# View the help

[root@k8s-master01 ~]# kubectl create cm tomcat-config --from-literal=tomcat-port=8080 --from-literal=tomcat-server_name=myapp.tomcat.com

configmap/tomcat-config created

[root@k8s-master01 ~]# kubectl describe cm tomcat-config

Name: tomcat-config

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

tomcat-server_name:

----

myapp.tomcat.com

tomcat-port:

----

8080

BinaryData

====

Events: <none>

# Create a ConfigMap named tomcat-config
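
For comparison, the same ConfigMap can be described declaratively. The manifest below is a sketch of what the two --from-literal arguments amount to; the file name tomcat-config.yaml is an assumption made for this example.

```shell
# Declarative equivalent of the --from-literal command above (hypothetical file name).
cat > tomcat-config.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: tomcat-config
data:
  tomcat-port: "8080"                   # values are strings, so the number is quoted
  tomcat-server_name: myapp.tomcat.com
EOF

cat tomcat-config.yaml
```

It would be applied with kubectl apply -f tomcat-config.yaml. Adding --dry-run=client -o yaml to the kubectl create cm command prints a similar manifest without creating anything.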

Creating a ConfigMap, method 2: from a file

[root@k8s-master01 ~]# kubectl create cm www-nginx --from-file=www=./nginx.conf

configmap/www-nginx created

[root@k8s-master01 ~]# kubectl describe cm www-nginx

Name: www-nginx

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

www:

# This key is the name given before the = sign in --from-file

----

server {

server_name www.nginx.com;

listen 80;

root /home/nginx/www/

}

BinaryData

====

Events: <none>

[root@k8s-master01 ~]# kubectl describe cm www-nginx-1

Name: www-nginx-1

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

nginx.conf:

# If no key is given (for example --from-file=./nginx.conf), the file name is used as the key

----

server {

server_name www.nginx.com;

listen 80;

root /home/nginx/www/

}

BinaryData

====

Events: <none>

Creating a ConfigMap, method 3: from a directory

[root@k8s-master01 ~]# mkdir configmap

[root@k8s-master01 ~]# cd configmap/

[root@k8s-master01 configmap]# mv ../nginx.conf ./

[root@k8s-master01 configmap]# mkdir test-a

[root@k8s-master01 configmap]# cd test-a/

[root@k8s-master01 test-a]# echo server-id=1 > my-server.cnf

[root@k8s-master01 test-a]# echo server-id=2 > my-slave.cnf

[root@k8s-master01 test-a]# kubectl create cm mysql-config --from-file=/root/configmap/test-a/

configmap/mysql-config created

# Create a ConfigMap from a directory

[root@k8s-master01 test-a]# kubectl describe cm mysql-config

Name: mysql-config

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

my-server.cnf:

----

server-id=1

my-slave.cnf:

----

server-id=2

BinaryData

====

Events: <none>
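
The mapping from directory entries to keys can be previewed locally. The sketch below is a simplification, with a hypothetical helper name configmap_keys: it lists one key per regular file and skips subdirectories and symlinks, which mirrors how --from-file treats a directory, but it does not check whether each file name is a valid key.

```shell
# Build a throwaway directory that mimics test-a, plus one subdirectory.
dir=$(mktemp -d)
echo server-id=1 > "$dir/my-server.cnf"
echo server-id=2 > "$dir/my-slave.cnf"
mkdir "$dir/subdir"            # subdirectories are not turned into keys

# Print the keys a directory-based ConfigMap would get: one per regular file.
configmap_keys() {
  for f in "$1"/*; do
    if [ -f "$f" ] && [ ! -L "$f" ]; then
      basename "$f"
    fi
  done
  return 0
}

configmap_keys "$dir"
rm -rf "$dir"
```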

Creating a ConfigMap from a YAML file

[root@k8s-master01 test-a]# cd ..

[root@k8s-master01 configmap]# kubectl explain cm.

# View the help

[root@k8s-master01 configmap]# cat mysql-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql
  labels:
    app: mysql
data:
  master.cnf: |
    [mysqld]
    log-bin
    log_bin_trust_function_creators=1
    lower_case_table_names=1
  slave.cnf: |
    [mysqld]
    super-read-only
    log_bin_trust_function_creators=1

# Multi-line values need the | indicator, which stores the lines as a single string with line breaks preserved

[root@k8s-master01 configmap]# kubectl apply -f mysql-configmap.yaml

configmap/mysql created

[root@k8s-master01 configmap]# kubectl describe cm mysql

Name: mysql

Namespace: default

Labels: app=mysql

Annotations: <none>

Data

====

master.cnf:

----

[mysqld]

log-bin

log_bin_trust_function_creators=1

lower_case_table_names=1

slave.cnf:

----

[mysqld]

super-read-only

log_bin_trust_function_creators=1

BinaryData

====

Events: <none>

[root@k8s-master01 configmap]# kubectl delete -f mysql-configmap.yaml

configmap "mysql" deleted

# Delete the ConfigMap

Note:

Multi-line values must use "|"

Using a ConfigMap, method 1: configMapKeyRef

[root@k8s-master01 configmap]# cat mysql-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql
  labels:
    app: mysql
data:
  log: "1"
  lower: "1"

[root@k8s-master01 configmap]# kubectl apply -f mysql-configmap.yaml

configmap/mysql created

# Create the ConfigMap

[root@k8s-master01 configmap]# cat mysql-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: mysql-pod
spec:
  containers:
  - name: mysql
    image: busybox
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh", "-c", "sleep 3600"]
    env:
    - name: log-bin
      # name of the environment variable
      valueFrom:
        configMapKeyRef:
          name: mysql
          # name of the ConfigMap
          key: log
          # key inside the ConfigMap
    - name: lower
      valueFrom:
        configMapKeyRef:
          name: mysql
          key: lower

[root@k8s-master01 configmap]# kubectl apply -f mysql-pod.yaml

pod/mysql-pod created

[root@k8s-master01 configmap]# kubectl exec -it mysql-pod -c mysql -- /bin/sh

/ # printenv

KUBERNETES_PORT=tcp://10.96.0.1:443

KUBERNETES_SERVICE_PORT=443

HOSTNAME=mysql-pod

SHLVL=1

HOME=/root

NGINX_PORT_80_TCP=tcp://10.100.169.159:80

TERM=xterm

lower=1

KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

NGINX_SERVICE_HOST=10.100.169.159

KUBERNETES_PORT_443_TCP_PORT=443

KUBERNETES_PORT_443_TCP_PROTO=tcp

NGINX_SERVICE_PORT=80

NGINX_PORT=tcp://10.100.169.159:80

log-bin=1

KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443

KUBERNETES_SERVICE_PORT_HTTPS=443

NGINX_SERVICE_PORT_WEB=80

KUBERNETES_SERVICE_HOST=10.96.0.1

PWD=/

NGINX_PORT_80_TCP_ADDR=10.100.169.159

NGINX_PORT_80_TCP_PORT=80

NGINX_PORT_80_TCP_PROTO=tcp

/ # exit

# Check the environment variables

[root@k8s-master01 configmap]# kubectl delete -f mysql-pod.yaml

pod "mysql-pod" deleted

# Clean up the environment
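
One detail of the example above deserves a caution: the variable name log-bin contains a hyphen, so it shows up in printenv but cannot be read with ordinary shell expansion. The sketch below reproduces this locally, without a pod, by starting a process with the same variable name; it assumes the env and printenv utilities are available.

```shell
# Reading the variable by name through printenv works.
env 'log-bin=1' printenv log-bin

# Shell expansion does not: ${log-bin} means "the value of $log, or the word bin if log is unset".
unset log
echo "${log-bin}"
```

Inside the container, printenv log-bin is therefore the reliable way to read the value. Choosing a name such as LOG_BIN avoids the problem altogether.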

Using a ConfigMap, method 2: envFrom

[root@k8s-master01 configmap]# cat mysql-pod-envfrom.yaml

apiVersion: v1
kind: Pod
metadata:
  name: mysql-pod-envfrom
spec:
  containers:
  - name: mysql
    image: busybox
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh", "-c", "sleep 3600"]
    envFrom:
    - configMapRef:
        name: mysql

[root@k8s-master01 configmap]# kubectl apply -f mysql-pod-envfrom.yaml

pod/mysql-pod-envfrom created

[root@k8s-master01 configmap]# kubectl exec -it mysql-pod-envfrom -c mysql -- /bin/sh

/ # printenv

KUBERNETES_SERVICE_PORT=443

KUBERNETES_PORT=tcp://10.96.0.1:443

HOSTNAME=mysql-pod-envfrom

SHLVL=1

HOME=/root

TERM=xterm

lower=1

KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

log=1

KUBERNETES_PORT_443_TCP_PORT=443

KUBERNETES_PORT_443_TCP_PROTO=tcp

KUBERNETES_SERVICE_PORT_HTTPS=443

KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443

KUBERNETES_SERVICE_HOST=10.96.0.1

PWD=/

/ # exit

# Check the environment variables

[root@k8s-master01 configmap]# kubectl delete -f mysql-pod-envfrom.yaml

pod "mysql-pod-envfrom" deleted

# Clean up the environment
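
Because envFrom imports every key under its own name, short keys such as log and lower can collide with other variables. A possible variation, sketched below, uses the optional prefix field of envFrom. The pod name, file name, and the prefix MYSQL_ are assumptions made for this example.

```shell
# Variation of mysql-pod-envfrom.yaml with a prefix (hypothetical names).
cat > mysql-pod-envfrom-prefix.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: mysql-pod-envfrom-prefix
spec:
  containers:
  - name: mysql
    image: busybox
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh", "-c", "sleep 3600"]
    envFrom:
    - prefix: MYSQL_        # keys log and lower become MYSQL_log and MYSQL_lower
      configMapRef:
        name: mysql
EOF

cat mysql-pod-envfrom-prefix.yaml
```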

Using a ConfigMap, method 3: volume

[root@k8s-master01 configmap]# cat mysql-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql
  labels:
    app: mysql
data:
  log: "1"
  lower: "1"
  my.cnf: |
    [mysqld]
    Welcome=yuhang

[root@k8s-master01 configmap]# kubectl apply -f mysql-configmap.yaml

configmap/mysql configured

# Update the ConfigMap

[root@k8s-master01 configmap]# cat mysql-pod-volume.yaml

apiVersion: v1
kind: Pod
metadata:
  name: mysql-pod-volume
spec:
  containers:
  - name: mysql
    image: busybox
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh", "-c", "sleep 3600"]
    volumeMounts:
    - name: mysql-config
      mountPath: /tmp/config
  volumes:
  - name: mysql-config
    configMap:
      name: mysql

[root@k8s-master01 configmap]# kubectl apply -f mysql-pod-volume.yaml

pod/mysql-pod-volume created

# Create the pod

[root@k8s-master01 configmap]# kubectl exec -it mysql-pod-volume -c mysql -- /bin/sh

/ # cd /tmp/config/

/tmp/config # ls

log lower my.cnf

/tmp/config # cat log

1/tmp/config # cat lower

1/tmp/config # cat my.cnf

[mysqld]

Welcome=yuhang

/tmp/config # exit

# Check the contents of the files in the mount directory
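
The pod above mounts every key of the ConfigMap as a file. When only some keys are wanted, the items list of the configMap volume can select them and choose the file path. The manifest below is a sketch of that variation; the pod name, file name, and target path are assumptions made for this example.

```shell
# Variation of mysql-pod-volume.yaml that mounts only my.cnf (hypothetical names).
cat > mysql-pod-volume-items.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: mysql-pod-volume-items
spec:
  containers:
  - name: mysql
    image: busybox
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh", "-c", "sleep 3600"]
    volumeMounts:
    - name: mysql-config
      mountPath: /tmp/config
      readOnly: true
  volumes:
  - name: mysql-config
    configMap:
      name: mysql
      items:
      - key: my.cnf          # only this key is mounted
        path: mysql/my.cnf   # the file appears at /tmp/config/mysql/my.cnf
EOF

cat mysql-pod-volume-items.yaml
```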

ConfigMap hot reload: automatic configuration updates

[root@k8s-master01 configmap]# kubectl edit cm mysql

data:
  log: "2"

# Change the value of log to 2

[root@k8s-master01 configmap]# kubectl exec -it mysql-pod-volume -c mysql -- /bin/sh

/ # cd /tmp/config/

/tmp/config # cat log

2/tmp/config # exit

# If the value has not changed yet, the kubelet is probably still syncing the volume; wait a moment and check again

[root@k8s-master01 configmap]# kubectl delete -f mysql-pod-volume.yaml

pod "mysql-pod-volume" deleted

# Clean up the environment
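
Since the mounted file is updated with a delay, checking by hand can be replaced with a small polling loop. The sketch below is an illustration under stated assumptions: the helper name wait_for_value is hypothetical, and the demonstration uses a temporary file in place of /tmp/config/log so that it runs without a cluster.

```shell
# Poll a file until it contains the expected value or the timeout (in seconds) expires.
wait_for_value() {
  file=$1; expected=$2; timeout=$3
  elapsed=0
  while [ "$elapsed" -lt "$timeout" ]; do
    if [ "$(cat "$file" 2>/dev/null)" = "$expected" ]; then
      echo "updated after ${elapsed}s"
      return 0
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
  echo "timed out after ${timeout}s"
  return 1
}

# Local demonstration: a background job changes the file after two seconds.
f=$(mktemp)
printf '1' > "$f"
( sleep 2; printf '2' > "$f" ) &
wait_for_value "$f" 2 10
wait
rm -f "$f"
```

Inside the pod the call would look like wait_for_value /tmp/config/log 2 120. Only volume mounts are refreshed this way; environment variables injected through configMapKeyRef or envFrom keep their original values until the pod is recreated.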
