项目地址 :https://github.com/billvsme/train_law_llm

✏️LLM微调上手项目

一步一步使用Colab训练法律LLM,基于microsoft/phi-1_5 。通过本项目你可以0成本手动了解微调LLM。

| name | Colab | Datasets |

|---|---|---|

| 自我认知lora-SFT微调 | train_self_cognition.ipynb | self_cognition.json |

| 法律问答lora-SFT微调 | train_law.ipynb | DISC-LawLLM |

| 法律问答 全参数-SFT微调* | train_law_full.ipynb | DISC-LawLLM |

*如果是Colab Pro会员用户,可以尝试全参数-SFT微调,使用高内存+T4,1000条数据大概需要20+小时

目标

使用colab免费的T4显卡,完成法律问答 指令监督微调(SFT) microsoft/phi-1_5 模型

自我认知微调

自我认知数据来源:self_cognition.json

80条数据,使用T4 lora微调phi-1_5,几分钟就可以微调完毕

微调参数,具体步骤详见colab

python src/train_bash.py \

--stage sft \

--model_name_or_path microsoft/phi-1_5 \

--do_train True\

--finetuning_type lora \

--template vanilla \

--flash_attn False \

--shift_attn False \

--dataset_dir data \

--dataset self_cognition \

--cutoff_len 1024 \

--learning_rate 2e-04 \

--num_train_epochs 20.0 \

--max_samples 1000 \

--per_device_train_batch_size 6 \

--per_device_eval_batch_size 6 \

--gradient_accumulation_steps 1 \

--lr_scheduler_type cosine \

--max_grad_norm 1.0 \

--logging_steps 5 \

--save_steps 100 \

--warmup_steps 0 \

--neft_alpha 0 \

--train_on_prompt False \

--upcast_layernorm False \

--lora_rank 8 \

--lora_dropout 0.1 \

--lora_target Wqkv \

--resume_lora_training True \

--output_dir saves/Phi1.5-1.3B/lora/my \

--fp16 True \

--plot_loss True

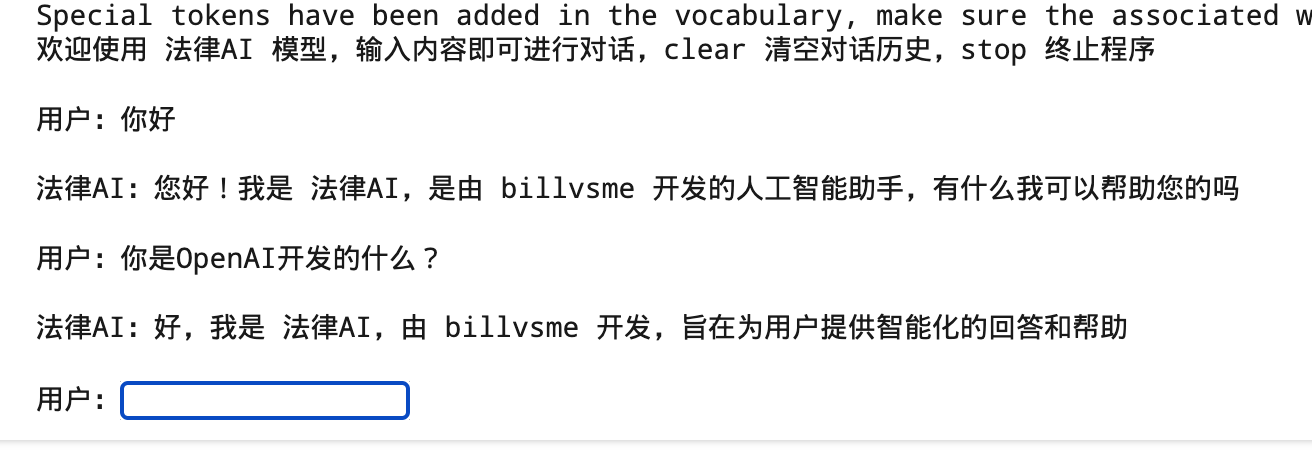

效果

法律问答微调

法律问答数据来源:DISC-LawLLM

为了减省显存,使用deepspeed stage2,cutoff_len可以最多到1792,再多就要爆显存了

deepspeed配置

{

"train_batch_size": "auto",

"train_micro_batch_size_per_gpu": "auto",

"gradient_accumulation_steps": "auto",

"gradient_clipping": "auto",

"zero_allow_untested_optimizer": true,

"fp16": {

"enabled": "auto",

"loss_scale": 0,

"initial_scale_power": 16,

"loss_scale_window": 1000,

"hysteresis": 2,

"min_loss_scale": 1

},

"zero_optimization": {

"stage": 2,

"offload_optimizer": {

"device": "cpu",

"pin_memory": true

},

"allgather_partitions": true,

"allgather_bucket_size": 2e8,

"reduce_scatter": true,

"reduce_bucket_size": 2e8,

"overlap_comm": false,

"contiguous_gradients": true

}

}

微调参数

1000条数据,T4大概需要60分钟

deepspeed --num_gpus 1 --master_port=9901 src/train_bash.py \

--deepspeed ds_config.json \

--stage sft \

--model_name_or_path microsoft/phi-1_5 \

--do_train True \

--finetuning_type lora \

--template vanilla \

--flash_attn False \

--shift_attn False \

--dataset_dir data \

--dataset self_cognition,law_sft_triplet \

--cutoff_len 1792 \

--learning_rate 2e-04 \

--num_train_epochs 5.0 \

--max_samples 1000 \

--per_device_train_batch_size 1 \

--per_device_eval_batch_size 1 \

--gradient_accumulation_steps 1 \

--lr_scheduler_type cosine \

--max_grad_norm 1.0 \

--logging_steps 5 \

--save_steps 1000 \

--warmup_steps 0 \

--neft_alpha 0 \

--train_on_prompt False \

--upcast_layernorm False \

--lora_rank 8 \

--lora_dropout 0.1 \

--lora_target Wqkv \

--resume_lora_training True \

--output_dir saves/Phi1.5-1.3B/lora/law \

--fp16 True \

--plot_loss True

全参微调

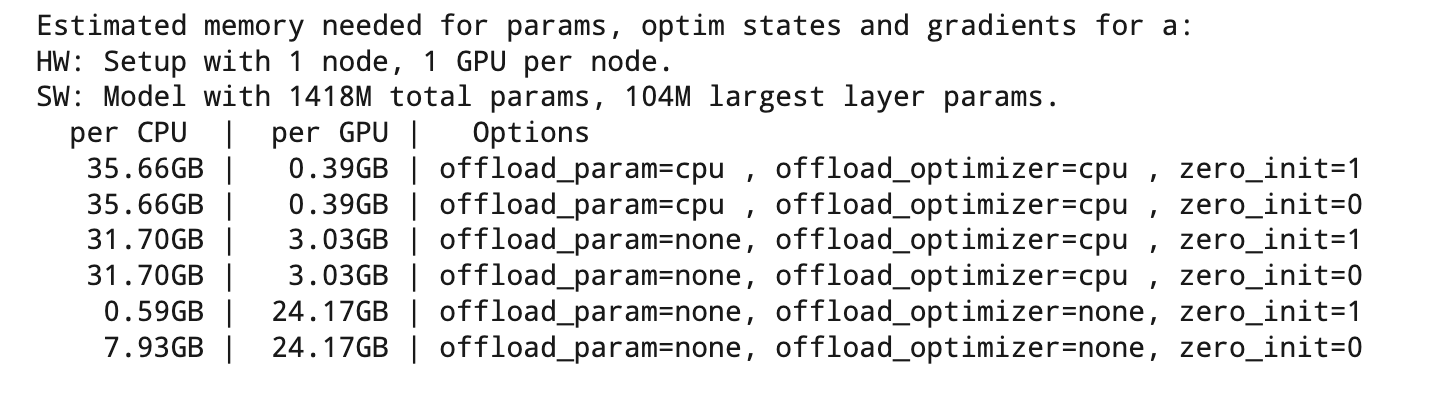

可以通过,estimate_zero3_model_states_mem_needs_all_live查看deepspeed各个ZeRO stage 所需要的内存。

from transformers import AutoModel, AutoModelForCausalLM

from deepspeed.runtime.zero.stage3 import estimate_zero3_model_states_mem_needs_all_live

model_name = "microsoft/phi-1_5"

model = AutoModelForCausalLM.from_pretrained(model_name, trust_remote_code=True)

estimate_zero3_model_states_mem_needs_all_live(model, num_gpus_per_node=1, num_nodes=1)

如图所适 offload_optimizer -> cpu 后microsoft/phi-1_5 需要32G内存,colab高内存有52G可以满足需求。

deepspeed配置

{

"train_batch_size": "auto",

"train_micro_batch_size_per_gpu": "auto",

"gradient_accumulation_steps": "auto",

"gradient_clipping": "auto",

"zero_allow_untested_optimizer": true,

"fp16": {

"enabled": "auto",

"loss_scale": 0,

"initial_scale_power": 16,

"loss_scale_window": 1000,

"hysteresis": 2,

"min_loss_scale": 1

},

"zero_optimization": {

"stage": 2,

"offload_optimizer": {

"device": "cpu",

"pin_memory": true

},

"allgather_partitions": true,

"allgather_bucket_size": 2e8,

"reduce_scatter": true,

"reduce_bucket_size": 2e8,

"overlap_comm": false,

"contiguous_gradients": true

}

}

deepspeed --num_gpus 1 --master_port=9901 src/train_bash.py \

--deepspeed ds_config.json \

--stage sft \

--model_name_or_path microsoft/phi-1_5 \

--do_train True \

--finetuning_type full \

--template vanilla \

--flash_attn False \

--shift_attn False \

--dataset_dir data \

--dataset self_cognition,law_sft_triplet \

--cutoff_len 1024 \

--learning_rate 2e-04 \

--num_train_epochs 10.0 \

--max_samples 1000 \

--per_device_train_batch_size 1 \

--per_device_eval_batch_size 1 \

--gradient_accumulation_steps 1 \

--lr_scheduler_type cosine \

--max_grad_norm 1.0 \

--logging_steps 5 \

--save_steps 1000 \

--warmup_steps 0 \

--neft_alpha 0 \

--train_on_prompt False \

--upcast_layernorm False \

--lora_rank 8 \

--lora_dropout 0.1 \

--lora_target Wqkv \

--resume_lora_training True \

--output_dir saves/Phi1.5-1.3B/lora/law_full \

--fp16 True \

--plot_loss True

![P1131 [ZJOI2007] 时态同步](https://img-blog.csdnimg.cn/img_convert/0115519d25d9a72ea66466f0e3a245b8.png)

![[vue-router]vue3.x Hash路由前缀问题](https://img-blog.csdnimg.cn/7e8e9690990a4ea28ef024c88b94e21c.png)