
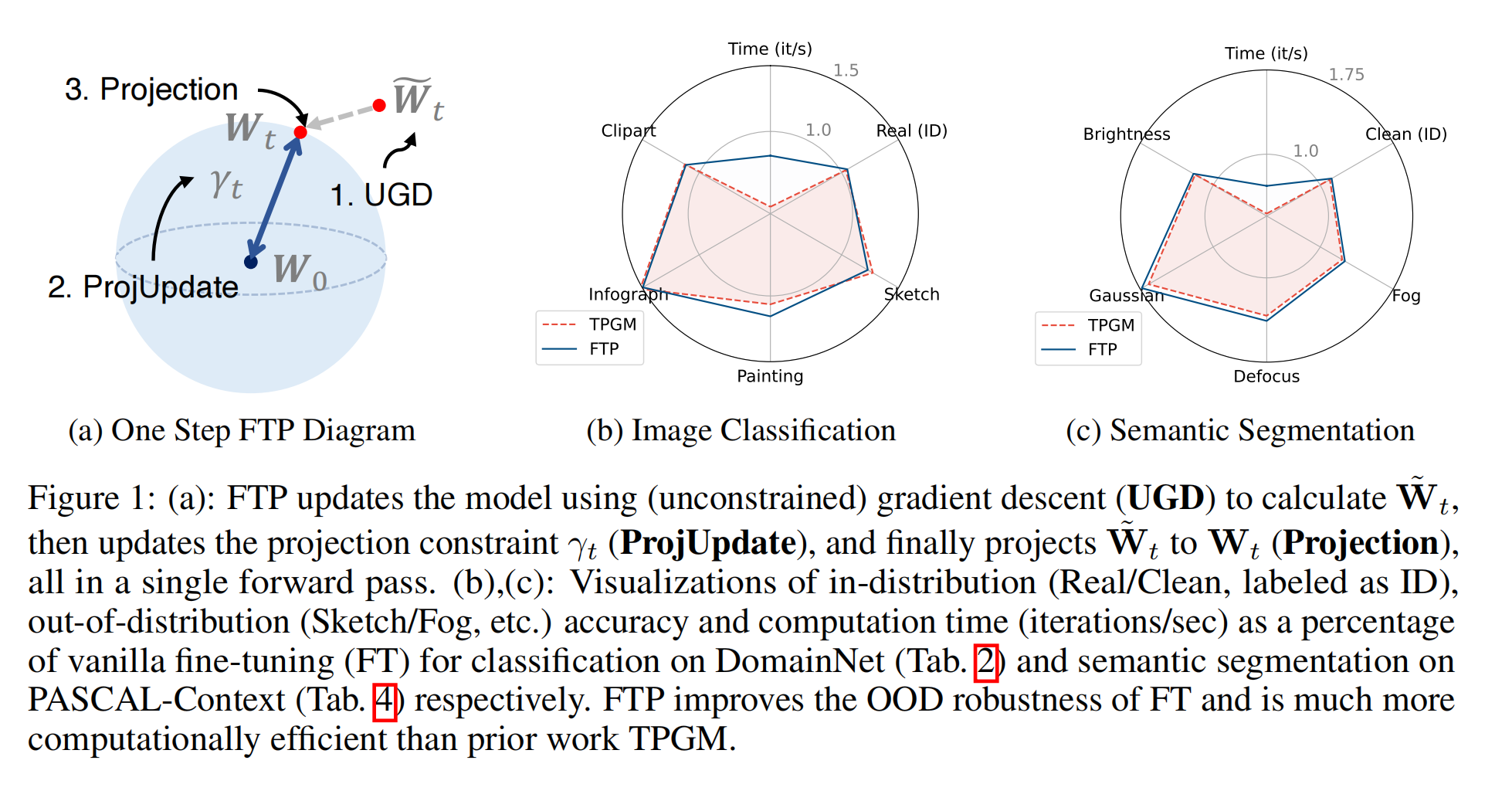

1. [Backbone Architecture] (NeurIPS 2023) Fast Trainable Projection for Robust Fine-Tuning

- Paper: https://arxiv.org/pdf/2310.19182
- Code: GitHub - GT-RIPL/FTP: This repo hosts the code for the Fast Trainable Projection (FTP) project.

2. [Backbone Architecture: Transformer] TransXNet: Learning Both Global and Local Dynamics with a Dual Dynamic Token Mixer for Visual Recognition

- Paper: https://arxiv.org/pdf/2310.19380
- Code (coming soon): GitHub - LMMMEng/TransXNet

3. [Image Classification] (NeurIPS 2023) Analyzing Vision Transformers for Image Classification in Class Embedding Space

- Paper: https://arxiv.org/pdf/2310.18969
- Code: GitHub - martinagvilas/vit-cls_emb: Accompanying code for "Analyzing Vision Tranformers in Class Embedding Space" (NeurIPS '23)

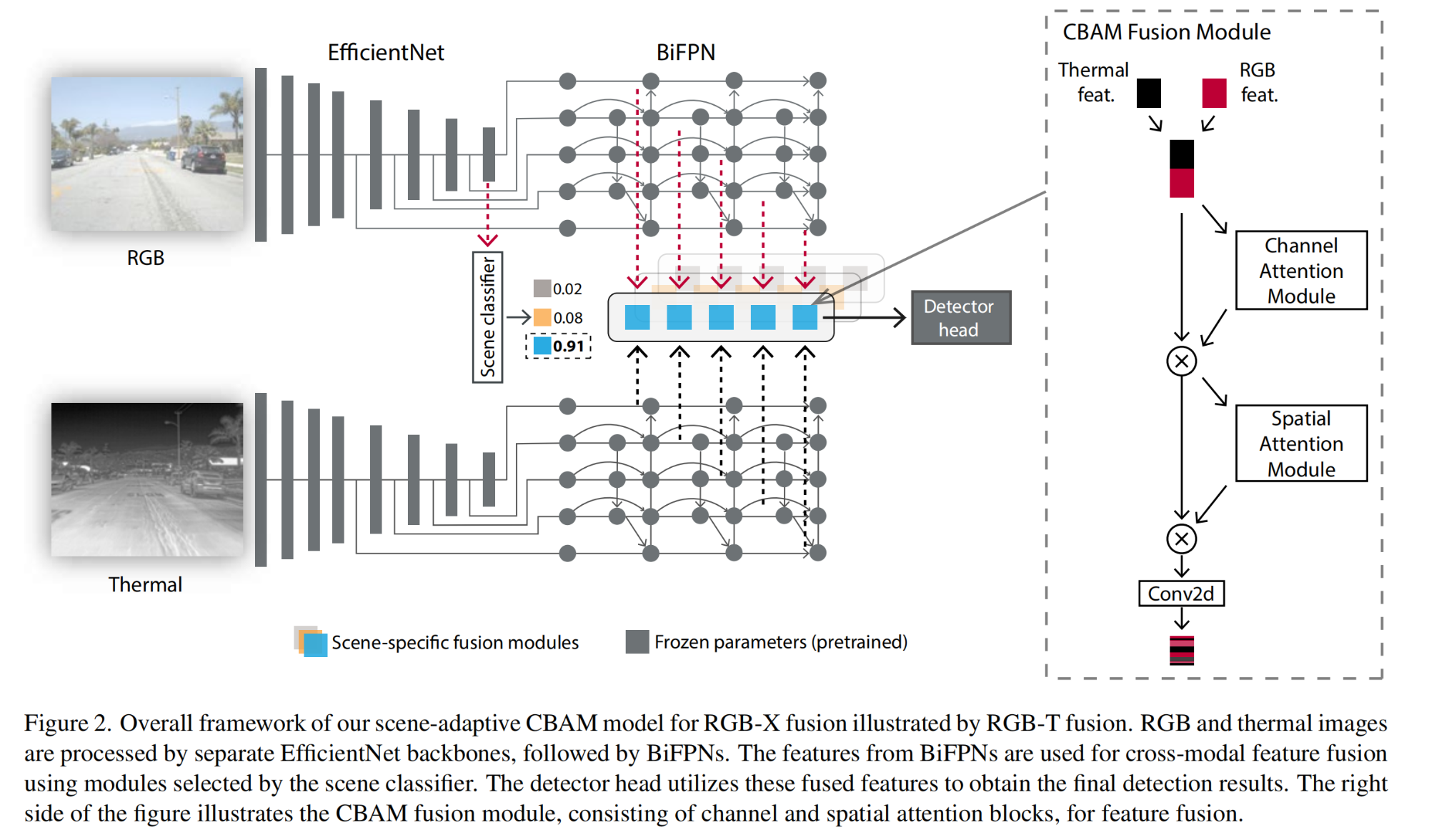

4. [Object Detection] RGB-X Object Detection via Scene-Specific Fusion Modules

- Paper: https://arxiv.org/pdf/2310.19372
- Code: GitHub - dsriaditya999/RGBXFusion

5. [Object Detection] A High-Resolution Dataset for Instance Detection with Multi-View Instance Capture

- Paper: https://arxiv.org/pdf/2310.19257
- Code: GitHub - insdet/instance-detection

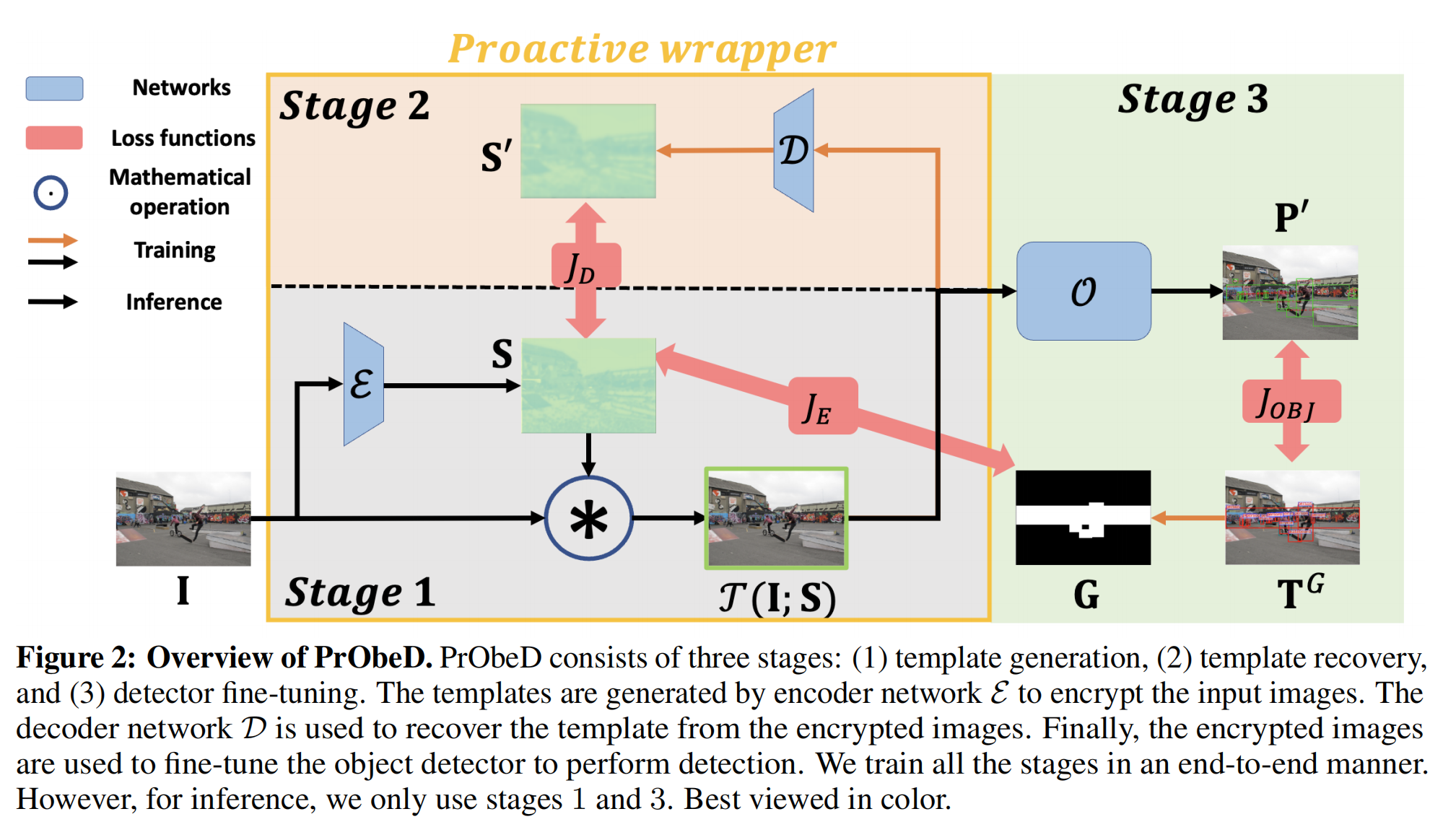

6. [Object Detection] PrObeD: Proactive Object Detection Wrapper

- Paper: https://arxiv.org/pdf/2310.18788
- Code (coming soon): GitHub - vishal3477/Proactive-Object-Detection

7. [Anomaly Detection] Myriad: Large Multimodal Model by Applying Vision Experts for Industrial Anomaly Detection

- Paper: https://arxiv.org/pdf/2310.19070
- Code (coming soon): GitHub - tzjtatata/Myriad: Open-sourced codes, IAD vision-language datasets and pre-trained checkpoints for Myriad.

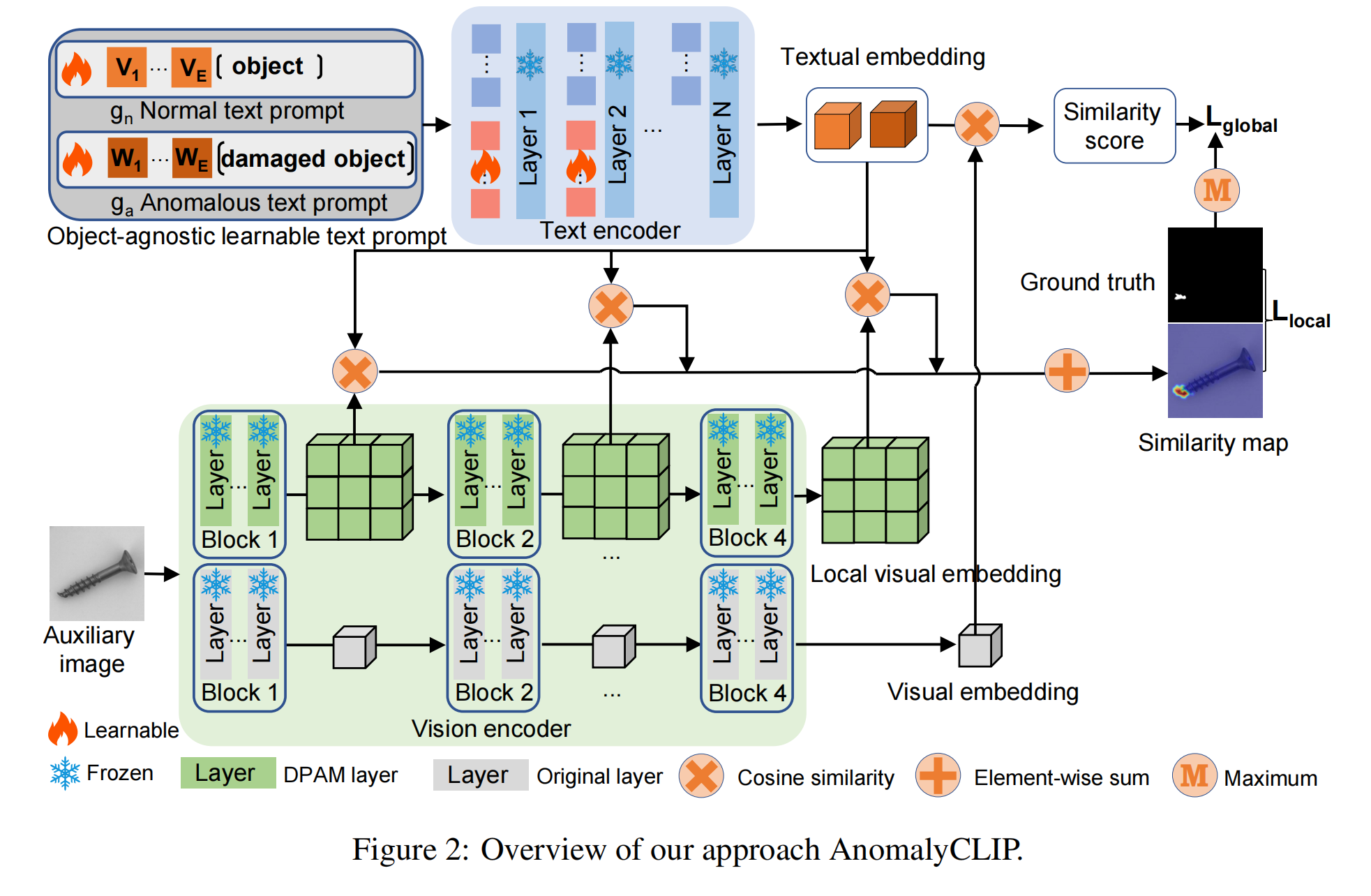

8. [Anomaly Detection] AnomalyCLIP: Object-agnostic Prompt Learning for Zero-shot Anomaly Detection

- Paper: https://arxiv.org/pdf/2310.18961
- Code (coming soon): GitHub - zqhang/AnomalyCLIP

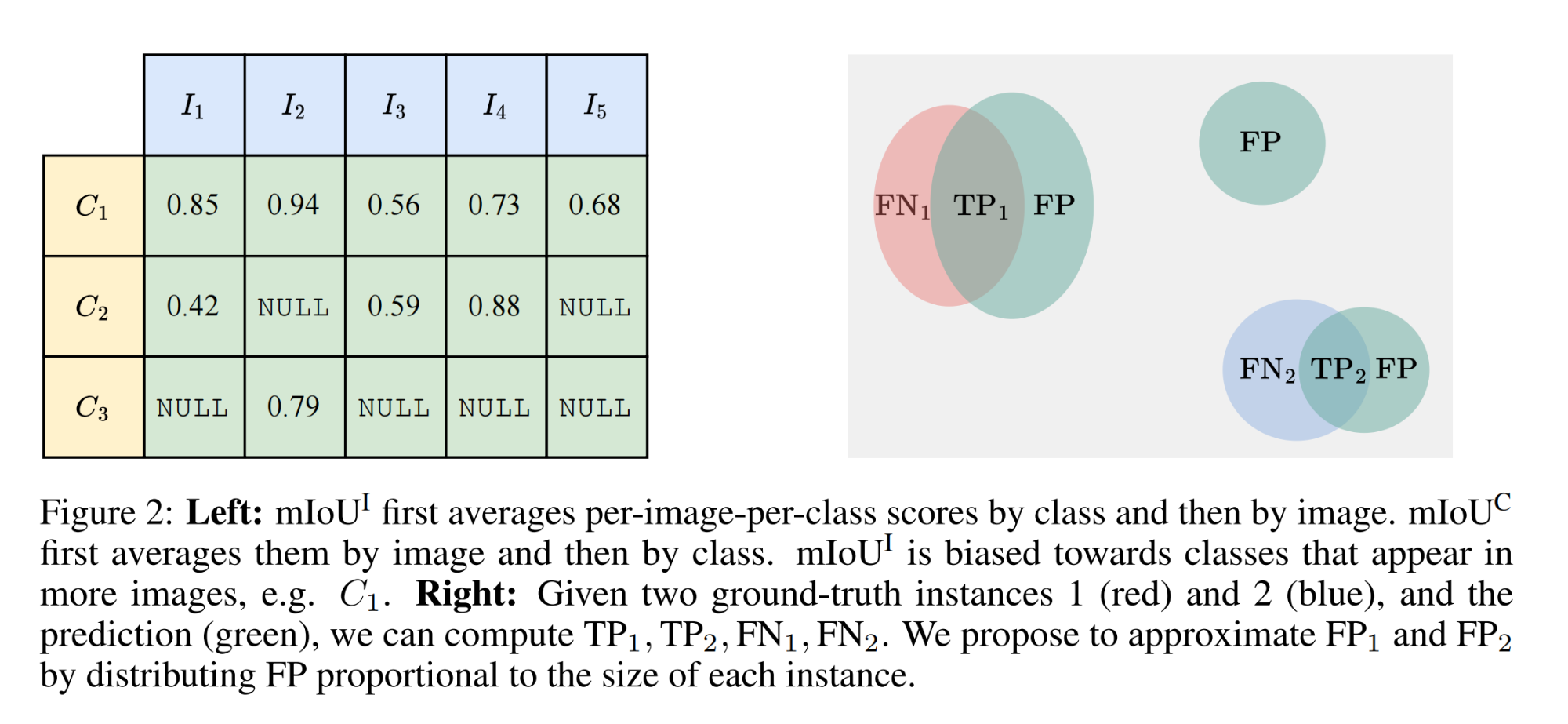

9. [Semantic Segmentation] (NeurIPS 2023) Revisiting Evaluation Metrics for Semantic Segmentation: Optimization and Evaluation of Fine-grained Intersection over Union

- Paper: https://arxiv.org/pdf/2310.19252
- Code: GitHub - zifuwanggg/JDTLosses: Optimization with JDTLoss and Evaluation with Fine-grained mIoUs for Semantic Segmentation

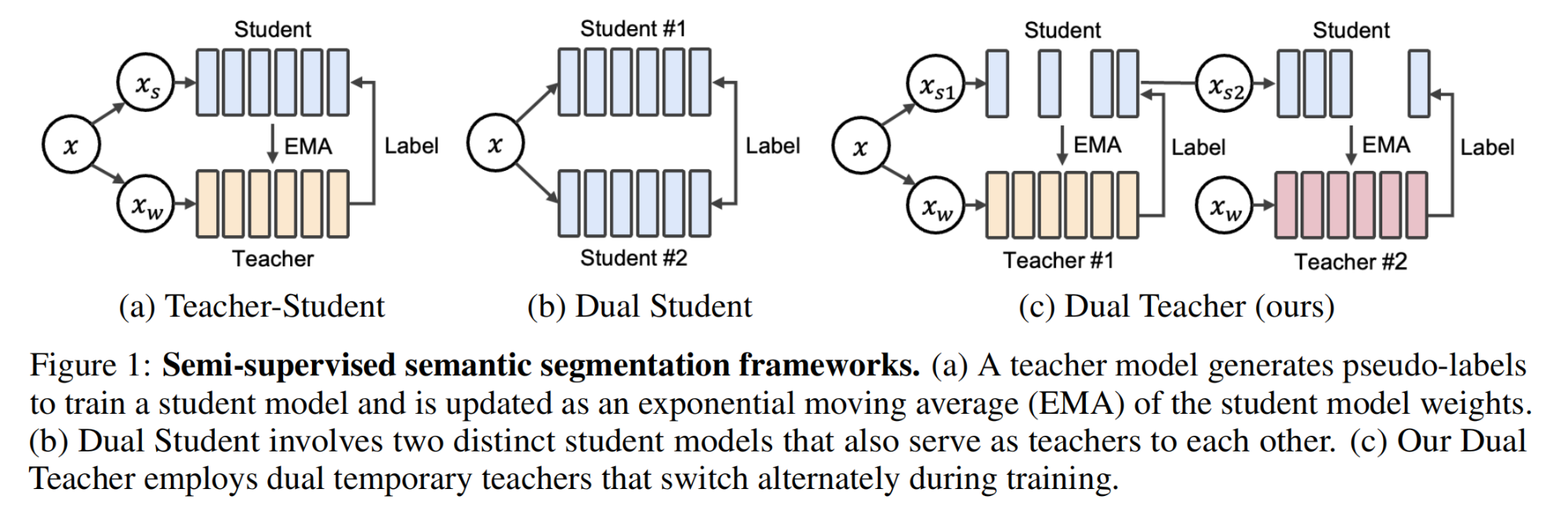

10. [Semantic Segmentation] (NeurIPS 2023) Switching Temporary Teachers for Semi-Supervised Semantic Segmentation

- Paper: https://arxiv.org/pdf/2310.18640
- Code (coming soon): GitHub - naver-ai/dual-teacher: Official code for the NeurIPS 2023 paper "Switching Temporary Teachers for Semi-Supervised Semantic Segmentation"

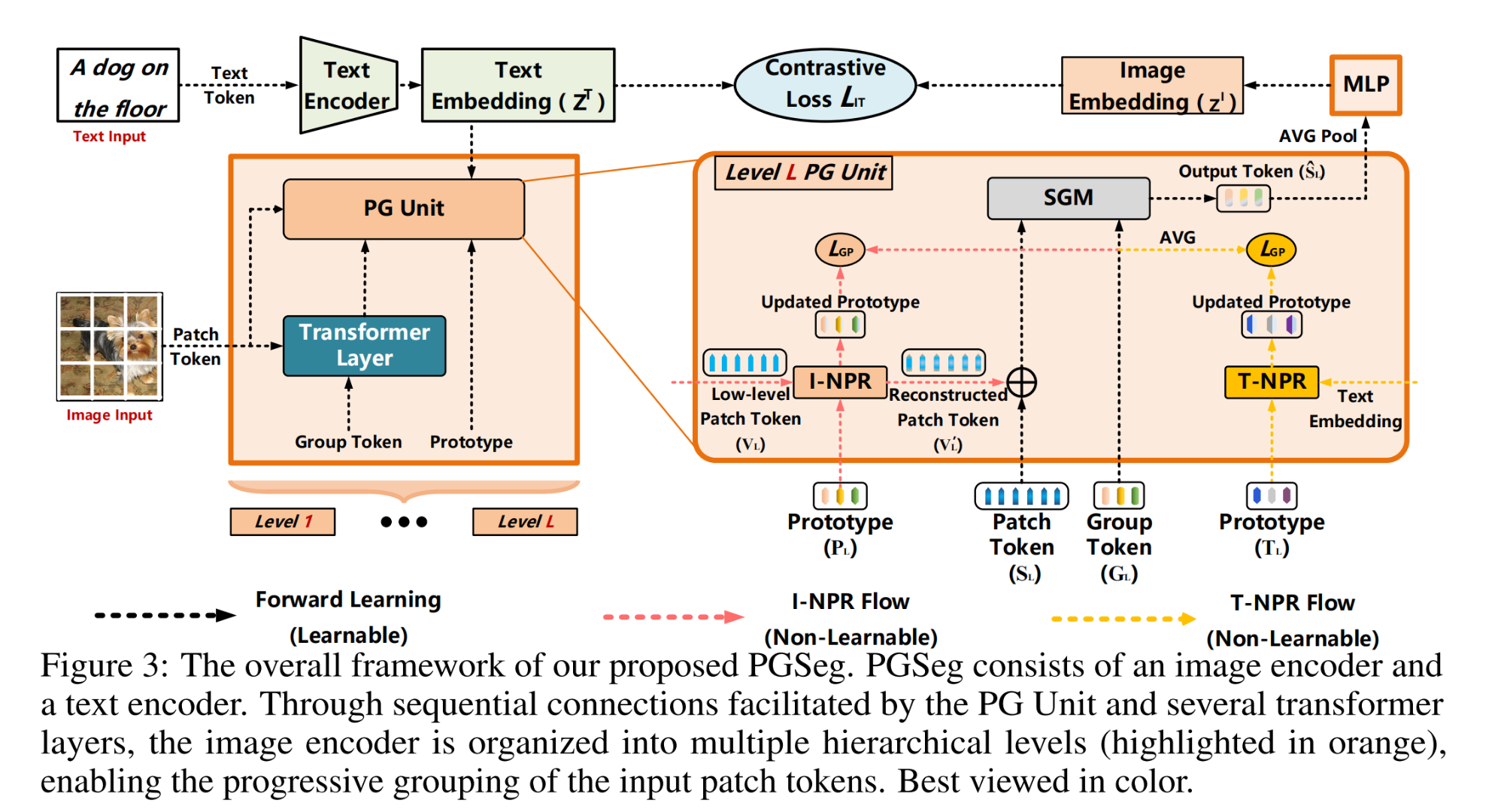

11. [Open-Vocabulary Segmentation] (NeurIPS 2023) Uncovering Prototypical Knowledge for Weakly Open-Vocabulary Semantic Segmentation

- Paper: https://arxiv.org/pdf/2310.19001
- Code (coming soon): GitHub - Ferenas/PGSeg: This is the official code of "Uncovering Prototypical Knowledge for Weakly Open-Vocabulary Semantic Segmentation, Neurips23"

12. [Video Semantic Segmentation] (NeurIPS 2023) Mask Propagation for Efficient Video Semantic Segmentation

- Paper: https://arxiv.org/pdf/2310.18954
- Code (coming soon): GitHub - ziplab/MPVSS

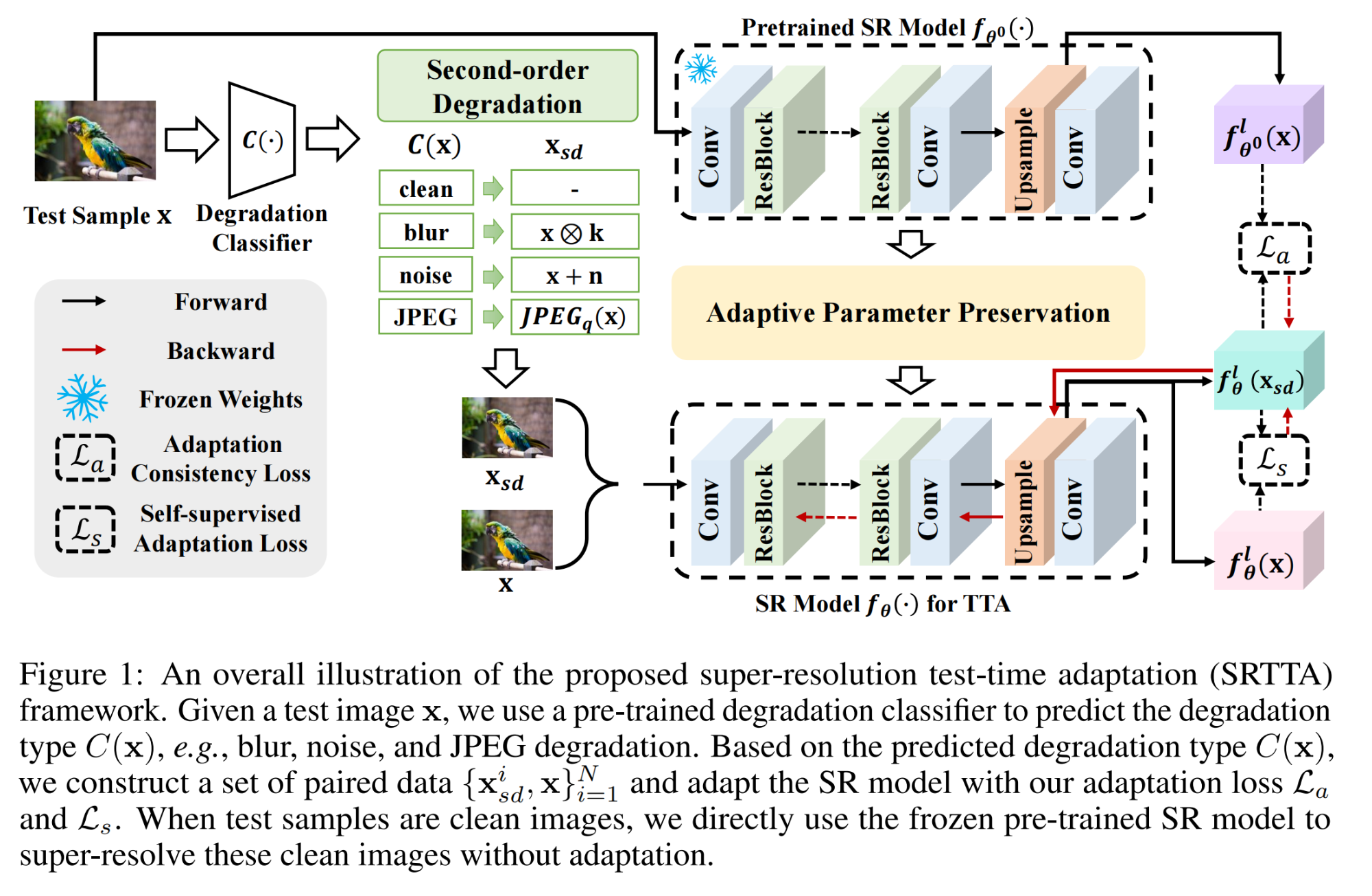

13. [Super-Resolution] (NeurIPS 2023) Efficient Test-Time Adaptation for Super-Resolution with Second-Order Degradation and Reconstruction

- Paper: https://arxiv.org/pdf/2310.19011
- Code (coming soon): GitHub - DengZeshuai/SRTTA

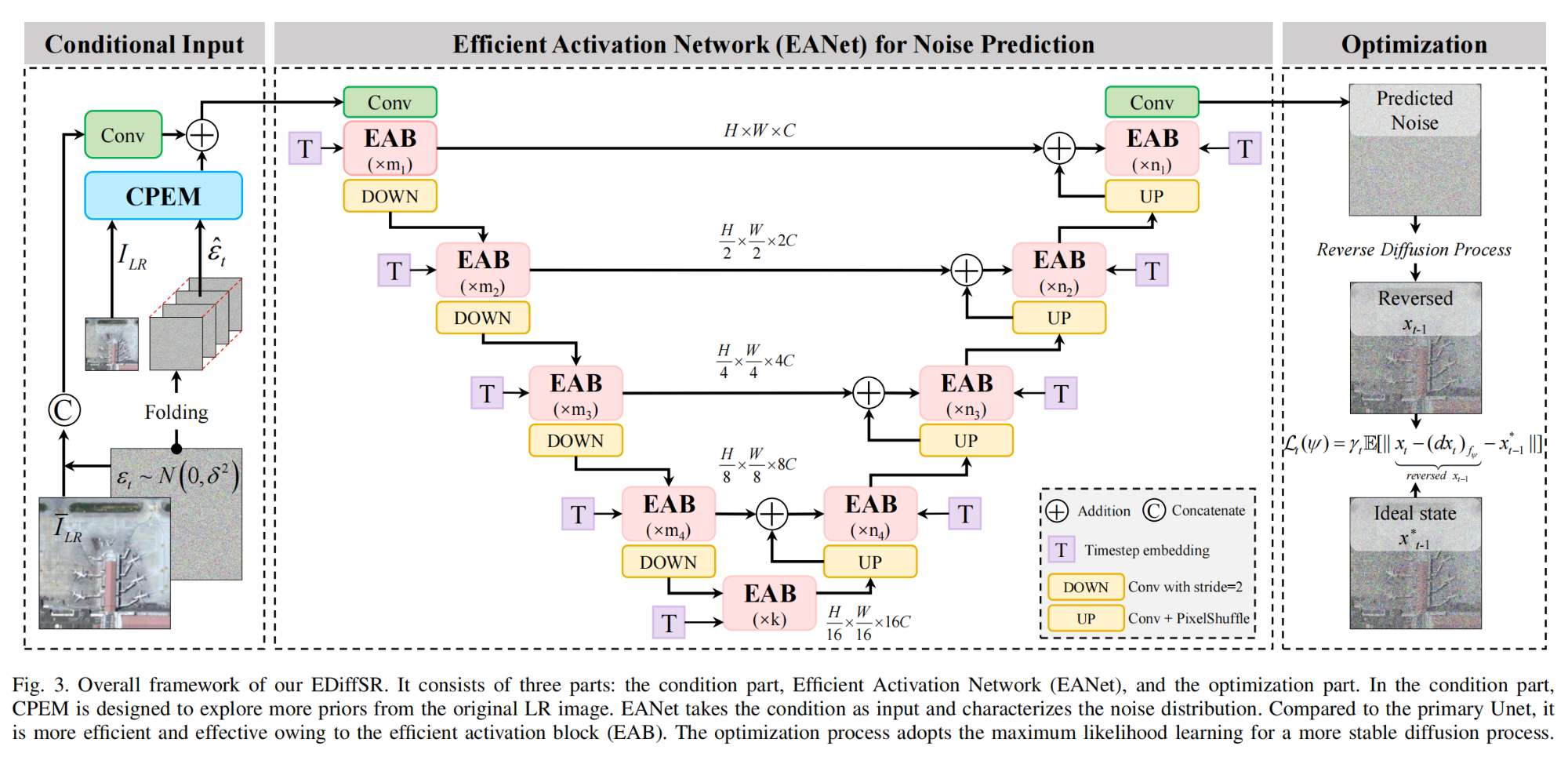

14. [Super-Resolution] EDiffSR: An Efficient Diffusion Probabilistic Model for Remote Sensing Image Super-Resolution

- Paper: https://arxiv.org/pdf/2310.19288
- Code (coming soon): GitHub - XY-boy/EDiffSR: EDiffSR: An Efficient Diffusion Probabilistic Model for Remote Sensing Image Super-Resolution

15. [Domain Generalization] (NeurIPS 2023) SimMMDG: A Simple and Effective Framework for Multi-modal Domain Generalization

- Paper: https://arxiv.org/pdf/2310.19795
- Code (coming soon): GitHub - donghao51/SimMMDG: [NeurIPS 2023] SimMMDG: A Simple and Effective Framework for Multi-modal Domain Generalization

16. [Domain Generalization] (WACV 2024) Domain Generalisation via Risk Distribution Matching

- Paper: https://arxiv.org/pdf/2310.18598
- Code: GitHub - nktoan/risk-distribution-matching: Here is the codebase for our accepted paper in the Research Track of WACV'24 on 'Domain Generalization via Risk Distribution Matching'.

17. [Multimodal] Harvest Video Foundation Models via Efficient Post-Pretraining

- Paper: https://arxiv.org/pdf/2310.19554
- Code: GitHub - OpenGVLab/InternVideo: InternVideo: General Video Foundation Models via Generative and Discriminative Learning (https://arxiv.org/abs/2212.03191)

18. [Multimodal] IterInv: Iterative Inversion for Pixel-Level T2I Models

- Paper: https://arxiv.org/pdf/2310.19540
- Code (coming soon): GitHub - Tchuanm/IterInv: The official implement of "IterInv: Iterative Inversion for Pixel-Level T2I Models".

19. [Multimodal] Generating Context-Aware Natural Answers for Questions in 3D Scenes

- Paper: https://arxiv.org/pdf/2310.19516
- Code (coming soon): GitHub - MunzerDw/Gen3DQA: My guided research project on 3D visual question answering at the lab of Prof. Dr. Niessner at Technical University of Munich.

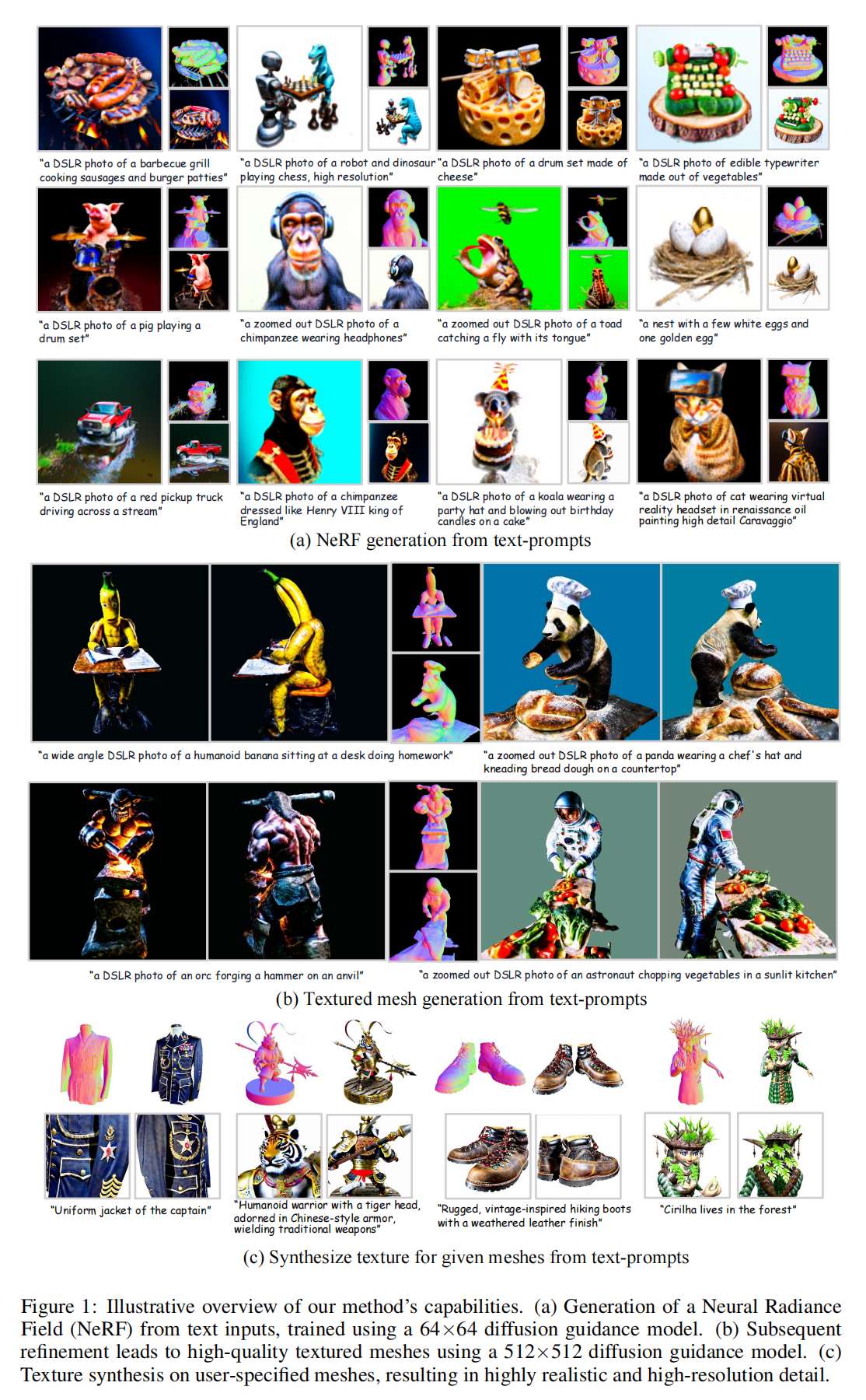

20. [Multimodal] Text-to-3D with Classifier Score Distillation

- Paper: https://arxiv.org/pdf/2310.19415
- Project page: Classifier Score Distillation
- Code: coming soon

21. [Multimodal] Dynamic Task and Weight Prioritization Curriculum Learning for Multimodal Imagery

- Paper: https://arxiv.org/pdf/2310.19109
- Code: GitHub - fualsan/DATWEP: Source code for the Dynamic Task and Weight Prioritization Curriculum Learning for Multimodal Imagery

22. [Multimodal] TESTA: Temporal-Spatial Token Aggregation for Long-form Video-Language Understanding

- Paper: https://arxiv.org/pdf/2310.19060
- Code: GitHub - RenShuhuai-Andy/TESTA: [EMNLP 2023] TESTA: Temporal-Spatial Token Aggregation for Long-form Video-Language Understanding

23. [Multimodal] Customizing 360-Degree Panoramas through Text-to-Image Diffusion Models

- Paper: https://arxiv.org/pdf/2310.18840
- Code: GitHub - littlewhitesea/StitchDiffusion: This is the official implementation of "Customizing 360-Degree Panoramas through Text-to-Image Diffusion Models" (WACV2024)

24. [Multimodal] ROME: Evaluating Pre-trained Vision-Language Models on Reasoning beyond Visual Common Sense

- Paper: https://arxiv.org/pdf/2310.19301
- Code (coming soon): GitHub - K-Square-00/ROME

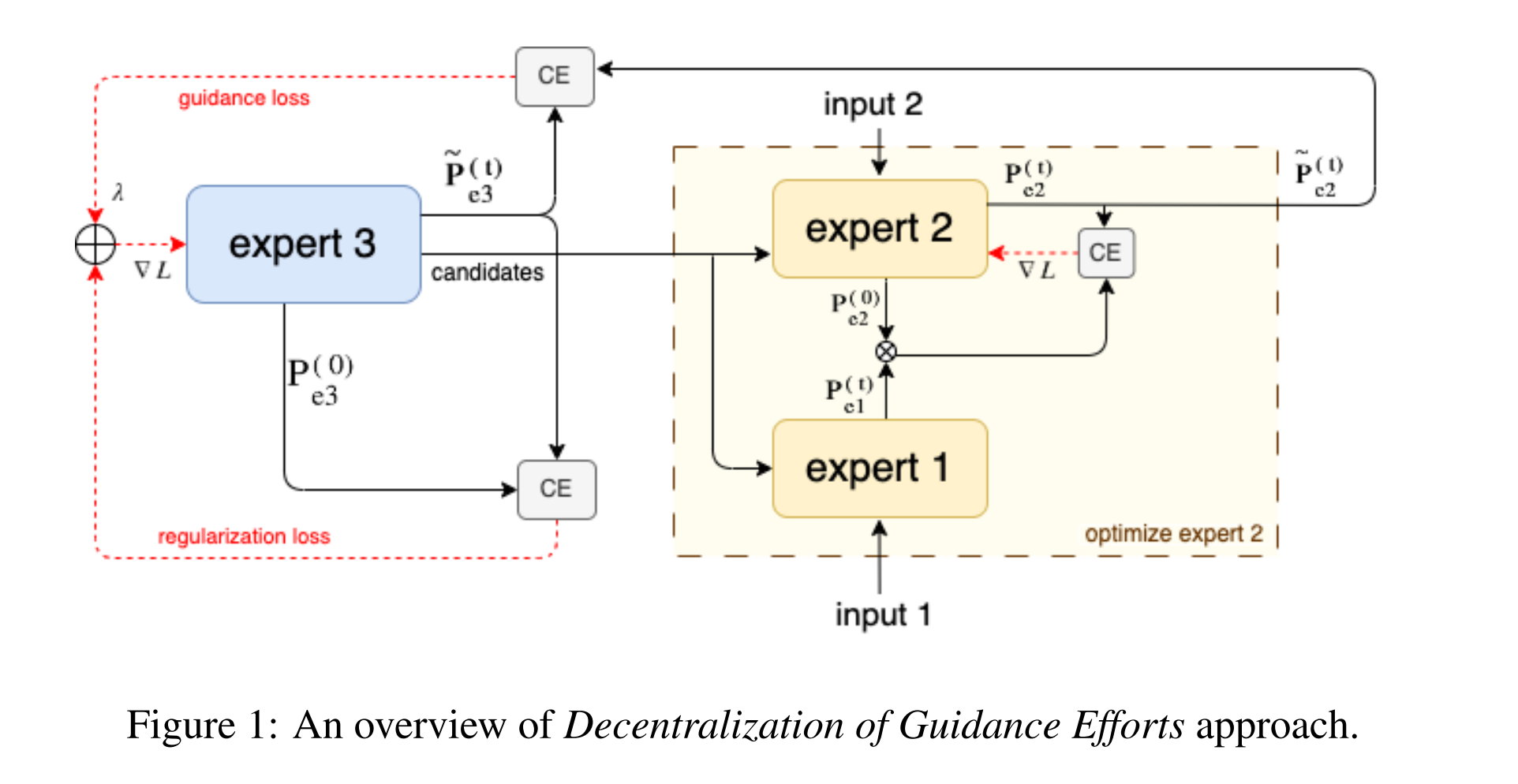

25. [Multimodal] Apollo: Zero-shot MultiModal Reasoning with Multiple Experts

- Paper: https://arxiv.org/pdf/2310.18369
- Code: GitHub - danielabd/Apollo-Cap: Style Controllable Zero-Shot Image-to-Text Generation

26. [Self-Supervised Learning] Local-Global Self-Supervised Visual Representation Learning

- Paper: https://arxiv.org/pdf/2310.18651
- Code: GitHub - alijavidani/Local_Global_Representation_Learning

27. [Semi-Supervised Learning] (NeurIPS 2023) InstanT: Semi-supervised Learning with Instance-dependent Thresholds

- Paper: https://arxiv.org/pdf/2310.18910
- Code (coming soon): tmllab/2023_NeurIPS_InstanT · GitHub

28. [Monocular 3D Object Detection] ODM3D: Alleviating Foreground Sparsity for Enhanced Semi-Supervised Monocular 3D Object Detection

- Paper: https://arxiv.org/pdf/2310.18620
- Code (coming soon): https://github.com/arcaninez/odm3d

29. [Autonomous Driving: Cooperative Perception] Dynamic V2X Autonomous Perception from Road-to-Vehicle Vision

- Paper: https://arxiv.org/pdf/2310.19113
- Code (coming soon): tjy1423317192/AP2VP · GitHub

30. [Autonomous Driving: Depth Estimation] (NeurIPS 2023) Dynamo-Depth: Fixing Unsupervised Depth Estimation for Dynamical Scenes

- Paper: https://arxiv.org/pdf/2310.18887
- Project page: Dynamo-Depth: Fixing Unsupervised Depth Estimation for Dynamical Scenes
- Code (coming soon): GitHub - YihongSun/Dynamo-Depth: [NeurIPS 2023] Fixing Unsupervised Depth Estimation for Dynamical Scenes

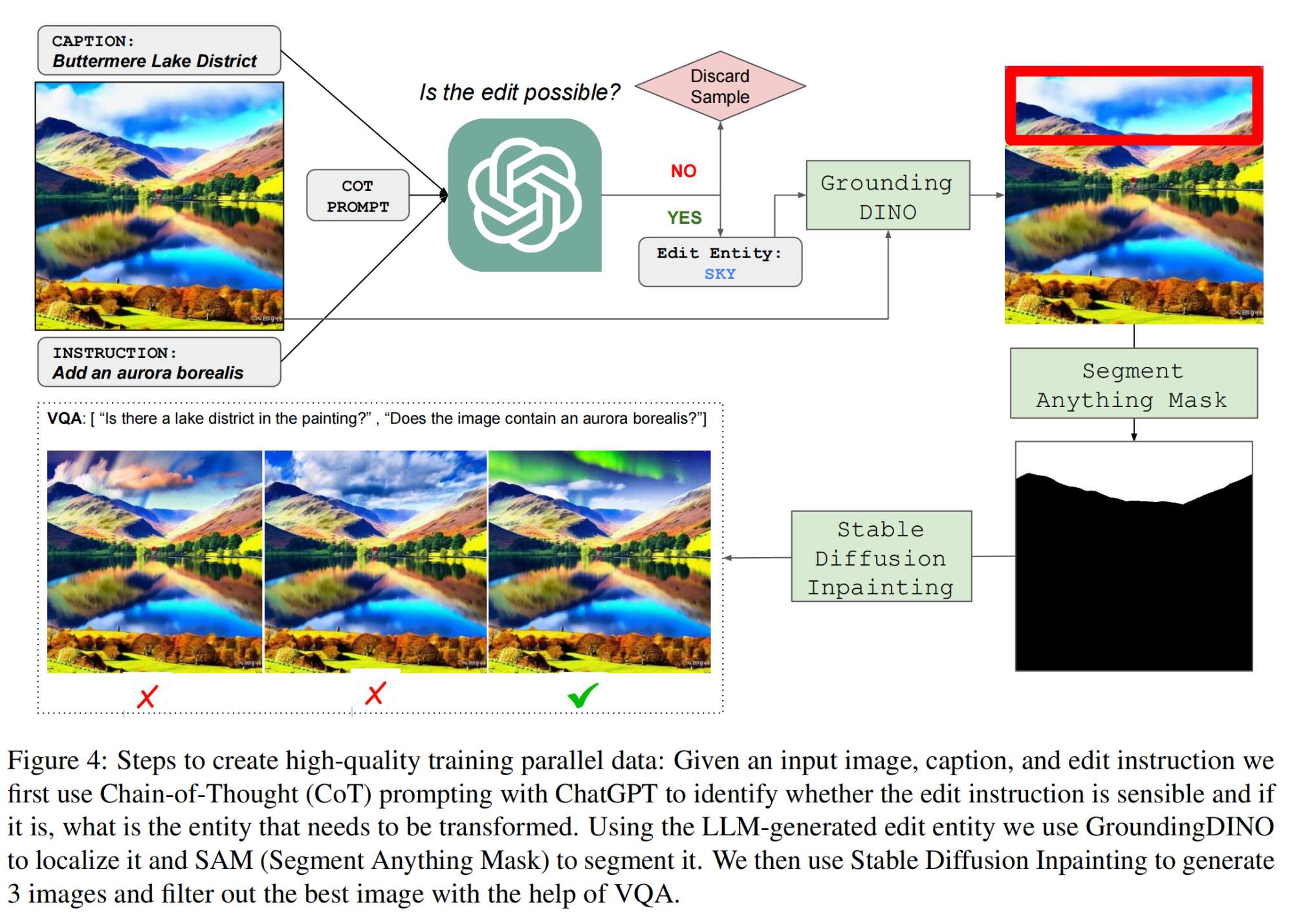

31. [Image Editing] (EMNLP 2023) Learning to Follow Object-Centric Image Editing Instructions Faithfully

- Paper: https://arxiv.org/pdf/2310.19145
- Code: GitHub - tuhinjubcse/FaithfulEdits_EMNLP2023: Code and Data for EMNLP 2023 paper "Learning to Follow Object-Centric Image Editing Instructions Faithfully"

32. [Video Generation] VideoCrafter1: Open Diffusion Models for High-Quality Video Generation

- Paper: https://arxiv.org/pdf/2310.19512
- Project page: VideoCrafter1
- Code: GitHub - AILab-CVC/VideoCrafter: VideoCrafter1: Open Diffusion Models for High-Quality Video Generation

33. [Knowledge Distillation] One-for-All: Bridge the Gap Between Heterogeneous Architectures in Knowledge Distillation

- Paper: https://arxiv.org/pdf/2310.19444
- Code: GitHub - Hao840/OFAKD

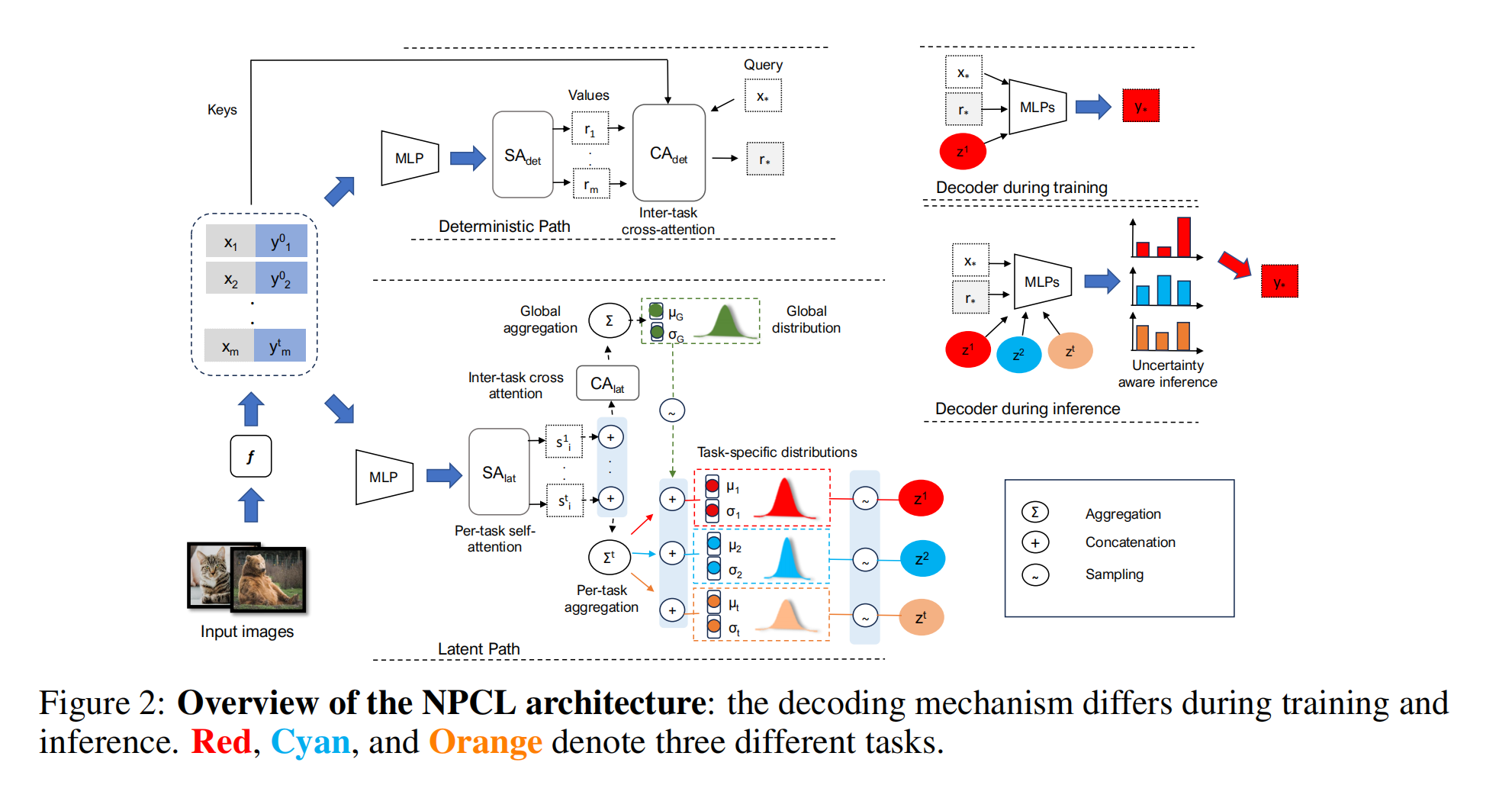

34. [Continual Learning] (NeurIPS 2023) NPCL: Neural Processes for Uncertainty-Aware Continual Learning

- Paper: https://arxiv.org/pdf/2310.19272
- Code (coming soon): GitHub - srvCodes/NPCL
