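Rancher 2.6.2: Single-Node and High-Availability Deployment

Contents

- Rancher 2.6.2: Single-Node and High-Availability Deployment

- Preface

- Single-node deployment

- High-availability deployment

- Kubernetes integration

Preface

1. Server preparation

Single-node deployment: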

| Hostname | IP address | Deployed components | CPU cores | Memory (GB) | Disk (GB) |
|---|---|---|---|---|---|
| rancher-master | 192.168.0.18 | rancher | 2 | 4 | 50 |

High-availability deployment:

| Hostname | IP address | Deployed components | CPU cores | Memory (GB) | Disk (GB) |
|---|---|---|---|---|---|
| rancher-controler01 | 192.168.0.52 | rancher | 4 | 8 | 70 |
| rancher-controler02 | 192.168.0.164 | rancher | 2 | 4 | 50 |
| rancher-controler03 | 192.168.0.22 | rancher | 2 | 4 | 50 |

Kubernetes cluster:

| Hostname | IP address | Deployed components | CPU cores | Memory (GB) | Disk (GB) |
|---|---|---|---|---|---|
| k8s1 | 39.106.48.250 | master01, etcd01, worker01 | 4 | 8 | 70 |
| k8s2 | 123.60.215.71 | master02, etcd02, worker02 | 4 | 8 | 70 |
| k8s3 | 120.46.211.33 | master03, etcd03, worker03 | 4 | 8 | 70 |

2. Pre-installation setup

Run the following initialization script as root on each server.

#!/bin/bash

# Disable SELinux
sed -i '/^SELINUX=/cSELINUX=disabled' /etc/selinux/config
setenforce 0

# Stop and disable the firewall
systemctl stop firewalld
systemctl disable firewalld

# Disable swap
swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# Kernel tuning
# Load br_netfilter first so that the net.bridge.* keys below exist
modprobe br_netfilter
cp /etc/sysctl.conf /etc/sysctl.conf.back
echo "
net.bridge.bridge-nf-call-ip6tables=1
net.bridge.bridge-nf-call-iptables=1
net.ipv4.ip_forward=1
net.ipv4.conf.all.forwarding=1
net.ipv4.neigh.default.gc_thresh1=4096
net.ipv4.neigh.default.gc_thresh2=6144
net.ipv4.neigh.default.gc_thresh3=8192
net.ipv4.neigh.default.gc_interval=60
net.ipv4.neigh.default.gc_stale_time=120
kernel.perf_event_paranoid=-1
#sysctls for k8s node config
net.ipv4.tcp_slow_start_after_idle=0
net.core.rmem_max=16777216
fs.inotify.max_user_watches=524288
kernel.softlockup_all_cpu_backtrace=1
kernel.softlockup_panic=0
kernel.watchdog_thresh=30
fs.file-max=2097152
fs.inotify.max_user_instances=8192
fs.inotify.max_queued_events=16384
vm.max_map_count=262144
fs.may_detach_mounts=1
net.core.netdev_max_backlog=16384
net.ipv4.tcp_wmem=4096 12582912 16777216
net.core.wmem_max=16777216
net.core.somaxconn=32768
net.ipv4.ip_forward=1
net.ipv4.tcp_max_syn_backlog=8096
net.ipv4.tcp_rmem=4096 12582912 16777216
net.ipv6.conf.all.disable_ipv6=1
net.ipv6.conf.default.disable_ipv6=1
net.ipv6.conf.lo.disable_ipv6=1
kernel.yama.ptrace_scope=0
vm.swappiness=0
kernel.core_uses_pid=1
# Do not accept source routing
net.ipv4.conf.default.accept_source_route=0
net.ipv4.conf.all.accept_source_route=0
# Promote secondary addresses when the primary address is removed
net.ipv4.conf.default.promote_secondaries=1
net.ipv4.conf.all.promote_secondaries=1
# Enable hard and soft link protection
fs.protected_hardlinks=1
fs.protected_symlinks=1
# see details in https://help.aliyun.com/knowledge_detail/39428.html
net.ipv4.conf.all.rp_filter=0
net.ipv4.conf.default.rp_filter=0
net.ipv4.conf.default.arp_announce=2
net.ipv4.conf.lo.arp_announce=2
net.ipv4.conf.all.arp_announce=2
# see details in https://help.aliyun.com/knowledge_detail/41334.html
net.ipv4.tcp_max_tw_buckets=5000
net.ipv4.tcp_syncookies=1
net.ipv4.tcp_fin_timeout=30
net.ipv4.tcp_synack_retries=2
kernel.sysrq=1
" >> /etc/sysctl.conf
sysctl -p /etc/sysctl.conf

echo "System initialization complete; a reboot is recommended."
while true
do
    echo -e "\033[31mReboot now? [y/n] \033[0m" && read -r yn
    case "$yn" in
        y | Y)
            echo "Rebooting..."
            reboot
            exit
            ;;
        n | N)
            echo -e "\033[31mExiting, please wait...\033[0m"
            exit
            ;;
        *)
            echo -e "\033[31mPlease enter y or n. \033[0m" ;;
    esac
done

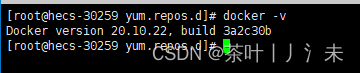
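The initialization script appends its settings to /etc/sysctl.conf with >>, so running it a second time repeats every key, and the list itself already sets net.ipv4.ip_forward twice. This is harmless, but it makes the file harder to audit. As a minimal sketch, a helper along these lines could list the repeated keys; the function name duplicate_sysctl_keys is hypothetical and not part of the original script.

```bash
#!/bin/bash
# Hypothetical helper: print every sysctl key that is assigned more than once
# in the given file. Comment lines and blank lines are ignored.
duplicate_sysctl_keys() {
    # 1. drop comments and empty lines
    # 2. strip leading whitespace and everything from "=" onwards, leaving the key
    # 3. sort and keep only the keys that occur more than once
    grep -Ev '^[[:space:]]*(#|$)' "$1" \
        | sed -E 's/^[[:space:]]+//; s/[[:space:]]*=.*$//' \
        | sort | uniq -d
}
```

It could be called as duplicate_sysctl_keys /etc/sysctl.conf after the script has run; an empty result means no key is repeated.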

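3. Docker preparation

Docker must be installed and running on every server before continuing.

Single-node deployment

1. Deploy Rancher

docker run -d --restart=unless-stopped -p 8080:80 -p 8443:443 --privileged rancher/rancher:v2.6.2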

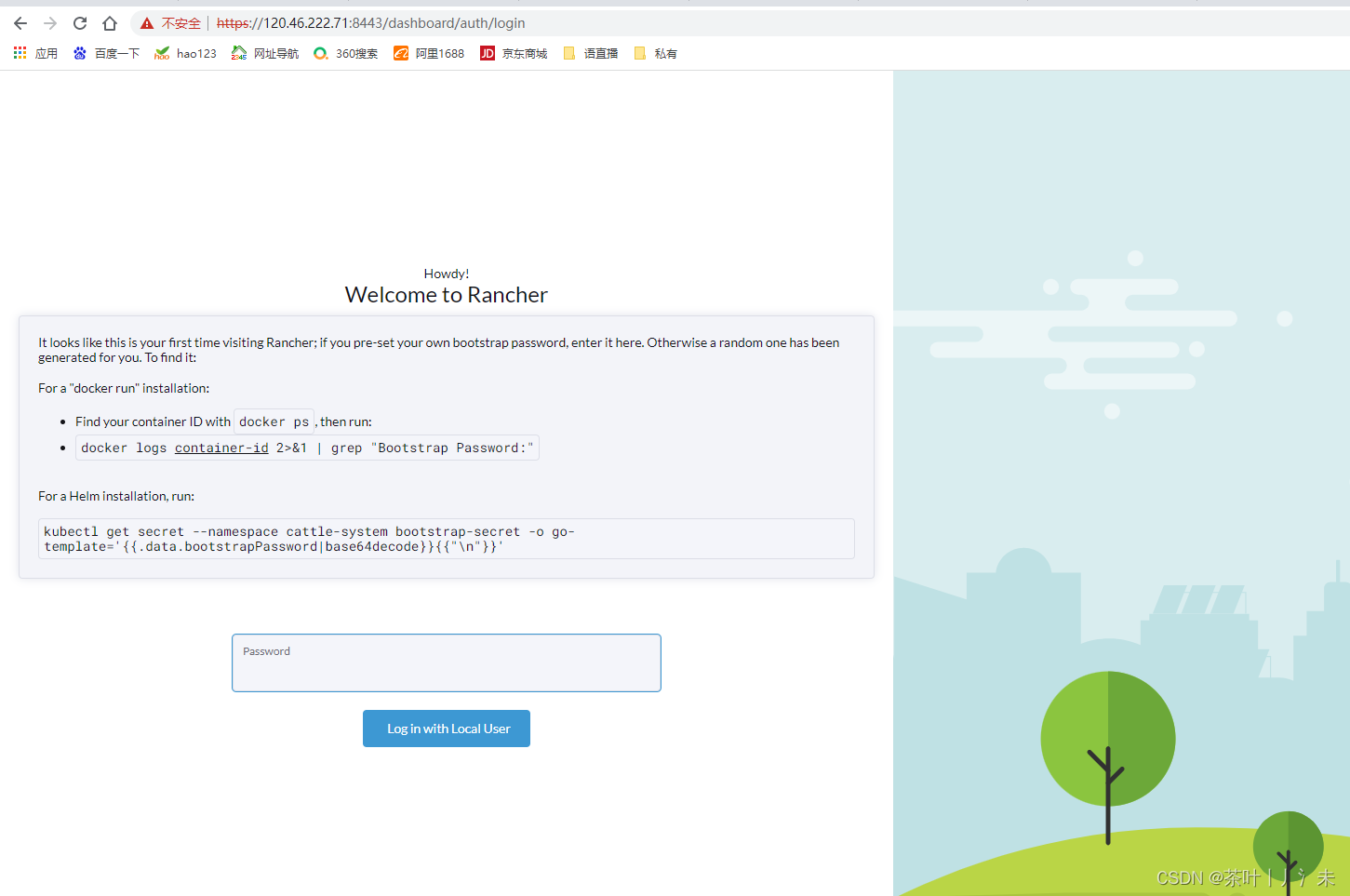

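2. Configure Rancher

Open https://<server-ip>:8443/ in a browser to reach the Rancher management page. The first login asks for the bootstrap password, which can be read from the container log:

# Find the container ID
docker ps
# Read the password (replace container-id with the ID shown by docker ps)
docker logs container-id 2>&1 | grep "Bootstrap Password:"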
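To use the password in a script rather than read it off the screen, the matching log line can be reduced to the password itself. The sketch below assumes the log line has the form "... Bootstrap Password: <password>" with nothing after the password; the function name extract_bootstrap_password is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: read log text on stdin and print only the password
# from the last "Bootstrap Password:" line.
# Assumption: the password is the final field on that line.
extract_bootstrap_password() {
    sed -n 's/^.*Bootstrap Password: //p' | tail -n 1
}
```

A possible invocation is docker logs container-id 2>&1 | extract_bootstrap_password, with container-id replaced as before.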

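High-availability deployment

1. Add the rancher user

Run as root on each node:

# useradd rancher && usermod -aG docker rancher
# passwd rancher

2. Create an SSH key on the host that runs RKE

# ssh-keygen

Distribute the generated public key to each node:

# ssh-copy-id rancher@<node1-public-IP>
# ssh-copy-id rancher@<node2-public-IP>
# ssh-copy-id rancher@<node3-public-IP>

Set the hostname on each node (controler01 is shown here), then start a new shell so that the prompt picks it up:

hostnamectl set-hostname controler01
bash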
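The repeated ssh-copy-id calls in step 2 can be folded into a loop. The following is only a sketch: the function name distribute_key and the DRY_RUN switch are hypothetical, and the host list is whatever addresses your nodes are reachable on.

```bash
#!/bin/bash
# Hypothetical helper: copy the local public key to the same user on several hosts.
# Usage: distribute_key USER HOST...
# When DRY_RUN is set to a non-empty value, the commands are printed instead of run.
distribute_key() {
    local user="$1"
    shift
    local host
    for host in "$@"; do
        # ${DRY_RUN:+echo} expands to "echo" only when DRY_RUN is non-empty
        ${DRY_RUN:+echo} ssh-copy-id "${user}@${host}"
    done
}
```

Setting DRY_RUN=1 before calling distribute_key rancher followed by the node addresses shows the commands that would be executed, which is a cheap way to check the host list first.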

3. Download the RKE tool

wget https://github.com/rancher/rke/releases/download/v1.3.1/rke_linux-amd64
chmod +x rke_linux-amd64
mv rke_linux-amd64 /usr/local/bin/rke
cp /usr/local/bin/rke /usr/bin/
rke -v
rke version

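4. Create the cluster configuration file

mkdir -p /apps/rancher-control
cd /apps/rancher-control
rke config --name cluster.yml

Alternatively, skip the interactive prompts, save the following file as cluster.yml, and run rke up against it directly: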

# If you intended to deploy Kubernetes in an air-gapped environment,
# please consult the documentation on how to configure custom RKE images.
nodes:
- address: 124.70.48.219
  port: "22"
  internal_address: 192.168.0.52
  role:
  - controlplane
  - worker
  - etcd
  hostname_override: rancher-control-01
  user: "rancher"
  docker_socket: /var/run/docker.sock
  ssh_key_path: ~/.ssh/id_rsa
  labels: {}
  taints: []
- address: 124.70.1.215
  port: "22"
  internal_address: 192.168.0.164
  role:
  - controlplane
  - worker
  - etcd
  hostname_override: rancher-control-02
  user: "rancher"
  docker_socket: /var/run/docker.sock
  ssh_key_path: ~/.ssh/id_rsa
  labels: {}
  taints: []
- address: 123.249.78.133
  port: "22"
  internal_address: 192.168.0.22
  role:
  - controlplane
  - worker
  - etcd
  hostname_override: rancher-control-03
  user: "rancher"
  docker_socket: /var/run/docker.sock
  ssh_key_path: ~/.ssh/id_rsa
  labels: {}
  taints: []
services:
  etcd:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    external_urls: []
    ca_cert: ""
    cert: ""
    key: ""
    path: ""
    uid: 0
    gid: 0
    snapshot: null
    retention: ""
    creation: ""
    backup_config: null
  kube-api:
    image: ""
    extra_args:
      # Required later when deploying Kubeflow
      service-account-issuer: "kubernetes.default.svc"
      service-account-signing-key-file: "/etc/kubernetes/ssl/kube-service-account-token-key.pem"
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    service_cluster_ip_range: 192.178.0.0/16
    service_node_port_range: ""
    pod_security_policy: false
    always_pull_images: false
    secrets_encryption_config: null
    audit_log: null
    admission_configuration: null
    event_rate_limit: null
  kube-controller:
    image: ""
    extra_args:
      # Required later when deploying Kubeflow
      cluster-signing-cert-file: "/etc/kubernetes/ssl/kube-ca.pem"
      cluster-signing-key-file: "/etc/kubernetes/ssl/kube-ca-key.pem"
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_cidr: 192.177.0.0/16
    service_cluster_ip_range: 192.178.0.0/16
  scheduler:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
  kubelet:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_domain: rancher-cluster
    infra_container_image: ""
    cluster_dns_server: 192.178.0.10
    fail_swap_on: false
    generate_serving_certificate: false
  kubeproxy:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
network:
  plugin: flannel
  options: {}
  mtu: 0
  node_selector: {}
  update_strategy: null
  tolerations: []
authentication:
  strategy: x509
  sans: []
  webhook: null
addons: ""
addons_include: []
system_images:
  etcd: rancher/mirrored-coreos-etcd:v3.4.16-rancher1
  alpine: rancher/rke-tools:v0.1.78
  nginx_proxy: rancher/rke-tools:v0.1.78
  cert_downloader: rancher/rke-tools:v0.1.78
  kubernetes_services_sidecar: rancher/rke-tools:v0.1.78
  kubedns: rancher/mirrored-k8s-dns-kube-dns:1.17.4
  dnsmasq: rancher/mirrored-k8s-dns-dnsmasq-nanny:1.17.4
  kubedns_sidecar: rancher/mirrored-k8s-dns-sidecar:1.17.4
  kubedns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.3
  coredns: rancher/mirrored-coredns-coredns:1.8.4
  coredns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.3
  nodelocal: rancher/mirrored-k8s-dns-node-cache:1.18.0
  kubernetes: rancher/hyperkube:v1.19.15-rancher1
  flannel: rancher/mirrored-coreos-flannel:v0.14.0
  flannel_cni: rancher/flannel-cni:v0.3.0-rancher6
  calico_node: rancher/mirrored-calico-node:v3.19.2
  calico_cni: rancher/mirrored-calico-cni:v3.19.2
  calico_controllers: rancher/mirrored-calico-kube-controllers:v3.19.2
  calico_ctl: rancher/mirrored-calico-ctl:v3.19.2
  calico_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.19.2
  canal_node: rancher/mirrored-calico-node:v3.19.2
  canal_cni: rancher/mirrored-calico-cni:v3.19.2
  canal_controllers: rancher/mirrored-calico-kube-controllers:v3.19.2
  canal_flannel: rancher/mirrored-coreos-flannel:v0.14.0
  canal_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.19.2
  weave_node: weaveworks/weave-kube:2.8.1
  weave_cni: weaveworks/weave-npc:2.8.1
  pod_infra_container: rancher/mirrored-pause:3.4.1
  ingress: rancher/nginx-ingress-controller:nginx-0.48.1-rancher1
  ingress_backend: rancher/mirrored-nginx-ingress-controller-defaultbackend:1.5-rancher1
  ingress_webhook: rancher/mirrored-jettech-kube-webhook-certgen:v1.5.1
  metrics_server: rancher/mirrored-metrics-server:v0.5.0
  windows_pod_infra_container: rancher/kubelet-pause:v0.1.6
  aci_cni_deploy_container: noiro/cnideploy:5.1.1.0.1ae238a
  aci_host_container: noiro/aci-containers-host:5.1.1.0.1ae238a
  aci_opflex_container: noiro/opflex:5.1.1.0.1ae238a
  aci_mcast_container: noiro/opflex:5.1.1.0.1ae238a
  aci_ovs_container: noiro/openvswitch:5.1.1.0.1ae238a
  aci_controller_container: noiro/aci-containers-controller:5.1.1.0.1ae238a
  aci_gbp_server_container: noiro/gbp-server:5.1.1.0.1ae238a
  aci_opflex_server_container: noiro/opflex-server:5.1.1.0.1ae238a
ssh_key_path: ~/.ssh/id_rsa
ssh_cert_path: ""
ssh_agent_auth: false
authorization:
  mode: rbac
  options: {}
ignore_docker_version: null
enable_cri_dockerd: null
kubernetes_version: ""
private_registries: []
ingress:
  provider: ""
  options: {}
  node_selector: {}
  extra_args: {}
  dns_policy: ""
  extra_envs: []
  extra_volumes: []
  extra_volume_mounts: []
  update_strategy: null
  http_port: 0
  https_port: 0
  network_mode: ""
  tolerations: []
  default_backend: null
  default_http_backend_priority_class_name: ""
  nginx_ingress_controller_priority_class_name: ""
cluster_name: ""
cloud_provider:
  name: ""
prefix_path: ""
win_prefix_path: ""
addon_job_timeout: 0
bastion_host:
  address: ""
  port: ""
  user: ""
  ssh_key: ""
  ssh_key_path: ""
  ssh_cert: ""
  ssh_cert_path: ""
  ignore_proxy_env_vars: false
monitoring:
  provider: ""
  options: {}
  node_selector: {}
  update_strategy: null
  replicas: null
  tolerations: []
  metrics_server_priority_class_name: ""
restore:
  restore: false
  snapshot_name: ""
rotate_encryption_key: false
dns: null

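Remember to replace the IP addresses with those of your own nodes, then bring the cluster up with rke up --config cluster.yml.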
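The three entries under nodes differ only in their public address, internal address, and hostname override, so they can be generated rather than edited by hand. The sketch below prints one entry in the same shape as the file above; the function name render_rke_node is hypothetical, and the fixed values (user rancher, port 22, all three roles) are simply copied from this article's configuration.

```bash
#!/bin/bash
# Hypothetical helper: print one "nodes" entry for cluster.yml.
# $1 = public address, $2 = internal address, $3 = hostname override
render_rke_node() {
    cat <<EOF
- address: $1
  port: "22"
  internal_address: $2
  role:
  - controlplane
  - worker
  - etcd
  hostname_override: $3
  user: "rancher"
  docker_socket: /var/run/docker.sock
  ssh_key_path: ~/.ssh/id_rsa
  labels: {}
  taints: []
EOF
}
```

Calling render_rke_node 124.70.48.219 192.168.0.52 rancher-control-01 reproduces the first node entry; the output of three such calls can be pasted under the nodes: key.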

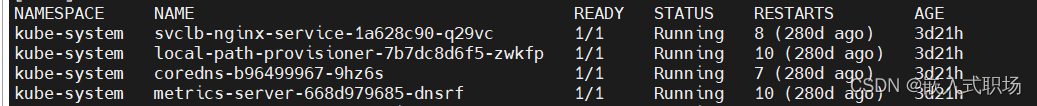

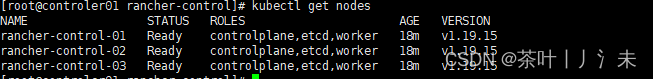

5. Inspect the cluster with kubectl

wget https://storage.googleapis.com/kubernetes-release/release/v1.22.2/bin/linux/amd64/kubectl
chmod +x kubectl
mv kubectl /usr/local/bin/kubectl
cp /usr/local/bin/kubectl /usr/bin/
mkdir -p ~/.kube
cp kube_config_cluster.yml ~/.kube/config

Check the status of the cluster nodes:

kubectl get nodes
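When the node check is part of a script, it helps to reduce the listing to a single number. As a minimal sketch, the helper below reads the output of kubectl get nodes --no-headers on stdin and counts the nodes whose STATUS column does not start with Ready. The function name count_not_ready_nodes is hypothetical, and the column layout (name first, status second) is assumed to be kubectl's default.

```bash
#!/bin/bash
# Hypothetical helper: count nodes that are not Ready.
# Input: lines in the default "kubectl get nodes --no-headers" layout,
#        where field 1 is the node name and field 2 is the status.
count_not_ready_nodes() {
    # A status such as "Ready,SchedulingDisabled" still counts as Ready;
    # "NotReady" and anything else does not. "n + 0" prints 0 when nothing matched.
    awk 'NF >= 2 && $2 !~ /^Ready/ { n++ } END { print n + 0 }'
}
```

For example, kubectl get nodes --no-headers | count_not_ready_nodes should print 0 once all three nodes have joined.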

6. Download the Helm tool

mkdir -p /apps/k8s-cluster
cd /apps/k8s-cluster
wget https://github.com/zyiqian/package/raw/main/helm
chmod +x helm
cp helm /usr/local/bin/
helm version

7. Add the chart repositories

helm repo add aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
helm repo add bitnami https://charts.bitnami.com/bitnami
helm repo add rancher-stable https://releases.rancher.com/server-charts/stable
helm repo update

8. Generate a self-signed certificate

bash key.sh --ssl-domain=test.rancker.com --ssl-trusted-ip=120.46.211.33,123.60.215.71,43.136.177.175 --ssl-size=2048 --ssl-date=3650

Contents of key.sh:

#!/bin/bash -e

help ()
{
    echo ' ================================================================ '
    echo ' --ssl-domain: primary domain for the SSL certificate; defaults to www.rancher.local if omitted, and can be ignored when the service is accessed by IP;'
    echo ' --ssl-trusted-ip: SSL certificates normally trust only requests made by domain name; to reach the server by IP, add the IPs as extensions, separated by commas;'
    echo ' --ssl-trusted-domain: additional domains (SSL_TRUSTED_DOMAIN) for access through several domain names, separated by commas;'
    echo ' --ssl-size: SSL key size in bits, default 2048;'
    echo ' --ssl-date: SSL certificate validity in days, default 3650 (10 years);'
    echo ' --ca-date: CA certificate validity in days, default 3650 (10 years);'
    echo ' --ssl-cn: two-letter country code, default CN;'
    echo ' Usage example:'
    echo ' ./key.sh --ssl-domain=www.test.com --ssl-trusted-domain=www.test2.com \ '
    echo ' --ssl-trusted-ip=1.1.1.1,2.2.2.2,3.3.3.3 --ssl-size=2048 --ssl-date=3650'
    echo ' ================================================================'
}

case "$1" in
    -h|--help) help; exit;;
esac

if [[ $1 == '' ]];then
    help;
    exit;
fi

CMDOPTS="$*"
for OPTS in $CMDOPTS;
do
    key=$(echo ${OPTS} | awk -F"=" '{print $1}' )
    value=$(echo ${OPTS} | awk -F"=" '{print $2}' )
    case "$key" in
        --ssl-domain) SSL_DOMAIN=$value ;;
        --ssl-trusted-ip) SSL_TRUSTED_IP=$value ;;
        --ssl-trusted-domain) SSL_TRUSTED_DOMAIN=$value ;;
        --ssl-size) SSL_SIZE=$value ;;
        --ssl-date) SSL_DATE=$value ;;
        --ca-date) CA_DATE=$value ;;
        --ssl-cn) CN=$value ;;
    esac
done

# CA settings
CA_DATE=${CA_DATE:-3650}
CA_KEY=${CA_KEY:-cakey.pem}
CA_CERT=${CA_CERT:-cacerts.pem}
CA_DOMAIN=cattle-ca

# SSL settings
SSL_CONFIG=${SSL_CONFIG:-$PWD/openssl.cnf}
SSL_DOMAIN=${SSL_DOMAIN:-'www.rancher.local'}
SSL_DATE=${SSL_DATE:-3650}
SSL_SIZE=${SSL_SIZE:-2048}

## Two-letter country code, default CN
CN=${CN:-CN}

SSL_KEY=$SSL_DOMAIN.key
SSL_CSR=$SSL_DOMAIN.csr
SSL_CERT=$SSL_DOMAIN.crt

echo -e "\033[32m ---------------------------- \033[0m"
echo -e "\033[32m | Generating SSL Cert | \033[0m"
echo -e "\033[32m ---------------------------- \033[0m"

if [[ -e ./${CA_KEY} ]]; then
    echo -e "\033[32m ====> 1. Existing CA private key found; backing up ${CA_KEY} as ${CA_KEY}-bak, then regenerating \033[0m"
    mv ${CA_KEY} "${CA_KEY}"-bak
    openssl genrsa -out ${CA_KEY} ${SSL_SIZE}
else
    echo -e "\033[32m ====> 1. Generating new CA private key ${CA_KEY} \033[0m"
    openssl genrsa -out ${CA_KEY} ${SSL_SIZE}
fi

if [[ -e ./${CA_CERT} ]]; then
    echo -e "\033[32m ====> 2. Existing CA certificate found; backing up ${CA_CERT} as ${CA_CERT}-bak, then regenerating \033[0m"
    mv ${CA_CERT} "${CA_CERT}"-bak
    openssl req -x509 -sha256 -new -nodes -key ${CA_KEY} -days ${CA_DATE} -out ${CA_CERT} -subj "/C=${CN}/CN=${CA_DOMAIN}"
else
    echo -e "\033[32m ====> 2. Generating new CA certificate ${CA_CERT} \033[0m"
    openssl req -x509 -sha256 -new -nodes -key ${CA_KEY} -days ${CA_DATE} -out ${CA_CERT} -subj "/C=${CN}/CN=${CA_DOMAIN}"
fi

echo -e "\033[32m ====> 3. Generating OpenSSL config file ${SSL_CONFIG} \033[0m"
cat > ${SSL_CONFIG} <<EOM
[req]
req_extensions = v3_req
distinguished_name = req_distinguished_name
[req_distinguished_name]
[ v3_req ]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation, digitalSignature, keyEncipherment
extendedKeyUsage = clientAuth, serverAuth
EOM

if [[ -n ${SSL_TRUSTED_IP} || -n ${SSL_TRUSTED_DOMAIN} ]]; then
    cat >> ${SSL_CONFIG} <<EOM
subjectAltName = @alt_names
[alt_names]
EOM
    IFS=","
    dns=(${SSL_TRUSTED_DOMAIN})
    dns+=(${SSL_DOMAIN})
    for i in "${!dns[@]}"; do
        echo DNS.$((i+1)) = ${dns[$i]} >> ${SSL_CONFIG}
    done
    if [[ -n ${SSL_TRUSTED_IP} ]]; then
        ip=(${SSL_TRUSTED_IP})
        for i in "${!ip[@]}"; do
            echo IP.$((i+1)) = ${ip[$i]} >> ${SSL_CONFIG}
        done
    fi
fi

echo -e "\033[32m ====> 4. Generating service SSL key ${SSL_KEY} \033[0m"
openssl genrsa -out ${SSL_KEY} ${SSL_SIZE}

echo -e "\033[32m ====> 5. Generating service SSL CSR ${SSL_CSR} \033[0m"
openssl req -sha256 -new -key ${SSL_KEY} -out ${SSL_CSR} -subj "/C=${CN}/CN=${SSL_DOMAIN}" -config ${SSL_CONFIG}

echo -e "\033[32m ====> 6. Generating service SSL certificate ${SSL_CERT} \033[0m"
openssl x509 -sha256 -req -in ${SSL_CSR} -CA ${CA_CERT} \
    -CAkey ${CA_KEY} -CAcreateserial -out ${SSL_CERT} \
    -days ${SSL_DATE} -extensions v3_req \
    -extfile ${SSL_CONFIG}

echo -e "\033[32m ====> 7. Certificate generation complete \033[0m"
echo
echo -e "\033[32m ====> 8. Printing the results in YAML format \033[0m"
echo "----------------------------------------------------------"
echo "ca_key: |"
cat $CA_KEY | sed 's/^/ /'
echo
echo "ca_cert: |"
cat $CA_CERT | sed 's/^/ /'
echo
echo "ssl_key: |"
cat $SSL_KEY | sed 's/^/ /'
echo
echo "ssl_csr: |"
cat $SSL_CSR | sed 's/^/ /'
echo
echo "ssl_cert: |"
cat $SSL_CERT | sed 's/^/ /'
echo

echo -e "\033[32m ====> 9. Appending the CA certificate to the cert file \033[0m"
cat ${CA_CERT} >> ${SSL_CERT}
echo "ssl_cert: |"
cat $SSL_CERT | sed 's/^/ /'
echo

echo -e "\033[32m ====> 10. Copying the service certificate to tls.key and tls.crt \033[0m"
echo "cp ${SSL_DOMAIN}.key tls.key"
cp ${SSL_DOMAIN}.key tls.key
echo "cp ${SSL_DOMAIN}.crt tls.crt"
cp ${SSL_DOMAIN}.crt tls.crt
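Step 3 of key.sh is the part that decides which names and addresses the certificate will be valid for: it splits the comma-separated domain and IP lists and writes them as numbered DNS.n and IP.n entries under [alt_names]. The sketch below isolates that logic so it can be tried without generating any keys. The function name alt_names_section is hypothetical; unlike the script, it runs in a subshell so the changed IFS does not leak into the caller.

```bash
#!/bin/bash
# Hypothetical helper: print the [alt_names] section for an OpenSSL config.
# $1 = comma-separated DNS names, $2 = comma-separated IP addresses
# The body is wrapped in ( ) so IFS and noglob changes stay inside the function.
alt_names_section() (
    IFS=','    # split the arguments on commas only
    set -f     # disable globbing so a name like *.example.com is kept literally
    echo "[alt_names]"
    n=0
    for name in $1; do
        n=$((n + 1))
        echo "DNS.$n = $name"
    done
    n=0
    for addr in $2; do
        n=$((n + 1))
        echo "IP.$n = $addr"
    done
)
```

Running alt_names_section with the values passed to key.sh shows exactly which entries will end up in openssl.cnf, which makes a mistyped domain or a missing node IP easy to spot before the certificate is issued.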

9. Deploy Rancher

Create the namespace for Rancher:

kubectl --kubeconfig=$KUBECONFIG create namespace cattle-system

Create the certificate secret used by --set privateCA=true when rendering the Helm chart:

kubectl -n cattle-system create secret generic tls-ca --from-file=cacerts.pem

Create the certificate secret used by --set additionalTrustedCAs=true:

cp cacerts.pem ca-additional.pem
kubectl -n cattle-system create secret generic tls-ca-additional --from-file=ca-additional.pem

Create the certificate and key secret used by --set ingress.tls.source=secret:

kubectl -n cattle-system create secret tls tls-rancher-ingress --cert=tls.crt --key=tls.key
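All three secrets are built from files that key.sh writes into its working directory (cacerts.pem, tls.crt, tls.key), so running the kubectl commands from a different directory fails partway through. A small guard, sketched below, could be run first; the function name require_files is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: succeed only if every listed file exists and is non-empty.
# Missing files are reported on stderr; the return code is 1 if any is missing.
require_files() {
    local missing=0
    local f
    for f in "$@"; do
        if [ ! -s "$f" ]; then
            echo "missing or empty: $f" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

One way to use it is require_files cacerts.pem tls.crt tls.key && followed by the secret-creation commands, so that nothing is created unless all inputs are present.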

Download the chart to the local directory with Helm:

helm fetch rancher-stable/rancher --version 2.6.2

This adds rancher-2.6.2.tgz to the current directory.

Render the templates with the following command:

helm template rancher ./rancher-2.6.2.tgz \
    --namespace cattle-system --output-dir . \
    --set privateCA=true \
    --set additionalTrustedCAs=true \
    --set ingress.tls.source=secret \
    --set hostname=test.rancker.com \
    --set useBundledSystemChart=true

Install Rancher with kubectl:

kubectl delete -A ValidatingWebhookConfiguration ingress-nginx-admission
kubectl -n cattle-system apply -R -f ./rancher/templates/

Check the installation progress:

kubectl -n cattle-system get all -o wide

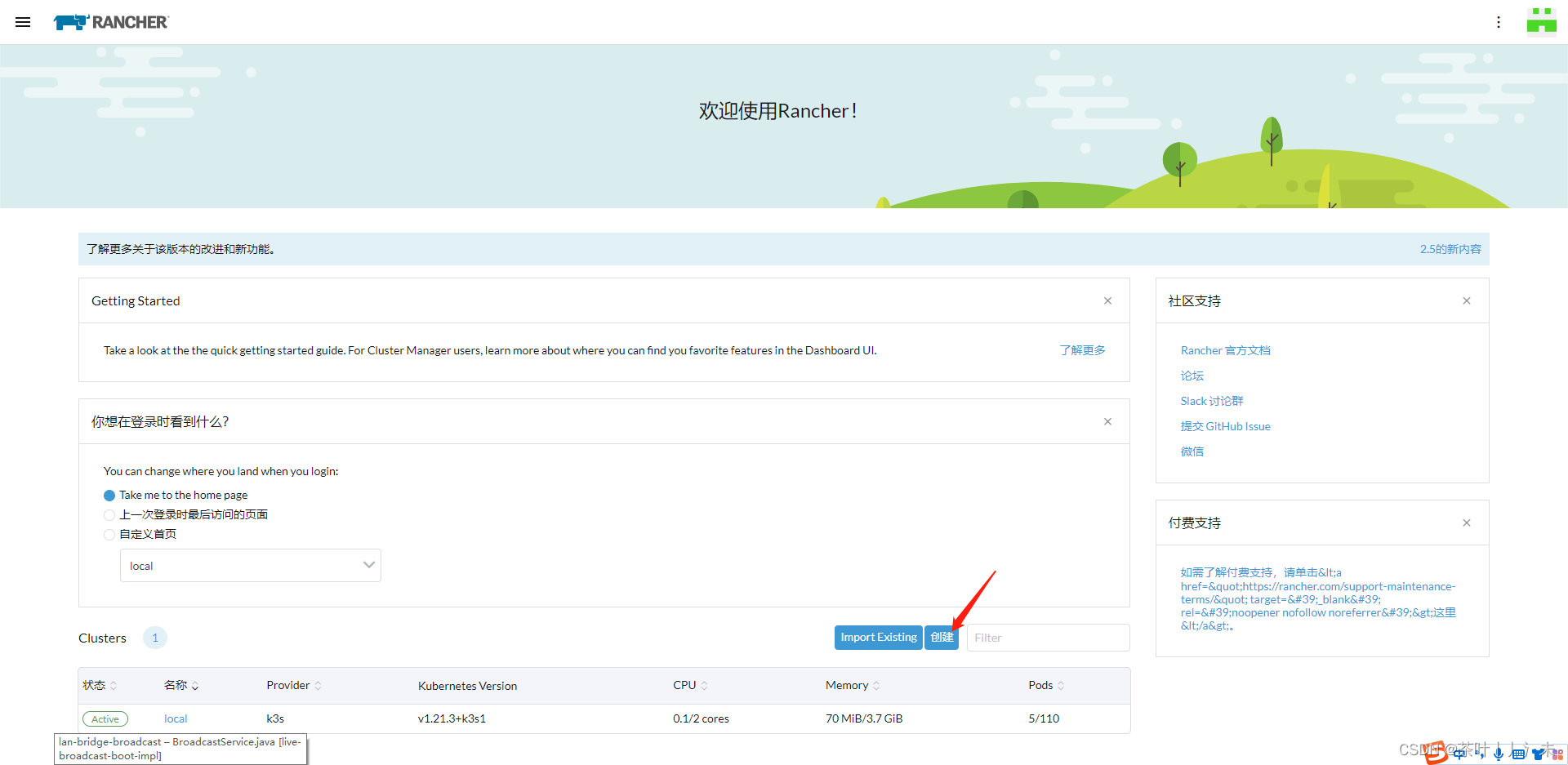

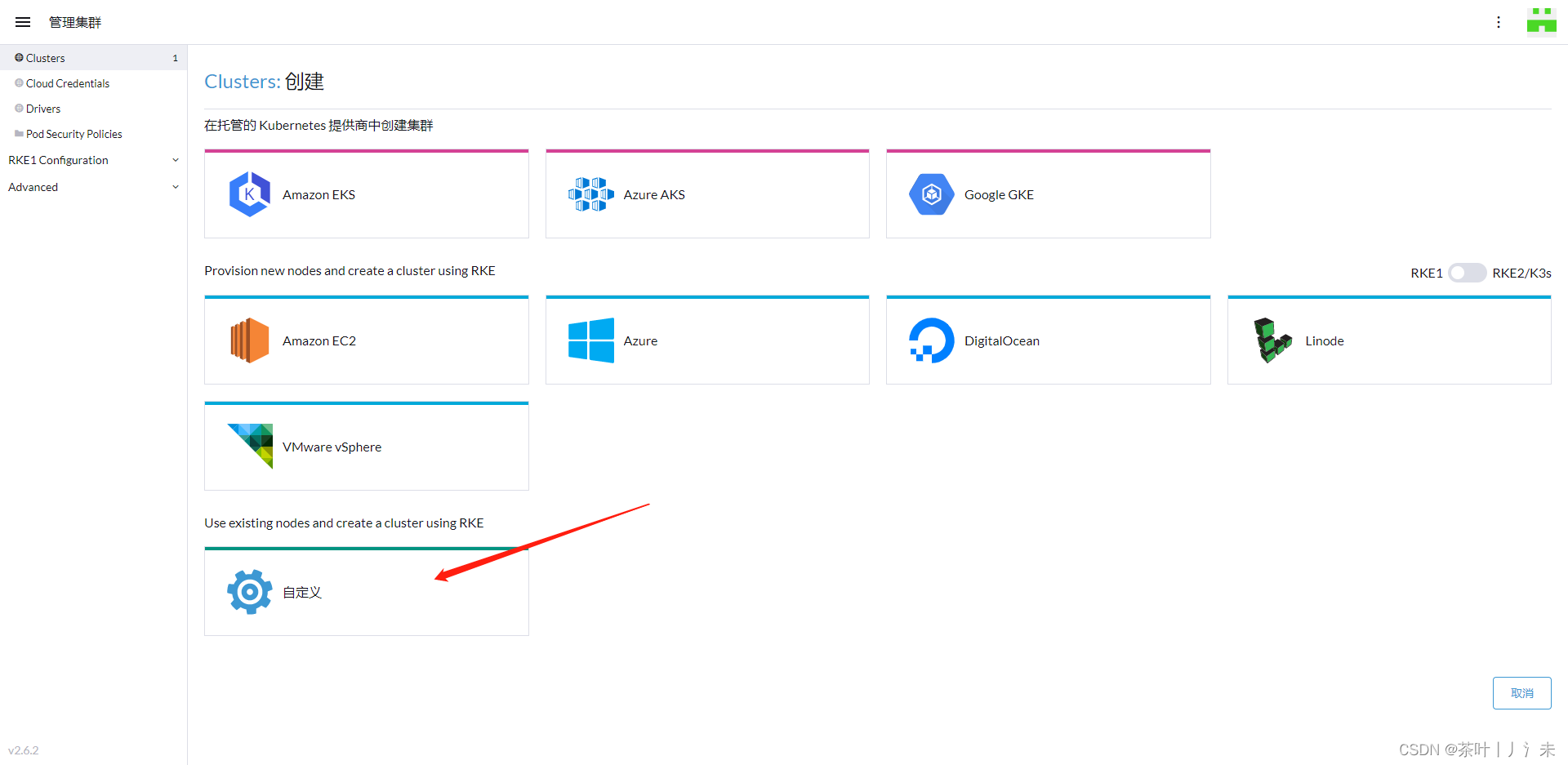

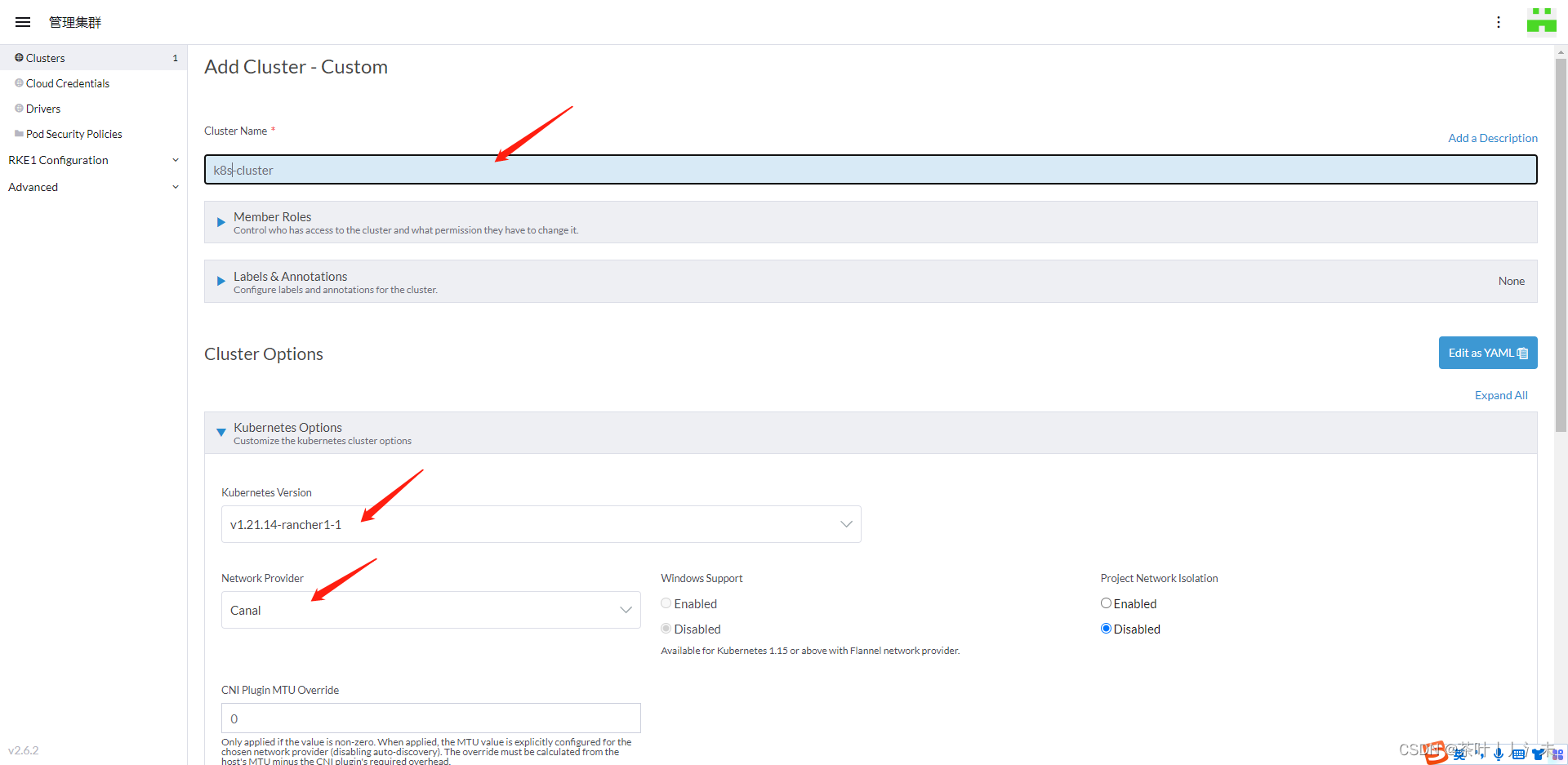

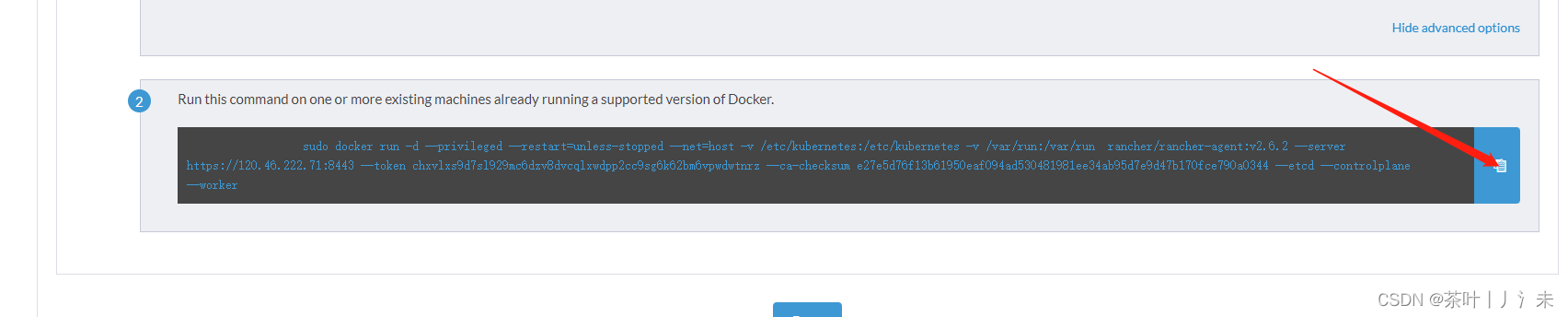

Kubernetes integration

The single-node Rancher instance is used as the example here.

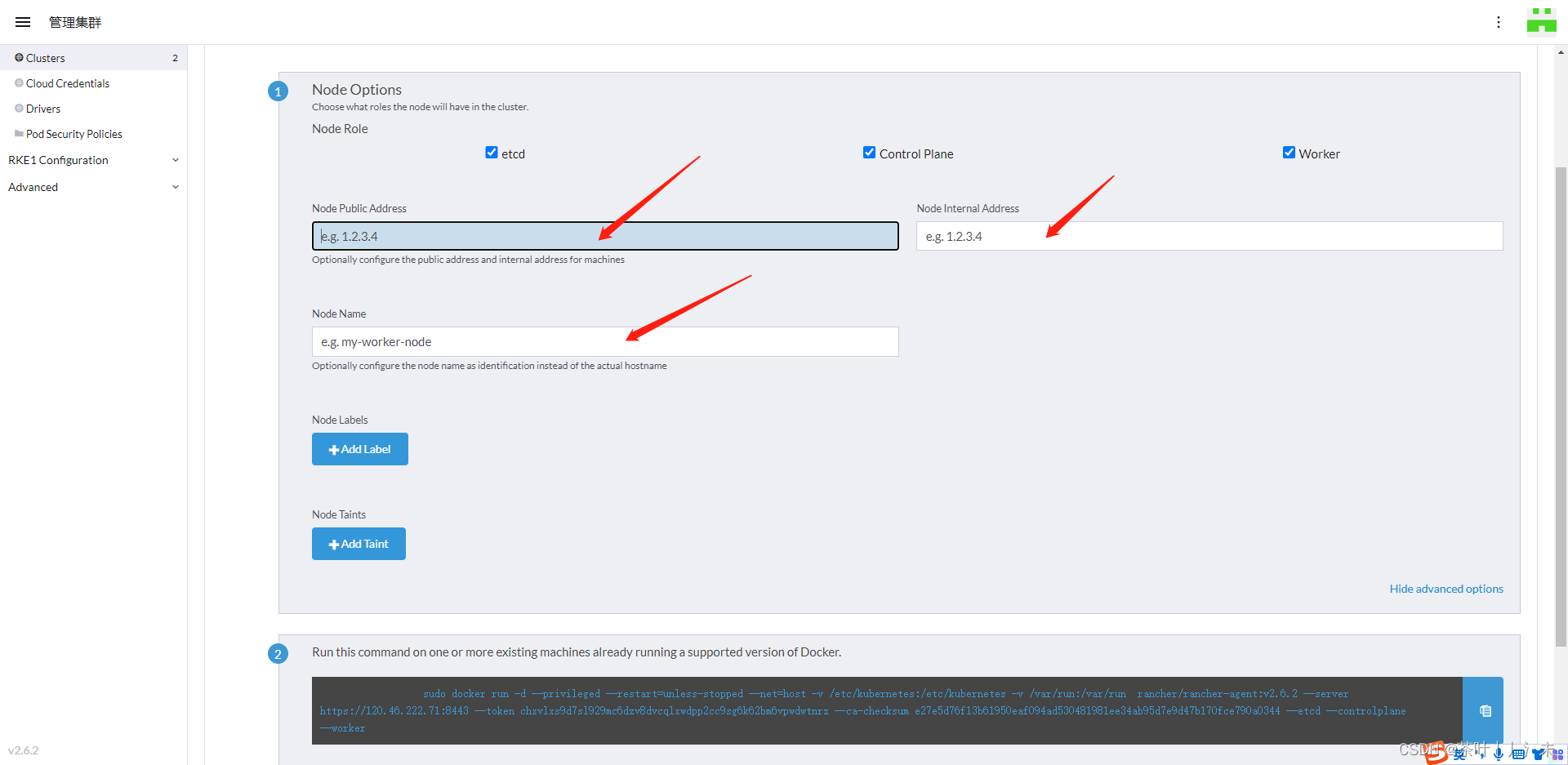

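Enter the IP address of your server.

After ticking the node roles, copy the generated command and run it on the corresponding server.

Wait ten minutes or so for the cluster to finish provisioning.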