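Contents

1. Introduction to multi-node installation

2. Concepts

3. Installation

3.1 Prepare the hosts

System requirements

3.2 Download KubeKey

3.3 Edit the configuration file

Configuration file keywords

3.3 Create the cluster using the configuration file

3.4 Verify the installation

3.5 Enable kubectl autocompletion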

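1. Introduction to multi-node installation

In production, a single-node cluster has limited resources and too little compute capacity to meet most needs, so it is not recommended for processing data at scale.

A single-node cluster also has only one node, so it offers no high availability. By contrast, a multi-node architecture is the most common first choice for deploying and distributing applications.

This section gives an overview of multi-node installation, covering the concepts, KubeKey, and the installation steps. For high-availability installation, see High Availability Configurations, Installing on Public Cloud, and Installing in On-premises Environments.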

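2. Concepts

A multi-node cluster consists of at least one control plane node and one worker node. Any node can act as the task machine that runs the installation, and nodes can be added before or after installation as needed (for example, to achieve high availability).

Control plane node: typically hosts the control plane, which controls and manages the whole system.

Worker node: runs the actual applications deployed on it.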

3. Installation

3.1 Prepare the hosts

System requirements

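| System | Minimum requirements (per node) |
|---|---|
| Ubuntu 16.04, 18.04, 20.04, 22.04 | CPU: 2 cores, memory: 4 G, disk: 40 G |
| Debian Buster, Stretch | CPU: 2 cores, memory: 4 G, disk: 40 G |
| CentOS 7.x | CPU: 2 cores, memory: 4 G, disk: 40 G |
| Red Hat Enterprise Linux 7 | CPU: 2 cores, memory: 4 G, disk: 40 G |
| SUSE Linux Enterprise Server 15 / openSUSE Leap 15.2 | CPU: 2 cores, memory: 4 G, disk: 40 G |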
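The minimums in the table can be checked on each node before installing. The following is a rough sketch, assuming a Linux host with GNU coreutils (`nproc`, `df --output`) and `/proc/meminfo`. The function names `meets_minimum` and `check_host` are hypothetical helpers, not part of KubeKey. Values are rounded to the nearest gigabyte because tools usually report slightly less than the nominal size; a nominal 40 G disk can still round down to 39 because of filesystem overhead, so treat a disk warning as a prompt to look closer rather than a hard failure.

```shell
#!/usr/bin/env bash
# Rough preflight check against the minimums listed above
# (2 CPU cores, 4 G memory, 40 G disk). Helper names are illustrative only.

# meets_minimum CPU_CORES MEM_GB DISK_GB
# Returns 0 when all three values reach the documented minimums.
meets_minimum() {
  local cpu="$1" mem_gb="$2" disk_gb="$3"
  [ "$cpu" -ge 2 ] && [ "$mem_gb" -ge 4 ] && [ "$disk_gb" -ge 40 ]
}

# check_host: measure the local machine and report the verdict.
check_host() {
  local cpu mem_kb disk_kb mem_gb disk_gb
  cpu="$(nproc)"
  mem_kb="$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)"
  # Total size of the root filesystem in 1K blocks (GNU df).
  disk_kb="$(df -k --output=size / | tail -n 1 | tr -dc '0-9')"
  # Round to the nearest GiB.
  mem_gb=$(( (mem_kb + 524288) / 1048576 ))
  disk_gb=$(( (disk_kb + 524288) / 1048576 ))
  echo "cpu=${cpu} mem=${mem_gb}G disk=${disk_gb}G"
  if meets_minimum "$cpu" "$mem_gb" "$disk_gb"; then
    echo "OK: host meets the minimum requirements"
  else
    echo "WARNING: host is below the minimum requirements" >&2
    return 1
  fi
}
```

Source the file and run `check_host` on every node that will join the cluster.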

The example in this article uses three servers provisioned on Alibaba Cloud.

| Host IP | Hostname | Role |
|---|---|---|
| ******* | master | control plane, etcd |
| ******* | node1 | worker |
| ******* | node2 | worker |

3.2 Download KubeKey

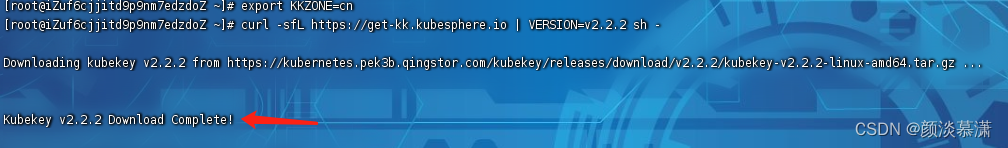

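If GitHub or Googleapis cannot be reached reliably, set the following before downloading:

    export KKZONE=cn

Then download KubeKey (if access is normal, run this command directly):

    curl -sfL https://get-kk.kubesphere.io | VERSION=v2.2.2 sh -

After a successful download, the kk executable is in the current directory.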
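The two cases above can be folded into one helper. This is only a sketch: the reachability probe against https://github.com is an assumption of this example rather than anything KubeKey does, and `download_kk` is a hypothetical name.

```shell
#!/usr/bin/env bash
# download_kk [VERSION]
# Downloads KubeKey, exporting KKZONE=cn only when GitHub cannot be reached.
# The probe below is an assumption of this sketch, not a KubeKey feature.
download_kk() {
  local version="${1:-v2.2.2}"
  if ! curl -sfI --max-time 5 https://github.com >/dev/null; then
    # GitHub is unreachable from this host: use the cn download zone.
    export KKZONE=cn
  fi
  curl -sfL https://get-kk.kubesphere.io | VERSION="$version" sh -
}
```

For example, `download_kk v2.2.2 && ./kk version` downloads the binary and prints its version as a quick sanity check.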

3.3 Edit the configuration file
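KubeKey can generate a template for this file with `kk create config`. A minimal sketch, assuming the `kk` binary from section 3.2 sits in the current directory. The wrapper name `generate_config` and the `KK_BIN` variable are hypothetical conveniences, and the version arguments are examples that must be ones your KubeKey release supports.

```shell
#!/usr/bin/env bash
# generate_config K8S_VERSION KUBESPHERE_VERSION [FILE]
# Writes a template configuration file that can then be edited by hand.
# KK_BIN is a hypothetical override; it defaults to ./kk.
generate_config() {
  local k8s_version="$1" ks_version="$2" file="${3:-config-sample.yaml}"
  "${KK_BIN:-./kk}" create config \
    --with-kubernetes "$k8s_version" \
    --with-kubesphere "$ks_version" \
    -f "$file"
}
```

For example, `generate_config v1.21.5 v3.1.0` writes config-sample.yaml, after which the hosts and roleGroups entries are filled in for your own machines.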

An example config-sample.yaml is shown below:

    apiVersion: kubekey.kubesphere.io/v1alpha2
    kind: Cluster
    metadata:
      name: sample
    spec:
      hosts:
      - {name: master, address: 47.102.134.179, internalAddress: 172.19.139.251, user: root, password: "Root1234"}
      - {name: node1, address: 47.102.117.129, internalAddress: 172.19.139.250, user: root, password: "Root1234"}
      - {name: node2, address: 139.196.224.201, internalAddress: 172.19.139.249, user: root, password: "Root1234"}
      roleGroups:
        etcd:
        - master # All the nodes in your cluster that serve as the etcd nodes.
        master:
        - master
        worker:
        - node1
        - node2
      controlPlaneEndpoint:
        internalLoadbalancer: haproxy #Internal loadbalancer for apiservers. [Default: ""]
        domain: lb.kubesphere.local
        address: "" # The IP address of your load balancer.
        port: 6443
      system:
        ntpServers: # The ntp servers of chrony.
          - time1.cloud.tencent.com
          - ntp.aliyun.com
          - node1 # Set the node name in `hosts` as ntp server if no public ntp servers access.
        timezone: "Asia/Shanghai"
      kubernetes:
        version: v1.21.5
        imageRepo: kubesphere
        containerManager: docker # Container Runtime, support: containerd, cri-o, isula. [Default: docker]
        clusterName: cluster.local
        autoRenewCerts: true # Whether to install a script which can automatically renew the Kubernetes control plane certificates. [Default: false]
        masqueradeAll: false # masqueradeAll tells kube-proxy to SNAT everything if using the pure iptables proxy mode. [Default: false].
        maxPods: 110 # maxPods is the number of Pods that can run on this Kubelet. [Default: 110]
        nodeCidrMaskSize: 24 # The internal network node size allocation. This is the size allocated to each node on your network. [Default: 24]
        proxyMode: ipvs # Specify which proxy mode to use. [Default: ipvs]
        featureGates: # enable featureGates, [Default: {"ExpandCSIVolumes":true,"RotateKubeletServerCertificate": true,"CSIStorageCapacity":true, "TTLAfterFinished":true}]
          CSIStorageCapacity: true
          ExpandCSIVolumes: true
          RotateKubeletServerCertificate: true
          TTLAfterFinished: true
        ## support kata and NFD
        # kata:
        #   enabled: true
        # nodeFeatureDiscovery
        #   enabled: true
      etcd:
        type: kubekey # Specify the type of etcd used by the cluster. When the cluster type is k3s, setting this parameter to kubeadm is invalid. [kubekey | kubeadm | external] [Default: kubekey]
        ## The following parameters need to be added only when the type is set to external.
        ## caFile, certFile and keyFile need not be set, if TLS authentication is not enabled for the existing etcd.
        # external:
        #   endpoints:
        #     - https://192.168.6.6:2379
        #   caFile: /pki/etcd/ca.crt
        #   certFile: /pki/etcd/etcd.crt
        #   keyFile: /pki/etcd/etcd.key
      network:
        plugin: calico
        calico:
          ipipMode: Always # IPIP Mode to use for the IPv4 POOL created at start up. If set to a value other than Never, vxlanMode should be set to "Never". [Always | CrossSubnet | Never] [Default: Always]
          vxlanMode: Never # VXLAN Mode to use for the IPv4 POOL created at start up. If set to a value other than Never, ipipMode should be set to "Never". [Always | CrossSubnet | Never] [Default: Never]
          vethMTU: 0 # The maximum transmission unit (MTU) setting determines the largest packet size that can be transmitted through your network. By default, MTU is auto-detected. [Default: 0]
        kubePodsCIDR: 10.233.64.0/18
        kubeServiceCIDR: 10.233.0.0/18
      registry:
        registryMirrors: []
        insecureRegistries: []
        privateRegistry: ""
        namespaceOverride: ""
        auths: # if docker add by `docker login`, if containerd append to `/etc/containerd/config.toml`
          "dockerhub.kubekey.local":
            username: "xxx"
            password: "***"
            skipTLSVerify: false # Allow contacting registries over HTTPS with failed TLS verification.
            plainHTTP: false # Allow contacting registries over HTTP.
            certsPath: "/etc/docker/certs.d/dockerhub.kubekey.local" # Use certificates at path (*.crt, *.cert, *.key) to connect to the registry.
      addons: [] # You can install cloud-native addons (Chart or YAML) by using this field.

    ---
    apiVersion: installer.kubesphere.io/v1alpha1
    kind: ClusterConfiguration
    metadata:
      name: ks-installer
      namespace: kubesphere-system
      labels:
        version: v3.1.0
    spec:
      persistence:
        storageClass: "" # If there is no default StorageClass in your cluster, you need to specify an existing StorageClass here.
      authentication:
        jwtSecret: "" # Keep the jwtSecret consistent with the Host Cluster. Retrieve the jwtSecret by executing "kubectl -n kubesphere-system get cm kubesphere-config -o yaml | grep -v "apiVersion" | grep jwtSecret" on the Host Cluster.
      local_registry: "" # Add your private registry address if it is needed.
      etcd:
        monitoring: false # Enable or disable etcd monitoring dashboard installation. You have to create a Secret for etcd before you enable it.
        endpointIps: localhost # etcd cluster EndpointIps. It can be a bunch of IPs here.
        port: 2379 # etcd port.
        tlsEnable: true
      common:
        redis:
          enabled: false
        openldap:
          enabled: false
        minioVolumeSize: 20Gi # Minio PVC size.
        openldapVolumeSize: 2Gi # openldap PVC size.
        redisVolumSize: 2Gi # Redis PVC size.
        monitoring:
          endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090 # Prometheus endpoint to get metrics data.
        es: # Storage backend for logging, events and auditing.
          # elasticsearchMasterReplicas: 1 # The total number of master nodes. Even numbers are not allowed.
          # elasticsearchDataReplicas: 1 # The total number of data nodes.
          elasticsearchMasterVolumeSize: 4Gi # The volume size of Elasticsearch master nodes.
          elasticsearchDataVolumeSize: 20Gi # The volume size of Elasticsearch data nodes.
          logMaxAge: 7 # Log retention time in built-in Elasticsearch. It is 7 days by default.
          elkPrefix: logstash # The string making up index names. The index name will be formatted as ks-<elk_prefix>-log.
          basicAuth:
            enabled: false
            username: ""
            password: ""
          externalElasticsearchUrl: ""
          externalElasticsearchPort: ""
      console:
        enableMultiLogin: true # Enable or disable simultaneous logins. It allows different users to log in with the same account at the same time.
        port: 30880
      alerting: # (CPU: 0.1 Core, Memory: 100 MiB) It enables users to customize alerting policies to send messages to receivers in time with different time intervals and alerting levels to choose from.
        enabled: false # Enable or disable the KubeSphere Alerting System.
        # thanosruler:
        #   replicas: 1
        #   resources: {}
      auditing: # Provide a security-relevant chronological set of records,recording the sequence of activities happening on the platform, initiated by different tenants.
        enabled: false # Enable or disable the KubeSphere Auditing Log System.
      devops: # (CPU: 0.47 Core, Memory: 8.6 G) Provide an out-of-the-box CI/CD system based on Jenkins, and automated workflow tools including Source-to-Image & Binary-to-Image.
        enabled: false # Enable or disable the KubeSphere DevOps System.
        jenkinsMemoryLim: 2Gi # Jenkins memory limit.
        jenkinsMemoryReq: 1500Mi # Jenkins memory request.
        jenkinsVolumeSize: 8Gi # Jenkins volume size.
        jenkinsJavaOpts_Xms: 512m # The following three fields are JVM parameters.
        jenkinsJavaOpts_Xmx: 512m
        jenkinsJavaOpts_MaxRAM: 2g
      events: # Provide a graphical web console for Kubernetes Events exporting, filtering and alerting in multi-tenant Kubernetes clusters.
        enabled: false # Enable or disable the KubeSphere Events System.
        ruler:
          enabled: true
          replicas: 2
      logging: # (CPU: 57 m, Memory: 2.76 G) Flexible logging functions are provided for log query, collection and management in a unified console. Additional log collectors can be added, such as Elasticsearch, Kafka and Fluentd.
        enabled: false # Enable or disable the KubeSphere Logging System.
        logsidecar:
          enabled: true
          replicas: 2
      metrics_server: # (CPU: 56 m, Memory: 44.35 MiB) It enables HPA (Horizontal Pod Autoscaler).
        enabled: false # Enable or disable metrics-server.
      monitoring:
        storageClass: "" # If there is an independent StorageClass you need for Prometheus, you can specify it here. The default StorageClass is used by default.
        # prometheusReplicas: 1 # Prometheus replicas are responsible for monitoring different segments of data source and providing high availability.
        prometheusMemoryRequest: 400Mi # Prometheus request memory.
        prometheusVolumeSize: 20Gi # Prometheus PVC size.
        # alertmanagerReplicas: 1 # AlertManager Replicas.
      multicluster:
        clusterRole: none # host | member | none # You can install a solo cluster, or specify it as the Host or Member Cluster.
      network:
        networkpolicy: # Network policies allow network isolation within the same cluster, which means firewalls can be set up between certain instances (Pods).
          # Make sure that the CNI network plugin used by the cluster supports NetworkPolicy. There are a number of CNI network plugins that support NetworkPolicy, including Calico, Cilium, Kube-router, Romana and Weave Net.
          enabled: false # Enable or disable network policies.
        ippool: # Use Pod IP Pools to manage the Pod network address space. Pods to be created can be assigned IP addresses from a Pod IP Pool.
          type: none # Specify "calico" for this field if Calico is used as your CNI plugin. "none" means that Pod IP Pools are disabled.
        topology: # Use Service Topology to view Service-to-Service communication based on Weave Scope.
          type: none # Specify "weave-scope" for this field to enable Service Topology. "none" means that Service Topology is disabled.
      openpitrix: # An App Store that is accessible to all platform tenants. You can use it to manage apps across their entire lifecycle.
        store:
          enabled: false # Enable or disable the KubeSphere App Store.
      servicemesh: # (0.3 Core, 300 MiB) Provide fine-grained traffic management, observability and tracing, and visualized traffic topology.
        enabled: false # Base component (pilot). Enable or disable KubeSphere Service Mesh (Istio-based).
      kubeedge: # Add edge nodes to your cluster and deploy workloads on edge nodes.
        enabled: false # Enable or disable KubeEdge.
        cloudCore:
          nodeSelector: {"node-role.kubernetes.io/worker": ""}
          tolerations: []
          cloudhubPort: "10000"
          cloudhubQuicPort: "10001"
          cloudhubHttpsPort: "10002"
          cloudstreamPort: "10003"
          tunnelPort: "10004"
          cloudHub:
            advertiseAddress: # At least a public IP address or an IP address which can be accessed by edge nodes must be provided.
              - "" # Note that once KubeEdge is enabled, CloudCore will malfunction if the address is not provided.
            nodeLimit: "100"
          service:
            cloudhubNodePort: "30000"
            cloudhubQuicNodePort: "30001"
            cloudhubHttpsNodePort: "30002"
            cloudstreamNodePort: "30003"
            tunnelNodePort: "30004"
        edgeWatcher:
          nodeSelector: {"node-role.kubernetes.io/worker": ""}
          tolerations: []
          edgeWatcherAgent:
            nodeSelector: {"node-role.kubernetes.io/worker": ""}
            tolerations: []

Configuration file keywords

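name: the hostname of the instance.

address: the IP address that the task machine and the other instances use to reach each other over SSH. Depending on your environment, this can be a public or a private IP address. For example, some cloud platforms give every instance a public IP address for SSH access; in that case, enter that public IP address in this field.

internalAddress: the private IP address of the instance.

roleGroups

etcd: names of the etcd nodes.

control-plane: names of the control plane nodes (written as master in the sample above).

worker: names of the worker nodes.

controlPlaneEndpoint (high-availability installation only)

For a high-availability cluster, provide the external load balancer details in the controlPlaneEndpoint section. An external load balancer needs to be prepared and configured if and only if you install more than one control plane node. Note that address and port in config-sample.yaml should be indented by two spaces, and address should be the address of your load balancer. For details, see High Availability Configurations.

addons

You can customize persistent storage plugins, such as NFS client, Ceph RBD, and GlusterFS, by specifying storage under the addons field of config-sample.yaml. For more information, see Persistent Storage Configurations.

KubeSphere installs OpenEBS by default and provisions LocalPV for development and test environments, which is convenient for new users. This multi-node example uses the default storage class (local volumes). For production, you can use Ceph, GlusterFS, CSI, or a commercial storage product as the persistent storage solution.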

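In addition, you must provide the login information used to connect to each instance. The following example is for reference.

Password login:

    hosts:
    - {name: master, address: 192.168.0.2, internalAddress: 192.168.0.2, port: 8022, user: ubuntu, password: Testing123}

- Before installing KubeSphere, you can use the information provided under hosts (for example, the IP address and password) to test the network connection between the task machine and the other instances over SSH.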
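The SSH connectivity test mentioned above could look like the following sketch. `ssh_check` is a hypothetical helper, and `SSH_BIN` is an override introduced here only so the command line can be inspected without a real connection. With password login, `ssh` prompts for the password interactively.

```shell
#!/usr/bin/env bash
# ssh_check USER ADDRESS [PORT]
# Opens an SSH session from the task machine and runs `hostname` remotely.
# A printed hostname means the address, port, and credentials all work.
ssh_check() {
  local user="$1" address="$2" port="${3:-22}"
  "${SSH_BIN:-ssh}" -o ConnectTimeout=5 -p "$port" "${user}@${address}" hostname
}
```

For the password-login host entry shown above, the call would be `ssh_check ubuntu 192.168.0.2 8022`. Repeat it for every entry under hosts.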

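3.3 Create the cluster using the configuration file

Run the following command:

    ./kk create cluster -f config-sample.yaml

The whole installation may take 10 to 20 minutes, depending on your machines and network environment.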

3.4 Verify the installation

When the installation finishes, you will see output like the following:

    #####################################################
    ###              Welcome to KubeSphere!           ###
    #####################################################

    Console: http://192.168.0.2:30880
    Account: admin
    Password: P@88w0rd

    NOTES:
      1. After you log into the console, please check the
         monitoring status of service components in
         the "Cluster Management". If any service is not
         ready, please wait patiently until all components
         are up and running.
      2. Please change the default password after login.

    #####################################################
    https://kubesphere.io             20xx-xx-xx xx:xx:xx
    #####################################################

You can access the KubeSphere web console at <NodeIP>:30880 with the default account and password (admin/P@88w0rd).

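Tip:

To access the console, you may need to configure port forwarding rules depending on your environment. Also make sure that port 30880 is open in your security group.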
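Both checks in this section, that the nodes joined and that port 30880 is reachable, can be scripted. The following is a sketch under stated assumptions: `kubectl` is configured on the machine where it runs, and `console_url`, `verify_install`, `KUBECTL_BIN`, and `CURL_BIN` are hypothetical names introduced for this example.

```shell
#!/usr/bin/env bash
# console_url NODE_IP [PORT]: print the console URL for a node.
console_url() {
  echo "http://${1}:${2:-30880}"
}

# verify_install NODE_IP
# Lists the cluster nodes, then probes the console port and prints the
# HTTP status code returned by the console.
verify_install() {
  local node_ip="$1"
  "${KUBECTL_BIN:-kubectl}" get nodes -o wide || return 1
  "${CURL_BIN:-curl}" -s -o /dev/null --max-time 5 \
    -w '%{http_code}\n' "$(console_url "$node_ip")"
}
```

Running `verify_install 192.168.0.2` should list all three nodes and then print a status code. A code of 000 or a non-zero exit status suggests the port is blocked, for instance by a security group rule.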

3.5 Enable kubectl autocompletion

KubeKey does not enable kubectl autocompletion. To turn it on, follow the steps below.

Note

Make sure bash-completion is installed and working.

Run the following commands:

    # Install bash-completion
    apt-get install bash-completion

    # Source the completion script in your ~/.bashrc file
    echo 'source <(kubectl completion bash)' >>~/.bashrc

    # Add the completion script to the /etc/bash_completion.d directory
    kubectl completion bash >/etc/bash_completion.d/kubectl