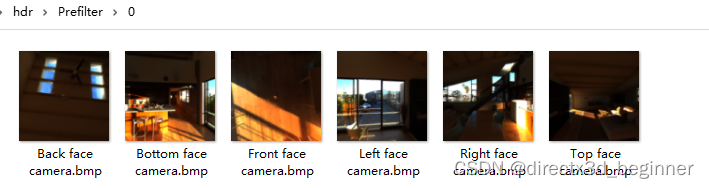

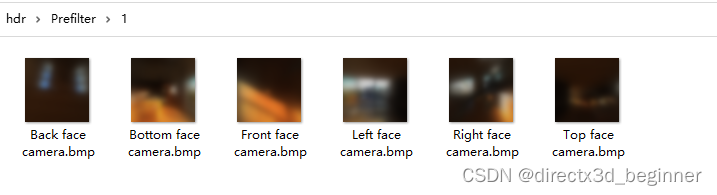

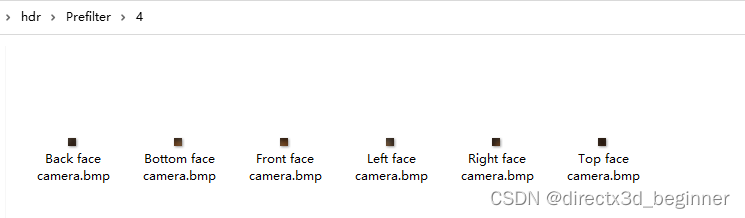

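In the previous step we dumped the HDR environment map at each level to image files, and the prefiltered environment map could already be viewed. Now we dump the prefiltered environment map for each level as well.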
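For reference, the quantity the fragment shader below stores for each direction $\mathbf{n}$ can be written as follows. Here $M$ is `SAMPLE_COUNT` (1024), $\Phi_2$ is the base-2 radical inverse (`VanDerCorpus(k, 2u)`), $\alpha = \text{roughness}^2$ (the shader's `a`), and $\mathbf{h}_k$ is the half vector with polar angle $\theta_k$ and azimuth $\phi_k$ around $\mathbf{n}$:

$$
\boldsymbol{\xi}_k = \left(\frac{k}{M},\ \Phi_2(k)\right),\qquad
\phi_k = 2\pi\,\xi_{k,1},\qquad
\cos\theta_k = \sqrt{\frac{1-\xi_{k,2}}{1+(\alpha^2-1)\,\xi_{k,2}}}
$$

$$
\mathbf{l}_k = 2(\mathbf{v}\cdot\mathbf{h}_k)\,\mathbf{h}_k - \mathbf{v},\quad \mathbf{v}=\mathbf{n},\qquad
C(\mathbf{n}) \approx \frac{\sum_{k=0}^{M-1} L_{\text{env}}(\mathbf{l}_k)\,\max(\mathbf{n}\cdot\mathbf{l}_k,0)}{\sum_{k=0}^{M-1}\max(\mathbf{n}\cdot\mathbf{l}_k,0)}
$$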

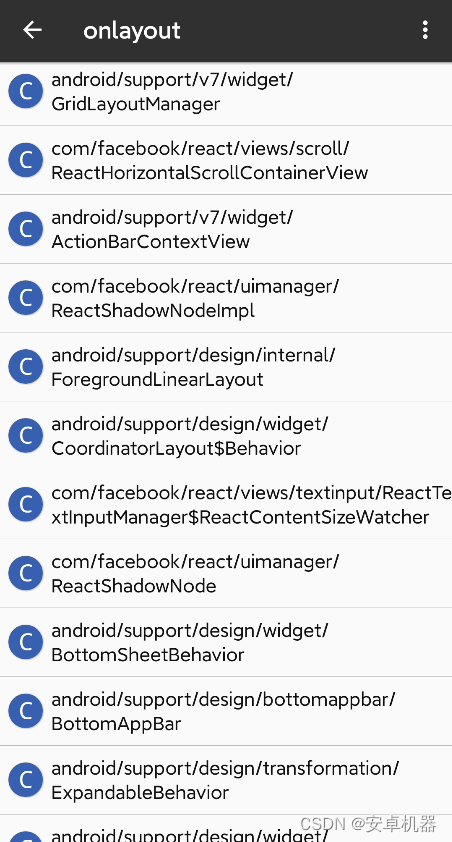

The results of running it are as follows.

The code is as follows:

```cpp
#include <osg/TextureCubeMap>
#include <osg/TexGen>
#include <osg/TexEnvCombine>
#include <osgUtil/ReflectionMapGenerator>
#include <osgDB/ReadFile>
#include <osgViewer/Viewer>
#include <osg/NodeVisitor>
#include <osg/ShapeDrawable>
#include <osgGA/TrackballManipulator>
#include <osgDB/WriteFile>
#include <osg/Geode>
#include <osg/Program>
#include <cmath>   // std::pow
#include <string>  // std::string, std::to_string
```

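```cpp
// Vertex shader: passes the object-space position through as the sampling direction.
// GLSL 1.30 (compatibility) is declared because the fragment shader uses uint and texture().
static const char* vertexShader =
{
    "#version 130\n"
    "in vec3 aPos;\n"
    "out vec3 localPos;\n"
    "void main(void)\n"
    "{\n"
    "    localPos = aPos;\n"
    "    gl_Position = ftransform();\n"
    "}\n"
};
```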

```cpp
// Fragment shader: prefilters the environment map with GGX importance sampling.
// The direction N of each fragment comes from the interpolated localPos.
static const char* psShader =
{
    "#version 130\n"
    "in vec3 localPos;\n"
    "uniform samplerCube environmentMap;\n"
    "uniform float roughness;\n"
    "const float PI = 3.1415926;\n"

    // Base-2 radical inverse computed with mod/divide (no integer bit operations needed).
    "float VanDerCorpus(uint n, uint base)\n"
    "{\n"
    "    float invBase = 1.0 / float(base);\n"
    "    float denom = 1.0;\n"
    "    float result = 0.0;\n"
    "    for (uint i = 0u; i < 32u; ++i)\n"
    "    {\n"
    "        if (n > 0u)\n"
    "        {\n"
    "            denom = mod(float(n), 2.0);\n"
    "            result += denom * invBase;\n"
    "            invBase = invBase / 2.0;\n"
    "            n = uint(float(n) / 2.0);\n"
    "        }\n"
    "    }\n"
    "    return result;\n"
    "}\n"

    // Hammersley point i of N: (i / N, radical inverse of i).
    "vec2 HammersleyNoBitOps(uint i, uint N)\n"
    "{\n"
    "    return vec2(float(i) / float(N), VanDerCorpus(i, 2u));\n"
    "}\n"

    // Equivalent bit-reversal version, usable where integer bit operations are supported:
    //"float RadicalInverse_Vdc(uint bits)\n"
    //"{\n"
    //"    bits = (bits << 16u) | (bits >> 16u);\n"
    //"    bits = ((bits & 0x55555555u) << 1u) | ((bits & 0xAAAAAAAAu) >> 1u);\n"
    //"    bits = ((bits & 0x33333333u) << 2u) | ((bits & 0xCCCCCCCCu) >> 2u);\n"
    //"    bits = ((bits & 0x0F0F0F0Fu) << 4u) | ((bits & 0xF0F0F0F0u) >> 4u);\n"
    //"    bits = ((bits & 0x00FF00FFu) << 8u) | ((bits & 0xFF00FF00u) >> 8u);\n"
    //"    return float(bits) * 2.3283064365386963e-10;\n"
    //"}\n"
    //"vec2 Hammersley(uint i, uint N)\n"
    //"{\n"
    //"    return vec2(float(i) / float(N), RadicalInverse_Vdc(i));\n"
    //"}\n"

    // GGX importance sampling: returns a half vector H distributed around N.
    "vec3 ImportanceSampleGGX(vec2 Xi, vec3 N, float roughness)\n"
    "{\n"
    "    float a = roughness * roughness;\n"
    "    float phi = 2.0 * PI * Xi.x;\n"
    "    float cosTheta = sqrt((1.0 - Xi.y) / (1.0 + (a * a - 1.0) * Xi.y));\n"
    "    float sinTheta = sqrt(1.0 - cosTheta * cosTheta);\n"
    // spherical coordinates -> tangent-space vector
    "    vec3 H;\n"
    "    H.x = cos(phi) * sinTheta;\n"
    "    H.y = sin(phi) * sinTheta;\n"
    "    H.z = cosTheta;\n"
    // tangent space -> the space N is expressed in
    "    vec3 up = abs(N.z) < 0.999 ? vec3(0.0, 0.0, 1.0) : vec3(1.0, 0.0, 0.0);\n"
    "    vec3 tangent = normalize(cross(up, N));\n"
    "    vec3 bitangent = cross(N, tangent);\n"
    "    vec3 sampleVec = tangent * H.x + bitangent * H.y + N * H.z;\n"
    "    return normalize(sampleVec);\n"
    "}\n"

    "void main()\n"
    "{\n"
    "    vec3 N = normalize(localPos);\n"
    // Assume view direction = reflection direction = normal (N = V = R).
    "    vec3 R = N;\n"
    "    vec3 V = R;\n"
    "    const uint SAMPLE_COUNT = 1024u;\n"
    "    float totalWeight = 0.0;\n"
    "    vec3 prefilteredColor = vec3(0.0);\n"
    "    for (uint i = 0u; i < SAMPLE_COUNT; ++i)\n"
    "    {\n"
    "        vec2 Xi = HammersleyNoBitOps(i, SAMPLE_COUNT);\n"
    "        vec3 H = ImportanceSampleGGX(Xi, N, roughness);\n"
    "        vec3 L = normalize(2.0 * dot(V, H) * H - V);\n"
    "        float NdotL = max(dot(N, L), 0.0);\n"
    "        if (NdotL > 0.0)\n"
    "        {\n"
    "            prefilteredColor += texture(environmentMap, L).rgb * NdotL;\n"
    "            totalWeight += NdotL;\n"
    "        }\n"
    "    }\n"
    "    prefilteredColor = prefilteredColor / totalWeight;\n"
    "    gl_FragColor = vec4(prefilteredColor, 1.0);\n"
    "}\n"
};
```
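To sanity-check the sampling math away from the GPU, the sketch below ports the shader's helpers to plain C++. It rests on a few assumptions: a hypothetical `Vec3` struct stands in for GLSL `vec3`, and the environment is a caller-supplied function instead of a cube-map lookup. It lets you check that the no-bit-ops radical inverse matches the commented-out bit-reversal version, and that the NdotL-weighted average behaves as expected: a constant environment stays constant, and roughness 0 returns the sample in direction N.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <functional>

// Hypothetical stand-in for GLSL vec3 (not an OSG type).
struct Vec3 { float x, y, z; };
inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
inline float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
inline Vec3 normalize(Vec3 v) { return v * (1.0f / std::sqrt(dot(v, v))); }

const float kPi = 3.1415926f;

// Port of VanDerCorpus(n, 2u): peel off the bits of n with mod/divide.
float radicalInverseNoBitOps(uint32_t n) {
    float invBase = 0.5f, result = 0.0f;
    while (n > 0u) {
        result += float(n % 2u) * invBase;
        invBase *= 0.5f;
        n /= 2u;
    }
    return result;
}

// Port of the commented-out RadicalInverse_Vdc: reverse the 32 bits, scale by 2^-32.
float radicalInverseBits(uint32_t bits) {
    bits = (bits << 16u) | (bits >> 16u);
    bits = ((bits & 0x55555555u) << 1u) | ((bits & 0xAAAAAAAAu) >> 1u);
    bits = ((bits & 0x33333333u) << 2u) | ((bits & 0xCCCCCCCCu) >> 2u);
    bits = ((bits & 0x0F0F0F0Fu) << 4u) | ((bits & 0xF0F0F0F0u) >> 4u);
    bits = ((bits & 0x00FF00FFu) << 8u) | ((bits & 0xFF00FF00u) >> 8u);
    return float(bits) * 2.3283064365386963e-10f;
}

// Port of ImportanceSampleGGX with Xi = (xi1, xi2).
Vec3 importanceSampleGGX(float xi1, float xi2, Vec3 N, float roughness) {
    float a = roughness * roughness;
    float phi = 2.0f * kPi * xi1;
    float cosTheta = std::sqrt((1.0f - xi2) / (1.0f + (a * a - 1.0f) * xi2));
    float sinTheta = std::sqrt(std::max(0.0f, 1.0f - cosTheta * cosTheta));
    Vec3 H{std::cos(phi) * sinTheta, std::sin(phi) * sinTheta, cosTheta};
    // Build a tangent frame around N and move H from tangent space into it.
    Vec3 up = std::fabs(N.z) < 0.999f ? Vec3{0.0f, 0.0f, 1.0f} : Vec3{1.0f, 0.0f, 0.0f};
    Vec3 tangent = normalize(cross(up, N));
    Vec3 bitangent = cross(N, tangent);
    return normalize(tangent * H.x + bitangent * H.y + N * H.z);
}

// Port of the shader's main(): NdotL-weighted average of env(L), with N = V = R.
Vec3 prefilter(Vec3 N, float roughness, const std::function<Vec3(Vec3)>& env,
               uint32_t sampleCount = 1024u) {
    N = normalize(N);
    Vec3 V = N;
    Vec3 sum{0.0f, 0.0f, 0.0f};
    float totalWeight = 0.0f;
    for (uint32_t i = 0; i < sampleCount; ++i) {
        float xi1 = float(i) / float(sampleCount);
        float xi2 = radicalInverseNoBitOps(i);
        Vec3 H = importanceSampleGGX(xi1, xi2, N, roughness);
        Vec3 L = normalize(H * (2.0f * dot(V, H)) - V);
        float NdotL = std::max(dot(N, L), 0.0f);
        if (NdotL > 0.0f) {
            sum = sum + env(L) * NdotL;
            totalWeight += NdotL;
        }
    }
    return sum * (1.0f / totalWeight);
}
```

For example, `prefilter(n, 0.7f, [](Vec3) { return Vec3{0.2f, 0.4f, 0.6f}; })` should return approximately `(0.2, 0.4, 0.6)`, because the weights cancel out in a uniform environment.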

```cpp
// Copies each geometry's vertex array into vertex attribute 1, so the shader's
// "aPos" input (bound to location 1 in main()) receives the vertex positions.
class MyNodeVisitor : public osg::NodeVisitor
{
public:
    MyNodeVisitor() : osg::NodeVisitor(osg::NodeVisitor::TRAVERSE_ALL_CHILDREN)
    {
    }

    void apply(osg::Geode& geode)
    {
        unsigned int count = geode.getNumDrawables();
        for (unsigned int i = 0; i < count; i++)
        {
            osg::ref_ptr<osg::Geometry> geometry = geode.getDrawable(i)->asGeometry();
            if (!geometry.valid())
            {
                continue;
            }
            osg::Array* vertexArray = geometry->getVertexArray();
            geometry->setVertexAttribArray(1, vertexArray);
        }
        traverse(geode);
    }
};
```

```cpp
// Creates six PRE_RENDER slave cameras, one per cube face, that render the scene into a
// textureWidth x textureHeight cube map and read each face back into an osg::Image.
osg::ref_ptr<osg::TextureCubeMap> getTextureCubeMap(osgViewer::Viewer& viewer,
                                                    int textureWidth,
                                                    int textureHeight)
{
    unsigned int screenWidth, screenHeight;
    osg::GraphicsContext::WindowingSystemInterface* wsInterface = osg::GraphicsContext::getWindowingSystemInterface();
    wsInterface->getScreenResolution(osg::GraphicsContext::ScreenIdentifier(0), screenWidth, screenHeight);

    osg::ref_ptr<osg::GraphicsContext::Traits> traits = new osg::GraphicsContext::Traits;
    traits->x = 0;
    traits->y = 0;
    traits->width = screenWidth;
    traits->height = screenHeight;
    traits->windowDecoration = false;
    traits->doubleBuffer = true;
    traits->sharedContext = 0;
    traits->readDISPLAY();
    traits->setUndefinedScreenDetailsToDefaultScreen();

    osg::ref_ptr<osg::GraphicsContext> gc = osg::GraphicsContext::createGraphicsContext(traits.get());
    if (!gc)
    {
        osg::notify(osg::NOTICE) << "GraphicsWindow has not been created successfully." << std::endl;
        return NULL;
    }

    // Render target. GL_RGB has 8 bits per channel, so values above 1.0 are clamped.
    osg::ref_ptr<osg::TextureCubeMap> texture = new osg::TextureCubeMap;
    texture->setTextureSize(textureWidth, textureHeight);
    texture->setInternalFormat(GL_RGB);
    texture->setFilter(osg::Texture::MIN_FILTER, osg::Texture::LINEAR);
    texture->setFilter(osg::Texture::MAG_FILTER, osg::Texture::LINEAR);
    texture->setWrap(osg::Texture::WRAP_S, osg::Texture::CLAMP_TO_EDGE);
    texture->setWrap(osg::Texture::WRAP_T, osg::Texture::CLAMP_TO_EDGE);
    texture->setWrap(osg::Texture::WRAP_R, osg::Texture::CLAMP_TO_EDGE);

    // One entry per face: camera name (also the output file name), the cube face it
    // renders into, and its view offset relative to the master camera.
    struct FaceSetup
    {
        const char* name;
        osg::TextureCubeMap::Face face;
        osg::Matrixd viewOffset;
    };
    const FaceSetup faces[] =
    {
        { "Front face camera",  osg::TextureCubeMap::POSITIVE_Y, osg::Matrixd() },
        { "Top face camera",    osg::TextureCubeMap::POSITIVE_Z, osg::Matrixd::rotate(osg::inDegrees(-90.0f), 1.0, 0.0, 0.0) },
        { "Left face camera",   osg::TextureCubeMap::NEGATIVE_X, osg::Matrixd::rotate(osg::inDegrees(-90.0f), 0.0, 1.0, 0.0) * osg::Matrixd::rotate(osg::inDegrees(-90.0f), 0.0, 0.0, 1.0) },
        { "Right face camera",  osg::TextureCubeMap::POSITIVE_X, osg::Matrixd::rotate(osg::inDegrees(90.0f), 0.0, 1.0, 0.0) * osg::Matrixd::rotate(osg::inDegrees(90.0f), 0.0, 0.0, 1.0) },
        { "Bottom face camera", osg::TextureCubeMap::NEGATIVE_Z, osg::Matrixd::rotate(osg::inDegrees(90.0f), 1.0, 0.0, 0.0) * osg::Matrixd::rotate(osg::inDegrees(180.0f), 0.0, 0.0, 1.0) },
        { "Back face camera",   osg::TextureCubeMap::NEGATIVE_Y, osg::Matrixd::rotate(osg::inDegrees(180.0f), 1.0, 0.0, 0.0) },
    };

    for (const FaceSetup& f : faces)
    {
        osg::ref_ptr<osg::Camera> camera = new osg::Camera;
        camera->setName(f.name);
        camera->setGraphicsContext(gc.get());
        camera->setViewport(new osg::Viewport(0, 0, textureWidth, textureHeight));
        camera->setAllowEventFocus(false);
        camera->setRenderTargetImplementation(osg::Camera::FRAME_BUFFER_OBJECT);
        camera->setRenderOrder(osg::Camera::PRE_RENDER);

        // Render into this face of the cube map (mip level 0).
        camera->attach(osg::Camera::COLOR_BUFFER, texture.get(), 0, f.face);

        // Read the face back into an image named after the camera so main() can save it;
        // the image is stored in the texture slot of the same face.
        osg::ref_ptr<osg::Image> printImage = new osg::Image;
        printImage->setFileName(camera->getName());
        printImage->allocateImage(textureWidth, textureHeight, 1, GL_RGBA, GL_UNSIGNED_BYTE);
        texture->setImage(f.face, printImage.get());
        camera->attach(osg::Camera::COLOR_BUFFER, printImage.get());

        viewer.addSlave(camera.get(), osg::Matrixd(), f.viewOffset);
    }

    // 90-degree square frustum, so each slave camera covers exactly one cube face.
    viewer.getCamera()->setProjectionMatrixAsPerspective(90.0f, 1.0, 0.1, 10);
    //viewer.getCamera()->setNearFarRatio(0.0001f);

    return texture;
}
```
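The pairing between camera names and cube faces is only implicit: getTextureCubeMap() saves each face under its camera's name, and main() loads its input faces by those same file names. A small lookup table, sketched below, makes that contract explicit. `faceImagePath` and `kFaceCameraName` are hypothetical helpers, not part of the original code; the local `Face` enumerator values are assumed to mirror `osg::TextureCubeMap::Face` (`POSITIVE_X = 0` through `NEGATIVE_Z = 5`), so the table could index the same faces.

```cpp
#include <array>
#include <cassert>
#include <string>

// Local mirror of osg::TextureCubeMap::Face so this sketch compiles without OSG.
enum Face { POSITIVE_X = 0, NEGATIVE_X, POSITIVE_Y, NEGATIVE_Y, POSITIVE_Z, NEGATIVE_Z };

// Camera name (and file name stem) used for each face by getTextureCubeMap().
const std::array<const char*, 6> kFaceCameraName = {
    "Right face camera",   // POSITIVE_X
    "Left face camera",    // NEGATIVE_X
    "Front face camera",   // POSITIVE_Y
    "Back face camera",    // NEGATIVE_Y
    "Top face camera",     // POSITIVE_Z
    "Bottom face camera",  // NEGATIVE_Z
};

// Hypothetical helper: <root><level>/<camera name>.bmp, the layout main() reads and writes.
std::string faceImagePath(const std::string& root, int level, Face face) {
    return root + std::to_string(level) + "/" + kFaceCameraName[face] + ".bmp";
}
```

With such a table, the six hand-written `readImageFile` calls in main() could become a loop over the six faces.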

```cpp
int main()
{
    // Level to generate: 0, 1, 2, 3 or 4 (the program handles one level per run).
    int level = 0;
    int maxLevel = 4;
    float roughness = level * 1.0 / maxLevel;   // level / maxLevel

    // Base face size; each level halves it.
    int textureWidth = 128;
    int textureHeight = 128;
    float ratio = std::pow(0.5, level);
    int mipWidth = static_cast<int>(textureWidth * ratio);
    int mipHeight = static_cast<int>(textureHeight * ratio);

    // Input: the HDR environment cube map saved earlier for this level, one .bmp per face.
    std::string strDir = "e:/hdr/lod/" + std::to_string(level) + "/";

    osg::ref_ptr<osg::TextureCubeMap> tcm = new osg::TextureCubeMap;
    tcm->setFilter(osg::Texture::MIN_FILTER, osg::Texture::LINEAR);
    tcm->setFilter(osg::Texture::MAG_FILTER, osg::Texture::LINEAR);
    tcm->setWrap(osg::Texture::WRAP_S, osg::Texture::CLAMP_TO_EDGE);
    tcm->setWrap(osg::Texture::WRAP_T, osg::Texture::CLAMP_TO_EDGE);
    tcm->setWrap(osg::Texture::WRAP_R, osg::Texture::CLAMP_TO_EDGE);

    std::string strImagePosX = strDir + "Right face camera.bmp";
    osg::ref_ptr<osg::Image> imagePosX = osgDB::readImageFile(strImagePosX);
    tcm->setImage(osg::TextureCubeMap::POSITIVE_X, imagePosX);

    std::string strImageNegX = strDir + "Left face camera.bmp";
    osg::ref_ptr<osg::Image> imageNegX = osgDB::readImageFile(strImageNegX);
    tcm->setImage(osg::TextureCubeMap::NEGATIVE_X, imageNegX);

    std::string strImagePosY = strDir + "Front face camera.bmp";
    osg::ref_ptr<osg::Image> imagePosY = osgDB::readImageFile(strImagePosY);
    tcm->setImage(osg::TextureCubeMap::POSITIVE_Y, imagePosY);

    std::string strImageNegY = strDir + "Back face camera.bmp";
    osg::ref_ptr<osg::Image> imageNegY = osgDB::readImageFile(strImageNegY);
    tcm->setImage(osg::TextureCubeMap::NEGATIVE_Y, imageNegY);

    std::string strImagePosZ = strDir + "Top face camera.bmp";
    osg::ref_ptr<osg::Image> imagePosZ = osgDB::readImageFile(strImagePosZ);
    tcm->setImage(osg::TextureCubeMap::POSITIVE_Z, imagePosZ);

    std::string strImageNegZ = strDir + "Bottom face camera.bmp";
    osg::ref_ptr<osg::Image> imageNegZ = osgDB::readImageFile(strImageNegZ);
    tcm->setImage(osg::TextureCubeMap::NEGATIVE_Z, imageNegZ);

    // Unit box around the origin; its object-space positions are the sampling directions.
    osg::ref_ptr<osg::Box> box = new osg::Box(osg::Vec3(0, 0, 0), 1);
    osg::ref_ptr<osg::ShapeDrawable> drawable = new osg::ShapeDrawable(box);
    osg::ref_ptr<osg::Geode> geode = new osg::Geode;
    geode->addDrawable(drawable);

    // Feed the vertex positions to attribute 1 ("aPos").
    MyNodeVisitor nv;
    geode->accept(nv);

    osg::ref_ptr<osg::StateSet> stateset = geode->getOrCreateStateSet();
    stateset->setTextureAttributeAndModes(0, tcm, osg::StateAttribute::OVERRIDE | osg::StateAttribute::ON);

    // Shaders
    osg::ref_ptr<osg::Shader> vs1 = new osg::Shader(osg::Shader::VERTEX, vertexShader);
    osg::ref_ptr<osg::Shader> ps1 = new osg::Shader(osg::Shader::FRAGMENT, psShader);
    osg::ref_ptr<osg::Program> program1 = new osg::Program;
    program1->addShader(vs1);
    program1->addShader(ps1);
    program1->addBindAttribLocation("aPos", 1);

    osg::ref_ptr<osg::Uniform> tex0Uniform = new osg::Uniform("environmentMap", 0);
    stateset->addUniform(tex0Uniform);
    osg::ref_ptr<osg::Uniform> roughnessUniform = new osg::Uniform("roughness", roughness);
    stateset->addUniform(roughnessUniform);
    stateset->setAttribute(program1, osg::StateAttribute::ON);

    osgViewer::Viewer viewer;
    osg::ref_ptr<osgGA::TrackballManipulator> manipulator = new osgGA::TrackballManipulator();
    viewer.setCameraManipulator(manipulator);
    // Camera at the centre of the box.
    osg::Vec3d newEye(0, 0, 0);
    osg::Vec3 newCenter(0, 0, 0);
    osg::Vec3 newUp(0, 1, 0);
    manipulator->setHomePosition(newEye, newCenter, newUp);

    // Render the box into a cube map whose faces have the size of this level.
    osg::ref_ptr<osg::TextureCubeMap> textureCubeMap = getTextureCubeMap(viewer, mipWidth, mipHeight);
    if (!textureCubeMap.valid())
    {
        return 1;
    }
    viewer.setSceneData(geode.get());

    // After the first frame, save each face to e:/hdr/Prefilter/<level>/<camera name>.bmp.
    bool bPrinted = false;
    while (!viewer.done())
    {
        viewer.frame();
        if (!bPrinted)
        {
            bPrinted = true;
            unsigned int imageNumber = textureCubeMap->getNumImages();
            for (unsigned int i = 0; i < imageNumber; i++)
            {
                osg::ref_ptr<osg::Image> theImage = textureCubeMap->getImage(i);
                std::string strPrintName = "e:/hdr/Prefilter/" + std::to_string(level) + "/" + theImage->getFileName() + ".bmp";
                osgDB::writeImageFile(*theImage, strPrintName);
            }
        }
    }
    return 0;
}
```
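Because main() handles a single hard-coded `level` per run, it helps to see the parameters every run uses side by side. The sketch below tabulates them with the same formulas as main() (`roughness = level / maxLevel`, face size `= 128 * 0.5^level`). `LevelParams` and `prefilterLevels` are hypothetical names introduced only for this illustration.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical record of what one run of main() uses for a given level.
struct LevelParams {
    int level;
    float roughness;  // level / maxLevel
    int mipWidth;     // textureWidth * 0.5^level
    int mipHeight;    // textureHeight * 0.5^level
};

// Same formulas as main(), evaluated for every level from 0 to maxLevel.
std::vector<LevelParams> prefilterLevels(int maxLevel, int textureWidth, int textureHeight) {
    std::vector<LevelParams> out;
    for (int level = 0; level <= maxLevel; ++level) {
        double ratio = std::pow(0.5, level);
        out.push_back({level,
                       static_cast<float>(level * 1.0 / maxLevel),
                       static_cast<int>(textureWidth * ratio),
                       static_cast<int>(textureHeight * ratio)});
    }
    return out;
}
```

With the defaults (`maxLevel = 4`, 128 x 128), levels 0 to 4 use roughness 0, 0.25, 0.5, 0.75 and 1.0 with face sizes 128, 64, 32, 16 and 8. Those are the values main() picks up when `level` is edited and the program is rerun.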