Project download link: https://pan.baidu.com/s/1OfICplwlEtRBz_ta7Nwyyg?pwd=yr5c (extraction code: yr5c)


1. Model code: model.py

```python
# -*- coding: utf-8 -*-
# file: model.py
# author: JinTian
# time: 07/03/2017 3:07 PM
# Copyright 2017 JinTian. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# ------------------------------------------------------------------------
import tensorflow as tf  # TensorFlow 1.x (uses tf.contrib)
import numpy as np


def rnn_model(model, input_data, output_data, vocab_size, rnn_size=128, num_layers=2, batch_size=64,
              learning_rate=0.01):
    """
    Construct a multi-layer RNN language model that predicts the next word.

    :param model: cell type, one of 'rnn', 'gru' or 'lstm'
    :param input_data: input placeholder of word ids, shape [batch_size, time]
    :param output_data: target placeholder of word ids (None when generating)
    :param vocab_size: vocabulary size
    :param rnn_size: number of hidden units per layer
    :param num_layers: number of stacked RNN layers
    :param batch_size: batch size used for training
    :param learning_rate: Adam learning rate
    :return: dict of the tensors and ops needed for training or generation
    """
    end_points = {}

    def make_cell():
        # Build a new cell for every layer: reusing one instance via
        # [cell] * num_layers fails on newer TensorFlow 1.x releases.
        if model == 'rnn':
            return tf.contrib.rnn.BasicRNNCell(rnn_size)
        elif model == 'gru':
            return tf.contrib.rnn.GRUCell(rnn_size)
        elif model == 'lstm':
            # only the LSTM cell accepts state_is_tuple
            return tf.contrib.rnn.BasicLSTMCell(rnn_size, state_is_tuple=True)
        raise ValueError("model must be 'rnn', 'gru' or 'lstm', got %r" % model)

    cell = tf.contrib.rnn.MultiRNNCell([make_cell() for _ in range(num_layers)], state_is_tuple=True)

    if output_data is not None:
        initial_state = cell.zero_state(batch_size, tf.float32)
    else:
        # generation feeds a single sequence
        initial_state = cell.zero_state(1, tf.float32)

    with tf.device("/cpu:0"):
        embedding = tf.get_variable('embedding', initializer=tf.random_uniform(
            [vocab_size + 1, rnn_size], -1.0, 1.0))
        inputs = tf.nn.embedding_lookup(embedding, input_data)

    # inputs: [batch_size, ?, rnn_size] = [64, ?, 128]
    outputs, last_state = tf.nn.dynamic_rnn(cell, inputs, initial_state=initial_state)
    output = tf.reshape(outputs, [-1, rnn_size])

    # output projection; weights drawn from a truncated normal distribution
    weights = tf.Variable(tf.truncated_normal([rnn_size, vocab_size + 1]))
    bias = tf.Variable(tf.zeros(shape=[vocab_size + 1]))
    # predicted logits: [?, vocab_size+1]
    logits = tf.nn.bias_add(tf.matmul(output, weights), bias=bias)

    if output_data is not None:
        # output_data holds the ground-truth word ids; convert them to one-hot labels
        labels = tf.one_hot(tf.reshape(output_data, [-1]), depth=vocab_size + 1)
        # labels: [?, vocab_size+1]
        loss = tf.nn.softmax_cross_entropy_with_logits(labels=labels, logits=logits)
        # loss: one value per time step, shape [?]
        total_loss = tf.reduce_mean(loss)
        optimizer = tf.train.AdamOptimizer(learning_rate)
        train_op = optimizer.minimize(total_loss)
        # Optional gradient clipping instead of minimize():
        # gvs = optimizer.compute_gradients(total_loss)  # list of (gradient, variable)
        # clipped = [(tf.clip_by_value(g, -10., 10.), v) for g, v in gvs if g is not None]
        # train_op = optimizer.apply_gradients(clipped)

        end_points['initial_state'] = initial_state
        end_points['output'] = output
        end_points['train_op'] = train_op
        end_points['total_loss'] = total_loss
        end_points['loss'] = loss
        end_points['last_state'] = last_state
    else:
        prediction = tf.nn.softmax(logits)

        end_points['initial_state'] = initial_state
        end_points['last_state'] = last_state
        end_points['prediction'] = prediction

    return end_points
```
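The shape bookkeeping in `rnn_model` (flatten the RNN outputs, project them to vocabulary logits, then compute a softmax cross-entropy against one-hot labels) is easiest to check on a tiny example. The following is a NumPy-only sketch, not TensorFlow, with arbitrary toy sizes. It also shows that the cross-entropy yields one loss value per row (per time step), not a `[?, vocab_size+1]` matrix.

```python
import numpy as np

# Toy sizes (arbitrary): 2 poems, 5 time steps, 8 hidden units, vocabulary of 10 (+1)
batch_size, time_steps, rnn_size, vocab_size = 2, 5, 8, 10
rng = np.random.default_rng(0)

outputs = rng.standard_normal((batch_size, time_steps, rnn_size))  # like dynamic_rnn outputs
output = outputs.reshape(-1, rnn_size)                             # [batch*time, rnn_size]

weights = rng.standard_normal((rnn_size, vocab_size + 1))
bias = np.zeros(vocab_size + 1)
logits = output @ weights + bias                                   # [batch*time, vocab_size+1]

targets = rng.integers(0, vocab_size + 1, size=(batch_size, time_steps))  # word ids
labels = np.eye(vocab_size + 1)[targets.reshape(-1)]              # one-hot, like tf.one_hot

# numerically stable softmax cross-entropy, one value per row
shifted = logits - logits.max(axis=1, keepdims=True)
log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
loss = -(labels * log_probs).sum(axis=1)                          # [batch*time]
total_loss = loss.mean()                                          # like tf.reduce_mean

print(output.shape, logits.shape, loss.shape, total_loss)
```

Averaging the per-step losses is what `tf.reduce_mean(loss)` does before the optimizer step.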

2. Saving the model

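Tip: there are two ways to save a model: (1) save only the model parameters; (2) save both the model structure and the parameters.

1. Parameters only: when loading, you must first instantiate (rebuild) the model; otherwise there is no structure to load the parameters into.

2. Structure and parameters: the saved files are larger and take up more space, so this option is used less often.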
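To make option 1 concrete, here is a framework-free sketch using NumPy's `np.savez` and `np.load`. `TinyModel`, `save_params` and `load_params` are hypothetical names for illustration only, not part of this project or of TensorFlow. The saved file holds nothing but named arrays, so loading needs a freshly built model with matching shapes.

```python
import io
import numpy as np


class TinyModel:
    """Hypothetical stand-in for the real network: just named parameter arrays."""

    def __init__(self, rnn_size=4, vocab_size=6):
        self.params = {
            'weights': np.zeros((rnn_size, vocab_size + 1)),
            'bias': np.zeros(vocab_size + 1),
        }


def save_params(model, f):
    # parameters only: named arrays, no description of the model structure
    np.savez(f, **model.params)


def load_params(model, f):
    # the caller must already have built a model with matching shapes
    data = np.load(f)
    for name in list(model.params):
        if data[name].shape != model.params[name].shape:
            raise ValueError('shape mismatch for %s' % name)
        model.params[name] = data[name]
    return model


trained = TinyModel()
trained.params['bias'][:] = 0.5           # pretend training changed the values

buf = io.BytesIO()
save_params(trained, buf)
buf.seek(0)

restored = load_params(TinyModel(), buf)  # instantiate first, then load
print(restored.params['bias'])
```

The training script in this post follows the same pattern: it calls `rnn_model(...)` to rebuild the graph before `saver.restore(...)` fills in the variable values.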

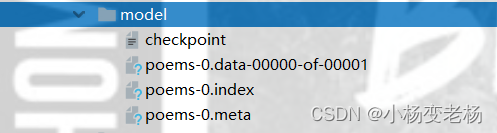

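3. The four files in the `model` directory:

1. `checkpoint`: a small text file that records the path of the latest saved checkpoint (and of the other checkpoints that are kept).

2. The second file (`poems-<epoch>.data-00000-of-00001`) holds the model parameters, i.e. the variable values.

3. The third file (`poems-<epoch>.index`) maps each variable name to its location in the data file. You rarely open it yourself, but restoring needs it.

4. The fourth file (`poems-<epoch>.meta`) stores the model graph (the model structure).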
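The `checkpoint` file is plain text, and `tf.train.latest_checkpoint` in the training script reads it to find the newest save. The sketch below parses an example file with regular expressions to show its contents and how the training script recovers the epoch from the checkpoint name. The file contents are illustrative, assuming saves at epochs 0 and 6 with the default `model_prefix` of `poems` and a placeholder directory `/path/to/model`. `parse_checkpoint_file` and `epoch_from_path` are hypothetical helpers, not TensorFlow API.

```python
import re


def parse_checkpoint_file(text):
    """Return (latest_path, all_paths) from the text of a TF1 'checkpoint' file."""
    latest = re.search(r'^model_checkpoint_path:\s*"([^"]*)"', text, re.MULTILINE)
    all_paths = re.findall(r'^all_model_checkpoint_paths:\s*"([^"]*)"', text, re.MULTILINE)
    return (latest.group(1) if latest else None), all_paths


def epoch_from_path(path):
    # same trick as the training script: the name ends in "-<global_step>"
    return int(path.split('-')[-1])


# hypothetical contents after saving at epochs 0 and 6
example = '''model_checkpoint_path: "/path/to/model/poems-6"
all_model_checkpoint_paths: "/path/to/model/poems-0"
all_model_checkpoint_paths: "/path/to/model/poems-6"
'''

latest, all_paths = parse_checkpoint_file(example)
print(latest, all_paths, epoch_from_path(latest))
```

Because the epoch is taken from the last `-` in the path, a directory name that itself contains hyphens does not break it.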

4. Main program: main.py

```python
# -*- coding: utf-8 -*-
# file: main.py
# author: JinTian
# time: 11/03/2017 9:53 AM
# Copyright 2017 JinTian. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# ------------------------------------------------------------------------
import os
import numpy as np
import tensorflow as tf  # TensorFlow 1.x
from poems.model import rnn_model
from poems.poems import process_poems, generate_batch

# parameters and paths
tf.flags.DEFINE_integer('batch_size', 64, 'batch size.')
tf.flags.DEFINE_float('learning_rate', 0.01, 'learning rate.')
tf.flags.DEFINE_string('model_dir', os.path.abspath('./model'), 'model save path.')  # checkpoint directory
tf.flags.DEFINE_string('file_path', os.path.abspath('./data/poems.txt'), 'file name of poems.')  # training data
tf.flags.DEFINE_string('model_prefix', 'poems', 'model save prefix.')
tf.flags.DEFINE_integer('epochs', 10, 'train how many epochs.')  # number of training epochs

FLAGS = tf.flags.FLAGS  # parsed flag values


def run_training():
    if not os.path.exists(FLAGS.model_dir):
        os.makedirs(FLAGS.model_dir)

    poems_vector, word_to_int, vocabularies = process_poems(FLAGS.file_path)
    batches_inputs, batches_outputs = generate_batch(FLAGS.batch_size, poems_vector, word_to_int)

    input_data = tf.placeholder(tf.int32, [FLAGS.batch_size, None])
    output_targets = tf.placeholder(tf.int32, [FLAGS.batch_size, None])

    # pass FLAGS.batch_size so the initial state matches the placeholders
    end_points = rnn_model(model='lstm', input_data=input_data, output_data=output_targets,
                           vocab_size=len(vocabularies), rnn_size=128, num_layers=2,
                           batch_size=FLAGS.batch_size, learning_rate=FLAGS.learning_rate)

    saver = tf.train.Saver(tf.global_variables())
    init_op = tf.group(tf.global_variables_initializer(), tf.local_variables_initializer())
    with tf.Session() as sess:
        # Optional debugging (requires: from tensorflow.python import debug as tf_debug)
        # sess = tf_debug.LocalCLIDebugWrapperSession(sess=sess)
        # sess.add_tensor_filter("has_inf_or_nan", tf_debug.has_inf_or_nan)
        sess.run(init_op)

        start_epoch = 0
        # Resume training: if a previous checkpoint exists, continue from it
        checkpoint = tf.train.latest_checkpoint(FLAGS.model_dir)
        if checkpoint:
            saver.restore(sess, checkpoint)  # load the saved variable values
            print("## restore from the checkpoint {0}".format(checkpoint))
            # the checkpoint name ends in "-<epoch>"
            start_epoch += int(checkpoint.split('-')[-1])
        print('## start training...')
        epoch = start_epoch  # defined even if interrupted before the first epoch
        try:
            n_chunk = len(poems_vector) // FLAGS.batch_size
            for epoch in range(start_epoch, FLAGS.epochs):
                for batch in range(n_chunk):
                    loss, _, _ = sess.run([
                        end_points['total_loss'],
                        end_points['last_state'],
                        end_points['train_op']
                    ], feed_dict={input_data: batches_inputs[batch], output_targets: batches_outputs[batch]})
                    print('Epoch: %d, batch: %d, training loss: %.6f' % (epoch, batch, loss))
                if epoch % 6 == 0:
                    saver.save(sess, os.path.join(FLAGS.model_dir, FLAGS.model_prefix), global_step=epoch)
        except KeyboardInterrupt:
            print('## Interrupted manually, trying to save a checkpoint now...')
            saver.save(sess, os.path.join(FLAGS.model_dir, FLAGS.model_prefix), global_step=epoch)
            print('## Last epoch was saved, next time will start from epoch {}.'.format(epoch))


def main(_):
    run_training()


if __name__ == '__main__':
    tf.app.run()
```
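The training loop depends on `generate_batch` from `poems/poems.py`, which this post does not show. `rnn_model` compares the prediction at every time step with `output_data`, so a reasonable assumption is that the targets are the inputs shifted one step to the left. The hypothetical NumPy sketch below, `make_batches`, illustrates that idea. The padding id and the function name are illustrative, and the project's real helper may differ.

```python
import numpy as np


def make_batches(batch_size, poems_vector, pad_id):
    """Hypothetical stand-in for poems.poems.generate_batch.

    Pads each batch to its longest poem and builds targets by shifting the
    inputs one step to the left, so the model learns to predict the next word.
    """
    n_chunk = len(poems_vector) // batch_size   # leftover poems are dropped, as in main.py
    x_batches, y_batches = [], []
    for i in range(n_chunk):
        batch = poems_vector[i * batch_size:(i + 1) * batch_size]
        length = max(len(p) for p in batch)
        x = np.full((batch_size, length), pad_id, dtype=np.int32)
        for row, poem in enumerate(batch):
            x[row, :len(poem)] = poem
        y = x.copy()
        y[:, :-1] = x[:, 1:]                     # target at step t is the input at step t+1
        x_batches.append(x)
        y_batches.append(y)
    return x_batches, y_batches


poems = [[1, 2, 3], [4, 5], [6, 7, 8, 9], [2, 3]]   # toy word-id sequences
xs, ys = make_batches(2, poems, pad_id=0)
print(xs[0])
print(ys[0])
```

Padding each batch only to its own longest poem is why the placeholders in main.py use `None` for the time dimension.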
