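Reference:

Apache Hive 3.1.2 from single-node to high-availability deployment: HiveServer2 HA, Metastore HA, Hive on Spark, HiveServer2 web UI, HA cluster startup scripts (blog of 薛定谔的猫不吃猫粮, CSDN)

I did not use the Hive on Spark part of that article, because testing showed version conflicts.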

I. Hive cluster plan (blue parts)

| ck1 | ck2 | ck3 |
| --- | --- | --- |
| SecondaryNameNode | NameNode | |
| DataNode | DataNode | DataNode |
| ResourceManager | | |
| yarn historyserver | | |
| NodeManager | NodeManager | NodeManager |
| | | MySQL |
| | hiveServer2 | hiveServer2 |
| | hiveMetastore | hiveMetastore |
| Spark | | |
| Spark JobHistoryServer | | |

II. Configuration files

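Remember to copy a MySQL JDBC driver jar (Connector/J 6 or later) into Hive's lib directory. Otherwise you will get the following error:

[hive] error: Caused by: java.lang.ClassNotFoundException: com.mysql.cj.jdbc.Driver (blog of 胖胖学编程, CSDN)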
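You may want to check that a suitable driver is present before you start Hive. The following is a minimal sketch. It assumes the jar keeps its usual file name: mysql-connector-java-X.Y.Z.jar, or mysql-connector-j-X.Y.Z.jar for newer releases. The function name has_cj_driver is hypothetical. Connector/J 6 and later ship the com.mysql.cj.jdbc.Driver class that hive-site.xml refers to, so the sketch accepts a major version of 6 or higher.

```shell
#!/bin/bash
# Hypothetical helper: print the first MySQL Connector/J jar in LIB_DIR whose
# major version is >= 6 (those provide com.mysql.cj.jdbc.Driver); fail otherwise.
# Assumes the usual file naming, e.g. mysql-connector-java-8.0.28.jar.
has_cj_driver() {
  local lib_dir=${1:-$HIVE_HOME/lib} jar ver major
  for jar in "$lib_dir"/mysql-connector-j*.jar; do
    [ -e "$jar" ] || continue      # glob matched nothing
    ver=$(basename "$jar" .jar)    # e.g. mysql-connector-java-8.0.28
    ver=${ver%-bin}                # some older jars end in -bin
    ver=${ver##*-}                 # text after the last '-', e.g. 8.0.28
    major=${ver%%.*}               # e.g. 8
    if [[ $major =~ ^[0-9]+$ ]] && [ "$major" -ge 6 ]; then
      echo "$jar"
      return 0
    fi
  done
  return 1
}

# Usage: has_cj_driver "$HIVE_HOME/lib" || echo "copy Connector/J 6+ into \$HIVE_HOME/lib"
```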

1. Identical on ck3 and ck2

1) Append these 3 lines to the end of hive-env.sh:

# Set HADOOP_HOME to point to a specific hadoop install directory
HADOOP_HOME=/home/data_warehouse/module/hadoop-3.1.3
# Hive Configuration Directory can be controlled by:
export HIVE_CONF_DIR=/home/data_warehouse/module/hive-3.1.2/conf
# Folder containing extra libraries required for hive compilation/execution can be controlled by:
export HIVE_AUX_JARS_PATH=/home/data_warehouse/module/hive-3.1.2/lib
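Because the same three settings go on both ck2 and ck3, you can append them with a script instead of editing each file by hand. The following is a minimal sketch. The function name append_hive_env is hypothetical, and the sketch assumes that an uncommented `export HIVE_CONF_DIR=` line means a previous run already added the block. The template's own commented-out lines do not count.

```shell
#!/bin/bash
# Hypothetical helper: append the three hive-env.sh settings to FILE once.
append_hive_env() {
  local file=$1
  # Skip if an uncommented HIVE_CONF_DIR export is already present.
  grep -q '^export HIVE_CONF_DIR=' "$file" 2>/dev/null && return 0
  cat >> "$file" <<'EOF'
HADOOP_HOME=/home/data_warehouse/module/hadoop-3.1.3
export HIVE_CONF_DIR=/home/data_warehouse/module/hive-3.1.2/conf
export HIVE_AUX_JARS_PATH=/home/data_warehouse/module/hive-3.1.2/lib
EOF
}

# Usage: append_hive_env /home/data_warehouse/module/hive-3.1.2/conf/hive-env.sh
```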

2) Environment variables

#HIVE_HOME
export HIVE_HOME=/home/data_warehouse/module/hive-3.1.2
export PATH=$PATH:$HIVE_HOME/bin

2. Different on ck3 and ck2

ck3

hive-site.xml

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <!-- JDBC connection URL -->
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://ck3:3306/metastore?useSSL=false</value>
  </property>
  <!-- JDBC driver -->
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.cj.jdbc.Driver</value>
  </property>
  <!-- JDBC username -->
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <!-- JDBC password -->
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>123456</value>
  </property>
  <!-- Metastore schema version verification -->
  <property>
    <name>hive.metastore.schema.verification</name>
    <value>false</value>
  </property>
  <!-- Metastore event notification API authorization -->
  <property>
    <name>hive.metastore.event.db.notification.api.auth</name>
    <value>false</value>
  </property>
  <!-- Metastore address to connect to (single-node setting, replaced by the HA setting below)
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://ck3:9083</value>
  </property> -->
  <!-- Default Hive warehouse directory on HDFS -->
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/user/hive/warehouse</value>
  </property>
  <property>
    <name>hive.cli.print.current.db</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.cli.print.header</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.server2.session.check.interval</name>
    <value>60000</value>
  </property>
  <property>
    <name>hive.aux.jars.path</name>
    <value>/home/data_warehouse/module/hive-3.1.2/lib</value>
  </property>
  <property>
    <name>hive.execution.engine</name>
    <value>mr</value>
  </property>
  <!-- Hive Metastore high availability -->
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://ck2:9083,thrift://ck3:9083</value>
  </property>
  <!-- HiveServer2 high availability -->
  <property>
    <name>hive.server2.support.dynamic.service.discovery</name>
    <value>true</value>
  </property>
  <!-- <property>
    <name>hive.server2.active.passive.ha.enable</name>
    <value>true</value>
  </property> -->
  <property>
    <name>hive.server2.zookeeper.namespace</name>
    <value>hiveserver2_zk</value>
  </property>
  <property>
    <name>hive.zookeeper.quorum</name>
    <value>ck1:2181,ck2:2181,ck3:2181</value>
  </property>
  <property>
    <name>hive.zookeeper.client.port</name>
    <value>2181</value>
  </property>
  <!-- Node name, e.g. ck2 or ck3: must be this machine's own hostname -->
  <property>
    <name>hive.server2.thrift.bind.host</name>
    <value>ck3</value>
  </property>
  <property>
    <name>hive.server2.thrift.port</name>
    <value>10000</value>
  </property>
  <!-- HiveServer2 web UI -->
  <property>
    <name>hive.server2.webui.host</name>
    <value>ck3</value>
  </property>
  <property>
    <name>hive.server2.webui.port</name>
    <value>10002</value>
  </property>
  <!-- <property>
    <name>hive.server2.idle.session.timeout</name>
    <value>3600000</value>
  </property> -->
</configuration>

ck2

hive-site.xml

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <!-- JDBC connection URL -->
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://ck3:3306/metastore?useSSL=false</value>
  </property>
  <!-- JDBC driver -->
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.cj.jdbc.Driver</value>
  </property>
  <!-- JDBC username -->
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <!-- JDBC password -->
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>123456</value>
  </property>
  <!-- Metastore schema version verification -->
  <property>
    <name>hive.metastore.schema.verification</name>
    <value>false</value>
  </property>
  <!-- Metastore event notification API authorization -->
  <property>
    <name>hive.metastore.event.db.notification.api.auth</name>
    <value>false</value>
  </property>
  <!-- Metastore address to connect to (single-node setting, replaced by the HA setting below)
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://ck3:9083</value>
  </property> -->
  <!-- Default Hive warehouse directory on HDFS -->
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/user/hive/warehouse</value>
  </property>
  <property>
    <name>hive.cli.print.current.db</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.cli.print.header</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.server2.session.check.interval</name>
    <value>60000</value>
  </property>
  <property>
    <name>hive.aux.jars.path</name>
    <value>/home/data_warehouse/module/hive-3.1.2/lib</value>
  </property>
  <property>
    <name>hive.execution.engine</name>
    <value>mr</value>
  </property>
  <!-- Hive Metastore high availability -->
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://ck2:9083,thrift://ck3:9083</value>
  </property>
  <!-- HiveServer2 high availability -->
  <property>
    <name>hive.server2.support.dynamic.service.discovery</name>
    <value>true</value>
  </property>
  <!-- <property>
    <name>hive.server2.active.passive.ha.enable</name>
    <value>true</value>
  </property> -->
  <property>
    <name>hive.server2.zookeeper.namespace</name>
    <value>hiveserver2_zk</value>
  </property>
  <property>
    <name>hive.zookeeper.quorum</name>
    <value>ck1:2181,ck2:2181,ck3:2181</value>
  </property>
  <property>
    <name>hive.zookeeper.client.port</name>
    <value>2181</value>
  </property>
  <!-- Node name, e.g. ck2 or ck3: must be this machine's own hostname -->
  <property>
    <name>hive.server2.thrift.bind.host</name>
    <value>ck2</value>
  </property>
  <property>
    <name>hive.server2.thrift.port</name>
    <value>10000</value>
  </property>
  <!-- HiveServer2 web UI -->
  <property>
    <name>hive.server2.webui.host</name>
    <value>ck2</value>
  </property>
  <property>
    <name>hive.server2.webui.port</name>
    <value>10002</value>
  </property>
  <!-- <property>
    <name>hive.server2.idle.session.timeout</name>
    <value>3600000</value>
  </property> -->
</configuration>
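The two hive-site.xml files above differ only in the values of hive.server2.thrift.bind.host and hive.server2.webui.host. That means ck2's file can be generated from ck3's file rather than maintained by hand. The following is a sketch that uses sed, and the function name site_for_host is hypothetical. It replaces only the literal text `<value>ck3</value>`. The MySQL URL jdbc:mysql://ck3:3306/... and the thrift:// metastore URIs therefore stay unchanged.

```shell
#!/bin/bash
# Hypothetical helper: print SRC with every literal <value>FROM</value>
# replaced by <value>TO</value>. Other occurrences of FROM (e.g. inside
# jdbc:mysql://ck3:3306/... or thrift://ck3:9083) are not touched.
site_for_host() {
  local src=$1 from=$2 to=$3
  sed "s#<value>${from}</value>#<value>${to}</value>#" "$src"
}

# Usage (run on ck3; destination path assumed from this setup):
#   site_for_host "$HIVE_HOME/conf/hive-site.xml" ck3 ck2 > /tmp/hive-site.xml.ck2
#   scp /tmp/hive-site.xml.ck2 ck2:/home/data_warehouse/module/hive-3.1.2/conf/hive-site.xml
```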

III. Scripts for running the HiveServer2 and Hive Metastore services (identical on ck2 and ck3; both scripts below are required)

Put both scripts in /home/data_warehouse/module/hive-3.1.2/bin and make them executable (chmod 777).

1. hiveservices.sh

#!/bin/bash
HIVE_LOG_DIR=$HIVE_HOME/logs
mkdir -p $HIVE_LOG_DIR

# Check whether a process is running properly: $1 = process name, $2 = port
function check_process()
{
    # PIDs of processes whose command line contains the name (case-insensitive)
    pid=$(ps -ef 2>/dev/null | grep -v grep | grep -i $1 | awk '{print $2}')
    # PID of the process listening on the port
    ppid=$(netstat -nltp 2>/dev/null | grep $2 | awk '{print $7}' | cut -d '/' -f 1)
    echo $pid
    # Healthy only if the port is listening and its PID appears in the matched PID list
    [[ "$pid" =~ "$ppid" ]] && [ "$ppid" ] && return 0 || return 1
}

function hive_start()
{
    metapid=$(check_process HiveMetastore 9083)
    # Start the metastore in the background, wait 4 s, then block until HDFS leaves safe mode
    cmd="nohup hive --service metastore >$HIVE_LOG_DIR/metastore.log 2>&1 &"
    cmd=$cmd" sleep 4; hdfs dfsadmin -safemode wait >/dev/null 2>&1"
    [ -z "$metapid" ] && eval $cmd || echo "Metastore is already running"
    server2pid=$(check_process HiveServer2 10000)
    cmd="nohup hive --service hiveserver2 >$HIVE_LOG_DIR/hiveServer2.log 2>&1 &"
    [ -z "$server2pid" ] && eval $cmd || echo "HiveServer2 is already running"
}

function hive_stop()
{
    metapid=$(check_process HiveMetastore 9083)
    [ "$metapid" ] && kill $metapid || echo "Metastore is not running"
    server2pid=$(check_process HiveServer2 10000)
    [ "$server2pid" ] && kill $server2pid || echo "HiveServer2 is not running"
}

case $1 in
"start")
    hive_start
    ;;
"stop")
    hive_stop
    ;;
"restart")
    hive_stop
    sleep 2
    hive_start
    ;;
"status")
    check_process HiveMetastore 9083 >/dev/null && echo "Metastore is running normally" || echo "Metastore is not running properly"
    check_process HiveServer2 10000 >/dev/null && echo "HiveServer2 is running normally" || echo "HiveServer2 is not running properly"
    ;;
*)
    echo Invalid Args!
    echo 'Usage: '$(basename $0)' start|stop|restart|status'
    ;;
esac

2. hive2server.sh

#!/bin/bash
if [ $# -lt 1 ]
then
    echo "No Args Input..."
    exit 1
fi

case $1 in
"start")
    echo " =================== ck3: starting HiveServer2 and Metastore ==================="
    ssh ck3 "/home/data_warehouse/module/hive-3.1.2/bin/hiveservices.sh start"
    echo " =================== ck2: starting HiveServer2 and Metastore ==================="
    ssh ck2 "/home/data_warehouse/module/hive-3.1.2/bin/hiveservices.sh start"
    ;;
"stop")
    echo " =================== ck3: stopping HiveServer2 and Metastore ==================="
    ssh ck3 "/home/data_warehouse/module/hive-3.1.2/bin/hiveservices.sh stop"
    echo " =================== ck2: stopping HiveServer2 and Metastore ==================="
    ssh ck2 "/home/data_warehouse/module/hive-3.1.2/bin/hiveservices.sh stop"
    ;;
"status")
    echo " =================== ck3: HiveServer2 and Metastore status ==================="
    ssh ck3 "/home/data_warehouse/module/hive-3.1.2/bin/hiveservices.sh status"
    echo " =================== ck2: HiveServer2 and Metastore status ==================="
    ssh ck2 "/home/data_warehouse/module/hive-3.1.2/bin/hiveservices.sh status"
    ;;
*)
    echo "Input Args Error...start|stop|status"
    ;;
esac
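hive2server.sh repeats the same ssh call for each host. The following sketch keeps the same behaviour, including the host order ck3 then ck2, but holds the host list in one array so that adding a node means editing one line. The names run_on_all and SSH are hypothetical. SSH is an override hook that lets you do a dry run with `SSH=echo`, which prints the commands instead of running them.

```shell
#!/bin/bash
# Sketch: hive2server.sh with the host list in one place.
HOSTS=(ck3 ck2)
SCRIPT=/home/data_warehouse/module/hive-3.1.2/bin/hiveservices.sh
SSH=${SSH:-ssh}   # hypothetical override for dry runs, e.g. SSH=echo

run_on_all() {
  local action=$1 host
  case $action in
    start|stop|status) ;;
    *) echo "Input Args Error...start|stop|status"; return 1 ;;
  esac
  for host in "${HOSTS[@]}"; do
    echo " =================== $host: $action HiveServer2 and Metastore ==================="
    $SSH "$host" "$SCRIPT $action"
  done
}

# Only act when an argument is given, e.g. ./hive2server.sh status
if [ $# -gt 0 ]; then
  run_on_all "$1"
fi
```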

IV. Starting the HiveServer2 and Metastore services

1. Start: on ck3 or ck2, run hive2server.sh start

2. Check status: on ck3 or ck2, run hive2server.sh status

3. Stop: on ck3 or ck2, run hive2server.sh stop

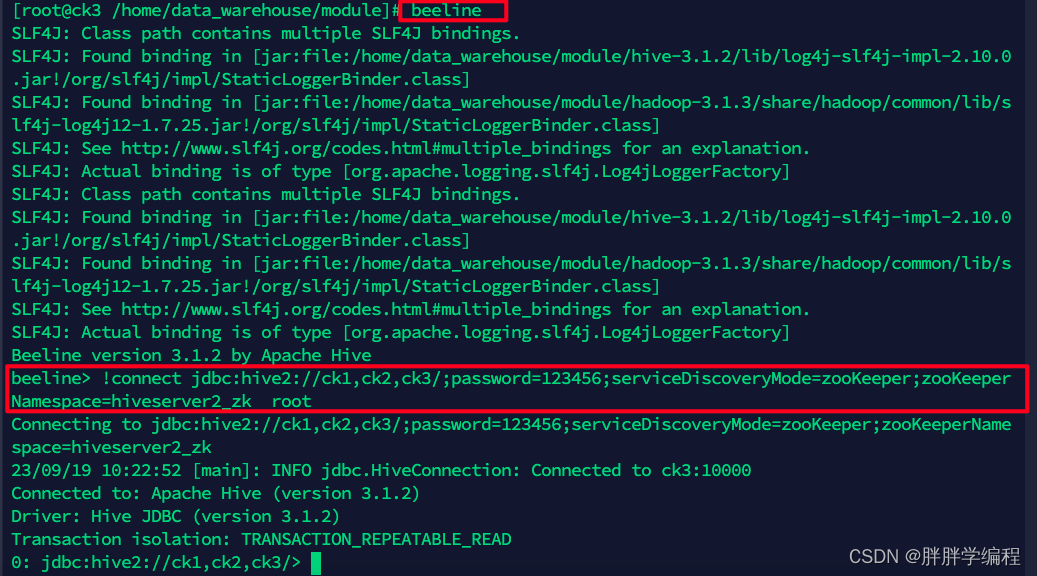
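Right after `hive2server.sh start`, the status check can still report HiveServer2 as not running properly. check_process requires port 10000 to be listening, and HiveServer2 can take a while after launch before it opens that port. The following minimal sketch polls a port with bash's built-in /dev/tcp redirection until it accepts connections. The function name wait_for_port and its default of 120 attempts are hypothetical choices.

```shell
#!/bin/bash
# Hypothetical helper: poll HOST:PORT until it accepts a TCP connection,
# trying up to TRIES times (default 120), about once per second.
wait_for_port() {
  local host=$1 port=$2 tries=${3:-120} i
  for ((i = 0; i < tries; i++)); do
    # Opening /dev/tcp/HOST/PORT succeeds only if something accepts the
    # connection; `timeout 2` bounds each attempt on unreachable hosts.
    if timeout 2 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}

# Usage: wait_for_port ck3 9083 && wait_for_port ck3 10000 && hive2server.sh status
```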

V. Usage

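Enter the following two commands in order. In ZooKeeper service-discovery mode, the hosts after jdbc:hive2:// are the ZooKeeper ensemble, not the HiveServer2 instances. The trailing root is the username.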

beeline

!connect jdbc:hive2://ck1,ck2,ck3/;password=123456;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2_zk root
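The same connection can also be made non-interactively. The following sketch builds the discovery URL from the hive.zookeeper.quorum and hive.server2.zookeeper.namespace values in hive-site.xml. It then passes the URL to beeline's -u (URL), -n (user), -p (password) and -e (run one statement and exit) options. The function name zk_jdbc_url is hypothetical. The beeline call is skipped when beeline is not on PATH.

```shell
#!/bin/bash
# Hypothetical helper: build the ZooKeeper service-discovery JDBC URL.
# quorum    = hive.zookeeper.quorum
# namespace = hive.server2.zookeeper.namespace
zk_jdbc_url() {
  local quorum=$1 namespace=$2
  echo "jdbc:hive2://${quorum}/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=${namespace}"
}

URL=$(zk_jdbc_url "ck1:2181,ck2:2181,ck3:2181" hiveserver2_zk)
echo "$URL"

# Non-interactive equivalent of "beeline" + "!connect ... root".
if command -v beeline >/dev/null 2>&1; then
  beeline -u "$URL" -n root -p 123456 -e "show databases;"
fi
```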