文章目录

- 概要

- 重平衡通知机制

- 消费组组状态

- 消费端重平衡流程

- Broker端重平衡流程

概要

上一篇Kafka消费者组重平衡主要介绍了重平衡相关的概念,本篇主要梳理重平衡发生的流程。

为了更好地观察,数据准备如下:

kafka版本:kafka_2.13-3.2.1

控制台创建topic (2个分区1个副本):

bin/kafka-topics.sh --create --bootstrap-server node1:9092 --replication-factor 1 --partitions 2 --topic test-rebalance

本地启动两个SpringBoot项目实例,代码如下

@KafkaListener(topics = "test-rebalance", groupId = "test-group")

public void rebalanceConsumer(ConsumerRecord<String, String> recordInfo) {

int partition = recordInfo.partition();

System.out.println("partition:" + partition + " value:" + recordInfo.value());

}

重平衡通知机制

Kafka Java 消费者需要定期地发送心跳请求(Heartbeat Request)到 Broker 端的协调者,以表明它还存活着。在 Kafka 0.10.1.0 版本之前,发送心跳请求是在消费者主线程完成的,也就是调用 KafkaConsumer.poll 方法的那个线程。

这样的设计存在弊端,一旦消息处理消耗了过长的时间,心跳请求将无法及时发到协调者那里,导致协调者“错误地”认为该消费者已“死”。自 0.10.1.0 版本开始,Kafka引入了一个单独的心跳线程来专门执行心跳请求发送,避免了这个问题。

重平衡的通知机制正是通过心跳线程来完成的。当协调者决定开启新一轮重平衡后,它会将“REBALANCE_IN_PROGRESS”封装进心跳请求的响应中,发还给消费者实例。当消费者实例发现心跳响应中包含了“REBALANCE_IN_PROGRESS”,就能立马知道重平衡又开始了,这就是重平衡的通知机制。

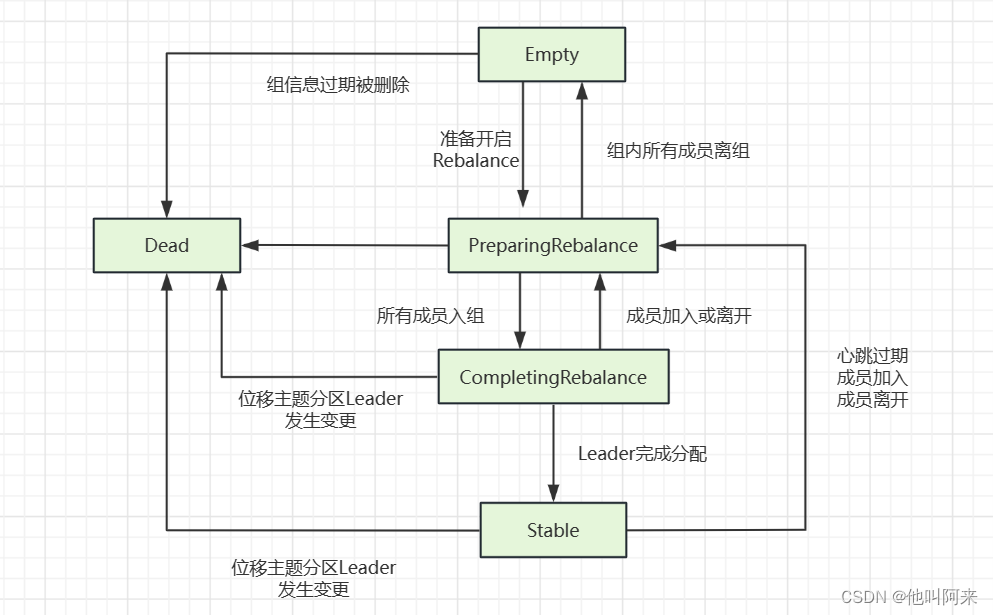

消费组组状态

Kafka 为消费者组定义了 5 种状态,它们分别是:Empty、Dead、PreparingRebalance、CompletingRebalance 和 Stable。那么,这 5 种状态的含义如下:

| 状态 | 含义 |

|---|---|

| Empty | 组内没有任何成员 |

| Dead | 组内没有任何成员,但组的元数据已经在协调者端删除 |

| PreparingRebalance | 消费组组准备开启重平衡,此时所有成员都要重新请求加入消费者组 |

| CompletingRebalance | 消费者组下所有成员已经加入,各个成员正在等待分配方案 |

| Stable | 消费者组的稳定状态,该状态表明重平衡已经完成,组内各成员都能够正常消费数据了 |

以下是各个状态的流转:

一个消费者组最开始是 Empty 状态,当重平衡过程开启后,它会被置于PreparingRebalance 状态等待成员加入,之后变更到CompletingRebalance 状态等待分配方案,最后流转到 Stable 状态完成重平衡。当有新成员加入或已有成员退出时,消费者组的状态从 Stable 直接跳到PreparingRebalance 状态,此时,所有现存成员就必须重新申请加入组。

创建一个topic并逐步启动两个消费者实例:

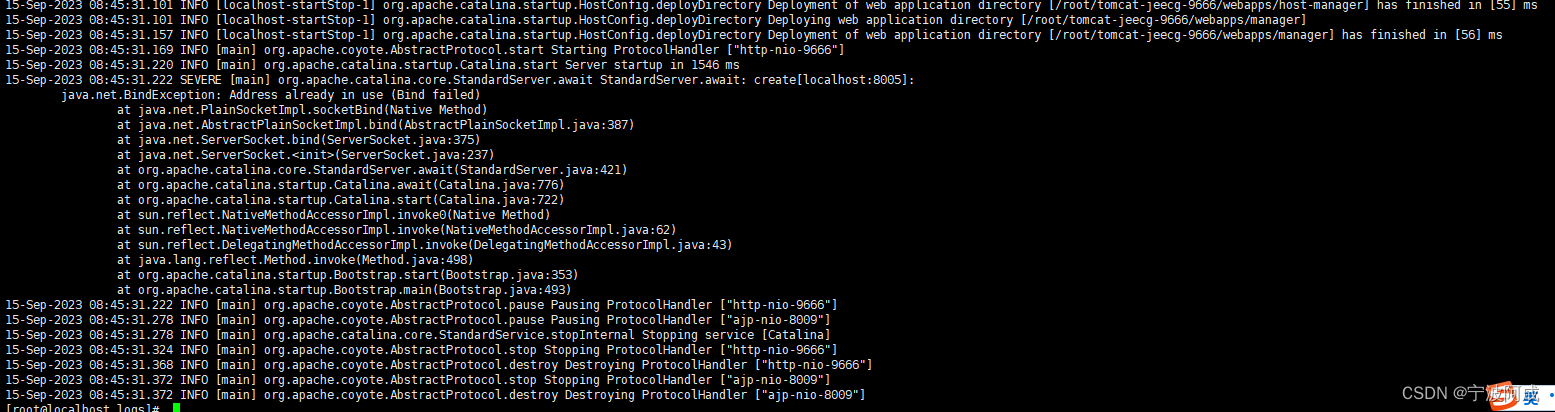

服务端日志:

INFO [GroupCoordinator 0]: Dynamic member with unknown member id joins group test-group in Empty state. Created a new member id consumer-1-a6c11c9f-ec26-4e66-adeb-832f699f1247 and request the member to rejoin with this id. (kafka.coordinator.group.GroupCoordinator)

INFO [GroupCoordinator 0]: Preparing to rebalance group test-group in state PreparingRebalance with old generation 3 (__consumer_offsets-12) (reason: Adding new member consumer-1-a6c11c9f-ec26-4e66-adeb-832f699f1247 with group instance id None; client reason: not provided) (kafka.coordinator.group.GroupCoordinator)

INFO [GroupCoordinator 0]: Stabilized group test-group generation 4 (__consumer_offsets-12) with 1 members (kafka.coordinator.group.GroupCoordinator)

INFO [GroupCoordinator 0]: Assignment received from leader consumer-1-a6c11c9f-ec26-4e66-adeb-832f699f1247 for group test-group for generation 4. The group has 1 members, 0 of which are static. (kafka.coordinator.group.GroupCoordinator)

客户端一启动日志

o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-1, groupId=test-group] (Re-)joining group

o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-1, groupId=test-group] (Re-)joining group

o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-1, groupId=test-group] Successfully joined group with generation 4

o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-1, groupId=test-group] Setting newly assigned partitions: test-rebalance-1, test-rebalance-0

o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-1, groupId=test-group] Setting offset for partition test-rebalance-1 to the committed offset FetchPosition{offset=0, offsetEpoch=Optional.empty, currentLeader=LeaderAndEpoch{leader=node1:9092 (id: 0 rack: null), epoch=0}}

o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-1, groupId=test-group] Setting offset for partition test-rebalance-0 to the committed offset FetchPosition{offset=0, offsetEpoch=Optional.empty, currentLeader=LeaderAndEpoch{leader=node1:9092 (id: 0 rack: null), epoch=0}}

o.s.k.l.KafkaMessageListenerContainer : test-group: partitions assigned: [test-rebalance-1, test-rebalance-0]

有新成员加入后broker日志

INFO [GroupCoordinator 0]: Dynamic member with unknown member id joins group test-group in Stable state. Created a new member id consumer-1-97eba1d4-7f5c-4d78-b979-ba6ab9c82395 and request the member to rejoin with this id. (kafka.coordinator.group.GroupCoordinator)

INFO [GroupCoordinator 0]: Preparing to rebalance group test-group in state PreparingRebalance with old generation 4 (__consumer_offsets-12) (reason: Adding new member consumer-1-97eba1d4-7f5c-4d78-b979-ba6ab9c82395 with group instance id None; client reason: not provided) (kafka.coordinator.group.GroupCoordinator)

INFO [GroupCoordinator 0]: Stabilized group test-group generation 5 (__consumer_offsets-12) with 2 members (kafka.coordinator.group.GroupCoordinator)

INFO [GroupCoordinator 0]: Assignment received from leader consumer-1-a6c11c9f-ec26-4e66-adeb-832f699f1247 for group test-group for generation 5. The group has 2 members, 0 of which are static. (kafka.coordinator.group.GroupCoordinator)

接下来启动客户端二

客户端一日志

o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-1, groupId=test-group] (Re-)joining group

o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-1, groupId=test-group] (Re-)joining group

o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-1, groupId=test-group] Successfully joined group with generation 5

o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-1, groupId=test-group] Setting newly assigned partitions: test-rebalance-0

o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-1, groupId=test-group] Setting offset for partition test-rebalance-0 to the committed offset FetchPosition{offset=0, offsetEpoch=Optional.empty, currentLeader=LeaderAndEpoch{leader=node1:9092 (id: 0 rack: null), epoch=0}}

o.s.k.l.KafkaMessageListenerContainer : test-group: partitions assigned: [test-rebalance-0]

客户端二日志

o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-1, groupId=test-group] Attempt to heartbeat failed since group is rebalancing

o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-1, groupId=test-group] Revoking previously assigned partitions [test-rebalance-1, test-rebalance-0]

o.s.k.l.KafkaMessageListenerContainer : test-group: partitions revoked: [test-rebalance-1, test-rebalance-0]

o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-1, groupId=test-group] (Re-)joining group

o.a.k.c.c.internals.AbstractCoordinator : [Consumer clientId=consumer-1, groupId=test-group] Successfully joined group with generation 5

o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-1, groupId=test-group] Setting newly assigned partitions: test-rebalance-1

o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-1, groupId=test-group] Setting offset for partition test-rebalance-1 to the committed offset FetchPosition{offset=0, offsetEpoch=Optional.empty, o.s.k.l.KafkaMessageListenerContainer : test-group: partitions assigned: [test-rebalance-1]

当所有成员都退出组后,消费者组状态变更为 Empty。Kafka 定期自动删除过期位移的条件就是,组要处于 Empty 状态。因此,如果你的消费者组停掉了很长时间(超过 7 天),那么 Kafka 很可能就把该组的位移数据删除了。

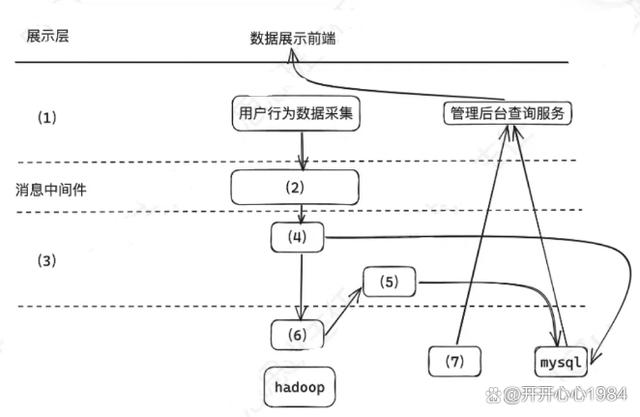

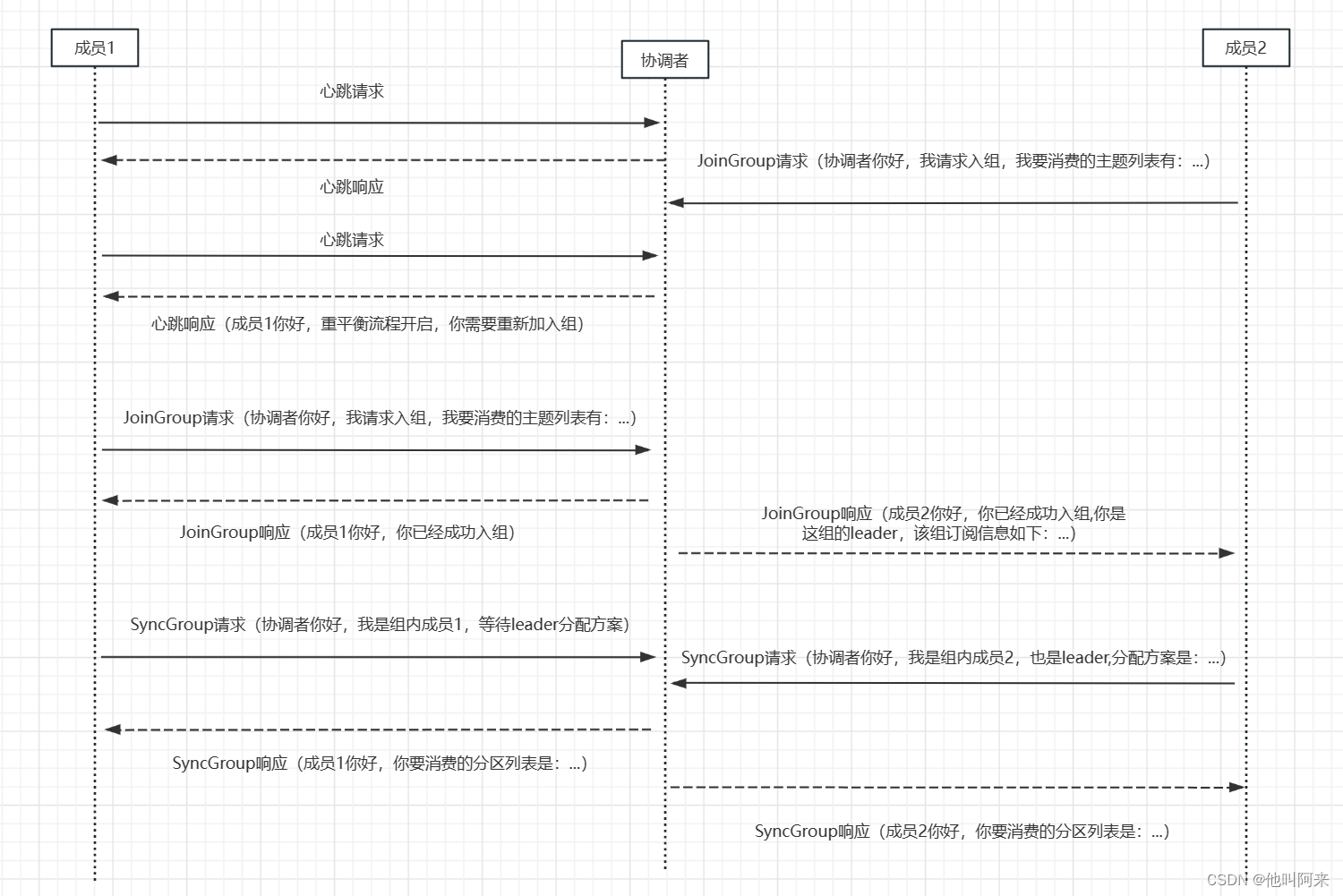

消费端重平衡流程

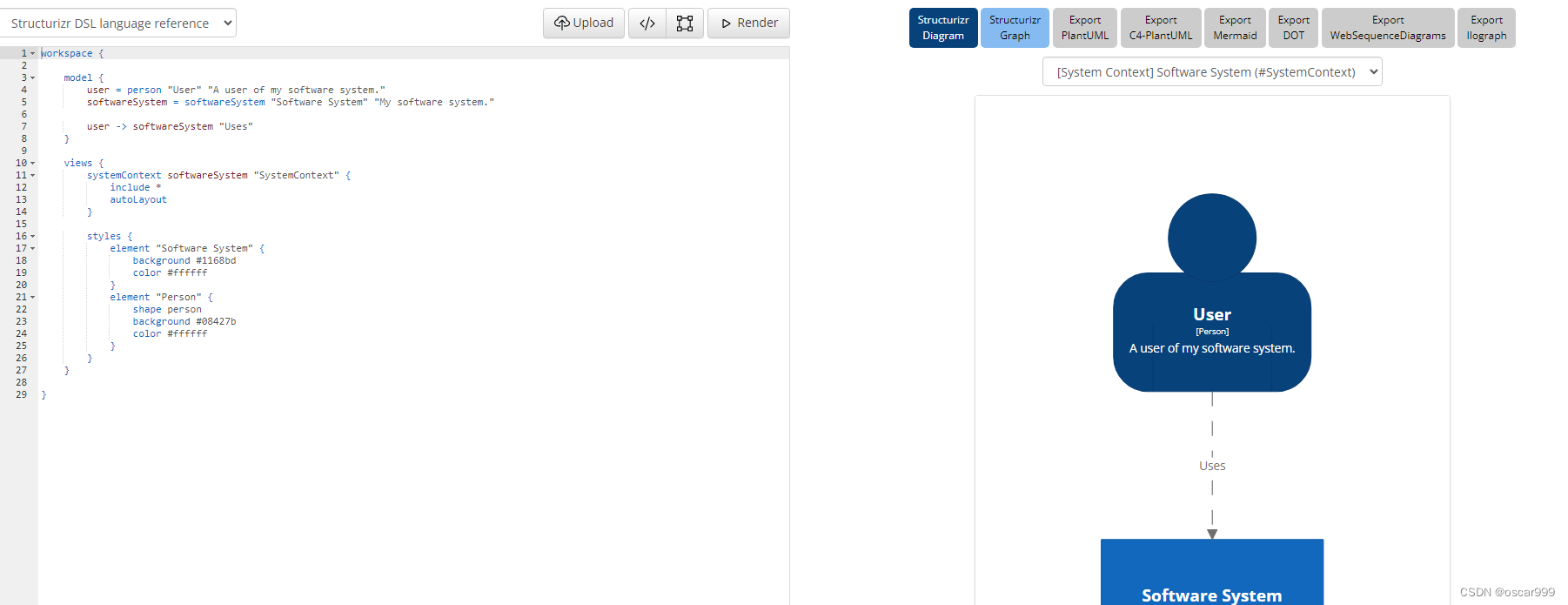

重平衡的完整流程需要消费者端和协调者组件(什么是协调者)共同参与才能完成。下面先梳理消费者端的重平衡流程。主要分为3各阶段。

第一阶段:确定组协调器

关于如何确定组协调器,参考Kafka消费者重平衡(一)

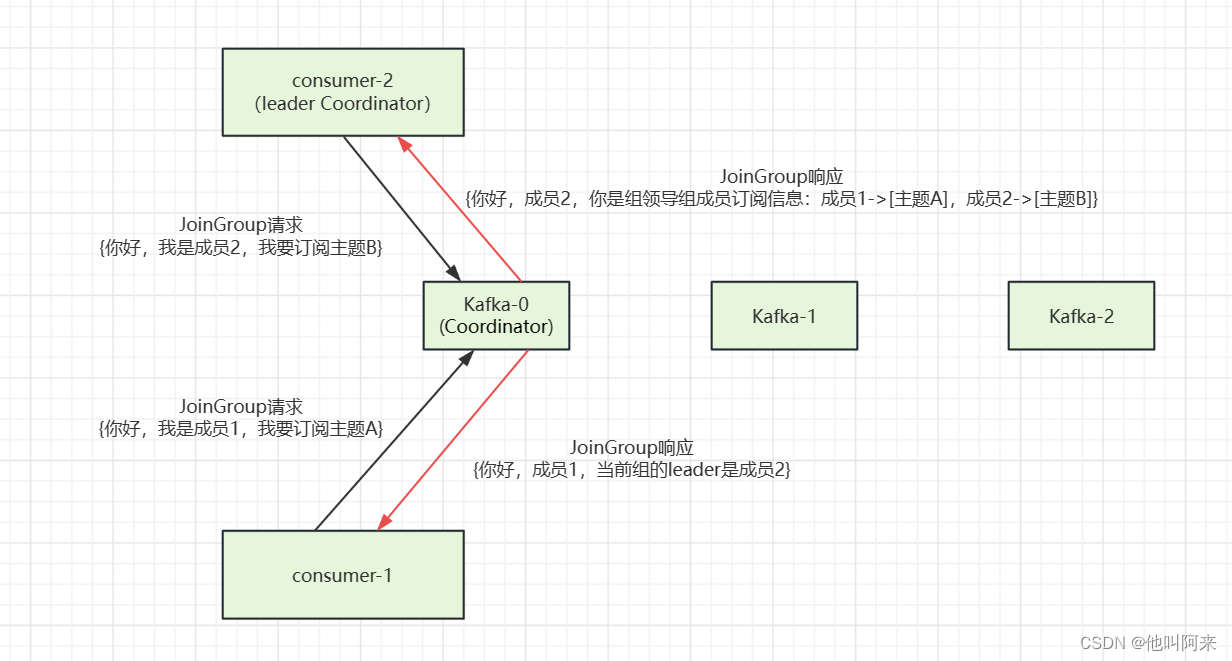

第二阶段:JoinGroup

在此阶段的消费者会向Group Coordinator 发送 JoinGroupRequest 请求,并处理响应。然后GroupCoordinator 从一个consumer group中选择第一个(通常情况下)加入group的consumer作为leader(消费组协调器),把consumer group情况发送给这个leader,接着这个leader会负责制定分区方案。

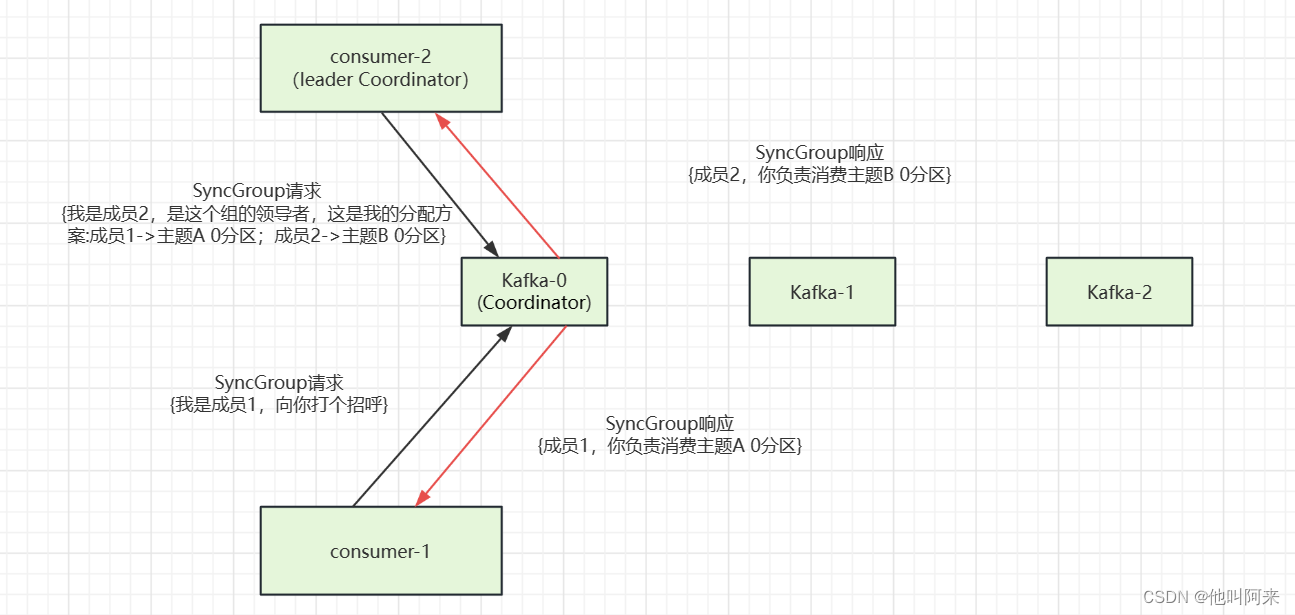

在这一步中,领导者向协调者发送 SyncGroup 请求,将刚刚做出的分配方案发给协调者。值得注意的是,其他成员也会向协调者发送 SyncGroup 请求,只不过请求体中并没有实际的内容。这一步的主要目的是让协调者接收分配方案,然后统一以 SyncGroup 响应的方式分发给所有成员,这样组内所有成员就都知道自己该消费哪些分区了。

第三阶段:SyncGroup

待领导者制定好分配方案后,重平衡流程进入到 SyncGroup 请求阶段

SyncGroup 请求的主要目的,就是让协调者把领导者制定的分配方案下发给各个组内成员。当所有成员都成功接收到分配方案后,消费者组进入到 Stable 状态,即开始正常的消费工作。

Broker端重平衡流程

下面只要从几个常见的场景梳理Broker端重平衡的流程。

新成员加入

当协调者收到新的 JoinGroup 请求后,它会通过心跳请求响应的方式通知组内现有的所有成员,强制它们开启新一轮的重平衡。

具体流程如下:

组成员主动离组

消费者实例所在线程或进程调用 close() 方法时,就会主动通知协调者要退出组。以下时具体的流程

组成员崩溃离组

崩溃离组是指消费者实例出现严重故障,突然宕机导致的离组。它和主动离组是有区别的,因为后者是主动发起的离组,协调者能马上感知并处理。但崩溃离组是被动的,协调者通常需要等待一段时间才能感知到,这段时间一般是由消费者端参数 session.timeout.ms 控制。

![[刷题记录]牛客面试笔刷TOP101(一)](https://img-blog.csdnimg.cn/a570e15940af478982b3da48593005dd.png)