Paddle inference library

git config --global http.postBuffer 1048576000

git clone --recursive https://github.com/PaddlePaddle/Paddle.git

Modify CMakeLists.txt

mkdir build

cd build

cmake -DWITH_CONTRIB=OFF -DWITH_MKL=OFF -DWITH_MKLDNN=OFF -DWITH_TESTING=OFF -DCMAKE_BUILD_TYPE=Release -DWITH_INFERENCE_API_TEST=OFF -DON_INFER=ON -DWITH_PYTHON=ON -DWITH_GPU=ON -DWITH_AVX=OFF -DCUDA_ARCH_NAME=Auto ..

Install dependencies

apt-get install -y patchelf

1. FastDeploy

1.1) Building the GPU version

| Option | Supported platforms | Description |
|---|---|---|
| ENABLE_ORT_BACKEND | Linux(x64)/Windows(x64) | Default OFF; whether to integrate the ONNX Runtime backend |
| ENABLE_PADDLE_BACKEND | Linux(x64)/Windows(x64) | Default OFF; whether to integrate the Paddle Inference backend |
| ENABLE_TRT_BACKEND | Linux(x64)/Windows(x64) | Default OFF; whether to integrate the TensorRT backend |
| ENABLE_OPENVINO_BACKEND | Linux(x64)/Windows(x64) | Default OFF; whether to integrate the OpenVINO backend (CPU only) |
| ENABLE_VISION | Linux(x64)/Windows(x64) | Default OFF; whether to integrate vision models |
| ENABLE_TEXT | Linux(x64)/Windows(x64) | Default OFF; whether to integrate text models |
| CUDA_DIRECTORY | Linux(x64)/Windows(x64) | Default /usr/local/cuda; requires CUDA >= 11.2 |
| TRT_DIRECTORY | Linux(x64)/Windows(x64) | Empty by default; requires TensorRT >= 8.4, e.g. /Download/TensorRT-8.5 |

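Third-party library configuration (optional; if the options below are not defined, prebuilt third-party libraries are downloaded automatically when building FastDeploy).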

| Option | Description |
|---|---|
| ORT_DIRECTORY | When ENABLE_ORT_BACKEND=ON, use ORT_DIRECTORY to point to your own ONNX Runtime library. |
| OPENCV_DIRECTORY | When ENABLE_VISION=ON, use OPENCV_DIRECTORY to point to your own OpenCV library. |
| OPENVINO_DIRECTORY | When ENABLE_OPENVINO_BACKEND=ON, use OPENVINO_DIRECTORY to point to your own OpenVINO library. |

Build requirements on Linux:

- gcc/g++ >= 5.4 (8.2 is recommended)

- cmake >= 3.18.0

- cuda >= 11.2

- cudnn >= 8.2
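The minimum versions above can be checked before starting a long build. The following is only a sketch: `version_ge` and `check_min` are hypothetical helper names, not part of FastDeploy, and the comparison relies on `sort -V` from GNU coreutils.

```shell
# Hypothetical helpers (not part of FastDeploy) for checking minimum versions.

version_ge() {
    # Succeeds when version $1 >= version $2 under `sort -V` ordering.
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

check_min() {
    # $1 = tool name, $2 = minimum version, $3 = detected version
    if version_ge "$3" "$2"; then
        echo "$1 $3: ok (>= $2)"
    else
        echo "$1 $3: too old (need >= $2)"
        return 1
    fi
}

# Example calls, assuming cmake and gcc are on PATH:
#   check_min cmake 3.18.0 "$(cmake --version | head -n 1 | awk '{print $3}')"
#   check_min gcc 5.4 "$(gcc -dumpversion)"
```

CUDA and cuDNN versions can be passed to `check_min` the same way once you have read them from your installation.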

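It is recommended to install OpenCV manually and set its path with -DOPENCV_DIRECTORY. If the flag is not defined, a prebuilt OpenCV library is downloaded automatically when building FastDeploy, but that prebuilt OpenCV cannot read video files and lacks some other features such as imshow.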

apt-get install libopencv-dev

git clone https://github.com/PaddlePaddle/FastDeploy.git

cd FastDeploy

mkdir buildgpu && cd buildgpu

cmake .. -DENABLE_ORT_BACKEND=ON \
    -DENABLE_PADDLE_BACKEND=ON \
    -DENABLE_OPENVINO_BACKEND=OFF \
    -DENABLE_TRT_BACKEND=ON \
    -DWITH_GPU=ON \
    -DTRT_DIRECTORY=/TensorRT-8.4.1.5 \
    -DCUDA_DIRECTORY=/usr/local/cuda \
    -DCMAKE_INSTALL_PREFIX=${PWD}/compiled_fastdeploy_sdk \
    -DENABLE_VISION=ON \
    -DOPENCV_DIRECTORY=/usr/lib/x86_64-linux-gnu/cmake/opencv4

make -j12

make install
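The -DOPENCV_DIRECTORY value used above is the directory that holds OpenCVConfig.cmake on my system. If your OpenCV is installed elsewhere, a search along these lines may help locate it. This is a sketch only: `find_opencv_config_dir` is a hypothetical helper, and it assumes OPENCV_DIRECTORY should point at the directory containing OpenCVConfig.cmake, as the path above suggests.

```shell
# Hypothetical helper: print the directory that contains OpenCVConfig.cmake.
find_opencv_config_dir() {
    # $1 = root directory to search, e.g. /usr/lib
    cfg=$(find "$1" -name OpenCVConfig.cmake 2>/dev/null | head -n 1)
    # Fail when no config file was found under the given root.
    [ -n "$cfg" ] || return 1
    dirname "$cfg"
}

# Example call:
#   find_opencv_config_dir /usr/lib
```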

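The purpose of -DTRT_DIRECTORY is to locate the include and lib directories beneath it. My TensorRT was originally installed from deb packages, so the headers were in /usr/include/x86_64-linux-gnu and the libraries in /usr/lib/x86_64-linux-gnu.

Note: CMakeLists.txt, however, expects TensorRT's include and lib directories under TRT_DIRECTORY: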

include_directories(${TRT_DIRECTORY}/include)
find_library(TRT_INFER_LIB nvinfer ${TRT_DIRECTORY}/lib)
find_library(TRT_ONNX_LIB nvonnxparser ${TRT_DIRECTORY}/lib)
find_library(TRT_PLUGIN_LIB nvinfer_plugin ${TRT_DIRECTORY}/lib)
list(APPEND DEPEND_LIBS ${TRT_INFER_LIB} ${TRT_ONNX_LIB} ${TRT_PLUGIN_LIB})

The TensorRT tar package can be downloaded from https://developer.nvidia.com/nvidia-tensorrt-8x-download

Otherwise, the following error is reported:

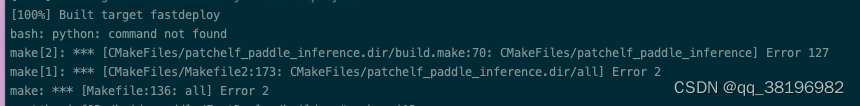

The build failed with the error above, so I downloaded the TensorRT tar package instead.
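Before passing a directory as -DTRT_DIRECTORY, it may be worth confirming that it has the include/lib layout that the CMake snippet above looks for. A minimal sketch follows; `trt_layout_ok` is a hypothetical helper name, and the check only covers the header NvInfer.h and the three libraries named in the find_library calls.

```shell
# Hypothetical helper: check that a directory looks like an unpacked
# TensorRT tar package (include/ and lib/ directly beneath it).
trt_layout_ok() {
    # $1 = candidate value for -DTRT_DIRECTORY
    if [ ! -f "$1/include/NvInfer.h" ]; then
        echo "missing $1/include/NvInfer.h"
        return 1
    fi
    for lib in nvinfer nvonnxparser nvinfer_plugin; do
        # Accept any versioned or unversioned shared object name.
        if ! ls "$1"/lib/lib"$lib".so* >/dev/null 2>&1; then
            echo "missing $1/lib/lib$lib.so"
            return 1
        fi
    done
    echo "ok"
}

# Example call:
#   trt_layout_ok /TensorRT-8.4.1.5
```

With a deb installation the headers and libraries sit in the system directories instead, so a check like this fails for any single prefix.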

Test demo

Create a fastdeploy_test directory and a 3rdparty directory inside it, then copy /baidu_paddle/FastDeploy/buildgpu/compiled_fastdeploy_sdk/include and /baidu_paddle/FastDeploy/buildgpu/compiled_fastdeploy_sdk/lib into 3rdparty.

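Note: for reasons I have not figured out, the .so files copied directly from /baidu_paddle/FastDeploy/buildgpu/compiled_fastdeploy_sdk/lib fail to find some backends. Copy the ones from the build directory /baidu_paddle/FastDeploy/buildgpu instead:

cp -r /baidu_paddle/FastDeploy/buildgpu/libfastdeploy.so* ./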

cp -r /usr/include/opencv4/opencv2/ /baidu_paddle/fastdeploy_test/3rdparty/include
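I have not confirmed the cause of the backend problem noted above. One way to investigate is to list the shared libraries that the copied libfastdeploy.so cannot resolve. The sketch below is a diagnostic only: `unresolved_libs` is a hypothetical helper that filters standard `ldd` output, and missing libraries are just one possible explanation.

```shell
# Hypothetical helper: read `ldd` output on stdin and print the names of
# libraries that the dynamic loader reports as "not found".
unresolved_libs() {
    awk '/=> not found/ { print $1 }'
}

# Example call, run inside fastdeploy_test:
#   ldd 3rdparty/lib/libfastdeploy.so | unresolved_libs
```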

1) Get the model and test image

wget https://bj.bcebos.com/paddlehub/fastdeploy/ppyoloe_crn_l_300e_coco.tgz

wget https://gitee.com/paddlepaddle/PaddleDetection/raw/release/2.4/demo/000000014439.jpg

tar xvf ppyoloe_crn_l_300e_coco.tgz

2) Create infer_demo.cc with the following content

#include <iostream>
#include <string>

#include "fastdeploy/vision.h"

int main() {
  // Paths to the extracted PP-YOLOE model files.
  std::string model_file = "/baidu_paddle/fastdeploy_test/ppyoloe_crn_l_300e_coco/model.pdmodel";
  std::string params_file = "/baidu_paddle/fastdeploy_test/ppyoloe_crn_l_300e_coco/model.pdiparams";
  std::string infer_cfg_file = "/baidu_paddle/fastdeploy_test/ppyoloe_crn_l_300e_coco/infer_cfg.yml";

  // Request GPU inference.
  auto option = fastdeploy::RuntimeOption();
  option.UseGpu();

  auto model = fastdeploy::vision::detection::PPYOLOE(model_file, params_file,
                                                      infer_cfg_file, option);
  if (!model.Initialized()) {
    std::cerr << "Failed to initialize." << std::endl;
    return 1;
  }

  // The path is relative to the build directory the demo is run from.
  cv::Mat im = cv::imread("../000000014439.jpg");
  if (im.empty()) {
    std::cerr << "Failed to read image." << std::endl;
    return 1;
  }

  fastdeploy::vision::DetectionResult res;
  if (!model.Predict(im, &res)) {
    std::cerr << "Failed to predict." << std::endl;
    return 1;
  }
  std::cout << res.Str() << std::endl;

  // Draw detections with a score of at least 0.5 and save the result.
  cv::Mat vis_im = fastdeploy::vision::VisDetection(im, res, 0.5);
  cv::imwrite("vis_result.jpg", vis_im);
  std::cout << "Visualized result saved in ./vis_result.jpg" << std::endl;
  return 0;
}

Switching between option.UseCpu() and option.UseGpu() seems to make no difference here.

3) Write CMakeLists.txt with the following content

cmake_minimum_required(VERSION 3.20)
set(CMAKE_CXX_STANDARD 17)
project(test)

set(INCLUDE_PATH ${CMAKE_SOURCE_DIR}/3rdparty/include)
set(LIB_PATH ${CMAKE_SOURCE_DIR}/3rdparty/lib)
include_directories(${INCLUDE_PATH})
link_directories(${LIB_PATH})

add_executable(infer_demo infer_demo.cc)

target_link_libraries(
  infer_demo
  fastdeploy
  opencv_calib3d opencv_core opencv_dnn opencv_features2d opencv_flann opencv_highgui opencv_imgcodecs opencv_imgproc opencv_ml opencv_objdetect opencv_photo opencv_stitching opencv_video opencv_videoio
)

4) Build and run

mkdir build && cd build

cmake .. && make

./infer_demo

1.2) Building the CPU version

mkdir buildcpu && cd buildcpu

cmake .. -DENABLE_ORT_BACKEND=ON \
    -DENABLE_PADDLE_BACKEND=ON \
    -DENABLE_OPENVINO_BACKEND=ON \
    -DCMAKE_INSTALL_PREFIX=${PWD}/compiled_fastdeploy_sdk \
    -DENABLE_VISION=ON \
    -DOPENCV_DIRECTORY=/usr/lib/x86_64-linux-gnu/cmake/opencv4

make -j12

make install

Adding -DWITH_TESTING=ON enables the unit tests.

Running make -j12 produced an error.

The fix is as follows:

## Check for python

root@hpcinf02:/baidu_paddle/FastDeploy# type python python2 python3

bash: type: python: not found

bash: type: python2: not found

python3 is hashed (/usr/bin/python3)

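python3 is present, so all that is needed is to make python point to python3.

Create a permanent alias in .bashrc:

alias python='python3'

With this, running the python command runs python3.

This works in most cases, unless some program expects to execute /usr/bin/python. You could create a symbolic link from /usr/bin/python to /usr/bin/python3, but Ubuntu users have a simpler option.

On Ubuntu 20.04 and later, installing the python-is-python3 package creates all the links automatically. This is also what the original error message suggests.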
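The `type python python2 python3` check above can also be scripted, for example in a build wrapper that needs to know which interpreter name is usable before make starts. This is a sketch only; `first_available` is a hypothetical helper built on the POSIX `command -v` builtin.

```shell
# Hypothetical helper: print the first command name that exists on PATH.
first_available() {
    for cmd in "$@"; do
        if command -v "$cmd" >/dev/null 2>&1; then
            echo "$cmd"
            return 0
        fi
    done
    # None of the candidates was found.
    return 1
}

# Example call:
#   first_available python python3 python2
```

If the example call prints python3 rather than python, the build is likely to hit the same error described here.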

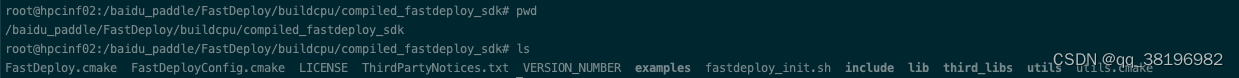

apt-get install python-is-python3

Then rebuild. On success, the headers and libraries are under /baidu_paddle/FastDeploy/buildcpu/compiled_fastdeploy_sdk.

Add environment variables

export LD_LIBRARY_PATH=/baidu_paddle/FastDeploy/buildcpu/compiled_fastdeploy_sdk/third_libs/install/paddle2onnx/lib:/baidu_paddle/FastDeploy/buildcpu/compiled_fastdeploy_sdk/third_libs/install/opencv/lib:/baidu_paddle/FastDeploy/buildcpu/compiled_fastdeploy_sdk/third_libs/install/onnxruntime/lib:/baidu_paddle/FastDeploy/buildcpu:${LD_LIBRARY_PATH}

Test demo

Create a fastdeploy_test directory and a 3rdparty directory inside it, then copy /baidu_paddle/FastDeploy/buildcpu/compiled_fastdeploy_sdk/include and /baidu_paddle/FastDeploy/buildcpu/compiled_fastdeploy_sdk/lib into 3rdparty.

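Note: for reasons I have not figured out, the .so files copied directly from /baidu_paddle/FastDeploy/buildcpu/compiled_fastdeploy_sdk/lib fail to find some backends. Copy the ones from the build directory /baidu_paddle/FastDeploy/buildcpu instead:

cp -r /baidu_paddle/FastDeploy/buildcpu/libfastdeploy.so* ./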

cp -r /usr/include/opencv4/opencv2/ /baidu_paddle/fastdeploy_test/3rdparty/include

1) Get the model and test image

wget https://bj.bcebos.com/paddlehub/fastdeploy/ppyoloe_crn_l_300e_coco.tgz

wget https://gitee.com/paddlepaddle/PaddleDetection/raw/release/2.4/demo/000000014439.jpg

tar xvf ppyoloe_crn_l_300e_coco.tgz

2) Create infer_demo.cc with the following content

#include <iostream>
#include <string>

#include "fastdeploy/vision.h"

int main() {
  // Paths to the extracted PP-YOLOE model files.
  std::string model_file = "/baidu_paddle/fastdeploy_test/ppyoloe_crn_l_300e_coco/model.pdmodel";
  std::string params_file = "/baidu_paddle/fastdeploy_test/ppyoloe_crn_l_300e_coco/model.pdiparams";
  std::string infer_cfg_file = "/baidu_paddle/fastdeploy_test/ppyoloe_crn_l_300e_coco/infer_cfg.yml";

  // Request CPU inference.
  auto option = fastdeploy::RuntimeOption();
  option.UseCpu();

  auto model = fastdeploy::vision::detection::PPYOLOE(model_file, params_file,
                                                      infer_cfg_file, option);
  if (!model.Initialized()) {
    std::cerr << "Failed to initialize." << std::endl;
    return 1;
  }

  // The path is relative to the build directory the demo is run from.
  cv::Mat im = cv::imread("../000000014439.jpg");
  if (im.empty()) {
    std::cerr << "Failed to read image." << std::endl;
    return 1;
  }

  fastdeploy::vision::DetectionResult res;
  if (!model.Predict(im, &res)) {
    std::cerr << "Failed to predict." << std::endl;
    return 1;
  }
  std::cout << res.Str() << std::endl;

  // Draw detections with a score of at least 0.5 and save the result.
  cv::Mat vis_im = fastdeploy::vision::VisDetection(im, res, 0.5);
  cv::imwrite("vis_result.jpg", vis_im);
  std::cout << "Visualized result saved in ./vis_result.jpg" << std::endl;
  return 0;
}

Switching between option.UseCpu() and option.UseGpu() seems to make no difference here.

3) Write CMakeLists.txt with the following content

cmake_minimum_required(VERSION 3.20)
set(CMAKE_CXX_STANDARD 17)
project(test)

set(INCLUDE_PATH ${CMAKE_SOURCE_DIR}/3rdparty/include)
set(LIB_PATH ${CMAKE_SOURCE_DIR}/3rdparty/lib)
include_directories(${INCLUDE_PATH})
link_directories(${LIB_PATH})

add_executable(infer_demo infer_demo.cc)

target_link_libraries(
  infer_demo
  fastdeploy
  opencv_calib3d opencv_core opencv_dnn opencv_features2d opencv_flann opencv_highgui opencv_imgcodecs opencv_imgproc opencv_ml opencv_objdetect opencv_photo opencv_stitching opencv_video opencv_videoio
)

4) Build and run

mkdir build && cd build

cmake .. && make

./infer_demo
