参考:

https://github.com/vllm-project/vllm

https://zhuanlan.zhihu.com/p/645732302

https://vllm.readthedocs.io/en/latest/getting_started/quickstart.html ##文档

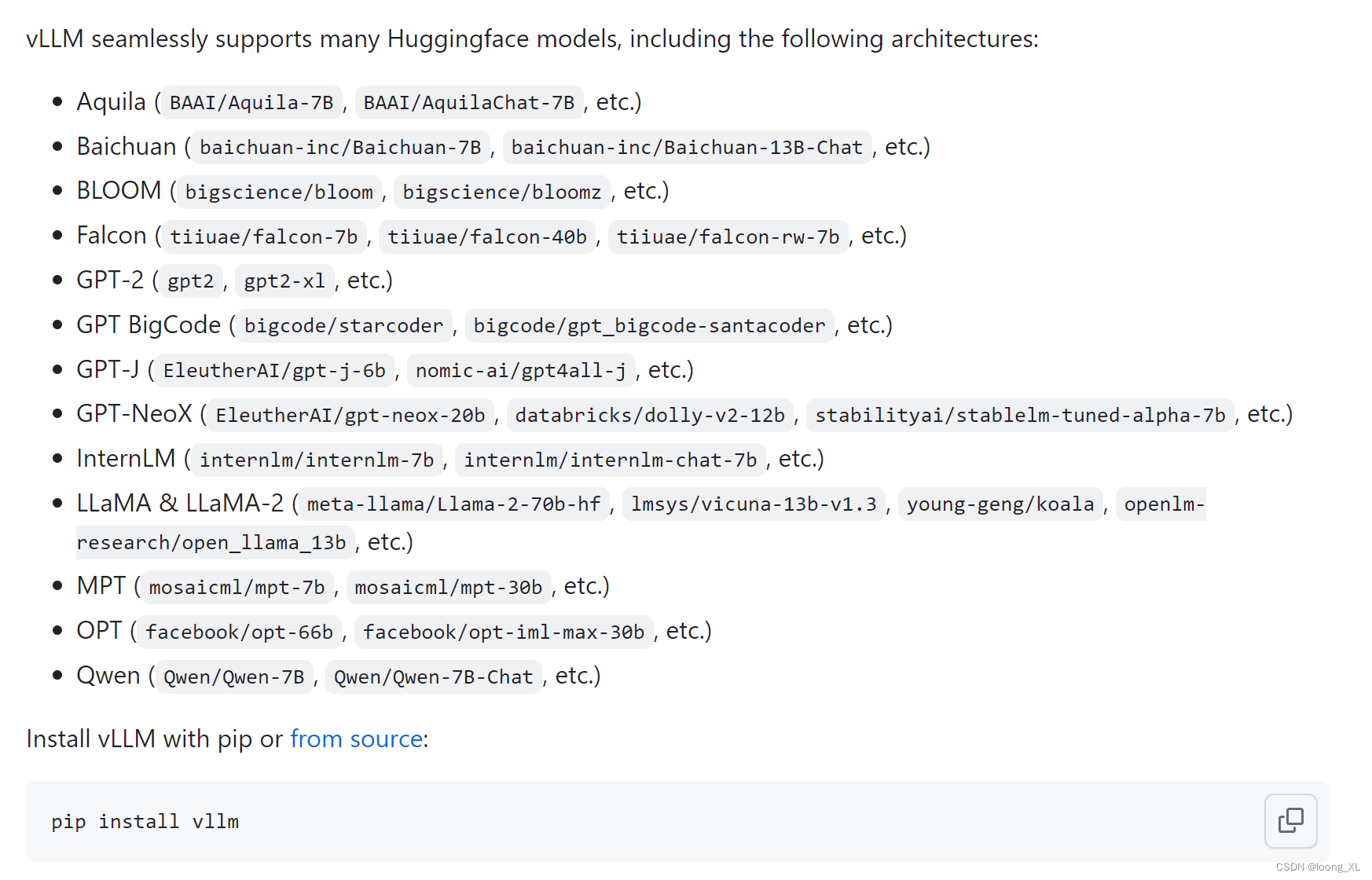

1、vLLM

这里使用的cuda版本是11.4,tesla T4卡

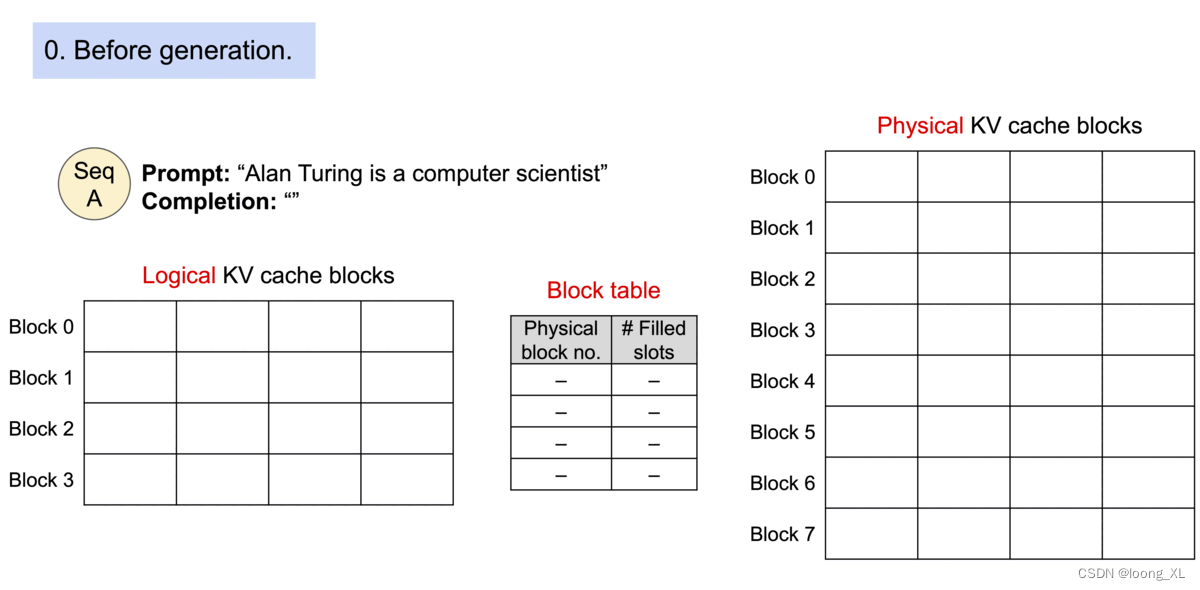

加速原理:

PagedAttention,主要是利用kv缓存

2、qwen测试使用:

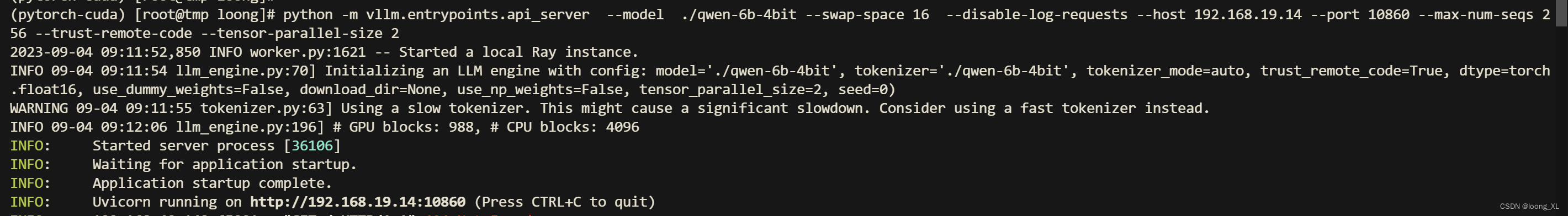

##启动正常api服务

python -m vllm.entrypoints.api_server --model ./qwen-6b-model --swap-space 16 --disable-log-requests --host 192***.14 --port 10860 --max-num-seqs

256 --trust-remote-code --tensor-parallel-size 2

##启动openai形式 api服务

python -m vllm.entrypoints.openai.api_server --model ./qwen-6b-model --swap-space 16 --disable-log-requests --host 1***.14 --port 10860 --max-nu

m-seqs 256 --trust-remote-code --tensor-parallel-size 2

api访问(服务端用的正常api服务第一个):

import requests

import json

# from vllm import LLM, SamplingParams

headers = {"User-Agent": "Test Client"}

pload = {

"prompt": "<|im_start|>system\n你是一个人工智能相关的专家,名字叫小杰.<|im_end|>\n<|im_start|>user\n介绍下深度学习<|im_end|>\n<|im_start|>assistant\n",

"n": 2,

"use_beam_search": True,

"temperature": 0,

"max_tokens": 16,

"stream": False,

"stop": ["<|im_end|>", "<|im_start|>",]

}

response = requests.post("http://1***:10860/generate", headers=headers, json=pload, stream=True)

print(response)

print(response.content)

print(response.content.decode())

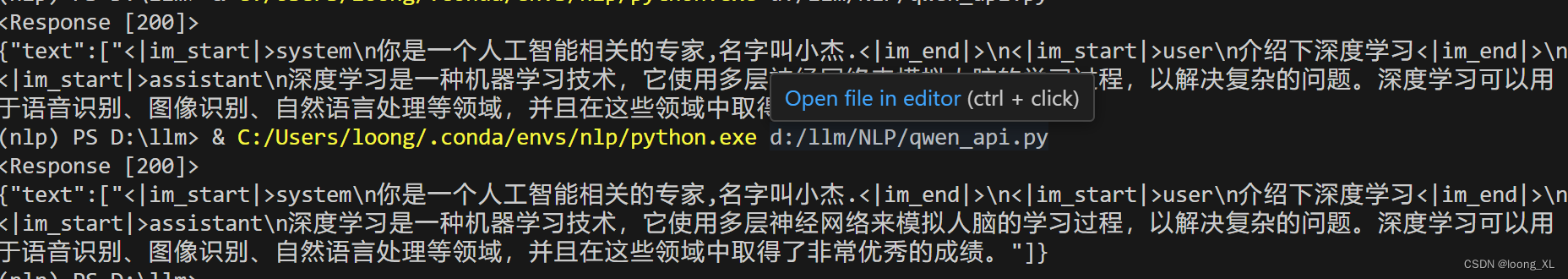

如果要只输出一个答案(注释掉# “use_beam_search”: True,),答案长一些( “max_tokens”: 800,)等,需要更改请求参数:

import requests

import json

# from vllm import LLM, SamplingParams

headers = {"User-Agent": "Test Client"}

pload = {

"prompt": "<|im_start|>system\n你是一个人工智能相关的专家,名字叫小杰.<|im_end|>\n<|im_start|>user\n介绍下深度学习<|im_end|>\n<|im_start|>assistant\n",

"n": 1,

# "use_beam_search": True,

"temperature": 0,

"max_tokens": 800,

"stream": False,

"stop": ["<|im_end|>", "<|im_start|>",]

}

response = requests.post("http://192.168.19.14:10860/generate", headers=headers, json=pload, stream=True)

print(response)

# print(response.content)

print(response.content.decode())

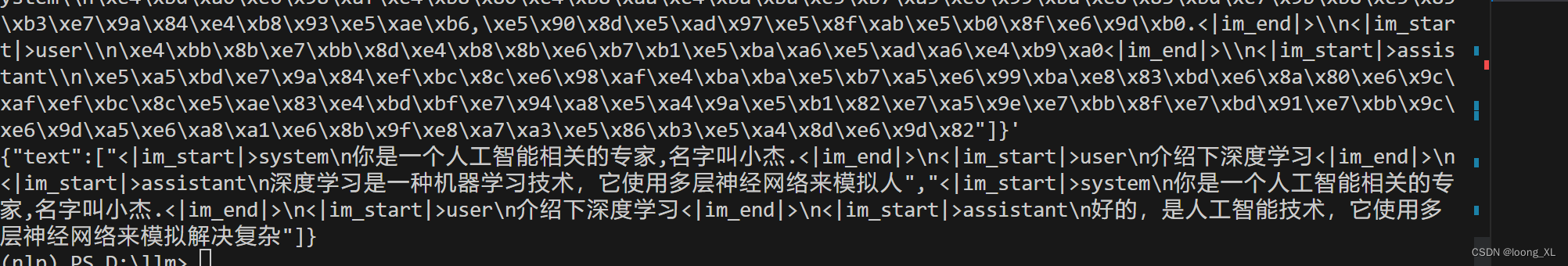

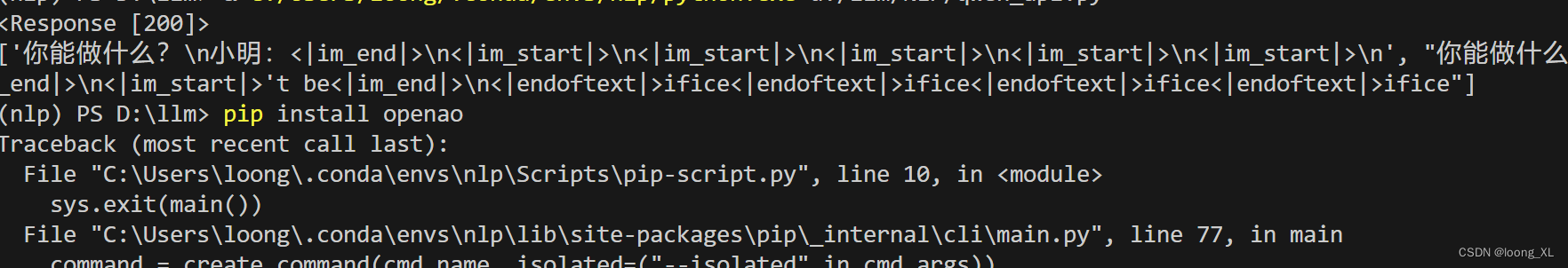

问题

1、现在中文qwen模型运行返回的基本都是乱码,不知道是不是vLLM支持的问题?

解决方法:(qwen需要构造输入格式)

https://github.com/vllm-project/vllm/issues/901

qwen_vllm1.py

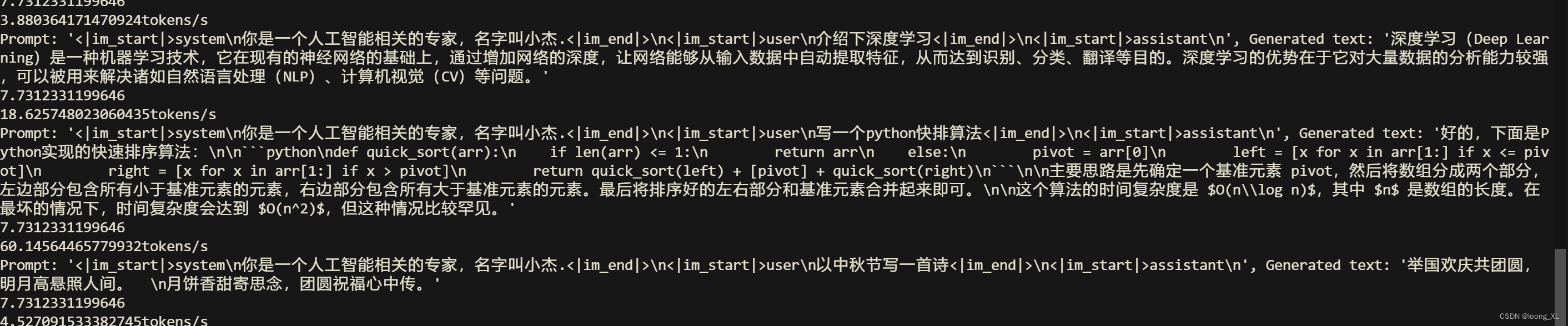

from vllm import LLM, SamplingParams

from transformers import AutoModelForCausalLM, AutoTokenizer

import time

model_path=r"/mnt/data/loong/qwen-6b-model"

model = LLM(model=model_path, tokenizer=model_path,tokenizer_mode='slow',tensor_parallel_size=2,trust_remote_code=True)

tokenizer = AutoTokenizer.from_pretrained(model_path, legacy=True, trust_remote_code=True)

sampling_params = SamplingParams(temperature=0.9,stop=["<|im_end|>", "<|im_start|>",],max_tokens=400)

start=time.time()

prompt_before = '<|im_start|>system\n你是一个人工智能相关的专家,名字叫小杰.<|im_end|>\n<|im_start|>user\n'

prompt_after = '<|im_end|>\n<|im_start|>assistant\n'

prompts = ["你好!","介绍下深度学习","写一个python快排算法","以中秋节写一首诗"]

prompts = [prompt_before + x + prompt_after for x in prompts]

outputs = model.generate(prompts, sampling_params)

end = time.time()

for output in outputs:

prompt = output.prompt

generated_text = output.outputs[0].text

length = len(generated_text)

print(f"Prompt: {prompt!r}, Generated text: {generated_text!r}")

print(end-start)

cost = end-start

print(f"{length/cost}tokens/s")

运行:python qwen_vllm1.py