I. Base environment configuration (run on all hosts)

Hostname plan

| No. | Host IP | FQDN | Short hostname |
| --- | --- | --- | --- |
| 1 | 192.168.1.30 | kubernetes-master.openlab.cn | kubernetes-master |
| 2 | 192.168.1.31 | kubernetes-node1.openlab.cn | kubernetes-node1 |
| 3 | 192.168.1.32 | kubernetes-node2.openlab.cn | kubernetes-node2 |
| 4 | 192.168.1.33 | kubernetes-node3.openlab.cn | kubernetes-node3 |
| 5 | 192.168.1.34 | kubernetes-register.openlab.cn | kubernetes-register |

1. Configure IP addresses, hostnames, and hosts resolution

# Only one server's commands are shown here; run them on all five servers

[root@kubernetes-master ~]# vim /etc/hosts

192.168.1.30 kubernetes-master.openlab.cn kubernetes-master

192.168.1.31 kubernetes-node1.openlab.cn kubernetes-node1

192.168.1.32 kubernetes-node2.openlab.cn kubernetes-node2

192.168.1.33 kubernetes-node3.openlab.cn kubernetes-node3

192.168.1.34 kubernetes-register.openlab.cn kubernetes-register

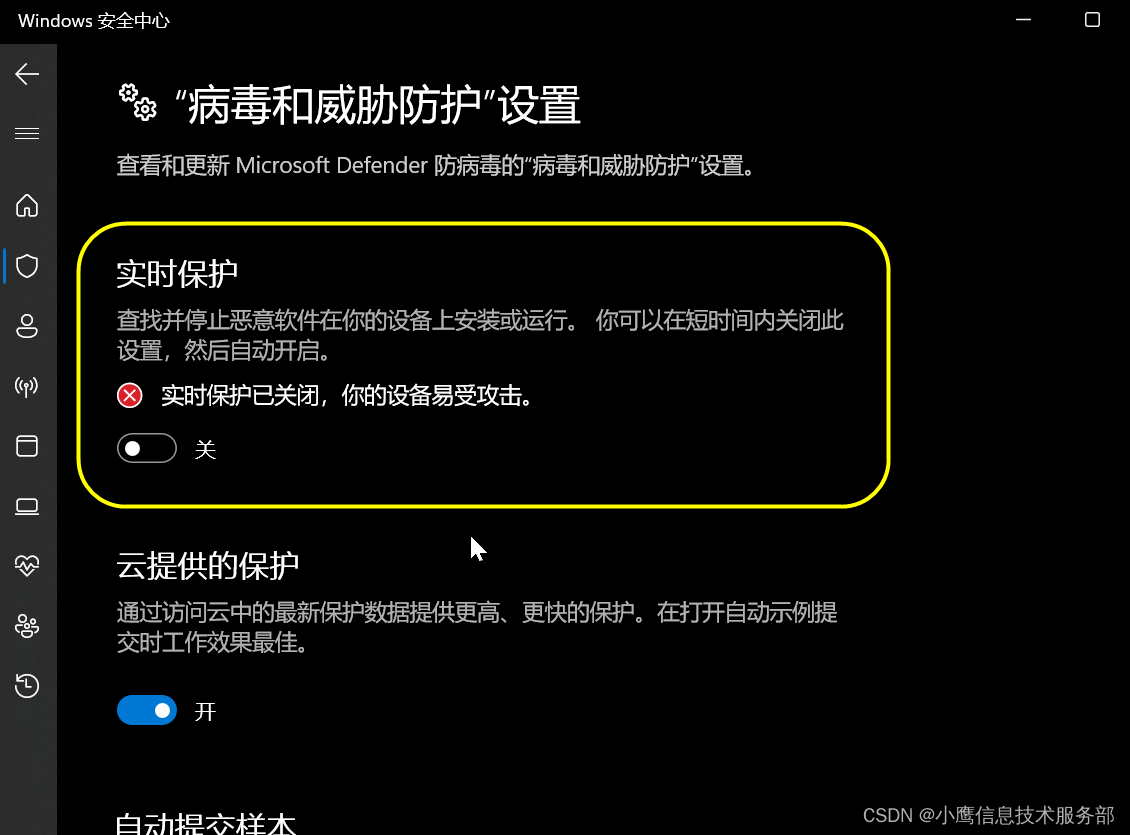
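
Editing /etc/hosts by hand on five machines invites typos and duplicate entries. The following is a minimal sketch of an idempotent alternative, not part of the original procedure. `HOSTS_FILE` and `add_host` are hypothetical names introduced for illustration; the file defaults to a scratch file so the sketch can be tried without touching /etc/hosts.

```shell
#!/bin/sh
# Sketch: append the cluster's host entries to a hosts file only if they are missing.
# HOSTS_FILE is a hypothetical variable; it defaults to a scratch file.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host() {
    # $1 = IP address, $2 = short hostname; the domain comes from the hostname plan
    ip="$1"; short="$2"
    line="$ip $short.openlab.cn $short"
    # -x matches the whole line, -F treats the pattern as a fixed string
    grep -qxF "$line" "$HOSTS_FILE" 2>/dev/null || echo "$line" >> "$HOSTS_FILE"
}

add_host 192.168.1.30 kubernetes-master
add_host 192.168.1.31 kubernetes-node1
add_host 192.168.1.32 kubernetes-node2
add_host 192.168.1.33 kubernetes-node3
add_host 192.168.1.34 kubernetes-register

cat "$HOSTS_FILE"
```

On a real host the script would be run as root with `HOSTS_FILE=/etc/hosts`; running it a second time adds nothing.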

2. Stop the firewall and disable SELinux

# Only one server's commands are shown here; run them on all five servers

[root@kubernetes-master ~]# systemctl stop firewalld

[root@kubernetes-master ~]# systemctl disable firewalld

[root@kubernetes-master ~]# sed -i '/^SELINUX=/ c SELINUX=disabled' /etc/selinux/config

[root@kubernetes-master ~]# setenforce 0

3. Install common utilities

# Only one server's commands are shown here; run them on all five servers

[root@kubernetes-master ~]# yum install -y wget tree bash-completion lrzsz psmisc net-tools vim

4. Time synchronization

# Only one server's commands are shown here; run them on all five servers

[root@kubernetes-master ~]# yum install chrony -y

[root@kubernetes-master ~]# vim /etc/chrony.conf

···

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

server ntp1.aliyun.com iburst

···

# Start the chronyd service and enable it at boot

[root@kubernetes-master ~]# systemctl enable --now chronyd

[root@kubernetes-master ~]# chronyc sources
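
The chrony.conf edit above is done by hand in vim. As a rough sketch of how the same change could be scripted (assuming GNU sed, as shipped with CentOS), the hypothetical helper `set_ntp_server` below comments out the active `server`/`pool` lines and adds the chosen server once. It is demonstrated on a scratch copy, not on /etc/chrony.conf.

```shell
#!/bin/sh
set_ntp_server() {
    # $1 = path to a chrony.conf-style file, $2 = NTP server to use
    conf="$1"; server="$2"
    # Comment out every active server/pool line except the one we want to keep
    sed -i -E '/^server '"$server"' iburst$/!s/^(server|pool)[[:space:]]/#&/' "$conf"
    # Add the new server only if it is not already present
    grep -qxF "server $server iburst" "$conf" || echo "server $server iburst" >> "$conf"
}

# Demonstration on a scratch file with two default entries
sample="$(mktemp)"
printf '%s\n' \
    'server 0.centos.pool.ntp.org iburst' \
    'server 1.centos.pool.ntp.org iburst' \
    'driftfile /var/lib/chrony/drift' > "$sample"

set_ntp_server "$sample" ntp1.aliyun.com
cat "$sample"
```

On a real host the call would be `set_ntp_server /etc/chrony.conf ntp1.aliyun.com`, followed by a restart of chronyd.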

5. Disable swap

# Only one server's commands are shown here; run them on all five servers

# Disable temporarily

[root@kubernetes-master ~]# swapoff -a

# Disable permanently

[root@kubernetes-master ~]# sed -i 's/.*swap.*/#&/' /etc/fstab
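
The sed expression above comments out every line that contains the string "swap", including lines that are already commented or that merely mention swap in a path. A narrower variant, offered only as a sketch (the helper name `comment_swap` and the sample file are illustrative, and GNU sed is assumed), touches only uncommented entries whose filesystem-type column is `swap`:

```shell
#!/bin/sh
comment_swap() {
    # $1 = path to an fstab-style file.
    # Skip lines that are already comments; on the rest, prefix "#" only when
    # the third whitespace-separated field is exactly "swap".
    sed -i -E '/^[[:space:]]*#/!s/^[^[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]]/#&/' "$1"
}

# Demonstration on a scratch copy, not on /etc/fstab
fstab="$(mktemp)"
cat > "$fstab" <<'EOF'
/dev/mapper/centos-root /                       xfs     defaults        0 0
/dev/mapper/centos-swap swap                    swap    defaults        0 0
/dev/sdb1               /data/swapfiles         xfs     defaults        0 0
EOF

comment_swap "$fstab"
comment_swap "$fstab"   # a second run changes nothing
cat "$fstab"
```

Only the `centos-swap` line ends up commented; the `/data/swapfiles` mount is left alone.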

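6. Adjust Linux kernel parameters

# Only one server's commands are shown here; run them on all five servers

# Adjust the kernel parameters to enable bridge filtering and IP forwarding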

[root@kubernetes-master ~]# cat >> /etc/sysctl.d/k8s.conf << EOF

> vm.swappiness=0

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> net.ipv4.ip_forward = 1

> EOF

# Load the modules

[root@kubernetes-master ~]# modprobe br_netfilter

[root@kubernetes-master ~]# modprobe overlay

# Reload the configuration

[root@kubernetes-master ~]# sysctl -p /etc/sysctl.d/k8s.conf
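
Modules loaded with `modprobe` are gone after a reboot, and the `net.bridge.*` settings can only be applied while `br_netfilter` is loaded. On systemd-based systems, files in /etc/modules-load.d/ list modules to load at boot. The sketch below writes such a file; the file name `k8s.conf` and the helper `write_modules_conf` are assumptions made for illustration, and the demonstration writes to a scratch directory.

```shell
#!/bin/sh
write_modules_conf() {
    # $1 = target directory (on a real host: /etc/modules-load.d)
    # One module name per line, as systemd-modules-load expects
    printf '%s\n' br_netfilter overlay > "$1/k8s.conf"
}

# Demonstration in a scratch directory
demo_dir="$(mktemp -d)"
write_modules_conf "$demo_dir"
cat "$demo_dir/k8s.conf"
```

On a real host this would be run as root with `write_modules_conf /etc/modules-load.d`.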

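7. Configure IPVS

# Only one server's commands are shown here; run them on all five servers

# 1. Install ipset and ipvsadm

[root@kubernetes-master ~]# yum install ipset ipvsadm -y

# 2. Write the modules that need to be loaded into a script file

[root@kubernetes-master ~]# cat <<EOF >> /etc/sysconfig/modules/ipvs.modules

modprobe -- ip_vs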

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

# 3. Make the script file executable

[root@kubernetes-master ~]# chmod +x /etc/sysconfig/modules/ipvs.modules

# 4. Run the script file

[root@kubernetes-master ~]# /bin/bash /etc/sysconfig/modules/ipvs.modules

# 5. Check that the modules were loaded

[root@kubernetes-master ~]# lsmod | grep -e ip_vs -e nf_conntrack_ipv4

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 145458 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 139264 1 ip_vs

libcrc32c 12644 3 xfs,ip_vs,nf_conntrack
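
Reading the lsmod output by eye works for one host but is tedious across five. As a small sketch, the hypothetical helper `missing_modules` below takes lsmod-style output on stdin and prints whichever of the requested modules are absent. The sample input is copied from the output above.

```shell
#!/bin/sh
missing_modules() {
    # Reads lsmod-style output on stdin; prints each module named in the
    # arguments that does not appear in the first column.
    loaded="$(awk '{print $1}')"
    for m in "$@"; do
        echo "$loaded" | grep -qxF "$m" || echo "$m"
    done
}

# Sample input taken from the lsmod output shown above
sample='ip_vs_sh 12688 0
ip_vs_wrr 12697 0
ip_vs_rr 12600 0
ip_vs 145458 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr
nf_conntrack 139264 1 ip_vs'

# br_netfilter is not in the sample, so it is the only name printed
echo "$sample" | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack br_netfilter
```

On a real host the call would be `lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack`; empty output means everything is loaded.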

II. Container runtime setup (run on all hosts)

1. Configure the package repository

[root@kubernetes-master ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@kubernetes-master ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

2. Install the latest version of Docker

[root@kubernetes-master ~]# yum install docker-ce -y

3. Configure Docker registry mirrors

[root@kubernetes-master ~]# mkdir -p /etc/docker

[root@kubernetes-master ~]# cat > /etc/docker/daemon.json <<-EOF

> {

> "registry-mirrors": [

> "http://74f21445.m.daocloud.io",

> "https://registry.docker-cn.com",

> "http://hub-mirror.c.163.com",

> "https://docker.mirrors.ustc.edu.cn"

> ],

> "insecure-registries": ["kubernetes-register.openlab.cn"],

> "exec-opts": ["native.cgroupdriver=systemd"]

> }

> EOF

4. Start Docker

[root@kubernetes-master ~]# systemctl daemon-reload

[root@kubernetes-master ~]# systemctl enable --now docker

III. CRI setup (run on all hosts)

# Download the package

[root@kubernetes-master ~]# wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.4/cri-dockerd-0.3.4.amd64.tgz

# Extract it

[root@kubernetes-master ~]# tar xf cri-dockerd-0.3.4.amd64.tgz -C /usr/local/

[root@kubernetes-master ~]# mv /usr/local/cri-dockerd/cri-dockerd /usr/local/bin/

[root@kubernetes-master ~]# cri-dockerd --version

cri-dockerd 0.3.4 (e88b1605)

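# Create the unit files (the heredoc delimiter is quoted so that $MAINPID is written literally instead of being expanded by the shell)

[root@kubernetes-master ~]# cat > /etc/systemd/system/cri-dockerd.service <<-'EOF'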

> [Unit]

> Description=CRI Interface for Docker Application Container Engine

> Documentation=https://docs.mirantis.com

> After=network-online.target firewalld.service docker.service

> Wants=network-online.target

>

> [Service]

> Type=notify

> ExecStart=/usr/local/bin/cri-dockerd --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9 --network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin --container-runtime-endpoint=unix:///var/run/cri-dockerd.sock --cri-dockerd-root-directory=/var/lib/dockershim --docker-endpoint=unix:///var/run/docker.sock --cri-dockerd-root-directory=/var/lib/docker

> ExecReload=/bin/kill -s HUP $MAINPID

> TimeoutSec=0

> RestartSec=2

> Restart=always

> StartLimitBurst=3

> StartLimitInterval=60s

> LimitNOFILE=infinity

> LimitNPROC=infinity

> LimitCORE=infinity

> TasksMax=infinity

> Delegate=yes

> KillMode=process

> [Install]

> WantedBy=multi-user.target

> EOF

[root@kubernetes-master ~]# cat > /etc/systemd/system/cri-dockerd.socket <<-EOF

> [Unit]

> Description=CRI Docker Socket for the API

> PartOf=cri-dockerd.service

> [Socket]

> ListenStream=/var/run/cri-dockerd.sock

> SocketMode=0660

> SocketUser=root

> SocketGroup=docker

> [Install]

> WantedBy=sockets.target

> EOF

# Start the service and enable it at boot

[root@kubernetes-master ~]# systemctl daemon-reload

[root@kubernetes-master ~]# systemctl enable --now cri-dockerd.service

IV. Harbor registry setup (run on the registry host only)

# Install docker-compose

[root@kubernetes-register ~]# install -m 755 docker-compose-linux-x86_64 /usr/local/bin/docker-compose

[root@kubernetes-register ~]# mkdir -p /data/server

[root@kubernetes-register ~]# tar xf harbor-offline-installer-v2.8.4.tgz -C /data/server/

# Load the images

[root@kubernetes-register ~]# cd /data/server/harbor/

[root@kubernetes-register harbor]# docker load -i harbor.v2.8.4.tar.gz

# Edit the configuration file

[root@kubernetes-register harbor]# cp harbor.yml.tmpl harbor.yml

[root@kubernetes-register harbor]# vim harbor.yml

hostname: kubernetes-register.openlab.cn

#https:

# port: 443

# certificate: /your/certificate/path

# private_key: /your/private/key/path

···

harbor_admin_password: 123456

data_volume: /data/server/harbor/data

···

# Generate the Harbor configuration

[root@kubernetes-register harbor]# ./prepare

# Install and start Harbor

[root@kubernetes-register harbor]# ./install.sh

# Create a systemd unit file for Harbor

[root@kubernetes-register harbor]# vim /etc/systemd/system/harbor.service

[Unit]

Description=Harbor

After=docker.service systemd-networkd.service systemd-resolved.service

Requires=docker.service

Documentation=http://github.com/vmware/harbor

[Service]

Type=simple

Restart=on-failure

RestartSec=5

# Adjust the paths below to match the Harbor installation directory

ExecStart=/usr/local/bin/docker-compose --file /data/server/harbor/docker-compose.yml up

ExecStop=/usr/local/bin/docker-compose --file /data/server/harbor/docker-compose.yml down

[Install]

WantedBy=multi-user.target

# Start Harbor and enable it at boot

[root@kubernetes-register harbor]# systemctl daemon-reload

[root@kubernetes-register harbor]# systemctl enable harbor.service

[root@kubernetes-register harbor]# systemctl restart harbor

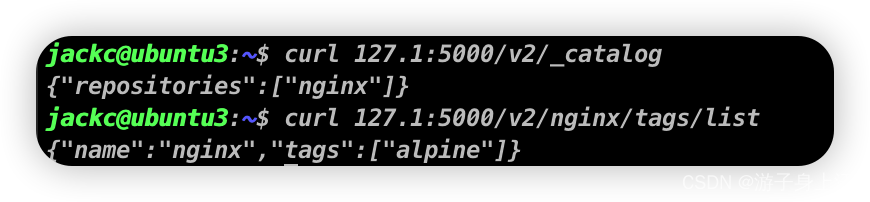

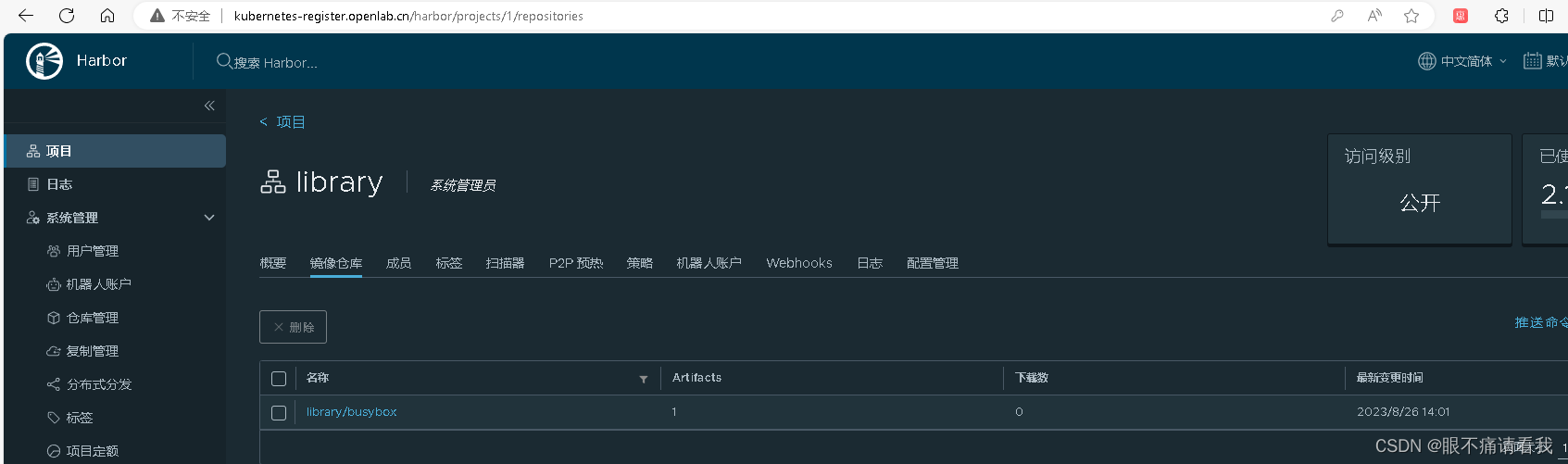

# Test the registry from any one node of the k8s cluster

# Pull an image

[root@kubernetes-master ~]# docker pull busybox

# Log in to the Harbor registry

[root@kubernetes-master ~]# docker login kubernetes-register.openlab.cn -u admin -p 123456

# Tag the image

[root@kubernetes-master ~]# docker tag busybox:latest kubernetes-register.openlab.cn/library/busybox:latest

# Push the image to the registry

[root@kubernetes-master ~]# docker push kubernetes-register.openlab.cn/library/busybox:latest

V. Kubernetes cluster initialization

Configure the package repository (run on all hosts)

[root@kubernetes-master ~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=0

> repo_gpgcheck=0

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

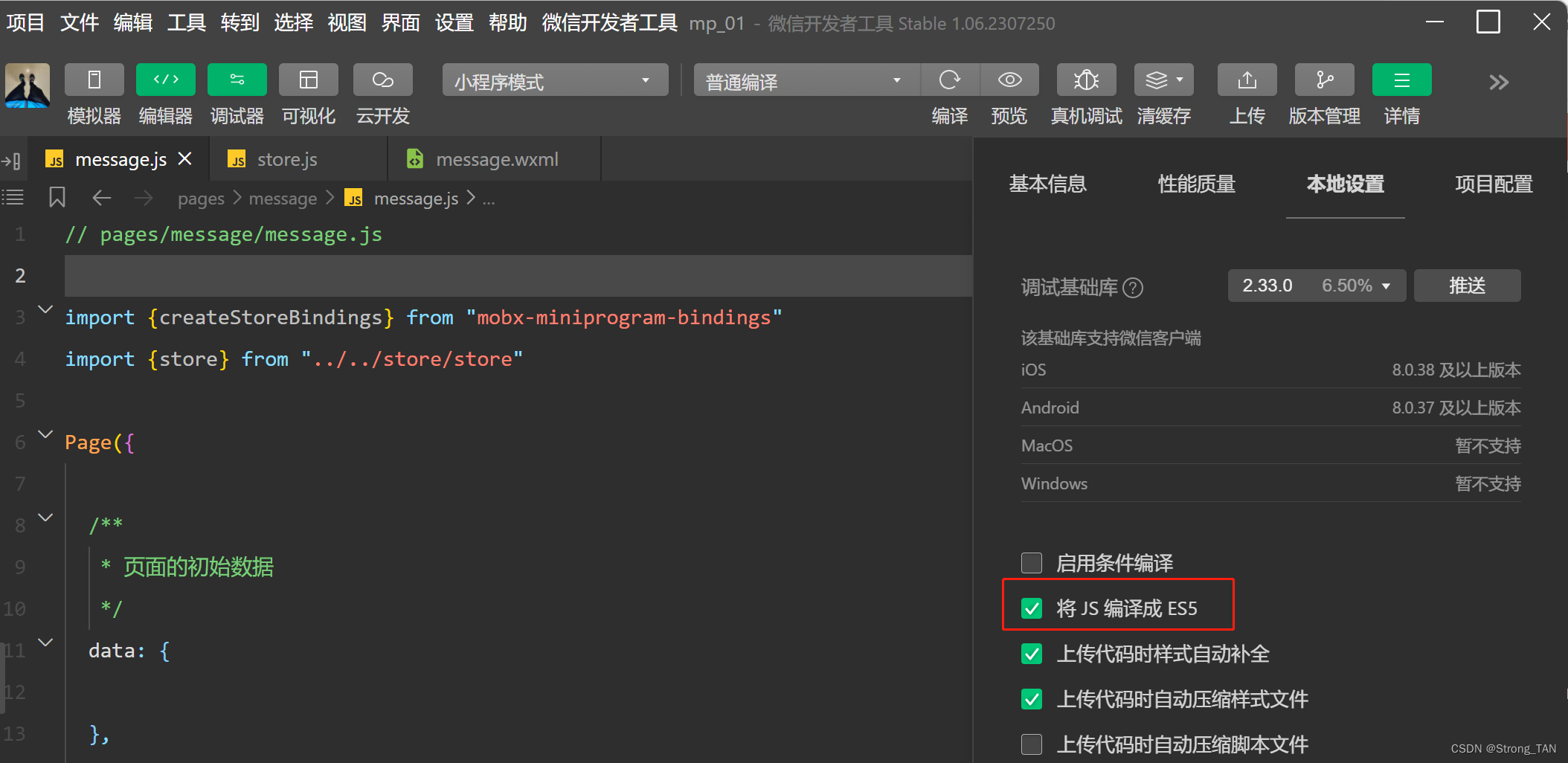

[root@kubernetes-master ~]# yum install kubeadm kubectl kubelet -y

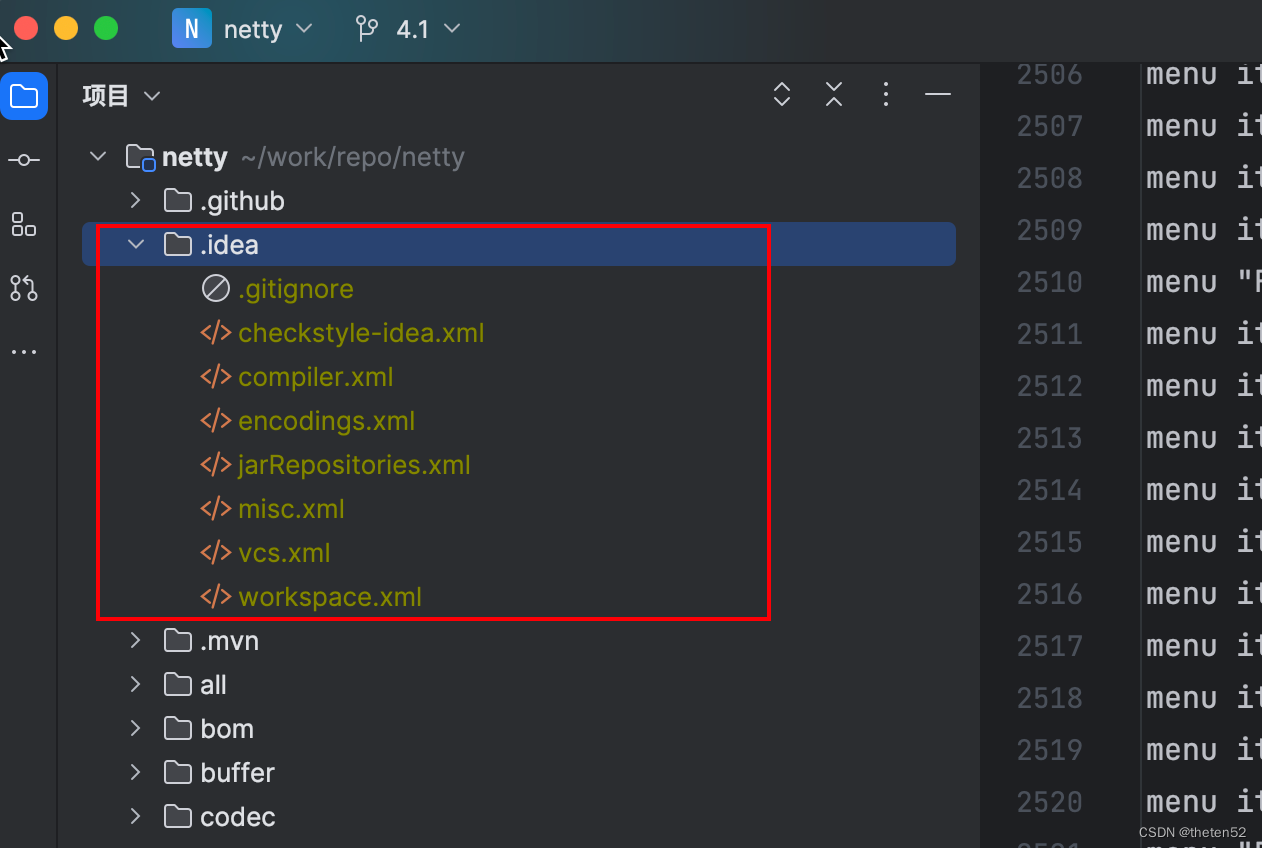

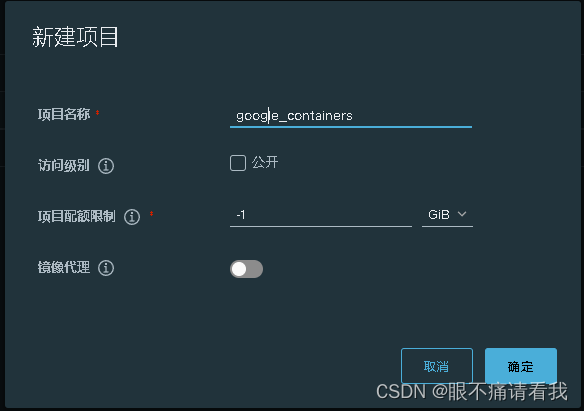

Create a new project named google_containers in the Harbor registry.

The next step only needs to be run on one host.

[root@kubernetes-master ~]# vim images.sh

#!/bin/bash

images=$(kubeadm config images list --kubernetes-version=1.28.0 | awk -F'/' '{print $NF}')

for i in ${images}

do

docker pull registry.aliyuncs.com/google_containers/$i

docker tag registry.aliyuncs.com/google_containers/$i kubernetes-register.openlab.cn/google_containers/$i

docker push kubernetes-register.openlab.cn/google_containers/$i

docker rmi registry.aliyuncs.com/google_containers/$i

done

# Run the script

[root@kubernetes-master ~]# sh images.sh
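
The key step in images.sh is the `awk -F'/' '{print $NF}'` call, which keeps only the last path component of each image reference. That is why `registry.k8s.io/coredns/coredns:v1.10.1` ends up as `coredns:v1.10.1` under the private project. The sketch below previews that mapping without pulling anything. `plan_images`, `MIRROR`, and `REGISTRY` are hypothetical names, and the two sample references are illustrative stand-ins for real `kubeadm config images list` output.

```shell
#!/bin/sh
MIRROR="registry.aliyuncs.com/google_containers"
REGISTRY="kubernetes-register.openlab.cn/google_containers"

plan_images() {
    # Reads image references on stdin and prints, for each one,
    # "<source on the mirror> <target in the private registry>".
    # $NF is the last "/"-separated component, e.g. coredns:v1.10.1
    awk -F'/' -v src="$MIRROR" -v dst="$REGISTRY" \
        'NF { print src "/" $NF, dst "/" $NF }'
}

# Illustrative input; on a real host pipe in:
#   kubeadm config images list --kubernetes-version=1.28.0
printf '%s\n' \
    'registry.k8s.io/kube-apiserver:v1.28.0' \
    'registry.k8s.io/coredns/coredns:v1.10.1' | plan_images
```

Each output line is a pair that could be fed to `docker pull`, `docker tag`, and `docker push` in turn, which is what the loop in images.sh does.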

Initialize the master node

[root@kubernetes-master ~]# kubeadm init --kubernetes-version=1.28.0 \

> --apiserver-advertise-address=192.168.1.30 \

> --image-repository kubernetes-register.openlab.cn/google_containers \

> --service-cidr=10.96.0.0/12 \

> --pod-network-cidr=10.244.0.0/16 \

> --ignore-preflight-errors=Swap \

> --cri-socket=unix:///var/run/cri-dockerd.sock

···

kubeadm join 192.168.1.30:6443 --token wj0hmh.2cdfzhck6w32quzx \

--discovery-token-ca-cert-hash sha256:a0b7e59c2e769dc1e2197611ecae1f39d7a0bd19fc25906fe7a5b43199d15cd2

If these two lines appear, the initialization succeeded.

[root@kubernetes-master ~]# mkdir -p $HOME/.kube

[root@kubernetes-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@kubernetes-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

Join the worker nodes

[root@kubernetes-node1 ~]# kubeadm join 192.168.1.30:6443 --token wj0hmh.2cdfzhck6w32quzx \

> --cri-socket=unix:///var/run/cri-dockerd.sock \

> --discovery-token-ca-cert-hash sha256:a0b7e59c2e769dc1e2197611ecae1f39d7a0bd19fc25906fe7a5b43199d15cd2

# Note: the token and hash are different for every cluster; use the values printed by your own kubeadm init
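
Bootstrap tokens expire (24 hours by default), so a node added later needs a fresh join command. `kubeadm token create --print-join-command` prints one on the master, but it does not include the `--cri-socket` flag used in this guide. The sketch below appends it; `with_cri_socket` is a hypothetical helper and the token and hash in the demonstration are placeholders.

```shell
#!/bin/sh
with_cri_socket() {
    # Reads a "kubeadm join ..." command on stdin and appends the cri-dockerd
    # socket flag, unless the command already carries a --cri-socket option.
    sock="unix:///var/run/cri-dockerd.sock"
    while IFS= read -r cmd; do
        case "$cmd" in
            *--cri-socket*) echo "$cmd" ;;
            *)              echo "$cmd --cri-socket=$sock" ;;
        esac
    done
}

# Demonstration with placeholder values; on the master one would run:
#   kubeadm token create --print-join-command | with_cri_socket
echo 'kubeadm join 192.168.1.30:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash>' \
    | with_cri_socket
```

The printed line can then be pasted on the new worker node.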

VI. Finishing the Kubernetes environment

Command completion

# Add to the shell profile on the master host

[root@kubernetes-master ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc

[root@kubernetes-master ~]# echo "source <(kubeadm completion bash)" >> ~/.bashrc

[root@kubernetes-master ~]# source ~/.bashrc

Pod network (on the master host)

# Download kube-flannel.yml

[root@kubernetes-master ~]# vim flannel.sh

# Run the script

[root@kubernetes-master ~]# sh flannel.sh

# Edit the manifest so that images are pulled from the private registry

[root@kubernetes-master ~]# sed -i '/ image:/s#docker.io/flannel#kubernetes-register.openlab.cn/google_containers#' kube-flannel.yml
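
Because `sed -i` rewrites kube-flannel.yml in place, it can help to preview the substitution first. The sketch below applies the same expression to stdin; `rewrite_images` is a hypothetical helper and the tag `vX.Y.Z` is a placeholder, not a real version. The rewritten references only work if the flannel images have already been pushed to the google_containers project.

```shell
#!/bin/sh
rewrite_images() {
    # Same substitution as the sed -i command, but reading stdin and writing
    # stdout, so nothing on disk is modified.
    sed '/ image:/s#docker.io/flannel#kubernetes-register.openlab.cn/google_containers#'
}

# Demonstration on two illustrative manifest lines
printf '%s\n' \
    '        image: docker.io/flannel/flannel:vX.Y.Z' \
    '        name: kube-flannel' | rewrite_images
```

Against the real manifest, `rewrite_images < kube-flannel.yml | grep ' image:'` lists the image references as they would look after the edit.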

# Apply the manifest

[root@kubernetes-master ~]# kubectl apply -f kube-flannel.yml

# Check the result

[root@kubernetes-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-76f899b8cf-tmdx9 1/1 Running 0 19m

coredns-76f899b8cf-zvfhg 1/1 Running 0 19m

etcd-kubernetes-master 1/1 Running 0 19m

kube-apiserver-kubernetes-master 1/1 Running 0 19m

kube-controller-manager-kubernetes-master 1/1 Running 0 19m

kube-proxy-rhdck 1/1 Running 0 14m

kube-proxy-tvfh4 1/1 Running 0 19m

kube-proxy-vjmrs 1/1 Running 0 14m

kube-proxy-zkwv2 1/1 Running 0 14m

kube-scheduler-kubernetes-master 1/1 Running 0 19m

# All pods should be in the Running state
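
Scanning the STATUS column by eye gets error-prone as the pod list grows. As a minimal sketch, the hypothetical helper `not_running` filters `kubectl get pod --no-headers` output down to the pods that are not yet Running. The second sample line is made up for the demonstration.

```shell
#!/bin/sh
not_running() {
    # Reads `kubectl get pod --no-headers` output on stdin
    # (columns: NAME READY STATUS RESTARTS AGE) and prints the name and
    # status of every pod whose STATUS is not "Running".
    awk 'NF && $3 != "Running" { print $1, $3 }'
}

# Demonstration; the ContainerCreating line is a made-up example
printf '%s\n' \
    'coredns-76f899b8cf-tmdx9 1/1 Running 0 19m' \
    'kube-proxy-rhdck 0/1 ContainerCreating 0 14m' | not_running
```

On the master the call would be `kubectl get pod -n kube-system --no-headers | not_running`; empty output means every listed pod is Running.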

Extra: to run kubectl on the worker nodes as well, copy the .kube directory from the master to each node by running the following on the master:

scp -r $HOME/.kube kubernetes-node1.openlab.cn:$HOME/

scp -r $HOME/.kube kubernetes-node2.openlab.cn:$HOME/

scp -r $HOME/.kube kubernetes-node3.openlab.cn:$HOME/
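
The three scp commands differ only in the node name, so they can be folded into a loop. The sketch below is one way to do that; `copy_kubeconfig` and the `DRY_RUN` switch are hypothetical, and the dry-run mode (the default here) only prints the commands instead of running them.

```shell
#!/bin/sh
copy_kubeconfig() {
    # With DRY_RUN=1 (the default in this sketch) the scp commands are only
    # printed; with DRY_RUN=0 they are executed.
    for n in kubernetes-node1 kubernetes-node2 kubernetes-node3; do
        if [ "${DRY_RUN:-1}" = 1 ]; then
            echo "scp -r $HOME/.kube $n.openlab.cn:$HOME/"
        else
            scp -r "$HOME/.kube" "$n.openlab.cn:$HOME/"
        fi
    done
}

copy_kubeconfig
```

Running `DRY_RUN=0 copy_kubeconfig` on the master performs the actual copy.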

This completes the Kubernetes (K8s 1.28.x) deployment.