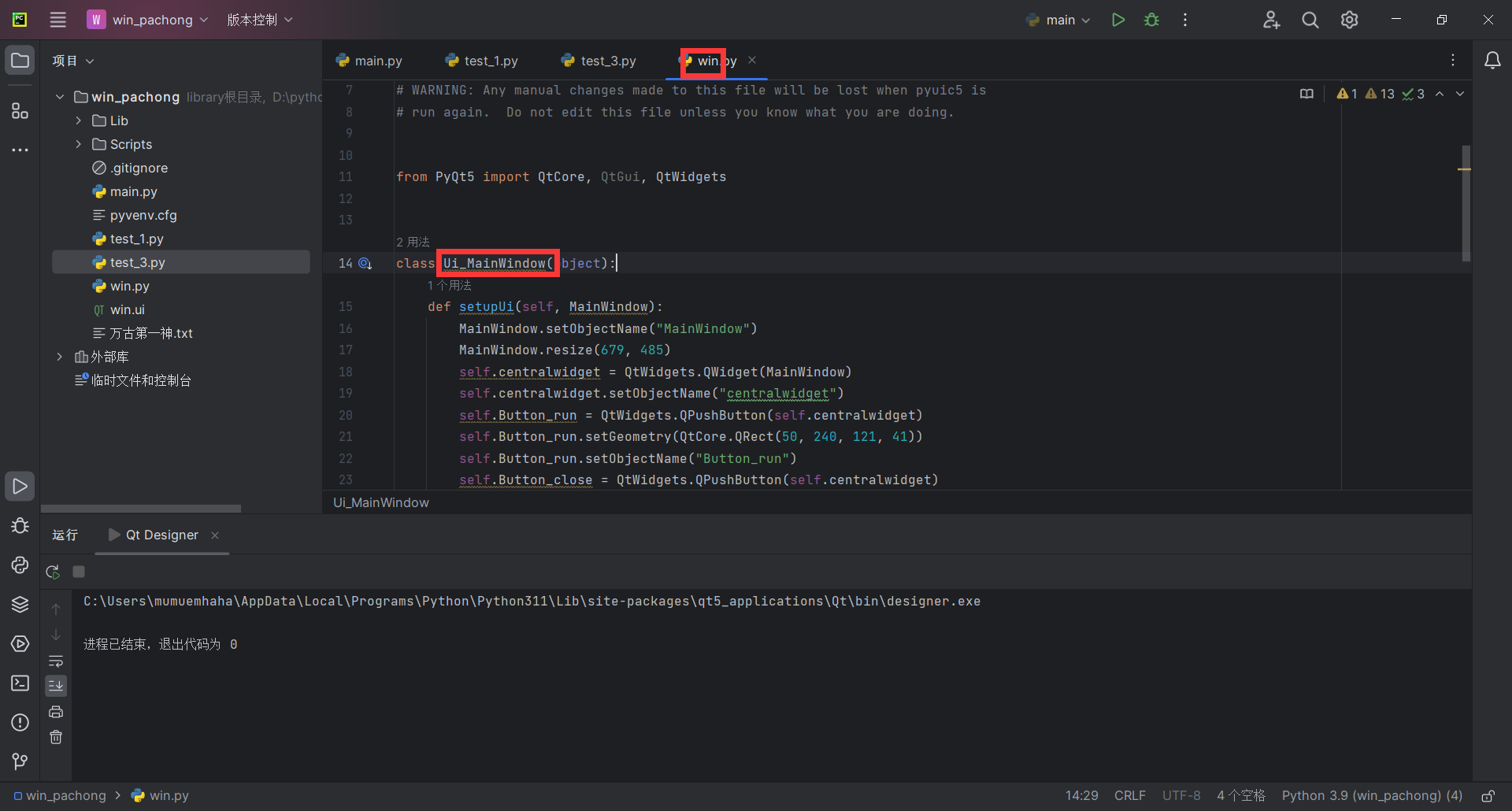

本次项目的文件

main.py主程序如下

-

导入必要的库和模块:

- 导入 TensorFlow 库以及自定义的

FaceAging模块。 - 导入操作系统库和参数解析库。

- 导入 TensorFlow 库以及自定义的

-

定义

str2bool函数:- 自定义函数用于将字符串转换为布尔值。

-

创建命令行参数解析器:

- 使用

argparse.ArgumentParser创建解析器,设置命令行参数的相关信息,如是否训练、轮数、数据集名称等。

- 使用

-

主函数

main(_)入口:- 打印设置的参数。

- 配置 TensorFlow 会话,设置 GPU 使用等。

-

在

with tf.Session(config=config) as session中:- 创建

FaceAging模型实例,传入会话、训练模式标志、保存路径和数据集名称。

- 创建

-

判断是否训练模式:

- 如果是训练模式,根据参数决定是否使用预训练模型进行训练。

- 如果不使用预训练模型,执行预训练步骤,并在预训练完成后开始正式训练。

- 执行模型的训练方法,传入训练轮数等参数。

-

如果不是训练模式:

- 进入测试模式,执行模型的自定义测试方法,传入测试图像目录。

-

在

__name__ == '__main__'中执行程序:- 执行命令行参数解析和主函数。

import tensorflow as tf from FaceAging import FaceAging # 导入自定义的 FaceAging 模块 from os import environ import argparse # 设置环境变量,控制 TensorFlow 输出日志等级 environ['TF_CPP_MIN_LOG_LEVEL'] = '3' # 自定义一个函数用于将字符串转换为布尔值 def str2bool(v): if v.lower() in ('yes', 'true', 't', 'y', '1'): return True elif v.lower() in ('no', 'false', 'f', 'n', '0'): return False else: raise argparse.ArgumentTypeError('Boolean value expected.') # 创建命令行参数解析器 parser = argparse.ArgumentParser(description='CAAE') parser.add_argument('--is_train', type=str2bool, default=True, help='是否进行训练') parser.add_argument('--epoch', type=int, default=50, help='训练的轮数') parser.add_argument('--dataset', type=str, default='UTKFace', help='存储在./data目录中的训练数据集名称') parser.add_argument('--savedir', type=str, default='save', help='保存检查点、中间训练结果和摘要的目录') parser.add_argument('--testdir', type=str, default='None', help='测试图像所在的目录') parser.add_argument('--use_trained_model', type=str2bool, default=True, help='是否使用已有的模型进行训练') parser.add_argument('--use_init_model', type=str2bool, default=True, help='如果找不到已有模型,是否从初始模型开始训练') FLAGS = parser.parse_args() # 主函数入口 def main(_): # 打印设置参数 import pprint pprint.pprint(FLAGS) # 配置 TensorFlow 会话 config = tf.ConfigProto() config.gpu_options.allow_growth = True with tf.Session(config=config) as session: # 创建 FaceAging 模型实例 model = FaceAging( session, # TensorFlow 会话 is_training=FLAGS.is_train, # 是否为训练模式的标志 save_dir=FLAGS.savedir, # 保存检查点、样本和摘要的路径 dataset_name=FLAGS.dataset # 存储在 ./data 目录中的数据集名称 ) if FLAGS.is_train: print ('\n\t训练模式') if not FLAGS.use_trained_model: print ('\n\t预训练网络') model.train( num_epochs=10, # 训练轮数 use_trained_model=FLAGS.use_trained_model, use_init_model=FLAGS.use_init_model, weights=(0, 0, 0) ) print ('\n\t预训练完成!训练将开始。') model.train( num_epochs=FLAGS.epoch, # 训练轮数 use_trained_model=FLAGS.use_trained_model, use_init_model=FLAGS.use_init_model ) else: print ('\n\t测试模式') model.custom_test( testing_samples_dir=FLAGS.testdir + '/*jpg' ) if __name__ == '__main__': # 在主程序中执行命令行解析和执行主函数 tf.app.run()

2.FaceAging.py

主要流程

-

导入必要的库和模块:

- 导入所需的Python库,如NumPy、TensorFlow等。

- 导入自定义的操作(ops.py)。

-

定义

FaceAging类:- 在初始化方法中,设置了模型的各种参数,例如输入图像大小、网络层参数、训练参数等,并创建了 TensorFlow 图的输入节点。

- 定义了图的结构,包括编码器、生成器、判别器等。

- 定义了损失函数,包括生成器、判别器、总变差(TV)等。

- 收集了需要用于TensorBoard可视化的摘要信息。

-

train方法:- 从文件中加载训练数据集的文件名列表。

- 定义了优化器和损失函数,然后进行模型的训练。

- 在每个epoch中,随机选择一部分训练图像样本,计算并更新生成器和判别器的参数,输出训练进度等信息。

- 保存模型的中间检查点,生成样本图像用于可视化,训练结束后保存最终模型。

-

encoder方法:- 实现了编码器结构,将输入图像转化为对应的噪声或特征。

-

generator方法:- 实现了生成器结构,将噪声特征、年龄标签和性别标签拼接,生成相应年龄段的人脸图像。

-

discriminator_z和discriminator_img方法:- 实现了判别器结构,对输入的噪声特征或图像进行判别。

-

save_checkpoint和load_checkpoint方法:- 用于保存和加载训练过程中的模型检查点。

-

sample和test方法:- 生成一些样本图像以及将训练过程中的中间结果保存为图片。

-

custom_test方法:- 运行模型进行自定义测试,加载模型并生成特定人脸的年龄化效果。

from __future__ import division

import os

import time

from glob import glob

import tensorflow as tf

import numpy as np

from scipy.io import savemat

from ops import *

class FaceAging(object):

def __init__(self,

session, # TensorFlow session

size_image=128, # size the input images

size_kernel=5, # size of the kernels in convolution and deconvolution

size_batch=100, # mini-batch size for training and testing, must be square of an integer

num_input_channels=3, # number of channels of input images

num_encoder_channels=64, # number of channels of the first conv layer of encoder

num_z_channels=50, # number of channels of the layer z (noise or code)

num_categories=10, # number of categories (age segments) in the training dataset

num_gen_channels=1024, # number of channels of the first deconv layer of generator

enable_tile_label=True, # enable to tile the label

tile_ratio=1.0, # ratio of the length between tiled label and z

is_training=True, # flag for training or testing mode

save_dir='./save', # path to save checkpoints, samples, and summary

dataset_name='UTKFace' # name of the dataset in the folder ./data

):

self.session = session

self.image_value_range = (-1, 1)

self.size_image = size_image

self.size_kernel = size_kernel

self.size_batch = size_batch

self.num_input_channels = num_input_channels

self.num_encoder_channels = num_encoder_channels

self.num_z_channels = num_z_channels

self.num_categories = num_categories

self.num_gen_channels = num_gen_channels

self.enable_tile_label = enable_tile_label

self.tile_ratio = tile_ratio

self.is_training = is_training

self.save_dir = save_dir

self.dataset_name = dataset_name

# ************************************* input to graph ********************************************************

self.input_image = tf.placeholder(

tf.float32,

[self.size_batch, self.size_image, self.size_image, self.num_input_channels],

name='input_images'

)

self.age = tf.placeholder(

tf.float32,

[self.size_batch, self.num_categories],

name='age_labels'

)

self.gender = tf.placeholder(

tf.float32,

[self.size_batch, 2],

name='gender_labels'

)

self.z_prior = tf.placeholder(

tf.float32,

[self.size_batch, self.num_z_channels],

name='z_prior'

)

# ************************************* build the graph *******************************************************

print ('\n\tBuilding graph ...')

# encoder: input image --> z

self.z = self.encoder(

image=self.input_image

)

# generator: z + label --> generated image

self.G = self.generator(

z=self.z,

y=self.age,

gender=self.gender,

enable_tile_label=self.enable_tile_label,

tile_ratio=self.tile_ratio

)

# discriminator on z

self.D_z, self.D_z_logits = self.discriminator_z(

z=self.z,

is_training=self.is_training

)

# discriminator on G

self.D_G, self.D_G_logits = self.discriminator_img(

image=self.G,

y=self.age,

gender=self.gender,

is_training=self.is_training

)

# discriminator on z_prior

self.D_z_prior, self.D_z_prior_logits = self.discriminator_z(

z=self.z_prior,

is_training=self.is_training,

reuse_variables=True

)

# discriminator on input image

self.D_input, self.D_input_logits = self.discriminator_img(

image=self.input_image,

y=self.age,

gender=self.gender,

is_training=self.is_training,

reuse_variables=True

)

# ************************************* loss functions *******************************************************

# loss function of encoder + generator

#self.EG_loss = tf.nn.l2_loss(self.input_image - self.G) / self.size_batch # L2 loss

self.EG_loss = tf.reduce_mean(tf.abs(self.input_image - self.G)) # L1 loss

# loss function of discriminator on z

self.D_z_loss_prior = tf.reduce_mean(

tf.nn.sigmoid_cross_entropy_with_logits(logits=self.D_z_prior_logits, labels=tf.ones_like(self.D_z_prior_logits))

)

self.D_z_loss_z = tf.reduce_mean(

tf.nn.sigmoid_cross_entropy_with_logits(logits=self.D_z_logits, labels=tf.zeros_like(self.D_z_logits))

)

self.E_z_loss = tf.reduce_mean(

tf.nn.sigmoid_cross_entropy_with_logits(logits=self.D_z_logits, labels=tf.ones_like(self.D_z_logits))

)

# loss function of discriminator on image

self.D_img_loss_input = tf.reduce_mean(

tf.nn.sigmoid_cross_entropy_with_logits(logits=self.D_input_logits, labels=tf.ones_like(self.D_input_logits))

)

self.D_img_loss_G = tf.reduce_mean(

tf.nn.sigmoid_cross_entropy_with_logits(logits=self.D_G_logits, labels=tf.zeros_like(self.D_G_logits))

)

self.G_img_loss = tf.reduce_mean(

tf.nn.sigmoid_cross_entropy_with_logits(logits=self.D_G_logits, labels=tf.ones_like(self.D_G_logits))

)

# total variation to smooth the generated image

tv_y_size = self.size_image

tv_x_size = self.size_image

self.tv_loss = (

(tf.nn.l2_loss(self.G[:, 1:, :, :] - self.G[:, :self.size_image - 1, :, :]) / tv_y_size) +

(tf.nn.l2_loss(self.G[:, :, 1:, :] - self.G[:, :, :self.size_image - 1, :]) / tv_x_size)) / self.size_batch

# *********************************** trainable variables ****************************************************

trainable_variables = tf.trainable_variables()

# variables of encoder

self.E_variables = [var for var in trainable_variables if 'E_' in var.name]

# variables of generator

self.G_variables = [var for var in trainable_variables if 'G_' in var.name]

# variables of discriminator on z

self.D_z_variables = [var for var in trainable_variables if 'D_z_' in var.name]

# variables of discriminator on image

self.D_img_variables = [var for var in trainable_variables if 'D_img_' in var.name]

# ************************************* collect the summary ***************************************

self.z_summary = tf.summary.histogram('z', self.z)

self.z_prior_summary = tf.summary.histogram('z_prior', self.z_prior)

self.EG_loss_summary = tf.summary.scalar('EG_loss', self.EG_loss)

self.D_z_loss_z_summary = tf.summary.scalar('D_z_loss_z', self.D_z_loss_z)

self.D_z_loss_prior_summary = tf.summary.scalar('D_z_loss_prior', self.D_z_loss_prior)

self.E_z_loss_summary = tf.summary.scalar('E_z_loss', self.E_z_loss)

self.D_z_logits_summary = tf.summary.histogram('D_z_logits', self.D_z_logits)

self.D_z_prior_logits_summary = tf.summary.histogram('D_z_prior_logits', self.D_z_prior_logits)

self.D_img_loss_input_summary = tf.summary.scalar('D_img_loss_input', self.D_img_loss_input)

self.D_img_loss_G_summary = tf.summary.scalar('D_img_loss_G', self.D_img_loss_G)

self.G_img_loss_summary = tf.summary.scalar('G_img_loss', self.G_img_loss)

self.D_G_logits_summary = tf.summary.histogram('D_G_logits', self.D_G_logits)

self.D_input_logits_summary = tf.summary.histogram('D_input_logits', self.D_input_logits)

# for saving the graph and variables

self.saver = tf.train.Saver(max_to_keep=2)

def train(self,

num_epochs=200, # number of epochs

learning_rate=0.0002, # learning rate of optimizer

beta1=0.5, # parameter for Adam optimizer

decay_rate=1.0, # learning rate decay (0, 1], 1 means no decay

enable_shuffle=True, # enable shuffle of the dataset

use_trained_model=True, # use the saved checkpoint to initialize the network

use_init_model=True, # use the init model to initialize the network

weigts=(0.0001, 0, 0) # the weights of adversarial loss and TV loss

):

# *************************** load file names of images ******************************************************

file_names = glob(os.path.join('./data', self.dataset_name, '*.jpg'))

size_data = len(file_names)

np.random.seed(seed=2017)

if enable_shuffle:

np.random.shuffle(file_names)

# *********************************** optimizer **************************************************************

# over all, there are three loss functions, weights may differ from the paper because of different datasets

self.loss_EG = self.EG_loss + weigts[0] * self.G_img_loss + weigts[1] * self.E_z_loss + weigts[2] * self.tv_loss # slightly increase the params

self.loss_Dz = self.D_z_loss_prior + self.D_z_loss_z

self.loss_Di = self.D_img_loss_input + self.D_img_loss_G

# set learning rate decay

self.EG_global_step = tf.Variable(0, trainable=False, name='global_step')

EG_learning_rate = tf.train.exponential_decay(

learning_rate=learning_rate,

global_step=self.EG_global_step,

decay_steps=size_data / self.size_batch * 2,

decay_rate=decay_rate,

staircase=True

)

# optimizer for encoder + generator

with tf.variable_scope('opt', reuse=tf.AUTO_REUSE):

self.EG_optimizer = tf.train.AdamOptimizer(

learning_rate=EG_learning_rate,

beta1=beta1

).minimize(

loss=self.loss_EG,

global_step=self.EG_global_step,

var_list=self.E_variables + self.G_variables

)

# optimizer for discriminator on z

self.D_z_optimizer = tf.train.AdamOptimizer(

learning_rate=EG_learning_rate,

beta1=beta1

).minimize(

loss=self.loss_Dz,

var_list=self.D_z_variables

)

# optimizer for discriminator on image

self.D_img_optimizer = tf.train.AdamOptimizer(

learning_rate=EG_learning_rate,

beta1=beta1

).minimize(

loss=self.loss_Di,

var_list=self.D_img_variables

)

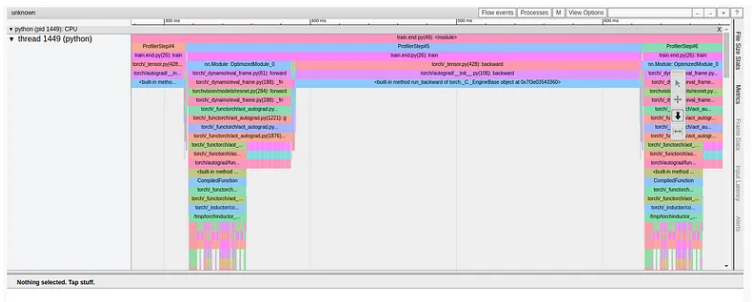

# *********************************** tensorboard *************************************************************

# for visualization (TensorBoard): $ tensorboard --logdir path/to/log-directory

self.EG_learning_rate_summary = tf.summary.scalar('EG_learning_rate', EG_learning_rate)

self.summary = tf.summary.merge([

self.z_summary, self.z_prior_summary,

self.D_z_loss_z_summary, self.D_z_loss_prior_summary,

self.D_z_logits_summary, self.D_z_prior_logits_summary,

self.EG_loss_summary, self.E_z_loss_summary,

self.D_img_loss_input_summary, self.D_img_loss_G_summary,

self.G_img_loss_summary, self.EG_learning_rate_summary,

self.D_G_logits_summary, self.D_input_logits_summary

])

self.writer = tf.summary.FileWriter(os.path.join(self.save_dir, 'summary'), self.session.graph)

# ************* get some random samples as testing data to visualize the learning process *********************

sample_files = file_names[0:self.size_batch]

file_names[0:self.size_batch] = []

sample = [load_image(

image_path=sample_file,

image_size=self.size_image,

image_value_range=self.image_value_range,

is_gray=(self.num_input_channels == 1),

) for sample_file in sample_files]

if self.num_input_channels == 1:

sample_images = np.array(sample).astype(np.float32)[:, :, :, None]

else:

sample_images = np.array(sample).astype(np.float32)

sample_label_age = np.ones(

shape=(len(sample_files), self.num_categories),

dtype=np.float32

) * self.image_value_range[0]

sample_label_gender = np.ones(

shape=(len(sample_files), 2),

dtype=np.float32

) * self.image_value_range[0]

for i, label in enumerate(sample_files):

label = int(str(sample_files[i]).split('/')[-1].split('_')[0])

if 0 <= label <= 5:

label = 0

elif 6 <= label <= 10:

label = 1

elif 11 <= label <= 15:

label = 2

elif 16 <= label <= 20:

label = 3

elif 21 <= label <= 30:

label = 4

elif 31 <= label <= 40:

label = 5

elif 41 <= label <= 50:

label = 6

elif 51 <= label <= 60:

label = 7

elif 61 <= label <= 70:

label = 8

else:

label = 9

sample_label_age[i, label] = self.image_value_range[-1]

gender = int(str(sample_files[i]).split('/')[-1].split('_')[1])

sample_label_gender[i, gender] = self.image_value_range[-1]

# ******************************************* training *******************************************************

# initialize the graph

tf.global_variables_initializer().run()

# load check point

if use_trained_model:

if self.load_checkpoint():

print("\tSUCCESS ^_^")

else:

print("\tFAILED >_<!")

# load init model

if use_init_model:

if not os.path.exists('init_model/model-init.data-00000-of-00001'):

from init_model.zip_opt import join

try:

join('init_model/model_parts', 'init_model/model-init.data-00000-of-00001')

except:

raise Exception('Error joining files')

self.load_checkpoint(model_path='init_model')

# epoch iteration

num_batches = len(file_names) // self.size_batch

for epoch in range(num_epochs):

if enable_shuffle:

np.random.shuffle(file_names)

for ind_batch in range(num_batches):

start_time = time.time()

# read batch images and labels

batch_files = file_names[ind_batch*self.size_batch:(ind_batch+1)*self.size_batch]

batch = [load_image(

image_path=batch_file,

image_size=self.size_image,

image_value_range=self.image_value_range,

is_gray=(self.num_input_channels == 1),

) for batch_file in batch_files]

if self.num_input_channels == 1:

batch_images = np.array(batch).astype(np.float32)[:, :, :, None]

else:

batch_images = np.array(batch).astype(np.float32)

batch_label_age = np.ones(

shape=(len(batch_files), self.num_categories),

dtype=np.float

) * self.image_value_range[0]

batch_label_gender = np.ones(

shape=(len(batch_files), 2),

dtype=np.float

) * self.image_value_range[0]

for i, label in enumerate(batch_files):

label = int(str(batch_files[i]).split('/')[-1].split('_')[0])

if 0 <= label <= 5:

label = 0

elif 6 <= label <= 10:

label = 1

elif 11 <= label <= 15:

label = 2

elif 16 <= label <= 20:

label = 3

elif 21 <= label <= 30:

label = 4

elif 31 <= label <= 40:

label = 5

elif 41 <= label <= 50:

label = 6

elif 51 <= label <= 60:

label = 7

elif 61 <= label <= 70:

label = 8

else:

label = 9

batch_label_age[i, label] = self.image_value_range[-1]

gender = int(str(batch_files[i]).split('/')[-1].split('_')[1])

batch_label_gender[i, gender] = self.image_value_range[-1]

# prior distribution on the prior of z

batch_z_prior = np.random.uniform(

self.image_value_range[0],

self.image_value_range[-1],

[self.size_batch, self.num_z_channels]

).astype(np.float32)

# update

_, _, _, EG_err, Ez_err, Dz_err, Dzp_err, Gi_err, DiG_err, Di_err, TV = self.session.run(

fetches = [

self.EG_optimizer,

self.D_z_optimizer,

self.D_img_optimizer,

self.EG_loss,

self.E_z_loss,

self.D_z_loss_z,

self.D_z_loss_prior,

self.G_img_loss,

self.D_img_loss_G,

self.D_img_loss_input,

self.tv_loss

],

feed_dict={

self.input_image: batch_images,

self.age: batch_label_age,

self.gender: batch_label_gender,

self.z_prior: batch_z_prior

}

)

print("\nEpoch: [%3d/%3d] Batch: [%3d/%3d]\n\tEG_err=%.4f\tTV=%.4f" %

(epoch+1, num_epochs, ind_batch+1, num_batches, EG_err, TV))

print("\tEz=%.4f\tDz=%.4f\tDzp=%.4f" % (Ez_err, Dz_err, Dzp_err))

print("\tGi=%.4f\tDi=%.4f\tDiG=%.4f" % (Gi_err, Di_err, DiG_err))

# estimate left run time

elapse = time.time() - start_time

time_left = ((num_epochs - epoch - 1) * num_batches + (num_batches - ind_batch - 1)) * elapse

print("\tTime left: %02d:%02d:%02d" %

(int(time_left / 3600), int(time_left % 3600 / 60), time_left % 60))

# add to summary

summary = self.summary.eval(

feed_dict={

self.input_image: batch_images,

self.age: batch_label_age,

self.gender: batch_label_gender,

self.z_prior: batch_z_prior

}

)

self.writer.add_summary(summary, self.EG_global_step.eval())

# save sample images for each epoch

name = '{:02d}.png'.format(epoch+1)

self.sample(sample_images, sample_label_age, sample_label_gender, name)

self.test(sample_images, sample_label_gender, name)

# save checkpoint for each 5 epoch

if np.mod(epoch, 5) == 4:

self.save_checkpoint()

# save the trained model

self.save_checkpoint()

# close the summary writer

self.writer.close()

def encoder(self, image, reuse_variables=False):

if reuse_variables:

tf.get_variable_scope().reuse_variables()

num_layers = int(np.log2(self.size_image)) - int(self.size_kernel / 2)

current = image

# conv layers with stride 2

for i in range(num_layers):

name = 'E_conv' + str(i)

current = conv2d(

input_map=current,

num_output_channels=self.num_encoder_channels * (2 ** i),

size_kernel=self.size_kernel,

name=name

)

current = tf.nn.relu(current)

# fully connection layer

name = 'E_fc'

current = fc(

input_vector=tf.reshape(current, [self.size_batch, -1]),

num_output_length=self.num_z_channels,

name=name

)

# output

return tf.nn.tanh(current)

def generator(self, z, y, gender, reuse_variables=False, enable_tile_label=True, tile_ratio=1.0):

if reuse_variables:

tf.get_variable_scope().reuse_variables()

num_layers = int(np.log2(self.size_image)) - int(self.size_kernel / 2)

if enable_tile_label:

duplicate = int(self.num_z_channels * tile_ratio / self.num_categories)

else:

duplicate = 1

z = concat_label(z, y, duplicate=duplicate)

if enable_tile_label:

duplicate = int(self.num_z_channels * tile_ratio / 2)

else:

duplicate = 1

z = concat_label(z, gender, duplicate=duplicate)

size_mini_map = int(self.size_image / 2 ** num_layers)

# fc layer

name = 'G_fc'

current = fc(

input_vector=z,

num_output_length=self.num_gen_channels * size_mini_map * size_mini_map,

name=name

)

# reshape to cube for deconv

current = tf.reshape(current, [-1, size_mini_map, size_mini_map, self.num_gen_channels])

current = tf.nn.relu(current)

# deconv layers with stride 2

for i in range(num_layers):

name = 'G_deconv' + str(i)

current = deconv2d(

input_map=current,

output_shape=[self.size_batch,

size_mini_map * 2 ** (i + 1),

size_mini_map * 2 ** (i + 1),

int(self.num_gen_channels / 2 ** (i + 1))],

size_kernel=self.size_kernel,

name=name

)

current = tf.nn.relu(current)

name = 'G_deconv' + str(i+1)

current = deconv2d(

input_map=current,

output_shape=[self.size_batch,

self.size_image,

self.size_image,

int(self.num_gen_channels / 2 ** (i + 2))],

size_kernel=self.size_kernel,

stride=1,

name=name

)

current = tf.nn.relu(current)

name = 'G_deconv' + str(i + 2)

current = deconv2d(

input_map=current,

output_shape=[self.size_batch,

self.size_image,

self.size_image,

self.num_input_channels],

size_kernel=self.size_kernel,

stride=1,

name=name

)

# output

return tf.nn.tanh(current)

def discriminator_z(self, z, is_training=True, reuse_variables=False, num_hidden_layer_channels=(64, 32, 16), enable_bn=True):

if reuse_variables:

tf.get_variable_scope().reuse_variables()

current = z

# fully connection layer

for i in range(len(num_hidden_layer_channels)):

name = 'D_z_fc' + str(i)

current = fc(

input_vector=current,

num_output_length=num_hidden_layer_channels[i],

name=name

)

if enable_bn:

name = 'D_z_bn' + str(i)

current = tf.contrib.layers.batch_norm(

current,

scale=False,

is_training=is_training,

scope=name,

reuse=reuse_variables

)

current = tf.nn.relu(current)

# output layer

name = 'D_z_fc' + str(i+1)

current = fc(

input_vector=current,

num_output_length=1,

name=name

)

return tf.nn.sigmoid(current), current

def discriminator_img(self, image, y, gender, is_training=True, reuse_variables=False, num_hidden_layer_channels=(16, 32, 64, 128), enable_bn=True):

if reuse_variables:

tf.get_variable_scope().reuse_variables()

num_layers = len(num_hidden_layer_channels)

current = image

# conv layers with stride 2

for i in range(num_layers):

name = 'D_img_conv' + str(i)

current = conv2d(

input_map=current,

num_output_channels=num_hidden_layer_channels[i],

size_kernel=self.size_kernel,

name=name

)

if enable_bn:

name = 'D_img_bn' + str(i)

current = tf.contrib.layers.batch_norm(

current,

scale=False,

is_training=is_training,

scope=name,

reuse=reuse_variables

)

current = tf.nn.relu(current)

if i == 0:

current = concat_label(current, y)

current = concat_label(current, gender, int(self.num_categories / 2))

# fully connection layer

name = 'D_img_fc1'

current = fc(

input_vector=tf.reshape(current, [self.size_batch, -1]),

num_output_length=1024,

name=name

)

current = lrelu(current)

name = 'D_img_fc2'

current = fc(

input_vector=current,

num_output_length=1,

name=name

)

# output

return tf.nn.sigmoid(current), current

def save_checkpoint(self):

checkpoint_dir = os.path.join(self.save_dir, 'checkpoint')

if not os.path.exists(checkpoint_dir):

os.makedirs(checkpoint_dir)

self.saver.save(

sess=self.session,

save_path=os.path.join(checkpoint_dir, 'model'),

global_step=self.EG_global_step.eval()

)

def load_checkpoint(self, model_path=None):

if model_path is None:

print("\n\tLoading pre-trained model ...")

checkpoint_dir = os.path.join(self.save_dir, 'checkpoint')

else:

print("\n\tLoading init model ...")

checkpoint_dir = model_path

checkpoints = tf.train.get_checkpoint_state(checkpoint_dir)

if checkpoints and checkpoints.model_checkpoint_path:

checkpoints_name = os.path.basename(checkpoints.model_checkpoint_path)

try:

self.saver.restore(self.session, os.path.join(checkpoint_dir, checkpoints_name))

return True

except:

return False

else:

return False

def sample(self, images, labels, gender, name):

sample_dir = os.path.join(self.save_dir, 'samples')

if not os.path.exists(sample_dir):

os.makedirs(sample_dir)

z, G = self.session.run(

[self.z, self.G],

feed_dict={

self.input_image: images,

self.age: labels,

self.gender: gender

}

)

size_frame = int(np.sqrt(self.size_batch))

save_batch_images(

batch_images=G,

save_path=os.path.join(sample_dir, name),

image_value_range=self.image_value_range,

size_frame=[size_frame, size_frame]

)

def test(self, images, gender, name):

test_dir = os.path.join(self.save_dir, 'test')

if not os.path.exists(test_dir):

os.makedirs(test_dir)

images = images[:int(np.sqrt(self.size_batch)), :, :, :]

gender = gender[:int(np.sqrt(self.size_batch)), :]

size_sample = images.shape[0]

labels = np.arange(size_sample)

labels = np.repeat(labels, size_sample)

query_labels = np.ones(

shape=(size_sample ** 2, size_sample),

dtype=np.float32

) * self.image_value_range[0]

for i in range(query_labels.shape[0]):

query_labels[i, labels[i]] = self.image_value_range[-1]

query_images = np.tile(images, [self.num_categories, 1, 1, 1])

query_gender = np.tile(gender, [self.num_categories, 1])

z, G = self.session.run(

[self.z, self.G],

feed_dict={

self.input_image: query_images,

self.age: query_labels,

self.gender: query_gender

}

)

save_batch_images(

batch_images=query_images,

save_path=os.path.join(test_dir, 'input.png'),

image_value_range=self.image_value_range,

size_frame=[size_sample, size_sample]

)

save_batch_images(

batch_images=G,

save_path=os.path.join(test_dir, name),

image_value_range=self.image_value_range,

size_frame=[size_sample, size_sample]

)

def custom_test(self, testing_samples_dir):

if not self.load_checkpoint():

print("\tFAILED >_<!")

exit(0)

else:

print("\tSUCCESS ^_^")

num_samples = int(np.sqrt(self.size_batch))

file_names = glob(testing_samples_dir)

if len(file_names) < num_samples:

print ('The number of testing images is must larger than %d' % num_samples)

exit(0)

sample_files = file_names[0:num_samples]

sample = [load_image(

image_path=sample_file,

image_size=self.size_image,

image_value_range=self.image_value_range,

is_gray=(self.num_input_channels == 1),

) for sample_file in sample_files]

if self.num_input_channels == 1:

images = np.array(sample).astype(np.float32)[:, :, :, None]

else:

images = np.array(sample).astype(np.float32)

gender_male = np.ones(

shape=(num_samples, 2),

dtype=np.float32

) * self.image_value_range[0]

gender_female = np.ones(

shape=(num_samples, 2),

dtype=np.float32

) * self.image_value_range[0]

for i in range(gender_male.shape[0]):

gender_male[i, 0] = self.image_value_range[-1]

gender_female[i, 1] = self.image_value_range[-1]

self.test(images, gender_male, 'test_as_male.png')

self.test(images, gender_female, 'test_as_female.png')

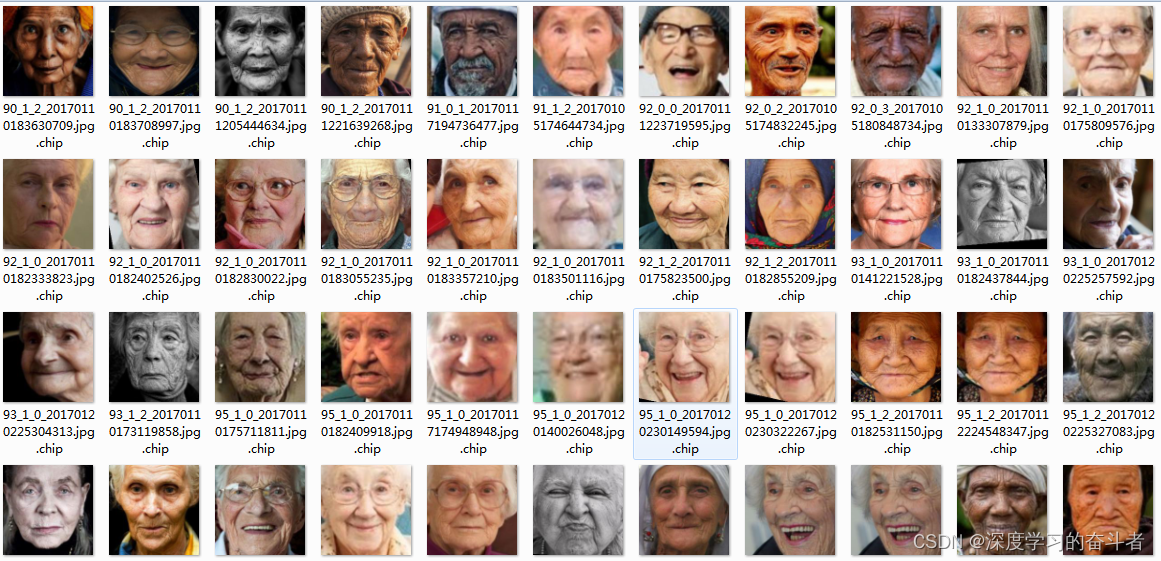

print ('\n\tDone! Results are saved as %s\n' % os.path.join(self.save_dir, 'test', 'test_as_xxx.png'))3.data,一共23708张照片

4.对数据集感兴趣的可以关注

4.对数据集感兴趣的可以关注

from __future__ import division

import os

import time

from glob import glob

import tensorflow as tf

import numpy as np

from scipy.io import savemat

from ops import *

#https://mbd.pub/o/bread/ZJ2UmJpp