Table of Contents

- Graph-Segmenter: Graph Transformer with Boundary-aware Attention for Semantic Segmentation

- Method

- SCSC: Spatial Cross-scale Convolution Module to Strengthen both CNNs and Transformers

- Method

- Deformable Mixer Transformer with Gating for Multi-Task Learning of Dense Prediction

- Method

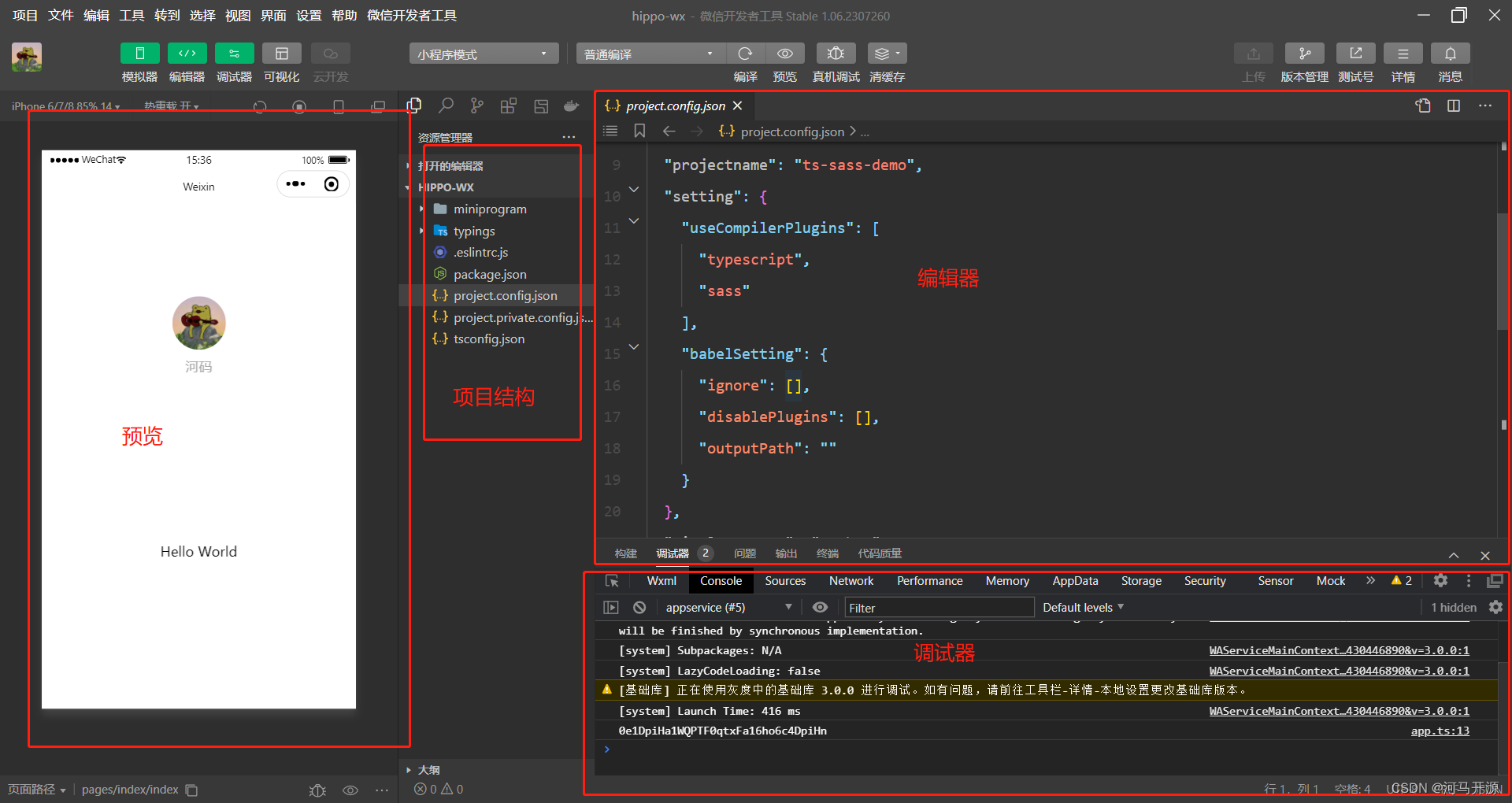

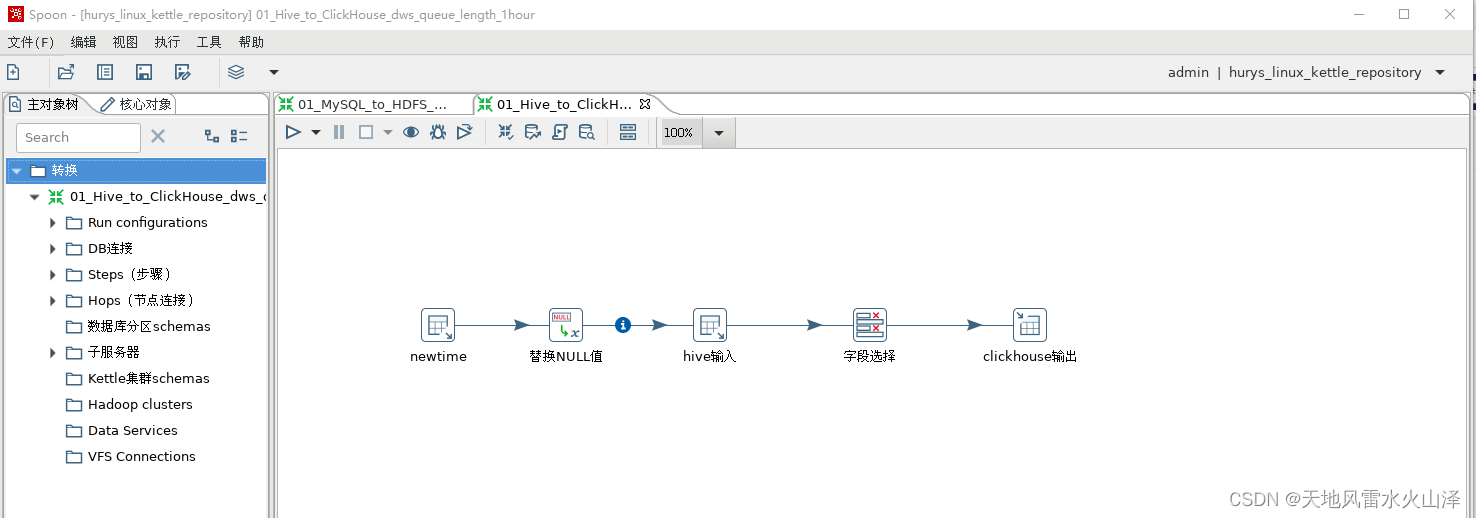

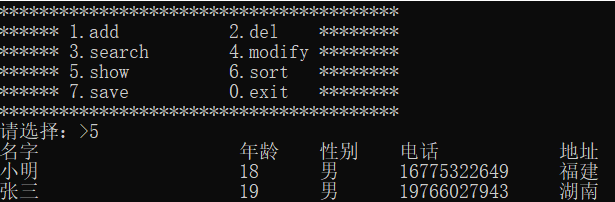

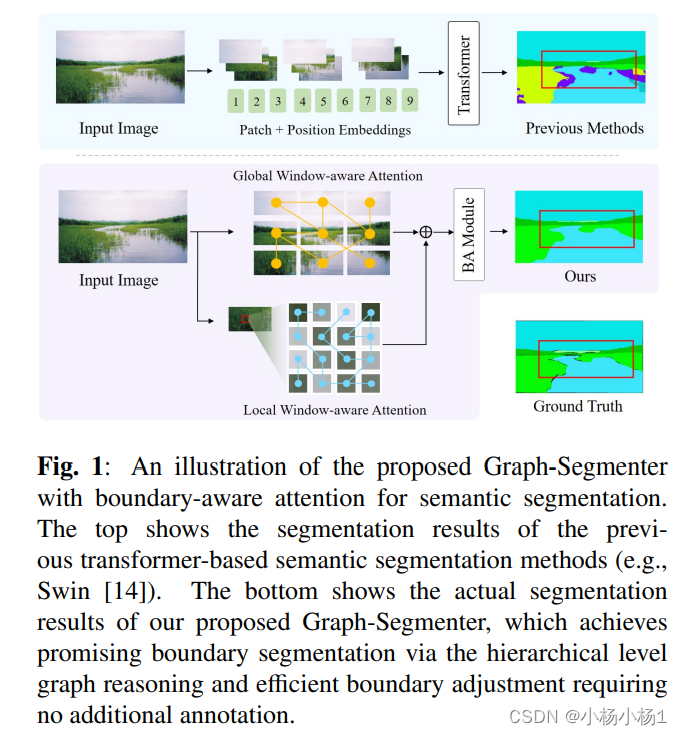

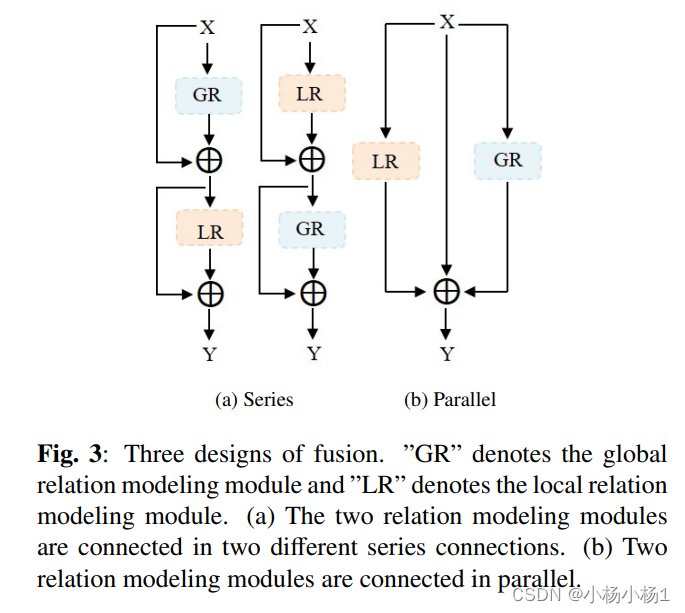

Graph-Segmenter: Graph Transformer with Boundary-aware Attention for Semantic Segmentation

Method

A graph-based Transformer. It has some value as a reference.
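To make "graph-based Transformer" concrete, here is a minimal sketch of attention restricted to graph neighbors. It is not the Graph-Segmenter implementation: the function name `graph_attention`, the hand-written adjacency matrix, and the absence of learned query/key/value projections are all simplifying assumptions made for illustration.

```python
import math


def graph_attention(features, adjacency):
    """Single-head attention in which node i attends only to its graph neighbors.

    Hypothetical helper for illustration: queries, keys and values are the raw
    features (no learned projections), and `adjacency` is a plain 0/1 matrix.
    """
    n = len(features)
    dim = len(features[0])
    scale = 1.0 / math.sqrt(dim)
    output = []
    for i in range(n):
        # Always include the node itself so the softmax is never empty.
        neighbors = [j for j in range(n) if adjacency[i][j] or j == i]
        # Scaled dot-product score between node i and each neighbor.
        scores = [
            scale * sum(a * b for a, b in zip(features[i], features[j]))
            for j in neighbors
        ]
        # Numerically stable softmax over the neighbor scores.
        max_score = max(scores)
        weights = [math.exp(s - max_score) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Weighted average of the neighbor features.
        output.append([
            sum(w * features[j][d] for w, j in zip(weights, neighbors))
            for d in range(dim)
        ])
    return output


feats = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
adj = [[0, 1, 0], [1, 0, 0], [0, 0, 0]]  # nodes 0 and 1 are linked, node 2 is isolated
out = graph_attention(feats, adj)
```

In this toy graph the isolated node keeps its own feature unchanged, while the two linked nodes exchange information. A dense Transformer corresponds to the special case where every entry of the adjacency matrix is 1.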

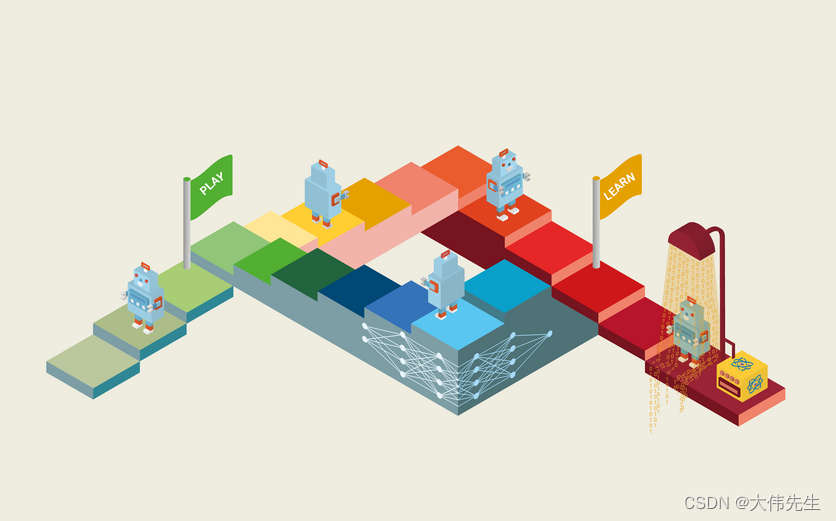

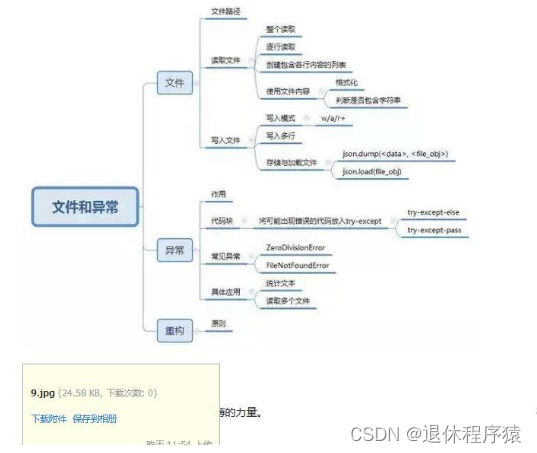

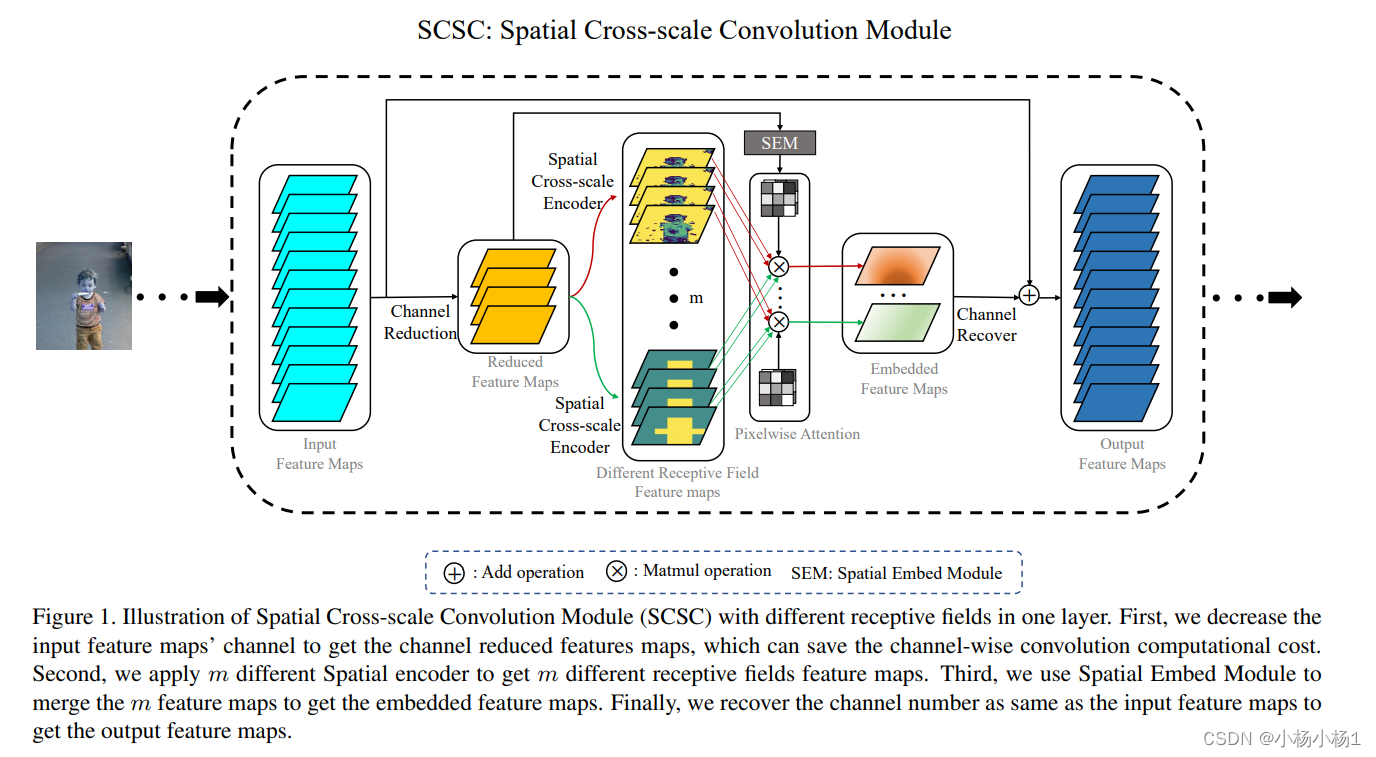

SCSC: Spatial Cross-scale Convolution Module to Strengthen both CNNs and Transformers

Method

The core idea is simply a hierarchical design.

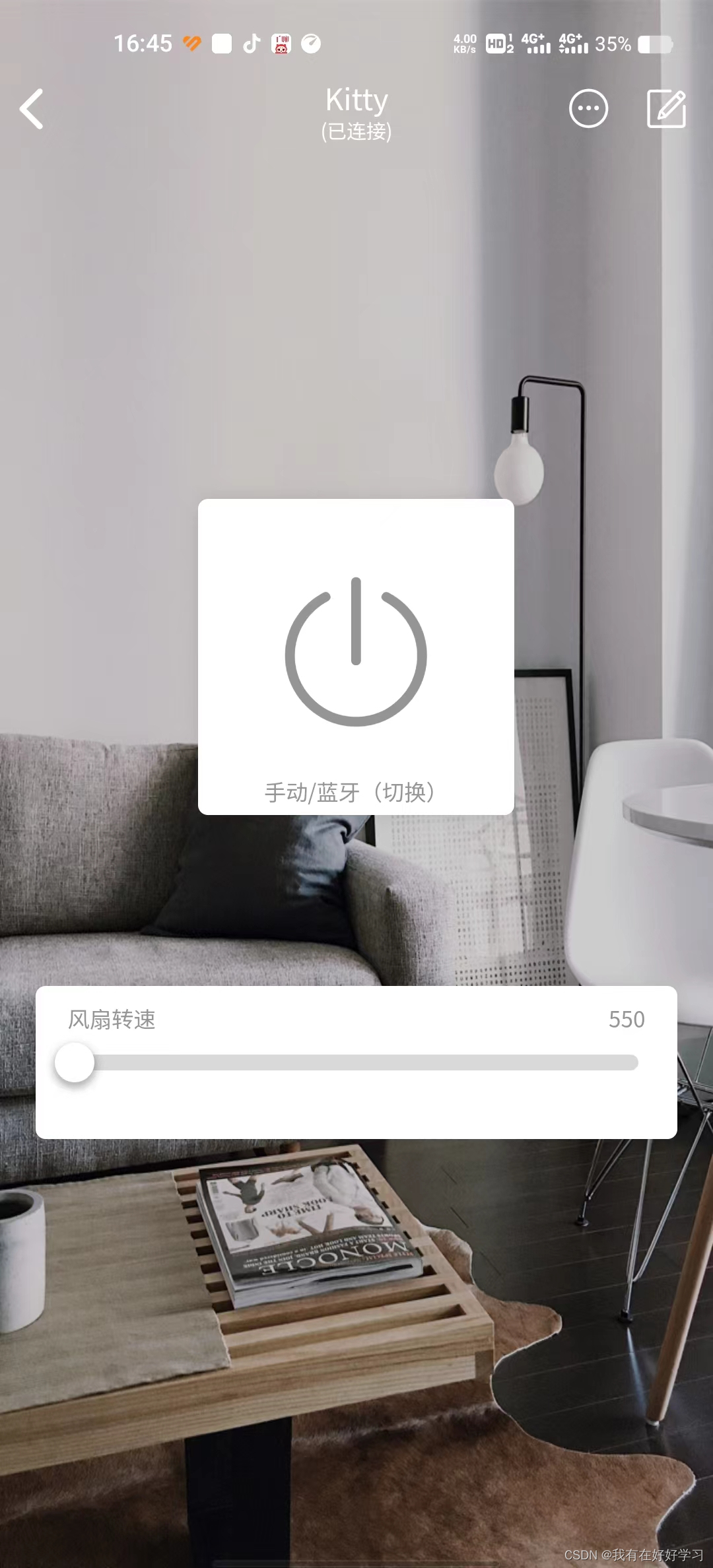

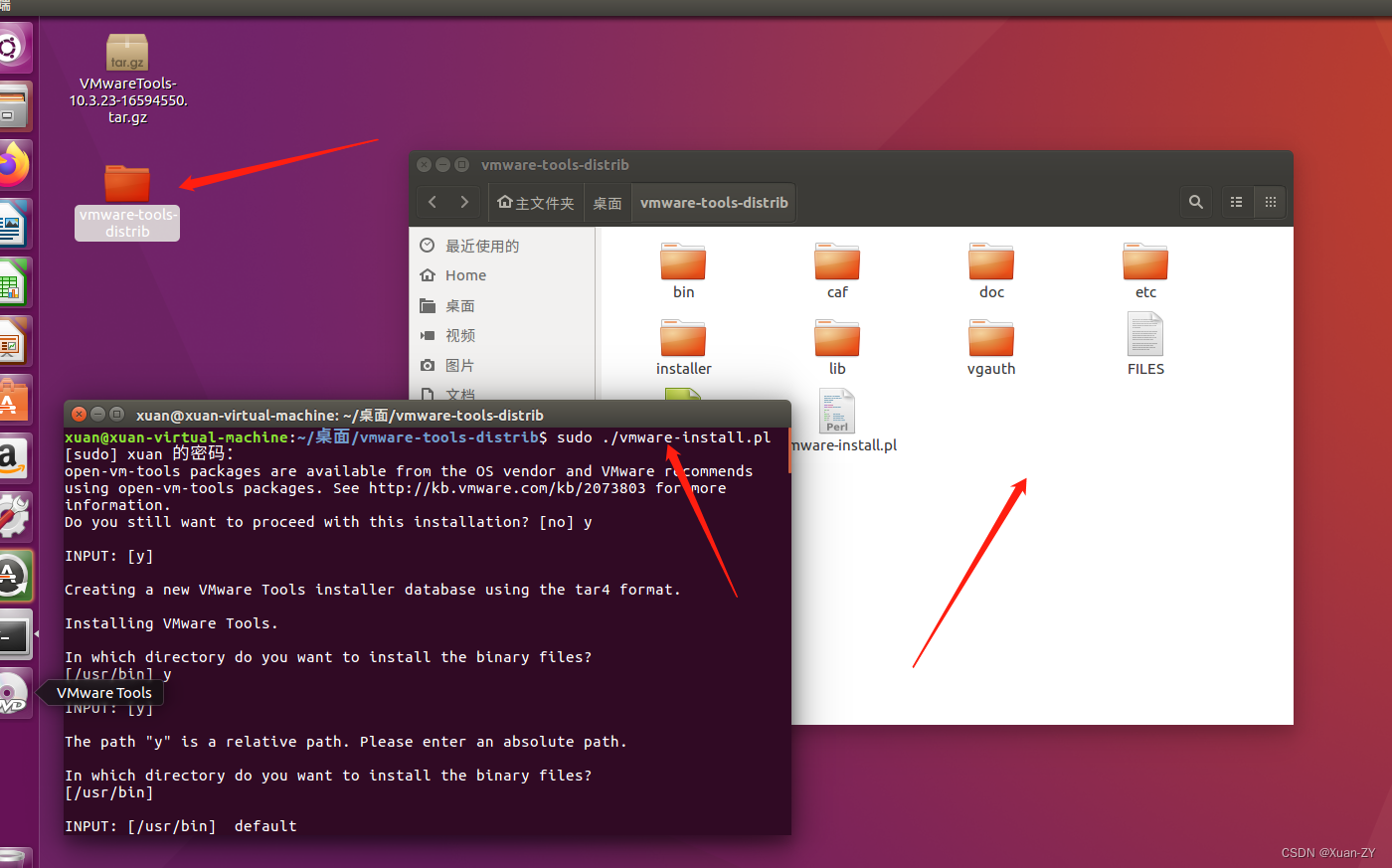

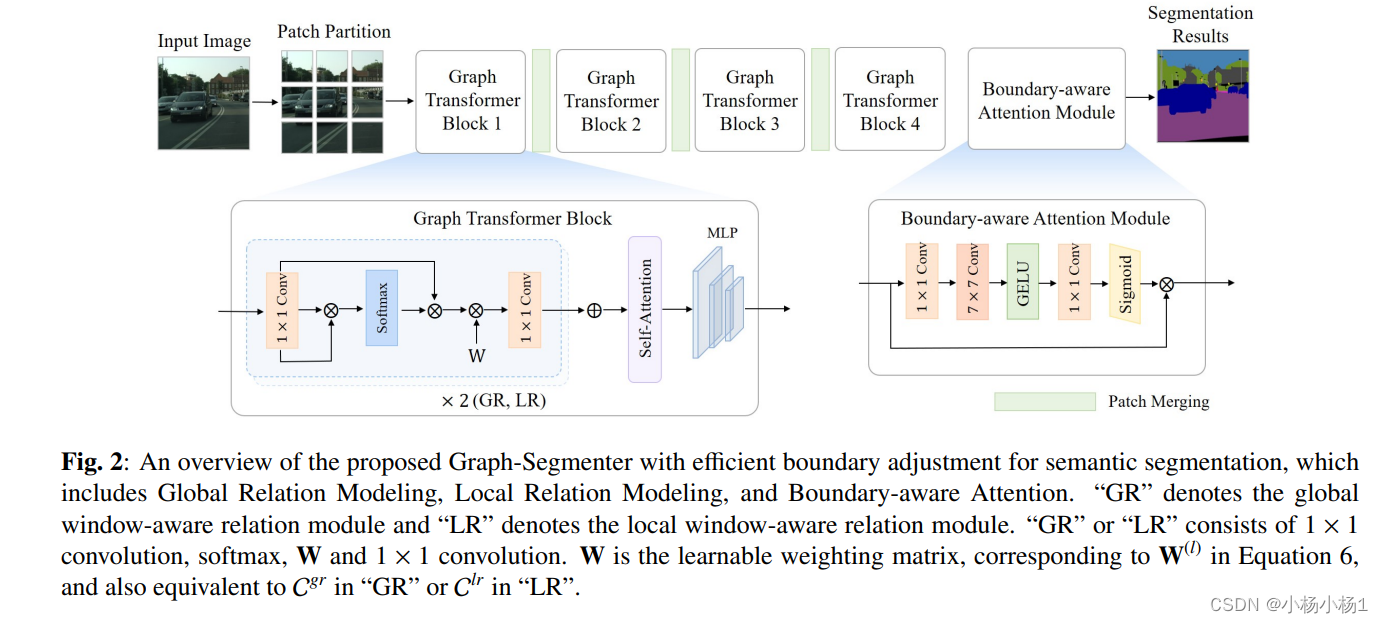

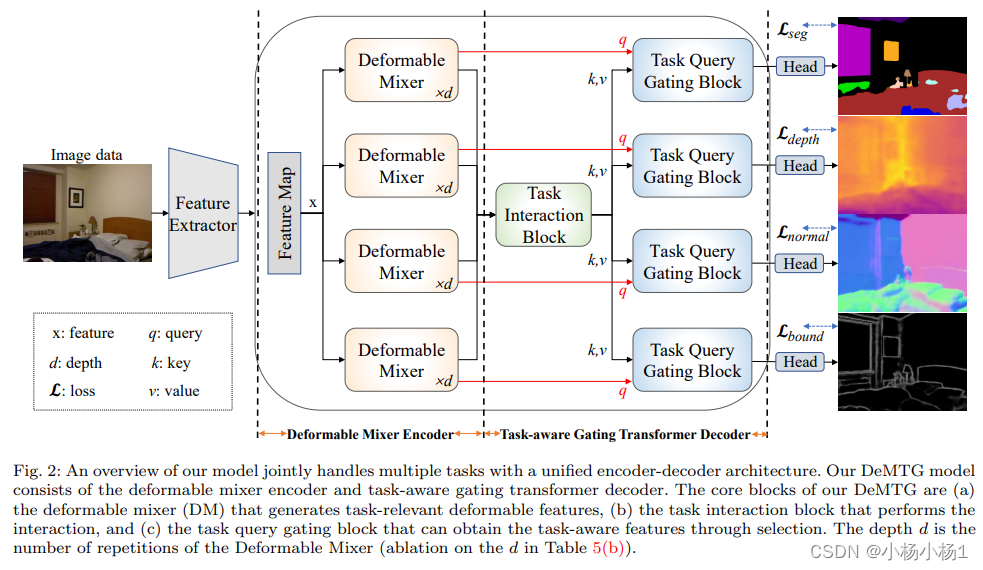

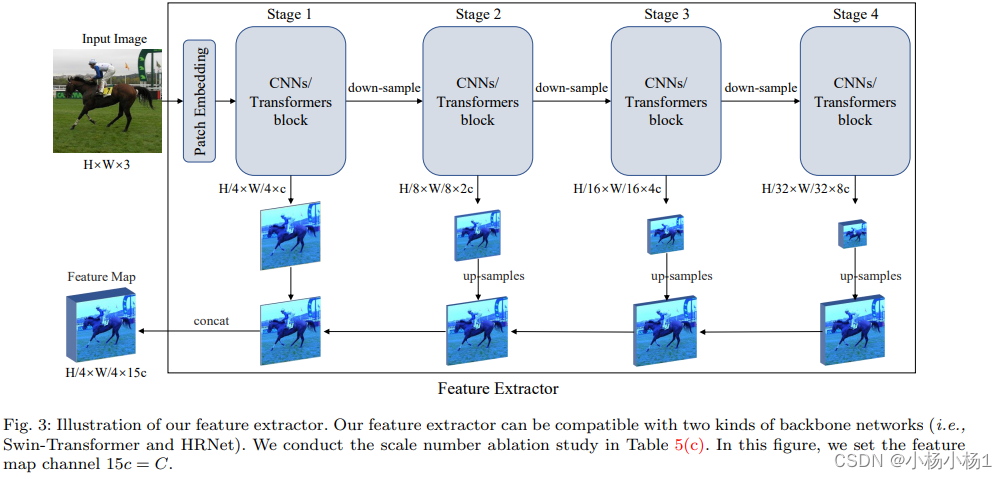

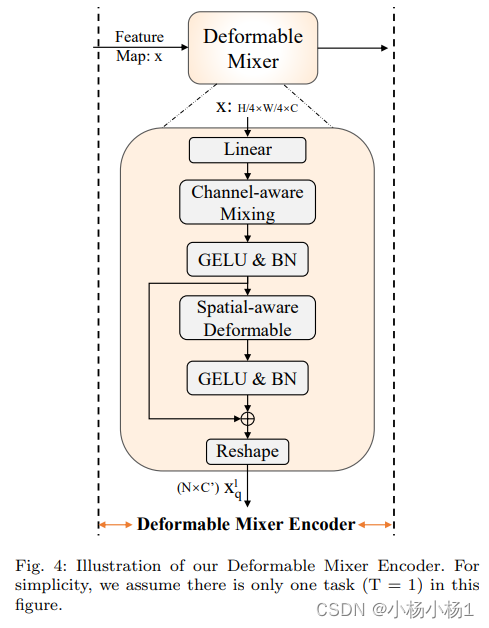

Deformable Mixer Transformer with Gating for Multi-Task Learning of Dense Prediction

Method

Code link

This method uses mixed attention.