YOLO v5、v7、v8 模型优化

- 魔改YOLO

- yaml 文件解读

- 模型选择

- 在线做数据标注

- YOLO算法改进

- YOLOv5

- yolo.py

- yolov5.yaml

- 更换骨干网络之 SwinTransformer

- 更换骨干网络之 EfficientNet

- 优化上采样方式:轻量化算子CARAFE 替换 传统(最近邻 / 双线性 / 双立方 / 三线性 / 转置卷积)

- 优化空间金字塔池化:SPPF / SimSPPF / ASPP / RFB / SPPCSPC / SPPFCSPC 替换 SPP

- 优化训练策略:SIoU / EIoU / WIoU / Focal_xIoU / MPDIoU 替换 IoU

- 优化激活函数:SiLU、Hardswish、MemoryEfficientMish、FReLU、AconC、MetaAconC 替换 Mish函数

- 优化损失函数:多种类Loss, PolyLoss / VarifocalLoss / GFL / QualityFLoss / FL

- 优化损失函数:改进用于微小目标检测的 Normalized Gaussian Wasserstein Distance

- 引入特征融合网络 BiFPN:提高检测精度和效率

- 引入 YOLOv6 颈部 BiFusion Neck

- 引入注意力机制:在 C3 模块添加添加注意力机制

- 添加【SE】【CBAM】【 ECA 】【CA】

- 添加【SimAM】【CoTAttention】【SKAttention】【DoubleAttention】

- 添加【EffectiveSE】【GlobalContext】【GatherExcite】【MHSA】

- 添加【Triplet】【SpatialGroupEnhance】【NAM】【S2】

- 引入小目标检测:用于低分辨率图像和小物体的新 CNN 模块SPD-Conv

- 引入轻量化卷积 替换 传统卷积

- 引入独立注意力FPN+PAN结构 替换 传统卷积

- 引入 YOLOX 解耦头

- 引入 YOLOv8 的 C2f 模块

- 引入 RepVGG 重参数化模块

- 引入密集连接模块

- 模型剪枝

- 知识蒸馏

- 热力图可视化

- YOLOv7

- 更换骨干网络之 SwinTransformer

- 更换骨干网络之 EfficientNet

- 优化上采样方式:轻量化算子CARAFE 替换 传统(最近邻 / 双线性 / 双立方 / 三线性 / 转置卷积)

- 优化空间金字塔池化:SPPF / SimSPPF / ASPP / RFB / SPPCSPC / SPPFCSPC 替换 SPP

- 优化训练策略:SIoU / EIoU / WIoU / Focal_xIoU / MPDIoU 替换 IoU

- 优化激活函数:SiLU、Hardswish、MemoryEfficientMish、FReLU、AconC、MetaAconC 替换 Mish函数

- 优化标签分配策略:基于TOOD标签分配策略改进

- 优化损失函数:SIoU等结合FocalLoss应用:组成Focal-EIoU|Focal-SIoU|Focal-CIoU|Focal-GIoU、DIoU

- 优化损失函数:Wise-IoU

- 优化特征集成方法:目标检测的渐近特征金字塔网络AsymptoticFPN

- 引入 BiFPN 特征融合网络:提高检测精度和效率

- 引入 YOLOv6 颈部 BiFusion Neck

- 引入小目标检测:用于低分辨率图像和小物体的新 CNN 模块SPD-Conv

- 引入轻量化卷积 替换 传统卷积

- 引入 YOLOX 解耦头

- 引入 YOLOv8 的 C2f 模块

- 引入 RepVGG 重参数化模块

- 引入密集连接模块

- 引入用于小目标检测的归一化高斯 Wasserstein Distance Loss

- 引入Generalized Focal Loss

- 模型剪枝

- 知识蒸馏

- 热力图可视化

- YOLOv8

- 更换主干网络之 VanillaNet

- 更换主干网络之 FasterNet

- 更换骨干网络之 SwinTransformer

- 更换骨干网络之 CReToNeXt

- 更换主干之 MAE

- 更换主干之 QARepNeXt

- 更换主干之 RepLKNet

- 引入注意力机制:在 C2F 模块添加添加注意力机制

- 添加【SE】 【CBAM】【 ECA 】【CA 】

- 添加【SimAM】 【CoTAttention】【SKAttention】【DoubleAttention】

- 添加【EffectiveSE】【GlobalContext】【GatherExcite】【MHSA】

- 添加【Triplet】【SpatialGroupEnhance】【NAM】【S2】

- 添加【ParNet】【CrissCross】【GAM】【ParallelPolarized】【Sequential】

- 引入 RepVGG 重参数化模块

- 引入 SPD-Conv 替换 Conv

- 引入跨空间学习的高效多尺度注意力 Efficient Multi-Scale Attention

- 引入选择性注意力 LSK 模块

- 引入空间通道重组卷积

- 引入轻量级通用上采样算子CARAFE

- 引入全维动态卷积

- 引入 BiFPN 结构

- 引入 Slim-neck

- 引入中心化特征金字塔 EVC 模块

- 引入渐进特征金字塔网络 AFPN 结构

- 引入大目标检测头、小目标检测头

- 引入谷歌 Lion 优化器

- 引入小目标检测结构:CBiF、BiFB

- 优化FPN结构

- 优化损失函数:FocalLoss结合变种IoU套装

- 优化损失函数:Wise-IoU

- 优化损失函数:遮挡损失函数 Repulsion Loss

- 优化卷积:PWConv

- 优化 EfficientRep 结构:结合硬件感知神经网络设计的 Repvgg 的 ConvNet 网络结构

- 优化特征集成方法:目标检测的渐近特征金字塔网络AsymptoticFPN

- 优化检测方法:EfficiCLNMS

魔改YOLO

yaml 文件解读

比如我们用 V8 做一个分类任务,那我们需要看 V8分类模型 的 .yaml 文件。

yolov8-cls.yaml(ultralytics/models/v8/yolov8-cls.yaml)

# Parameters

nc: 1000 # 数据集有多少类别

scales: # 调整YOLO模型的深度、宽度和通道数,通过选择不同的规模,可以根据具体的需求来调整模型的大小和性能,从而实现更好的检测结果。

# 网络规模:[深度、宽度和通道数]

n: [0.33, 0.25, 1024] # n: 较小的规模

s: [0.33, 0.50, 1024] # s: 小规模

m: [0.67, 0.75, 1024] # m: 中等规模

l: [1.00, 1.00, 1024] # l: 大规模

x: [1.00, 1.25, 1024] # x: 最大规模

# YOLOv8.0n backbone

backbone:

# [ 从哪层获取输入(-1是上一层,[-1,6]是从上一层和第6层), 模块重复次数, 模块名字(Conv是卷积层), args]

- [-1, 1, Conv, [64, 3, 2]] # 0-P1/2 第 0 层:P1是第1特征层,缩小为输入图像的1/2,64代表输出通道数,3代表卷积核大小k,2代表stride步长

- [-1, 1, Conv, [128, 3, 2]] # 1-P2/4 第 1 层:P2是第2特征层,缩小为输入图像的1/4,128代表输出通道数,3代表卷积核大小k,2代表stride步长

- [-1, 3, C2f, [128, True]] 第 2 层:本层是C2f模块,3代表本层重复3次。128代表输出通道数,True表示Bottleneck有shortcut。

- [-1, 1, Conv, [256, 3, 2]] # 3-P3/8 P3是第3特征层,缩小为输入图像的1/8

- [-1, 6, C2f, [256, True]]

- [-1, 1, Conv, [512, 3, 2]] # 5-P4/16 P4是第4特征层,缩小为输入图像的1/16

- [-1, 6, C2f, [512, True]]

- [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32 P5是第5特征层,缩小为输入图像的1/32

- [-1, 3, C2f, [1024, True]]

# YOLOv8.0n head

head:

- [-1, 1, Classify, [nc]] # Classify

yaml 文件分为:

- 参数部分:nc(数据集类别数量)、

- 网络结构部分:from(从哪层获取输入), repeats(模块重复次数), module(模块名字), args

- 头部部分

模型选择

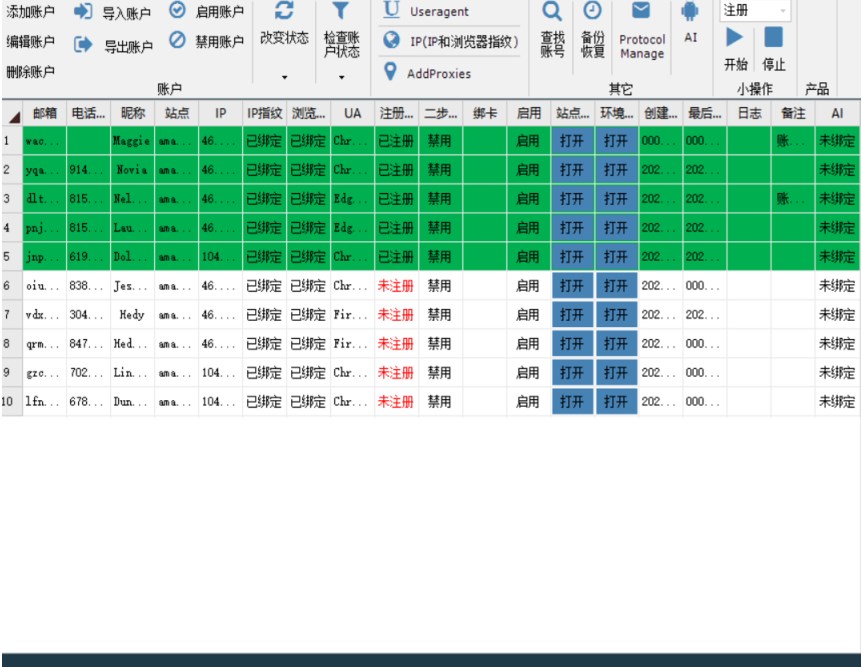

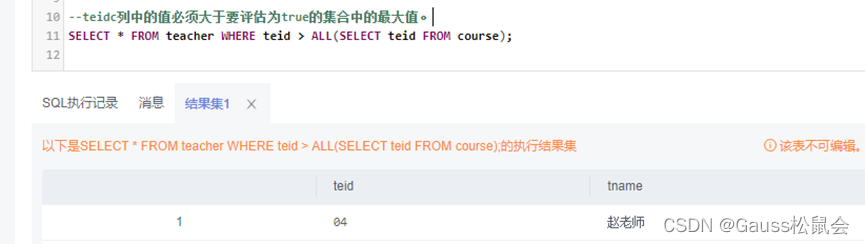

在线做数据标注

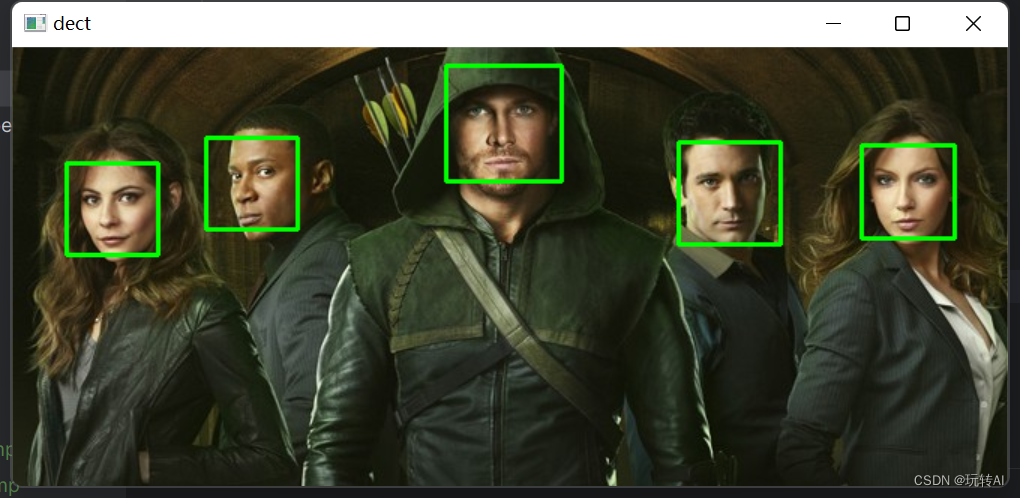

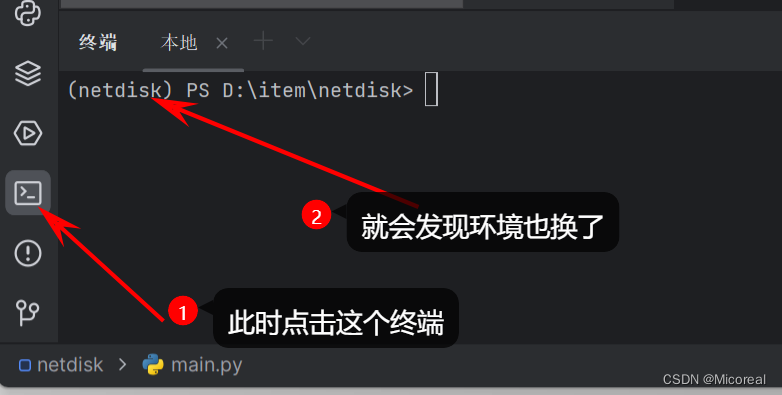

由于 Labelimg 和 Labelme 安装起来比较麻烦,我们使用在线标注工具make sense:https://www.makesense.ai

准备类别数据集,每添加一个类别文件夹:

在添加标签:

把图中类别标注出来:

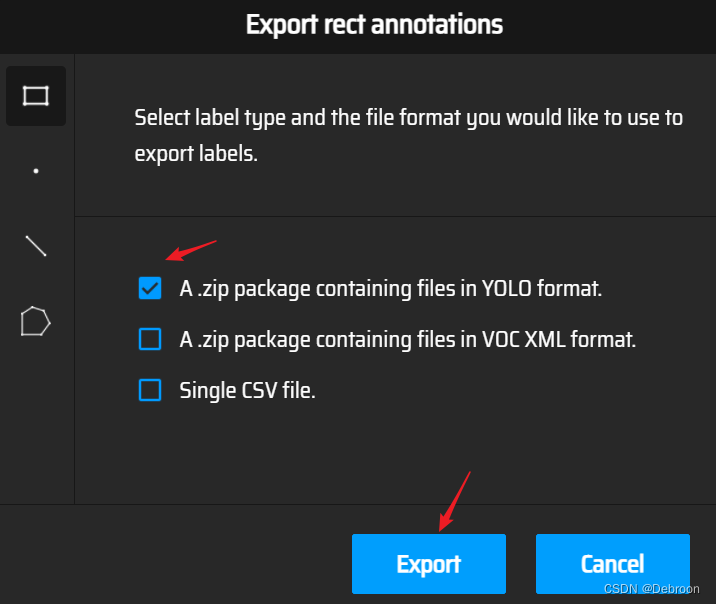

点选 Actions -> Export Annotations 导出标注:

数据标注文件:

0 0.329611 0.329853 0.339493 0.259214

0 0.698680 0.656634 0.339493 0.266585

0 0.770694 0.244472 0.318917 0.194103

0 0.813131 0.104423 0.073300 0.063882

类别标签 目标中心点坐标x 目标中心点坐标y 宽度 高度

类别标签:此处的序号是按照Make Sense中的标签"顺序"排列的,0 是第一个类别

YOLO算法改进

YOLO算法改进,无非六方面:

- 更快更强的网络架构

- 更有效的特征集成方法

- 更准确的检测方法

- 更精确的损失函数

- 更有效的标签分配方法

- 更有效的训练方法

YOLOv5

yolo.py

ultralytics/yolo5/models/yolo.py:

import argparse

import contextlib

import os

import platform

import sys

from copy import deepcopy

from pathlib import Path

FILE = Path(__file__).resolve()

ROOT = FILE.parents[1] # YOLOv5 root directory

if str(ROOT) not in sys.path:

sys.path.append(str(ROOT)) # add ROOT to PATH

if platform.system() != 'Windows':

ROOT = Path(os.path.relpath(ROOT, Path.cwd())) # relative

from models.common import * # noqa

from models.experimental import * # noqa

from utils.autoanchor import check_anchor_order

from utils.general import LOGGER, check_version, check_yaml, make_divisible, print_args

from utils.plots import feature_visualization

from utils.torch_utils import (fuse_conv_and_bn, initialize_weights, model_info, profile, scale_img, select_device,

time_sync)

try:

import thop # for FLOPs computation

except ImportError:

thop = None

class Detect(nn.Module):

# YOLOv5 Detect head for detection models

stride = None # strides computed during build

dynamic = False # force grid reconstruction

export = False # export mode

def __init__(self, nc=80, anchors=(), ch=(), inplace=True): # detection layer

super().__init__()

self.nc = nc # number of classes

self.no = nc + 5 # number of outputs per anchor

self.nl = len(anchors) # number of detection layers

self.na = len(anchors[0]) // 2 # number of anchors

self.grid = [torch.empty(0) for _ in range(self.nl)] # init grid

self.anchor_grid = [torch.empty(0) for _ in range(self.nl)] # init anchor grid

self.register_buffer('anchors', torch.tensor(anchors).float().view(self.nl, -1, 2)) # shape(nl,na,2)

self.m = nn.ModuleList(nn.Conv2d(x, self.no * self.na, 1) for x in ch) # output conv

self.inplace = inplace # use inplace ops (e.g. slice assignment)

def forward(self, x):

z = [] # inference output

for i in range(self.nl):

x[i] = self.m[i](x[i]) # conv

bs, _, ny, nx = x[i].shape # x(bs,255,20,20) to x(bs,3,20,20,85)

x[i] = x[i].view(bs, self.na, self.no, ny, nx).permute(0, 1, 3, 4, 2).contiguous()

if not self.training: # inference

if self.dynamic or self.grid[i].shape[2:4] != x[i].shape[2:4]:

self.grid[i], self.anchor_grid[i] = self._make_grid(nx, ny, i)

if isinstance(self, Segment): # (boxes + masks)

xy, wh, conf, mask = x[i].split((2, 2, self.nc + 1, self.no - self.nc - 5), 4)

xy = (xy.sigmoid() * 2 + self.grid[i]) * self.stride[i] # xy

wh = (wh.sigmoid() * 2) ** 2 * self.anchor_grid[i] # wh

y = torch.cat((xy, wh, conf.sigmoid(), mask), 4)

else: # Detect (boxes only)

xy, wh, conf = x[i].sigmoid().split((2, 2, self.nc + 1), 4)

xy = (xy * 2 + self.grid[i]) * self.stride[i] # xy

wh = (wh * 2) ** 2 * self.anchor_grid[i] # wh

y = torch.cat((xy, wh, conf), 4)

z.append(y.view(bs, self.na * nx * ny, self.no))

return x if self.training else (torch.cat(z, 1), ) if self.export else (torch.cat(z, 1), x)

def _make_grid(self, nx=20, ny=20, i=0, torch_1_10=check_version(torch.__version__, '1.10.0')):

d = self.anchors[i].device

t = self.anchors[i].dtype

shape = 1, self.na, ny, nx, 2 # grid shape

y, x = torch.arange(ny, device=d, dtype=t), torch.arange(nx, device=d, dtype=t)

yv, xv = torch.meshgrid(y, x, indexing='ij') if torch_1_10 else torch.meshgrid(y, x) # torch>=0.7 compatibility

grid = torch.stack((xv, yv), 2).expand(shape) - 0.5 # add grid offset, i.e. y = 2.0 * x - 0.5

anchor_grid = (self.anchors[i] * self.stride[i]).view((1, self.na, 1, 1, 2)).expand(shape)

return grid, anchor_grid

class Segment(Detect):

# YOLOv5 Segment head for segmentation models

def __init__(self, nc=80, anchors=(), nm=32, npr=256, ch=(), inplace=True):

super().__init__(nc, anchors, ch, inplace)

self.nm = nm # number of masks

self.npr = npr # number of protos

self.no = 5 + nc + self.nm # number of outputs per anchor

self.m = nn.ModuleList(nn.Conv2d(x, self.no * self.na, 1) for x in ch) # output conv

self.proto = Proto(ch[0], self.npr, self.nm) # protos

self.detect = Detect.forward

def forward(self, x):

p = self.proto(x[0])

x = self.detect(self, x)

return (x, p) if self.training else (x[0], p) if self.export else (x[0], p, x[1])

class BaseModel(nn.Module):

# YOLOv5 base model

def forward(self, x, profile=False, visualize=False):

return self._forward_once(x, profile, visualize) # single-scale inference, train

def _forward_once(self, x, profile=False, visualize=False):

y, dt = [], [] # outputs

for m in self.model:

if m.f != -1: # if not from previous layer

x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f] # from earlier layers

if profile:

self._profile_one_layer(m, x, dt)

x = m(x) # run

y.append(x if m.i in self.save else None) # save output

if visualize:

feature_visualization(x, m.type, m.i, save_dir=visualize)

return x

def _profile_one_layer(self, m, x, dt):

c = m == self.model[-1] # is final layer, copy input as inplace fix

o = thop.profile(m, inputs=(x.copy() if c else x, ), verbose=False)[0] / 1E9 * 2 if thop else 0 # FLOPs

t = time_sync()

for _ in range(10):

m(x.copy() if c else x)

dt.append((time_sync() - t) * 100)

if m == self.model[0]:

LOGGER.info(f"{'time (ms)':>10s} {'GFLOPs':>10s} {'params':>10s} module")

LOGGER.info(f'{dt[-1]:10.2f} {o:10.2f} {m.np:10.0f} {m.type}')

if c:

LOGGER.info(f"{sum(dt):10.2f} {'-':>10s} {'-':>10s} Total")

def fuse(self): # fuse model Conv2d() + BatchNorm2d() layers

LOGGER.info('Fusing layers... ')

for m in self.model.modules():

if isinstance(m, (Conv, DWConv)) and hasattr(m, 'bn'):

m.conv = fuse_conv_and_bn(m.conv, m.bn) # update conv

delattr(m, 'bn') # remove batchnorm

m.forward = m.forward_fuse # update forward

self.info()

return self

def info(self, verbose=False, img_size=640): # print model information

model_info(self, verbose, img_size)

def _apply(self, fn):

# Apply to(), cpu(), cuda(), half() to model tensors that are not parameters or registered buffers

self = super()._apply(fn)

m = self.model[-1] # Detect()

if isinstance(m, (Detect, Segment)):

m.stride = fn(m.stride)

m.grid = list(map(fn, m.grid))

if isinstance(m.anchor_grid, list):

m.anchor_grid = list(map(fn, m.anchor_grid))

return self

class DetectionModel(BaseModel):

# YOLOv5 detection model

def __init__(self, cfg='yolov5s.yaml', ch=3, nc=None, anchors=None): # model, input channels, number of classes

super().__init__()

if isinstance(cfg, dict):

self.yaml = cfg # model dict

else: # is *.yaml

import yaml # for torch hub

self.yaml_file = Path(cfg).name

with open(cfg, encoding='ascii', errors='ignore') as f:

self.yaml = yaml.safe_load(f) # model dict

# Define model

ch = self.yaml['ch'] = self.yaml.get('ch', ch) # input channels

if nc and nc != self.yaml['nc']:

LOGGER.info(f"Overriding model.yaml nc={self.yaml['nc']} with nc={nc}")

self.yaml['nc'] = nc # override yaml value

if anchors:

LOGGER.info(f'Overriding model.yaml anchors with anchors={anchors}')

self.yaml['anchors'] = round(anchors) # override yaml value

self.model, self.save = parse_model(deepcopy(self.yaml), ch=[ch]) # model, savelist

self.names = [str(i) for i in range(self.yaml['nc'])] # default names

self.inplace = self.yaml.get('inplace', True)

# Build strides, anchors

m = self.model[-1] # Detect()

if isinstance(m, (Detect, Segment)):

s = 256 # 2x min stride

m.inplace = self.inplace

forward = lambda x: self.forward(x)[0] if isinstance(m, Segment) else self.forward(x)

m.stride = torch.tensor([s / x.shape[-2] for x in forward(torch.zeros(1, ch, s, s))]) # forward

check_anchor_order(m)

m.anchors /= m.stride.view(-1, 1, 1)

self.stride = m.stride

self._initialize_biases() # only run once

# Init weights, biases

initialize_weights(self)

self.info()

LOGGER.info('')

def forward(self, x, augment=False, profile=False, visualize=False):

if augment:

return self._forward_augment(x) # augmented inference, None

return self._forward_once(x, profile, visualize) # single-scale inference, train

def _forward_augment(self, x):

img_size = x.shape[-2:] # height, width

s = [1, 0.83, 0.67] # scales

f = [None, 3, None] # flips (2-ud, 3-lr)

y = [] # outputs

for si, fi in zip(s, f):

xi = scale_img(x.flip(fi) if fi else x, si, gs=int(self.stride.max()))

yi = self._forward_once(xi)[0] # forward

# cv2.imwrite(f'img_{si}.jpg', 255 * xi[0].cpu().numpy().transpose((1, 2, 0))[:, :, ::-1]) # save

yi = self._descale_pred(yi, fi, si, img_size)

y.append(yi)

y = self._clip_augmented(y) # clip augmented tails

return torch.cat(y, 1), None # augmented inference, train

def _descale_pred(self, p, flips, scale, img_size):

# de-scale predictions following augmented inference (inverse operation)

if self.inplace:

p[..., :4] /= scale # de-scale

if flips == 2:

p[..., 1] = img_size[0] - p[..., 1] # de-flip ud

elif flips == 3:

p[..., 0] = img_size[1] - p[..., 0] # de-flip lr

else:

x, y, wh = p[..., 0:1] / scale, p[..., 1:2] / scale, p[..., 2:4] / scale # de-scale

if flips == 2:

y = img_size[0] - y # de-flip ud

elif flips == 3:

x = img_size[1] - x # de-flip lr

p = torch.cat((x, y, wh, p[..., 4:]), -1)

return p

def _clip_augmented(self, y):

# Clip YOLOv5 augmented inference tails

nl = self.model[-1].nl # number of detection layers (P3-P5)

g = sum(4 ** x for x in range(nl)) # grid points

e = 1 # exclude layer count

i = (y[0].shape[1] // g) * sum(4 ** x for x in range(e)) # indices

y[0] = y[0][:, :-i] # large

i = (y[-1].shape[1] // g) * sum(4 ** (nl - 1 - x) for x in range(e)) # indices

y[-1] = y[-1][:, i:] # small

return y

def _initialize_biases(self, cf=None): # initialize biases into Detect(), cf is class frequency

# https://arxiv.org/abs/1708.02002 section 3.3

# cf = torch.bincount(torch.tensor(np.concatenate(dataset.labels, 0)[:, 0]).long(), minlength=nc) + 1.

m = self.model[-1] # Detect() module

for mi, s in zip(m.m, m.stride): # from

b = mi.bias.view(m.na, -1) # conv.bias(255) to (3,85)

b.data[:, 4] += math.log(8 / (640 / s) ** 2) # obj (8 objects per 640 image)

b.data[:, 5:5 + m.nc] += math.log(0.6 / (m.nc - 0.99999)) if cf is None else torch.log(cf / cf.sum()) # cls

mi.bias = torch.nn.Parameter(b.view(-1), requires_grad=True)

Model = DetectionModel # retain YOLOv5 'Model' class for backwards compatibility

class SegmentationModel(DetectionModel):

# YOLOv5 segmentation model

def __init__(self, cfg='yolov5s-seg.yaml', ch=3, nc=None, anchors=None):

super().__init__(cfg, ch, nc, anchors)

class ClassificationModel(BaseModel):

# YOLOv5 classification model

def __init__(self, cfg=None, model=None, nc=1000, cutoff=10): # yaml, model, number of classes, cutoff index

super().__init__()

self._from_detection_model(model, nc, cutoff) if model is not None else self._from_yaml(cfg)

def _from_detection_model(self, model, nc=1000, cutoff=10):

# Create a YOLOv5 classification model from a YOLOv5 detection model

if isinstance(model, DetectMultiBackend):

model = model.model # unwrap DetectMultiBackend

model.model = model.model[:cutoff] # backbone

m = model.model[-1] # last layer

ch = m.conv.in_channels if hasattr(m, 'conv') else m.cv1.conv.in_channels # ch into module

c = Classify(ch, nc) # Classify()

c.i, c.f, c.type = m.i, m.f, 'models.common.Classify' # index, from, type

model.model[-1] = c # replace

self.model = model.model

self.stride = model.stride

self.save = []

self.nc = nc

def _from_yaml(self, cfg):

# Create a YOLOv5 classification model from a *.yaml file

self.model = None

def parse_model(d, ch): # model_dict, input_channels(3)

# Parse a YOLOv5 model.yaml dictionary

LOGGER.info(f"\n{'':>3}{'from':>18}{'n':>3}{'params':>10} {'module':<40}{'arguments':<30}")

anchors, nc, gd, gw, act = d['anchors'], d['nc'], d['depth_multiple'], d['width_multiple'], d.get('activation')

if act:

Conv.default_act = eval(act) # redefine default activation, i.e. Conv.default_act = nn.SiLU()

LOGGER.info(f"{colorstr('activation:')} {act}") # print

na = (len(anchors[0]) // 2) if isinstance(anchors, list) else anchors # number of anchors

no = na * (nc + 5) # number of outputs = anchors * (classes + 5)

layers, save, c2 = [], [], ch[-1] # layers, savelist, ch out

for i, (f, n, m, args) in enumerate(d['backbone'] + d['head']): # from, number, module, args

m = eval(m) if isinstance(m, str) else m # eval strings

for j, a in enumerate(args):

with contextlib.suppress(NameError):

args[j] = eval(a) if isinstance(a, str) else a # eval strings

n = n_ = max(round(n * gd), 1) if n > 1 else n # depth gain

if m in {

Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,

BottleneckCSP, C3, C3TR, C3SPP, C3Ghost, nn.ConvTranspose2d, DWConvTranspose2d, C3x}:

c1, c2 = ch[f], args[0]

if c2 != no: # if not output

c2 = make_divisible(c2 * gw, 8)

args = [c1, c2, *args[1:]]

if m in {BottleneckCSP, C3, C3TR, C3Ghost, C3x}:

args.insert(2, n) # number of repeats

n = 1

elif m is nn.BatchNorm2d:

args = [ch[f]]

elif m is Concat:

c2 = sum(ch[x] for x in f)

# TODO: channel, gw, gd

elif m in {Detect, Segment}:

args.append([ch[x] for x in f])

if isinstance(args[1], int): # number of anchors

args[1] = [list(range(args[1] * 2))] * len(f)

if m is Segment:

args[3] = make_divisible(args[3] * gw, 8)

elif m is Contract:

c2 = ch[f] * args[0] ** 2

elif m is Expand:

c2 = ch[f] // args[0] ** 2

else:

c2 = ch[f]

m_ = nn.Sequential(*(m(*args) for _ in range(n))) if n > 1 else m(*args) # module

t = str(m)[8:-2].replace('__main__.', '') # module type

np = sum(x.numel() for x in m_.parameters()) # number params

m_.i, m_.f, m_.type, m_.np = i, f, t, np # attach index, 'from' index, type, number params

LOGGER.info(f'{i:>3}{str(f):>18}{n_:>3}{np:10.0f} {t:<40}{str(args):<30}') # print

save.extend(x % i for x in ([f] if isinstance(f, int) else f) if x != -1) # append to savelist

layers.append(m_)

if i == 0:

ch = []

ch.append(c2)

return nn.Sequential(*layers), sorted(save)

if __name__ == '__main__':

parser = argparse.ArgumentParser()

parser.add_argument('--cfg', type=str, default='yolov5s.yaml', help='model.yaml')

parser.add_argument('--batch-size', type=int, default=1, help='total batch size for all GPUs')

parser.add_argument('--device', default='', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')

parser.add_argument('--profile', action='store_true', help='profile model speed')

parser.add_argument('--line-profile', action='store_true', help='profile model speed layer by layer')

parser.add_argument('--test', action='store_true', help='test all yolo*.yaml')

opt = parser.parse_args()

opt.cfg = check_yaml(opt.cfg) # check YAML

print_args(vars(opt))

device = select_device(opt.device)

# Create model

im = torch.rand(opt.batch_size, 3, 640, 640).to(device)

model = Model(opt.cfg).to(device)

# Options

if opt.line_profile: # profile layer by layer

model(im, profile=True)

elif opt.profile: # profile forward-backward

results = profile(input=im, ops=[model], n=3)

elif opt.test: # test all models

for cfg in Path(ROOT / 'models').rglob('yolo*.yaml'):

try:

_ = Model(cfg)

except Exception as e:

print(f'Error in {cfg}: {e}')

else: # report fused model summary

model.fuse()

yolov5.yaml

ultralytics/models/v5/yolov5.yaml:

# Parameters

nc: 80 # number of classes

scales: # model compound scaling constants, i.e. 'model=yolov5n.yaml' will call yolov5.yaml with scale 'n'

# [depth, width, max_channels]

n: [0.33, 0.25, 1024]

s: [0.33, 0.50, 1024]

m: [0.67, 0.75, 1024]

l: [1.00, 1.00, 1024]

x: [1.33, 1.25, 1024]

# YOLOv5 v6.0 backbone

backbone:

# [from, number, module, args]

[[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

[-1, 1, Conv, [128, 3, 2]], # 1-P2/4

[-1, 3, C3, [128]],

[-1, 1, Conv, [256, 3, 2]], # 3-P3/8

[-1, 6, C3, [256]],

[-1, 1, Conv, [512, 3, 2]], # 5-P4/16

[-1, 9, C3, [512]],

[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

[-1, 3, C3, [1024]],

[-1, 1, SPPF, [1024, 5]], # 9

]

# YOLOv5 v6.0 head

head:

[[-1, 1, Conv, [512, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 6], 1, Concat, [1]], # cat backbone P4

[-1, 3, C3, [512, False]], # 13

[-1, 1, Conv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 4], 1, Concat, [1]], # cat backbone P3

[-1, 3, C3, [256, False]], # 17 (P3/8-small)

[-1, 1, Conv, [256, 3, 2]],

[[-1, 14], 1, Concat, [1]], # cat head P4

[-1, 3, C3, [512, False]], # 20 (P4/16-medium)

[-1, 1, Conv, [512, 3, 2]],

[[-1, 10], 1, Concat, [1]], # cat head P5

[-1, 3, C3, [1024, False]], # 23 (P5/32-large)

[[17, 20, 23], 1, Detect, [nc]], # Detect(P3, P4, P5)

]

更换骨干网络之 SwinTransformer

更换骨干网络之 EfficientNet

优化上采样方式:轻量化算子CARAFE 替换 传统(最近邻 / 双线性 / 双立方 / 三线性 / 转置卷积)

上采样(Upsampling)是一种图像处理中的操作,用于将低分辨率的图像或特征图增加到高分辨率。

在计算机视觉中,上采样常常用于将低分辨率的特征图恢复到原始图像的尺寸,或者在图像生成任务中生成更高分辨率的图像。

常见的上采样方式包括:

-

最近邻插值(Nearest Neighbor Interpolation):最简单的上采样方法,将低分辨率图像的每个像素复制到对应的多个像素位置,以增加图像的尺寸和分辨率。这种方法不考虑像素之间的差异,可能会导致图像的锯齿状边缘。

-

双线性插值(Bilinear Interpolation):通过在四个最近的像素之间进行插值来计算新像素的值。这种方法考虑了像素之间的差异,可以得到更平滑的上采样结果。

-

双三次插值(Bicubic Interpolation):在双线性插值的基础上进行进一步的插值计算,使用更多的像素进行插值计算,得到更平滑的上采样结果。然而,双三次插值计算量较大。

-

转置卷积(Transposed Convolution):也称为反卷积(Deconvolution),通过使用卷积操作的反向计算过程来实现上采样。转置卷积通过填充和卷积核的反向操作,将低分辨率特征图恢复到原始尺寸。

这些上采样方法在不同的任务和场景中具有不同的适用性和效果。选择合适的上采样方法取决于任务的需求、计算资源和对图像质量的要求。

- 图像超分辨率:当需要将低分辨率图像恢复到高分辨率时,双线性插值和双三次插值是常用的方法。它们能够增加图像的细节,并且计算效率较高。

- 目标检测和语义分割:在目标检测和语义分割等任务中,需要将低分辨率的特征图上采样到原始图像的尺寸。转置卷积是常用的上采样方法,因为它能够学习到更复杂的特征重建,并且能够保持较好的位置信息。

- 图像生成:在图像生成任务中,需要生成高分辨率的图像。转置卷积通常被用于将低分辨率的噪声向量映射到高分辨率图像空间。

- 图像风格转换:在图像风格转换任务中,需要将输入图像的风格转换为目标风格。双线性插值在这种情况下也是常用的上采样方法,因为它能够保持较好的图像纹理和细节。

在 YOLOv5 中,上采样模块是使用 nn.Upsample 实现的。

具体来说,YOLOv5 中使用的上采样模块包括两种类型:

-

nn.Upsample(scale_factor=2, mode=‘nearest’),这种上采样模块是通过最近邻插值实现的。

它用于将特征图的大小增加一倍,例如在 yolov5s 中将 16x16 特征图上采样到 32x32。

-

nn.ConvTranspose2d(in_channels, out_channels, kernel_size=2, stride=2),这种上采样模块是通过转置卷积实现的。

它用于将特征图的大小增加一倍,并且可以学习更加复杂的上采样方式。在 YOLOv5 中,这种上采样模块在 yolov5m、yolov5l 和 yolov5x 中使用。

把 CARAFE 添加到 common.py:

import torch.nn.functional as F

class CARAFE(nn.Module):

# CARAFE: Content-Aware ReAssembly of FEatures https://arxiv.org/pdf/1905.02188.pdf

def __init__(self, c1, c2, kernel_size=3, up_factor=2):

super(CARAFE, self).__init__()

self.kernel_size = kernel_size

self.up_factor = up_factor

self.down = nn.Conv2d(c1, c1 // 4, 1)

self.encoder = nn.Conv2d(c1 // 4, self.up_factor ** 2 * self.kernel_size ** 2,

self.kernel_size, 1, self.kernel_size // 2)

self.out = nn.Conv2d(c1, c2, 1)

def forward(self, x):

N, C, H, W = x.size()

# N,C,H,W -> N,C,delta*H,delta*W

# kernel prediction module

kernel_tensor = self.down(x) # (N, Cm, H, W)

kernel_tensor = self.encoder(kernel_tensor) # (N, S^2 * Kup^2, H, W)

kernel_tensor = F.pixel_shuffle(kernel_tensor, self.up_factor) # (N, S^2 * Kup^2, H, W)->(N, Kup^2, S*H, S*W)

kernel_tensor = F.softmax(kernel_tensor, dim=1) # (N, Kup^2, S*H, S*W)

kernel_tensor = kernel_tensor.unfold(2, self.up_factor, step=self.up_factor) # (N, Kup^2, H, W*S, S)

kernel_tensor = kernel_tensor.unfold(3, self.up_factor, step=self.up_factor) # (N, Kup^2, H, W, S, S)

kernel_tensor = kernel_tensor.reshape(N, self.kernel_size ** 2, H, W, self.up_factor ** 2) # (N, Kup^2, H, W, S^2)

kernel_tensor = kernel_tensor.permute(0, 2, 3, 1, 4) # (N, H, W, Kup^2, S^2)

# content-aware reassembly module

# tensor.unfold: dim, size, step

x = F.pad(x, pad=(self.kernel_size // 2, self.kernel_size // 2,

self.kernel_size // 2, self.kernel_size // 2),

mode='constant', value=0) # (N, C, H+Kup//2+Kup//2, W+Kup//2+Kup//2)

x = x.unfold(2, self.kernel_size, step=1) # (N, C, H, W+Kup//2+Kup//2, Kup)

x = x.unfold(3, self.kernel_size, step=1) # (N, C, H, W, Kup, Kup)

x = x.reshape(N, C, H, W, -1) # (N, C, H, W, Kup^2)

x = x.permute(0, 2, 3, 1, 4) # (N, H, W, C, Kup^2)

out_tensor = torch.matmul(x, kernel_tensor) # (N, H, W, C, S^2)

out_tensor = out_tensor.reshape(N, H, W, -1)

out_tensor = out_tensor.permute(0, 3, 1, 2)

out_tensor = F.pixel_shuffle(out_tensor, self.up_factor)

out_tensor = self.out(out_tensor)

return out_tensor

model/yolo.py 添加这层:

修改前:

if m in {

Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,

BottleneckCSP, C3, C3TR, C3SPP, C3Ghost, nn.ConvTranspose2d, DWConvTranspose2d, C3x}:

修改后:

if m in {

Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,

BottleneckCSP, C3, C3TR, C3SPP, C3Ghost, nn.ConvTranspose2d, DWConvTranspose2d, C3x, CARAFE}:

修改一下 ultralytics/models/v5/yolov5.yaml:

# Parameters

nc: 80 # number of classes

scales: # model compound scaling constants, i.e. 'model=yolov5n.yaml' will call yolov5.yaml with scale 'n'

# [depth, width, max_channels]

n: [0.33, 0.25, 1024]

s: [0.33, 0.50, 1024]

m: [0.67, 0.75, 1024]

l: [1.00, 1.00, 1024]

x: [1.33, 1.25, 1024]

# YOLOv5 v6.0 backbone

backbone:

# [from, number, module, args]

[[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

[-1, 1, Conv, [128, 3, 2]], # 1-P2/4

[-1, 3, C3, [128]],

[-1, 1, Conv, [256, 3, 2]], # 3-P3/8

[-1, 6, C3, [256]],

[-1, 1, Conv, [512, 3, 2]], # 5-P4/16

[-1, 9, C3, [512]],

[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

[-1, 3, C3, [1024]],

[-1, 1, SPPF, [1024, 5]], # 9

]

# YOLOv5 v6.0 head

head:

[[-1, 1, Conv, [512, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 6], 1, Concat, [1]], # cat backbone P4

[-1, 3, C3, [512, False]], # 13

[-1, 1, Conv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 4], 1, Concat, [1]], # cat backbone P3

[-1, 3, C3, [256, False]], # 17 (P3/8-small)

[-1, 1, Conv, [256, 3, 2]],

[[-1, 14], 1, Concat, [1]], # cat head P4

[-1, 3, C3, [512, False]], # 20 (P4/16-medium)

[-1, 1, Conv, [512, 3, 2]],

[[-1, 10], 1, Concat, [1]], # cat head P5

[-1, 3, C3, [1024, False]], # 23 (P5/32-large)

[[17, 20, 23], 1, Detect, [nc]], # Detect(P3, P4, P5)

]

优化空间金字塔池化:SPPF / SimSPPF / ASPP / RFB / SPPCSPC / SPPFCSPC 替换 SPP

SPP:

class SPP(nn.Module):

# Spatial Pyramid Pooling (SPP) layer https://arxiv.org/abs/1406.4729

def __init__(self, c1, c2, k=(5, 9, 13)):

super().__init__()

c_ = c1 // 2 # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c_ * (len(k) + 1), c2, 1, 1)

self.m = nn.ModuleList([nn.MaxPool2d(kernel_size=x, stride=1, padding=x // 2) for x in k])

def forward(self, x):

x = self.cv1(x)

with warnings.catch_warnings():

warnings.simplefilter('ignore') # suppress torch 1.9.0 max_pool2d() warning

return self.cv2(torch.cat([x] + [m(x) for m in self.m], 1))

SPPF:

class SPPF(nn.Module):

# Spatial Pyramid Pooling - Fast (SPPF) layer for YOLOv5 by Glenn Jocher

def __init__(self, c1, c2, k=5): # equivalent to SPP(k=(5, 9, 13))

super().__init__()

c_ = c1 // 2 # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c_ * 4, c2, 1, 1)

self.m = nn.MaxPool2d(kernel_size=k, stride=1, padding=k // 2)

def forward(self, x):

x = self.cv1(x)

with warnings.catch_warnings():

warnings.simplefilter('ignore') # suppress torch 1.9.0 max_pool2d() warning

y1 = self.m(x)

y2 = self.m(y1)

return self.cv2(torch.cat((x, y1, y2, self.m(y2)), 1))

SimSPPF:

class SimConv(nn.Module):

'''Normal Conv with ReLU activation'''

def __init__(self, in_channels, out_channels, kernel_size, stride, groups=1, bias=False):

super().__init__()

padding = kernel_size // 2

self.conv = nn.Conv2d(

in_channels,

out_channels,

kernel_size=kernel_size,

stride=stride,

padding=padding,

groups=groups,

bias=bias,

)

self.bn = nn.BatchNorm2d(out_channels)

self.act = nn.ReLU()

def forward(self, x):

return self.act(self.bn(self.conv(x)))

def forward_fuse(self, x):

return self.act(self.conv(x))

class SimSPPF(nn.Module):

'''Simplified SPPF with ReLU activation'''

def __init__(self, in_channels, out_channels, kernel_size=5):

super().__init__()

c_ = in_channels // 2 # hidden channels

self.cv1 = SimConv(in_channels, c_, 1, 1)

self.cv2 = SimConv(c_ * 4, out_channels, 1, 1)

self.m = nn.MaxPool2d(kernel_size=kernel_size, stride=1, padding=kernel_size // 2)

def forward(self, x):

x = self.cv1(x)

with warnings.catch_warnings():

warnings.simplefilter('ignore')

y1 = self.m(x)

y2 = self.m(y1)

return self.cv2(torch.cat([x, y1, y2, self.m(y2)], 1))

ASPP:

# without BN version

class ASPP(nn.Module):

def __init__(self, in_channel=512, out_channel=256):

super(ASPP, self).__init__()

self.mean = nn.AdaptiveAvgPool2d((1, 1)) # (1,1)means ouput_dim

self.conv = nn.Conv2d(in_channel,out_channel, 1, 1)

self.atrous_block1 = nn.Conv2d(in_channel, out_channel, 1, 1)

self.atrous_block6 = nn.Conv2d(in_channel, out_channel, 3, 1, padding=6, dilation=6)

self.atrous_block12 = nn.Conv2d(in_channel, out_channel, 3, 1, padding=12, dilation=12)

self.atrous_block18 = nn.Conv2d(in_channel, out_channel, 3, 1, padding=18, dilation=18)

self.conv_1x1_output = nn.Conv2d(out_channel * 5, out_channel, 1, 1)

def forward(self, x):

size = x.shape[2:]

image_features = self.mean(x)

image_features = self.conv(image_features)

image_features = F.upsample(image_features, size=size, mode='bilinear')

atrous_block1 = self.atrous_block1(x)

atrous_block6 = self.atrous_block6(x)

atrous_block12 = self.atrous_block12(x)

atrous_block18 = self.atrous_block18(x)

net = self.conv_1x1_output(torch.cat([image_features, atrous_block1, atrous_block6,

atrous_block12, atrous_block18], dim=1))

return net

RFB:

class BasicConv(nn.Module):

def __init__(self, in_planes, out_planes, kernel_size, stride=1, padding=0, dilation=1, groups=1, relu=True, bn=True):

super(BasicConv, self).__init__()

self.out_channels = out_planes

if bn:

self.conv = nn.Conv2d(in_planes, out_planes, kernel_size=kernel_size, stride=stride, padding=padding, dilation=dilation, groups=groups, bias=False)

self.bn = nn.BatchNorm2d(out_planes, eps=1e-5, momentum=0.01, affine=True)

self.relu = nn.ReLU(inplace=True) if relu else None

else:

self.conv = nn.Conv2d(in_planes, out_planes, kernel_size=kernel_size, stride=stride, padding=padding, dilation=dilation, groups=groups, bias=True)

self.bn = None

self.relu = nn.ReLU(inplace=True) if relu else None

def forward(self, x):

x = self.conv(x)

if self.bn is not None:

x = self.bn(x)

if self.relu is not None:

x = self.relu(x)

return x

class BasicRFB(nn.Module):

def __init__(self, in_planes, out_planes, stride=1, scale=0.1, map_reduce=8, vision=1, groups=1):

super(BasicRFB, self).__init__()

self.scale = scale

self.out_channels = out_planes

inter_planes = in_planes // map_reduce

self.branch0 = nn.Sequential(

BasicConv(in_planes, inter_planes, kernel_size=1, stride=1, groups=groups, relu=False),

BasicConv(inter_planes, 2 * inter_planes, kernel_size=(3, 3), stride=stride, padding=(1, 1), groups=groups),

BasicConv(2 * inter_planes, 2 * inter_planes, kernel_size=3, stride=1, padding=vision, dilation=vision, relu=False, groups=groups)

)

self.branch1 = nn.Sequential(

BasicConv(in_planes, inter_planes, kernel_size=1, stride=1, groups=groups, relu=False),

BasicConv(inter_planes, 2 * inter_planes, kernel_size=(3, 3), stride=stride, padding=(1, 1), groups=groups),

BasicConv(2 * inter_planes, 2 * inter_planes, kernel_size=3, stride=1, padding=vision + 2, dilation=vision + 2, relu=False, groups=groups)

)

self.branch2 = nn.Sequential(

BasicConv(in_planes, inter_planes, kernel_size=1, stride=1, groups=groups, relu=False),

BasicConv(inter_planes, (inter_planes // 2) * 3, kernel_size=3, stride=1, padding=1, groups=groups),

BasicConv((inter_planes // 2) * 3, 2 * inter_planes, kernel_size=3, stride=stride, padding=1, groups=groups),

BasicConv(2 * inter_planes, 2 * inter_planes, kernel_size=3, stride=1, padding=vision + 4, dilation=vision + 4, relu=False, groups=groups)

)

self.ConvLinear = BasicConv(6 * inter_planes, out_planes, kernel_size=1, stride=1, relu=False)

self.shortcut = BasicConv(in_planes, out_planes, kernel_size=1, stride=stride, relu=False)

self.relu = nn.ReLU(inplace=False)

def forward(self, x):

x0 = self.branch0(x)

x1 = self.branch1(x)

x2 = self.branch2(x)

out = torch.cat((x0, x1, x2), 1)

out = self.ConvLinear(out)

short = self.shortcut(x)

out = out * self.scale + short

out = self.relu(out)

return out

SPPCSPC:

class SPPCSPC(nn.Module):

# CSP https://github.com/WongKinYiu/CrossStagePartialNetworks

def __init__(self, c1, c2, n=1, shortcut=False, g=1, e=0.5, k=(5, 9, 13)):

super(SPPCSPC, self).__init__()

c_ = int(2 * c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c1, c_, 1, 1)

self.cv3 = Conv(c_, c_, 3, 1)

self.cv4 = Conv(c_, c_, 1, 1)

self.m = nn.ModuleList([nn.MaxPool2d(kernel_size=x, stride=1, padding=x // 2) for x in k])

self.cv5 = Conv(4 * c_, c_, 1, 1)

self.cv6 = Conv(c_, c_, 3, 1)

self.cv7 = Conv(2 * c_, c2, 1, 1)

def forward(self, x):

x1 = self.cv4(self.cv3(self.cv1(x)))

y1 = self.cv6(self.cv5(torch.cat([x1] + [m(x1) for m in self.m], 1)))

y2 = self.cv2(x)

return self.cv7(torch.cat((y1, y2), dim=1))

优化训练策略:SIoU / EIoU / WIoU / Focal_xIoU / MPDIoU 替换 IoU

优化激活函数:SiLU、Hardswish、MemoryEfficientMish、FReLU、AconC、MetaAconC 替换 Mish函数

V5 自带的激活函数代码在:yolo5/utils/activations.py,需要换直接调用即可,不用自己写了:

import torch

import torch.nn as nn

import torch.nn.functional as F

class SiLU(nn.Module):

@staticmethod

def forward(x):

return x * torch.sigmoid(x)

class Hardswish(nn.Module):

@staticmethod

def forward(x):

return x * F.hardtanh(x + 3, 0.0, 6.0) / 6.0 # for TorchScript, CoreML and ONNX

class Mish(nn.Module):

@staticmethod

def forward(x):

return x * F.softplus(x).tanh()

class MemoryEfficientMish(nn.Module):

class F(torch.autograd.Function):

@staticmethod

def forward(ctx, x):

ctx.save_for_backward(x)

return x.mul(torch.tanh(F.softplus(x))) # x * tanh(ln(1 + exp(x)))

@staticmethod

def backward(ctx, grad_output):

x = ctx.saved_tensors[0]

sx = torch.sigmoid(x)

fx = F.softplus(x).tanh()

return grad_output * (fx + x * sx * (1 - fx * fx))

def forward(self, x):

return self.F.apply(x)

class FReLU(nn.Module):

def __init__(self, c1, k=3): # ch_in, kernel

super().__init__()

self.conv = nn.Conv2d(c1, c1, k, 1, 1, groups=c1, bias=False)

self.bn = nn.BatchNorm2d(c1)

def forward(self, x):

return torch.max(x, self.bn(self.conv(x)))

class AconC(nn.Module):

def __init__(self, c1):

super().__init__()

self.p1 = nn.Parameter(torch.randn(1, c1, 1, 1))

self.p2 = nn.Parameter(torch.randn(1, c1, 1, 1))

self.beta = nn.Parameter(torch.ones(1, c1, 1, 1))

def forward(self, x):

dpx = (self.p1 - self.p2) * x

return dpx * torch.sigmoid(self.beta * dpx) + self.p2 * x

class MetaAconC(nn.Module):

def __init__(self, c1, k=1, s=1, r=16): # ch_in, kernel, stride, r

super().__init__()

c2 = max(r, c1 // r)

self.p1 = nn.Parameter(torch.randn(1, c1, 1, 1))

self.p2 = nn.Parameter(torch.randn(1, c1, 1, 1))

self.fc1 = nn.Conv2d(c1, c2, k, s, bias=True)

self.fc2 = nn.Conv2d(c2, c1, k, s, bias=True)

def forward(self, x):

y = x.mean(dim=2, keepdims=True).mean(dim=3, keepdims=True)

beta = torch.sigmoid(self.fc2(self.fc1(y))) # bug patch BN layers removed

dpx = (self.p1 - self.p2) * x

return dpx * torch.sigmoid(beta * dpx) + self.p2 * x

修改网络中的激活函数:yolo5/models/common.py

class Conv(nn.Module):

default_act = nn.SiLU() # 默认激活函数,选择你要更换的激活函数

def __init__(self, c1, c2, k=1, s=1, p=None, g=1, d=1, act=True):

super().__init__()

self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p, d), groups=g, dilation=d, bias=False)

self.bn = nn.BatchNorm2d(c2)

self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

更换自己想要的激活函数:

default_act = nn.SiLU() # 默认激活函数

default_act = nn.Identity()

default_act = nn.Tanh()

default_act = nn.Sigmoid()

default_act = nn.ReLU()

default_act = nn.LeakyReLU(0.1)

default_act = nn.Hardswish()

default_act = nn.SiLU()

default_act = Mish()

default_act = FReLU(c2)

default_act = AconC(c2)

default_act = MetaAconC(c2)

default_act = SiLU_beta(c2)

default_act = FReLU_noBN_biasFalse(c2)

default_act = FReLU_noBN_biasTrue(c2)

class BottleneckCSP(nn.Module):

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansion

super().__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = nn.Conv2d(c1, c_, 1, 1, bias=False)

self.cv3 = nn.Conv2d(c_, c_, 1, 1, bias=False)

self.cv4 = Conv(2 * c_, c2, 1, 1)

self.bn = nn.BatchNorm2d(2 * c_) # applied to cat(cv2, cv3)

self.act = nn.SiLU() # 选择你要更换的激活函数

优化损失函数:多种类Loss, PolyLoss / VarifocalLoss / GFL / QualityFLoss / FL

优化损失函数:改进用于微小目标检测的 Normalized Gaussian Wasserstein Distance

引入特征融合网络 BiFPN:提高检测精度和效率

添加到 common.py 文件:

# BiFPN 两个特征图add操作

class BiFPN_Add2(nn.Module):

def __init__(self, c1, c2):

super(BiFPN_Add2, self).__init__()

# 设置可学习参数 nn.Parameter的作用是:将一个不可训练的类型Tensor转换成可以训练的类型parameter

# 并且会向宿主模型注册该参数 成为其一部分 即model.parameters()会包含这个parameter

# 从而在参数优化的时候可以自动一起优化

self.w = nn.Parameter(torch.ones(2, dtype=torch.float32), requires_grad=True)

self.epsilon = 0.0001

self.conv = nn.Conv2d(c1, c2, kernel_size=1, stride=1, padding=0)

self.silu = nn.SiLU()

def forward(self, x):

w = self.w

weight = w / (torch.sum(w, dim=0) + self.epsilon)

return self.conv(self.silu(weight[0] * x[0] + weight[1] * x[1]))

# 三个特征图add操作

class BiFPN_Add3(nn.Module):

def __init__(self, c1, c2):

super(BiFPN_Add3, self).__init__()

self.w = nn.Parameter(torch.ones(3, dtype=torch.float32), requires_grad=True)

self.epsilon = 0.0001

self.conv = nn.Conv2d(c1, c2, kernel_size=1, stride=1, padding=0)

self.silu = nn.SiLU()

def forward(self, x):

w = self.w

weight = w / (torch.sum(w, dim=0) + self.epsilon)

# Fast normalized fusion

return self.conv(self.silu(weight[0] * x[0] + weight[1] * x[1] + weight[2] * x[2]))

修改 yolo.py 的 parse_model:

def parse_model(d, ch):

elif m is Concat:

c2 = sum(ch[x] for x in f)

elif m in [BiFPN_Add2, BiFPN_Add3]: # 在 Concat 后,添加 BiFPN 结构

c2 = max([ch[x] for x in f)

添加 ultralytics/yolo5/train.py ,导入 BiFPN_Add3, BiFPN_Add2:

from models.common import BiFPN_Add3, BiFPN_Add2

将 BiFPN_Add2, BiFPN_Add3 的参数 w 加入 g1:

g0, g1, g2 = [], [], [] # optimizer parameter groups

for v in model.modules():

······

# BiFPN_Concat

elif isinstance(v, BiFPN_Add2) and hasattr(v, 'w') and isinstance(v.w, nn.Parameter):

g1.append(v.w)

elif isinstance(v, BiFPN_Add3) and hasattr(v, 'w') and isinstance(v.w, nn.Parameter):

g1.append(v.w)

修改 yolov5.yaml 的 Concat 换成 BiFPN_Add:

# Parameters

nc: 80 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# YOLOv5 v6.0 backbone

backbone:

# [from, number, module, args]

[[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

[-1, 1, Conv, [128, 3, 2]], # 1-P2/4

[-1, 3, C3, [128]],

[-1, 1, Conv, [256, 3, 2]], # 3-P3/8

[-1, 6, C3, [256]],

[-1, 1, Conv, [512, 3, 2]], # 5-P4/16

[-1, 9, C3, [512]],

[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

[-1, 3, C3, [1024]],

[-1, 1, SPPF, [1024, 5]], # 9

]

# YOLOv5 v6.1 BiFPN head

head:

[[-1, 1, Conv, [512, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 6], 1, BiFPN_Add2, [256, 256]], # cat backbone P4

[-1, 3, C3, [512, False]], # 13

[-1, 1, Conv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 4], 1, BiFPN_Add2, [128, 128]], # cat backbone P3

[-1, 3, C3, [256, False]], # 17

[-1, 1, Conv, [512, 3, 2]],

[[-1, 13, 6], 1, BiFPN_Add3, [256, 256]], #v5s通道数是默认参数的一半

[-1, 3, C3, [512, False]], # 20

[-1, 1, Conv, [512, 3, 2]],

[[-1, 10], 1, BiFPN_Add2, [256, 256]], # cat head P5

[-1, 3, C3, [1024, False]], # 23

[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

]

引入 YOLOv6 颈部 BiFusion Neck

引入注意力机制:在 C3 模块添加添加注意力机制

添加【SE】【CBAM】【 ECA 】【CA】

添加【SimAM】【CoTAttention】【SKAttention】【DoubleAttention】

添加【EffectiveSE】【GlobalContext】【GatherExcite】【MHSA】

添加【Triplet】【SpatialGroupEnhance】【NAM】【S2】

引入小目标检测:用于低分辨率图像和小物体的新 CNN 模块SPD-Conv

引入轻量化卷积 替换 传统卷积

引入独立注意力FPN+PAN结构 替换 传统卷积

引入 YOLOX 解耦头

引入 YOLOv8 的 C2f 模块

引入 RepVGG 重参数化模块

引入密集连接模块

模型剪枝

知识蒸馏

热力图可视化

YOLOv7

更换骨干网络之 SwinTransformer

更换骨干网络之 EfficientNet

优化上采样方式:轻量化算子CARAFE 替换 传统(最近邻 / 双线性 / 双立方 / 三线性 / 转置卷积)

优化空间金字塔池化:SPPF / SimSPPF / ASPP / RFB / SPPCSPC / SPPFCSPC 替换 SPP

优化训练策略:SIoU / EIoU / WIoU / Focal_xIoU / MPDIoU 替换 IoU

优化激活函数:SiLU、Hardswish、MemoryEfficientMish、FReLU、AconC、MetaAconC 替换 Mish函数

优化标签分配策略:基于TOOD标签分配策略改进

优化损失函数:SIoU等结合FocalLoss应用:组成Focal-EIoU|Focal-SIoU|Focal-CIoU|Focal-GIoU、DIoU

优化损失函数:Wise-IoU

优化特征集成方法:目标检测的渐近特征金字塔网络AsymptoticFPN

引入 BiFPN 特征融合网络:提高检测精度和效率

引入 YOLOv6 颈部 BiFusion Neck

引入小目标检测:用于低分辨率图像和小物体的新 CNN 模块SPD-Conv

引入轻量化卷积 替换 传统卷积

引入 YOLOX 解耦头

引入 YOLOv8 的 C2f 模块

引入 RepVGG 重参数化模块

引入密集连接模块

引入用于小目标检测的归一化高斯 Wasserstein Distance Loss

引入Generalized Focal Loss

模型剪枝

知识蒸馏

热力图可视化