OpenStack-Y版安装部署

目录

- OpenStack-Y版安装部署

- 1、环境准备

- 1.1 环境简介

- 1.2 配置hosts解析(所有节点)

- 1.3 配置时间同步

- 1.4 安装openstack客户端(控制节点执行)

- 1.5 安装部署MariaDB(控制节点执行)

- 1.6 安装部署RabbitMQ(控制节点执行)

- 1.7 安装部署Memcache(控制节点执行)

- 2、部署配置keystone(控制节点执行)

- 2.1 创建数据库与用户

- 2.2 安装配置keystone

- 2.3 修改数据库配置

- 2.4 修改apache配置

- 2.5 配置OpenStack认证环境变量

- 3、部署配置glance镜像(控制节点执行)

- 3.1 创建数据库与用户

- 3.2 创建服务项目及glance浏览用户

- 3.3 创建镜像服务API端点

- 3.4 安装glance镜像服务

- 3.5 填充数据库

- 3.6上传镜像

- 4、部署配置placement元数据(控制节点执行)

- 4.1 创建数据库与用户

- 4.2 创建服务用户

- 4.3 创建Placement API服务端点

- 4.4 安装placement服务

- 4.5 填充数据库

- 5、部署配置nova计算服务

- 5.1 创建数据库与用户给予nova使用(控制节点执行)

- 5.2 创建nova用户(控制节点执行)

- 5.3 创建计算API服务端点(控制节点执行)

- 5.4 配置nova(控制节点执行)

- 5.5 填充数据库 (控制节点执行)

- 5.6 重启相关nova服务加载配置文件 (控制节点执行)

- 5.7 安装nova-compute服务(计算节点执行)

- 5.8 配置主机发现(控制节点执行)

- 6、配置基于OVS的Neutron网络服务

- 6.1 创建数据库与用给予neutron使用(控制节点执行)

- 6.2 创建neutron用户(控制节点执行)

- 6.3 创建neutron的api端点(控制节点执行)

- 6.4 配置内核转发(全部节点执行)

- 6.5 安装ovs服务

- 6.6 配置neutron.conf文件,用于提供neutron主体服务

- 6.7 配置ml2_conf.ini文件,用户提供二层网络插件服务(控制节点执行)

- 6.8 配置openvswitch_agent.ini文件,提供ovs代理服务

- 6.9 配置l3_agent.ini文件,提供三层网络服务(控制节点执行)

- 6.10 配置dhcp_agent文件,提供dhcp动态网络服务(控制节点执行)

- 6.11 配置metadata_agent.ini文件(控制节点执行)

- 6.12 配置nova文件,主要识别neutron配置,从而能调用网络

- 6.13 填充数据库(控制节点执行)

- 6.14 配置外部网络桥接(全部节点执行)

- 6.15 重启neutron相关服务生效配置

- 6.16 校验neutron

- 7、配置dashboard仪表盘服务

- 7.1 安装服务

- 7.2 配置local_settings.py文件

- 7.3 重新加载web服务器配置

- 7.4 浏览器访问

- 8、部署配置cinder卷存储

- 8.1 创建数据库与用户给予cinder组件使用(控制节点配置执行)

- 8.2 创建cinder用户(控制节点配置执行)

- 8.3 创建cinder服务API端点(控制节点配置执行)

- 8.4 安装cinder相关服务(控制节点配置执行)

- 8.5 填充数据库(控制节点配置执行)

- 8.6 配置nova服务可调用cinder服务(控制节点配置执行)

- 8.7 重启相关服务生效配置(控制节点配置执行)

- 8.8 安装支持的实用程序包(计算节点执行)

- 8.9 创建LVM物理卷(计算节点执行)

- 8.10 修改lvm.conf文件(计算节点执行)

- 8.11 安装cinder软件包(计算节点执行)

- 8.12 指定卷路径(计算节点执行)

- 8.13 重新启动块存储卷服务,包括其依赖项(计算节点执行)

- 8.14 校验cinder(控制节点执行)

- 9、运维实战一(命令行)(控制节点执行)

- 9.1 创建路由器

- 9.2 创建Vxlan网络

- 9.3 创建Flat网络

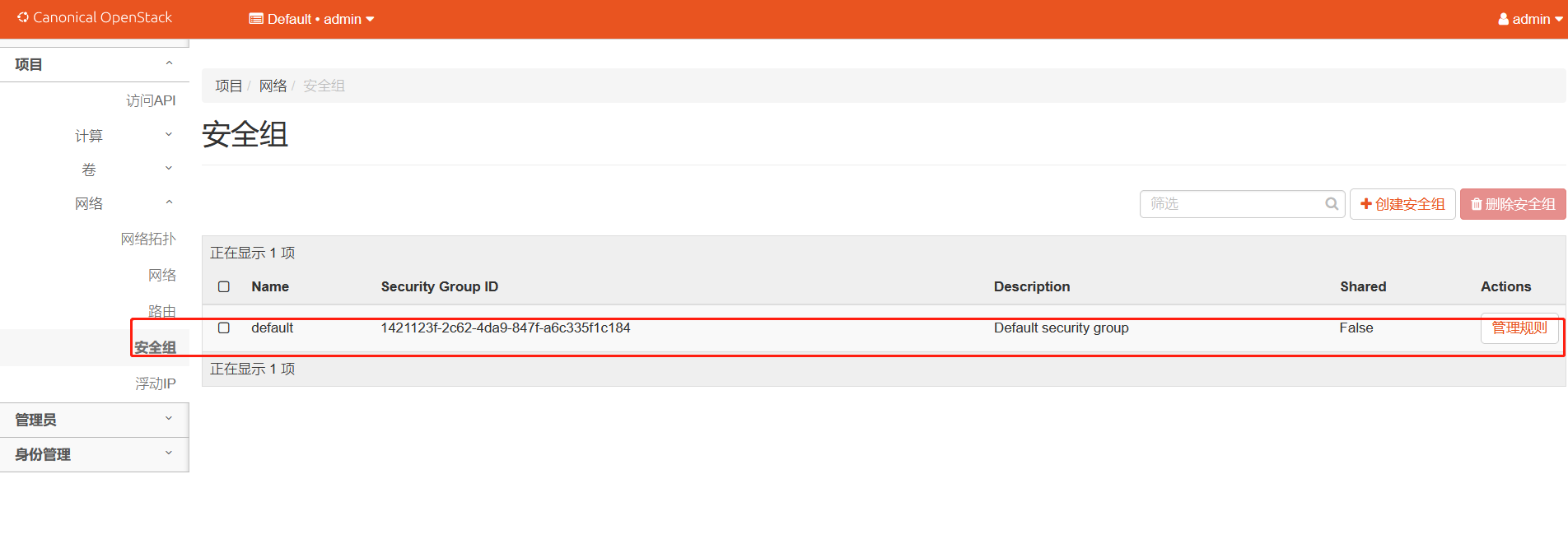

- 9.4 开放安全组

- 9.5 查看镜像

- 9.6 创建云主机

- 9.7 创建卷类型

- 9.8 创建卷

- 10、运维实战二(web界面)

- 10.1 登录dashbroad

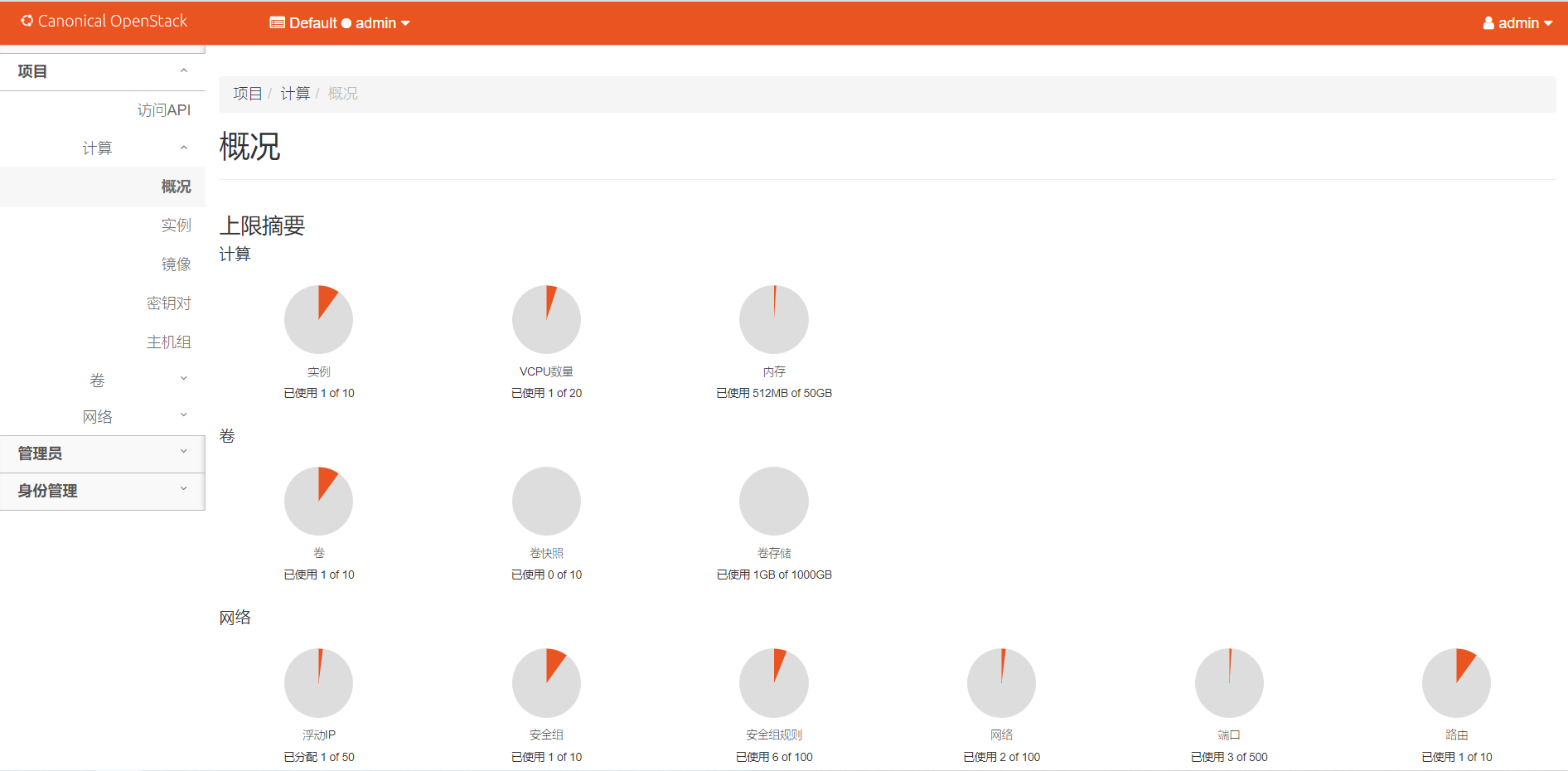

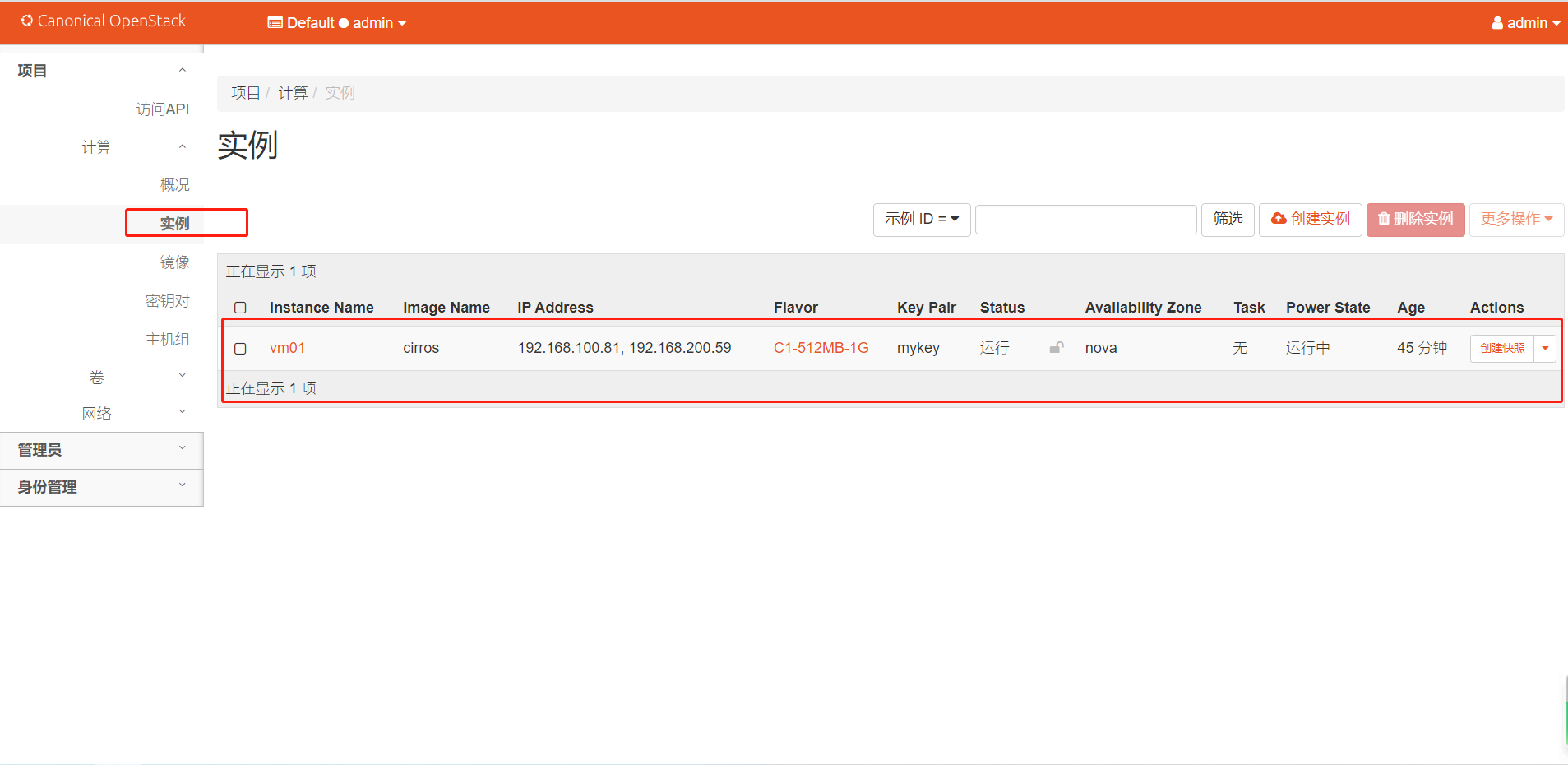

- 10.2 查看之前我们启动的虚拟机实例

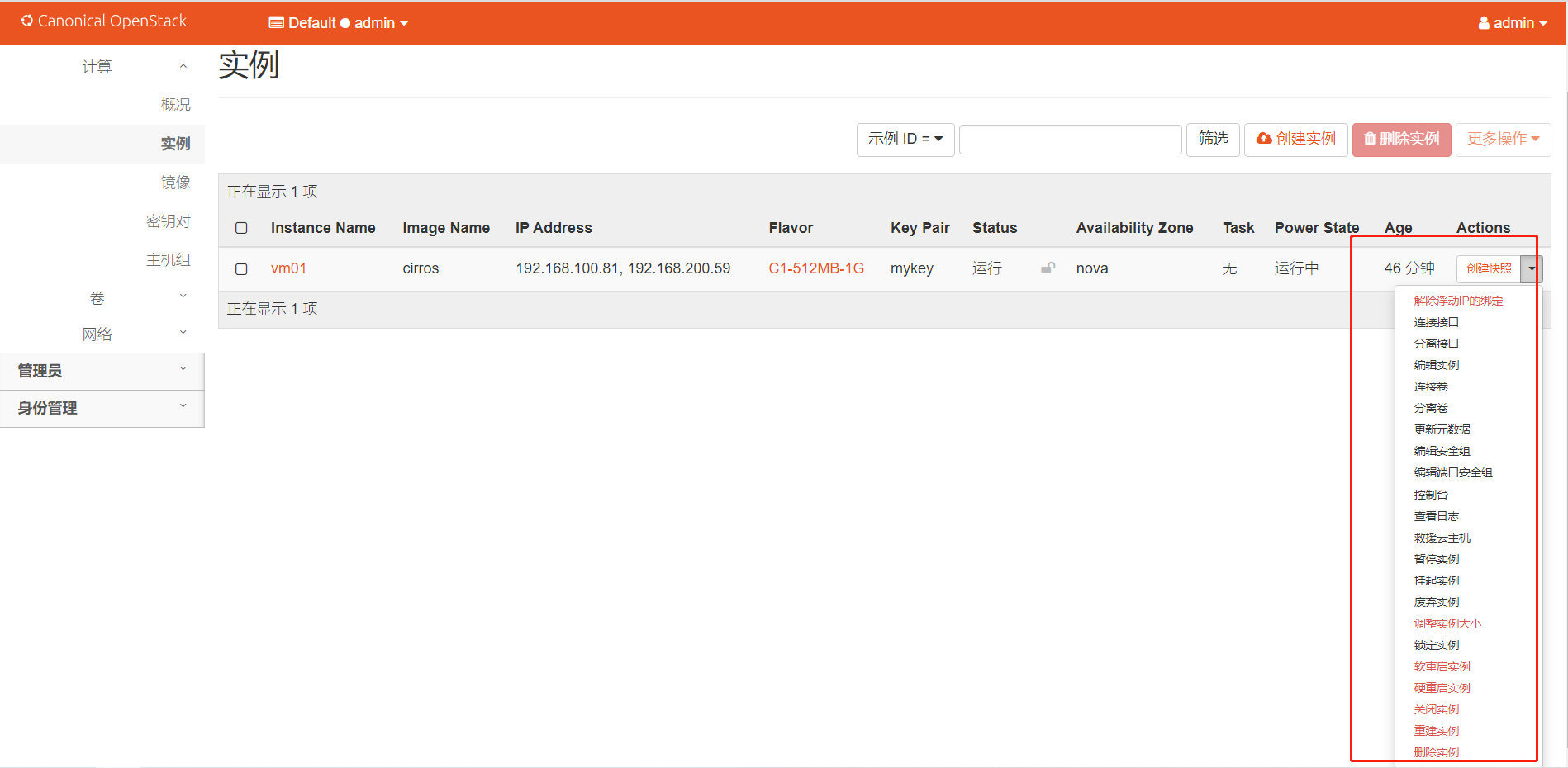

- 10.3 管理实例

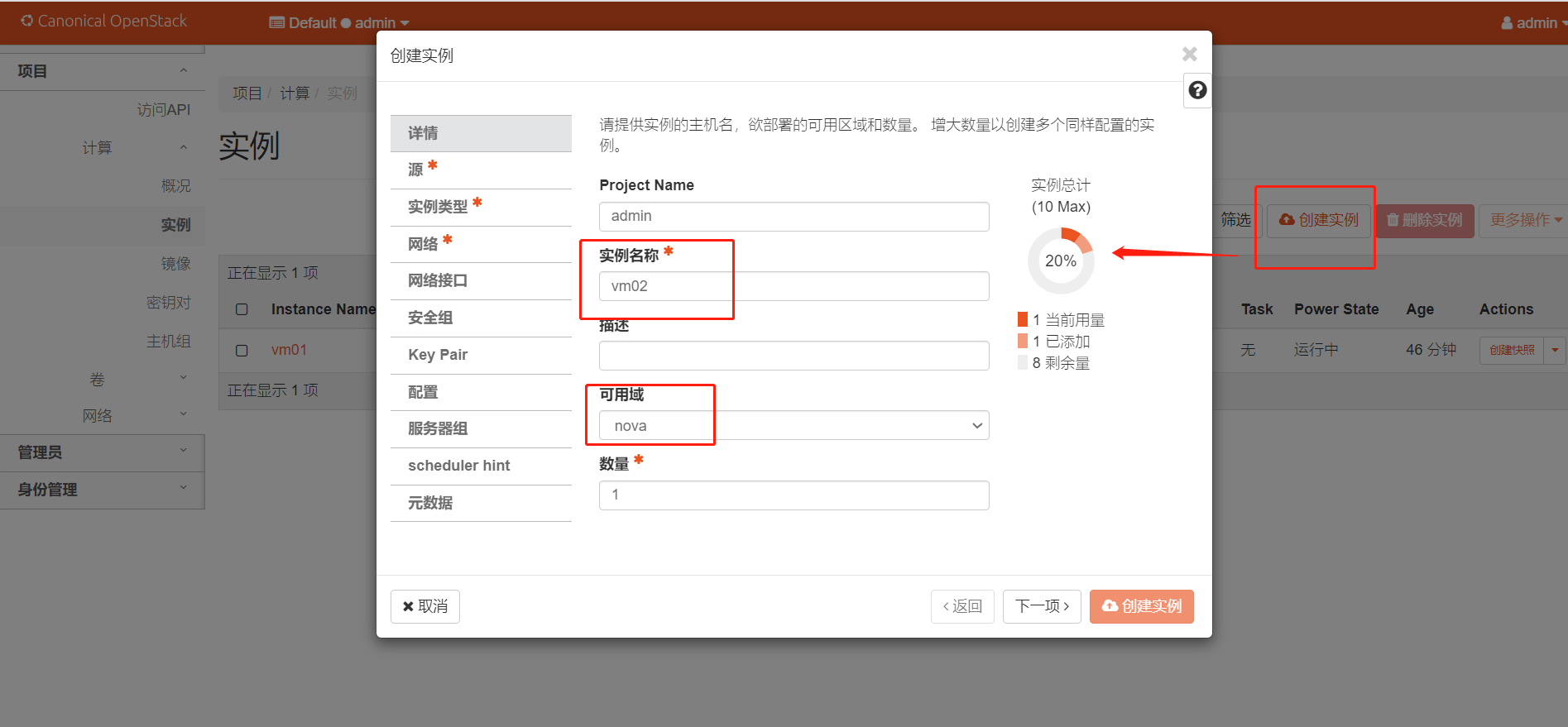

- 10.4 创建虚拟机实例

- 10.5 目前集群已上传的镜像

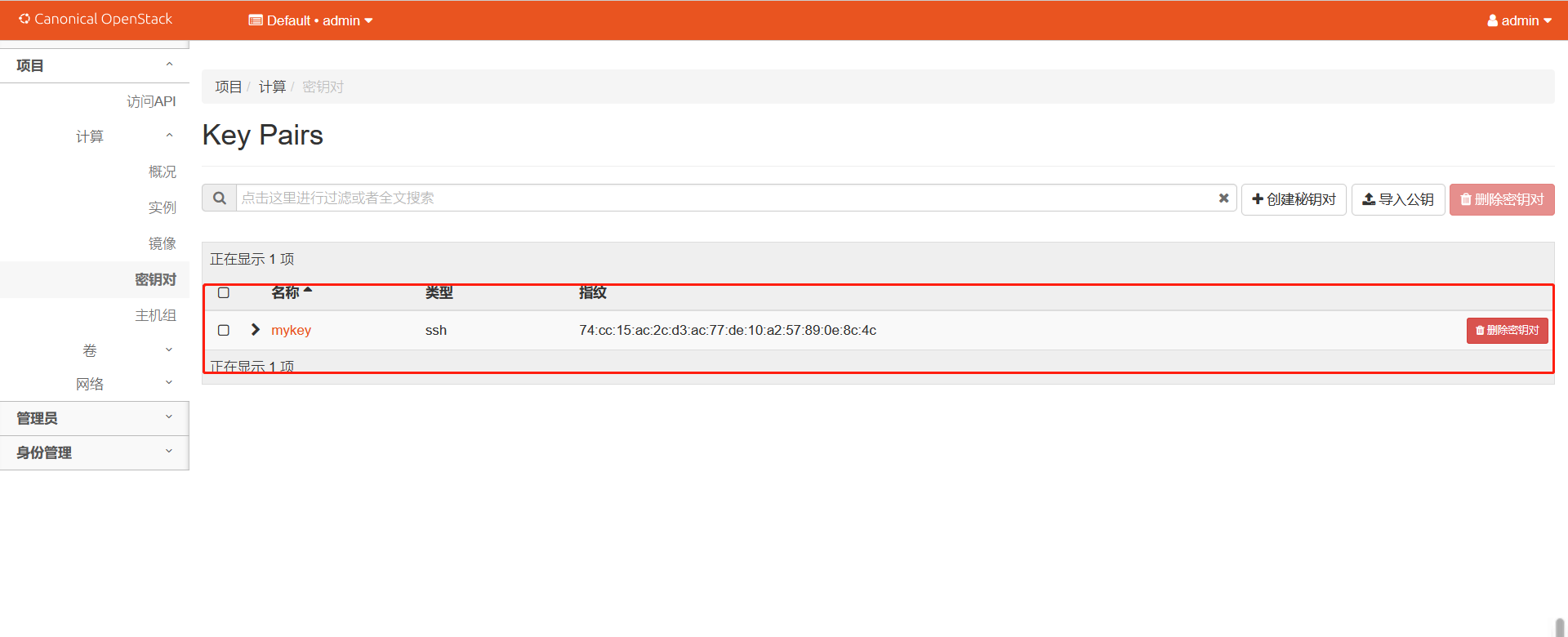

- 10.6 密匙对

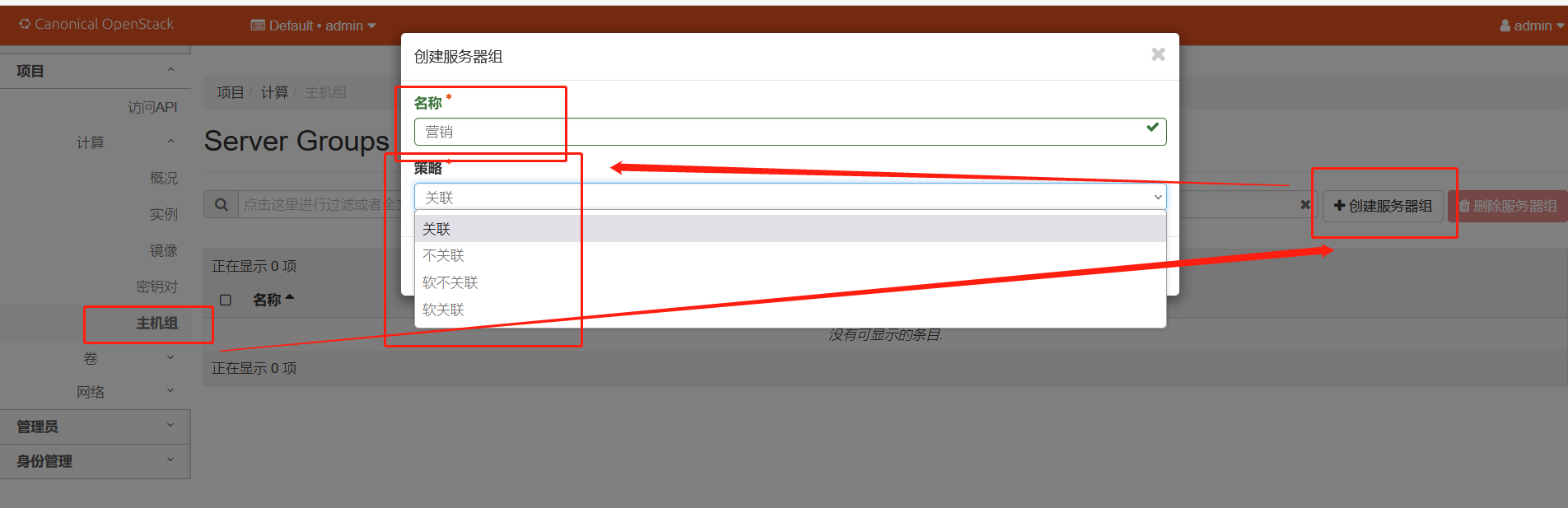

- 10.7 创建主机组,可关联实例

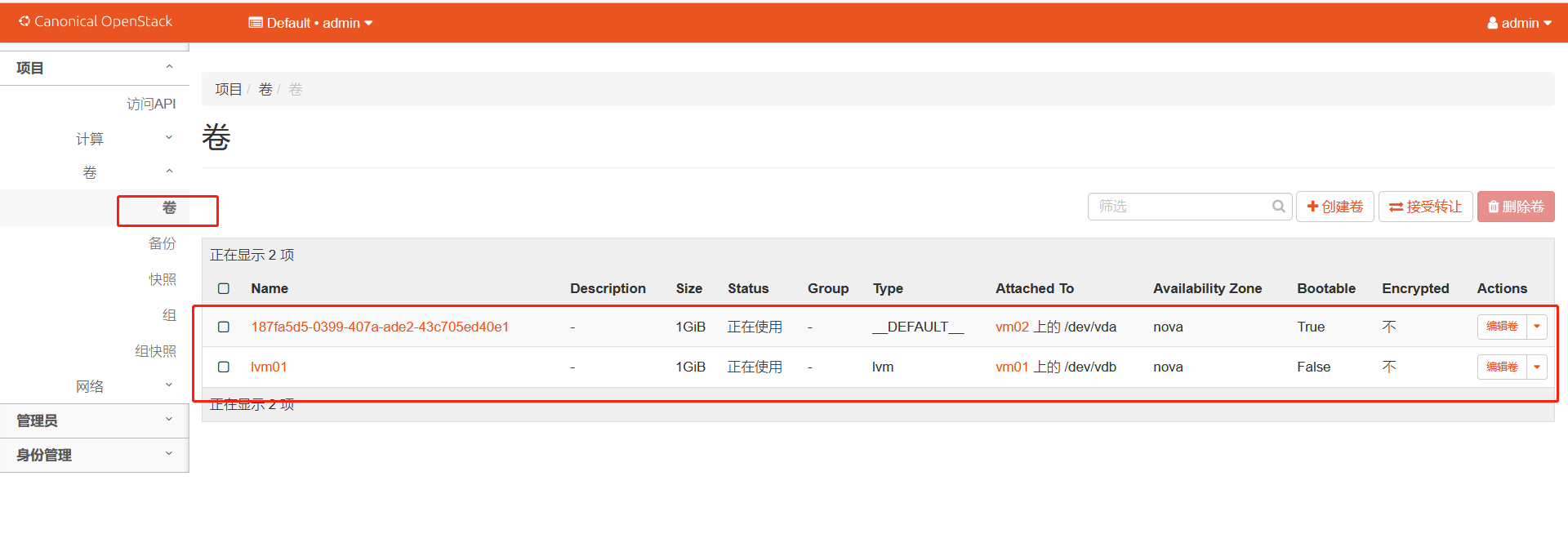

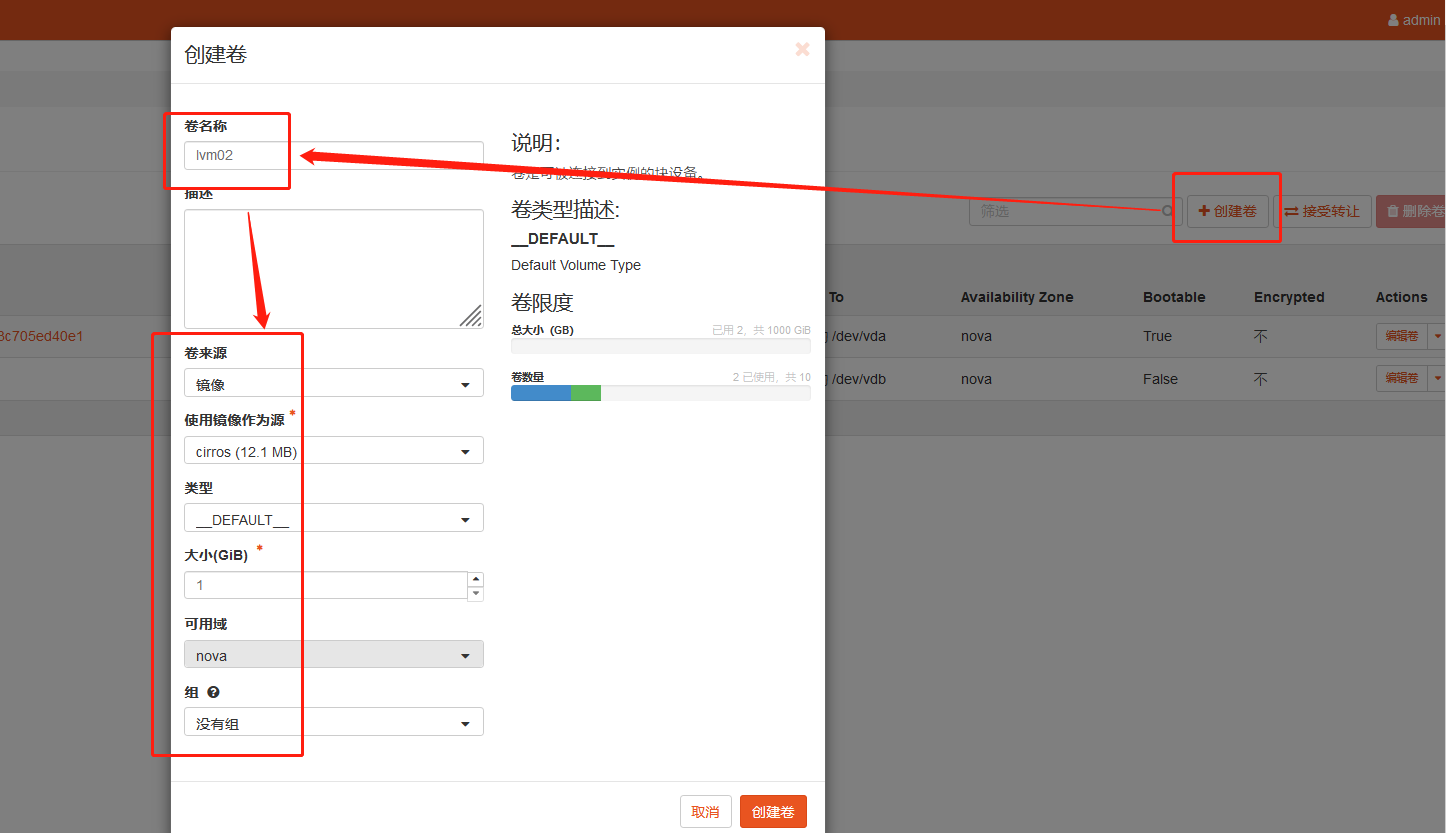

- 10.8 卷

- 10.9 网络

1. Environment Preparation

1.1 Environment Overview

| Hostname | NIC 1 | NIC 2 | Disk 1 | Disk 2 | CPU | Memory | Operating system | Virtualization tool | Role |
|---|---|---|---|---|---|---|---|---|---|
| controller | 192.168.200.30 | / | 100G | / | 2C | 6G | Ubuntu 22.04 | VMware15 | Controller node |
| compute-01 | 192.168.200.31 | / | 100G | 20G | 2C | 4G | Ubuntu 22.04 | VMware15 | Compute node |
| compute-02 | 192.168.200.32 | / | 100G | 20G | 2C | 4G | Ubuntu 22.04 | VMware15 | Compute node |
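
The three addresses in the table above are reused in almost every later step, starting with the hosts file. As a minimal sketch only (the helper name add_host_entry and the idea of making the append idempotent are assumptions added here, not part of the original procedure), the entries could be written so that re-running the step on a node does not duplicate lines:

```shell
#!/bin/sh
# add_host_entry FILE IP NAME
# Hypothetical helper: appends "IP NAME" to FILE unless a non-comment line
# in FILE already lists NAME as a host name.
add_host_entry() {
    file=$1; ip=$2; name=$3
    if [ -f "$file" ] && awk -v n="$name" '
        $1 ~ /^#/ { next }                      # skip commented-out entries
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"
    then
        return 0                                # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example call, run as root on every node:
#   add_host_entry /etc/hosts 192.168.200.30 controller
#   add_host_entry /etc/hosts 192.168.200.31 compute-01
#   add_host_entry /etc/hosts 192.168.200.32 compute-02
```

The file path is a parameter so the function can be tried against a scratch file before it touches /etc/hosts.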

1.2 Configure hosts Resolution (All Nodes)

root@controller:~# cat >> /etc/hosts << EOF

192.168.200.30 controller

192.168.200.31 compute-01

192.168.200.32 compute-02

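EOF

1.3 Configure Time Synchronization

Run on all nodes:

# Enable NTP time synchronization

root@controller:~# timedatectl set-ntp true

# Set the time zone to Asia/Shanghai

root@controller:~# timedatectl set-timezone Asia/Shanghai

# Write the system time to the hardware clock

root@controller:~# hwclock --systohc

Run on the controller node:

# Install the service

root@controller:~# apt install -y chrony

# Edit the configuration file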

root@controller:~# cat >> /etc/chrony/chrony.conf << EOF

server controller iburst maxsources 2

allow all

local stratum 10

EOF

# Restart the service

root@controller:~# systemctl restart chronyd

Run on the compute nodes:

# Install the service

root@compute-01:~# apt install -y chrony

# Edit the configuration file

root@compute-01:~# vim /etc/chrony/chrony.conf

root@compute-01:~# sed -n "21p" /etc/chrony/chrony.conf

pool controller iburst maxsources 4

# Restart the service

root@compute-01:~# systemctl restart chronyd

1.4 Install the OpenStack Client (Controller Node)

root@controller:~# apt install -y python3-openstackclient

1.5 Install and Deploy MariaDB (Controller Node)

# Install MariaDB

root@controller:~# apt install -y mariadb-server python3-pymysql

# Write the MariaDB configuration file

root@controller:~# cat > /etc/mysql/mariadb.conf.d/99-openstack.cnf << EOF

[mysqld]

bind-address = 0.0.0.0

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

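EOF

# Restart so that the new configuration file takes effect

root@controller:~# systemctl restart mysql

# Enable start at boot

root@controller:~# systemctl enable mysql

# Run the initial security configuration of the database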

root@controller:~# mysql_secure_installation

NOTE: RUNNING ALL PARTS OF THIS SCRIPT IS RECOMMENDED FOR ALL MariaDB

SERVERS IN PRODUCTION USE! PLEASE READ EACH STEP CAREFULLY!

In order to log into MariaDB to secure it, we'll need the current

password for the root user. If you've just installed MariaDB, and

haven't set the root password yet, you should just press enter here.

Enter current password for root (enter for none): # Press Enter (no root password is set on a fresh installation)

OK, successfully used password, moving on...

Setting the root password or using the unix_socket ensures that nobody

can log into the MariaDB root user without the proper authorisation.

You already have your root account protected, so you can safely answer 'n'.

Switch to unix_socket authentication [Y/n] n # The root account is already protected: n

... skipping.

You already have your root account protected, so you can safely answer 'n'.

Change the root password? [Y/n] n # Change the root password: n

... skipping.

By default, a MariaDB installation has an anonymous user, allowing anyone

to log into MariaDB without having to have a user account created for

them. This is intended only for testing, and to make the installation

go a bit smoother. You should remove them before moving into a

production environment.

Remove anonymous users? [Y/n] y # Remove anonymous users: y

... Success!

Normally, root should only be allowed to connect from 'localhost'. This

ensures that someone cannot guess at the root password from the network.

Disallow root login remotely? [Y/n] n # Disallow remote root login: n

... skipping.

By default, MariaDB comes with a database named 'test' that anyone can

access. This is also intended only for testing, and should be removed

before moving into a production environment.

Remove test database and access to it? [Y/n] y # Remove the test database: y

- Dropping test database...

... Success!

- Removing privileges on test database...

... Success!

Reloading the privilege tables will ensure that all changes made so far

will take effect immediately.

Reload privilege tables now? [Y/n] y # Reload the privilege tables: y

... Success!

Cleaning up...

All done! If you've completed all of the above steps, your MariaDB

installation should now be secure.

Thanks for using MariaDB!

1.6 Install and Deploy RabbitMQ (Controller Node)

# Install RabbitMQ

root@controller:~# apt install -y rabbitmq-server

# Create the openstack user (username/password: openstack/000000)

root@controller:~# rabbitmqctl add_user openstack 000000

# Grant the openstack user configure, write, and read permissions

root@controller:~# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

1.7 Install and Deploy Memcached (Controller Node)

# Install Memcached

root@controller:~# apt install -y memcached python3-memcache

# Configure the listen address

root@controller:~# vim /etc/memcached.conf

root@controller:~# sed -n "35p" /etc/memcached.conf

-l 0.0.0.0

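# Restart the service

root@controller:~# systemctl restart memcached

# Enable start at boot

root@controller:~# systemctl enable memcached

2. Deploy and Configure Keystone (Controller Node)

2.1 Create the Database and User

# Create the database for keystone (run these statements in the MariaDB client, started with the mysql command)

MariaDB [(none)]> CREATE DATABASE keystone;

# Create the user and grant privileges

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'keystoneang';

# Apply the privileges immediately

MariaDB [(none)]> flush privileges;

2.2 Install and Configure Keystone

# Install keystone

root@controller:~# apt install -y keystone

# Configure keystone

# Back up the configuration file

root@controller:~# cp /etc/keystone/keystone.conf{,.bak}

# Edit the configuration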

root@controller:~# nano /etc/keystone/keystone.conf

root@controller:~# cat /etc/keystone/keystone.conf

[DEFAULT]

log_dir = /var/log/keystone

[application_credential]

[assignment]

[auth]

[cache]

[catalog]

[cors]

[credential]

[database]

connection = mysql+pymysql://keystone:keystoneang@controller/keystone

[domain_config]

[endpoint_filter]

[endpoint_policy]

[eventlet_server]

[extra_headers]

Distribution = Ubuntu

[federation]

[fernet_receipts]

[fernet_tokens]

[healthcheck]

[identity]

[identity_mapping]

[jwt_tokens]

[ldap]

[memcache]

[oauth1]

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[policy]

[profiler]

[receipt]

[resource]

[revoke]

[role]

[saml]

[security_compliance]

[shadow_users]

[token]

provider = fernet

[tokenless_auth]

[totp]

[trust]

[unified_limit]

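[wsgi]

2.3 Modify the Database Configuration

# Populate the database

root@controller:~# su -s /bin/sh -c "keystone-manage db_sync" keystone

# Initialize the key repositories. The --keystone-user and --keystone-group options allow keystone to run under a different operating system user/group

# Fernet token keys

root@controller:~# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

# Credential encryption keys

root@controller:~# keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

# Before the Queens release, keystone had to run on two separate ports to accommodate the Identity v2 API, which usually ran a separate admin-only service on port 35357. With the v2 API removed, keystone can run on the same port, 5000, for all interfaces

root@controller:~# keystone-manage bootstrap --bootstrap-password 000000 --bootstrap-admin-url http://controller:5000/v3/ --bootstrap-internal-url http://controller:5000/v3/ --bootstrap-public-url http://controller:5000/v3/ --bootstrap-region-id RegionOne

2.4 Modify the Apache Configuration

# Edit apache2.conf and set the ServerName option to reference the controller node

root@controller:~# echo "ServerName controller" >> /etc/apache2/apache2.conf

# Restart Apache to apply the configuration

root@controller:~# systemctl restart apache2

# 设置k

2.5 Configure OpenStack Authentication Environment Variables

# Configure the OpenStack authentication environment variables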

root@controller:~# cat > /etc/keystone/admin-openrc.sh << EOF

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=000000

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF

# Load the environment variables

root@controller:~# source /etc/keystone/admin-openrc.sh
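
Every openstack command in the later sections depends on the variables exported by admin-openrc.sh. As a sketch under stated assumptions (the function name check_openrc is hypothetical and not part of the original guide), a small check like the following could confirm that all eight variables from the file are present in the current shell before continuing:

```shell
#!/bin/sh
# check_openrc: hypothetical helper. Returns 0 only when every variable
# written to admin-openrc.sh is set and non-empty in the current shell.
check_openrc() {
    missing=0
    for var in OS_PROJECT_DOMAIN_NAME OS_USER_DOMAIN_NAME OS_PROJECT_NAME \
               OS_USERNAME OS_PASSWORD OS_AUTH_URL \
               OS_IDENTITY_API_VERSION OS_IMAGE_API_VERSION; do
        # Indirect lookup of the variable named in $var (POSIX sh has no ${!var}).
        eval "value=\${$var:-}"
        if [ -z "$value" ]; then
            echo "missing: $var" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example call after sourcing the file:
#   check_openrc && openstack token issue
```

The check only inspects the shell environment; it does not contact keystone, so a wrong password would still pass it.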

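# Load the variables automatically at login by adding the file to .bashrc in the home directory

root@controller:~# echo "source /etc/keystone/admin-openrc.sh" >> .bashrc

3. Deploy and Configure the Glance Image Service (Controller Node)

3.1 Create the Database and User

# Create the database for glance

MariaDB [(none)]> CREATE DATABASE glance;

# Create the user and grant privileges

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'glanceang';

# Apply the privileges immediately

MariaDB [(none)]> flush privileges;

3.2 Create the Service Project and the glance User

# Create the service project

root@controller:~# openstack project create --domain default --description "Service Project" service

# List the projects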

root@controller:~# openstack project list

+----------------------------------+---------+

| ID | Name |

+----------------------------------+---------+

| 12d5de899b1f4cecb879a23d94e84af5 | service |

| d511671fdcbc408fbe1978da791caa46 | admin |

+----------------------------------+---------+

# Verify

root@controller:~# openstack token issue

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2023-08-05T12:19:27+0000 |

| id | gAAAAABkzjA_Y7GHumqarUwhR-cxtIISwuxAgBhE3dfXzhuCStR-bhq8va5pvJE3-XoXox5WmpQlU0V0f9Ym9WXOtrUpV_Cnku_J9uI9bm4lwKY8yDk1Kp2-67edd9AvETyHYhb0DfXu_jRyRhsTlNSaGMZ_wKZXkd_ZV3YxKPtdQOMvlV8dAxo |

| project_id | d511671fdcbc408fbe1978da791caa46 |

| user_id | 3a66f914c2804018a416255998dda997 |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

# Create the glance user

root@controller:~# openstack user create --domain default --password glance glance

# Add the admin role to the glance user in the service project

root@controller:~# openstack role add --project service --user glance admin

# Create the glance service entity

root@controller:~# openstack service create --name glance --description "OpenStack Image" image

3.3 Create the Image Service API Endpoints

# Public endpoint

root@controller:~# openstack endpoint create --region RegionOne image public http://controller:9292

# Internal endpoint

root@controller:~# openstack endpoint create --region RegionOne image internal http://controller:9292

# Admin endpoint

root@controller:~# openstack endpoint create --region RegionOne image admin http://controller:9292

3.4 Install the Glance Image Service

# Install the glance image service

root@controller:~# apt install -y glance

# Configure glance

# Back up the configuration file

root@controller:~# cp /etc/glance/glance-api.conf{,.bak}

# Edit the configuration

root@controller:~# nano /etc/glance/glance-api.conf

root@controller:~# cat /etc/glance/glance-api.conf

[DEFAULT]

[barbican]

[barbican_service_user]

[cinder]

[cors]

[database]

connection = mysql+pymysql://glance:glanceang@controller/glance

[file]

[glance.store.http.store]

[glance.store.rbd.store]

[glance.store.s3.store]

[glance.store.swift.store]

[glance.store.vmware_datastore.store]

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

[healthcheck]

[image_format]

disk_formats = ami,ari,aki,vhd,vhdx,vmdk,raw,qcow2,vdi,iso,ploop,root-tar

[key_manager]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = glance

[oslo_concurrency]

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[paste_deploy]

flavor = keystone

[profiler]

[store_type_location_strategy]

[task]

[taskflow_executor]

[vault]

[wsgi]

3.5 Populate the Database

# Populate the database

root@controller:~# su -s /bin/sh -c "glance-manage db_sync" glance

# Restart glance to apply the configuration

root@controller:~# systemctl restart glance-api

# Enable start at boot

root@controller:~# systemctl enable glance-api

3.6 Upload an Image

# Upload an image to verify the service

# Download the image

root@controller:~# wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img

# Upload the image

root@controller:~# glance image-create --name "cirros" --file cirros-0.4.0-x86_64-disk.img --disk-format qcow2 --container-format bare --visibility=public

# Check the image status

root@controller:~# openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| ca7a8fff-7296-4907-894d-a84825955ad2 | cirros | active |

+--------------------------------------+--------+--------+

4. Deploy and Configure the Placement Service (Controller Node)

Purpose: the placement service tracks the inventory and usage of each resource provider. For example, an instance created on a compute node may consume CPU and memory from the compute node resource provider, disk from an external shared storage pool resource provider, and IP addresses from an external IP pool resource provider.

4.1 Create the Database and User

# Create the database for placement

MariaDB [(none)]> CREATE DATABASE placement;

# Create the user and grant privileges

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'placementang';

# Apply the privileges immediately

MariaDB [(none)]> flush privileges;

4.2 Create the Service User

# Create the service user

root@controller:~# openstack user create --domain default --password placement placement

# Add the placement user to the service project with the admin role

root@controller:~# openstack role add --project service --user placement admin

# Create the Placement API entry in the service catalog

root@controller:~# openstack service create --name placement --description "Placement API" placement

4.3 Create the Placement API Service Endpoints

# Public endpoint

root@controller:~# openstack endpoint create --region RegionOne placement public http://controller:8778

# Internal endpoint

root@controller:~# openstack endpoint create --region RegionOne placement internal http://controller:8778

# Admin endpoint

root@controller:~# openstack endpoint create --region RegionOne placement admin http://controller:8778

4.4 Install the Placement Service

# Install the placement service

root@controller:~# apt install -y placement-api

# Configure placement

# Back up the configuration file

root@controller:~# cp /etc/placement/placement.conf{,.bak}

# Edit the configuration

root@controller:~# nano /etc/placement/placement.conf

root@controller:~# cat /etc/placement/placement.conf

[DEFAULT]

[api]

auth_strategy = keystone

[cors]

[keystone_authtoken]

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = placement

password = placement

[oslo_middleware]

[oslo_policy]

[placement]

[placement_database]

connection = mysql+pymysql://placement:placementang@controller/placement

[profiler]

4.5 Populate the Database

# Populate the database

root@controller:~# su -s /bin/sh -c "placement-manage db sync" placement

# Restart Apache to load the placement configuration

root@controller:~# systemctl restart apache2

# Verify

root@controller:~# placement-status upgrade check

+-------------------------------------------+

| Upgrade Check Results |

+-------------------------------------------+

| Check: Missing Root Provider IDs |

| Result: Success |

| Details: None |

+-------------------------------------------+

| Check: Incomplete Consumers |

| Result: Success |

| Details: None |

+-------------------------------------------+

| Check: Policy File JSON to YAML Migration |

| Result: Success |

| Details: None |

+-------------------------------------------+

5. Deploy and Configure the Nova Compute Service

5.1 Create the Databases and User for Nova (Controller Node)

# Stores nova API data

MariaDB [(none)]> CREATE DATABASE nova_api;

# Stores nova resource data

MariaDB [(none)]> CREATE DATABASE nova;

# Stores cell0 data (instances that could not be scheduled)

MariaDB [(none)]> CREATE DATABASE nova_cell0;

# Grant the nova user privileges on the nova_api database

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'novaang';

# Grant the nova user privileges on the nova database

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'novaang';

# Grant the nova user privileges on the nova_cell0 database

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'novaang';

# Apply the privileges immediately

MariaDB [(none)]> flush privileges;

5.2 Create the nova User (Controller Node)

# Create the nova user

root@controller:~# openstack user create --domain default --password nova nova

# Add the admin role to the nova user

root@controller:~# openstack role add --project service --user nova admin

# Create the nova service entity

root@controller:~# openstack service create --name nova --description "OpenStack Compute" compute

5.3 Create the Compute API Service Endpoints (Controller Node)

# Create the public endpoint

root@controller:~# openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1

# Create the internal endpoint

root@controller:~# openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1

# Create the admin endpoint

root@controller:~# openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

5.4 Configure Nova (Controller Node)

# Install the services

root@controller:~# apt install -y nova-api nova-conductor nova-novncproxy nova-scheduler

# Configure nova

# Back up the configuration file

root@controller:~# cp /etc/nova/nova.conf{,.bak}

# Edit the configuration

root@controller:~# nano /etc/nova/nova.conf

root@controller:~# cat /etc/nova/nova.conf

[DEFAULT]

log_dir = /var/log/nova

lock_path = /var/lock/nova

state_path = /var/lib/nova

compute_driver=libvirt.LibvirtDriver

transport_url = rabbit://openstack:000000@controller:5672/

my_ip = 192.168.200.30

[api]

auth_strategy = keystone

[api_database]

connection = mysql+pymysql://nova:novaang@controller/nova_api

[barbican]

[barbican_service_user]

[cache]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[cyborg]

[database]

connection = mysql+pymysql://nova:novaang@controller/nova

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://controller:9292

[guestfs]

[healthcheck]

[hyperv]

[image_cache]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = nova

[libvirt]

[metrics]

[mks]

[neutron]

[notifications]

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[pci]

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = placement

[powervm]

[privsep]

[profiler]

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled = true

server_listen = $my_ip

server_proxyclient_address = $my_ip

[workarounds]

[wsgi]

[zvm]

[cells]

enable = False

[os_region_name]

openstack =

5.5 Populate the Databases (Controller Node)

# Populate the nova_api database

root@controller:~# su -s /bin/sh -c "nova-manage api_db sync" nova

# Register the cell0 database

root@controller:~# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

# Create the cell1 cell

root@controller:~# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

# Populate the nova database

root@controller:~# su -s /bin/sh -c "nova-manage db sync" nova

# Verify that cell0 and cell1 are registered correctly

root@controller:~# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

+-------+--------------------------------------+------------------------------------------+-------------------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+------------------------------------------+-------------------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@controller/nova_cell0 | False |

| cell1 | 2df08f99-731c-4b26-89c0-5510637dfb35 | rabbit://openstack:****@controller:5672/ | mysql+pymysql://nova:****@controller/nova | False |

+-------+--------------------------------------+------------------------------------------+-------------------------------------------------+----------+

5.6 Restart the Nova Services to Load the Configuration (Controller Node)

# API service

root@controller:~# systemctl restart nova-api

# Scheduler service

root@controller:~# systemctl restart nova-scheduler

# Conductor service (database access)

root@controller:~# systemctl restart nova-conductor

# noVNC proxy service (remote console)

root@controller:~# systemctl restart nova-novncproxy

5.7 Install the nova-compute Service (Compute Nodes)

# Install the nova-compute service

root@compute-01:~# apt install -y nova-compute

# Configure nova

# Back up the configuration file

root@compute-01:~# cp /etc/nova/nova.conf{,.bak}

# Full configuration

root@compute-01:~# nano /etc/nova/nova.conf

root@compute-01:~# cat /etc/nova/nova.conf

[DEFAULT]

log_dir = /var/log/nova

lock_path = /var/lock/nova

state_path = /var/lib/nova

transport_url = rabbit://openstack:000000@controller

# Note: use each compute node's own IP address here

my_ip = 192.168.200.31

[api]

auth_strategy = keystone

[api_database]

[barbican]

[barbican_service_user]

[cache]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[cyborg]

[database]

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://controller:9292

[guestfs]

[healthcheck]

[hyperv]

[image_cache]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = nova

[libvirt]

[metrics]

[mks]

[neutron]

[notifications]

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[pci]

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = placement

[powervm]

[privsep]

[profiler]

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://192.168.200.30:6080/vnc_auto.html

[workarounds]

[wsgi]

[zvm]

[cells]

enable = False

[os_region_name]

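openstack =

# Check whether the compute node supports hardware acceleration for virtual machines

root@compute-01:~# egrep -c '(vmx|svm)' /proc/cpuinfo

# If the command returns 0, apply the following configuration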

root@compute-01:~# cat > /etc/nova/nova-compute.conf << EOF

[DEFAULT]

compute_driver=libvirt.LibvirtDriver

[libvirt]

virt_type = qemu

EOF
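
The decision in this step (count the vmx/svm flags, fall back to qemu when the count is 0) can be sketched as a small function. This is an illustration only: the name pick_virt_type is hypothetical, and returning kvm for a non-zero count is an assumption based on kvm being the usual libvirt virt_type when hardware acceleration is available; the guide itself only covers the qemu case.

```shell
#!/bin/sh
# pick_virt_type [CPUINFO_FILE]
# Hypothetical helper: prints "kvm" when the CPU flags include vmx (Intel)
# or svm (AMD), and "qemu" otherwise. Defaults to /proc/cpuinfo.
pick_virt_type() {
    cpuinfo=${1:-/proc/cpuinfo}
    # grep -c prints the number of matching lines and exits non-zero when
    # that number is 0, hence the "|| true".
    count=$(grep -E -c '(vmx|svm)' "$cpuinfo" || true)
    if [ "${count:-0}" -gt 0 ]; then
        echo kvm
    else
        echo qemu
    fi
}

# Example call on a compute node:
#   pick_virt_type
```

Taking the file as an optional argument makes it possible to try the function on a saved copy of /proc/cpuinfo from another machine.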

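# Restart the service to apply the nova configuration

root@compute-01:~# systemctl restart nova-compute

# Enable start at boot

root@compute-01:~# systemctl enable nova-compute

5.8 Configure Host Discovery (Controller Node)

# List the available compute nodes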

root@controller:~# openstack compute service list --service nova-compute

+--------------------------------------+--------------+------------+------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+--------------------------------------+--------------+------------+------+---------+-------+----------------------------+

| 522981d5-961a-4d94-9405-735abdffa5bc | nova-compute | compute-01 | nova | enabled | up | 2023-08-05T12:25:42.000000 |

| aae9f5bb-4f82-435d-bf2e-0fdf302760c6 | nova-compute | compute-02 | nova | enabled | up | 2023-08-05T12:25:43.000000 |

+--------------------------------------+--------------+------------+------+---------+-------+----------------------------+

# Discover the compute hosts

root@controller:~# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

# Run host discovery every 5 minutes

root@controller:~# vim /etc/nova/nova.conf

root@controller:~# sed -n "76,77p" /etc/nova/nova.conf

[scheduler]

discover_hosts_in_cells_interval = 300

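# Restart to apply the configuration (the periodic discovery task runs in nova-scheduler)

root@controller:~# systemctl restart nova-api nova-scheduler

# Verify the nova services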

root@controller:~# openstack compute service list

+--------------------------------------+----------------+------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+--------------------------------------+----------------+------------+----------+---------+-------+----------------------------+

| c2208b45-6118-4b40-a556-e83c64be489f | nova-conductor | controller | internal | enabled | up | 2023-08-05T12:27:38.000000 |

| 34d37e63-f4b3-4439-b7b9-6c908788e4a0 | nova-scheduler | controller | internal | enabled | up | 2023-08-05T12:27:34.000000 |

| 522981d5-961a-4d94-9405-735abdffa5bc | nova-compute | compute-01 | nova | enabled | up | 2023-08-05T12:27:42.000000 |

| aae9f5bb-4f82-435d-bf2e-0fdf302760c6 | nova-compute | compute-02 | nova | enabled | up | 2023-08-05T12:27:34.000000 |

+--------------------------------------+----------------+------------+----------+---------+-------+----------------------------+

6. Configure the OVS-Based Neutron Networking Service

6.1 Create the Database and User for Neutron (Controller Node)

# Create the database for neutron

MariaDB [(none)]> CREATE DATABASE neutron;

# Create the user and grant privileges

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'neutronang';

# Apply the privileges immediately

MariaDB [(none)]> flush privileges;

6.2 Create the neutron User (Controller Node)

# Create the neutron user

root@controller:~# openstack user create --domain default --password neutron neutron

# Add the admin role to the neutron user

root@controller:~# openstack role add --project service --user neutron admin

# Create the neutron service entity

root@controller:~# openstack service create --name neutron --description "OpenStack Networking" network

6.3 Create the Neutron API Endpoints (Controller Node)

# Create the public endpoint

root@controller:~# openstack endpoint create --region RegionOne network public http://controller:9696

# Create the internal endpoint

root@controller:~# openstack endpoint create --region RegionOne network internal http://controller:9696

# Create the admin endpoint

root@controller:~# openstack endpoint create --region RegionOne network admin http://controller:9696

6.4 Configure Kernel Forwarding (All Nodes)

root@controller:~# cat >> /etc/sysctl.conf << EOF

# Disable reverse-path filtering (source address validation of packets)

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

# Pass bridged (layer 2) traffic through iptables

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

EOF

# Load the br_netfilter module so that bridged traffic is passed to the iptables chains

root@controller:~# modprobe br_netfilter
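
modprobe loads the module only for the current boot, and the net.bridge.* keys exist only while br_netfilter is loaded. The original procedure does not cover reboots; as a hedged sketch (the helper name persist_module is hypothetical), the module could also be listed in a file under /etc/modules-load.d, which systemd reads at boot:

```shell
#!/bin/sh
# persist_module MODULE [DIR]
# Hypothetical helper: writes MODULE into DIR/MODULE.conf so that the module
# is loaded at boot. DIR defaults to /etc/modules-load.d.
persist_module() {
    module=$1
    dir=${2:-/etc/modules-load.d}
    printf '%s\n' "$module" > "$dir/$module.conf"
}

# Example call, run as root on every node:
#   persist_module br_netfilter
```

The directory is a parameter so the function can be tried against a scratch directory first.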

# Apply the kernel settings

root@controller:~# sysctl -p

6.5 Install the OVS Services

# Run on the controller node

root@controller:~# apt install -y neutron-server neutron-plugin-ml2 neutron-l3-agent neutron-dhcp-agent neutron-metadata-agent neutron-openvswitch-agent

# Run on the compute nodes

root@compute-01:~# apt install -y neutron-openvswitch-agent

6.6 Configure neutron.conf, Which Provides the Core Neutron Service

# Run on the controller node

# Back up the configuration file

root@controller:~# cp /etc/neutron/neutron.conf{,.bak}

# Edit the configuration

root@controller:~# nano /etc/neutron/neutron.conf

root@controller:~# cat /etc/neutron/neutron.conf

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

auth_strategy = keystone

state_path = /var/lib/neutron

dhcp_agent_notification = true

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

transport_url = rabbit://openstack:000000@controller

[agent]

root_helper = "sudo /usr/bin/neutron-rootwrap /etc/neutron/rootwrap.conf"

[cache]

[cors]

[database]

connection = mysql+pymysql://neutron:neutronang@controller/neutron

[healthcheck]

[ironic]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = neutron

[nova]

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = nova

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[placement]

[privsep]

[quotas]

[ssl]

# Run on the compute nodes

# Back up the configuration file

root@compute-01:~# cp /etc/neutron/neutron.conf{,.bak}

# Edit the configuration

root@compute-01:~# nano /etc/neutron/neutron.conf

root@compute-01:~# cat /etc/neutron/neutron.conf

[DEFAULT]

core_plugin = ml2

service_plugins = router

auth_strategy = keystone

state_path = /var/lib/neutron

allow_overlapping_ips = true

transport_url = rabbit://openstack:000000@controller

[agent]

root_helper = "sudo /usr/bin/neutron-rootwrap /etc/neutron/rootwrap.conf"

[cache]

[cors]

[database]

[healthcheck]

[ironic]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = neutron

[nova]

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[placement]

[privsep]

[quotas]

[ssl]

6.7 Configure ml2_conf.ini, Which Provides the Layer 2 Plug-in Service (Controller Node)

# Back up the configuration file

root@controller:~# cp /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

# Edit the configuration

root@controller:~# nano /etc/neutron/plugins/ml2/ml2_conf.ini

root@controller:~# cat /etc/neutron/plugins/ml2/ml2_conf.ini

[DEFAULT]

[ml2]

type_drivers = flat,vlan,vxlan,gre

tenant_network_types = vxlan

mechanism_drivers = openvswitch,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = physnet1

[ml2_type_geneve]

[ml2_type_gre]

[ml2_type_vlan]

[ml2_type_vxlan]

vni_ranges = 1:1000

[ovs_driver]

[securitygroup]

enable_ipset = true

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

[sriov_driver]

6.8 Configure openvswitch_agent.ini, Which Provides the OVS Agent Service

# Run on the controller node

# Back up the file

root@controller:~# cp /etc/neutron/plugins/ml2/openvswitch_agent.ini{,.bak}

# Edit the configuration

root@controller:~# nano /etc/neutron/plugins/ml2/openvswitch_agent.ini

root@controller:~# cat /etc/neutron/plugins/ml2/openvswitch_agent.ini

[DEFAULT]

[agent]

l2_population = True

tunnel_types = vxlan

prevent_arp_spoofing = True

[dhcp]

[network_log]

[ovs]

local_ip = 192.168.200.30

bridge_mappings = physnet1:br-ens33

[securitygroup]

# Run on the compute nodes

# Back up the file

root@compute-01:~# cp /etc/neutron/plugins/ml2/openvswitch_agent.ini{,.bak}

# Edit the configuration

root@compute-01:~# nano /etc/neutron/plugins/ml2/openvswitch_agent.ini

root@compute-01:~# cat /etc/neutron/plugins/ml2/openvswitch_agent.ini

[DEFAULT]

[agent]

l2_population = True

tunnel_types = vxlan

prevent_arp_spoofing = True

[dhcp]

[network_log]

[ovs]

# Note: local_ip differs on each compute node (keep comments on their own line, not after the value)

local_ip = 192.168.200.31

bridge_mappings = physnet1:br-ens33

[securitygroup]

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

6.9 Configure l3_agent.ini to provide the Layer 3 network service (run on the controller node)

# Back up the file

root@controller:~# cp /etc/neutron/l3_agent.ini{,.bak}

# Complete configuration file

root@controller:~# nano /etc/neutron/l3_agent.ini

root@controller:~# cat /etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

external_network_bridge =

[agent]

[network_log]

[ovs]

6.10 Configure dhcp_agent.ini to provide the DHCP service (run on the controller node)

# Back up the file

root@controller:~# cp /etc/neutron/dhcp_agent.ini{,.bak}

# Edit the configuration file

root@controller:~# nano /etc/neutron/dhcp_agent.ini

root@controller:~# cat /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = True

[agent]

[ovs]

6.11 Configure metadata_agent.ini (run on the controller node)

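This step provides the metadata service. Metadata supports functions such as indicating storage locations, historical data, resource lookup, and file records. It acts as a kind of electronic catalogue: it describes the content or characteristics of the data it indexes so that the data can be retrieved more easily. In Neutron, the metadata agent proxies metadata requests from instances to the Nova metadata API on nova_metadata_host.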
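The metadata_proxy_shared_secret set in this step has to match the value configured in the [neutron] section of nova.conf in section 6.12. A minimal sketch of a consistency check follows; the helper names ini_get and check_metadata_secret are hypothetical and not part of any OpenStack tool, and the parser only handles simple key = value lines.

```shell
# Print the value of KEY inside [SECTION] of an INI-style file.
# Usage: ini_get FILE SECTION KEY   (hypothetical helper)
ini_get() {
    awk -v section="$2" -v key="$3" '
        /^\[/ { in_section = ($0 == ("[" section "]")); next }
        in_section {
            split_at = index($0, "=")
            if (split_at == 0) next
            name = substr($0, 1, split_at - 1)
            value = substr($0, split_at + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", name)
            gsub(/^[ \t]+|[ \t]+$/, "", value)
            if (name == key) { print value; exit }
        }
    ' "$1"
}

# Succeed only if both files carry the same non-empty shared secret.
# The default paths are the ones used in this guide.
check_metadata_secret() {
    agent_conf=${1:-/etc/neutron/metadata_agent.ini}
    nova_conf=${2:-/etc/nova/nova.conf}
    agent_secret=$(ini_get "$agent_conf" DEFAULT metadata_proxy_shared_secret)
    nova_secret=$(ini_get "$nova_conf" neutron metadata_proxy_shared_secret)
    [ -n "$agent_secret" ] && [ "$agent_secret" = "$nova_secret" ]
}
```

After both files are edited, running check_metadata_secret && echo "secrets match" on the controller node gives a quick confirmation before the services are restarted.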

# Back up the file

root@controller:~# cp /etc/neutron/metadata_agent.ini{,.bak}

# Edit the configuration file

root@controller:~# nano /etc/neutron/metadata_agent.ini

root@controller:~# cat /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_host = controller

metadata_proxy_shared_secret = ws

[agent]

[cache]

6.12 Configure nova.conf so that Nova recognizes Neutron and can call the network service

# Run on the controller node

root@controller:~# nano /etc/nova/nova.conf

root@controller:~# cat /etc/nova/nova.conf

......

[DEFAULT]

linuxnet_interface_driver = nova.network.linux_net.LinuxOVSInterfaceDriver

[neutron]

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = neutron

service_metadata_proxy = true

metadata_proxy_shared_secret = ws

......

# Run on the compute nodes

root@compute-01:~# nano /etc/nova/nova.conf

root@compute-01:~# cat /etc/nova/nova.conf

[DEFAULT]

linuxnet_interface_driver = nova.network.linux_net.LinuxOVSInterfaceDriver

vif_plugging_is_fatal = true

vif_plugging_timeout = 300

......

[neutron]

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = neutron

......

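# Restart the nova service so that it picks up the network configuration

root@compute-01:~# systemctl restart nova-compute

# Enable the service at boot

root@compute-01:~# systemctl enable nova-compute

6.13 Populate the database (run on the controller node)

# Populate the database

root@controller:~# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

# Restart nova-api so that the neutron configuration takes effect

root@controller:~# systemctl restart nova-api

6.14 Configure the external network bridge (run on all nodes)

# Create an external network bridge

root@controller:~# ovs-vsctl add-br br-ens33

# Attach the bridge to a physical NIC; here it is bound to the second NIC, which carries the service traffic

root@controller:~# ovs-vsctl add-port br-ens33 ens33

6.15 Restart the neutron services to apply the configuration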

# Run on all nodes

# Provides the OVS agent service

root@controller:~# systemctl restart neutron-openvswitch-agent

# Run on the controller node

# Provides the neutron server

root@controller:~# systemctl restart neutron-server

# Provides the DHCP service

root@controller:~# systemctl restart neutron-dhcp-agent

# Provides the metadata service

root@controller:~# systemctl restart neutron-metadata-agent

# Provides the Layer 3 network service

root@controller:~# systemctl restart neutron-l3-agent

6.16 Verify neutron

# Verification command

root@controller:~# openstack network agent list

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+

| ID | Agent Type | Host | Availability Zone | Alive | State | Binary |

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+

| 76dc6322-2673-450a-a8e7-28a3edbabf3c | DHCP agent | controller | nova | :-) | UP | neutron-dhcp-agent |

| 88e7e617-137b-417d-94a2-b831fb063839 | Open vSwitch agent | controller | None | :-) | UP | neutron-openvswitch-agent |

| b43cd575-2b08-4113-858d-a307ace57217 | Open vSwitch agent | compute-01 | None | :-) | UP | neutron-openvswitch-agent |

| da28b732-d59e-4a10-848a-e6d1b1dcedbe | Metadata agent | controller | None | :-) | UP | neutron-metadata-agent |

| dea1439f-4b0d-4519-9ea4-c5f4220588b2 | L3 agent | controller | nova | :-) | UP | neutron-l3-agent |

| fb0ed798-0729-41ed-8b49-e8a88f68bb27 | Open vSwitch agent | compute-02 | None | :-) | UP | neutron-openvswitch-agent |

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+

7. Configure the dashboard service

7.1 Install the service

root@controller:~# apt install -y openstack-dashboard

7.2 Configure local_settings.py

# Back up the settings file

root@controller:~# cp /etc/openstack-dashboard/local_settings.py{,.bak}

# Edit the configuration file

root@controller:~# nano /etc/openstack-dashboard/local_settings.py

root@controller:~# sed -n "96,101p;112p;126,127p;131,142p;411p" /etc/openstack-dashboard/local_settings.py

CACHES = {

    'default': {

        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

        'LOCATION': 'controller:11211',

    },

}

# Use memcached for session storage

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

# Point the dashboard at the OpenStack services on the controller node

OPENSTACK_HOST = "controller"

# Enable Identity API version 3

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

# Enable support for domains

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

# Configure the API versions

OPENSTACK_API_VERSIONS = {

    "identity": 3,

    "image": 2,

    "volume": 3,

}

# Set Default as the default domain for users created through the dashboard

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

# Set user as the default role for users created through the dashboard

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

# Enable volume backups

OPENSTACK_CINDER_FEATURES = {

    'enable_backup': True,

}

# Configure the time zone

TIME_ZONE = "Asia/Shanghai"

# In the Dashboard configuration section, allow all hosts to access the dashboard

ALLOWED_HOSTS = ["*"]

7.3 Reload the web server configuration

root@controller:~# systemctl restart apache2

7.4 Browser access

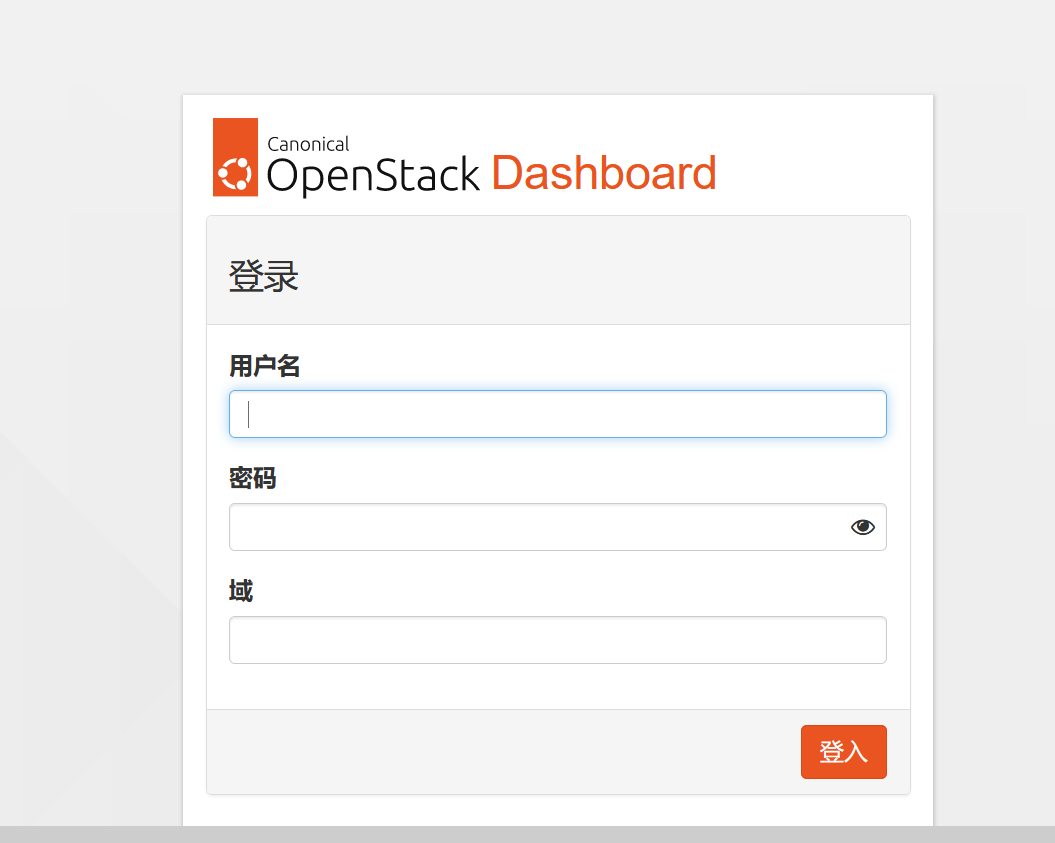

(User name: admin; password: 000000; domain: default)

Open in a browser: http://192.168.200.30/horizon
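Before opening a browser, the dashboard can also be probed from the command line. The following is a minimal sketch, assuming curl is installed and that the login page answers with HTTP 200 once redirects are followed; the function names http_status and check_horizon are hypothetical.

```shell
# Print the final HTTP status code for a URL, following redirects.
# curl prints 000 when the server cannot be reached.
http_status() {
    curl -s -L -o /dev/null --max-time 10 -w '%{http_code}' "$1"
}

# Succeed if the dashboard answers with 200 after redirects.
# The default URL is the one used in this guide.
check_horizon() {
    url=${1:-http://192.168.200.30/horizon}
    [ "$(http_status "$url")" = "200" ]
}
```

Running check_horizon && echo "dashboard is up" on any host that can reach the controller gives a quick yes/no answer; any other status code is a hint to inspect the apache2 error log.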

8. Deploy and configure cinder volume storage

8.1 Create the database and user for cinder (run on the controller node)

# Create the cinder database

MariaDB [(none)]> CREATE DATABASE cinder;

# Create the cinder database user and grant it privileges

MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'cinderang';

# Apply the privilege changes immediately

MariaDB [(none)]> flush privileges;

8.2 Create the cinder user (run on the controller node)

# Create the cinder user

root@controller:~# openstack user create --domain default --password cinder cinder

# Grant the admin role to the cinder user in the service project

root@controller:~# openstack role add --project service --user cinder admin

# Create the cinder service entity

root@controller:~# openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3

8.3 Create the cinder service API endpoints (run on the controller node)

# Create the public endpoint

root@controller:~# openstack endpoint create --region RegionOne volumev3 public http://controller:8776/v3/%\(project_id\)s

# Create the internal endpoint

root@controller:~# openstack endpoint create --region RegionOne volumev3 internal http://controller:8776/v3/%\(project_id\)s

# Create the admin endpoint

root@controller:~# openstack endpoint create --region RegionOne volumev3 admin http://controller:8776/v3/%\(project_id\)s

8.4 Install the cinder services (run on the controller node)

root@controller:~# apt install -y cinder-api cinder-scheduler

# Configure cinder.conf

# Back up the file

root@controller:~# cp /etc/cinder/cinder.conf{,.bak}

# Complete configuration

root@controller:~# nano /etc/cinder/cinder.conf

root@controller:~# cat /etc/cinder/cinder.conf

[DEFAULT]

transport_url = rabbit://openstack:000000@controller

auth_strategy = keystone

my_ip = 192.168.200.30

[database]

connection = mysql+pymysql://cinder:cinderang@controller/cinder

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = cinder

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

8.5 Populate the database (run on the controller node)

root@controller:~# su -s /bin/sh -c "cinder-manage db sync" cinder

8.6 Configure nova to use the cinder service (run on the controller node)

root@controller:~# nano /etc/nova/nova.conf

root@controller:~# cat /etc/nova/nova.conf

......

[cinder]

os_region_name = RegionOne

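......

8.7 Restart the related services to apply the configuration (run on the controller node)

# Restart nova-api so that the cinder settings take effect

root@controller:~# systemctl restart nova-api

# Restart the Block Storage scheduler service

root@controller:~# systemctl restart cinder-scheduler

# Gracefully reload apache so that it picks up the cinder changes

root@controller:~# systemctl reload apache2

8.8 Install the supporting utility packages (run on the compute nodes)

root@compute-01:~# apt install -y lvm2 thin-provisioning-tools

8.9 Create the LVM physical volume (run on the compute nodes)

# Create the physical volume

root@compute-01:~# pvcreate /dev/sdb

# List the physical volumes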

root@compute-01:~# pvs

PV VG Fmt Attr PSize PFree

/dev/sdb lvm2 --- 20.00g 20.00g

# Create the volume group cinder-volumes

root@compute-01:~# vgcreate cinder-volumes /dev/sdb

# List the volume groups

root@compute-01:~# vgs

VG #PV #LV #SN Attr VSize VFree

cinder-volumes 1 0 0 wz--n- <20.00g <20.00g

8.10 Modify lvm.conf (run on the compute nodes)

Purpose: add a filter that accepts the /dev/sdb device and rejects all other devices.
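LVM tries the filter patterns in order and the first pattern that matches a device decides whether it is accepted (a) or rejected (r); a device that matches no pattern is accepted. The sketch below imitates that rule in plain shell to show why the filter "a/sdb/" followed by "r/.*/" lets only /dev/sdb through. The function lvm_filter_decision is a hypothetical illustration, not an LVM command, and it assumes "/" is the pattern delimiter.

```shell
# Imitate LVM's first-match-wins device filter.
# Usage: lvm_filter_decision DEVICE PATTERN...   (hypothetical helper)
lvm_filter_decision() {
    dev=$1
    shift
    for pattern in "$@"; do
        action=${pattern%%/*}      # leading "a" or "r"
        regex=${pattern#?/}        # drop the action and opening delimiter
        regex=${regex%/}           # drop the closing delimiter
        if printf '%s\n' "$dev" | grep -Eq -- "$regex"; then
            case $action in
                a) echo accept ;;
                r) echo reject ;;
            esac
            return 0
        fi
    done
    echo accept   # devices that match no pattern are accepted
}

lvm_filter_decision /dev/sdb 'a/sdb/' 'r/.*/'   # prints "accept"
lvm_filter_decision /dev/sda 'a/sdb/' 'r/.*/'   # prints "reject"
```

Reversing the two patterns would reject every device, because "r/.*/" matches first. That is why the accept rule has to come before the catch-all reject rule.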

root@compute-01:~# nano /etc/lvm/lvm.conf

root@compute-01:~# sed -n "49,50p" /etc/lvm/lvm.conf

devices {

filter = [ "a/sdb/", "r/.*/"]

8.11 Install the cinder packages (run on the compute nodes)

root@compute-01:~# apt install -y cinder-volume tgt

# Configure cinder.conf

# Back up the configuration file

root@compute-01:~# cp /etc/cinder/cinder.conf{,.bak}

# Complete configuration file

root@compute-01:~# nano /etc/cinder/cinder.conf

root@compute-01:~# cat /etc/cinder/cinder.conf

[DEFAULT]

transport_url = rabbit://openstack:000000@controller

auth_strategy = keystone

# Note: my_ip differs on each compute node (keep comments on their own line, not after the value)

my_ip = 192.168.200.31

enabled_backends = lvm

glance_api_servers = http://controller:9292

[database]

connection = mysql+pymysql://cinder:cinderang@controller/cinder

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = cinder

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-volumes

target_protocol = iscsi

target_helper = tgtadm

volume_backend_name = lvm

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

8.12 Specify the volume path (run on the compute nodes)

root@compute-01:~# cat > /etc/tgt/conf.d/tgt.conf << EOF

include /var/lib/cinder/volumes/*

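EOF

8.13 Restart the Block Storage volume service and its dependencies (run on the compute nodes)

# Restart tgt

root@compute-01:~# systemctl restart tgt

# Enable tgt at boot

root@compute-01:~# systemctl enable tgt

# Restart cinder-volume

root@compute-01:~# systemctl restart cinder-volume

# Enable cinder-volume at boot

root@compute-01:~# systemctl enable cinder-volume

8.14 Verify cinder (run on the controller node)

# Verification command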

root@controller:~# openstack volume service list

+------------------+----------------+------+---------+-------+----------------------------+

| Binary | Host | Zone | Status | State | Updated At |

+------------------+----------------+------+---------+-------+----------------------------+

| cinder-scheduler | controller | nova | enabled | up | 2023-08-07T13:13:48.000000 |

| cinder-volume | compute-02@lvm | nova | enabled | up | 2023-08-07T13:13:54.000000 |

| cinder-volume | compute-01@lvm | nova | enabled | up | 2023-08-07T13:13:46.000000 |

+------------------+----------------+------+---------+-------+----------------------------+

9. Operations practice 1 (command line) (run on the controller node)

9.1 Create a router

# Create the router

root@controller:~# openstack router create Ext-Router

# List the routers

root@controller:~# openstack router list

9.2 Create a VXLAN network

# Create the VXLAN network

root@controller:~# openstack network create --provider-network-type vxlan Intnal

# List the networks

root@controller:~# openstack network list

# Create the VXLAN subnet

root@controller:~# openstack subnet create Intsubnal --network Intnal --subnet-range 192.168.100.0/24 --gateway 192.168.100.1 --dns-nameserver 114.114.114.114

# List the subnets

root@controller:~# openstack subnet list

# Attach the internal subnet to the router (router: Ext-Router; subnet: Intsubnal)

root@controller:~# openstack router add subnet Ext-Router Intsubnal

9.3 Create a flat network

# Create the flat network

root@controller:~# openstack network create --provider-physical-network physnet1 --provider-network-type flat --external Extnal

# Create the flat subnet; run only one of the two commands below

# Generic example with a 10.0.0.0/24 external range

root@controller:~# openstack subnet create Extsubnal --network Extnal --subnet-range 10.0.0.0/24 --allocation-pool start=10.0.0.30,end=10.0.0.200 --gateway 10.0.0.254 --dns-nameserver 114.114.114.114 --no-dhcp

# Variant matching this environment, whose external range is 192.168.200.0/24

root@controller:~# openstack subnet create Extsubnal --network Extnal --subnet-range 192.168.200.0/24 --allocation-pool start=192.168.200.50,end=192.168.200.200 --gateway 192.168.200.254 --dns-nameserver 192.168.200.2 --no-dhcp

# Set the router's external gateway (router: Ext-Router; network: Extnal)

root@controller:~# openstack router set Ext-Router --external-gateway Extnal

9.4 Open the security group

# Allow ICMP

root@controller:~# openstack security group rule create --proto icmp default

# Allow port 22

root@controller:~# openstack security group rule create --proto tcp --dst-port 22:22 default

# List the security group rules

root@controller:~# openstack security group rule list

9.5 View the images

# Check the image status

root@controller:~# openstack image list

+--------------------------------------+----------+--------+

| ID | Name | Status |

+--------------------------------------+----------+--------+

| ca7a8fff-7296-4907-894d-a84825955ad2 | cirros | active |

+--------------------------------------+----------+--------+

9.6 Create an instance

# Generate an SSH key pair

root@controller:~# ssh-keygen -N ""

# Register the public key as a keypair

root@controller:~# openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

# Create the flavor

root@controller:~# openstack flavor create --vcpus 1 --ram 512 --disk 1 C1-512MB-1G

# Create the instance

# Template: openstack server create --flavor C1-512MB-1G --image cirros --security-group default --nic net-id=<vxlan-network-id> --key-name mykey vm01

root@controller:~# openstack server create --flavor C1-512MB-1G --image cirros --security-group default --nic net-id=a371c907-a326-4869-ab35-8ea53d838a98 --key-name mykey vm01
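Rather than copying the network ID by hand, the ID can be looked up by name at the moment the instance is created. The sketch below assumes the openstack client is authenticated as in section 2.5 and reuses the flavor, image, and keypair names from this guide; the function names network_id and boot_on_network are hypothetical.

```shell
# Print the ID of a network given its name.
network_id() {
    openstack network show "$1" -f value -c id
}

# Boot an instance on a named network without pasting the ID.
# Usage: boot_on_network SERVER_NAME NETWORK_NAME   (hypothetical helper)
boot_on_network() {
    net_id=$(network_id "$2") || return 1
    openstack server create --flavor C1-512MB-1G --image cirros \
        --security-group default --nic "net-id=$net_id" \
        --key-name mykey "$1"
}
```

With these definitions, boot_on_network vm01 Intnal is equivalent to the command above, but it keeps working if the network is ever recreated with a different ID.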

# List the instances

root@controller:~# openstack server list --all

+--------------------------------------+------+--------+-----------------------+--------+-------------+

| ID | Name | Status | Networks | Image | Flavor |

+--------------------------------------+------+--------+-----------------------+--------+-------------+

| 77705e1a-8dfb-4b07-886b-c773b4b9be4a | vm01 | ACTIVE | Intnal=192.168.100.81 | cirros | C1-512MB-1G |

+--------------------------------------+------+--------+-----------------------+--------+-------------+

# Allocate a floating IP address

root@controller:~# openstack floating ip create Extnal

+---------------------+--------------------------------------+

| Field | Value |

+---------------------+--------------------------------------+

| created_at | 2023-08-07T14:30:48Z |

| description | |

| dns_domain | None |

| dns_name | None |

| fixed_ip_address | None |

| floating_ip_address | 192.168.200.59 |

| floating_network_id | dc9becce-901a-49f2-936b-f5e80f7759e8 |

| id | c74c9289-c6b5-47c2-bce4-f5700533fb9f |

| name | 192.168.200.59 |

| port_details | None |

| port_id | None |

| project_id | d511671fdcbc408fbe1978da791caa46 |

| qos_policy_id | None |

| revision_number | 0 |

| router_id | None |

| status | DOWN |

| subnet_id | None |

| tags | [] |

| updated_at | 2023-08-07T14:30:48Z |

+---------------------+--------------------------------------+

# Bind the allocated floating IP to the instance

# Template: openstack server add floating ip vm01 <allocated-floating-ip>

root@controller:~# openstack server add floating ip vm01 192.168.200.59
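Allocation and binding can be combined so that the address never has to be read from the table manually. This is a minimal sketch, assuming an authenticated openstack client; the function names allocate_floating_ip and attach_new_floating_ip are hypothetical.

```shell
# Allocate a floating IP from an external network and print the address.
allocate_floating_ip() {
    openstack floating ip create "$1" -f value -c floating_ip_address
}

# Allocate an address from NETWORK and attach it to SERVER.
# Usage: attach_new_floating_ip SERVER NETWORK   (hypothetical helper)
attach_new_floating_ip() {
    fip=$(allocate_floating_ip "$2") || return 1
    [ -n "$fip" ] || return 1
    openstack server add floating ip "$1" "$fip" && echo "$fip"
}
```

For example, attach_new_floating_ip vm01 Extnal prints the address that was bound, which can then be used to test SSH or ICMP access from outside.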

# Get the VNC console URL of the instance

root@controller:~# openstack console url show vm01

+----------+-----------------------------------------------------------------------------------------------+

| Field | Value |

+----------+-----------------------------------------------------------------------------------------------+

| protocol | vnc |

| type | novnc |

| url | http://192.168.200.30:6080/vnc_auto.html?path=%3Ftoken%3D40bbebd5-7698-4b4b-a5a8-47026fa87acd |

+----------+-----------------------------------------------------------------------------------------------+

9.7 Create a volume type

# Create the volume type

root@controller:~# openstack volume type create lvm

# Add metadata to the volume type

root@controller:~# cinder --os-username admin --os-tenant-name admin type-key lvm set volume_backend_name=lvm
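The same property can also be set through the unified openstack client instead of the legacy cinder command. A minimal sketch under that assumption follows; the function names set_volume_backend and show_volume_type_properties are hypothetical wrappers.

```shell
# Set the volume_backend_name property on a volume type.
# Usage: set_volume_backend TYPE BACKEND   (hypothetical helper)
set_volume_backend() {
    openstack volume type set --property "volume_backend_name=$2" "$1"
}

# Print the properties of a volume type to confirm the change.
show_volume_type_properties() {
    openstack volume type show "$1" -f value -c properties
}
```

Calling set_volume_backend lvm lvm followed by show_volume_type_properties lvm should report the volume_backend_name that matches the [lvm] section of cinder.conf on the compute nodes.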

# List the volume types

root@controller:~# openstack volume type list

+--------------------------------------+-------------+-----------+

| ID | Name | Is Public |

+--------------------------------------+-------------+-----------+

| 5ffdbebe-4f37-4690-8b40-36c6e6c63233 | lvm | True |

| c5b71526-643d-4e9c-b0b7-3cdf8d1e926b | __DEFAULT__ | True |

+--------------------------------------+-------------+-----------+

9.8 Create a volume

# Create a volume with the lvm volume type

root@controller:~# openstack volume create lvm01 --type lvm --size 1

+---------------------+--------------------------------------+

| Field | Value |

+---------------------+--------------------------------------+

| attachments | [] |

| availability_zone | nova |

| bootable | false |

| consistencygroup_id | None |

| created_at | 2023-08-07T14:44:05.121851 |

| description | None |

| encrypted | False |

| id | 59278ea9-2fc0-4b5e-bdec-7a8663769d3b |

| migration_status | None |

| multiattach | False |

| name | lvm01 |

| properties | |

| replication_status | None |

| size | 1 |

| snapshot_id | None |

| source_volid | None |

| status | creating |

| type | lvm |

| updated_at | None |

| user_id | 3a66f914c2804018a416255998dda997 |

+---------------------+--------------------------------------+

# Attach the volume to the instance

# Template: nova volume-attach vm01 <volume-id>

root@controller:~# nova volume-attach vm01 59278ea9-2fc0-4b5e-bdec-7a8663769d3b

+-----------------------+--------------------------------------+

| Property | Value |

+-----------------------+--------------------------------------+

| delete_on_termination | False |

| device | /dev/vdb |

| id | 59278ea9-2fc0-4b5e-bdec-7a8663769d3b |

| serverId | 77705e1a-8dfb-4b07-886b-c773b4b9be4a |

| tag | - |

| volumeId | 59278ea9-2fc0-4b5e-bdec-7a8663769d3b |

+-----------------------+--------------------------------------+10、运维实战二(web界面)

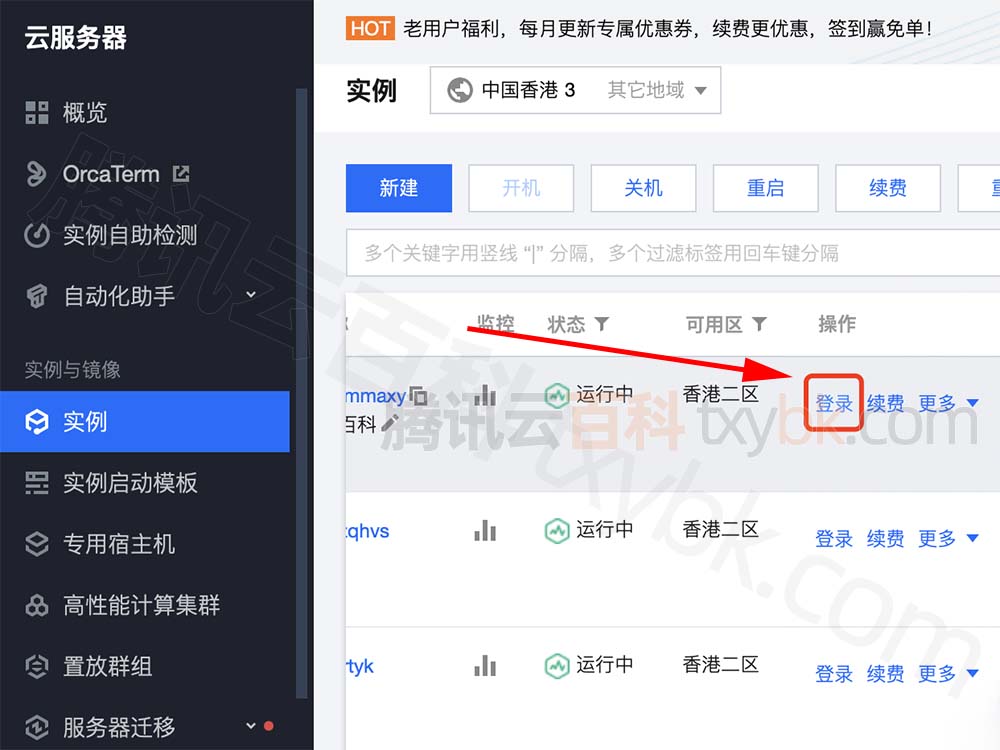

10.1 登录dashbroad

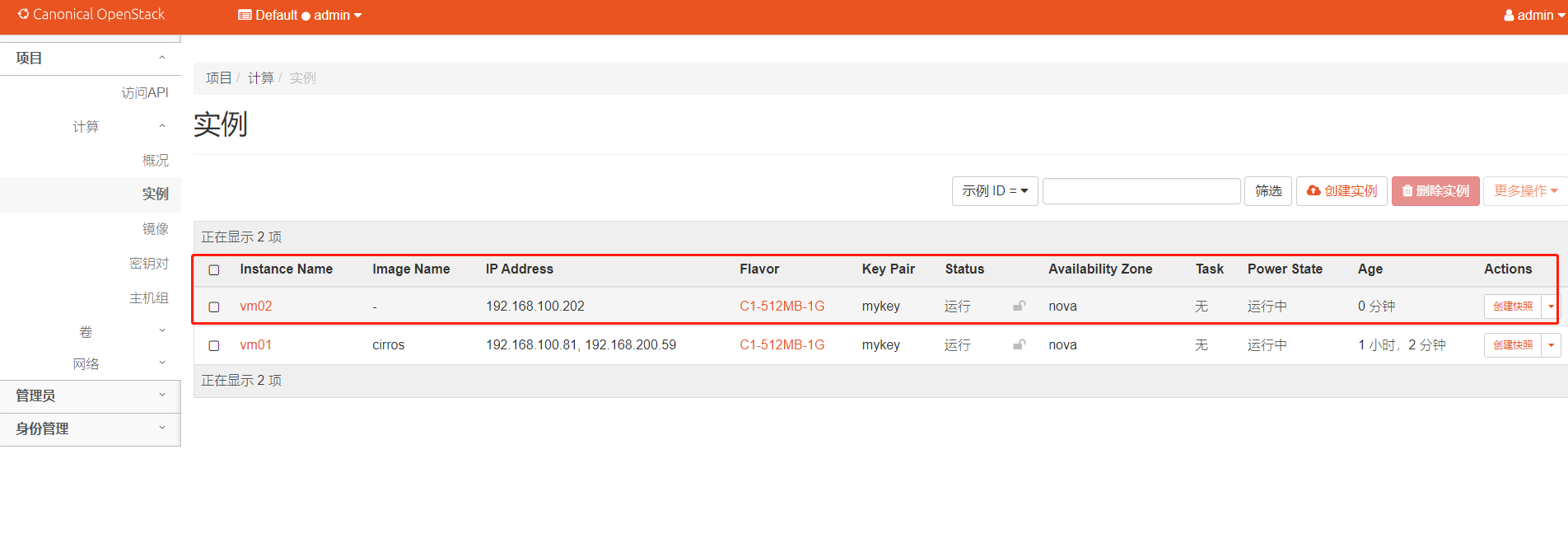

10.2 查看之前我们启动的虚拟机实例

10.3 管理实例

10.4 创建虚拟机实例

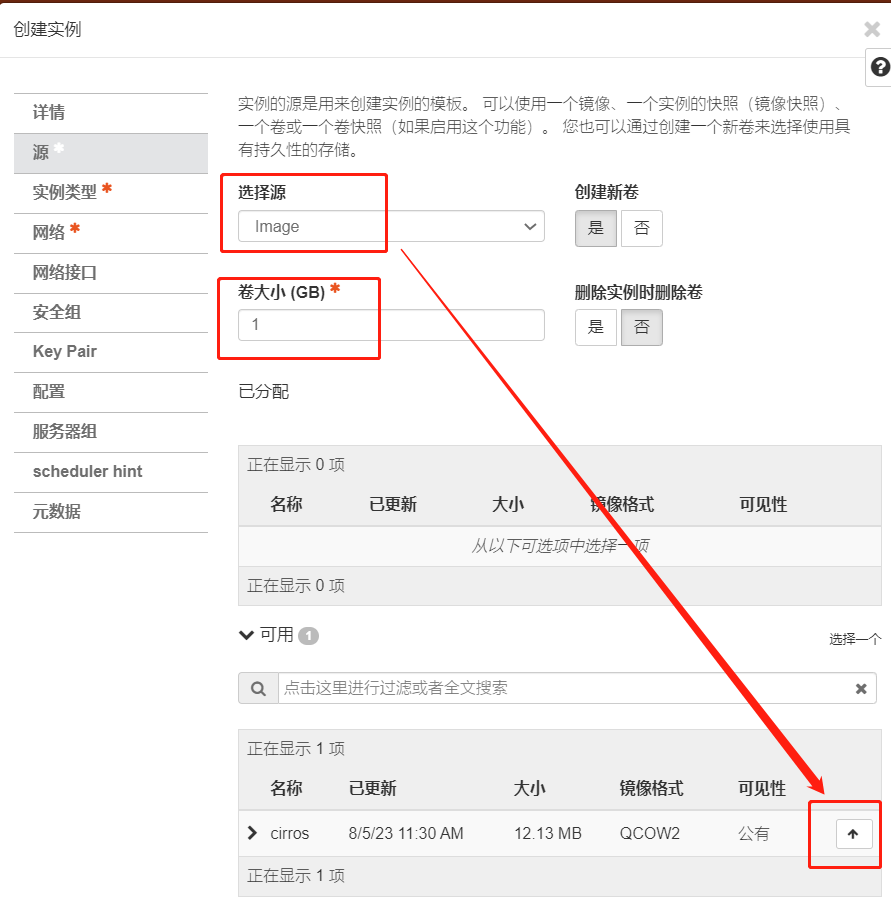

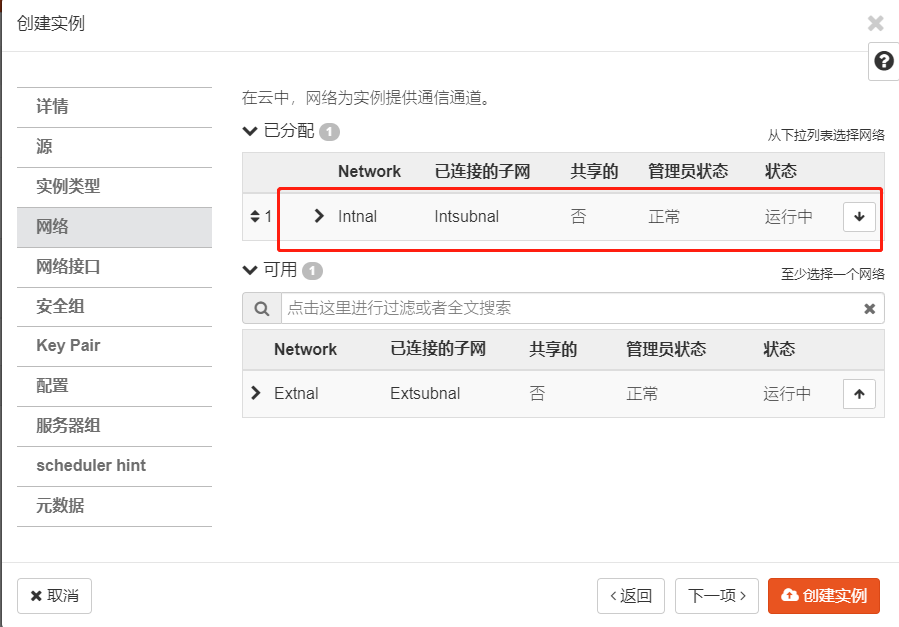

创建实例

选择源

选择实例类型

选择网络

安全组

密匙对

配置(可自动或者手动配置脚本,磁盘)

创建实例

查看实例

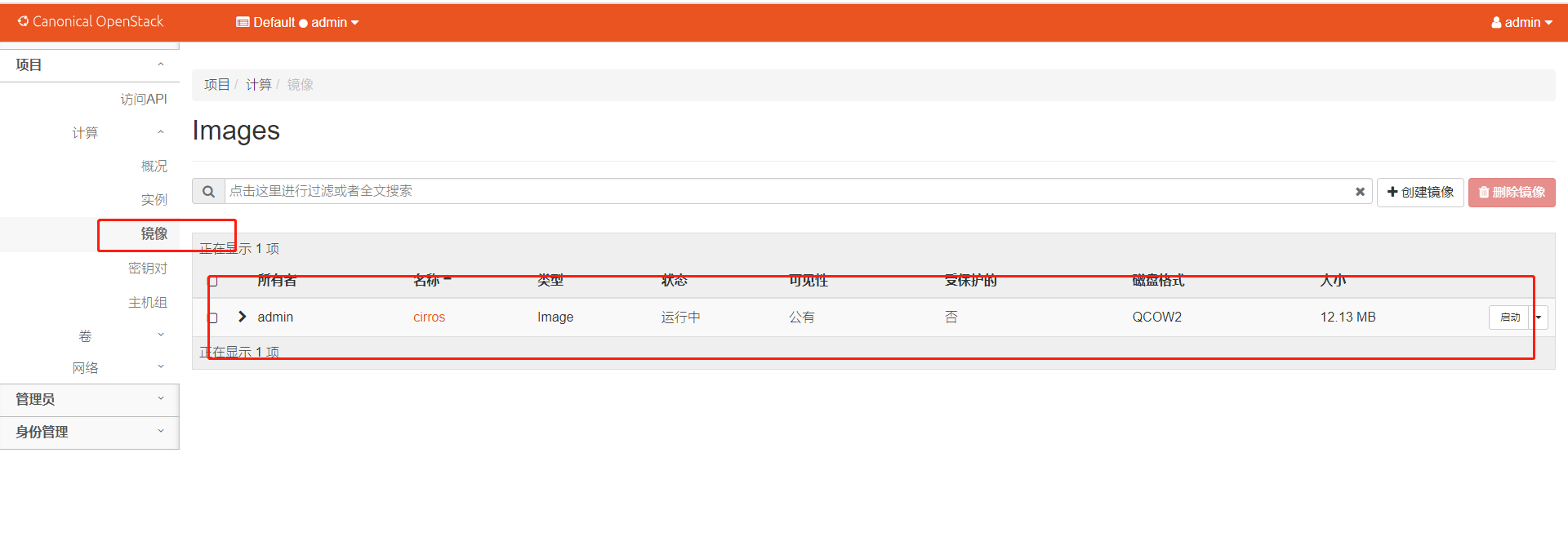

10.5 目前集群已上传的镜像

10.6 密匙对

10.7 创建主机组,可关联实例

10.8 卷

10.9 网络

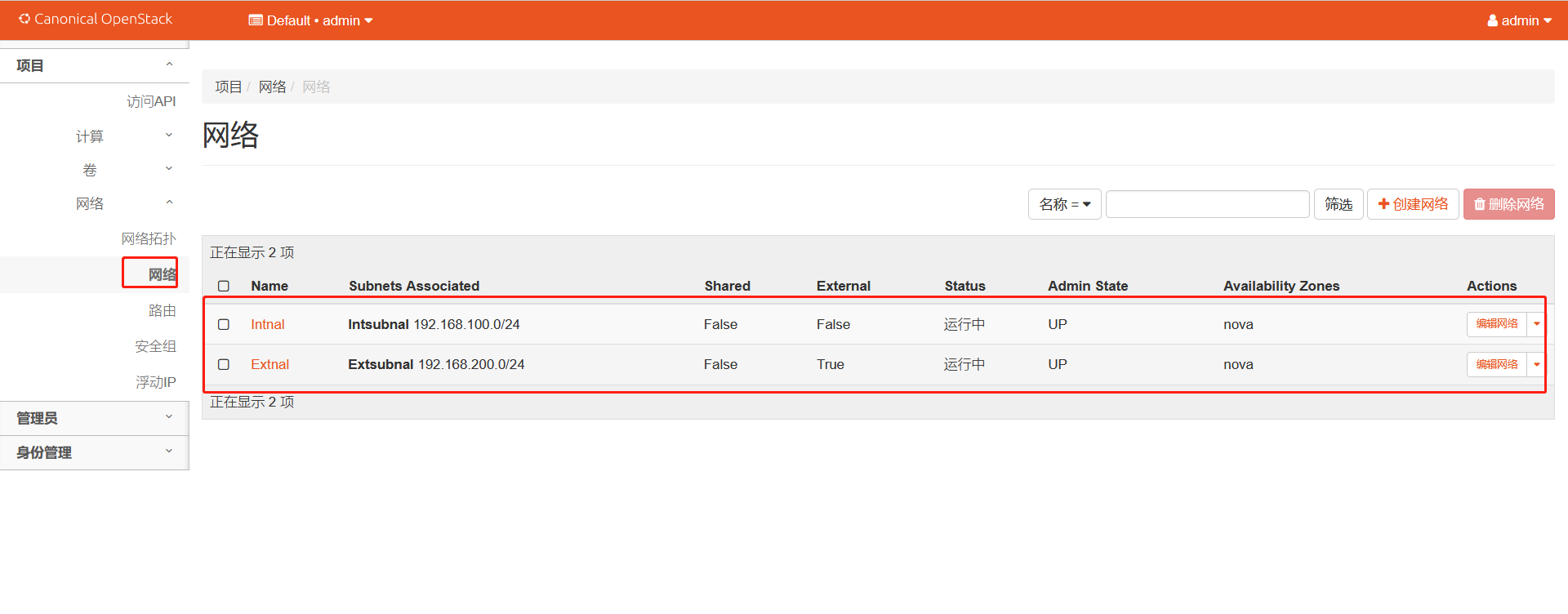

网络

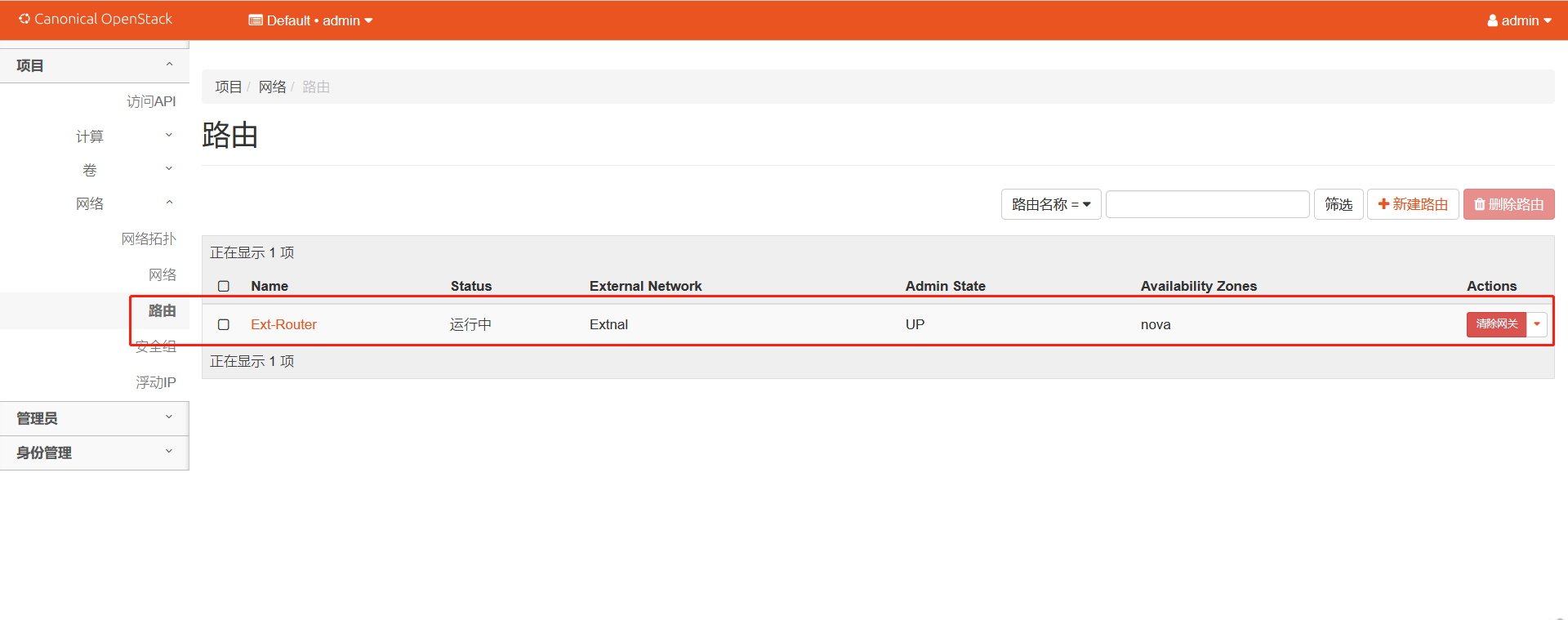

路由

安全组