Preface

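This post only documents the process of using RoFormer-Sim as the base model for data augmentation. For details of the RoFormer-Sim model itself, see "SimBERTv2来了!融合检索和生成的RoFormer-Sim模型" (SimBERTv2 is here! RoFormer-Sim, a model that fuses retrieval and generation) on 科学空间|Scientific Spaces.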

https://github.com/ZhuiyiTechnology/roformer-sim

1. Features

The model can be used both for data augmentation (generating similar sentences) and for computing text similarity.

2. Installation and Usage

2.1 Installation

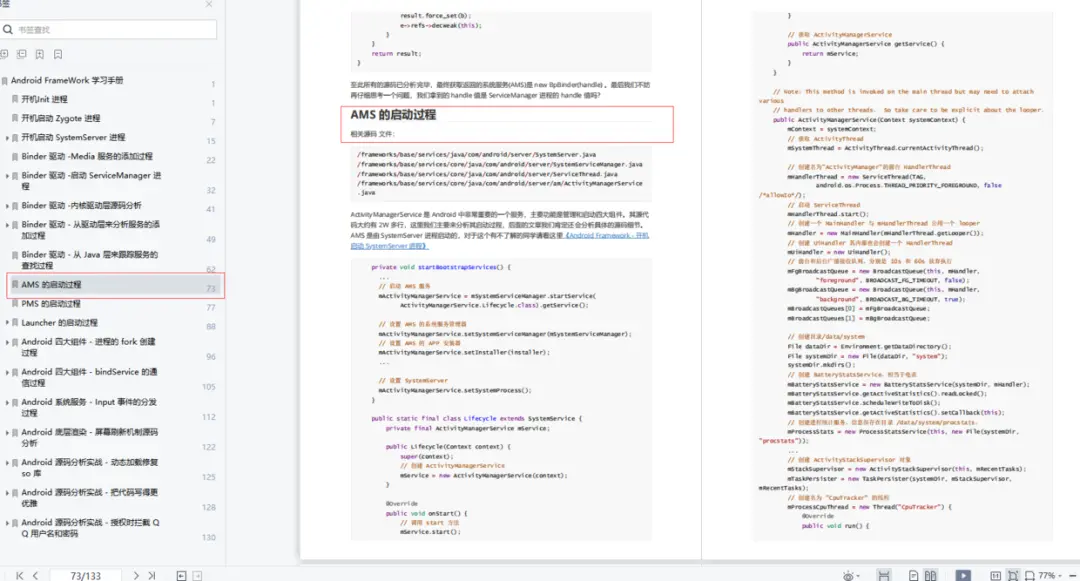

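The code requires tensorflow 1.14, keras 2.2.5, and bert4keras 0.10.6.

Because the code depends on TensorFlow 1.14, which conflicts with the TensorFlow version in the existing base environment, I created a separate conda environment so that the libraries would not interfere with each other.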

2.1.1 Create the environment

```bash
conda create --name DataAug python=3.6
```

2.1.2 Activate the environment

```bash
conda activate DataAug
```

2.1.3 Install dependencies

```bash
pip install tensorflow==1.14 -i https://pypi.tuna.tsinghua.edu.cn/simple/
pip install keras==2.2.5 -i https://pypi.tuna.tsinghua.edu.cn/simple/
pip install bert4keras==0.10.6 -i https://pypi.tuna.tsinghua.edu.cn/simple/
```

2.1.4 Download the code

```bash
git clone https://github.com/ZhuiyiTechnology/roformer-sim
```
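Because the whole point of the separate environment is to pin exact versions, it can help to confirm that those pins actually took effect before running anything. The script below is a minimal sketch of such a check. The names `EXPECTED`, `check_versions`, and the prefix-matching rule are my own illustration and do not come from the roformer-sim repository. It uses `importlib.metadata` where available and falls back to `pkg_resources` on Python 3.6.

```python
# Hypothetical helper: compare installed package versions against the pins used above
try:
    from importlib.metadata import version, PackageNotFoundError  # Python 3.8+
except ImportError:  # Python 3.6/3.7, e.g. the DataAug environment created above
    import pkg_resources

    PackageNotFoundError = pkg_resources.DistributionNotFound

    def version(name):
        return pkg_resources.get_distribution(name).version

# Pins taken from the pip commands above
EXPECTED = {
    'tensorflow': '1.14',
    'keras': '2.2.5',
    'bert4keras': '0.10.6',
}


def check_versions(expected=EXPECTED):
    """Return {package: problem} for every package that is missing or mismatched."""
    problems = {}
    for name, want in expected.items():
        try:
            have = version(name)
        except PackageNotFoundError:
            problems[name] = 'not installed'
            continue
        # `pip install tensorflow==1.14` installs 1.14.0, so accept "1.14" as a prefix match
        if not (have == want or have.startswith(want + '.')):
            problems[name] = 'found %s, expected %s' % (have, want)
    return problems


if __name__ == '__main__':
    issues = check_versions()
    for name, msg in issues.items():
        print(name + ': ' + msg)
    print('OK' if not issues else 'environment mismatch')
```

Run this script inside the activated `DataAug` environment. An empty result means all three pins are in place.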

2.1.5 Download the model

2.2 Usage

2.2.1 Run generate.py

Replace the model file paths in generate.py with the paths to your downloaded model, then run generate.py.

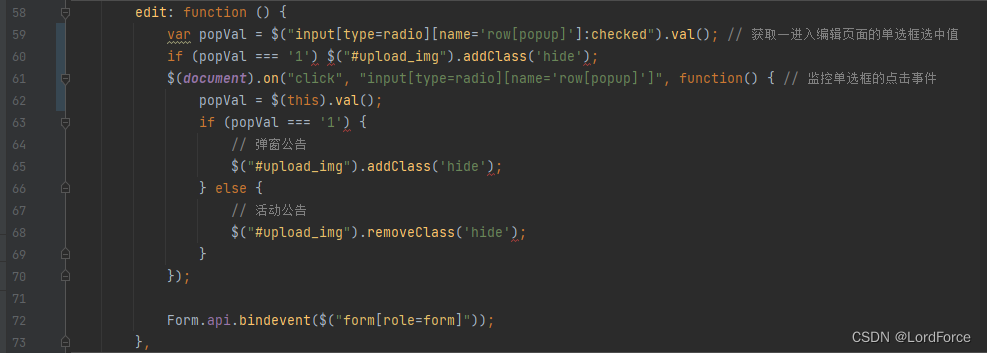
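All three paths that generate.py needs (config, checkpoint, vocabulary) live in the same model directory. A small helper can derive them from that directory and report missing files early, which gives a clearer error than a failed checkpoint load. The sketch below is an illustration, not part of the repository. `model_paths` and `missing_files` are hypothetical names. It assumes the standard TF 1.x checkpoint layout, in which `bert_model.ckpt` is a prefix and the file on disk is `bert_model.ckpt.index` plus data shards.

```python
import os


def model_paths(model_dir):
    """Build the three paths generate.py needs from one model directory (hypothetical helper)."""
    config_path = os.path.join(model_dir, 'bert_config.json')
    checkpoint_path = os.path.join(model_dir, 'bert_model.ckpt')  # checkpoint prefix, not a single file
    dict_path = os.path.join(model_dir, 'vocab.txt')
    return config_path, checkpoint_path, dict_path


def missing_files(model_dir):
    """List expected files that are absent, so a wrong path fails early with a clear message."""
    config_path, checkpoint_path, dict_path = model_paths(model_dir)
    # A TF 1.x checkpoint is stored as <prefix>.index plus data shards; checking .index suffices here
    required = [config_path, checkpoint_path + '.index', dict_path]
    return [p for p in required if not os.path.exists(p)]
```

For example, `model_paths('/ssd/dongzhenheng/Pretrain_Model/chinese_roformer-sim-char-ft_L-12_H-768_A-12')` yields the same three paths that are hard-coded in the service code below.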

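2.2.2 Troubleshooting

If TensorFlow prints "Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA", note that this is an informational log message, not an actual error. To silence it, add the following at the very top of the script, before TensorFlow or Keras is imported: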

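```python
import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # hide TensorFlow INFO and WARNING logs
os.environ['KERAS_BACKEND'] = 'tensorflow'  # make Keras use the TensorFlow backend
```

2.2.3 Start the service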

Serving the model with Flask on top of TensorFlow raised errors, so I switched to FastAPI.

```python
#! -*- coding: utf-8 -*-
# RoFormer-Sim base: basic example, served with FastAPI
# Upstream test environment: tensorflow 1.14 + keras 2.3.1 + bert4keras 0.10.6
# (the environment set up above used keras 2.2.5)

import os

# These variables must be set before TensorFlow/Keras are imported to take effect
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
os.environ['KERAS_BACKEND'] = 'tensorflow'
os.environ['CUDA_VISIBLE_DEVICES'] = '1'

import numpy as np
import uvicorn
from fastapi import FastAPI
from bert4keras.backend import keras, K
from bert4keras.models import build_transformer_model
from bert4keras.tokenizers import Tokenizer
from bert4keras.snippets import sequence_padding, AutoRegressiveDecoder
from bert4keras.snippets import uniout

maxlen = 64

# Model configuration
config_path = '/ssd/dongzhenheng/Pretrain_Model/chinese_roformer-sim-char-ft_L-12_H-768_A-12/bert_config.json'
checkpoint_path = '/ssd/dongzhenheng/Pretrain_Model/chinese_roformer-sim-char-ft_L-12_H-768_A-12/bert_model.ckpt'
dict_path = '/ssd/dongzhenheng/Pretrain_Model/chinese_roformer-sim-char-ft_L-12_H-768_A-12/vocab.txt'

# Build the tokenizer
tokenizer = Tokenizer(dict_path, do_lower_case=True)

# Build the model and load the weights
roformer = build_transformer_model(
    config_path,
    checkpoint_path,
    model='roformer',
    application='unilm',
    with_pool='linear'
)

# outputs[0]: pooled sentence vector (similarity); outputs[1]: token probabilities (generation)
encoder = keras.models.Model(roformer.inputs, roformer.outputs[0])
seq2seq = keras.models.Model(roformer.inputs, roformer.outputs[1])


class SynonymsGenerator(AutoRegressiveDecoder):
    """seq2seq decoder
    """
    @AutoRegressiveDecoder.wraps(default_rtype='probas')
    def predict(self, inputs, output_ids, step):
        token_ids, segment_ids = inputs
        token_ids = np.concatenate([token_ids, output_ids], 1)
        segment_ids = np.concatenate([segment_ids, np.ones_like(output_ids)], 1)
        return self.last_token(seq2seq).predict([token_ids, segment_ids])

    def generate(self, text, n=1, topp=0.95, mask_idxs=[]):
        token_ids, segment_ids = tokenizer.encode(text, maxlen=maxlen)
        for i in mask_idxs:
            token_ids[i] = tokenizer._token_mask_id
        # Random (top-p) sampling
        output_ids = self.random_sample([token_ids, segment_ids], n, topp=topp)
        return [tokenizer.decode(ids) for ids in output_ids]


synonyms_generator = SynonymsGenerator(
    start_id=None, end_id=tokenizer._token_end_id, maxlen=maxlen
)


def gen_synonyms(text, n, k, mask_idxs):
    '''Generate n similar sentences for text, then return the k most similar ones.
    Method: generate candidates with seq2seq, then score and rank them
    with the encoder's sentence vectors.
    '''
    r = synonyms_generator.generate(text, n, mask_idxs=mask_idxs)
    r = [i for i in set(r) if i != text]
    r = [text] + r
    X, S = [], []
    for t in r:
        x, s = tokenizer.encode(t)
        X.append(x)
        S.append(s)
    X = sequence_padding(X)
    S = sequence_padding(S)
    Z = encoder.predict([X, S])
    Z /= (Z**2).sum(axis=1, keepdims=True)**0.5
    argsort = np.dot(Z[1:], -Z[0]).argsort()
    return [r[i + 1] for i in argsort[:k]]


app = FastAPI()


@app.get("/sentence/{sentence}")
async def get_item(sentence: str):
    result = gen_synonyms(text=sentence, n=50, k=20, mask_idxs=[])
    # Return a dict and let FastAPI serialize it; returning json.dumps(...) would double-encode it
    return {'result': result}


if __name__ == "__main__":
    uvicorn.run(app, host="0.0.0.0", port=9516)
```
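The ranking step at the end of `gen_synonyms` is easy to misread. After each row of `Z` is L2-normalized, the dot product between two rows equals their cosine similarity. `argsort` sorts in ascending order, so negating the query vector puts the most similar candidate first. The numpy sketch below isolates that trick on toy vectors so it can be checked without loading the model. `rank_by_cosine` is a hypothetical name, and its inputs are made-up 2-D vectors rather than real encoder outputs.

```python
import numpy as np


def rank_by_cosine(Z, k):
    """Z[0] is the query vector, Z[1:] are candidates; return the top-k candidate indices and scores."""
    Z = Z / np.linalg.norm(Z, axis=1, keepdims=True)  # L2-normalize rows so dot product == cosine
    sims = Z[1:] @ Z[0]                                # cosine similarity of each candidate to the query
    # argsort is ascending, so sorting the negated scores puts the highest similarity first
    # (np.dot(Z[1:], -Z[0]).argsort() in gen_synonyms is the same trick)
    order = np.argsort(-sims)
    return order[:k], sims[order[:k]]
```

With `Z = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, 0.1]])` and `k=2`, the returned indices are `[2, 1]`: the candidate `[1.0, 0.1]` is closest to the query, followed by `[1.0, 1.0]`. As in `gen_synonyms`, an index `i` here maps to sentence `r[i + 1]`, because `r[0]` is the input sentence itself.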

```python
# Send a request (run in a separate process while the service is running)
import requests
from urllib.parse import quote

text = '今天天气怎么样'  # example input sentence
# The route is /sentence/{sentence}; use the server's IP instead of 127.0.0.1 when calling remotely
url = 'http://127.0.0.1:9516/sentence/' + quote(text)
response = requests.get(url)
result_list = response.json()['result']
```
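For data augmentation, the sentences returned by the service still have to be merged back into the training set. The sketch below is one possible way to do that, not the author's pipeline. `augment_dataset` and its `augment_fn` parameter are hypothetical. `augment_fn` stands in for a wrapper around the `/sentence/{sentence}` request above that returns similar sentences ranked most similar first. The code assumes that a paraphrase keeps the label of its source sentence, which is the usual premise of this kind of augmentation but should be spot-checked on real data.

```python
def augment_dataset(samples, augment_fn, per_sample=3):
    """Expand (text, label) pairs with up to per_sample paraphrases each.

    augment_fn(text) -> list of similar sentences, most similar first
    (hypothetical; e.g. a wrapper around the /sentence/{sentence} service).
    """
    seen = {text for text, _ in samples}  # originals are never re-added as paraphrases
    augmented = list(samples)
    for text, label in samples:
        added = 0
        for candidate in augment_fn(text):
            if added >= per_sample:
                break
            if candidate in seen:  # skip duplicates of originals or of earlier paraphrases
                continue
            seen.add(candidate)
            augmented.append((candidate, label))  # assumption: paraphrases keep the source label
            added += 1
    return augmented
```

Because the service already returns candidates in descending similarity, taking only the first `per_sample` new ones keeps the closest paraphrases and drops the rest.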
