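0.1 Source video: Bilibili, 刘二大人, 《PyTorch深度学习实践》

0.2 This chapter is my own study summary; if you find any mistakes, corrections are welcome!

Code (by analogy with linear regression):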

# Import libraries
import torch
import torch.nn.functional as F

# Prepare the data
x_data = torch.Tensor([[1.0], [2.0], [3.0]])  # training inputs
y_data = torch.Tensor([[0], [0], [1]])  # training labels (class 0 or 1)

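# Define the logistic regression model
class LogisticRegressionModel(torch.nn.Module):
    def __init__(self):
        super(LogisticRegressionModel, self).__init__()  # call the parent class constructor
        self.linear = torch.nn.Linear(1, 1)  # linear layer: 1 input feature, 1 output

    def forward(self, x):
        """
        Forward pass.

        :param x: input tensor
        :return: predicted probability of class 1
        """
        # torch.sigmoid is used in place of the deprecated F.sigmoid
        y_pred = torch.sigmoid(self.linear(x))
        return y_pred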

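model = LogisticRegressionModel()  # instantiate the model
# Binary cross-entropy loss, summed over samples
# (reduction='sum' replaces the deprecated size_average=False)
criterion = torch.nn.BCELoss(reduction='sum')
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)  # optimizer: stochastic gradient descent (SGD)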
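As a side check on the loss chosen here, the following minimal sketch compares `torch.nn.BCELoss` (summed over samples) with the binary cross-entropy formula written out by hand. The probabilities and labels are made-up values for illustration only; they are not outputs of the model above.

```python
import torch

# Hypothetical predicted probabilities and 0/1 targets (illustrative values only).
y_pred = torch.tensor([[0.2], [0.4], [0.9]])
y_true = torch.tensor([[0.0], [0.0], [1.0]])

# Built-in binary cross-entropy, summed over the samples.
criterion = torch.nn.BCELoss(reduction='sum')
builtin_loss = criterion(y_pred, y_true)

# The same quantity written out: -sum(y * log(p) + (1 - y) * log(1 - p)).
manual_loss = -(y_true * torch.log(y_pred)
                + (1 - y_true) * torch.log(1 - y_pred)).sum()

print(builtin_loss.item(), manual_loss.item())
```

The two printed numbers should agree up to floating-point error, which suggests the criterion is simply the summed negative log-likelihood of the labels.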

# Training loop
for epoch in range(1000):  # epoch: one pass over the training set
    y_pred = model(x_data)
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())

    optimizer.zero_grad()  # reset the gradients
    loss.backward()  # backpropagation
    optimizer.step()  # update the weights

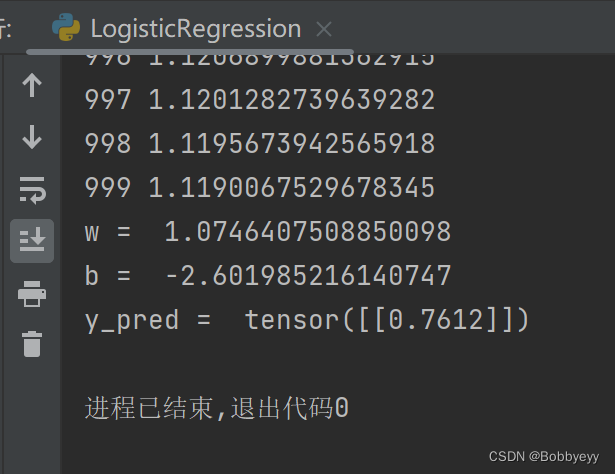

print("w = ", model.linear.weight.item())

print("b = ", model.linear.bias.item())

# Prediction

x_test = torch.Tensor([[3.5]])

y_test = model(x_test)

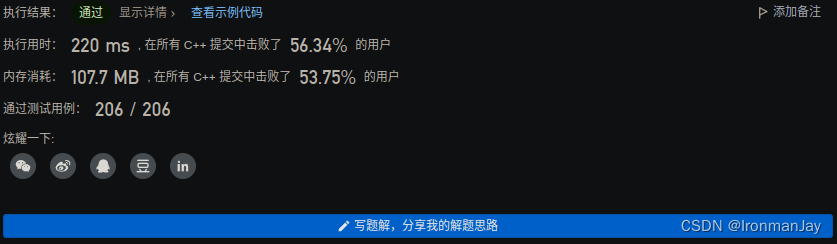

print("y_pred = ", y_test.data)结果:

注:输出结果为类别是1的概率。
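Because the model outputs a probability rather than a label, a threshold is needed to obtain a hard class. Below is a minimal sketch, assuming a threshold of 0.5; the helper `predict_class` and the values of `w` and `b` are hypothetical stand-ins, not the parameters actually learned above.

```python
import torch

def predict_class(prob, threshold=0.5):
    """Map class-1 probabilities to hard 0/1 labels (the 0.5 threshold is an assumption)."""
    return (prob >= threshold).long()

# Hypothetical parameters standing in for a trained model's weight and bias.
w, b = 1.2, -2.9

x = torch.tensor([[1.0], [2.0], [3.5]])
prob = torch.sigmoid(w * x + b)   # probability of class 1 for each input
labels = predict_class(prob)      # hard 0/1 decision

# With a 0.5 threshold, the decision boundary is where w * x + b = 0.
boundary = -b / w

print(prob.squeeze().tolist(), labels.squeeze().tolist(), boundary)
```

With real trained parameters, the same boundary could be computed from `model.linear.weight.item()` and `model.linear.bias.item()`, provided the weight is nonzero.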
