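Calling this "AEC delay estimation" is a bit of a misnomer. The real issue is the boundary condition for calling WebRTC correctly. WebRTC echo cancellation only works if it has the far-end signal. It aligns the near-end signal (the microphone) with the far-end signal (what was played), uses the far-end signal to model the echo that signal leaves in the near-end signal, and then removes that echo. If, after alignment, the stored far-end audio contains nothing matching the echo in the current near-end frame, cancellation will be poor or will not work at all. That is why this time offset matters so much, and why `delay_time` has to be chosen with the specific hardware in mind.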
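A minimal sketch of a far-end history buffer makes the alignment condition concrete. `FarEndHistory` is a hypothetical helper written for illustration, not a WebRTC class, and it assumes 10 ms frames. A near-end frame is paired with the far-end frame played `delay_ms` earlier. When the delay reaches further back than the history holds, there is no reference to cancel against.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <optional>
#include <utility>
#include <vector>

// Hypothetical helper (not part of WebRTC): keeps the most recent far-end
// 10 ms frames so a near-end frame can be paired with the far-end frame
// that was played `delay_ms` earlier.
class FarEndHistory {
 public:
  explicit FarEndHistory(std::size_t max_frames) : max_frames_(max_frames) {
    assert(max_frames > 0);
  }

  // Store one far-end (playback) frame; drop the oldest when full.
  void Push(std::vector<int16_t> frame) {
    frames_.push_back(std::move(frame));
    if (frames_.size() > max_frames_) frames_.pop_front();
  }

  // Return the frame pushed `delay_ms` before the newest one, or
  // std::nullopt if the history is too short to cover that delay.
  std::optional<std::vector<int16_t>> Lookup(int delay_ms) const {
    assert(delay_ms >= 0);
    const std::size_t back = static_cast<std::size_t>(delay_ms / 10);
    if (back >= frames_.size()) return std::nullopt;  // no matching reference
    return frames_[frames_.size() - 1 - back];
  }

 private:
  std::size_t max_frames_;
  std::deque<std::vector<int16_t>> frames_;
};
```

WebRTC keeps its own internal far-end buffer. The sketch only makes one point: a delay outside the buffered window leaves the canceller with nothing to subtract.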

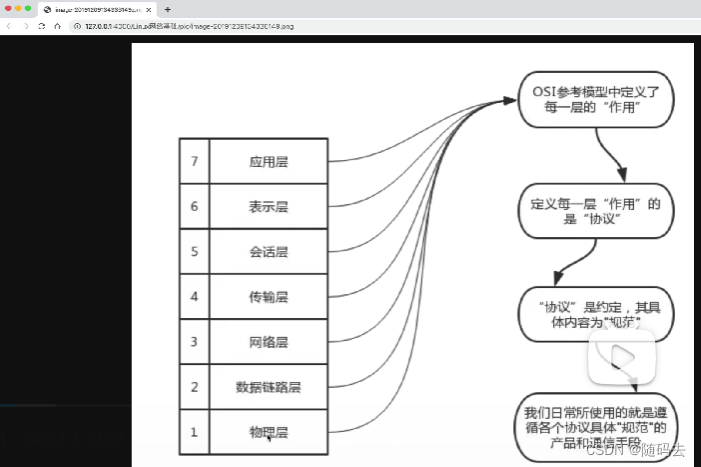

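WebRTC echo cancellation (AEC, AECM) consists of these main modules:

- Echo delay estimation

- NLMS (normalized least mean squares adaptive filter)

- NLP (non-linear processing)

- CNG (comfort noise generation)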
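The adaptive filter is the core of the canceller. The sketch below is a plain time-domain NLMS filter and assumes a linear echo path. WebRTC's AEC uses a frequency-domain block variant, so `NlmsEchoCanceller` is a hypothetical teaching example, not WebRTC code. For each sample, it predicts the echo from recent far-end samples and subtracts that prediction from the microphone sample. It then adjusts the filter weights by a step normalized by the far-end energy.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Minimal time-domain NLMS echo canceller (illustrative only; WebRTC's AEC
// works on frequency-domain blocks). It learns the echo path from the
// far-end reference and subtracts the estimated echo from the mic signal.
class NlmsEchoCanceller {
 public:
  NlmsEchoCanceller(std::size_t taps, double mu, double eps = 1e-6)
      : w_(taps, 0.0), x_(taps, 0.0), mu_(mu), eps_(eps) {
    assert(taps > 0 && mu > 0.0 && mu < 2.0);
  }

  // Process one sample. Returns e = mic - estimated echo (the cleaned sample).
  double Process(double far_sample, double mic_sample) {
    // Shift the reference history: x_[0] is the newest far-end sample.
    for (std::size_t i = x_.size() - 1; i > 0; --i) x_[i] = x_[i - 1];
    x_[0] = far_sample;

    double echo_est = 0.0, energy = 0.0;
    for (std::size_t i = 0; i < w_.size(); ++i) {
      echo_est += w_[i] * x_[i];
      energy += x_[i] * x_[i];
    }
    const double e = mic_sample - echo_est;

    // Normalized LMS update: step size divided by the reference energy.
    const double g = mu_ * e / (energy + eps_);
    for (std::size_t i = 0; i < w_.size(); ++i) w_[i] += g * x_[i];
    return e;
  }

  const std::vector<double>& weights() const { return w_; }

 private:
  std::vector<double> w_;  // estimated echo-path impulse response
  std::vector<double> x_;  // recent far-end samples
  double mu_, eps_;
};
```

The filter can only learn an echo path that fits within its `taps` window of far-end history. In other words, the delay must be right: if the real delay is longer than the filter covers, the weights never find the echo.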

Echo delay estimation

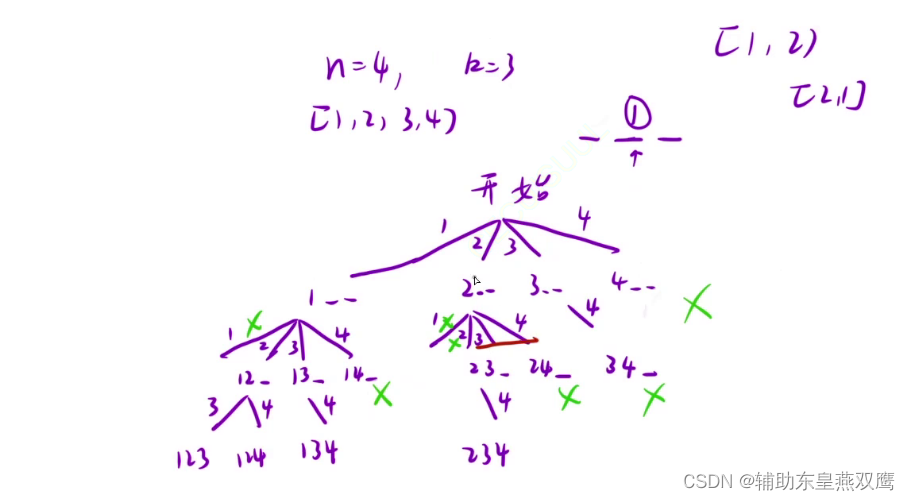

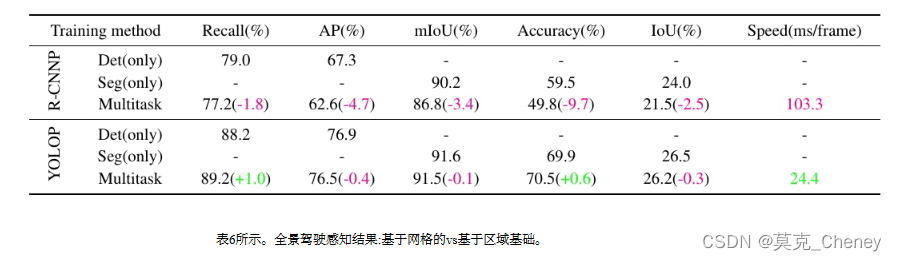

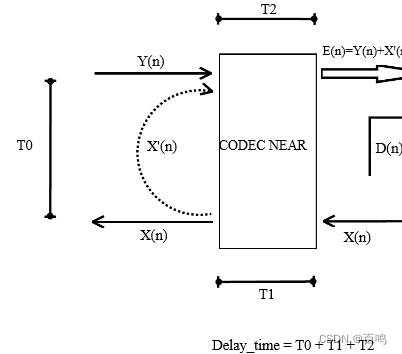

Much of this diagram can be ignored. Focus on T0, T1 and T2.

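- T0 is the time sound takes to travel from the loudspeaker to the microphone. It can be ignored. The speaker and microphone are usually close together, and at about 340 m/s sound covers 34 cm in 1 ms, so T0 normally stays under 1 ms.

- T1 is the time from when far-end audio (sound arriving from the other side) is passed to the AEC far-end interface (`WebRtcAec_BufferFarend`) until it is actually played. Received audio is normally passed to this interface at the same moment the upper layer hands it to the speaker. T1 can therefore be read as the time from when the audio enters the playback queue until it comes out of the speaker.

- T2 is the time from when a piece of sound is picked up by the microphone until it reaches the near-end processing function (`WebRtcAec_Process`). Captured audio goes to echo cancellation immediately, so T2 can be read as the time from microphone capture until your code receives the PCM data.

- delay = T0 + T1 + T2, which in practice is just T1 + T2.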
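As a worked example of delay = T0 + T1 + T2, the sketch below converts buffered frame counts into milliseconds. The function names are hypothetical. The caller must supply the buffered-frame counts, for example by querying the platform's playback and capture APIs.

```cpp
#include <cassert>

// Illustrative delay budget: delay = T0 + T1 + T2, all in milliseconds.
// The buffered frame counts are assumed to come from the caller.
constexpr double kSpeedOfSoundMps = 340.0;

// Convert a number of buffered audio frames (samples per channel) to ms.
double FramesToMs(long frames, int sample_rate_hz) {
  assert(sample_rate_hz > 0 && frames >= 0);
  return frames * 1000.0 / sample_rate_hz;
}

// T0: acoustic path from loudspeaker to microphone.
double AcousticDelayMs(double distance_m) {
  return distance_m / kSpeedOfSoundMps * 1000.0;
}

// Total delay (rounded to whole ms) that would be passed to
// set_stream_delay_ms(): T1 from the playout queue, T2 from the capture queue.
int EstimateStreamDelayMs(long playout_frames_buffered,
                          long capture_frames_buffered, int sample_rate_hz,
                          double speaker_mic_distance_m) {
  const double t0 = AcousticDelayMs(speaker_mic_distance_m);
  const double t1 = FramesToMs(playout_frames_buffered, sample_rate_hz);
  const double t2 = FramesToMs(capture_frames_buffered, sample_rate_hz);
  return static_cast<int>(t0 + t1 + t2 + 0.5);
}
```

For instance, suppose 1920 frames are queued for playback and 960 frames are waiting in the capture buffer at 48 kHz. That gives T1 = 40 ms and T2 = 20 ms. With the speaker 10 cm from the microphone, T0 is about 0.3 ms, so the reported delay rounds to 60 ms.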

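In general, once a suitable delay is found for a device, echo cancellation on that device becomes nearly as easy as noise suppression or gain control. For example, the iPhone uses a fixed delay of 60 ms. The delay also depends on where the code runs. Inside the chip, it is fairly small and easy to keep constant. In application-layer software, it is uncertain and relatively large. The value is passed to WebRTC with `apm_->set_stream_delay_ms(delay_ms_);`.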
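When no fixed value is known for a device, one practical way to find a starting delay is to play a test signal and record it. Then search for the lag that best lines up the played and recorded signals. The sketch below does this by brute-force cross-correlation. It is a simple substitute technique, not WebRTC's internal delay estimator, and `EstimateDelaySamples` / `LagToMs` are hypothetical names. It assumes both signals share one sample rate and that `far_ref` starts when the audio is handed to the far-end interface.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Offline bulk-delay search by brute-force cross-correlation (a simple
// substitute, not WebRTC's own estimator). Returns the lag in samples,
// within [0, max_lag], for which near_mic[n] ~ a * far_ref[n - lag].
int EstimateDelaySamples(const std::vector<float>& far_ref,
                         const std::vector<float>& near_mic, int max_lag) {
  assert(max_lag >= 0);
  int best_lag = 0;
  double best_score = -1.0;
  for (int lag = 0; lag <= max_lag; ++lag) {
    double corr = 0.0, energy = 0.0;
    for (std::size_t n = static_cast<std::size_t>(lag); n < near_mic.size(); ++n) {
      const std::size_t m = n - static_cast<std::size_t>(lag);
      if (m >= far_ref.size()) break;
      corr += static_cast<double>(near_mic[n]) * far_ref[m];
      energy += static_cast<double>(far_ref[m]) * far_ref[m];
    }
    // Least-squares score: near-end energy explained by a scaled copy of
    // far_ref shifted by `lag` samples.
    const double score = energy > 0.0 ? corr * corr / energy : 0.0;
    if (score > best_score) {
      best_score = score;
      best_lag = lag;
    }
  }
  return best_lag;
}

// Convert a lag in samples to milliseconds.
double LagToMs(int lag_samples, int sample_rate_hz) {
  return lag_samples * 1000.0 / sample_rate_hz;
}
```

At 8 kHz, a lag of 240 samples corresponds to 30 ms. The search costs O(N * max_lag). That is fine for an offline calibration run but too slow for per-frame use.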

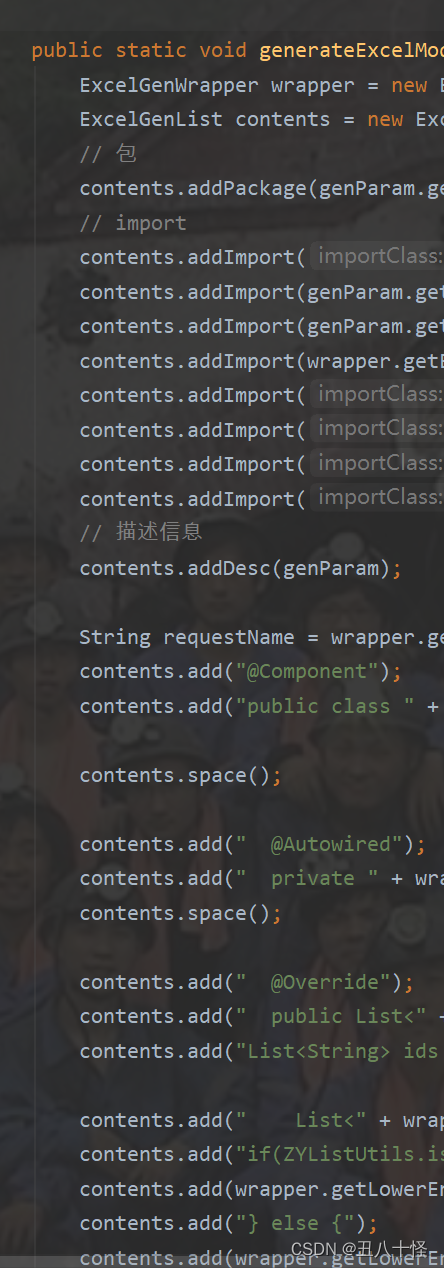

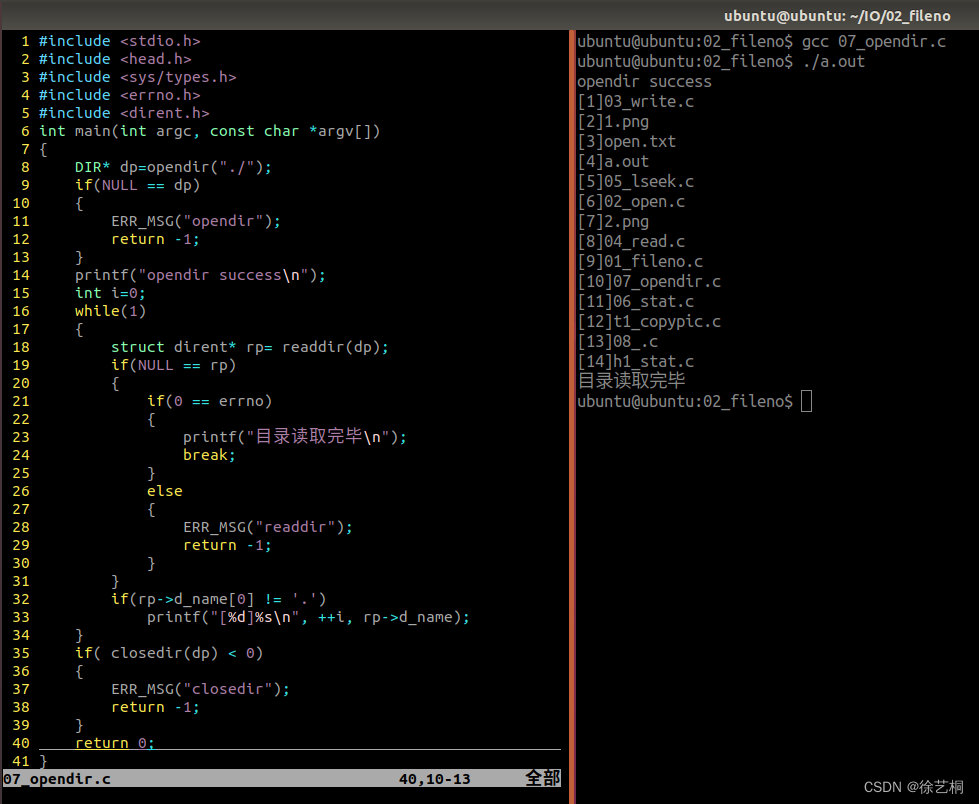

For example, here is a WebRTC wrapper used on Android:

```cpp
#include "webrtc_apm.h"

#include <cstring>  // memcpy
#include <mutex>    // std::mutex, std::lock_guard

//#include "webrtc/common_types.h"
#include "webrtc/modules/audio_processing/include/audio_processing.h"
#include "webrtc/modules/include/module_common_types.h"
#include "webrtc/api/audio/audio_frame.h"
#include "YuvConvert.h"

using namespace webrtc;
//using namespace cbase;

WebrtcAPM::WebrtcAPM(int process_smp, int reverse_smp)
    : apm_(nullptr),
      far_frame_(new AudioFrame),
      near_frame_(new AudioFrame),
      ref_cnt_(0),
      sample_rate_(8000),
      samples_per_channel_(8000 / 100),
      channels_(1),
      frame_size_10ms_(8000 / 100 * sizeof(int16_t)),
      delay_ms_(60),
      process_sample_rate_(process_smp),  // e.g. 44100
      reverse_sample_rate_(reverse_smp)   // e.g. 48000
{
  // The resamplers are created in init(); start them as null so the checks
  // in set_reverse_stream() and process_*() are well-defined before init().
  resampleIn = nullptr;
  resampleReverse = nullptr;
  audio_send_ = new unsigned char[kMaxDataSizeSamples_];
  audio_reverse_ = new unsigned char[kMaxDataSizeSamples_];
#if defined(__APPLE__)
  delay_ms_ = 60;
#endif
#if defined(VAD_TEST)
  // create webrtc vad
  ty_vad_create(8000, 1);
  ty_set_vad_level(vad_level::LOW);
  ty_vad_set_recordfile("/sdcard/vadfile.pcm");
#endif
}

WebrtcAPM::~WebrtcAPM() {
  // apm_ and the resamplers are released in uninit(), which the caller
  // should invoke before destroying the object. delete on nullptr is a no-op.
  delete far_frame_;
  far_frame_ = nullptr;
  delete near_frame_;
  near_frame_ = nullptr;
  delete[] audio_send_;
  audio_send_ = nullptr;
  delete[] audio_reverse_;
  audio_reverse_ = nullptr;
#if defined(VAD_TEST)
  // destroy webrtc vad
  ty_vad_destory();
#endif
}

// initAPM() recomputes frame_size_10ms_ and the AudioFrame settings from
// these values, so call this before init().
void WebrtcAPM::set_sample_rate(int sample_rate) {
  sample_rate_ = sample_rate;
  samples_per_channel_ = sample_rate_ / 100;
}

int WebrtcAPM::frame_size() { return frame_size_10ms_; }

void WebrtcAPM::set_ace_delay(int delay) {
  LOGI("set aec delay to %d ms \n", delay);
  std::lock_guard<std::mutex> guard(mutex_);  // delay_ms_ is read under mutex_
  delay_ms_ = delay;
}

void WebrtcAPM::set_reverse_stream(int reverse_sample_rate) {
  std::lock_guard<std::mutex> guard(mutex_);
  reverse_sample_rate_ = reverse_sample_rate;
  delete resampleReverse;
  resampleReverse = new webrtc::Resampler(reverse_sample_rate_, sample_rate_, channels_);
  int result = resampleReverse->Reset(reverse_sample_rate_, sample_rate_, channels_);
  if (result != 0) {
    LOGE("reset resampleReverse fail,%d!\n", result);
  }
}

int WebrtcAPM::init() {
  std::lock_guard<std::mutex> guard(mutex_);
  // Far-end path: reverse_sample_rate_ (e.g. 48000) -> sample_rate_ (8000).
  delete resampleReverse;  // avoid a leak if init() is called twice
  resampleReverse = new webrtc::Resampler(reverse_sample_rate_, sample_rate_, channels_);
  int result = resampleReverse->Reset(reverse_sample_rate_, sample_rate_, channels_);
  if (result != 0) {
    LOGE("reset resampleReverse fail,%d!\n", result);
  }
  // Near-end output path: sample_rate_ (8000) -> process_sample_rate_ (e.g. 44100).
  delete resampleIn;
  resampleIn = new webrtc::Resampler(sample_rate_, process_sample_rate_, channels_);
  result = resampleIn->Reset(sample_rate_, process_sample_rate_, channels_);
  if (result != 0) {
    LOGE("reset resampleIn fail,%d!\n", result);
  }
  if (initAPM() != 0) {
    LOGE("initAPM failed!");
    return -1;
  }
  LOGI("initAPM success!");
  // A non-void function must return a value; omitting it is undefined
  // behavior (it crashed in practice).
  return 0;
}

void WebrtcAPM::uninit() {
  std::lock_guard<std::mutex> guard(mutex_);
  deInitAPM();
  safe_delete(resampleIn);
  safe_delete(resampleReverse);
}

void WebrtcAPM::reset_apm() {
  std::lock_guard<std::mutex> guard(mutex_);
  deInitAPM();
  initAPM();
}

int WebrtcAPM::initAPM() {
  apm_ = AudioProcessingBuilder().Create();
  if (apm_ == nullptr) {
    LOGE("AudioProcessing create failed");
    return -1;
  }
  AudioProcessing::Config config;
  config.echo_canceller.enabled = true;
  config.echo_canceller.mobile_mode = true;  // mobile mode selects AECM
  config.gain_controller1.enabled = true;
  config.gain_controller1.mode = AudioProcessing::Config::GainController1::kAdaptiveDigital;
  config.gain_controller1.analog_level_minimum = 0;
  config.gain_controller1.analog_level_maximum = 255;
  config.noise_suppression.enabled = true;
  config.noise_suppression.level = AudioProcessing::Config::NoiseSuppression::Level::kModerate;
  config.gain_controller2.enabled = true;
  config.high_pass_filter.enabled = true;
  config.voice_detection.enabled = true;
  apm_->ApplyConfig(config);
  LOGI("AudioProcessing initialize success \n");

  // Both directions are processed as 10 ms int16 frames at sample_rate_.
  far_frame_->sample_rate_hz_ = sample_rate_;
  far_frame_->samples_per_channel_ = samples_per_channel_;
  far_frame_->num_channels_ = channels_;
  near_frame_->sample_rate_hz_ = sample_rate_;
  near_frame_->samples_per_channel_ = samples_per_channel_;
  near_frame_->num_channels_ = channels_;
  frame_size_10ms_ = samples_per_channel_ * channels_ * sizeof(int16_t);
  return 0;
}

// Caller must hold mutex_.
void WebrtcAPM::deInitAPM() {
  //if (--ref_cnt_ == 0) {
  LOGI("destroy WebrtcAPM \n");
  safe_delete(apm_);
  //}
}

// Near-end (capture) path: APM on 10 ms frames at sample_rate_, then
// resample 8000 -> process_sample_rate_ (e.g. 44100).
void WebrtcAPM::process_stream(uint8_t* buffer, int bufferLength, uint8_t* bufferOut,
                               int* pOutLen, bool bUseAEC) {
  std::lock_guard<std::mutex> guard(mutex_);  // also guards resampleIn/apm_ below
  *pOutLen = 0;
  if (bUseAEC && apm_) {
    // A trailing partial frame is not passed through APM.
    const int frame_count = bufferLength / frame_size_10ms_;
    for (int i = 0; i < frame_count; i++) {
      uint8_t* frame = buffer + i * frame_size_10ms_;
      // Must be set before every ProcessStream() call when AEC is enabled.
      apm_->set_stream_delay_ms(delay_ms_);
      // webrtc apm processes 10 ms of data per call. data() returns a shared
      // zero buffer while the frame is muted (the default for a new
      // AudioFrame); mutable_data() un-mutes it and returns its own buffer.
      int16_t* pcm = near_frame_->mutable_data();
      memcpy(pcm, frame, frame_size_10ms_);
      const StreamConfig cfg(near_frame_->sample_rate_hz_, near_frame_->num_channels_);
      int res = apm_->ProcessStream(pcm, cfg, cfg, pcm);
      if (res != 0) {
        LOGE("ProcessStream failed, ret %d \n", res);
      }
#if defined(VAD_TEST)
      bool ret = ty_vad_process(frame, frame_size_10ms_);
      (void)ret;
#endif
      memcpy(frame, pcm, frame_size_10ms_);
    }
  }
  if (resampleIn && apm_) {
    size_t outlen = 0;  // in int16 samples
    int result = resampleIn->Push((int16_t*)buffer, bufferLength / sizeof(int16_t),
                                  (int16_t*)bufferOut, kMaxDataSizeSamples_ / sizeof(int16_t), outlen);
    if (result != 0) {
      LOGE("resampleIn error, result = %d, outlen = %zu\n", result, outlen);
    }
    *pOutLen = outlen;
  }
}

// Far-end (render) path: resample 48000 -> 8000, then feed 10 ms to
// ProcessReverseStream() so the AEC has its reference signal.
void WebrtcAPM::process_reverse_10ms_stream(uint8_t* bufferIn, int bufferLength, uint8_t* bufferOut,
                                            int* pOutLen, bool bUseAEC) {
  std::lock_guard<std::mutex> guard(mutex_);  // guards resampleReverse, apm_, audio_reverse_
  size_t outlen = 0;  // in int16 samples
  if (resampleReverse && apm_) {
    int result = resampleReverse->Push((int16_t*)bufferIn, bufferLength / sizeof(int16_t),
                                       (int16_t*)audio_reverse_, kMaxDataSizeSamples_ / sizeof(int16_t), outlen);
    if (result != 0) {
      LOGE("resampleReverse error, result = %d, outlen = %zu\n", result, outlen);
    }
  } else {
    memcpy(audio_reverse_, bufferIn, bufferLength);
    outlen = bufferLength / sizeof(int16_t);  // same unit (samples) as Push()
  }
  *pOutLen = outlen;
  if (!bUseAEC || !apm_) {
    // copy data and return
    memcpy(bufferOut, audio_reverse_, frame_size_10ms_);
    return;
  }
  int16_t* pcm = far_frame_->mutable_data();
  memcpy(pcm, audio_reverse_, frame_size_10ms_);
  const StreamConfig cfg(far_frame_->sample_rate_hz_, far_frame_->num_channels_);
  int res = apm_->ProcessReverseStream(pcm, cfg, cfg, pcm);
  if (res != 0) {
    LOGE("ProcessReverseStream failed, ret %d \n", res);
  }
  memcpy(bufferOut, audio_reverse_, frame_size_10ms_);  // far_frame_->data()
}
```
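`process_stream` runs APM only on whole 10 ms frames (`bufferLength / frame_size_10ms_`). Any trailing partial frame is therefore resampled without echo cancellation. At 8000 Hz mono 16-bit, a frame is 80 samples (160 bytes); at 44100 Hz it is 441 samples (882 bytes). If the capture callback delivers sizes that are not multiples of 10 ms, one option is to carry the remainder over to the next call. Below is a minimal sketch using a hypothetical `TenMsChunker` class, which is not part of the wrapper above.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <vector>

// Hypothetical helper: accumulates arbitrary-sized 16-bit PCM byte buffers
// and emits complete 10 ms frames, carrying any remainder to the next call.
class TenMsChunker {
 public:
  TenMsChunker(int sample_rate_hz, int channels)
      : frame_bytes_(static_cast<std::size_t>(sample_rate_hz / 100) * channels *
                     sizeof(int16_t)) {
    assert(sample_rate_hz % 100 == 0 && channels > 0);
  }

  std::size_t frame_bytes() const { return frame_bytes_; }
  std::size_t pending_bytes() const { return pending_.size(); }

  // Append `len` bytes and call `on_frame` once per complete 10 ms frame.
  // Returns the number of frames emitted.
  int Feed(const uint8_t* data, std::size_t len,
           const std::function<void(const uint8_t*, std::size_t)>& on_frame) {
    pending_.insert(pending_.end(), data, data + len);
    std::size_t offset = 0;
    int frames = 0;
    while (pending_.size() - offset >= frame_bytes_) {
      on_frame(pending_.data() + offset, frame_bytes_);
      offset += frame_bytes_;
      ++frames;
    }
    // Keep only the incomplete tail for the next call.
    pending_.erase(pending_.begin(), pending_.begin() + static_cast<std::ptrdiff_t>(offset));
    return frames;
  }

 private:
  std::size_t frame_bytes_;
  std::vector<uint8_t> pending_;
};
```

Each emitted frame could then go to the APM exactly as the loop inside `process_stream` handles each 10 ms slice.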