Contents

1. configs

2. Implementation

3. Invocation

3.1 Registration

3.2 Invocation

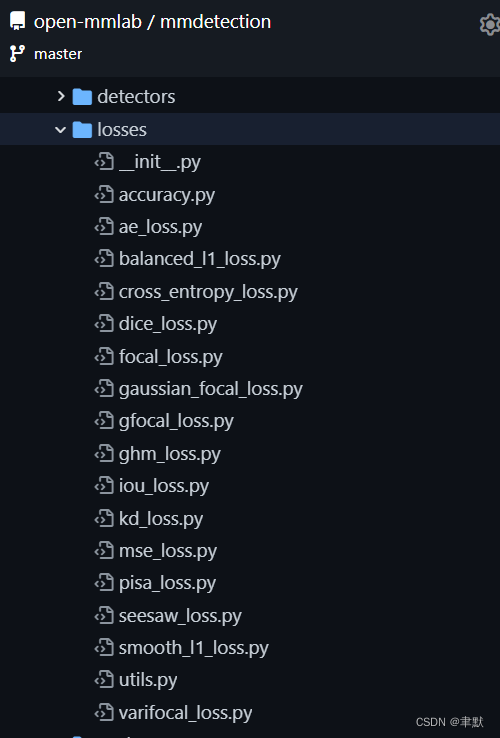

The configuration lives under the configs/_base_/models directory, and the implementation lives under the mmdet/models/losses directory.

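1. configs

Sometimes the loss is written in the config as an argument of the head; sometimes the config leaves it out and the head falls back to its own default.

Take YOLOX as an example (its config does not set any loss-related parameters directly):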

https://github.com/open-mmlab/mmdetection/blob/master/configs/yolox/yolox_s_8x8_300e_coco.py#L17
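To make the two styles concrete, here is a minimal sketch that writes the same head config twice: once relying on the head's defaults, and once passing the loss explicitly. The `loss_bbox` values are an assumption based on what YOLOXHead is believed to use by default on the master branch, and `in_channels` / `feat_channels` are assumed YOLOX-s sizes; check yolox_head.py and the linked config for the real values.

```python
# Style 1: no loss keys in the config, so the head uses its built-in defaults.
bbox_head_default = dict(
    type='YOLOXHead',
    num_classes=80,
    in_channels=128,
    feat_channels=128)

# Style 2: the loss is passed explicitly as an argument of the head.
# These values are assumed for illustration, not copied from the linked config.
bbox_head_explicit = dict(
    type='YOLOXHead',
    num_classes=80,
    in_channels=128,
    feat_channels=128,
    loss_bbox=dict(
        type='IoULoss',
        mode='square',
        eps=1e-16,
        reduction='sum',
        loss_weight=5.0))

# Only the explicit variant carries a loss entry.
print('loss_bbox' in bbox_head_default)
print('loss_bbox' in bbox_head_explicit)
```

Either way, the head ends up with a config dict for each loss, which it later turns into a module.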

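2. Implementation

The head file defines four losses (loss_cls, loss_bbox, loss_obj and loss_l1).

Taking IoULoss as an example, the implementation is: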

mmdetection/iou_loss.py at master · open-mmlab/mmdetection · GitHub

    @LOSSES.register_module()
    class IoULoss(nn.Module):
        """IoULoss.

        Computing the IoU loss between a set of predicted bboxes and target bboxes.

        Args:
            linear (bool): If True, use linear scale of loss else determined
                by mode. Default: False.
            eps (float): Eps to avoid log(0).
            reduction (str): Options are "none", "mean" and "sum".
            loss_weight (float): Weight of loss.
            mode (str): Loss scaling mode, including "linear", "square", and "log".
                Default: 'log'
        """

        def __init__(self,
                     linear=False,
                     eps=1e-6,
                     reduction='mean',
                     loss_weight=1.0,
                     mode='log'):
            super(IoULoss, self).__init__()
            assert mode in ['linear', 'square', 'log']
            if linear:
                mode = 'linear'
                warnings.warn('DeprecationWarning: Setting "linear=True" in '
                              'IOULoss is deprecated, please use "mode=`linear`" '
                              'instead.')
            self.mode = mode
            self.linear = linear
            self.eps = eps
            self.reduction = reduction
            self.loss_weight = loss_weight

        def forward(self,
                    pred,
                    target,
                    weight=None,
                    avg_factor=None,
                    reduction_override=None,
                    **kwargs):
            """Forward function.

            Args:
                pred (torch.Tensor): The prediction.
                target (torch.Tensor): The learning target of the prediction.
                weight (torch.Tensor, optional): The weight of loss for each
                    prediction. Defaults to None.
                avg_factor (int, optional): Average factor that is used to average
                    the loss. Defaults to None.
                reduction_override (str, optional): The reduction method used to
                    override the original reduction method of the loss.
                    Defaults to None. Options are "none", "mean" and "sum".
            """
            assert reduction_override in (None, 'none', 'mean', 'sum')
            reduction = (
                reduction_override if reduction_override else self.reduction)
            if (weight is not None) and (not torch.any(weight > 0)) and (
                    reduction != 'none'):
                if pred.dim() == weight.dim() + 1:
                    weight = weight.unsqueeze(1)
                return (pred * weight).sum()  # 0
            if weight is not None and weight.dim() > 1:
                # TODO: remove this in the future
                # reduce the weight of shape (n, 4) to (n,) to match the
                # iou_loss of shape (n,)
                assert weight.shape == pred.shape
                weight = weight.mean(-1)
            loss = self.loss_weight * iou_loss(
                pred,
                target,
                weight,
                mode=self.mode,
                eps=self.eps,
                reduction=reduction,
                avg_factor=avg_factor,
                **kwargs)
            return loss
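The class above delegates the arithmetic to an `iou_loss` helper that the post does not show. As a rough sketch of what the three `mode` values mean, the following pure-Python version handles one pair of boxes. The function names `box_iou` and `iou_loss_single` are hypothetical, and the formulas (`1 - IoU`, `1 - IoU^2`, `-log(IoU)`) are an assumption about how the library scales the loss; weighting and reduction across boxes are left out.

```python
import math


def box_iou(pred, target, eps=1e-6):
    """IoU of two boxes given as (x1, y1, x2, y2), clamped from below by eps."""
    ix1, iy1 = max(pred[0], target[0]), max(pred[1], target[1])
    ix2, iy2 = min(pred[2], target[2]), min(pred[3], target[3])
    inter = max(ix2 - ix1, 0.0) * max(iy2 - iy1, 0.0)
    area_p = (pred[2] - pred[0]) * (pred[3] - pred[1])
    area_t = (target[2] - target[0]) * (target[3] - target[1])
    union = area_p + area_t - inter
    iou = inter / union if union > 0 else 0.0
    return max(iou, eps)  # the clamp keeps log(iou) finite


def iou_loss_single(pred, target, mode='log', eps=1e-6, loss_weight=1.0):
    """Loss for one box pair under the assumed 'linear', 'square', 'log' scaling."""
    assert mode in ('linear', 'square', 'log')
    iou = box_iou(pred, target, eps)
    if mode == 'linear':
        loss = 1.0 - iou
    elif mode == 'square':
        loss = 1.0 - iou ** 2
    else:
        loss = -math.log(iou)
    return loss_weight * loss


pred = (0.0, 0.0, 2.0, 2.0)
target = (1.0, 0.0, 3.0, 2.0)  # overlap area 2, union area 6, so IoU = 1/3
for mode in ('linear', 'square', 'log'):
    print(mode, iou_loss_single(pred, target, mode=mode))
```

All three variants go to zero as the IoU approaches 1; they differ in how strongly poorly overlapping boxes are penalised.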

3. Invocation

3.1 Registration

Registration builds a dictionary that maps class names to classes.

    @LOSSES.register_module()
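The idea behind the decorator can be sketched in a few lines of plain Python. This `Registry` class is a simplified stand-in written for illustration, not the real mmcv implementation, and the toy `IoULoss` here only stores its arguments.

```python
class Registry:
    """Simplified stand-in for a registry: maps class names to classes."""

    def __init__(self, name):
        self.name = name
        self.module_dict = {}

    def register_module(self):
        # Returns a decorator that records the class under its own name.
        def _register(cls):
            self.module_dict[cls.__name__] = cls
            return cls
        return _register

    def build(self, cfg):
        args = dict(cfg)  # copy so the caller's config is left untouched
        cls = self.module_dict[args.pop('type')]
        return cls(**args)


LOSSES = Registry('loss')


@LOSSES.register_module()
class IoULoss:
    """Toy class that only stores its arguments."""

    def __init__(self, mode='log', loss_weight=1.0):
        self.mode = mode
        self.loss_weight = loss_weight


# The 'type' string from a config selects the class; the rest become kwargs.
loss_bbox = LOSSES.build(dict(type='IoULoss', mode='square', loss_weight=5.0))
print(type(loss_bbox).__name__, loss_bbox.mode, loss_bbox.loss_weight)
```

This is why a config only needs the string `type='IoULoss'`: the lookup from name to class happens inside the registry when the loss is built.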

3.2 Invocation

The losses are instantiated in the YOLOXHead class:

https://github.com/open-mmlab/mmdetection/blob/master/mmdet/models/dense_heads/yolox_head.py#L109

    self.loss_cls = build_loss(loss_cls)
    self.loss_bbox = build_loss(loss_bbox)
    self.loss_obj = build_loss(loss_obj)

    self.use_l1 = False  # This flag will be modified by hooks.
    self.loss_l1 = build_loss(loss_l1)

During training, the forward_train function of YOLOX's base class (SingleStageDetector) calls the head's forward_train:

https://github.com/open-mmlab/mmdetection/blob/31c84958f54287a8be2b99cbf87a6dcf12e57753/mmdet/models/detectors/single_stage.py#L57

    def forward_train(self,
                      img,
                      img_metas,
                      gt_bboxes,
                      gt_labels,
                      gt_bboxes_ignore=None):
        """
        Args:
            img (Tensor): Input images of shape (N, C, H, W).
                Typically these should be mean centered and std scaled.
            img_metas (list[dict]): A List of image info dict where each dict
                has: 'img_shape', 'scale_factor', 'flip', and may also contain
                'filename', 'ori_shape', 'pad_shape', and 'img_norm_cfg'.
                For details on the values of these keys see
                :class:`mmdet.datasets.pipelines.Collect`.
            gt_bboxes (list[Tensor]): Each item are the truth boxes for each
                image in [tl_x, tl_y, br_x, br_y] format.
            gt_labels (list[Tensor]): Class indices corresponding to each box
            gt_bboxes_ignore (None | list[Tensor]): Specify which bounding
                boxes can be ignored when computing the loss.

        Returns:
            dict[str, Tensor]: A dictionary of loss components.
        """
        super(SingleStageDetector, self).forward_train(img, img_metas)
        x = self.extract_feat(img)
        losses = self.bbox_head.forward_train(x, img_metas, gt_bboxes,
                                              gt_labels, gt_bboxes_ignore)
        return losses

The head does not define forward_train itself; it inherits the implementation from its base class:

https://github.com/open-mmlab/mmdetection/blob/master/mmdet/models/dense_heads/base_dense_head.py#L303

    def forward_train(self,
                      x,
                      img_metas,
                      gt_bboxes,
                      gt_labels=None,
                      gt_bboxes_ignore=None,
                      proposal_cfg=None,
                      **kwargs):
        """
        Args:
            x (list[Tensor]): Features from FPN.
            img_metas (list[dict]): Meta information of each image, e.g.,
                image size, scaling factor, etc.
            gt_bboxes (Tensor): Ground truth bboxes of the image,
                shape (num_gts, 4).
            gt_labels (Tensor): Ground truth labels of each box,
                shape (num_gts,).
            gt_bboxes_ignore (Tensor): Ground truth bboxes to be
                ignored, shape (num_ignored_gts, 4).
            proposal_cfg (mmcv.Config): Test / postprocessing configuration,
                if None, test_cfg would be used

        Returns:
            tuple:
                losses: (dict[str, Tensor]): A dictionary of loss components.
                proposal_list (list[Tensor]): Proposals of each image.
        """
        outs = self(x)
        if gt_labels is None:
            loss_inputs = outs + (gt_bboxes, img_metas)
        else:
            loss_inputs = outs + (gt_bboxes, gt_labels, img_metas)
        losses = self.loss(*loss_inputs, gt_bboxes_ignore=gt_bboxes_ignore)
        if proposal_cfg is None:
            return losses
        else:
            proposal_list = self.get_bboxes(
                *outs, img_metas=img_metas, cfg=proposal_cfg)
            return losses, proposal_list

The self.loss function called here is not implemented in the base class, so the call resolves to the loss function of the head:

    losses = self.loss(*loss_inputs, gt_bboxes_ignore=gt_bboxes_ignore)

mmdetection/yolox_head.py at master · open-mmlab/mmdetection · GitHub

    @force_fp32(apply_to=('cls_scores', 'bbox_preds', 'objectnesses'))
    def loss(self,
             cls_scores,
             bbox_preds,
             objectnesses,
             gt_bboxes,
             gt_labels,
             img_metas,
             gt_bboxes_ignore=None):
        """Compute loss of the head.

        Args:
            cls_scores (list[Tensor]): Box scores for each scale level,
                each is a 4D-tensor, the channel number is
                num_priors * num_classes.
            bbox_preds (list[Tensor]): Box energies / deltas for each scale
                level, each is a 4D-tensor, the channel number is
                num_priors * 4.
            objectnesses (list[Tensor], Optional): Score factor for
                all scale level, each is a 4D-tensor, has shape
                (batch_size, 1, H, W).
            gt_bboxes (list[Tensor]): Ground truth bboxes for each image with
                shape (num_gts, 4) in [tl_x, tl_y, br_x, br_y] format.
            gt_labels (list[Tensor]): class indices corresponding to each box
            img_metas (list[dict]): Meta information of each image, e.g.,
                image size, scaling factor, etc.
            gt_bboxes_ignore (None | list[Tensor]): specify which bounding
                boxes can be ignored when computing the loss.
        """
        num_imgs = len(img_metas)
        featmap_sizes = [cls_score.shape[2:] for cls_score in cls_scores]
        mlvl_priors = self.prior_generator.grid_priors(
            featmap_sizes,
            dtype=cls_scores[0].dtype,
            device=cls_scores[0].device,
            with_stride=True)

        flatten_cls_preds = [
            cls_pred.permute(0, 2, 3, 1).reshape(num_imgs, -1,
                                                 self.cls_out_channels)
            for cls_pred in cls_scores
        ]
        flatten_bbox_preds = [
            bbox_pred.permute(0, 2, 3, 1).reshape(num_imgs, -1, 4)
            for bbox_pred in bbox_preds
        ]
        flatten_objectness = [
            objectness.permute(0, 2, 3, 1).reshape(num_imgs, -1)
            for objectness in objectnesses
        ]

        flatten_cls_preds = torch.cat(flatten_cls_preds, dim=1)
        flatten_bbox_preds = torch.cat(flatten_bbox_preds, dim=1)
        flatten_objectness = torch.cat(flatten_objectness, dim=1)
        flatten_priors = torch.cat(mlvl_priors)
        flatten_bboxes = self._bbox_decode(flatten_priors, flatten_bbox_preds)

        (pos_masks, cls_targets, obj_targets, bbox_targets, l1_targets,
         num_fg_imgs) = multi_apply(
             self._get_target_single, flatten_cls_preds.detach(),
             flatten_objectness.detach(),
             flatten_priors.unsqueeze(0).repeat(num_imgs, 1, 1),
             flatten_bboxes.detach(), gt_bboxes, gt_labels)

        # The experimental results show that ‘reduce_mean’ can improve
        # performance on the COCO dataset.
        num_pos = torch.tensor(
            sum(num_fg_imgs),
            dtype=torch.float,
            device=flatten_cls_preds.device)
        num_total_samples = max(reduce_mean(num_pos), 1.0)

        pos_masks = torch.cat(pos_masks, 0)
        cls_targets = torch.cat(cls_targets, 0)
        obj_targets = torch.cat(obj_targets, 0)
        bbox_targets = torch.cat(bbox_targets, 0)
        if self.use_l1:
            l1_targets = torch.cat(l1_targets, 0)

        loss_bbox = self.loss_bbox(
            flatten_bboxes.view(-1, 4)[pos_masks],
            bbox_targets) / num_total_samples
        loss_obj = self.loss_obj(flatten_objectness.view(-1, 1),
                                 obj_targets) / num_total_samples
        loss_cls = self.loss_cls(
            flatten_cls_preds.view(-1, self.num_classes)[pos_masks],
            cls_targets) / num_total_samples

        loss_dict = dict(
            loss_cls=loss_cls, loss_bbox=loss_bbox, loss_obj=loss_obj)

        if self.use_l1:
            loss_l1 = self.loss_l1(
                flatten_bbox_preds.view(-1, 4)[pos_masks],
                l1_targets) / num_total_samples
            loss_dict.update(loss_l1=loss_l1)

        return loss_dict

The loss functions called inside it (self.loss_cls, self.loss_bbox, self.loss_obj and self.loss_l1) come from:

https://github.com/open-mmlab/mmdetection/blob/master/mmdet/models/dense_heads/yolox_head.py#L327

    self.loss_cls = build_loss(loss_cls)
    self.loss_bbox = build_loss(loss_bbox)
    self.loss_obj = build_loss(loss_obj)

    self.use_l1 = False  # This flag will be modified by hooks.
    self.loss_l1 = build_loss(loss_l1)

The loss itself (IoULoss, listed in section 2) is implemented at: https://github.com/open-mmlab/mmdetection/blob/master/mmdet/models/losses/iou_loss.py#L16

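To tie the whole chain together, the toy sketch below mirrors the call path traced in this post: the detector's forward_train calls the head's inherited forward_train, which calls the loss method that only the concrete head implements, which in turn calls the loss object built in `__init__`. All class names here (`ToyL1Loss`, `BaseHead`, `ToyHead`, `ToyDetector`) are hypothetical and use plain Python lists instead of tensors; this is an illustration of the dispatch pattern, not mmdetection code.

```python
class ToyL1Loss:
    """Toy loss: weighted sum of absolute differences."""

    def __init__(self, loss_weight=1.0):
        self.loss_weight = loss_weight

    def __call__(self, pred, target):
        return self.loss_weight * sum(abs(p - t) for p, t in zip(pred, target))


class BaseHead:
    def forward_train(self, x, gt):
        outs = self(x)              # forward pass of the head
        return self.loss(outs, gt)  # resolved on the concrete subclass

    def loss(self, outs, gt):
        raise NotImplementedError   # the base class leaves this abstract


class ToyHead(BaseHead):
    def __init__(self, loss_bbox):
        self.loss_bbox = loss_bbox  # built once, reused on every iteration

    def __call__(self, x):
        return [2.0 * v for v in x]

    def loss(self, outs, gt):
        return dict(loss_bbox=self.loss_bbox(outs, gt))


class ToyDetector:
    def __init__(self, bbox_head):
        self.bbox_head = bbox_head

    def forward_train(self, img, gt):
        x = img                     # stands in for feature extraction
        return self.bbox_head.forward_train(x, gt)


detector = ToyDetector(ToyHead(ToyL1Loss(loss_weight=0.5)))
losses = detector.forward_train([1.0, 2.0], [2.0, 5.0])
print(losses)  # {'loss_bbox': 0.5}
```

The base head never needs to know which losses exist; it only relies on the subclass providing a loss method that returns a dictionary of loss components.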
